一、前言

本章节我们将了解K8S的相关网络概念,包括K8S的网络通讯原理,以及Service以及相关的概念,包括Endpoint,EndpointSlice,Headless service,Ingress等。

二、网络通讯原理和实现

同一K8S集群,网络通信实现可以简化为以下几个模型,Pod内容器之间的通信,同一节点内Pod间的通信,以及跨节点Pod的通信。

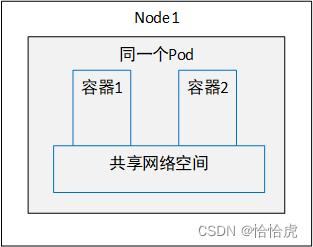

1、Pod内容器之间的通信

同一Pod内的容器是共享同一个网络命名空间的,它们就像工作在同一台机器上,可以使用localhost地址访问彼此的端口。其模型如下:

我们来看下面实例,其yaml文件如下

[root@k8s-master yaml]# cat network-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: network-pod

spec:

containers:

- name: busybox

image: busybox

command:

- "/bin/sh"

- "-c"

- "sleep 3000"

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80该Pod包含两个容器(busybox和nginx),执行该文件,创建Pod,进入到 busybox容器,访问nginx容器。

[root@k8s-master yaml]# kubectl exec -it network-pod -c busybox sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # curl

sh: curl: not found

/ # wget http://localhost

Connecting to localhost (127.0.0.1:80)

saving to 'index.html'

index.html 100% |******************************************************************************************************************************************| 612 0:00:00 ETA

'index.html' saved

/ # cat index.html

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

...可以看到,在busybox容器中访问http://localhost,可以获取到ngnix的页面。

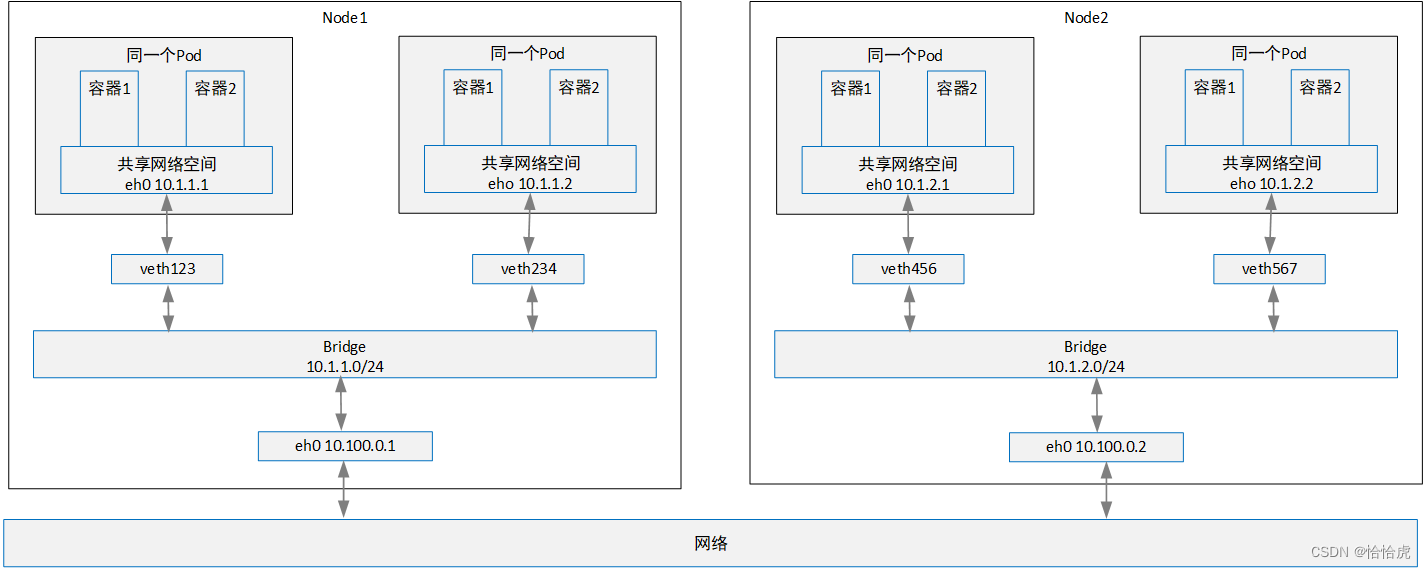

2、同一节点内Pod间的通信

其通信模型如下图所示:

同一节点Pod都关联到同一个网桥,地址段相同,从容器内发送的数据从eh0网络接口发出,再从veth接口出来,发送给网桥。网桥判断目标地址如果是同一个地址段,再由网桥发送给节点内部的对应Pod。

同样看个实例,我们将上面的Pod拆解为两个。

[root@k8s-master yaml]# cat network-busybox-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: network-busybox-pod

spec:

containers:

- name: busybox

image: busybox

command:

- "/bin/sh"

- "-c"

- "sleep 3000"[root@k8s-master yaml]# cat network-nginx-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: network-nginx-pod

spec:

containers:

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80执行这两个文件,创建Pod后,进入busybox访问nginx(ip为10.244.36.80)。

[root@k8s-master yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

network-busybox-pod 1/1 Running 0 20m 10.244.36.79 k8s-node1 <none> <none>

network-nginx-pod 1/1 Running 0 19m 10.244.36.80 k8s-node1 <none> <none>

[root@k8s-master yaml]# kubectl exec -it network-busybox-pod sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # wget http://10.244.36.80

Connecting to 10.244.36.80 (10.244.36.80:80)

saving to 'index.html'

index.html 100% |*****************************************************************************************************************************************************************************************| 612 0:00:00 ETA

'index.html' saved可以看到,在busybox容器中访问http://10.244.36.80,可以获取到ngnix的页面。

3、跨节点Pod间通信

其通信模型如下:

当一个报文从一个节点容器发送到另一个节点容器,报文先通过veth到网桥,再到节点物理适配器,通过网络传到其他节点的物理适配器,在通过其网桥,最终经过veth到达目标容器。这里需要有个前提:pod 的IP地址 必须是唯一的, 跨节点的网桥必须使用非重叠 地址段,这个需要通过IP地址规划保证。

上面的实例中,两个Pod都在k8s-node1节点上,我们将上面的busybox的Pod调度到master节点上。删除busybox的Pod,并改写下yaml内容。

[root@k8s-master yaml]# cat network-busybox-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: network-busybox-pod

spec:

containers:

- name: busybox

image: busybox

command:

- "/bin/sh"

- "-c"

- "sleep 3000"

tolerations:

- key: "node-role.kubernetes.io/master"

operator: "Exists"

effect: "NoSchedule"执行该文件,创建Pod,可以看到新Pod调度到Master上

[root@k8s-master yaml]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

network-busybox-pod 1/1 Running 0 20s 10.244.235.202 k8s-master <none> <none>

network-nginx-pod 1/1 Running 0 24m 10.244.36.80 k8s-node1 <none> <none>进入该Pod,访问nginx

[root@k8s-master yaml]# kubectl exec -it network-busybox-pod sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # wget http://10.244.36.80

Connecting to 10.244.36.80 (10.244.36.80:80)

saving to 'index.html'

index.html 100% |*****************************************************************************************************************************************************************************************| 612 0:00:00 ETA

'index.html' saved可以看到,在busybox容器中访问http://10.244.36.80,可以获取到ngnix的页面。

三、Service

Service是K8S的核心概念之一,它是基于Pod上的业务网络层,为同一组Pod提供统一的访问入口,将请求负载转发到后端的Pod上,其作用类似于业务网关。

1、Service创建

在创建Service之前,我们先创建其后端应用,该应用使用镜像tcy83/k8s-service-app,镜像java代码如下:

@Controller

@RequestMapping("/k8s")

public class TestController {

private static Integer appId;

@GetMapping("getServiceApp")

@ResponseBody

public String getServiceApp(){

return "this this service app:"+generateAppId();

}

public static int generateAppId(){

if(appId == null){

Random random = new Random();

appId = random.nextInt(1000);

}

return appId;

}

}解释下该段代码,当访问/k8s/getServiceApp,返回一段字符串,显示应用的appId,该appId在首次访问时随机分配,后续访问将保持不变,以便区别不同的应用实例。

接下来,就采用Deployment部署3个该应用的pod。其中yaml文件内容如下:

apiVersion: apps/v1

kind: Deployment

metadata:

name: serviceapp-deployment

labels:

app: serviceapp-deployment

spec:

replicas: 3

selector:

matchLabels:

app: serviceapp-deploy

template:

metadata:

labels:

app: serviceapp-deploy

spec:

containers:

- name: k8s-service-app

image: tcy83/k8s-service-app:0.1

ports:

- containerPort: 8080创建完成后,我们看下pod运行情况:

[root@k8s-master yaml]# kubectl get pod -l app=serviceapp-deploy -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

serviceapp-deployment-649c69c89d-2k2hm 1/1 Running 0 90m 10.244.36.78 k8s-node1 <none> <none>

serviceapp-deployment-649c69c89d-h5s5g 1/1 Running 0 90m 10.244.36.119 k8s-node1 <none> <none>

serviceapp-deployment-649c69c89d-sjv55 1/1 Running 0 90m 10.244.36.123 k8s-node1 <none> <none>根据pod的ip和port,能成功访问每一个应用。

[root@k8s-master ~]# curl http://10.244.36.78:8080/k8s/getServiceApp

this this service app:400

[root@k8s-master ~]# curl http://10.244.36.119:8080/k8s/getServiceApp

this this service app:204

[root@k8s-master ~]# curl http://10.244.36.123:8080/k8s/getServiceApp

this this service app:813接下来就是重点部分,为这3个应用增加一个Service,作为统一访问入口。

[root@k8s-master yaml]# cat service-app-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: service-app-service

spec:

type: ClusterIP

selector:

app: serviceapp-deploy

ports:

- port: 80

targetPort: 8080其中的属性我们稍后再分析,先执行下service-app-sv.yaml

[root@k8s-master yaml]# kubectl apply -f service-app-sv.yaml

service/service-app-service created

[root@k8s-master yaml]# kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 133d

service-app-service ClusterIP 10.104.72.224 <none> 80/TCP 13sService正常运行,其集权内部访问IP为10.104.72.224,访问下该Service

[root@k8s-master yaml]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:400

[root@k8s-master yaml]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:204

[root@k8s-master yaml]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:400

[root@k8s-master yaml]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:400

[root@k8s-master yaml]# curl http://10.104.72.224:80/k8s/getServiceApp

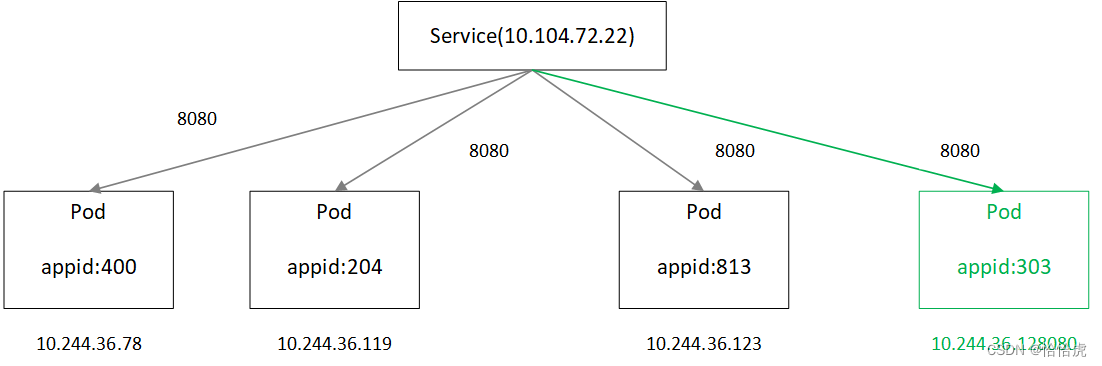

this this service app:813可以看到通过Service,能成功访问到后端的3个应用,且有负载均衡效果。我们再扩容一个新的Pod看看。

[root@k8s-master ~]# kubectl scale deployment/serviceapp-deployment --replicas=4

deployment.apps/serviceapp-deployment scaled

[root@k8s-master ~]# kubectl get pod -l app=serviceapp-deploy -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

serviceapp-deployment-649c69c89d-2k2hm 1/1 Running 0 12h 10.244.36.78 k8s-node1 <none> <none>

serviceapp-deployment-649c69c89d-h5s5g 1/1 Running 0 12h 10.244.36.119 k8s-node1 <none> <none>

serviceapp-deployment-649c69c89d-sjv55 1/1 Running 0 12h 10.244.36.123 k8s-node1 <none> <none>

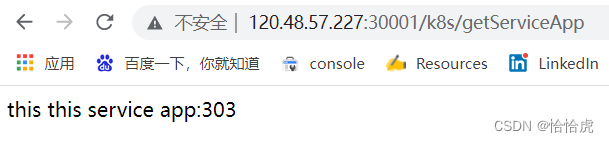

serviceapp-deployment-649c69c89d-txlt9 1/1 Running 0 88s 10.244.36.103 k8s-master <none> <none>再访问Service地址

[root@k8s-master ~]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:303

[root@k8s-master ~]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:813

[root@k8s-master ~]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:400

[root@k8s-master ~]# curl http://10.104.72.224:80/k8s/getServiceApp

this this service app:204

[root@k8s-master ~]# curl http://10.104.72.224:80/k8s/getServiceApp

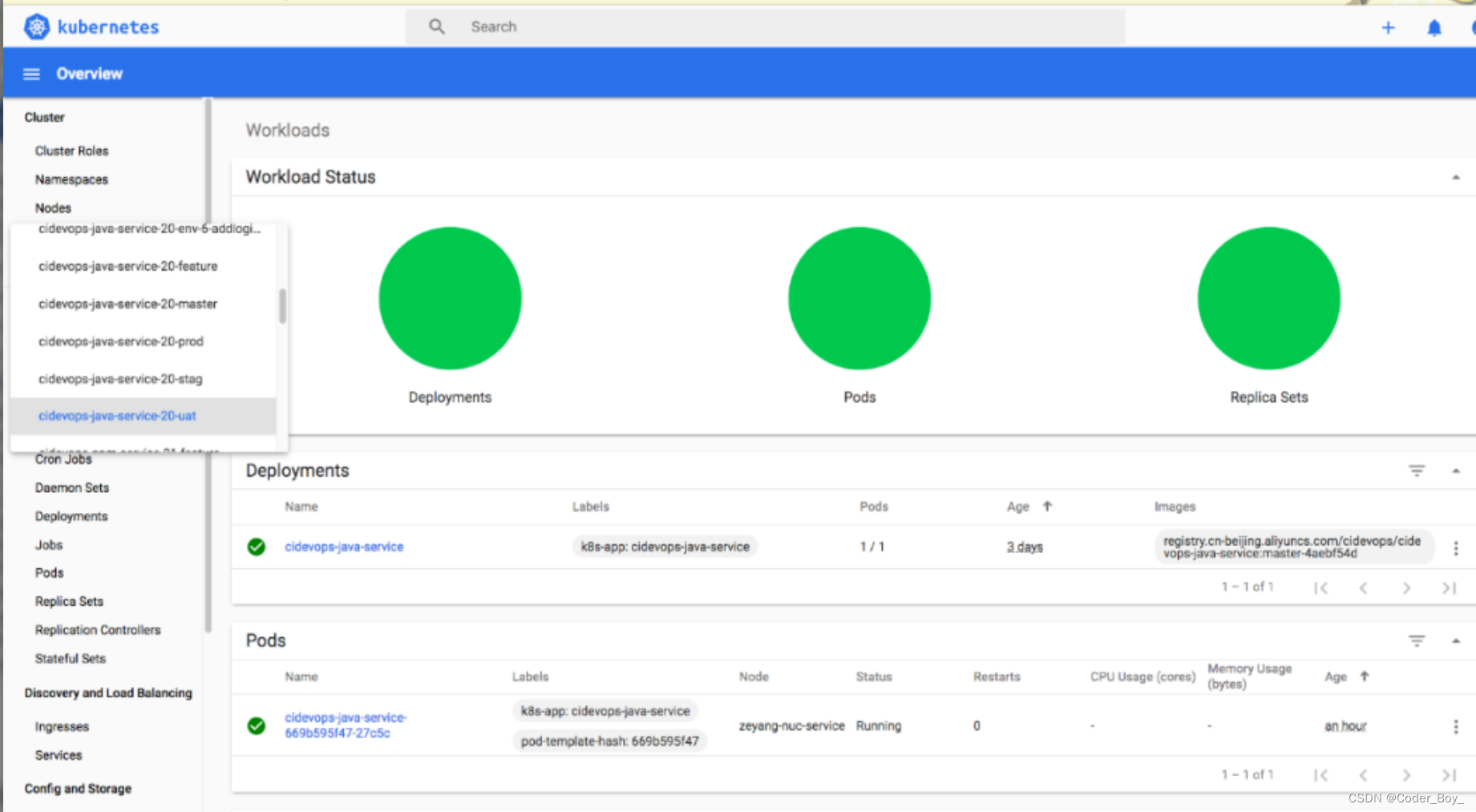

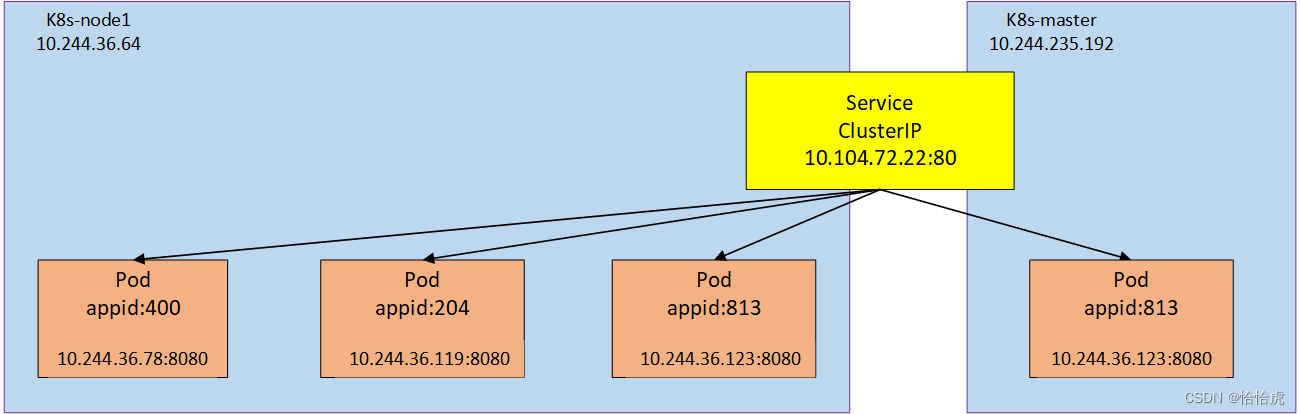

this this service app:400可以看到新扩容的应用(appid=303),已经加入到集群,能通过Service访问到,示意图如下:

2、service原理分析

通过上面的实例,我们了解了Service的具备统一访问入口的功能,那么它是如何实现的呢?

(1)属性分析

我们分析下Service的主要属性:

- type,表示Service的类型,目前支持ClusterIP,NodePort,LoadBalancer,ExternalName四种,这四种类型的作用和用法,我们在后面具体分析。

- Selector,选择那些后端应用与该Service关联,上面的例子如图所示

通过selector属性,其值为Pod的label值,这样,所有标记为该label的Pod与该service关联起来。所以,通过Service的标签选择器实现与Pod的关联。

- ports,即Service访问port,以及后端应用的目标Port。

(2)Pod的访问

Service的IP实际上是VIP(虚拟IP),请求是如何转发到后端Pod的呢?其流程如下:

(1)Pod启动完成后,由kubelet将各Pod的ip注册到master节点。

(2)Service发布完成后,由Master分配ClusterIP,并与后端Pod的ip建立映射关系,存储到master节点。

(3)kube-proxy监听到变化后,修改本地的iptabels,写入clusterIp与Podip的映射关系。

(4)运行时,当Client访问Service的ClientIp时,由iptabels截获,根据映射关系以及负载均衡策略,转发到后端应用的Pod。

以上就是Service访问的iptabels的工作模式,也是默认的工作模式,该机制确保了Pod在扩缩容时,通过修改iptabels快速响应服务实例的变化;同时,由内核区的iptabels进行寻址和负载,也大大提升了效率。

在Pod实例扩容和缩容时,及时的体现到iptabel的映射表上。

3、Service类型

前面介绍了Service有四种类型,我们具体介绍下:

(1)ClusterIP

K8S为Service分配一个集群内部IP地址,该 IP只能在集群内部访问,这也是type的默认值。

前面创建的就是ClusterIP类型,其示意图如下:

除了前面讲的通过IP访问服务,service还默认分配一个域名,其格式 为<servicename>.<namespace>.svc.<clusterdomain>,上面例子service的域名:"service-app-service.default.svc.cluster.local",我们登录到集群中某个pod,再访问该域名的地址

[root@k8s-master yaml]# kubectl exec -it nginx-deployment-86644697c5-96q8l -- /bin/sh

# curl http://service-app-service.default.svc.cluster.local/k8s/getServiceApp

this this service app:400可以看到通过Service域名能正确访问应用。

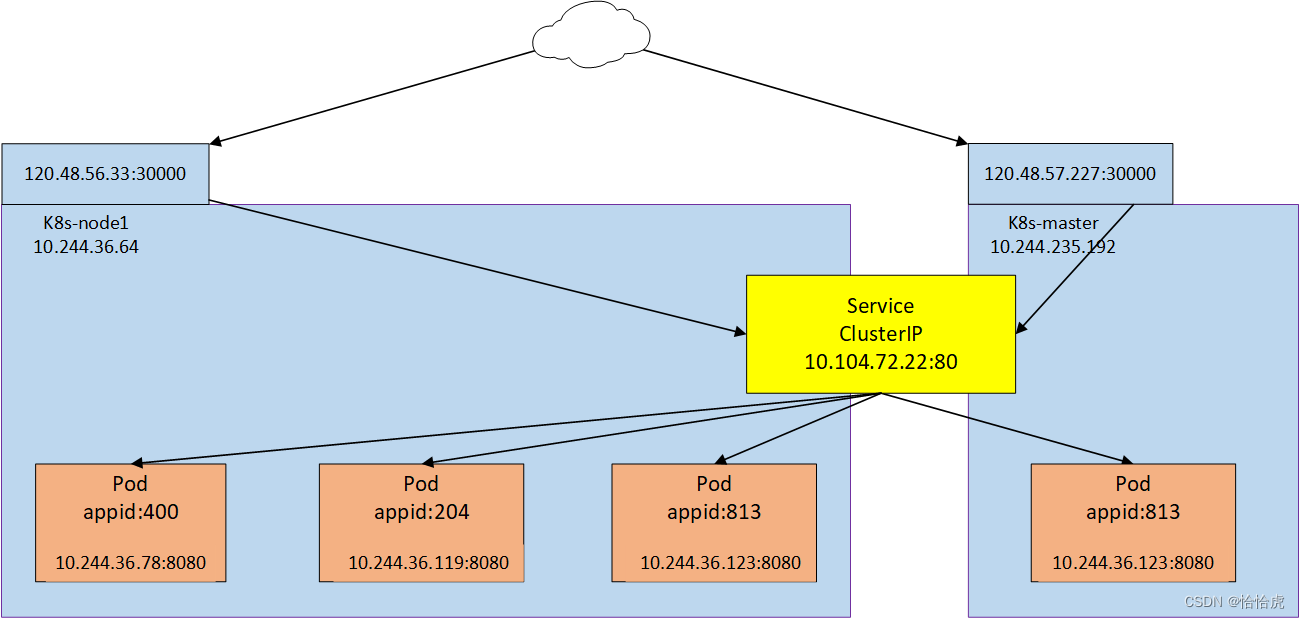

(2)NodePort

如果要对外提供服务(非集群内访问),ClusterIP模式是无法满足,此时需要采用NodePort,它是ClusterIP的扩展,在每个节点的开放一个本地静态端口(端口范围:30000-32767),该端口代理服务,通过节点上的端口可以访问服务。示意图如下:

下面我们改写下service-app-sv.yaml,将type修改为NodePort,并在ports中添加nodePort:30001

[root@k8s-master yaml]# cat service-app-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: service-app-service

spec:

type: NodePort

selector:

app: serviceapp-deploy

ports:

- port: 80

targetPort: 8080

nodePort: 30001创建Service后,查看运行状态

[root@k8s-master yaml]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 137d

service-app-service NodePort 10.110.47.22 <none> 80:30001/TCP 6m11s此时我们就可以在公网上通过浏览器访问该服务

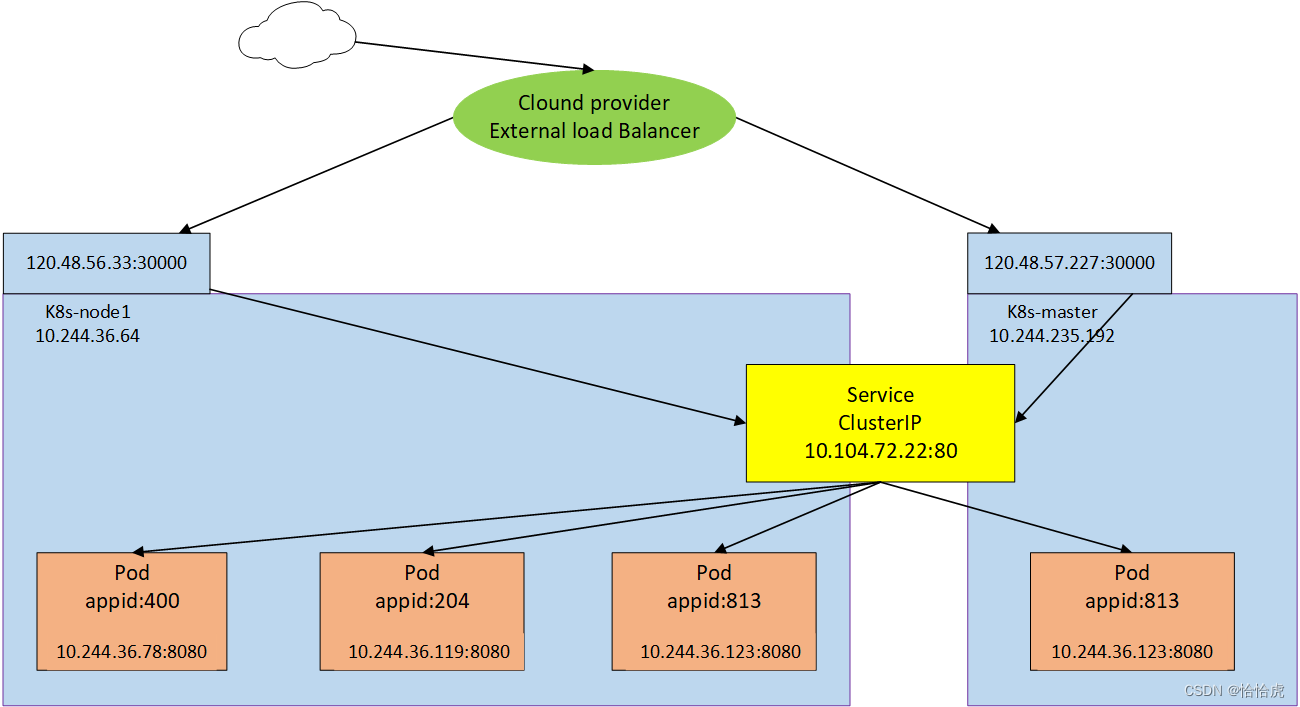

(3)LoadBalancer

公有云提供商一般提供负载均衡器向外部暴露服务,外部负载均衡器可以将流量路由到自动创建的NodePort服务和ClusterIP服务上。示意图如下:

创建一个yaml文件service-app-loadbalancer-sv.yaml

[root@k8s-master yaml]# cat service-app-loadbalancer-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: service-app-service

spec:

type: LoadBalancer

selector:

app: serviceapp-deploy

ports:

- port: 80

targetPort: 8080执行该文件,并查看Service运行状态

[root@k8s-master yaml]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 141d

service-app-service LoadBalancer 10.103.95.166 <pending> 80:32243/TCP 13m已经自动分配了节点的32243端口,并映射到service的80端口,但是 EXTERNAL-IP一直处于pending状态,这是因为我没有购买共有用的负载均衡服务,购买后就会分配个负载均衡ip,实现loadbalancer。

(4)ExternalName

这种类型一般是我们在使用外集群的服务时,该服务暴露了DNS域名,就可以在本集群创建一个Service,并映射该域名,那么就可以在本集群访问该service,从而访问外集群的服务。示意图如下:

创建一个yaml文件,映射为百度的域名。

[root@k8s-master yaml]# cat service-app-externalname-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: service-app-en-service

spec:

type: ExternalName

externalName: www.baidu.com执行完成后,看下创建结果

[root@k8s-master yaml]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service-app-en-service ExternalName <none> www.baidu.com <none> 84m当访问service-app-en-service.default.svc.cluster.local,集群DNS服务返回CNAME记录,其值为www.baidu.com。

为了验证下上面的结论,我们在集群中部署dns工具pod,镜像为dnsutils,其内容如下:

[root@k8s-master yaml]# cat dnsutils-pod.yaml

apiVersion: v1

kind: Pod

metadata:

name: dnsutils

spec:

containers:

- name: dnsutils

image: mydlqclub/dnsutils:1.3

imagePullPolicy: IfNotPresent

command: ["sleep","3600"]执行成功后,进入该pod内部,使用nslookup命令看下该域名的解析

[root@k8s-master yaml]# kubectl exec -it dnsutils /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # nslookup service-app-en-service.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10#53

service-app-en-service.default.svc.cluster.local canonical name = www.baidu.com.

www.baidu.com canonical name = www.a.shifen.com.

Name: www.a.shifen.com

Address: 110.242.68.4

Name: www.a.shifen.com

Address: 110.242.68.3再ping下百度的域名

[root@k8s-master yaml]# ping www.baidu.com

PING www.a.shifen.com (110.242.68.3) 56(84) bytes of data.

64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=1 ttl=52 time=4.47 ms

64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=2 ttl=52 time=4.45 ms

64 bytes from 110.242.68.3 (110.242.68.3): icmp_seq=3 ttl=52 time=4.37 ms可以看到上面确实是返回了百度域名的ip。

三、EndPoint

在上面的Pod访问模块中,我们提到服务注册自己的ip到master节点,在实际的实现过程中,这些ip并不是一个物理列表,而之后由Endpoint管理,Service关联Endpoint。

我们先查下Endpoint列表

[root@k8s-master ~]# kubectl get endpoints

NAME ENDPOINTS AGE

kubernetes 192.168.16.4:6443 142d

service-app-service 10.244.36.103:8080,10.244.36.119:8080,10.244.36.123:8080 + 1 more... 25hk8s集群中已经有了两个endpoint,其中一个是和service相同名称,其Endpoints就是pod的列表。这个Endpoint我们并没有显性的去创建,而是在创建Service时,k8s自动创建的。

那这种设计的好处是什么呢?其实就是为了解耦,Endpoint作为解耦层,可以灵活应对pod的变化,而Service不感知。

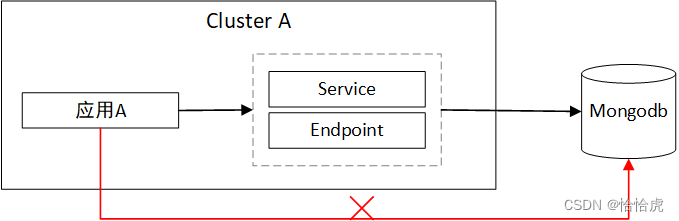

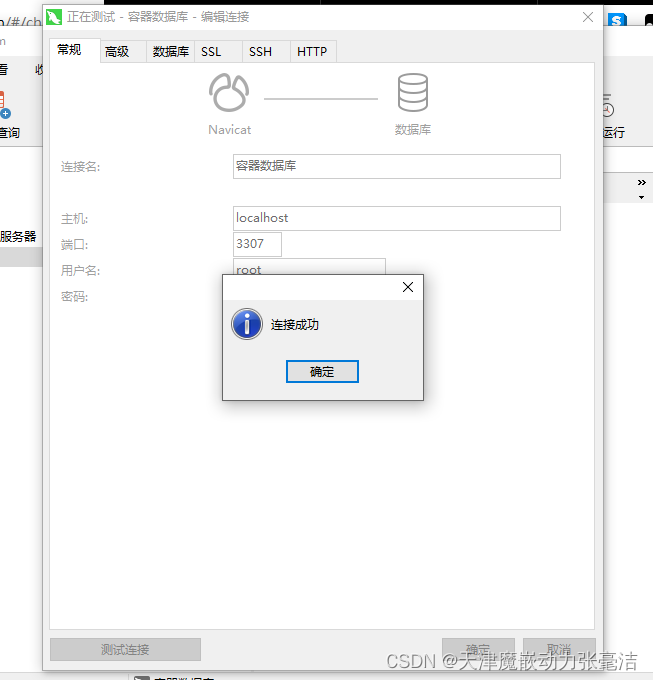

在一些实际工程中,部署在K8S集群的应用需要连接外部的数据库,或者另一个集群或者Namespace的服务作为服务的后端,这种场景下就需要用到Endpoint。

下面我们来看一个Endpoint的使用实例,如图所示:

集群外部部署了一个mongdb数据库,集群内部应用A需要连接该数据库,由于集群导致的网络隔离,无法直接访问。此时就是可以使用Service+手动配置Endpoint实现。

其yaml内容如下:

[root@k8s-master yaml]# cat mongodb_service.yaml

apiVersion: v1

kind: Service

metadata:

name: mongodb-svc

spec:

ports:

- port: 27017

targetPort: 27017

protocol: TCP

---

kind: Endpoints

apiVersion: v1

metadata:

name: mongodb-svc

subsets:

- addresses:

# 外部 mongodb IP

- ip: 192.168.16.4

ports:

# mongodb 端口

- port: 27017首先我们创建了一个名为 mongodb-svc的service,需要注意,该service没有selector选择器,也可以认为该service没有挂载后端服务,其他的和上面的service没有区别。

接着又创建一个Endpoints,其名称要为service一致(这里非常关键,service与endpoints关联关系依赖name相同),在subsets中,我们配置mongodb的ip(也可以是域名,这里仅配置master库)和端口。

集群中的应用A就 可以通过访问该服务的域名来访问mongodb数据库了,配置如下

spring:

data:

mongodb:

uri: mongodb://ai_admin:123456@mongodb-svc:27017/ai四、Endpointslice

在大型集群中,endpoint存在诸多限制

- Pod数据限制,一个服务对应一个Endpoint资源,这意味着它需要为支持相应服务的每个Pod存储IP地址和端口(网络端点),Endpoint需要存储大量的ip和端口信息,而存储在etcd中的对象的默认大小限制为1.5MB,也就是最多能存储5000个pod的ip,在大多数情况下,是满足工程要求。但是在一些大型集群中,数量超过这个限制,就存在问题。

- 更新代价大,kube-proxy 会在每个节点上运行,并监控 Endpoint 资源的任何更新,如果 Endpoint 资源中有一个端口发生更改,那么整个对象都会分发到 kube-proxy 的每个实例。如果service有5000个pod,在3000个节点情况下,每次更新将会跨节点发送 4.5GB 的数据(1.5M*3000)。再想象下,如果5000个pod都滚动更新一次,全部被替换,那么传输的数据量将超过22TB,这个是恐怖的数据。

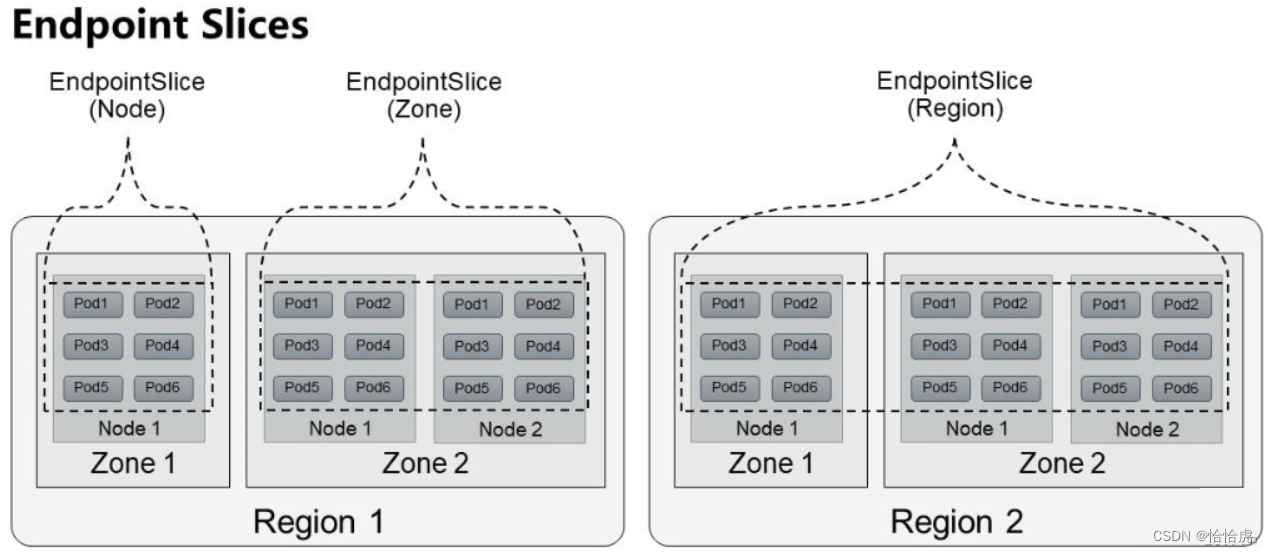

为了解决以上的Endpoint存在的限制,在是 Kubernetes 1.18 中引入的一个新的 API 对象EndpointSlice,通过分片管理一组Endpoint,这样将数据量以及更新的范围限制在一个分片内,来解决以上两个问题。

EndPointSlice根据Endpoint所在Node的拓扑信息进行分片管理,分为Node,Zone,Region三个级别,如下图所示。

接下来我们看下K8s的EndpointSlice列表

[root@k8s-master ~]# kubectl get endpointslice

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

service-app-service-bf5vr IPv4 8080 10.244.36.78,10.244.36.103,10.244.36.123 + 1 more... 5d18h创建了以Endpoint名称为前缀的EndpointSlice。再来看下EndpointSlice的详情。

[root@k8s-master ~]# kubectl describe endpointslice service-app-service-bf5vr

Name: service-app-service-bf5vr

Namespace: default

Labels: endpointslice.kubernetes.io/managed-by=endpointslice-controller.k8s.io

kubernetes.io/service-name=service-app-service

Annotations: endpoints.kubernetes.io/last-change-trigger-time: 2023-03-26T10:29:05Z

AddressType: IPv4

Ports:

Name Port Protocol

---- ---- --------

<unset> 8080 TCP

Endpoints:

- Addresses: 10.244.36.78

Conditions:

Ready: true

Hostname: <unset>

TargetRef: Pod/serviceapp-deployment-649c69c89d-2k2hm

NodeName: k8s-node1

Zone: <unset>

- Addresses: 10.244.36.103

Conditions:

Ready: true

Hostname: <unset>

TargetRef: Pod/serviceapp-deployment-649c69c89d-txlt9

NodeName: k8s-node1

Zone: <unset>

- Addresses: 10.244.36.123

Conditions:

Ready: true

Hostname: <unset>

TargetRef: Pod/serviceapp-deployment-649c69c89d-sjv55

NodeName: k8s-node1

Zone: <unset>

- Addresses: 10.244.36.119

Conditions:

Ready: true

Hostname: <unset>

TargetRef: Pod/serviceapp-deployment-649c69c89d-h5s5g

NodeName: k8s-node1

Zone: <unset>

Events: <none>我们来看下重要的属性参数

- Labels的kubernetes.io/service-name,表示关联的service名称。

- AddressType,包括三种取值,IPv4,IPv6,FQDN(全限定域名)

- Endpoints,列出的每个Endpoint的信息,其中包括:

Addresses:Endpoint的IP地址;

Conditions:Endpoint状态信息,作为EndpointSlice的查询条件;

Hostname:在Endpoint中设置的主机名nostname;

TargetRef:Endpoint对应的Pod名称;

NodeName:所在的节点名

Zone,所在的zone区

Topology:拓扑信息,为基于拓扑感知的服务路由提供数据。(需要设置服务拓扑key)

总之,在大规模集群下,EndpointSlice通过对Endpoint进行分片管理来实现降低Master和各Node之间的网络传输数据量及提高整体性能的目标。同时还支持围绕双栈网络和拓扑感知路由等新功能的创新。

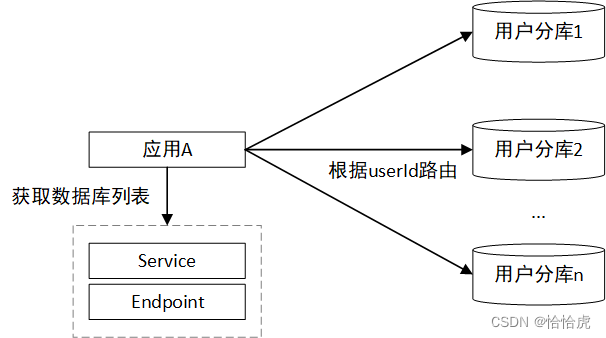

五、Headless Service

在某些应用场景中,我们仅希望Service实现服务发现,即后端服务有改变,能反映在端点列表,但是不希望使用Service的服务路由功能,即由业务获取列表后,自定义服务的负载均衡策略,决定需要连接具体哪个服务,此时,就可以使用Headless Service。一般应用在有状态服务中(在后续的Stateful控制器会介绍有状态服务类型)。

比如,有一组根据userId字段进行分片的MySql分库,当应用A读写用户信息时,根据userId的分片策略,决定路由到哪个分库。如下图所示:

下面我们将前面的service-app-sv.yaml改造成Headless Service,其yaml内如如下:

[root@k8s-master yaml]# cat service-app-headless-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: service-app-headless-service

spec:

# clusterIp为None即为headless service

clusterIP: None

selector:

app: serviceapp-deploy

ports:

- port: 80

targetPort: 8080该yaml中clusterIP:None即表示Headless Service。用前面创建的四个Pod模拟分库。执行完成后,我们看下服务列表

[root@k8s-master yaml]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service-app-headless-service ClusterIP None <none> 80/TCP 7m11s可以看到其cluster ip为None,也就是该Service无法通过ip访问。前面我们介绍过每个Service都有一个默认域名,Headless Service也不例外,该Service的域名为service-app-headless-service.default.svc.cluster.local,通过nslookup指令模拟应用A客户端获取Endpoint列表。

[root@k8s-master yaml]# kubectl exec -it dnsutils /bin/sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # nslookup service-app-headless-service.default.svc.cluster.local

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: service-app-headless-service.default.svc.cluster.local

Address: 10.244.36.119

Name: service-app-headless-service.default.svc.cluster.local

Address: 10.244.36.78

Name: service-app-headless-service.default.svc.cluster.local

Address: 10.244.36.103

Name: service-app-headless-service.default.svc.cluster.local

Address: 10.244.36.123

可以看到,应用A能正确获取后端应用IP列表,后续可以自行决策如何负载,连接哪个数据库分库。

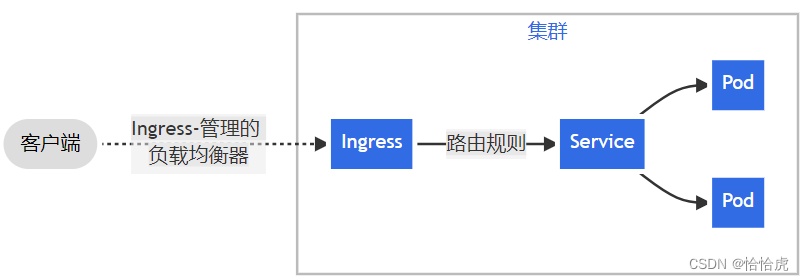

六、Ingress

前面介绍的Service都是基于IP:Port模式,即工作在四层TCP协议层。在工程实践中,我们应用更多的是需要工作在七层Http/Https层的网关服务,此时Service对象是无法满足。从1.1版本开始,K8S提供Ingress对象,通过配置转发策略,将不同的URL请求转发到后端不同的Service上,实现基于HTTP的路由机制。

其示意图如下:

Ingress需要配合Ingress控制器一起使用。Ingress主要用来配置规则,简单理解,就是Ngnix上配置的转发规则,抽象成Ingress对象,使用yaml文件创建,更改时,仅更新yaml文件即可。而Ingress Controller是真正实现负载均衡能力,它感知集群中Ingress规则变化,然后读取他,按照他自己模板生成一段 Nginx 配置,再写到 Nginx Pod 里。

简单来讲,Ingress是负责解决怎么处理的问题,Ingress Controller根据处理的策略,实现处理方式。

1、Ingress的配置规则

Ingress支持以下几种配置规则

(1)、转发到单个后端服务

这种比较简单,对于Ingress Controller IP的访问都会转发到该后端服务上,如下:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx

spec:

backend:

serviceName: nginx

servicePort: 80802、同一域名下,不同URL路径被转发到不同的服务上

yaml内容如下,在访问k8s.nginx.cn域名,根据后面的path,决定路由到对应的服务。

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx

spec:

rules:

- host: k8s.nginx.cn

http:

paths:

- path: /image

pathType: Prefix

backend:

service:

name: image

port:

number: 80

- path: /app

pathType: Prefix

backend:

service:

name: webapp

port:

number: 803、不同域名,被转发到不同的服务上

yaml内容如下,根据域名不同,转发不同的后端Service。

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx

spec:

rules:

- host: k8s.image.cn

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: image

port:

number: 80

- host: k8s.nginx.cn

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx

port:

number: 804、不使用域名转发

这个实际是第一个的变种,其yaml文件如下:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx

spec:

rules:

http:

paths:

- path: /nginx

backend:

serviceName: nginx

servicePort: 8080当访问ingress controller ip/nginx路径时,转发到后端的nginx服务上。

2、案例实践

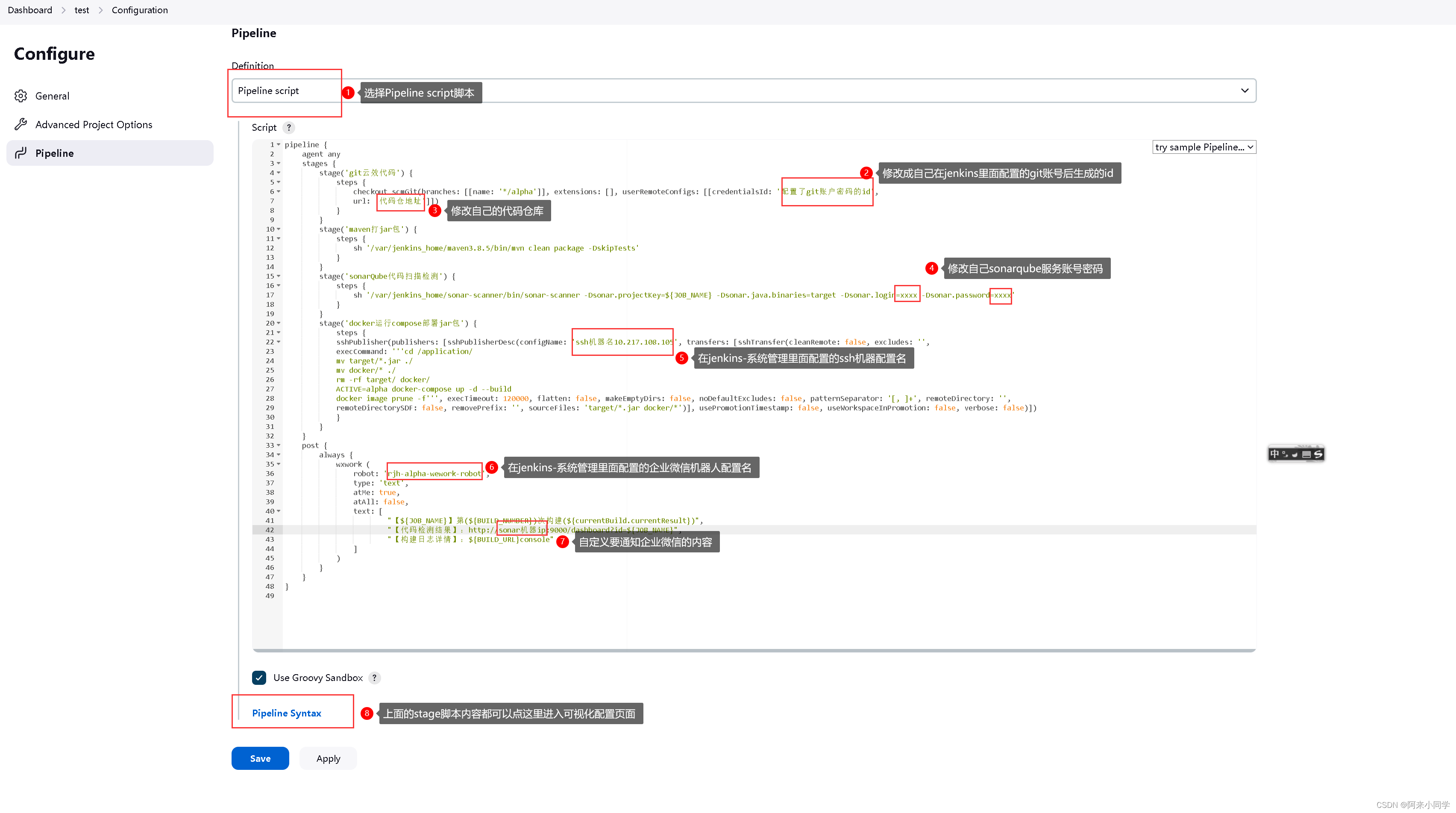

通过案例实践,看下如何实现Ingres的配置转发功能。

(1)创建Ingress controller

首先我们创建Ingress Controller, K8S目前支持AWS,GCE和Nginx控制器,我们这里使用K8s提供的Ngnix Controller 1.3.1版本

下载yaml文件后,修改其中的controller镜像地址(原有的镜像地址国内无法访问),以及增加 hostNetwork: true(打通Cluster和node的网络),demo-1.3.1.yaml文件内容参见附件。

[root@k8s-master yaml]# kubectl apply -f demo-1.3.1.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx unchanged

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured执行该文件成功后,可以看到在命名空间ingress-nginx下创建了一系列的对象。

[root@k8s-master yaml]# kubectl get pod,svc,ing,deploy -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-6sbrv 0/1 Completed 0 3m12s

pod/ingress-nginx-admission-patch-dghkc 0/1 Completed 1 3m12s

pod/ingress-nginx-controller-7dd587ccd5-8xr8j 1/1 Running 0 3m12s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller LoadBalancer 10.96.244.230 <pending> 80:32148/TCP,443:31937/TCP 3m12s

service/ingress-nginx-controller-admission ClusterIP 10.107.184.217 <none> 443/TCP 3m12s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ingress-nginx-controller 1/1 1 1 3m12s其中Ingress Controller实际是一个Pod实现的,这里就是 pod/ingress-nginx-controller-7dd587ccd5-8xr8j。

(2)创建Deployment

接着我们创建后端应用Pod,通过Nginx的镜像来模拟。

创建 命名空间为ns-ingress-test的NameSpace,并创建所属该命名空间的Deployment,yaml内容如下:

[root@k8s-master yaml]# cat ingress-pod.yaml

apiVersion: v1

kind: Namespace

metadata:

name: ns-ingress-test

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx

namespace: ns-ingress-test

labels:

app: ingress-nginx

spec:

selector:

matchLabels:

app: ingress-nginx

replicas: 2

template:

metadata:

labels:

app: ingress-nginx

spec:

containers:

- name: nginx

image: nginx:1.14.2

ports:

- containerPort: 80执行该文件后,查看pod的运行状态,完成了2个Pod的运行。

[root@k8s-master yaml]# kubectl get pods -n ns-ingress-test

NAME READY STATUS RESTARTS AGE

nginx-7d8856bf4f-cxnx8 1/1 Running 0 5m1s

nginx-7d8856bf4f-hhk7x 1/1 Running 0 5m1s(3)创建Service

接下来创建访问该应用Pod的Service对象,type为ClusterIP,内容如下:

[root@k8s-master yaml]# cat ingress-sv.yaml

apiVersion: v1

kind: Service

metadata:

name: nginx

namespace: ns-ingress-test

spec:

ports:

- port: 80

targetPort: 80

selector:

app: ingress-nginx

type: ClusterIP执行完成后,检查Service状态

[root@k8s-master yaml]# kubectl get svc -n ns-ingress-test

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx ClusterIP 10.106.13.73 <none> 80/TCP 20s到此为止,我们通过Service的IP就可以访问下nginx了。

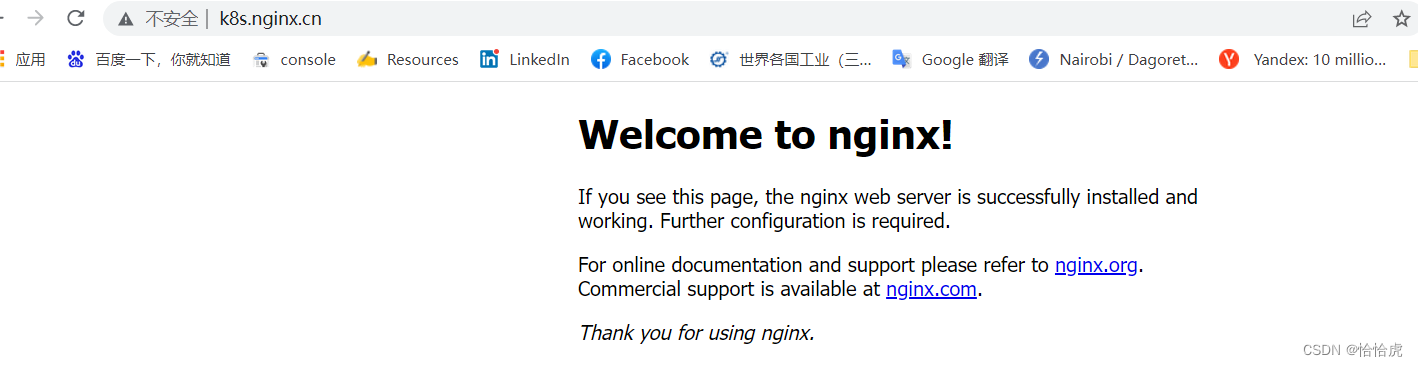

[root@k8s-master yaml]# curl 10.106.13.73:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

....可以看到访问正常,说明Nginx应用运行正常。

(4)创建Ingress

这也是关键一步,通过Ingress配置访问策略,其yaml文件内容如下

[root@k8s-master yaml]# cat ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx

namespace: ns-ingress-test

annotations:

kubernetes.io/ingress.class: "nginx" # 指定 Ingress Controller 的类型

spec:

ingressClassName: nginx

rules:

- host: k8s.nginx.cn

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx

port:

number: 80这里较简单,通过域名k8s.nginx.cn,访问后端Service,并指定ingressClassName为Ingress controller的名称。执行完成后,看下Ingress的状态。

[root@k8s-master yaml]# kubectl get ingress -A

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

ns-ingress-test nginx nginx k8s.nginx.cn 80 20m(5)检测

到此为止,我们就可以通过域名在外网访问服务了。在客户端windows的hosts文件中增加域名映射

xx.xx.xx.xx k8s.nginx.cn

xx.xx.xx.xx为所在节点的公网IP,配置完成后可以通过浏览器访问

七、总结

本章节介绍网络通讯的实现原理以及Service的相关核心概念,

1、介绍了同一Pod内容器间,同一节点不同Pod,以及不同节点Pod的通讯原理和实现。

2、Service,为同一组Pod提供统一的访问入口,通过Selector选择同组Pod,Service有四种类型,分别为ClusterIP,NodePort,LoadBalancer,ExternalNamed。Service是通过iptabels记录虚拟IP与后端Pod IP的关系,并实现流量的四层转发和负载。

3、Endpoint,Endpoint是管理后端应用的IP列表,通过Endpoint实现Service与后端应用的解耦。通过Service+手动配置Endpoint,实现对集群外应用的访问。

4、Endpointslice,在大规模集群中,Endpoint存在性能上的限制,Endpointslice通过分片管理,降低Master和各Node之间的网络传输数据量及提高整体性能。

5、Headless Service,是Service的一个变种,采用ClusterIP:None标记,一般应用于仅需要使用Service服务发现,不需要服务路由的场景中。

6、Ingress,Ingress可以实现七层路由的转发,Ingress负责规则的配置,而真正的流量转发则是由Ingress Controller实现。

附:

K8S初级入门系列之一-概述

K8S初级入门系列之二-集群搭建

K8S初级入门系列之三-Pod的基本概念和操作

K8S初级入门系列之四-Namespace/ConfigMap/Secret

K8S初级入门系列之五-Pod的高级特性

K8S初级入门系列之六-控制器(RC/RS/Deployment)

K8S初级入门系列之七-控制器(Job/CronJob/Daemonset)

K8S初级入门系列之八-网络

K8S初级入门系列之九-共享存储

K8S初级入门系列之十-控制器(StatefulSet)

K8S初级入门系列之十一-安全

K8S初级入门系列之十二-计算资源管理

附件

demo-1.3.1.yaml文件内容

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resourceNames:

- ingress-controller-leader

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-controller-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

externalTrafficPolicy: Local

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: LoadBalancer

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

spec:

hostNetwork: true

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-controller-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-create

spec:

hostNetwork: true

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission-patch

spec:

hostNetwork: true

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.3.1

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

![[JAVAee]volatile关键字](https://img-blog.csdnimg.cn/469a39b738a846bb8007c90500fd9689.png)