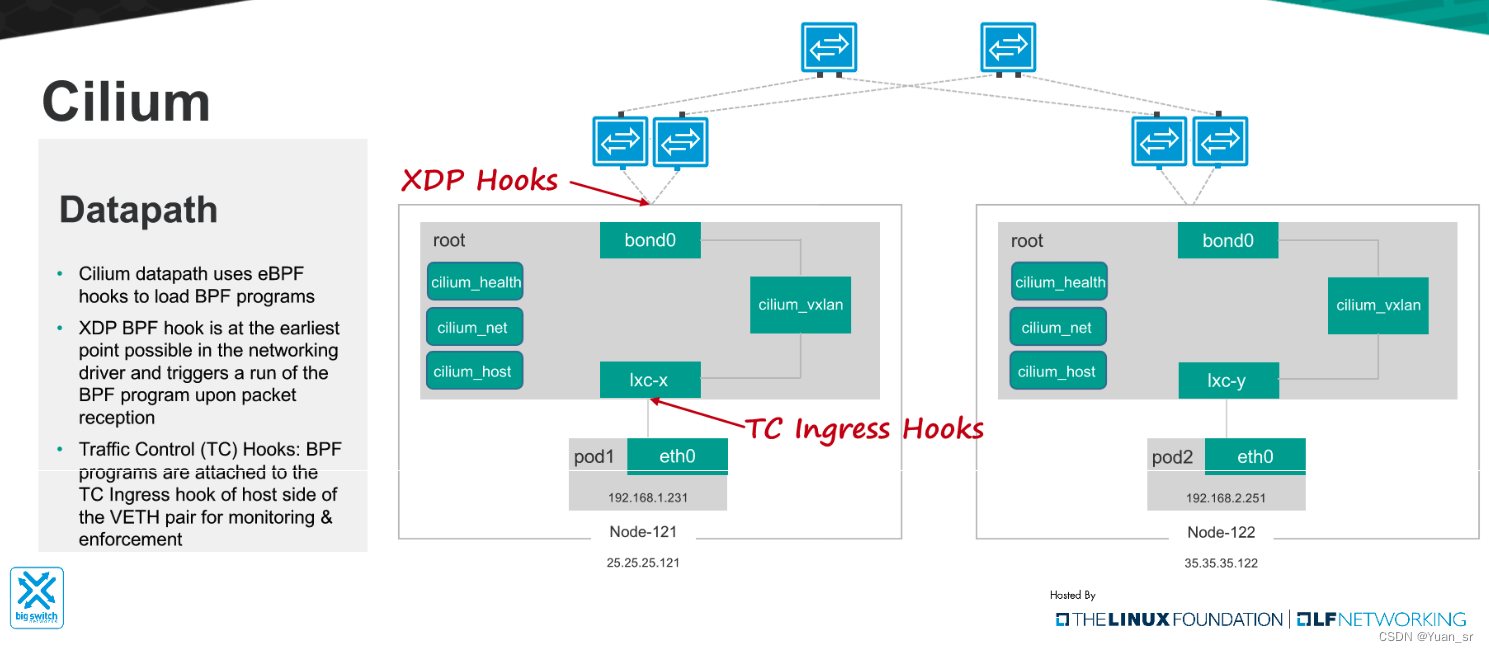

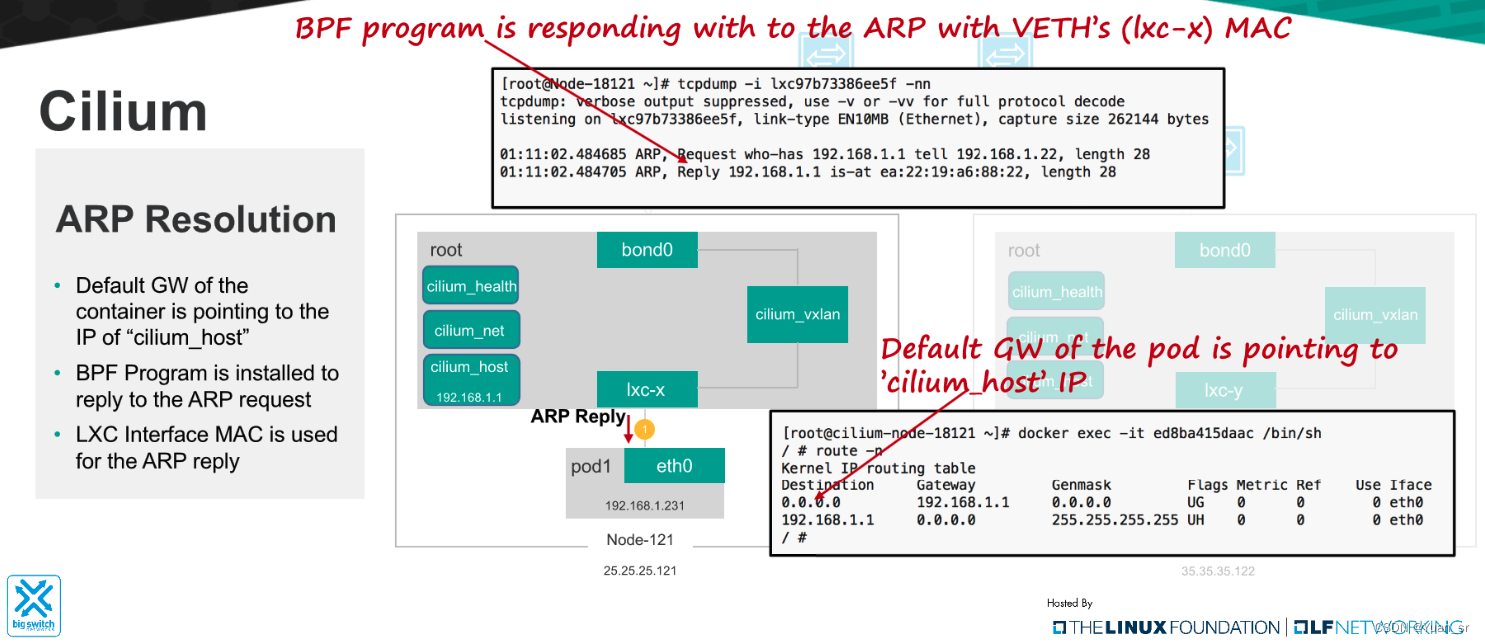

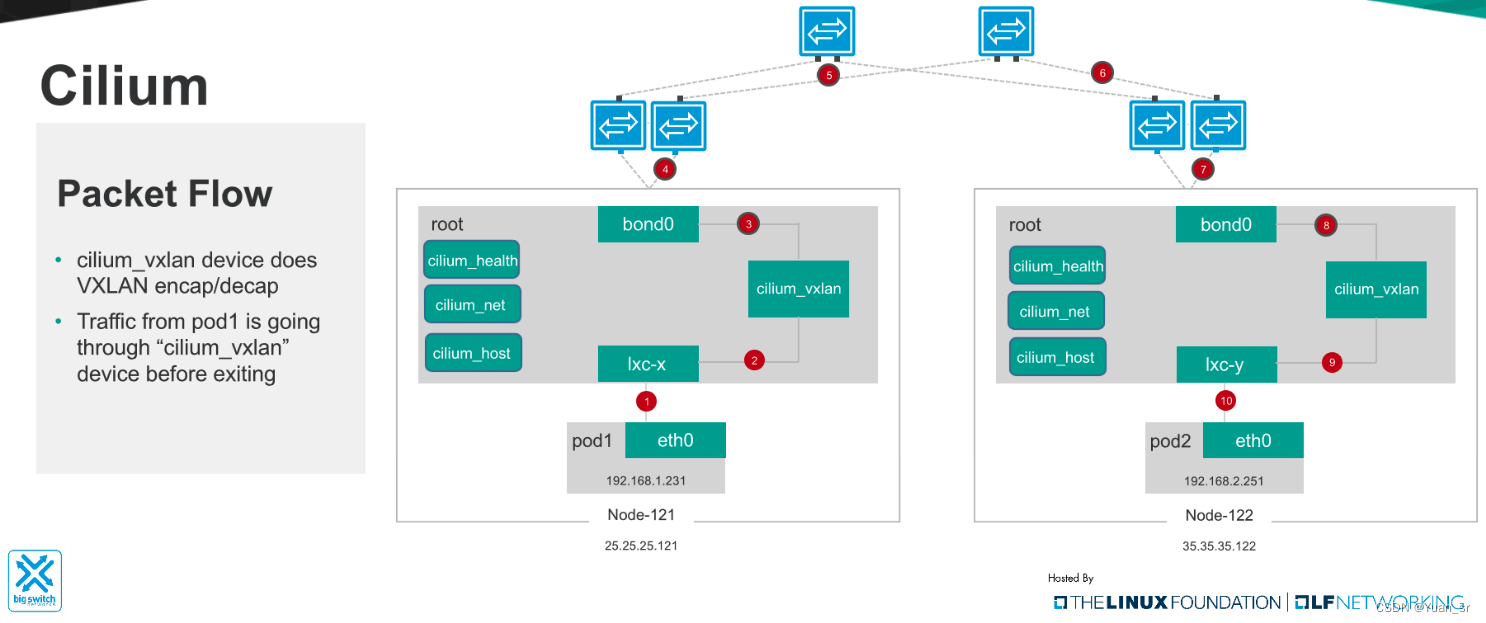

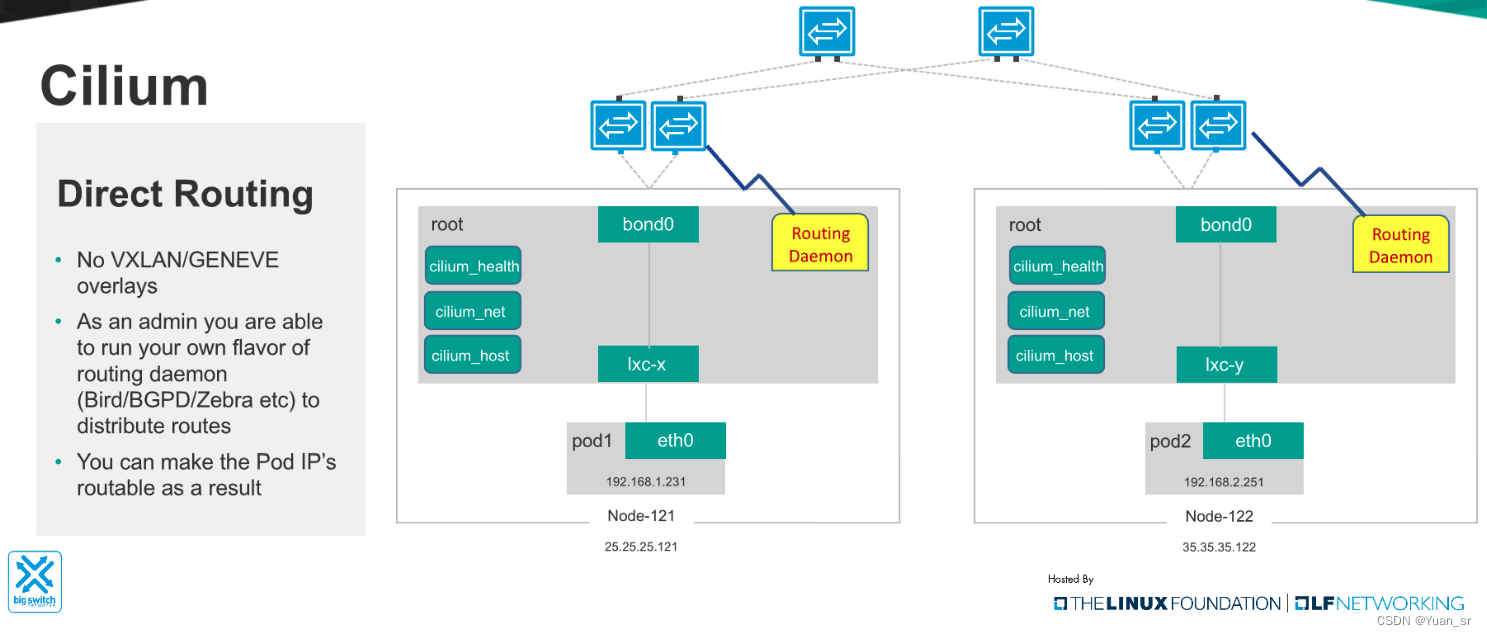

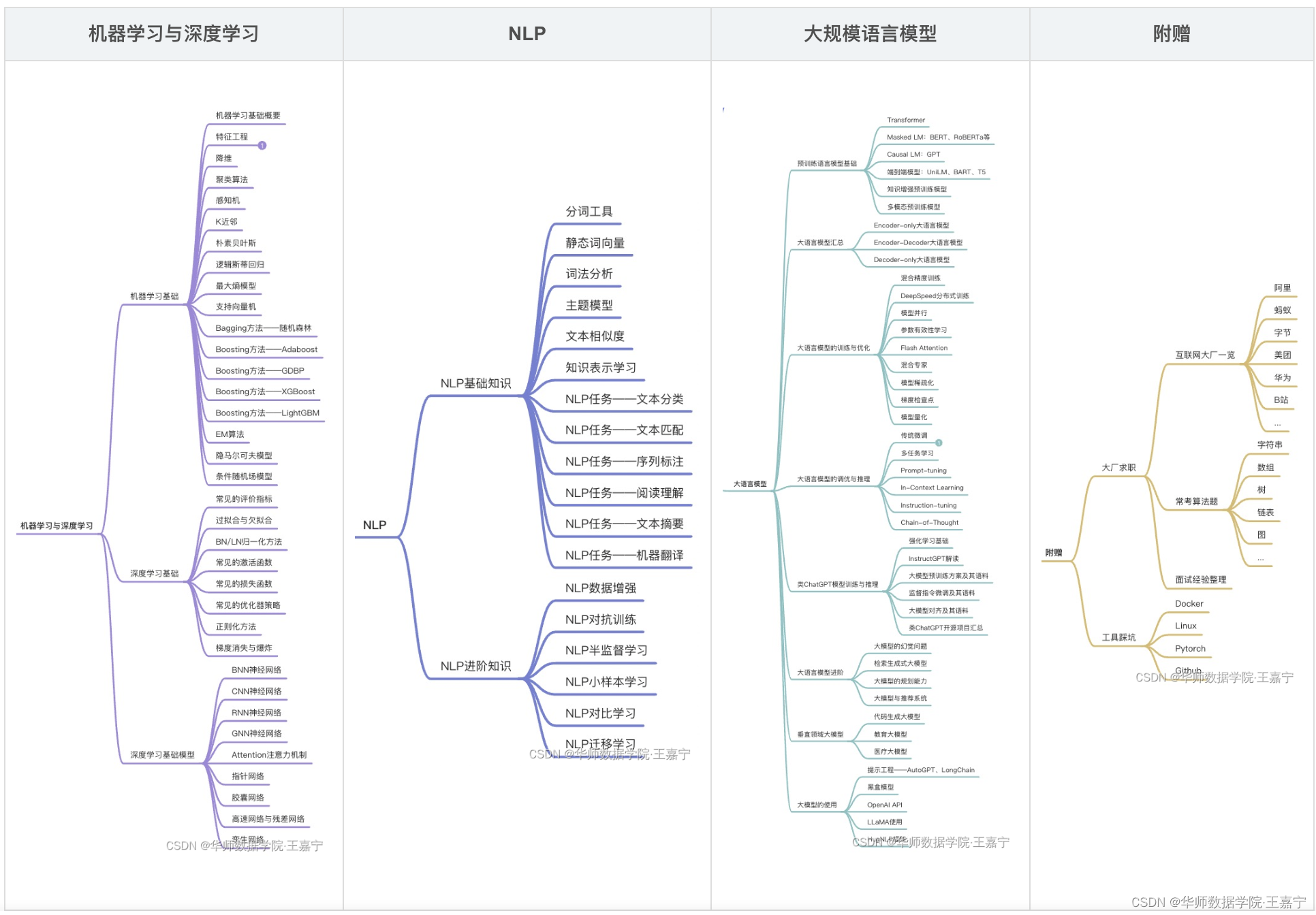

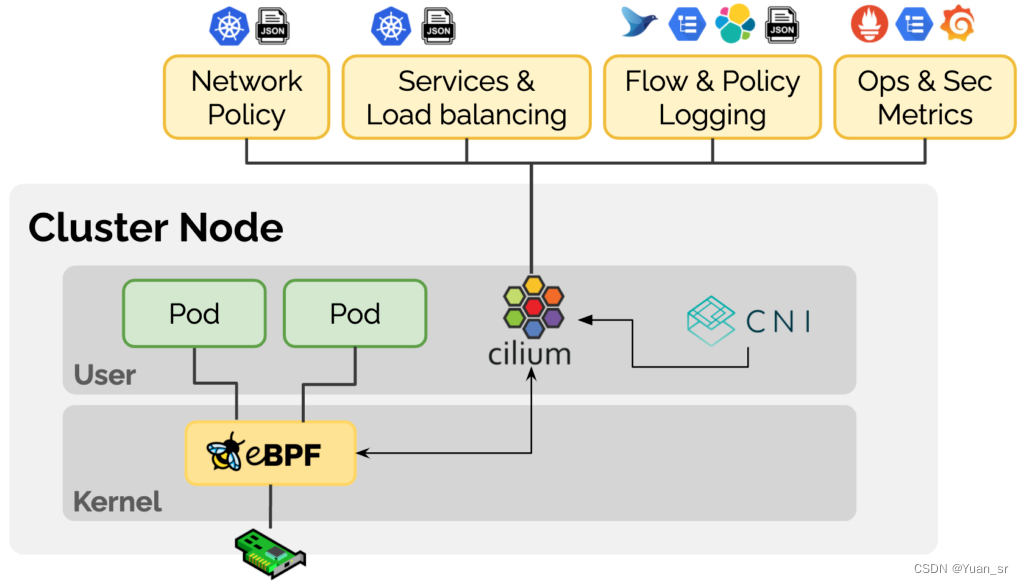

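Cilium is a Kubernetes CNI plugin designed from the outset for large-scale, highly dynamic container environments, and it adds API-aware network security management. It uses BPF, a Linux kernel technology, to define and enforce network-layer and application-layer security policies between containers and Pods. These policies are based on service, pod, or container identity rather than on traditional IP addresses. Cilium therefore decouples security control from addressing, which simplifies applying security policies in highly dynamic environments. It offers both traditional Layer 3/Layer 4 isolation and isolation at the HTTP layer for stronger security.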
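The following toy Python model makes identity-based policy concrete. It is a sketch of the idea, not Cilium's implementation. Each distinct label set gets a numeric identity, starting at 256, which is the minimum of the Global Identity Range that `cilium status` reports later in this post. Pod IPs are only used to look up that identity. Rules match on labels plus port and can add an HTTP method and path filter for the Layer 7 case. All names (`IdentityAllocator`, `Rule`, `Policy`) are hypothetical. The model denies anything no rule allows.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Tuple

Labels = FrozenSet[str]


class IdentityAllocator:
    """Hands out one numeric identity per distinct label set (toy model)."""

    def __init__(self, first_id: int = 256) -> None:
        self._next = first_id
        self._ids: Dict[Labels, int] = {}
        self.labels_of: Dict[int, Labels] = {}

    def identity_for(self, labels: Labels) -> int:
        if labels not in self._ids:
            self._ids[labels] = self._next
            self.labels_of[self._next] = labels
            self._next += 1
        return self._ids[labels]


@dataclass(frozen=True)
class Rule:
    """Allow peers whose labels include `from_labels` to reach `port`.

    An empty `http` tuple means a plain L3/L4 rule; otherwise the request
    must also match one of the (method, path prefix) pairs (L7 rule).
    """
    from_labels: Labels
    port: int
    http: Tuple[Tuple[str, str], ...] = ()


class Policy:
    def __init__(self, alloc: IdentityAllocator, rules: List[Rule]) -> None:
        self._alloc = alloc
        self._rules = rules
        self._ip_to_identity: Dict[str, int] = {}

    def pod_started(self, ip: str, labels: Labels) -> None:
        # A new or changed pod IP only updates this lookup table;
        # the rules themselves never mention IP addresses.
        self._ip_to_identity[ip] = self._alloc.identity_for(labels)

    def allowed(self, src_ip: str, port: int,
                method: Optional[str] = None, path: Optional[str] = None) -> bool:
        identity = self._ip_to_identity.get(src_ip)
        if identity is None:
            return False  # toy model: unknown sources are denied
        src_labels = self._alloc.labels_of[identity]
        for rule in self._rules:
            if rule.port != port or not rule.from_labels <= src_labels:
                continue
            if not rule.http:
                return True  # L3/L4 match is enough
            if method is not None and path is not None and any(
                    method == m and path.startswith(prefix) for m, prefix in rule.http):
                return True  # L7 match on HTTP method and path prefix
        return False


alloc = IdentityAllocator()
policy = Policy(alloc, [
    Rule(frozenset({"app=frontend"}), 80, http=(("GET", "/public"),)),
    Rule(frozenset({"app=monitor"}), 9090),
])
policy.pod_started("10.233.64.20", frozenset({"app=frontend", "env=prod"}))
policy.pod_started("10.233.64.21", frozenset({"app=monitor"}))

print(policy.allowed("10.233.64.20", 80, "GET", "/public/index.html"))  # True
print(policy.allowed("10.233.64.20", 80, "POST", "/public"))            # False
print(policy.allowed("10.233.64.21", 9090))                              # True

# The frontend is rescheduled with a new IP; no rule has to change.
policy.pod_started("10.233.64.99", frozenset({"app=frontend", "env=prod"}))
print(policy.allowed("10.233.64.99", 80, "GET", "/public"))              # True
```

Rules select peers by label subsets rather than exact label sets. As a result, extra labels such as `env=prod` do not stop a pod from matching a rule that only names `app=frontend`.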

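In addition, BPF programs can be inserted dynamically into the Linux kernel to control the system, which gives Cilium powerful security visibility. Because BPF runs inside the kernel, these changes take effect without updating application code or restarting the application services themselves.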

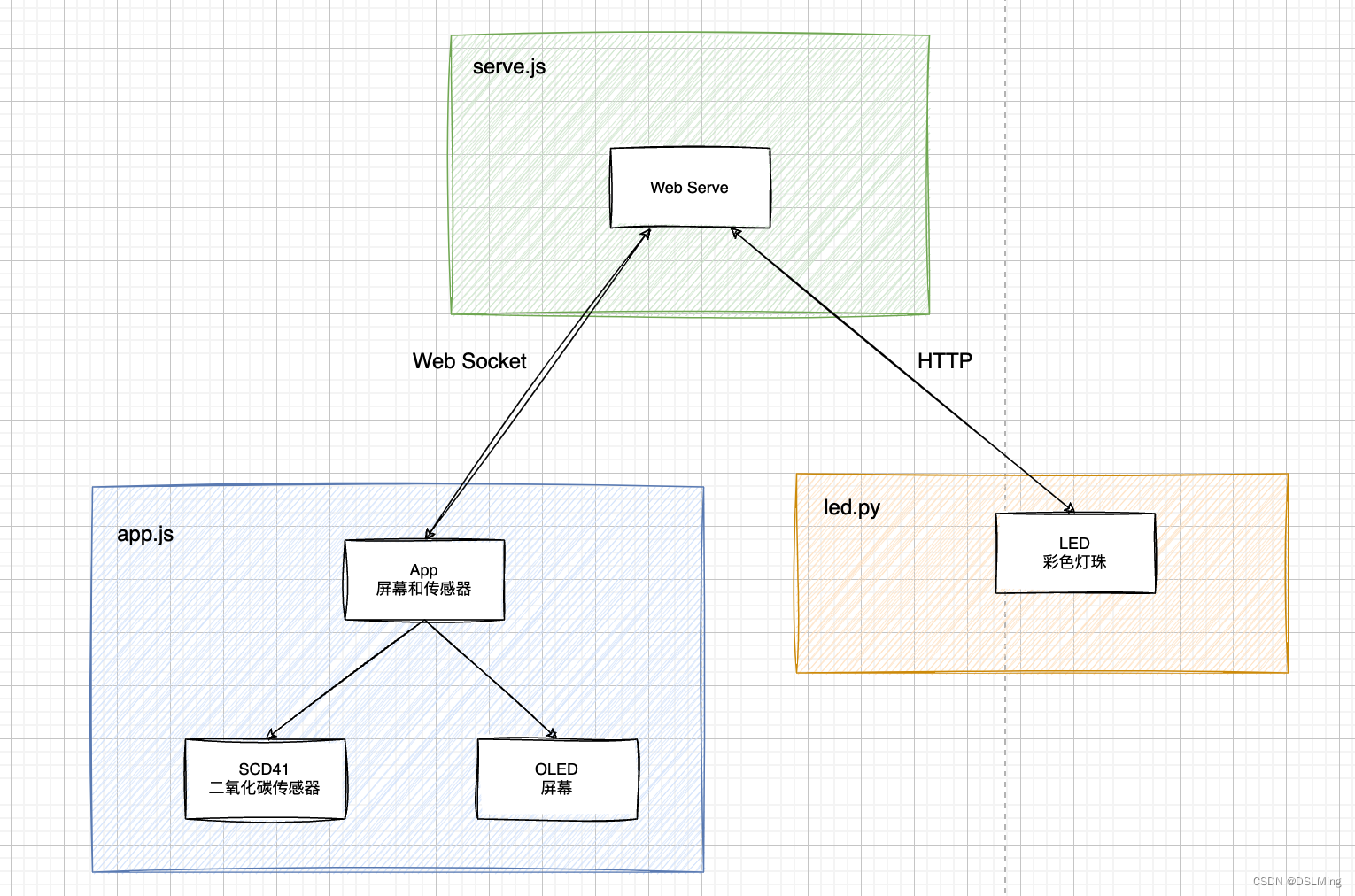

Cilium Architecture

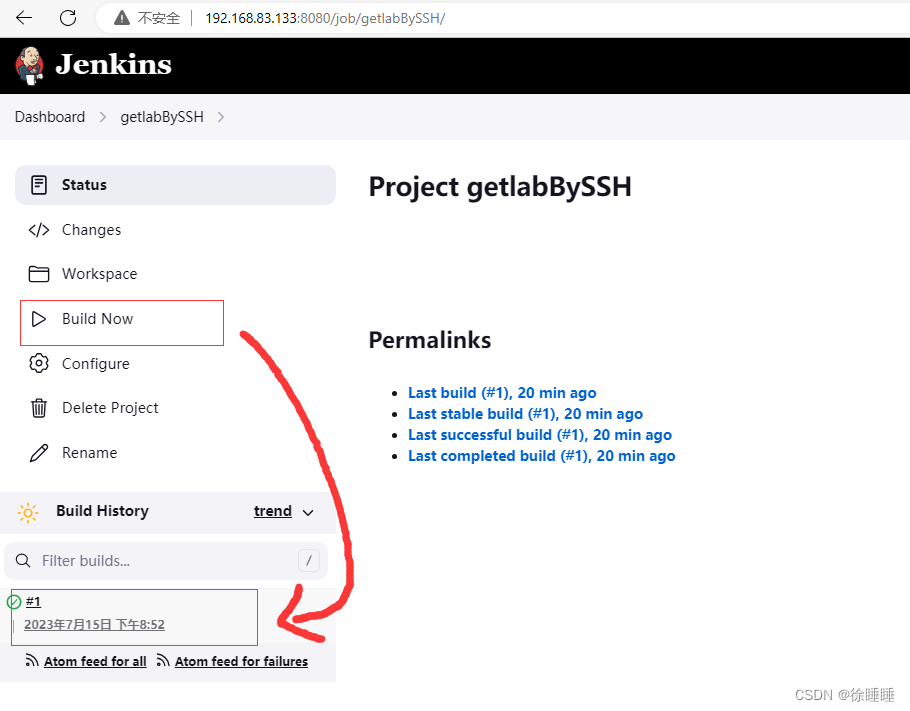

Installation Using Kubespray

Deploying Kubernetes with Kubespray (containerd + Cilium)

```
root@node1:~# kubectl get node
NAME    STATUS   ROLES           AGE    VERSION
node1   Ready    control-plane   7d6h   v1.24.6
node2   Ready    control-plane   7d6h   v1.24.6
node3   Ready    <none>          7d6h   v1.24.6
```

```
root@node1:~# kubectl get pods -o wide -nkube-system | grep cilium
cilium-operator-f6648bc78-5qqn8   1/1   Running   109 (3h58m ago)   7d4h   192.168.64.10   node3   <none>           <none>
cilium-operator-f6648bc78-v44zf   1/1   Running   120               7d4h   192.168.64.9    node2   <none>           <none>
cilium-qwkkf                      1/1   Running   11 (3d4h ago)     7d4h   192.168.64.8    node1   <none>           <none>
cilium-s4rdr                      1/1   Running   5 (3d4h ago)      7d4h   192.168.64.10   node3   <none>           <none>
cilium-v2dnw                      1/1   Running   15 (3d4h ago)     7d4h   192.168.64.9    node2   <none>           <none>
```
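In the RESTARTS column above, the two `cilium-operator` replicas show 109 and 120 restarts, far more than the agents. The following Python sketch pulls that column out of the text output to flag such pods. The helpers `restart_counts` and `high_restarts` are hypothetical, and the threshold of 50 is arbitrary. The sketch assumes the column order printed by `kubectl get pods -o wide`. For scripting, `kubectl get pods -o json` with each container's `restartCount` field is the sturdier source.

```python
import re
from typing import Dict

# Columns of `kubectl get pods -o wide`: NAME READY STATUS RESTARTS AGE IP NODE ...
# RESTARTS may carry a suffix such as "(3h58m ago)"; only the leading number is kept.
POD_LINE = re.compile(r"^(?P<name>\S+)\s+\d+/\d+\s+\S+\s+(?P<restarts>\d+)\b")


def restart_counts(text: str) -> Dict[str, int]:
    """Map pod name -> restart count for every pod row in `text`."""
    counts: Dict[str, int] = {}
    for line in text.splitlines():
        match = POD_LINE.match(line.strip())
        if match:  # header and prompt lines have no READY "x/y" field, so they are skipped
            counts[match.group("name")] = int(match.group("restarts"))
    return counts


def high_restarts(text: str, threshold: int = 50) -> Dict[str, int]:
    """Return only the pods that restarted more than `threshold` times."""
    return {name: n for name, n in restart_counts(text).items() if n > threshold}


SAMPLE = """\
cilium-operator-f6648bc78-5qqn8 1/1 Running 109 (3h58m ago) 7d4h 192.168.64.10 node3 <none> <none>
cilium-operator-f6648bc78-v44zf 1/1 Running 120 7d4h 192.168.64.9 node2 <none> <none>
cilium-qwkkf 1/1 Running 11 (3d4h ago) 7d4h 192.168.64.8 node1 <none> <none>
cilium-s4rdr 1/1 Running 5 (3d4h ago) 7d4h 192.168.64.10 node3 <none> <none>
cilium-v2dnw 1/1 Running 15 (3d4h ago) 7d4h 192.168.64.9 node2 <none> <none>
"""

print(high_restarts(SAMPLE))
# {'cilium-operator-f6648bc78-5qqn8': 109, 'cilium-operator-f6648bc78-v44zf': 120}
```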

```
root@node1:~# kubectl exec -it -n kube-system cilium-qwkkf -- cilium status --verbose
Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), apply-sysctl-overwrites (init), clean-cilium-state (init)
KVStore:                Ok   etcd: 3/3 connected, lease-ID=797e89450e0683a2, lock lease-ID=3f888935025bf628, has-quorum=true: https://192.168.64.10:2379 - 3.5.4; https://192.168.64.8:2379 - 3.5.4 (Leader); https://192.168.64.9:2379 - 3.5.4
Kubernetes:             Ok   1.24 (v1.24.6) [linux/amd64]
Kubernetes APIs:        ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumNetworkPolicy", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]
KubeProxyReplacement:   Probe   [enp0s2 192.168.64.8]
Host firewall:          Disabled
CNI Chaining:           none
Cilium:                 Ok   1.12.1 (v1.12.1-4c9a630)
NodeMonitor:            Disabled
Cilium health daemon:   Ok
IPAM:                   IPv4: 4/254 allocated from 10.233.64.0/24,
Allocated addresses:
  10.233.64.220 (kube-system/coredns-665c4cc98d-l7mwz[restored])
  10.233.64.236 (health)
  10.233.64.54 (kube-system/dns-autoscaler-6567c8b74f-ql5bw[restored])
  10.233.64.93 (router)
BandwidthManager:       Disabled
Host Routing:           BPF
Masquerading:           BPF   [enp0s2]   10.233.64.0/24 [IPv4: Enabled, IPv6: Disabled]
Clock Source for BPF:   jiffies   [250 Hz]
Controller Status:      38/38 healthy
  Name                                          Last success     Last error      Count   Message
  bpf-map-sync-cilium_lxc                       2s ago           never           0       no error
  cilium-health-ep                              24s ago          76h35m46s ago   0       no error
  dns-garbage-collector-job                     30s ago          never           0       no error
  endpoint-1304-regeneration-recovery           never            never           0       no error
  endpoint-2383-regeneration-recovery           never            never           0       no error
  endpoint-2712-regeneration-recovery           never            never           0       no error
  endpoint-356-regeneration-recovery            never            never           0       no error
  endpoint-gc                                   35s ago          never           0       no error
  ipcache-bpf-garbage-collection                4m55s ago        never           0       no error
  ipcache-inject-labels                         3h35m34s ago     76h39m23s ago   0       no error
  k8s-heartbeat                                 7s ago           never           0       no error
  kvstore-etcd-lock-session-renew               12h20m8s ago     never           0       no error
  kvstore-etcd-session-renew                    never            never           0       no error
  kvstore-locks-gc                              26s ago          never           0       no error
  kvstore-sync-store-cilium/state/nodes/v1      30s ago          never           0       no error
  metricsmap-bpf-prom-sync                      2s ago           never           0       no error
  propagating local node change to kv-store     76h39m22s ago    never           0       no error
  resolve-identity-1304                         2m16s ago        never           0       no error
  restoring-ep-identity (2383)                  76h38m9s ago     never           0       no error
  restoring-ep-identity (2712)                  76h38m10s ago    never           0       no error
  restoring-ep-identity (356)                   76h38m9s ago     never           0       no error
  sync-IPv4-identity-mapping (1304)             1m49s ago        never           0       no error
  sync-IPv4-identity-mapping (2383)             4m45s ago        never           0       no error
  sync-IPv4-identity-mapping (356)              4m46s ago        never           0       no error
  sync-endpoints-and-host-ips                   22s ago          never           0       no error
  sync-lb-maps-with-k8s-services                76h38m9s ago     never           0       no error
  sync-node-with-ciliumnode (node1)             76h39m24s ago    never           0       no error
  sync-policymap-1304                           34s ago          never           0       no error
  sync-policymap-2383                           31s ago          never           0       no error
  sync-policymap-2712                           30s ago          never           0       no error
  sync-policymap-356                            30s ago          never           0       no error
  sync-to-k8s-ciliumendpoint (1304)             11s ago          never           0       no error
  sync-to-k8s-ciliumendpoint (2383)             9s ago           never           0       no error
  sync-to-k8s-ciliumendpoint (2712)             10s ago          never           0       no error
  sync-to-k8s-ciliumendpoint (356)              9s ago           never           0       no error
  template-dir-watcher                          never            never           0       no error
  waiting-initial-global-identities-ep (2383)   76h38m9s ago     never           0       no error
  waiting-initial-global-identities-ep (356)    76h38m9s ago     never           0       no error
Proxy Status:           OK, ip 10.233.64.93, 0 redirects active on ports 10000-20000
Global Identity Range:  min 256, max 65535
Hubble:                 Disabled
KubeProxyReplacement Details:
  Status:                 Probe
  Socket LB:              Enabled
  Socket LB Protocols:    TCP, UDP
  Devices:                enp0s2 192.168.64.8
  Mode:                   SNAT
  Backend Selection:      Random
  Session Affinity:       Enabled
  Graceful Termination:   Enabled
  NAT46/64 Support:       Disabled
  XDP Acceleration:       Disabled
  Services:
  - ClusterIP:      Enabled
  - NodePort:       Enabled (Range: 30000-32767)
  - LoadBalancer:   Enabled
  - externalIPs:    Enabled
  - HostPort:       Enabled
BPF Maps:               dynamic sizing: on (ratio: 0.002500)
  Name                          Size
  Non-TCP connection tracking   262144
  TCP connection tracking       524288
  Endpoint policy               65535
  Events                        2
  IP cache                      512000
  IP masquerading agent         16384
  IPv4 fragmentation            8192
  IPv4 service                  65536
  IPv6 service                  65536
  IPv4 service backend          65536
  IPv6 service backend          65536
  IPv4 service reverse NAT      65536
  IPv6 service reverse NAT      65536
  Metrics                       1024
  NAT                           131072
  Neighbor table                131072
  Global policy                 16384
  Per endpoint policy           65536
  Session affinity              65536
  Signal                        2
  Sockmap                       65535
  Sock reverse NAT              65536
  Tunnel                        65536
Encryption:             Disabled
Cluster health:         3/3 reachable   (2023-07-15T16:56:09Z)
  Name                IP              Node        Endpoints
  node1 (localhost)   192.168.64.8    reachable   reachable
  node2               192.168.64.9    reachable   reachable
  node3               192.168.64.10   reachable   reachable
```
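A quick way to read the status output above is to run three checks:

- every controller is healthy,
- every node is reachable,
- every allocated address falls inside the node's pod CIDR.

The figure 254 equals the number of usable host addresses in a /24: 2^8 = 256 addresses minus the network and broadcast addresses. The Python sketch below runs those checks on saved text. `check_cilium_status` is a hypothetical helper. It parses human-readable output whose layout may change between Cilium versions, so treat it as a convenience, not a stable interface.

```python
import ipaddress
import re
from typing import List

# Hypothetical helper: sanity-checks the text printed by `cilium status --verbose`.
# The regexes follow the line layout shown in this post (Cilium 1.12.1).


def _all_of(pattern: str, text: str) -> bool:
    """True if `pattern` captures two equal numbers, e.g. "38/38"."""
    match = re.search(pattern, text)
    return match is not None and match.group(1) == match.group(2)


def check_cilium_status(text: str) -> List[str]:
    """Return human-readable problems; an empty list means nothing was flagged."""
    problems: List[str] = []
    if not _all_of(r"Controller Status:\s+(\d+)/(\d+) healthy", text):
        problems.append("not all controllers are healthy")
    if not _all_of(r"Cluster health:\s+(\d+)/(\d+) reachable", text):
        problems.append("not all nodes are reachable")

    ipam = re.search(r"IPAM:\s+IPv4: (\d+)/(\d+) allocated from ([0-9./]+)", text)
    if ipam is None:
        problems.append("IPAM line not found")
        return problems
    used, capacity = int(ipam.group(1)), int(ipam.group(2))
    pool = ipaddress.ip_network(ipam.group(3))

    # Lines under "Allocated addresses:" look like "  10.233.64.93 (router)".
    allocated = re.findall(r"^[ \t]*(\d+\.\d+\.\d+\.\d+) \(", text, re.MULTILINE)
    if len(allocated) != used:
        problems.append(f"IPAM reports {used} addresses but lists {len(allocated)}")
    for addr in allocated:
        if ipaddress.ip_address(addr) not in pool:
            problems.append(f"{addr} is outside the pod CIDR {pool}")
    if used >= capacity:
        problems.append(f"pod CIDR {pool} has no free addresses")
    return problems


SAMPLE = """\
IPAM:                   IPv4: 4/254 allocated from 10.233.64.0/24,
Allocated addresses:
  10.233.64.220 (kube-system/coredns-665c4cc98d-l7mwz[restored])
  10.233.64.236 (health)
  10.233.64.54 (kube-system/dns-autoscaler-6567c8b74f-ql5bw[restored])
  10.233.64.93 (router)
Controller Status:      38/38 healthy
Cluster health:         3/3 reachable   (2023-07-15T16:56:09Z)
"""

print(check_cilium_status(SAMPLE))  # []
# Usable hosts in the /24: 256 addresses minus network and broadcast.
print(ipaddress.ip_network("10.233.64.0/24").num_addresses - 2)  # 254
```

To use it, save the output with `kubectl exec -n kube-system cilium-qwkkf -- cilium status --verbose > status.txt`. Then call `check_cilium_status(open("status.txt").read())`.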

All In CNI