文章目录

- 源码资源下载

- Python环境

- 试玩controlnet

- 训练

- 数据准备

- 选一个Stable diffusion模型

- 开始训练

第一篇:https://qq742971636.blog.csdn.net/article/details/131531168

源码资源下载

目前 ControlNet 1.1 还在建设,本文这里使用源码 https://github.com/lllyasviel/ControlNet/tree/main。

此外还需要下载模型文件:https://huggingface.co/lllyasviel/ControlNet

发布在huggingface了,如何下载huggingface的模型文件,使用指令:

$ git lfs install

$ git clone https://huggingface.co/lllyasviel/ControlNet

详细log:

$ git lfs install

Git LFS initialized.

kevin@DESKTOP-J33EKGT MINGW64 /f

$ git clone https://huggingface.co/lllyasviel/ControlNet

Cloning into 'ControlNet'...

remote: Enumerating objects: 52, done.

remote: Counting objects: 100% (52/52), done.

remote: Compressing objects: 100% (33/33), done.

remote: Total 52 (delta 16), reused 52 (delta 16), pack-reused 0

Unpacking objects: 100% (52/52), 7.06 KiB | 141.00 KiB/s, done.

Filtering content: 100% (16/16), 11.80 GiB | 6.47 MiB/s, done.

Encountered 8 file(s) that may not have been copied correctly on Windows:

models/control_sd15_seg.pth

models/control_sd15_hed.pth

models/control_sd15_normal.pth

models/control_sd15_canny.pth

models/control_sd15_scribble.pth

models/control_sd15_mlsd.pth

models/control_sd15_depth.pth

models/control_sd15_openpose.pth

See: `git lfs help smudge` for more details.

Windows 的Git不能超过4GB,已知的BUG。所以这八个文件直接点下载吧,或者用Linux的Git去下载。

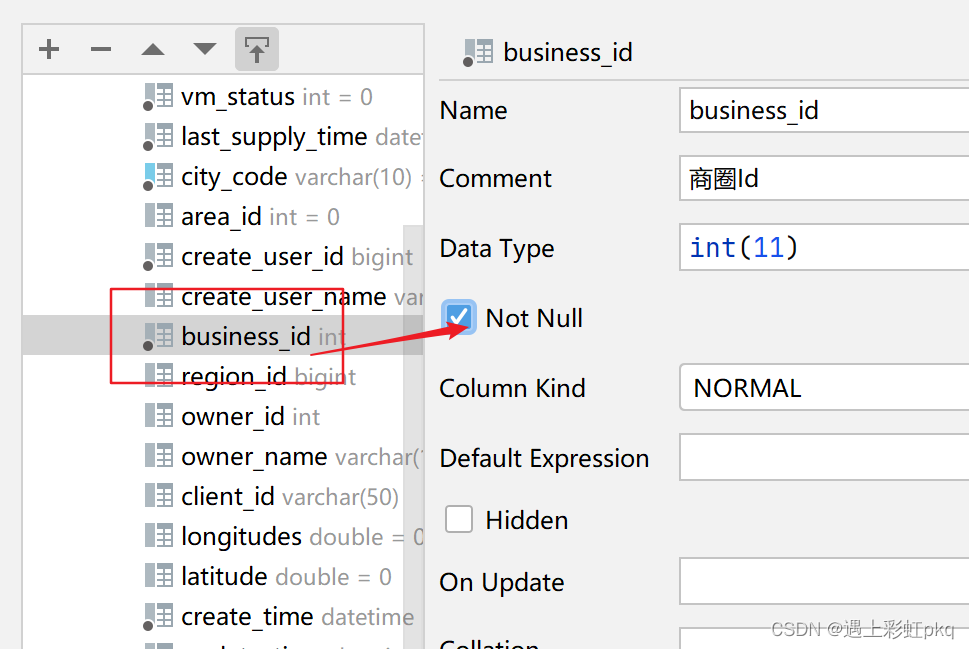

最终整个工程如下:

Python环境

用aliyun镜像才能安装完。这里先安装了一下diffusers。

conda create -n py38_diffusers python=3.8 -y

conda activate py38_diffusers

conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.6 -c pytorch -c conda-forge -y

cd diffusers-main/

pip install -e .

cd examples/

cd controlnet/

pip install -r requirements.txt

accelerate config default

cd /ssd/xiedong/workplace/ControlNet

pip install tb-nightly -i https://mirrors.aliyun.com/pypi/simple # 用aliyun镜像才能安装完

pip install -r req.txt # 用清华镜像快一些

req.txt 如下:

gradio==3.16.2

albumentations==1.3.0

opencv-python

opencv-contrib-python==4.3.0.36

imageio==2.9.0

imageio-ffmpeg==0.4.2

pytorch-lightning==1.5.0

omegaconf==2.1.1

test-tube>=0.7.5

streamlit==1.12.1

einops==0.3.0

transformers==4.19.2

webdataset==0.2.5

kornia==0.6

open_clip_torch==2.0.2

invisible-watermark>=0.1.5

streamlit-drawable-canvas==0.8.0

torchmetrics==0.6.0

timm==0.6.12

addict==2.4.0

yapf==0.32.0

prettytable==3.6.0

safetensors==0.2.7

basicsr==1.4.2

可以选择性安装:

pip install xformers

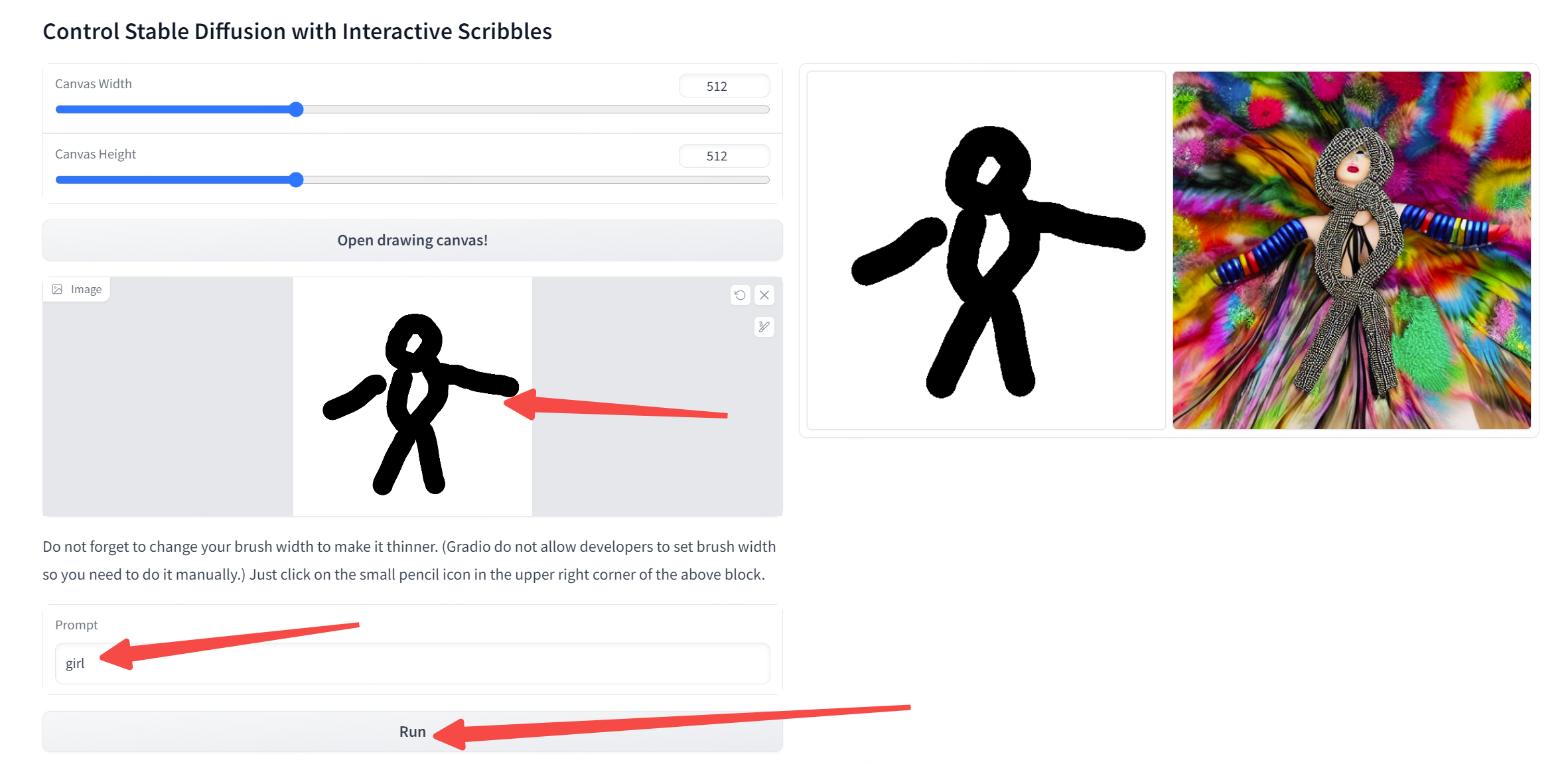

试玩controlnet

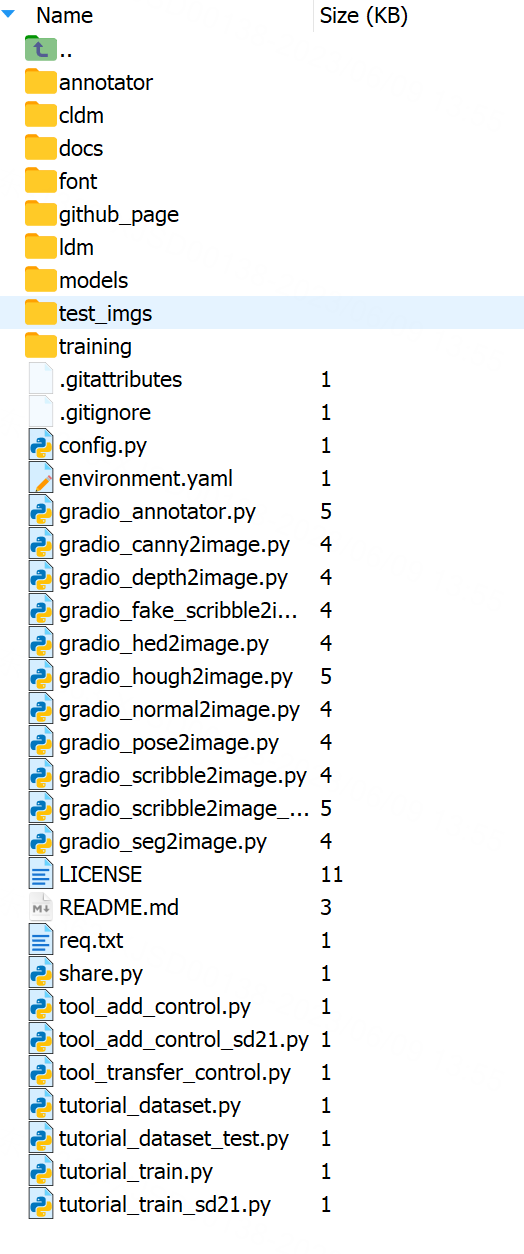

执行:

python gradio_scribble2image_interactive.py

网络问题,可能一些脚本会有问题,我这里没问题:

访问http://127.0.0.1:7860/,可以得到:

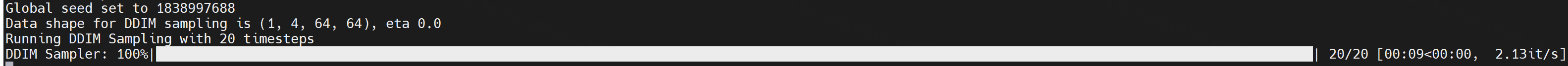

执行过程:

gpu显存占用:8847MiB

训练

数据准备

我的数据是准备训练scribble:

fake_image2scribble.py

from share import *

import config

import os

os.environ["CUDA_VISIBLE_DEVICES"] = '0'

import cv2

import einops

import gradio as gr

import numpy as np

import torch

import random

from pytorch_lightning import seed_everything

from annotator.util import resize_image, HWC3

from annotator.hed import HEDdetector, nms

from cldm.model import create_model, load_state_dict

from cldm.ddim_hacked import DDIMSampler

apply_hed = HEDdetector()

def image2hed(input_image):

input_image = HWC3(input_image)

detected_map = apply_hed(resize_image(input_image, 512))

detected_map = HWC3(detected_map)

img = resize_image(input_image, 512)

H, W, C = img.shape

detected_map = cv2.resize(detected_map, (W, H), interpolation=cv2.INTER_LINEAR)

detected_map = nms(detected_map, 127, 3.0)

detected_map = cv2.GaussianBlur(detected_map, (0, 0), 3.0)

detected_map[detected_map > 4] = 255

detected_map[detected_map < 255] = 0

hed_result = 255 - detected_map

return hed_result

if __name__ == "__main__":

target = r'/ssd/xiedong/datasets/back_img_nohaveback'

save_img = r'/ssd/xiedong/datasets/back_img_nohaveback_scribble'

if not os.path.exists(save_img):

os.makedirs(save_img)

for i in os.listdir(target):

img = cv2.imread(os.path.join(target, i))

hed_result = image2hed(img)

cv2.imwrite(os.path.join(save_img, i), hed_result)

print("done")

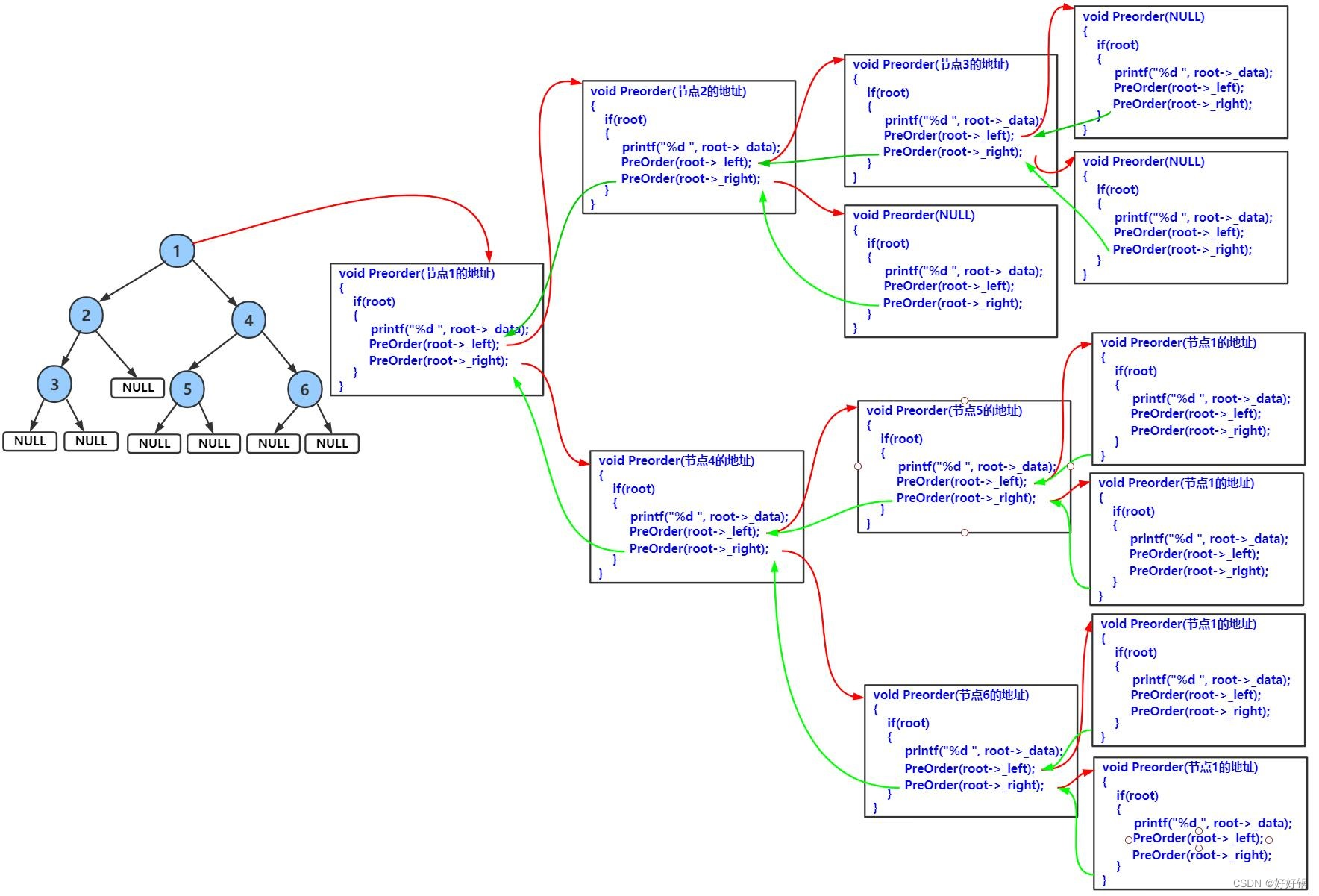

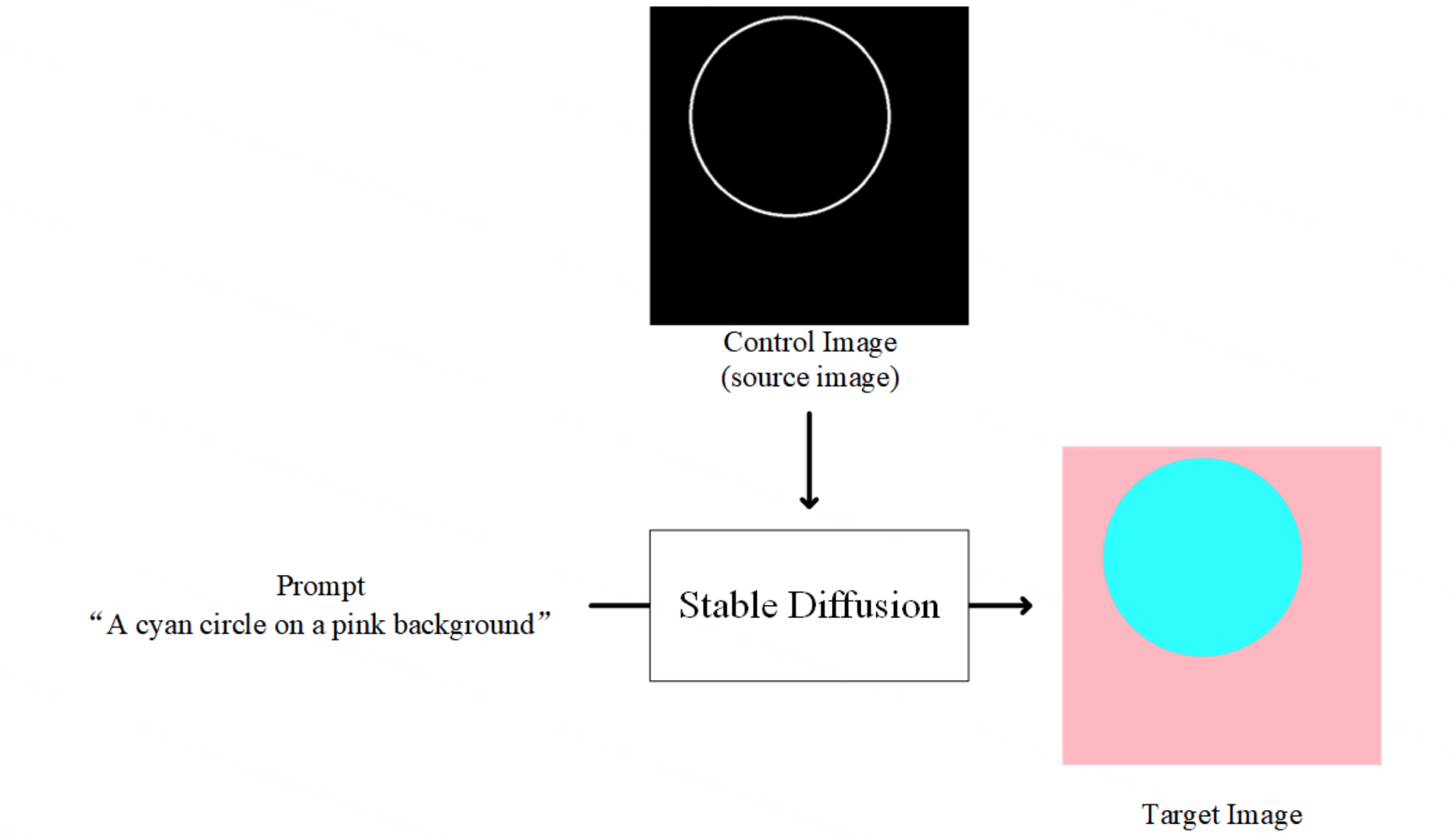

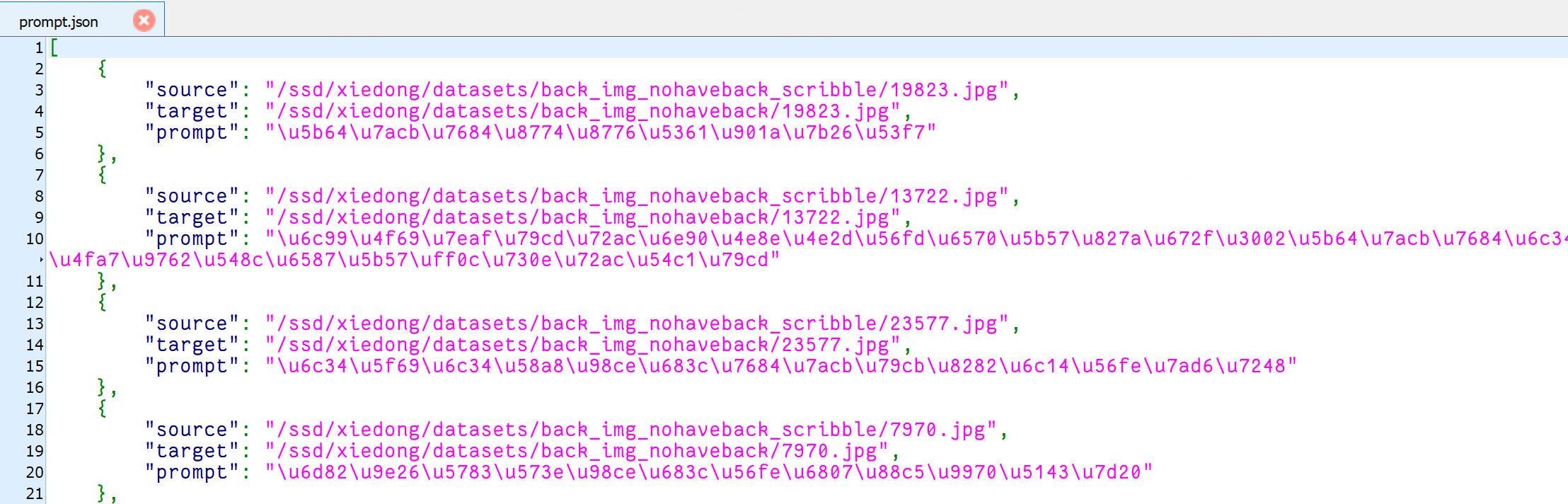

官网教程示意图如下,我这里准备用scribble作为source image,Prompt我也自己准备了。

官网的训练教程:

https://github.com/lllyasviel/ControlNet/blob/main/docs/train.md

官网的数据 fill50k数据:

https://huggingface.co/datasets/fusing/fill50k

无所谓,最终搞个json出来:

再写个dataset loader:

import json

import cv2

import numpy as np

from torch.utils.data import Dataset

class MyDataset(Dataset):

def __init__(self):

self.data = []

with open('./prompt.json', 'r') as f:

self.data = json.load(f)

def __len__(self):

return len(self.data)

def __getitem__(self, idx):

item = self.data[idx]

source_filename = item['source']

target_filename = item['target']

prompt = item['prompt']

source = cv2.imread(source_filename)

target = cv2.imread(target_filename)

# Do not forget that OpenCV read images in BGR order.

source = cv2.cvtColor(source, cv2.COLOR_BGR2RGB)

target = cv2.cvtColor(target, cv2.COLOR_BGR2RGB)

# Normalize source images to [0, 1].

source = source.astype(np.float32) / 255.0

# Normalize target images to [-1, 1].

target = (target.astype(np.float32) / 127.5) - 1.0

return dict(jpg=target, txt=prompt, hint=source)

if __name__ == '__main__':

# 打印第一个

dataset = MyDataset()

print(dataset[0])

选一个Stable diffusion模型

Then you need to decide which Stable Diffusion Model you want to control. In this example, we will just use standard SD1.5. You can download it from the official page of Stability. You want the file “v1-5-pruned.ckpt”.

(Or “v2-1_512-ema-pruned.ckpt” if you are using SD2.)

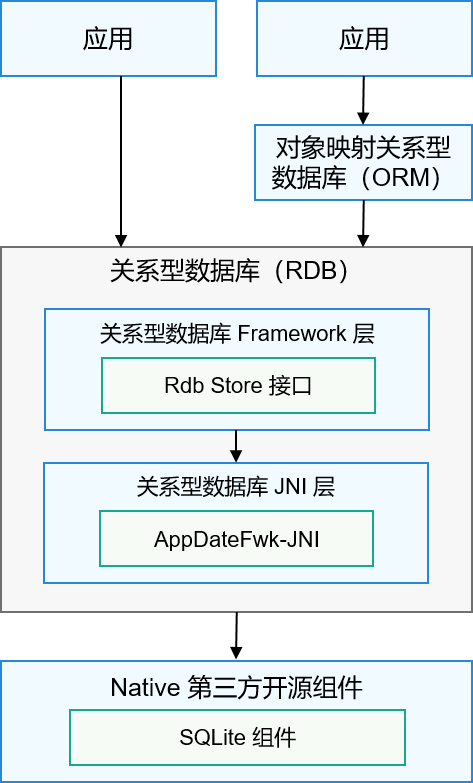

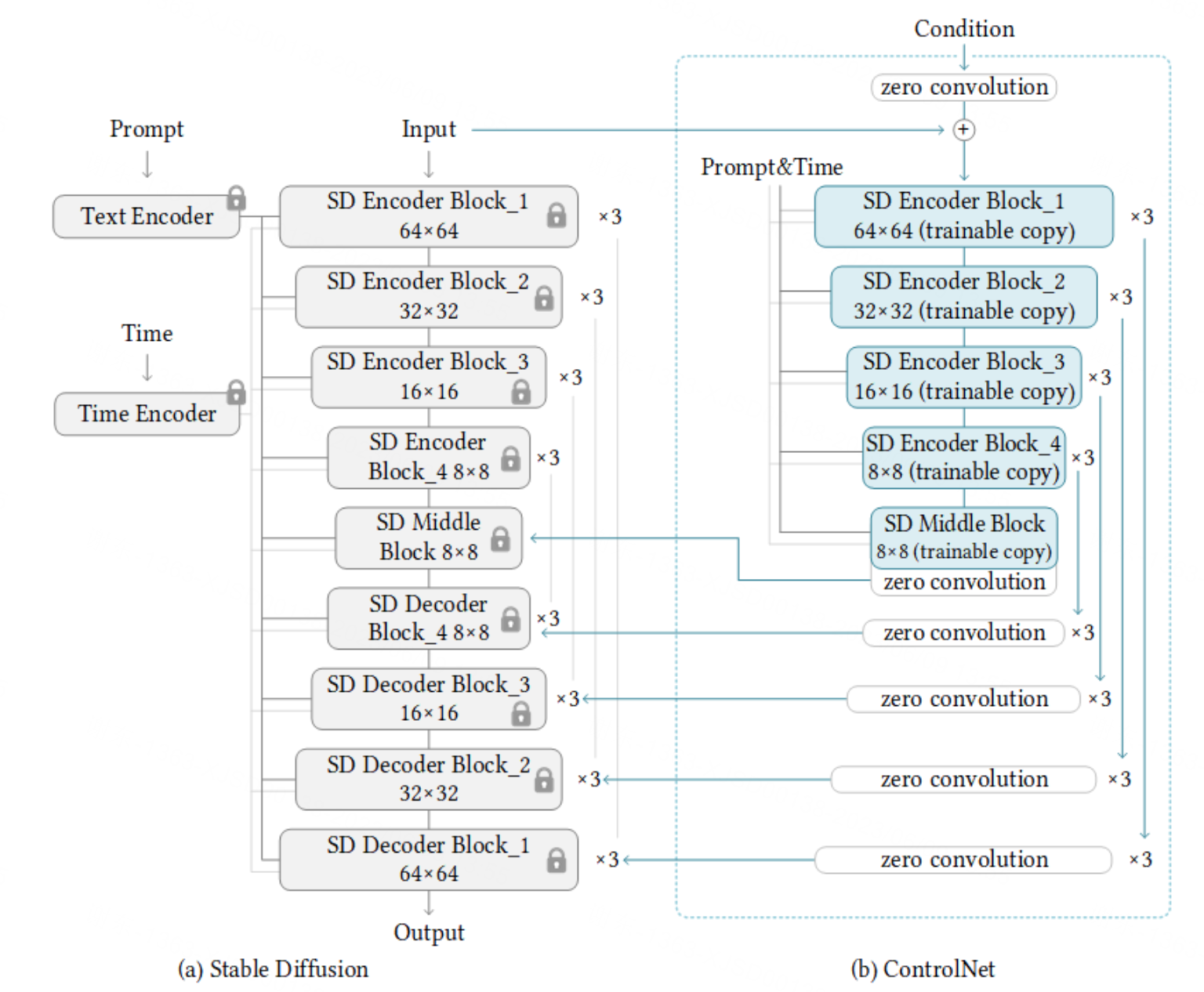

然后你需要连接一个控制网到SD模型。架构是

请注意,ControlNet内的所有权重也都是从SD复制的,因此没有任何层是从头开始训练的,并且您仍在微调整个模型。

我们为您提供了一个简单的脚本来轻松实现这一点。如果您的SD文件名为“./models/v1-5-pruned.ckpt”,并且您希望脚本将处理后的模型(SD+ControlNet)保存在位置“./models/control_sd15_ini.ckpt”,您只需运行:

【国内网络环境问题,可能要执行很多次,最快的解决办法我想你知道的。】

【./.cache/huggingface/hub/models–openai–clip-vit-large-patch14/snapshots/8d052a0f05efbaefbc9e8786ba291cfdf93e5bff/pytorch_model.bin】

python tool_add_control.py ./models/v1-5-pruned.ckpt ./models/control_sd15_ini.ckpt

Or if you are using SD2:

python tool_add_control_sd21.py ./models/v2-1_512-ema-pruned.ckpt ./models/control_sd21_ini.ckpt

This is the correct output from my machine:

(py38_diffusers) gpu16: /ssd/xiedong/workplace/ControlNet $ python tool_add_control.py ./models/v1-5-pruned.ckpt ./models/control_sd15_ini.ckpt

logging improved.

No module 'xformers'. Proceeding without it.

ControlLDM: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type 'vanilla' with 512 in_channels

Working with z of shape (1, 4, 32, 32) = 4096 dimensions.

making attention of type 'vanilla' with 512 in_channels

Loaded model config from [./models/cldm_v15.yaml]

These weights are newly added: logvar

These weights are newly added: control_model.zero_convs.0.0.weight

These weights are newly added: control_model.zero_convs.0.0.bias

These weights are newly added: control_model.zero_convs.1.0.weight

These weights are newly added: control_model.zero_convs.1.0.bias

These weights are newly added: control_model.zero_convs.2.0.weight

These weights are newly added: control_model.zero_convs.2.0.bias

These weights are newly added: control_model.zero_convs.3.0.weight

These weights are newly added: control_model.zero_convs.3.0.bias

These weights are newly added: control_model.zero_convs.4.0.weight

These weights are newly added: control_model.zero_convs.4.0.bias

These weights are newly added: control_model.zero_convs.5.0.weight

These weights are newly added: control_model.zero_convs.5.0.bias

These weights are newly added: control_model.zero_convs.6.0.weight

These weights are newly added: control_model.zero_convs.6.0.bias

These weights are newly added: control_model.zero_convs.7.0.weight

These weights are newly added: control_model.zero_convs.7.0.bias

These weights are newly added: control_model.zero_convs.8.0.weight

These weights are newly added: control_model.zero_convs.8.0.bias

These weights are newly added: control_model.zero_convs.9.0.weight

These weights are newly added: control_model.zero_convs.9.0.bias

These weights are newly added: control_model.zero_convs.10.0.weight

These weights are newly added: control_model.zero_convs.10.0.bias

These weights are newly added: control_model.zero_convs.11.0.weight

These weights are newly added: control_model.zero_convs.11.0.bias

These weights are newly added: control_model.input_hint_block.0.weight

These weights are newly added: control_model.input_hint_block.0.bias

These weights are newly added: control_model.input_hint_block.2.weight

These weights are newly added: control_model.input_hint_block.2.bias

These weights are newly added: control_model.input_hint_block.4.weight

These weights are newly added: control_model.input_hint_block.4.bias

These weights are newly added: control_model.input_hint_block.6.weight

These weights are newly added: control_model.input_hint_block.6.bias

These weights are newly added: control_model.input_hint_block.8.weight

These weights are newly added: control_model.input_hint_block.8.bias

These weights are newly added: control_model.input_hint_block.10.weight

These weights are newly added: control_model.input_hint_block.10.bias

These weights are newly added: control_model.input_hint_block.12.weight

These weights are newly added: control_model.input_hint_block.12.bias

These weights are newly added: control_model.input_hint_block.14.weight

These weights are newly added: control_model.input_hint_block.14.bias

These weights are newly added: control_model.middle_block_out.0.weight

These weights are newly added: control_model.middle_block_out.0.bias

Done.

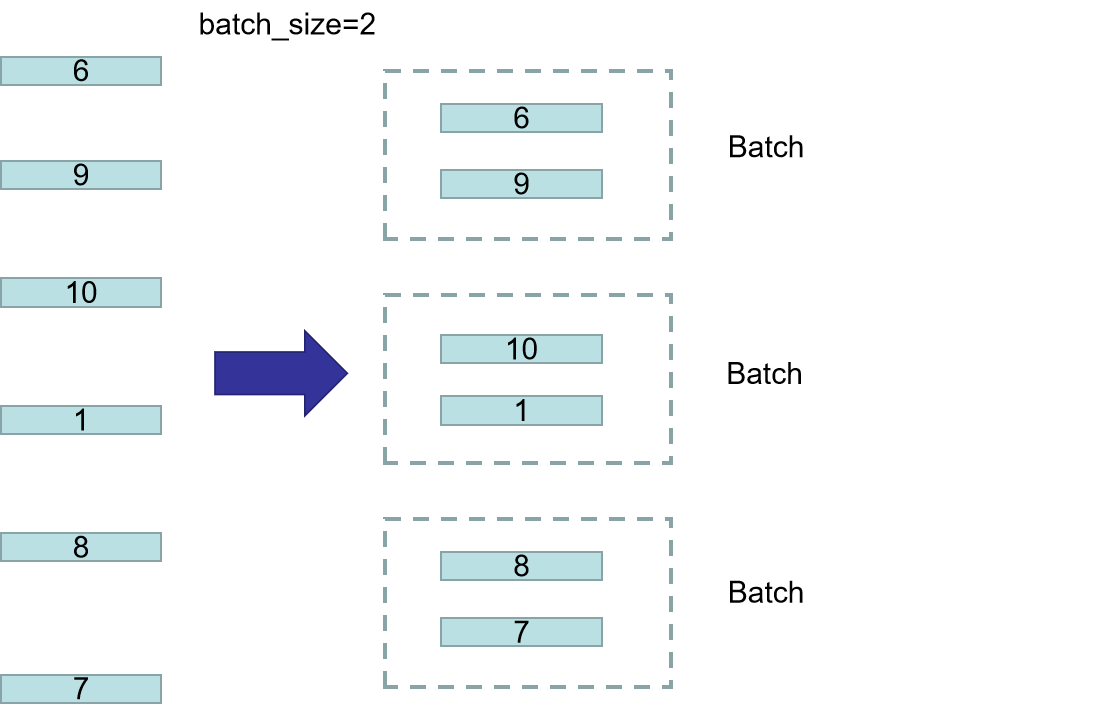

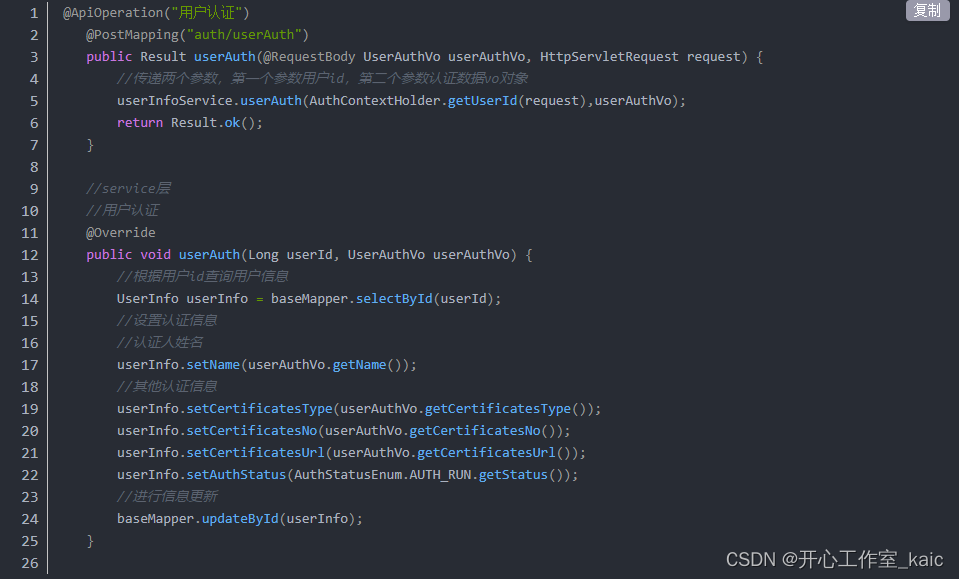

开始训练

代码很简单,超参几乎都在./models/cldm_v15.yaml。

import pytorch_lightning as pl

from torch.utils.data import DataLoader

from scribble_datasets_en import MyDataset

from cldm.logger import ImageLogger

from cldm.model import create_model, load_state_dict

# Configs

resume_path = './models/control_sd15_ini.ckpt'

batch_size = 4

logger_freq = 300

learning_rate = 1e-5

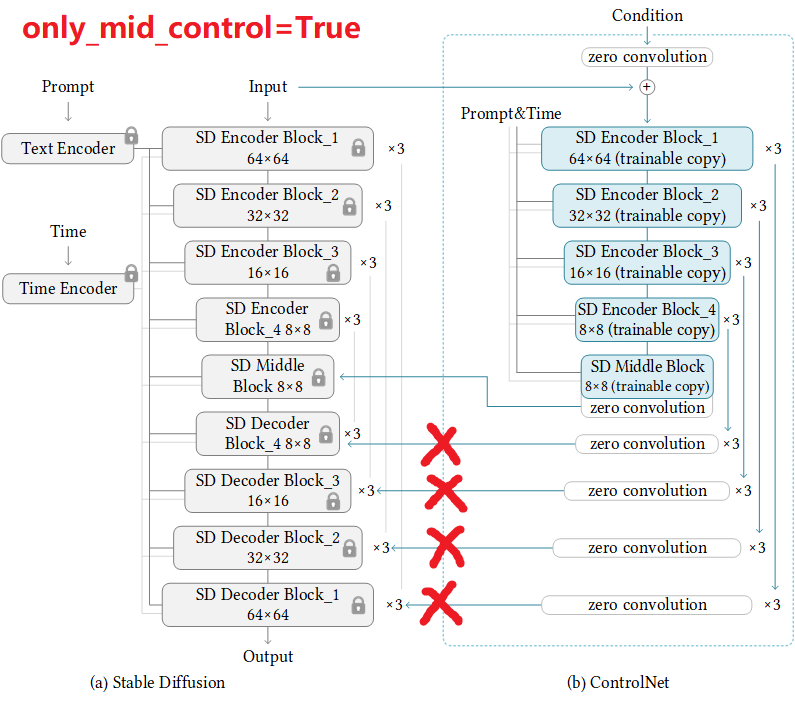

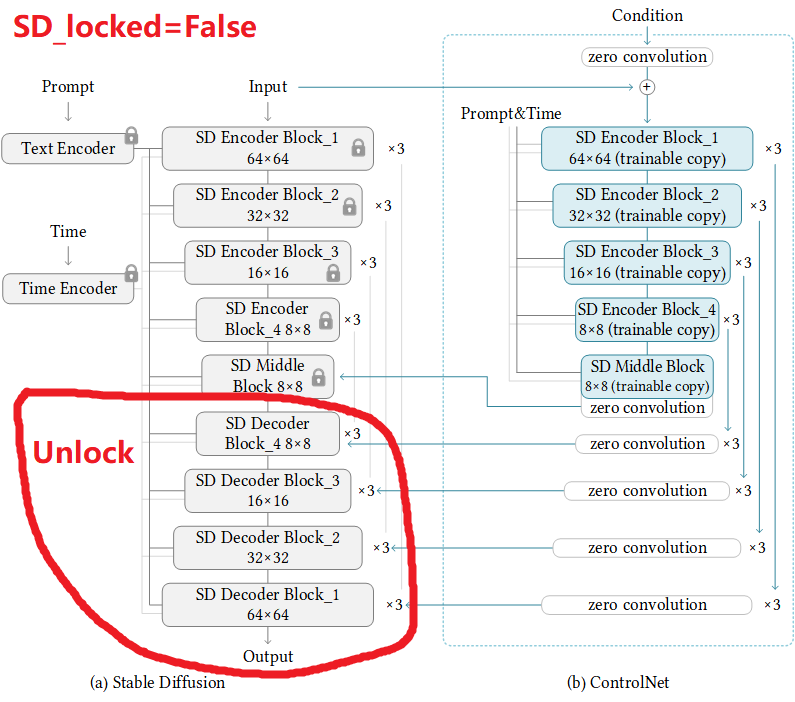

sd_locked = True

only_mid_control = False

# First use cpu to load models. Pytorch Lightning will automatically move it to GPUs.

model = create_model('./models/cldm_v15.yaml').cpu()

model.load_state_dict(load_state_dict(resume_path, location='cpu'))

model.learning_rate = learning_rate

model.sd_locked = sd_locked

model.only_mid_control = only_mid_control

# Misc

dataset = MyDataset()

dataloader = DataLoader(dataset, num_workers=1, batch_size=batch_size, shuffle=True)

logger = ImageLogger(batch_frequency=logger_freq)

trainer = pl.Trainer(gpus=1, precision=32, callbacks=[logger])

# Train!

trainer.fit(model, dataloader)

此外:

sd_locked = True

only_mid_control = False

训练开始

ControlNet$ python train_scribble_en.py

No module 'xformers'. Proceeding without it.

ControlLDM: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type 'vanilla' with 512 in_channels

Working with z of shape (1, 4, 32, 32) = 4096 dimensions.

making attention of type 'vanilla' with 512 in_channels

Some weights of the model checkpoint at openai/clip-vit-large-patch14 were not used when initializing CLIPTextModel: ['vision_model.encoder.layers.6.self_attn.vweight', 'vision_model.encoder.layers.21.layer_norm1.bias', 'vision_model.encoder.layers.17.self_attn.v_proj.weight', 'vision_model.encoder.layers.3.layer_norm1, 'vision_model.encoder.layers.7.mlp.fc2.weight', 'vision_model.encoder.layers.6.mlp.fc1.bias', 'vision_model.encoder.layers.16.mlp.fc1.weight', 'vision_model.engs.position_ids', 'vision_model.encoder.layers.16.self_attn.out_proj.weight', 'vision_model.encoder.layers.10.layer_norm1.bias', 'vision_model.encoder.layers.1fc2.weight', 'vision_model.encoder.layers.17.self_attn.q_proj.weight', 'vision_model.encoder.layers.9.mlp.fc2.weight', 'vision_model.encoder.layers.10.mlp.fc1.b'vision_model.encoder.layers.3.self_attn.out_proj.bias', 'vision_model.encoder.layers.5.mlp.fc2.weight', 'vision_model.encoder.layers.23.layer_norm1.weight', 'vmodel.encoder.layers.16.self_attn.k_proj.weight', 'vision_model.encoder.layers.16.self_attn.q_proj.weight', 'vision_model.encoder.layers.21.self_attn.out_proj.b'vision_model.encoder.layers.12.layer_norm2.weight', 'vision_model.encoder.layers.23.layer_norm2.weight', 'vision_model.encoder.layers.13.self_attn.k_proj.bias'ion_model.encoder.layers.3.layer_norm2.weight', 'vision_model.encoder.layers.11.self_attn.q_proj.bias', 'vision_model.encoder.layers.2.layer_norm1.weight', 'visdel.encoder.layers.15.layer_norm2.bias', 'vision_model.encoder.layers.9.mlp.fc1.bias', 'vision_model.encoder.layers.12.self_attn.v_proj.weight', 'vision_model.e.layers.22.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.q_proj.weight', 'vision_model.encoder.layers.13.self_attn.out_proj.weight', 'vision_mocoder.layers.9.layer_norm1.weight', 'vision_model.encoder.layers.12.layer_norm1.weight', 'vision_model.encoder.layers.14.self_attn.v_proj.weight', 'vision_modeler.layers.6.mlp.fc2.weight', 'vision_model.encoder.layers.15.self_attn.k_proj.weight', 'vision_model.encoder.layers.18.self_attn.q_proj.weight', 'vision_model.e.layers.4.self_attn.out_proj.bias', 'vision_model.encoder.layers.0.mlp.fc1.bias', 'vision_model.encoder.layers.14.mlp.fc2.bias', 'vision_model.encoder.layers.16attn.q_proj.bias', 'vision_model.encoder.layers.12.self_attn.v_proj.bias', 'vision_model.encoder.layers.13.layer_norm1.weight', 'vision_model.encoder.layers.8.m.weight', 'vision_model.encoder.layers.8.mlp.fc1.bias', 'vision_model.encoder.layers.0.mlp.fc1.weight', 'vision_model.encoder.layers.9.layer_norm2.weight', 'visdel.encoder.layers.9.self_attn.q_proj.weight', 'vision_model.encoder.layers.10.self_attn.out_proj.bias', 'vision_model.encoder.layers.2.layer_norm1.bias', 'visiel.encoder.layers.6.layer_norm2.weight', 'vision_model.encoder.layers.8.self_attn.v_proj.bias', 'vision_model.post_layernorm.bias', 'vision_model.encoder.layersf_attn.out_proj.weight', 'vision_model.encoder.layers.20.layer_norm1.weight', 'vision_model.pre_layrnorm.bias', 'vision_model.encoder.layers.5.self_attn.out_pro', 'vision_model.encoder.layers.16.layer_norm1.bias', 'vision_model.encoder.layers.21.layer_norm1.weight', 'vision_model.encoder.layers.18.self_attn.q_proj.biassion_model.encoder.layers.5.self_attn.k_proj.weight', 'vision_model.encoder.layers.6.self_attn.k_proj.bias', 'vision_model.encoder.layers.16.mlp.fc2.weight', 'vmodel.encoder.layers.9.layer_norm1.bias', 'vision_model.encoder.layers.7.self_attn.out_proj.weight', 'vision_model.encoder.layers.20.self_attn.out_proj.weight',on_model.encoder.layers.22.mlp.fc2.weight', 'vision_model.encoder.layers.3.self_attn.out_proj.weight', 'vision_model.encoder.layers.22.layer_norm2.bias', 'visiol.encoder.layers.18.self_attn.out_proj.bias', 'vision_model.encoder.layers.1.self_attn.out_proj.bias', 'vision_model.encoder.layers.19.self_attn.v_proj.weight',on_model.encoder.layers.22.self_attn.k_proj.weight', 'vision_model.encoder.layers.13.self_attn.q_proj.bias', 'vision_model.encoder.layers.23.self_attn.v_proj.we 'vision_model.encoder.layers.2.self_attn.v_proj.weight', 'vision_model.encoder.layers.9.self_attn.q_proj.bias', 'vision_model.encoder.layers.16.self_attn.v_proht', 'vision_model.encoder.layers.3.mlp.fc1.weight', 'vision_model.encoder.layers.20.self_attn.v_proj.bias', 'vision_model.encoder.layers.13.self_attn.v_proj.bivision_model.encoder.layers.8.self_attn.q_proj.weight', 'vision_model.encoder.layers.21.mlp.fc1.weight', 'vision_model.encoder.layers.7.layer_norm2.weight', 'viodel.encoder.layers.7.layer_norm2.bias', 'vision_model.encoder.layers.1.self_attn.k_proj.weight', 'vision_model.encoder.layers.22.self_attn.q_proj.weight', 'visdel.encoder.layers.22.self_attn.v_proj.weight', 'vision_model.encoder.layers.8.layer_norm1.bias', 'vision_model.encoder.layers.1.self_attn.v_proj.weight', 'visiel.encoder.layers.8.self_attn.k_proj.bias', 'vision_model.encoder.layers.22.self_attn.v_proj.bias', 'vision_model.encoder.layers.1.layer_norm1.weight', 'vision_encoder.layers.3.mlp.fc1.bias', 'vision_model.encoder.layers.8.layer_norm2.weight', 'vision_model.encoder.layers.17.self_attn.out_proj.weight', 'vision_model.enlayers.7.mlp.fc2.bias', 'vision_model.encoder.layers.21.self_attn.v_proj.bias', 'vision_model.encoder.layers.11.self_attn.k_proj.weight', 'vision_model.encoder..20.self_attn.k_proj.bias', 'vision_model.encoder.layers.23.mlp.fc1.bias', 'vision_model.embeddings.class_embedding', 'vision_model.encoder.layers.15.self_attn..bias', 'vision_model.encoder.layers.15.layer_norm1.bias', 'vision_model.encoder.layers.16.mlp.fc2.bias', 'vision_model.encoder.layers.7.mlp.fc1.weight', 'visiol.encoder.layers.14.self_attn.k_proj.weight', 'vision_model.encoder.layers.3.layer_norm2.bias', 'vision_model.encoder.layers.4.self_attn.q_proj.weight', 'vision.encoder.layers.11.mlp.fc1.bias', 'vision_model.encoder.layers.9.mlp.fc1.weight', 'vision_model.encoder.layers.19.mlp.fc2.bias', 'vision_model.encoder.layers.10attn.out_proj.weight', 'vision_model.encoder.layers.19.self_attn.out_proj.weight', 'vision_model.encoder.layers.21.mlp.fc2.bias', 'vision_model.encoder.layers.2fc1.weight', 'vision_model.encoder.layers.1.layer_norm1.bias', 'vision_model.encoder.layers.14.self_attn.k_proj.bias', 'vision_model.encoder.layers.6.self_attn.oj.weight', 'vision_model.encoder.layers.6.mlp.fc1.weight', 'vision_model.encoder.layers.21.layer_norm2.bias', 'vision_model.encoder.layers.0.self_attn.v_proj.w, 'vision_model.encoder.layers.11.self_attn.k_proj.bias', 'vision_model.encoder.layers.12.mlp.fc1.weight', 'vision_model.encoder.layers.15.mlp.fc1.bias', 'visuaection.weight', 'vision_model.encoder.layers.2.self_attn.q_proj.bias', 'vision_model.encoder.layers.16.self_attn.out_proj.bias', 'vision_model.encoder.layers.23attn.k_proj.bias', 'vision_model.encoder.layers.23.self_attn.out_proj.bias', 'vision_model.encoder.layers.21.self_attn.k_proj.bias', 'vision_model.encoder.layerp.fc2.bias', 'vision_model.encoder.layers.10.layer_norm1.weight', 'vision_model.encoder.layers.22.layer_norm1.bias', 'vision_model.encoder.layers.1.self_attn.ou.weight', 'vision_model.encoder.layers.5.self_attn.v_proj.bias', 'vision_model.encoder.layers.12.self_attn.q_proj.weight', 'vision_model.encoder.layers.6.self_aproj.weight', 'vision_model.encoder.layers.22.self_attn.q_proj.bias', 'vision_model.encoder.layers.18.mlp.fc2.weight', 'vision_model.encoder.layers.16.layer_norght', 'vision_model.encoder.layers.17.layer_norm1.bias', 'vision_model.encoder.layers.11.self_attn.out_proj.bias', 'vision_model.encoder.layers.21.self_attn.q_pas', 'vision_model.encoder.layers.21.self_attn.v_proj.weight', 'vision_model.encoder.layers.20.self_attn.k_proj.weight', 'vision_model.encoder.layers.18.self_atroj.bias', 'vision_model.encoder.layers.6.self_attn.v_proj.bias', 'vision_model.encoder.layers.10.self_attn.v_proj.bias', 'vision_model.encoder.layers.7.layer_nias', 'vision_model.encoder.layers.17.self_attn.k_proj.bias', 'vision_model.encoder.layers.0.self_attn.out_proj.weight', 'vision_model.encoder.layers.7.layer_noight', 'vision_model.encoder.layers.13.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.out_proj.bias', 'vision_model.encoder.layers.18.self_attn..weight', 'vision_model.encoder.layers.0.layer_norm2.bias', 'vision_model.encoder.layers.13.self_attn.k_proj.weight', 'vision_model.encoder.layers.0.self_attn.oj.bias', 'vision_model.encoder.layers.15.self_attn.q_proj.weight', 'vision_model.encoder.layers.14.layer_norm1.weight', 'vision_model.encoder.layers.8.self_attnj.bias', 'vision_model.encoder.layers.23.self_attn.k_proj.weight', 'vision_model.encoder.layers.13.mlp.fc1.weight', 'vision_model.encoder.layers.2.mlp.fc2.bias'ion_model.encoder.layers.19.self_attn.k_proj.weight', 'vision_model.encoder.layers.19.mlp.fc1.bias', 'vision_model.encoder.layers.4.self_attn.v_proj.bias', 'visdel.encoder.layers.10.self_attn.v_proj.weight', 'vision_model.encoder.layers.17.self_attn.k_proj.weight', 'vision_model.encoder.layers.0.self_attn.k_proj.bias',on_model.encoder.layers.23.self_attn.v_proj.bias', 'vision_model.encoder.layers.4.layer_norm1.bias', 'vision_model.encoder.layers.11.self_attn.v_proj.weight', '_model.encoder.layers.19.self_attn.v_proj.bias', 'vision_model.encoder.layers.22.mlp.fc2.bias', 'vision_model.encoder.layers.23.layer_norm1.bias', 'vision_modeler.layers.20.layer_norm2.weight', 'vision_model.encoder.layers.14.self_attn.out_proj.weight', 'vision_model.encoder.layers.19.layer_norm2.weight', 'vision_modeler.layers.6.self_attn.q_proj.bias', 'vision_model.encoder.layers.4.mlp.fc1.bias', 'vision_model.post_layernorm.weight', 'vision_model.encoder.layers.8.self_attnj.weight', 'vision_model.encoder.layers.22.self_attn.out_proj.weight', 'vision_model.encoder.layers.16.self_attn.v_proj.bias', 'vision_model.encoder.layers.4.mlweight', 'vision_model.embeddings.patch_embedding.weight', 'vision_model.encoder.layers.10.mlp.fc2.bias', 'vision_model.encoder.layers.18.mlp.fc2.bias', 'vision.encoder.layers.7.self_attn.q_proj.bias', 'vision_model.encoder.layers.2.mlp.fc1.weight', 'vision_model.encoder.layers.23.self_attn.q_proj.weight', 'vision_modeder.layers.23.self_attn.q_proj.bias', 'vision_model.encoder.layers.4.self_attn.v_proj.weight', 'vision_model.encoder.layers.10.mlp.fc1.weight', 'vision_model.enlayers.12.self_attn.q_proj.bias', 'vision_model.encoder.layers.8.self_attn.out_proj.weight', 'vision_model.encoder.layers.18.mlp.fc1.weight', 'vision_model.encoyers.8.self_attn.out_proj.bias', 'vision_model.encoder.layers.19.layer_norm2.bias', 'vision_model.encoder.layers.21.mlp.fc1.bias', 'vision_model.encoder.layers.fc2.weight', 'vision_model.encoder.layers.18.layer_norm1.bias', 'vision_model.encoder.layers.4.mlp.fc2.bias', 'vision_model.encoder.layers.4.mlp.fc2.weight', 'vmodel.encoder.layers.6.layer_norm1.weight', 'vision_model.encoder.layers.11.layer_norm1.weight', 'vision_model.encoder.layers.2.layer_norm2.bias', 'vision_modeler.layers.14.layer_norm2.bias', 'vision_model.encoder.layers.15.mlp.fc2.weight', 'vision_model.encoder.layers.17.self_attn.out_proj.bias', 'vision_model.encoders.21.self_attn.out_proj.weight', 'vision_model.encoder.layers.11.mlp.fc1.weight', 'vision_model.encoder.layers.10.self_attn.q_proj.weight', 'vision_model.encoders.0.self_attn.q_proj.bias', 'vision_model.encoder.layers.23.self_attn.out_proj.weight', 'vision_model.encoder.layers.5.self_attn.k_proj.bias', 'vision_model.enlayers.13.layer_norm2.bias', 'vision_model.encoder.layers.19.self_attn.k_proj.bias', 'vision_model.encoder.layers.19.mlp.fc1.weight', 'vision_model.encoder.layelayer_norm2.weight', 'vision_model.encoder.layers.8.mlp.fc1.weight', 'vision_model.encoder.layers.1.mlp.fc1.bias', 'vision_model.encoder.layers.17.mlp.fc2.weighision_model.encoder.layers.15.self_attn.k_proj.bias', 'vision_model.encoder.layers.13.layer_norm1.bias', 'vision_model.encoder.layers.6.mlp.fc2.bias', 'vision_mncoder.layers.12.self_attn.out_proj.bias', 'vision_model.embeddings.position_embedding.weight', 'vision_model.encoder.layers.20.mlp.fc1.bias', 'vision_model.encayers.14.mlp.fc2.weight', 'vision_model.encoder.layers.17.self_attn.v_proj.bias', 'vision_model.encoder.layers.12.mlp.fc1.bias', 'vision_model.encoder.layers.17_norm2.weight', 'vision_model.encoder.layers.1.mlp.fc1.weight', 'vision_model.encoder.layers.21.layer_norm2.weight', 'vision_model.encoder.layers.19.self_attn.qbias', 'vision_model.encoder.layers.19.layer_norm1.bias', 'vision_model.encoder.layers.18.layer_norm2.bias', 'vision_model.encoder.layers.7.self_attn.k_proj.wei'vision_model.encoder.layers.3.self_attn.v_proj.weight', 'vision_model.encoder.layers.12.layer_norm1.bias', 'vision_model.encoder.layers.23.layer_norm2.bias', '_model.encoder.layers.17.mlp.fc1.bias', 'vision_model.encoder.layers.20.mlp.fc1.weight', 'vision_model.encoder.layers.8.mlp.fc2.bias', 'vision_model.encoder.lay.self_attn.k_proj.weight', 'vision_model.encoder.layers.20.self_attn.v_proj.weight', 'vision_model.encoder.layers.5.layer_norm2.weight', 'vision_model.encoder.l8.layer_norm1.weight', 'vision_model.encoder.layers.3.mlp.fc2.weight', 'vision_model.encoder.layers.13.self_attn.v_proj.weight', 'vision_model.encoder.layers.4.norm2.bias', 'vision_model.encoder.layers.5.layer_norm2.bias', 'vision_model.encoder.layers.12.self_attn.k_proj.bias', 'vision_model.encoder.layers.5.mlp.fc1.bivision_model.encoder.layers.2.self_attn.out_proj.bias', 'vision_model.encoder.layers.5.layer_norm1.weight', 'vision_model.encoder.layers.13.self_attn.q_proj.wei'vision_model.encoder.layers.22.mlp.fc1.bias', 'vision_model.encoder.layers.20.self_attn.q_proj.weight', 'vision_model.encoder.layers.20.mlp.fc2.bias', 'vision_encoder.layers.17.layer_norm1.weight', 'vision_model.encoder.layers.19.self_attn.q_proj.weight', 'vision_model.encoder.layers.21.self_attn.q_proj.weight', 'visiel.encoder.layers.3.self_attn.q_proj.weight', 'vision_model.encoder.layers.1.mlp.fc2.weight', 'vision_model.encoder.layers.10.mlp.fc2.weight', 'vision_model.encayers.3.self_attn.v_proj.bias', 'vision_model.encoder.layers.10.self_attn.k_proj.bias', 'logit_scale', 'vision_model.encoder.layers.17.mlp.fc2.bias', 'vision_mocoder.layers.17.mlp.fc1.weight', 'vision_model.encoder.layers.15.mlp.fc1.weight', 'vision_model.encoder.layers.4.self_attn.k_proj.bias', 'vision_model.encoder.l4.layer_norm2.weight', 'vision_model.encoder.layers.7.self_attn.v_proj.weight', 'vision_model.encoder.layers.3.layer_norm1.weight', 'vision_model.encoder.layersp.fc2.weight', 'vision_model.encoder.layers.13.mlp.fc2.bias', 'vision_model.encoder.layers.22.mlp.fc1.weight', 'vision_model.encoder.layers.22.self_attn.out_pro', 'vision_model.encoder.layers.13.mlp.fc1.bias', 'vision_model.encoder.layers.12.mlp.fc2.bias', 'vision_model.encoder.layers.15.self_attn.v_proj.bias', 'vision.encoder.layers.7.self_attn.v_proj.bias', 'vision_model.encoder.layers.1.layer_norm2.bias', 'vision_model.encoder.layers.0.self_attn.k_proj.weight', 'vision_mododer.layers.1.self_attn.q_proj.bias', 'vision_model.encoder.layers.16.self_attn.k_proj.bias', 'vision_model.encoder.layers.17.self_attn.q_proj.bias', 'vision_mocoder.layers.3.self_attn.k_proj.bias', 'vision_model.encoder.layers.7.self_attn.k_proj.bias', 'vision_model.encoder.layers.5.mlp.fc1.weight', 'vision_model.encoyers.6.layer_norm2.bias', 'vision_model.encoder.layers.9.mlp.fc2.bias', 'vision_model.encoder.layers.3.self_attn.q_proj.bias', 'vision_model.encoder.layers.2.mlweight', 'vision_model.encoder.layers.9.layer_norm2.bias', 'vision_model.encoder.layers.9.self_attn.v_proj.weight', 'vision_model.encoder.layers.9.self_attn.outweight', 'vision_model.encoder.layers.1.self_attn.v_proj.bias', 'vision_model.encoder.layers.7.self_attn.out_proj.bias', 'vision_model.encoder.layers.21.mlp.fc2t', 'vision_model.encoder.layers.1.mlp.fc2.bias', 'vision_model.encoder.layers.7.self_attn.q_proj.weight', 'vision_model.encoder.layers.2.self_attn.k_proj.bias'ion_model.encoder.layers.20.self_attn.q_proj.bias', 'vision_model.encoder.layers.2.self_attn.k_proj.weight', 'vision_model.encoder.layers.6.self_attn.k_proj.wei'vision_model.encoder.layers.8.self_attn.k_proj.weight', 'text_projection.weight', 'vision_model.encoder.layers.10.self_attn.q_proj.bias', 'vision_model.encoders.12.mlp.fc2.weight', 'vision_model.encoder.layers.9.self_attn.v_proj.bias', 'vision_model.encoder.layers.2.mlp.fc1.bias', 'vision_model.encoder.layers.12.layer.bias', 'vision_model.encoder.layers.9.self_attn.k_proj.weight', 'vision_model.encoder.layers.18.self_attn.out_proj.weight', 'vision_model.encoder.layers.0.mlp.as', 'vision_model.encoder.layers.18.mlp.fc1.bias', 'vision_model.encoder.layers.15.layer_norm2.weight', 'vision_model.encoder.layers.22.layer_norm1.weight', 'vmodel.encoder.layers.11.self_attn.v_proj.bias', 'vision_model.encoder.layers.13.self_attn.out_proj.bias', 'vision_model.encoder.layers.0.layer_norm1.weight', 'vmodel.encoder.layers.20.layer_norm2.bias', 'vision_model.encoder.layers.10.self_attn.k_proj.weight', 'vision_model.encoder.layers.5.self_attn.q_proj.bias', 'visdel.encoder.layers.17.layer_norm2.bias', 'vision_model.encoder.layers.0.layer_norm2.weight', 'vision_model.pre_layrnorm.weight', 'vision_model.encoder.layers.22attn.k_proj.bias', 'vision_model.encoder.layers.5.self_attn.out_proj.weight', 'vision_model.encoder.layers.1.layer_norm2.weight', 'vision_model.encoder.layers.2_attn.out_proj.bias', 'vision_model.encoder.layers.15.self_attn.v_proj.weight', 'vision_model.encoder.layers.11.self_attn.out_proj.weight', 'vision_model.encoders.14.self_attn.v_proj.bias', 'vision_model.encoder.layers.0.layer_norm1.bias', 'vision_model.encoder.layers.4.layer_norm1.weight', 'vision_model.encoder.layersp.fc1.weight', 'vision_model.encoder.layers.11.self_attn.q_proj.weight', 'vision_model.encoder.layers.7.mlp.fc1.bias', 'vision_model.encoder.layers.11.layer_nors', 'vision_model.encoder.layers.0.self_attn.q_proj.weight', 'vision_model.encoder.layers.6.layer_norm1.bias', 'vision_model.encoder.layers.15.self_attn.out_proht', 'vision_model.encoder.layers.18.self_attn.v_proj.weight', 'vision_model.encoder.layers.10.layer_norm2.weight', 'vision_model.encoder.layers.0.self_attn.v_pas', 'vision_model.encoder.layers.16.mlp.fc1.bias', 'vision_model.encoder.layers.9.self_attn.k_proj.bias', 'vision_model.encoder.layers.14.layer_norm1.bias', 'vmodel.encoder.layers.14.mlp.fc1.bias', 'vision_model.encoder.layers.19.layer_norm1.weight', 'vision_model.encoder.layers.23.mlp.fc2.bias', 'vision_model.encoders.2.layer_norm2.weight', 'vision_model.encoder.layers.1.self_attn.k_proj.bias', 'vision_model.encoder.layers.2.self_attn.v_proj.bias', 'vision_model.encoder.lay.mlp.fc2.bias', 'vision_model.encoder.layers.8.layer_norm2.bias', 'vision_model.encoder.layers.11.mlp.fc2.weight', 'vision_model.encoder.layers.5.layer_norm1.bivision_model.encoder.layers.5.self_attn.q_proj.weight', 'vision_model.encoder.layers.11.mlp.fc2.bias', 'vision_model.encoder.layers.23.mlp.fc2.weight', 'vision_encoder.layers.20.layer_norm1.bias', 'vision_model.encoder.layers.16.layer_norm2.bias', 'vision_model.encoder.layers.5.mlp.fc2.bias', 'vision_model.encoder.layeself_attn.v_proj.bias', 'vision_model.encoder.layers.2.self_attn.out_proj.weight', 'vision_model.encoder.layers.16.layer_norm1.weight', 'vision_model.encoder.la8.layer_norm2.weight', 'vision_model.encoder.layers.19.mlp.fc2.weight', 'vision_model.encoder.layers.10.layer_norm2.bias', 'vision_model.encoder.layers.1.self_aproj.weight', 'vision_model.encoder.layers.15.layer_norm1.weight', 'vision_model.encoder.layers.19.self_attn.out_proj.bias', 'vision_model.encoder.layers.2.selfq_proj.weight', 'vision_model.encoder.layers.4.self_attn.k_proj.weight', 'vision_model.encoder.layers.14.self_attn.q_proj.bias', 'vision_model.encoder.layers.5.ttn.v_proj.weight', 'vision_model.encoder.layers.11.layer_norm1.bias', 'vision_model.encoder.layers.12.self_attn.k_proj.weight', 'vision_model.encoder.layers.15attn.out_proj.bias', 'vision_model.encoder.layers.6.self_attn.out_proj.bias', 'vision_model.encoder.layers.9.self_attn.out_proj.bias', 'vision_model.encoder.lay.self_attn.out_proj.weight', 'vision_model.encoder.layers.18.layer_norm1.weight', 'vision_model.encoder.layers.3.self_attn.k_proj.weight', 'vision_model.encoders.4.self_attn.q_proj.bias', 'vision_model.encoder.layers.11.layer_norm2.weight']

- This IS expected if you are initializing CLIPTextModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing ForSequenceClassification model from a BertForPreTraining model).

- This IS NOT expected if you are initializing CLIPTextModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequessification model from a BertForSequenceClassification model).

Loaded model config from [./models/cldm_v15.yaml]

Loaded state_dict from [./models/control_sd15_ini.ckpt]

GPU available: True, used: True

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

/ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/trainer/configuration_validator.py:118: UserWarning: You defined a `tion_step` but have no `val_dataloader`. Skipping val loop.

rank_zero_warn("You defined a `validation_step` but have no `val_dataloader`. Skipping val loop.")

/ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/trainer/configuration_validator.py:280: LightningDeprecationWarning:`LightningModule.on_train_batch_start` hook signature has changed in v1.5. The `dataloader_idx` argument will be removed in v1.7.

rank_zero_deprecation(

/ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/trainer/configuration_validator.py:287: LightningDeprecationWarning:`Callback.on_train_batch_end` hook signature has changed in v1.5. The `dataloader_idx` argument will be removed in v1.7.

rank_zero_deprecation(

LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [1]

| Name | Type | Params

---------------------------------------------------------

0 | model | DiffusionWrapper | 859 M

1 | first_stage_model | AutoencoderKL | 83.7 M

2 | cond_stage_model | FrozenCLIPEmbedder | 123 M

3 | control_model | ControlNet | 361 M

---------------------------------------------------------

1.2 B Trainable params

206 M Non-trainable params

1.4 B Total params

5,710.058 Total estimated model params size (MB)

/ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/trainer/data_loading.py:110: UserWarning: The dataloader, train_data, does not have many workers which may be a bottleneck. Consider increasing the value of the `num_workers` argument` (try 56 which is the number of cpus on thisne) in the `DataLoader` init to improve performance.

rank_zero_warn(

Epoch 0: 0%| | 0/1056 [00:00<?, /ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/utilities/data.py:56: UserWarning: Trying to infer the `batch_size` n ambiguous collection. The batch size we found is 4. To avoid any miscalculations, use `self.log(..., batch_size=batch_size)`.

warning_cache.warn(

Data shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████|

Epoch 0: 21%|▏| 224/1056 [04:53<18:11, 1.31s/it, loss=0.0611, v_num=2, train/loss_simple_step=0.0623, train/loss_vlb_step=0.000236, train/loss_step=0.0623, glEpoch 0: 28%|▎| 300/1056 [06:23<16:07, 1.28s/it, loss=0.0611, v_num=2, train/loss_simple_step=0.020, train/loss_vlb_step=7.54e-5, train/loss_step=0.020, globaData shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:28<00:00

Epoch 0: 57%|██████████▊ | 600/1056 [12:40<09:38, 1.27s/it, loss=0.0745, v_num=2, train/loss_simple_step=0.0428, train/loss_vlb_step=0.000152, train/loData shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████

Epoch 0: 58%|███▍ | 609/1056 [13:20<09:47, 1.31s/it, loss=0.0665, v_num=2, train/loss_simple_step=0.0532, train/loss_vlb_step=0.000262, train/loss_step=0.053Epoch 0: 67%|▋| 712/1056 [15:16<07:22, 1.29s/it, loss=0.0603, v_num=2, train/loss_simple_step=0.0232, train/loss_vlb_step=8.41e-5, train/loss_step=0.0232, gloEpoch 0: 85%|▊| 900/1056 [18:48<03:15, 1.25s/it, loss=0.0569, v_num=2, train/loss_simple_step=0.00959, train/loss_vlb_step=3.75e-5, train/loss_step=0.00959, gData shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:28<00:00

Epoch 0: 90%|▉| 953/1056 [20:17<02:11, 1.28s/it, loss=0.067, v_num=2, train/loss_simple_step=0.0193, train/loss_vlb_step=7.23e-5, train/loss_step=0.0193, globEpoch 0: 100%|▉| 1055/1056 [22:11<00:01, 1.26s/it, loss=0.0567, v_num=2, train/loss_simple_step=0.0556, train/loss_vlb_step=0.000276, train/loss_step=0.0556, g/ssd/xiedong/miniconda3/envs/py38_diffusers_1/lib/python3.8/site-packages/pytorch_lightning/utilities/data.py:56: UserWarning: Trying to infer the `batch_size` from an ambiguous collection. The batch size we found is 1. To avoid any miscalculations, use `self.log(..., batch_size=batch_size)`.

warning_cache.warn(

Epoch 1: 0%| | 0/1056 [00:00<?, ?it/s, loss=0.0593, v_num=2, train/loss_simple_step=0.126, train/loss_vlb_step=0.000617, train/loss_step=0.126, global_step=10Data shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:27<00:00, 1.80it/s]

Epoch 1: 28%|▎| 300/1056 [06:06<15:23, 1.22s/it, loss=0.0465, v_num=2, train/loss_simple_step=0.0711, train/loss_vlb_step=0.000249, train/loss_step=0.0711, glData shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:28<00:00, 1.78it/s]

Epoch 1: 57%|▌| 600/1056 [12:12<09:16, 1.22s/it, loss=0.0632, v_num=2, train/loss_simple_step=0.0698, train/loss_vlb_step=0.000314, train/loss_step=0.0698, glData shape for DDIM sampling is (4, 4, 64, 64), eta 0.0

Running DDIM Sampling with 50 timesteps

DDIM Sampler: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:28<00:00, 1.78it/s]

Epoch 1: 61%|▌| 649/1056 [13:37<08:32, 1.26s/it, loss=0.0602, v_num=2, train/loss_simple_step=0.0739, train/loss_vlb_step=0.00045

训练文件被保存在 lightning_logs 目录。