References for this post:

MobileNet网络_-断言-的博客-CSDN博客_mobile-ne

Conv2d中的groups参数(分组卷积)怎么理解? 【分组卷积可以减少参数量、且不容易过拟合(类似正则化)】_马鹏森的博客-CSDN博客_conv groups

Pytorch MobileNetV1 学习_DevinDong123的博客-CSDN博客

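1 Why use MobileNet

Conventional convolutional neural networks need so much memory and computation that they cannot practically run on mobile or embedded devices.

MobileNet is a lightweight CNN. Compared with conventional CNNs, it gives up a small amount of accuracy in exchange for far fewer parameters and far less computation.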

2 Standard convolution vs. DW + PW

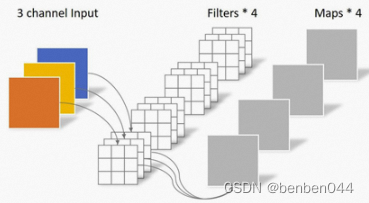

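(1) Standard convolution

A standard convolution extracts features and combines them across channels in a single step. Its properties:

- kernel channels = input feature map channels

- output feature map channels = number of kernels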

(2) DW + PW convolution

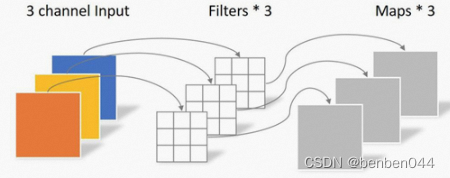

DW (depthwise) convolution has these properties:

- kernel channels = 1

- input feature map channels = number of kernels = output feature map channels

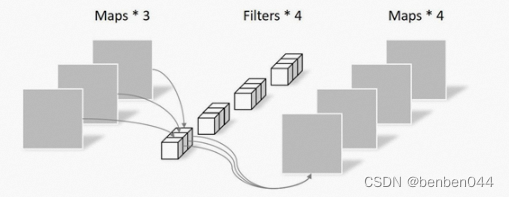

PW (pointwise) convolution has these properties:

- kernel channels = input feature map channels

- kernel size = 1

- number of kernels = output feature map channels
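The three sets of properties above can be checked directly against the weight tensors PyTorch allocates. This is a minimal sketch; the channel counts (16 in, 32 out) are example values chosen for illustration.

```python
import torch.nn as nn

in_ch, out_ch = 16, 32  # example sizes, chosen for illustration

standard = nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1)
depthwise = nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=in_ch)
pointwise = nn.Conv2d(in_ch, out_ch, kernel_size=1)

# Conv2d weights are laid out as (num_kernels, kernel_channels, kH, kW).
shapes = {
    "standard": tuple(standard.weight.shape),    # (32, 16, 3, 3)
    "depthwise": tuple(depthwise.weight.shape),  # (16, 1, 3, 3)
    "pointwise": tuple(pointwise.weight.shape),  # (32, 16, 1, 1)
}
for name, shape in shapes.items():
    print(name, shape)
```

The second dimension is the kernel's channel count: 16 for the standard and pointwise layers, 1 for the depthwise layer.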

(3) Parameter count comparison

Take a convolutional layer with a 3*3 kernel, 16 input channels, and 32 output channels.

A standard convolution has (3*3*16+1)*32=4640 parameters.

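Parameter count for the depthwise separable convolution:

First, 16 kernels of size 3*3 (each 3*3*1) are applied to the 16 input channels, one kernel per channel, producing 16 feature maps. These maps have not been combined yet, so 32 kernels of size 1*1 (each 1*1*16) then sweep over the 16 feature maps to fuse them. The parameter count is (3*3*1+1)*16+(1*1*16+1)*32=704.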
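The same arithmetic can be reproduced by asking PyTorch how many parameters each layer holds. A small sketch, where `count_params` is a helper introduced here for illustration:

```python
import torch.nn as nn


def count_params(module: nn.Module) -> int:
    """Total number of weights and biases in a module."""
    return sum(p.numel() for p in module.parameters())


standard = nn.Conv2d(16, 32, kernel_size=3)               # (3*3*16 + 1) * 32
depthwise = nn.Conv2d(16, 16, kernel_size=3, groups=16)   # (3*3*1 + 1) * 16
pointwise = nn.Conv2d(16, 32, kernel_size=1)              # (1*1*16 + 1) * 32

standard_params = count_params(standard)
separable_params = count_params(depthwise) + count_params(pointwise)
print(standard_params, separable_params)  # 4640 704
```

The depthwise layer contributes 160 parameters and the pointwise layer 544, so the separable pair needs roughly 15% of what the standard layer needs.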

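The depthwise step uses a single set of in_channels kernels, one per channel, and only extracts features (it does not combine them across channels). The pointwise step uses output_channels kernels of shape in_channels*1*1 and only combines features.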
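The saving can also be written in general form. As a sketch, ignore biases and write $K$ for the kernel size, $M$ for the number of input channels, and $N$ for the number of output channels (these symbols are introduced here and are not from the original post):

$$
\frac{\underbrace{K^2 M}_{\text{depthwise}} + \underbrace{M N}_{\text{pointwise}}}{\underbrace{K^2 M N}_{\text{standard}}} = \frac{1}{N} + \frac{1}{K^2}
$$

For the example layer with $K=3$, $M=16$, $N=32$, this gives $\frac{144 + 512}{4608} = \frac{1}{32} + \frac{1}{9} \approx 0.142$, about one seventh of the standard layer's weights.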

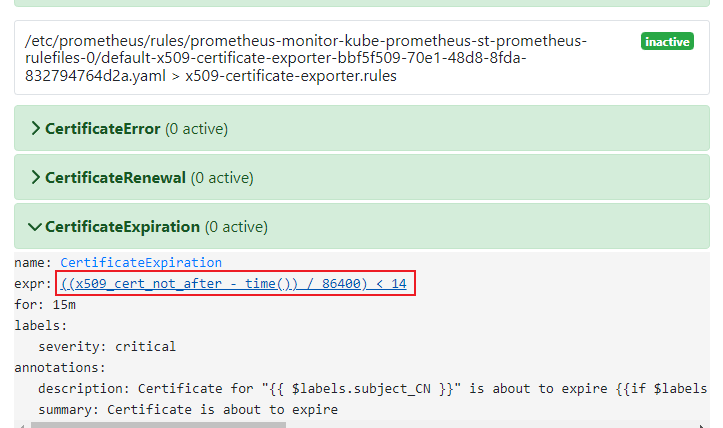

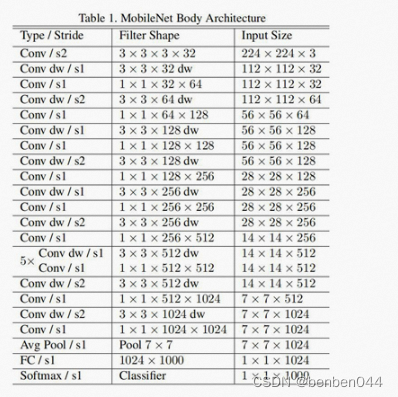

3 MobileNet model structure

4 Building the DW convolution

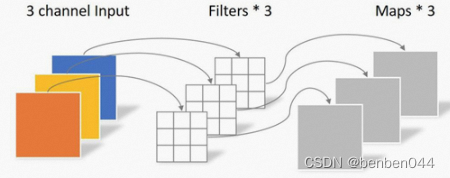

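DW convolution changes the kernel's input channel count. Normally the kernel's input channel count equals the channel count of the input feature map, but in DW convolution it is 1.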

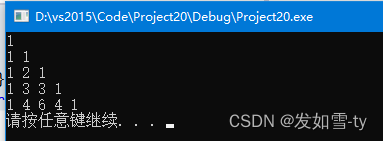

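This is where the groups parameter of nn.Conv2d comes in. It splits the input feature map into groups and convolves each group separately, so each group's kernels have input channels = input feature map channels / groups.

If groups equals the number of input channels, every group contains exactly one channel, which is precisely depthwise convolution.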
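A short sketch of how groups behaves, under assumed example sizes (16 channels, an 8x8 input). It first shows the kernel channel count shrinking as groups grows, then checks that a groups=in_channels convolution matches convolving each channel on its own with its own kernel.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

in_ch = 16  # example channel count, chosen for illustration

# Kernel input channels = in_ch / groups.
kernel_channels = {}
for groups in (1, 2, 4, 16):
    conv = nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=groups, bias=False)
    kernel_channels[groups] = conv.weight.shape[1]
print(kernel_channels)  # {1: 16, 2: 8, 4: 4, 16: 1}

# With groups == in_ch, output channel i depends only on input channel i.
torch.manual_seed(0)
x = torch.rand(1, in_ch, 8, 8)
dw = nn.Conv2d(in_ch, in_ch, kernel_size=3, padding=1, groups=in_ch, bias=False)
with torch.no_grad():
    grouped = dw(x)
    # Convolve each channel separately with its own single-channel kernel.
    per_channel = torch.cat(
        [F.conv2d(x[:, i:i + 1], dw.weight[i:i + 1], padding=1) for i in range(in_ch)],
        dim=1,
    )
matches = torch.allclose(grouped, per_channel, atol=1e-5)
print(matches)
```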

5 MobileNet in PyTorch, with pruning

import torch
import torch.nn as nn
from nni.compression.pytorch.pruning import L1NormPruner
from nni.compression.pytorch.speedup import ModelSpeedup


class BasicConv2dBlock(nn.Module):
    """Standard conv -> BatchNorm -> ReLU; uses stride 2 when downsample is True."""

    def __init__(self, in_channels, out_channels, kernel_size, downsample=True, **kwargs):
        super(BasicConv2dBlock, self).__init__()
        stride = 2 if downsample else 1
        self.conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride=stride, **kwargs)
        self.bn = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x

class DepthSeperabelConv2dBlock(nn.Module):
    """Depthwise conv followed by a 1x1 pointwise conv, each with BatchNorm and ReLU."""

    def __init__(self, in_channels, out_channels, kernel_size, **kwargs):
        super(DepthSeperabelConv2dBlock, self).__init__()
        # Depthwise convolution: groups=in_channels gives one kernel per input channel
        self.depth_wise = nn.Sequential(
            nn.Conv2d(in_channels, in_channels, kernel_size, groups=in_channels, **kwargs),
            nn.BatchNorm2d(in_channels),
            nn.ReLU(inplace=True)
        )
        # Pointwise convolution: 1x1 kernels combine the channels
        self.point_wise = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 1),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True)
        )

    def forward(self, x):
        x = self.depth_wise(x)
        x = self.point_wise(x)
        return x

class MobileNet(nn.Module):

    def __init__(self, class_num=100):
        super(MobileNet, self).__init__()
        self.stem = nn.Sequential(
            BasicConv2dBlock(3, 32, kernel_size=3, padding=1, bias=False),
            DepthSeperabelConv2dBlock(32, 64, kernel_size=3, padding=1, bias=False)
        )
        self.conv1 = nn.Sequential(
            DepthSeperabelConv2dBlock(64, 128, kernel_size=3, stride=2, padding=1, bias=False),
            DepthSeperabelConv2dBlock(128, 128, kernel_size=3, padding=1, bias=False)
        )
        self.conv2 = nn.Sequential(
            DepthSeperabelConv2dBlock(128, 256, kernel_size=3, stride=2, padding=1, bias=False),
            DepthSeperabelConv2dBlock(256, 256, kernel_size=3, padding=1, bias=False)
        )
        self.conv3 = nn.Sequential(
            DepthSeperabelConv2dBlock(256, 512, kernel_size=3, stride=2, padding=1, bias=False),
            DepthSeperabelConv2dBlock(512, 512, kernel_size=3, padding=1, bias=False),
            DepthSeperabelConv2dBlock(512, 512, kernel_size=3, padding=1, bias=False),
            DepthSeperabelConv2dBlock(512, 512, kernel_size=3, padding=1, bias=False),
            DepthSeperabelConv2dBlock(512, 512, kernel_size=3, padding=1, bias=False),
            DepthSeperabelConv2dBlock(512, 512, kernel_size=3, padding=1, bias=False)
        )
        self.conv4 = nn.Sequential(
            DepthSeperabelConv2dBlock(512, 1024, kernel_size=3, stride=2, padding=1, bias=False),
            DepthSeperabelConv2dBlock(1024, 1024, kernel_size=3, padding=1, bias=False)
        )
        self.fc = nn.Linear(1024, class_num)
        self.avg = nn.AdaptiveAvgPool2d(1)

    def forward(self, x):
        x = self.stem(x)
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.avg(x)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x

def main():
    # Prune 50% of every Conv2d layer
    config_list = [{
        'sparsity_per_layer': 0.5,
        'op_types': ['Conv2d']
    }]

    model = MobileNet(10)
    print('-----------raw model------------')
    print(model)

    pruner = L1NormPruner(model, config_list)
    _, masks = pruner.compress()
    for name, mask in masks.items():
        # mask.sum() / mask.numel() is the fraction of weights the mask keeps
        print(name, ' sparsity: ', '{:.2f}'.format(mask['weight'].sum() / mask['weight'].numel()))

    # Remove the pruner's wrappers before running speedup
    pruner._unwrap_model()
    ModelSpeedup(model, torch.rand(1, 3, 512, 512), masks).speedup_model()

    print('------------after speedup------------')
    print(model)


if __name__ == '__main__':
    main()

The code above not only implements MobileNet but also prunes the model with NNI.

NNI pruning needs more than 15 GB of memory, so it will not run on a machine with less than roughly 20 GB of RAM.
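To make the pruning step less of a black box, here is a sketch of the general idea behind L1-norm filter pruning in plain PyTorch. It is not NNI's implementation. The helper `l1_filter_mask` is hypothetical, and the assumption is that whole output filters with the smallest L1 norm are masked out, which is consistent with the "dim0 sparsity: 0.500000" line in the log.

```python
import torch
import torch.nn as nn


def l1_filter_mask(conv: nn.Conv2d, sparsity: float) -> torch.Tensor:
    """Return a 0/1 mask shaped like conv.weight that zeroes the
    output filters with the smallest L1 norm (hypothetical helper)."""
    weight = conv.weight.detach()
    # One L1 norm per output filter: sum |w| over (kernel_channels, kH, kW).
    norms = weight.abs().sum(dim=(1, 2, 3))
    num_pruned = int(weight.shape[0] * sparsity)
    mask = torch.ones_like(weight)
    if num_pruned > 0:
        pruned_idx = torch.argsort(norms)[:num_pruned]
        mask[pruned_idx] = 0.0
    return mask


torch.manual_seed(0)
conv = nn.Conv2d(32, 64, kernel_size=1)  # same shape as the first pointwise layer
mask = l1_filter_mask(conv, sparsity=0.5)
kept_ratio = (mask.sum() / mask.numel()).item()
print(f"kept ratio: {kept_ratio:.2f}")  # kept ratio: 0.50
```

Note that the script's `mask['weight'].sum() / mask['weight'].numel()` is the same kept ratio computed here. It equals the sparsity only because the target is 0.5.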

The full log from running the script:

-----------raw model------------

MobileNet(

(stem): Sequential(

(0): BasicConv2dBlock(

(conv): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

)

(1): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=32, bias=False)

(1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(32, 64, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

(conv1): Sequential(

(0): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=64, bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(64, 128, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(1): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=128, bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

(conv2): Sequential(

(0): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=128, bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(128, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(1): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=256, bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

(conv3): Sequential(

(0): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=256, bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(1): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(2): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(3): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(4): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(5): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

(conv4): Sequential(

(0): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=512, bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(1): DepthSeperabelConv2dBlock(

(depth_wise): Sequential(

(0): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=1024, bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(point_wise): Sequential(

(0): Conv2d(1024, 1024, kernel_size=(1, 1), stride=(1, 1))

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

)

(fc): Linear(in_features=1024, out_features=10, bias=True)

(avg): AdaptiveAvgPool2d(output_size=1)

)

stem.0.conv sparsity: 0.50

stem.1.depth_wise.0 sparsity: 0.50

stem.1.point_wise.0 sparsity: 0.50

conv1.0.depth_wise.0 sparsity: 0.50

conv1.0.point_wise.0 sparsity: 0.50

conv1.1.depth_wise.0 sparsity: 0.50

conv1.1.point_wise.0 sparsity: 0.50

conv2.0.depth_wise.0 sparsity: 0.50

conv2.0.point_wise.0 sparsity: 0.50

conv2.1.depth_wise.0 sparsity: 0.50

conv2.1.point_wise.0 sparsity: 0.50

conv3.0.depth_wise.0 sparsity: 0.50

conv3.0.point_wise.0 sparsity: 0.50

conv3.1.depth_wise.0 sparsity: 0.50

conv3.1.point_wise.0 sparsity: 0.50

conv3.2.depth_wise.0 sparsity: 0.50

conv3.2.point_wise.0 sparsity: 0.50

conv3.3.depth_wise.0 sparsity: 0.50

conv3.3.point_wise.0 sparsity: 0.50

conv3.4.depth_wise.0 sparsity: 0.50

conv3.4.point_wise.0 sparsity: 0.50

conv3.5.depth_wise.0 sparsity: 0.50

conv3.5.point_wise.0 sparsity: 0.50

conv4.0.depth_wise.0 sparsity: 0.50

conv4.0.point_wise.0 sparsity: 0.50

conv4.1.depth_wise.0 sparsity: 0.50

conv4.1.point_wise.0 sparsity: 0.50

[2022-12-06 18:37:49] INFO (nni.compression.pytorch.speedup.compressor/MainThread) start to speed up the model

[2022-12-06 18:37:53] INFO (FixMaskConflict/MainThread) {'stem.0.conv': 1, 'stem.1.depth_wise.0': 1, 'stem.1.point_wise.0': 1, 'conv1.0.depth_wise.0': 1, 'conv1.0.point_wise.0': 1, 'conv1.1.depth_wise.0': 1, 'conv1.1.point_wise.0': 1, 'conv2.0.depth_wise.0': 1, 'conv2.0.point_wise.0': 1, 'conv2.1.depth_wise.0': 1, 'conv2.1.point_wise.0': 1, 'conv3.0.depth_wise.0': 1, 'conv3.0.point_wise.0': 1, 'conv3.1.depth_wise.0': 1, 'conv3.1.point_wise.0': 1, 'conv3.2.depth_wise.0': 1, 'conv3.2.point_wise.0': 1, 'conv3.3.depth_wise.0': 1, 'conv3.3.point_wise.0': 1, 'conv3.4.depth_wise.0': 1, 'conv3.4.point_wise.0': 1, 'conv3.5.depth_wise.0': 1, 'conv3.5.point_wise.0': 1, 'conv4.0.depth_wise.0': 1, 'conv4.0.point_wise.0': 1, 'conv4.1.depth_wise.0': 1, 'conv4.1.point_wise.0': 1}

[2022-12-06 18:37:53] INFO (FixMaskConflict/MainThread) dim0 sparsity: 0.500000

[2022-12-06 18:37:53] INFO (FixMaskConflict/MainThread) dim1 sparsity: 0.000000

[2022-12-06 18:37:53] INFO (FixMaskConflict/MainThread) Dectected conv prune dim" 0

[2022-12-06 18:37:53] INFO (nni.compression.pytorch.speedup.compressor/MainThread) infer module masks...

[2022-12-06 18:37:53] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.0.conv

[2022-12-06 18:37:54] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.0.bn

[2022-12-06 18:37:55] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.0.relu

[2022-12-06 18:37:55] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.depth_wise.0

[2022-12-06 18:37:57] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.depth_wise.1

[2022-12-06 18:37:59] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.depth_wise.2

[2022-12-06 18:38:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.point_wise.0

[2022-12-06 18:38:02] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.point_wise.1

[2022-12-06 18:38:05] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for stem.1.point_wise.2

[2022-12-06 18:38:07] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.depth_wise.0

[2022-12-06 18:38:08] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.depth_wise.1

[2022-12-06 18:38:09] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.depth_wise.2

[2022-12-06 18:38:09] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.point_wise.0

[2022-12-06 18:38:11] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.point_wise.1

[2022-12-06 18:38:12] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.0.point_wise.2

[2022-12-06 18:38:13] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.depth_wise.0

[2022-12-06 18:38:14] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.depth_wise.1

[2022-12-06 18:38:16] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.depth_wise.2

[2022-12-06 18:38:17] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.point_wise.0

[2022-12-06 18:38:18] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.point_wise.1

[2022-12-06 18:38:20] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv1.1.point_wise.2

[2022-12-06 18:38:20] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.depth_wise.0

[2022-12-06 18:38:21] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.depth_wise.1

[2022-12-06 18:38:21] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.depth_wise.2

[2022-12-06 18:38:21] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.point_wise.0

[2022-12-06 18:38:22] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.point_wise.1

[2022-12-06 18:38:23] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.0.point_wise.2

[2022-12-06 18:38:23] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.depth_wise.0

[2022-12-06 18:38:24] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.depth_wise.1

[2022-12-06 18:38:25] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.depth_wise.2

[2022-12-06 18:38:25] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.point_wise.0

[2022-12-06 18:38:26] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.point_wise.1

[2022-12-06 18:38:26] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv2.1.point_wise.2

[2022-12-06 18:38:27] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.depth_wise.0

[2022-12-06 18:38:27] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.depth_wise.1

[2022-12-06 18:38:27] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.depth_wise.2

[2022-12-06 18:38:27] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.point_wise.0

[2022-12-06 18:38:27] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.point_wise.1

[2022-12-06 18:38:28] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.0.point_wise.2

[2022-12-06 18:38:28] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.depth_wise.0

[2022-12-06 18:38:28] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.depth_wise.1

[2022-12-06 18:38:29] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.depth_wise.2

[2022-12-06 18:38:29] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.point_wise.0

[2022-12-06 18:38:29] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.point_wise.1

[2022-12-06 18:38:30] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.1.point_wise.2

[2022-12-06 18:38:30] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.depth_wise.0

[2022-12-06 18:38:30] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.depth_wise.1

[2022-12-06 18:38:30] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.depth_wise.2

[2022-12-06 18:38:31] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.point_wise.0

[2022-12-06 18:38:31] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.point_wise.1

[2022-12-06 18:38:31] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.2.point_wise.2

[2022-12-06 18:38:31] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.depth_wise.0

[2022-12-06 18:38:32] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.depth_wise.1

[2022-12-06 18:38:32] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.depth_wise.2

[2022-12-06 18:38:32] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.point_wise.0

[2022-12-06 18:38:33] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.point_wise.1

[2022-12-06 18:38:33] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.3.point_wise.2

[2022-12-06 18:38:33] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.depth_wise.0

[2022-12-06 18:38:34] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.depth_wise.1

[2022-12-06 18:38:34] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.depth_wise.2

[2022-12-06 18:38:34] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.point_wise.0

[2022-12-06 18:38:34] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.point_wise.1

[2022-12-06 18:38:35] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.4.point_wise.2

[2022-12-06 18:38:35] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.depth_wise.0

[2022-12-06 18:38:35] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.depth_wise.1

[2022-12-06 18:38:36] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.depth_wise.2

[2022-12-06 18:38:36] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.point_wise.0

[2022-12-06 18:38:36] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.point_wise.1

[2022-12-06 18:38:36] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv3.5.point_wise.2

[2022-12-06 18:38:36] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.depth_wise.0

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.depth_wise.1

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.depth_wise.2

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.point_wise.0

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.point_wise.1

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.0.point_wise.2

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.depth_wise.0

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.depth_wise.1

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.depth_wise.2

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.point_wise.0

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.point_wise.1

[2022-12-06 18:38:37] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for conv4.1.point_wise.2

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for avg

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for .aten::size.83

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for .aten::Int.84

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for .aten::view.85

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.jit_translate/MainThread) View Module output size: [8, -1]

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update mask for fc

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the fc

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the .aten::view.85

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the .aten::Int.84

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the .aten::size.83

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the avg

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.point_wise.2

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.point_wise.1

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.point_wise.0

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.depth_wise.2

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.depth_wise.1

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.1.depth_wise.0

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.point_wise.2

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.point_wise.1

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.point_wise.0

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.depth_wise.2

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.depth_wise.1

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv4.0.depth_wise.0

[2022-12-06 18:38:38] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.point_wise.2

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.point_wise.1

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.point_wise.0

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.depth_wise.2

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.depth_wise.1

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.5.depth_wise.0

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.point_wise.2

[2022-12-06 18:38:39] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.point_wise.1

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.point_wise.0

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.depth_wise.2

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.depth_wise.1

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.4.depth_wise.0

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.point_wise.2

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.point_wise.1

[2022-12-06 18:38:40] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.point_wise.0

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.depth_wise.2

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.depth_wise.1

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.3.depth_wise.0

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.point_wise.2

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.point_wise.1

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.point_wise.0

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.depth_wise.2

[2022-12-06 18:38:41] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.depth_wise.1

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.2.depth_wise.0

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.point_wise.2

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.point_wise.1

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.point_wise.0

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.depth_wise.2

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.depth_wise.1

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.1.depth_wise.0

[2022-12-06 18:38:42] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.point_wise.2

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.point_wise.1

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.point_wise.0

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.depth_wise.2

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.depth_wise.1

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv3.0.depth_wise.0

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.point_wise.2

[2022-12-06 18:38:43] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.point_wise.1

[2022-12-06 18:38:44] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.point_wise.0

[2022-12-06 18:38:44] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.depth_wise.2

[2022-12-06 18:38:44] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.depth_wise.1

[2022-12-06 18:38:45] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.1.depth_wise.0

[2022-12-06 18:38:45] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.point_wise.2

[2022-12-06 18:38:45] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.point_wise.1

[2022-12-06 18:38:46] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.point_wise.0

[2022-12-06 18:38:46] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.depth_wise.2

[2022-12-06 18:38:46] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.depth_wise.1

[2022-12-06 18:38:46] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv2.0.depth_wise.0

[2022-12-06 18:38:47] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.point_wise.2

[2022-12-06 18:38:47] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.point_wise.1

[2022-12-06 18:38:48] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.point_wise.0

[2022-12-06 18:38:49] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.depth_wise.2

[2022-12-06 18:38:49] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.depth_wise.1

[2022-12-06 18:38:50] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.1.depth_wise.0

[2022-12-06 18:38:50] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.point_wise.2

[2022-12-06 18:38:51] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.point_wise.1

[2022-12-06 18:38:52] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.point_wise.0

[2022-12-06 18:38:52] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.depth_wise.2

[2022-12-06 18:38:53] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.depth_wise.1

[2022-12-06 18:38:53] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the conv1.0.depth_wise.0

[2022-12-06 18:38:54] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.point_wise.2

[2022-12-06 18:38:55] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.point_wise.1

[2022-12-06 18:38:56] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.point_wise.0

[2022-12-06 18:38:57] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.depth_wise.2

[2022-12-06 18:38:58] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.depth_wise.1

[2022-12-06 18:38:58] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.1.depth_wise.0

[2022-12-06 18:38:59] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.0.relu

[2022-12-06 18:38:59] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.0.bn

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Update the indirect sparsity for the stem.0.conv

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) resolve the mask conflict

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace compressed modules...

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.0.conv, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.0.bn, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 6

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.0.relu, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 6

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 14

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: stem.1.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 14

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 36

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.0.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 36

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 30

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv1.1.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 30

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 62

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.0.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 62

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 63

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv2.1.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 63

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 121

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.0.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 121

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 128

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.1.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:00] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 128

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 125

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.2.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 125

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 135

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.3.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 135

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 117

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.4.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 117

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 125

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv3.5.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 125

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 265

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.0.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.depth_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.depth_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 265

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.depth_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.point_wise.0, op_type: Conv2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.point_wise.1, op_type: BatchNorm2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace batchnorm2d with num_features: 512

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: conv4.1.point_wise.2, op_type: ReLU)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: avg, op_type: AdaptiveAvgPool2d)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Warning: cannot replace (name: .aten::size.83, op_type: aten::size) which is func type

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Warning: cannot replace (name: .aten::Int.84, op_type: aten::Int) which is func type

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) Warning: cannot replace (name: .aten::view.85, op_type: aten::view) which is func type

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) replace module (name: fc, op_type: Linear)

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compress_modules/MainThread) replace linear with new in_features: 512, out_features: 10

[2022-12-06 18:39:01] INFO (nni.compression.pytorch.speedup.compressor/MainThread) speedup done

------------after speedup------------

MobileNet(
  (stem): Sequential(
    (0): BasicConv2dBlock(
      (conv): Conv2d(3, 6, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(6, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
    )
    (1): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(6, 6, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=6, bias=False)
        (1): BatchNorm2d(6, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(6, 14, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(14, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
  )
  (conv1): Sequential(
    (0): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(14, 14, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=14, bias=False)
        (1): BatchNorm2d(14, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(14, 36, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(36, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (1): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(36, 36, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=36, bias=False)
        (1): BatchNorm2d(36, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(36, 30, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(30, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
  )
  (conv2): Sequential(
    (0): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(30, 30, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=30, bias=False)
        (1): BatchNorm2d(30, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(30, 62, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(62, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (1): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(62, 62, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=62, bias=False)
        (1): BatchNorm2d(62, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(62, 63, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(63, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
  )
  (conv3): Sequential(
    (0): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(63, 63, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=63, bias=False)
        (1): BatchNorm2d(63, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(63, 121, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(121, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (1): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(121, 121, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=121, bias=False)
        (1): BatchNorm2d(121, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(121, 128, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (2): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=128, bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(128, 125, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(125, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (3): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(125, 125, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=125, bias=False)
        (1): BatchNorm2d(125, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(125, 135, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(135, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (4): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(135, 135, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=135, bias=False)
        (1): BatchNorm2d(135, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(135, 117, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(117, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (5): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(117, 117, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=117, bias=False)
        (1): BatchNorm2d(117, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(117, 125, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(125, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
  )
  (conv4): Sequential(
    (0): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(125, 125, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), groups=125, bias=False)
        (1): BatchNorm2d(125, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(125, 265, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(265, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
    (1): DepthSeperabelConv2dBlock(
      (depth_wise): Sequential(
        (0): Conv2d(265, 265, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=265, bias=False)
        (1): BatchNorm2d(265, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
      (point_wise): Sequential(
        (0): Conv2d(265, 512, kernel_size=(1, 1), stride=(1, 1))
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU(inplace=True)
      )
    )
  )
  (fc): Linear(in_features=512, out_features=10, bias=True)
  (avg): AdaptiveAvgPool2d(output_size=1)
)
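The layer shapes in the printout above can be converted into parameter counts by hand. The following is a minimal pure-Python sketch, not part of PyTorch or NNI: the helper name `conv2d_params` is hypothetical, and it assumes the usual Conv2d weight layout of `out_channels x (in_channels / groups) x k x k` plus one bias per output channel when bias is enabled. It is applied to the three convolutions of the pruned stem, with the channel numbers copied from the printout.

```python
def conv2d_params(in_ch, out_ch, kernel_size, groups=1, bias=True):
    """Count learnable parameters of a 2-D convolution (hypothetical helper)."""
    if in_ch % groups or out_ch % groups:
        raise ValueError("in_ch and out_ch must both be divisible by groups")
    # Each filter only sees in_ch / groups input channels.
    weights = out_ch * (in_ch // groups) * kernel_size * kernel_size
    return weights + (out_ch if bias else 0)


# Shapes copied from the pruned stem in the printout above.
stem_conv = conv2d_params(3, 6, 3, bias=False)             # Conv2d(3, 6, 3x3, bias=False)
depth_wise = conv2d_params(6, 6, 3, groups=6, bias=False)  # groups == channels, so 1 input channel per filter
point_wise = conv2d_params(6, 14, 1)                       # 1x1 conv; bias is on because the repr shows no bias=False

# For comparison only: a regular 3x3 convolution mapping 6 -> 14 channels with bias.
regular = conv2d_params(6, 14, 3)

print("stem conv:", stem_conv)
print("depthwise + pointwise:", depth_wise + point_wise)
print("regular 3x3 conv, 6 -> 14:", regular)
```

Note that every depth-wise layer in the pruned model still has `groups` equal to its channel count, so after speedup each depth-wise filter continues to read exactly one input channel.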