Deploying a highly available Kubernetes 1.27 cluster with haproxy + keepalived

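- 2. System architecture

- 2.1 Basic architecture requirements

- 2.2 Architecture diagram

- 3. Environment preparation

- 3.1 Cloud server or virtual machine inventory

- 3.2 Upgrade the operating system kernel

- 3.3 Set the hostname

- 3.4 Edit the hosts file mappings (replace the IPs with those planned for each of your machines)

- 3.5 Disable the firewall

- 3.6 Disable the swap partition

- 3.7 Disable SELinux

- 3.8 Time synchronization

- 3.9 Add the bridge filtering and kernel forwarding configuration file

- 3.10 Install ipset and ipvsadm

- 3.11 Configure passwordless SSH login (run on master01)

- 4. Deploy the etcd cluster (run on the master nodes)

- 4.1 Install etcd

- 4.2 Generate the etcd configuration files (master01)

- 4.3 Start etcd

- 5. Install the load balancer: haproxy + keepalived (run on the master nodes)

- 5.1 How haproxy + keepalived high-availability load balancing works

- 5.2 Install keepalived and haproxy

- 5.3 Configure haproxy

- 5.3.1 Create the haproxy configuration file

- 5.4 Configure keepalived

- 5.4.1 Create the keepalived configuration file (master01)

- 5.4.2 Create the keepalived configuration file (master02)

- 5.4.3 Create the keepalived configuration file (master03)

- 5.4.4 Health check script

- 5.6 Start the keepalived + haproxy services and verify

- 6. Install Docker (run on all nodes)

- 6.1 Install

- 6.2 Configure Docker

- 6.3 Start Docker

- 7. Install cri-dockerd (run on all nodes)

- 7.1 Download and install cri-dockerd

- 7.2 Configure cri-dockerd

- 7.3 Restart cri-dockerd

- 8. Install the Kubernetes cluster

- 8.1 Configure a China-based yum mirror (all nodes)

- 8.2 Install kubeadm, kubectl, and kubelet (all nodes)

- 8.3 Configure kubelet (all nodes)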

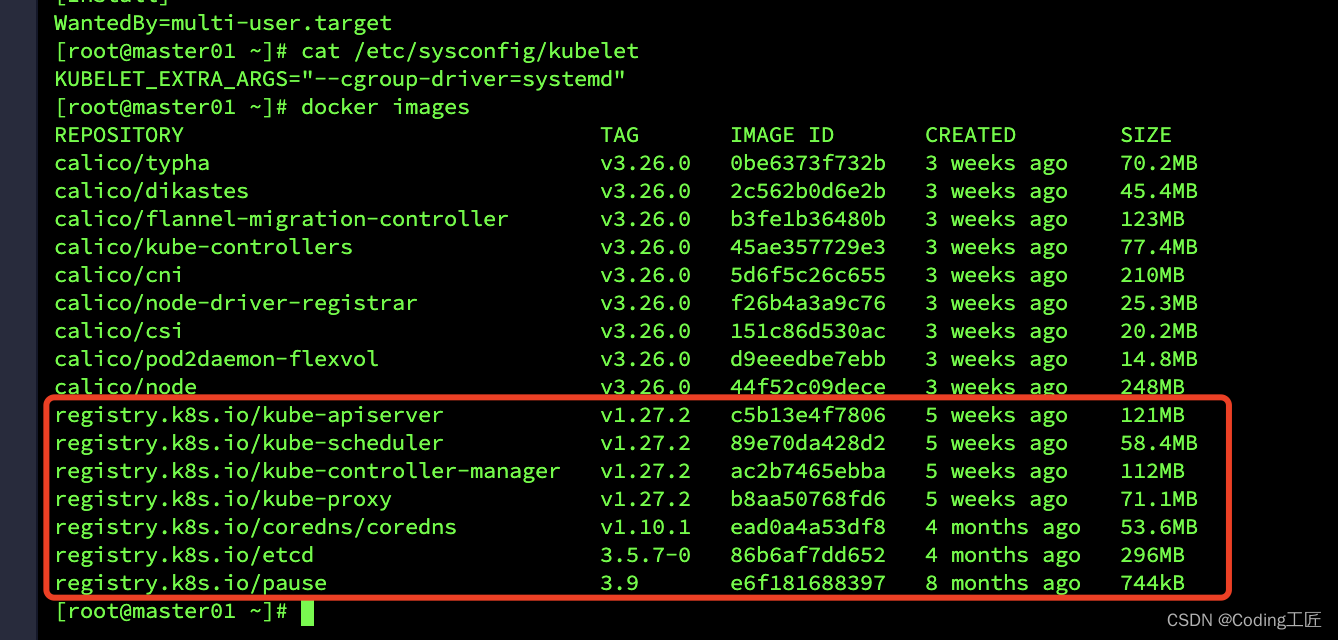

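- 8.4 Prepare the images (all nodes)

- Initialize the first Kubernetes node (master01)

- 8.5 Copy the certificates to the other master nodes (from master01 to master02 and master03)

- 8.6 Delete the redundant certificates before joining the cluster (master02, master03)

- 8.7 Join the cluster (master02, master03)

- 8.8 Check and verify the cluster status (master01)

- 8.9 Join the worker node to the cluster (worker01)

- 9. Install the Calico network plugin

- 9.1 Set up GitHub acceleration

- 9.2 Deploy Calico (version 3.26.0)

- 9.2.1 Prepare the Calico images in advance (required on all nodes)

- 9.2.2 Deploy the Calico network plugin (master01)

- 10. Create a service

- 10.1 Create an Nginx test service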

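2. System architecture

2.1 Basic architecture requirements

- Three machines that meet kubeadm's minimum requirements, for the master nodes

- Three machines that meet kubeadm's minimum requirements, for the worker nodes (this walkthrough provisions a single worker, worker01)

- Full network connectivity between all machines in the cluster (public or private network)

- sudo privileges on every machine

- SSH access from each machine to every node in the system

- kubeadm and kubelet installed on every machine; kubectl is optional.

2.2 Architecture diagram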

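3. Environment preparation

3.1 Cloud server or virtual machine inventory

Prepare four machines (physical, cloud, or virtual), each with at least 2 GB of memory, 2 CPU cores, and a 30 GB disk, running CentOS 7.x.

| Server | Hostname | IP | Minimum spec |
|---|---|---|---|
| Virtual machine | master01 | 10.211.55.13 | 2 cores / 2 GB |
| Virtual machine | master02 | 10.211.55.14 | 2 cores / 2 GB |
| Virtual machine | master03 | 10.211.55.15 | 2 cores / 2 GB |
| Virtual machine | worker01 | 10.211.55.16 | 2 cores / 2 GB |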

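3.2 Upgrade the operating system kernel

# Import the ELRepo GPG key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Install the ELRepo yum repository

yum -y install https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

# Install kernel-lt; kernel-ml is the mainline (latest stable) branch, kernel-lt is the long-term support branch

yum --enablerepo="elrepo-kernel" -y install kernel-lt.x86_64

# Set the grub2 default boot entry to 0 (the newly installed kernel)

grub2-set-default 0

# Regenerate the grub2 boot configuration

grub2-mkconfig -o /boot/grub2/grub.cfg

# Reboot

reboot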

3.3 Set the hostname

Set the hostname of each virtual machine and make sure every machine in the cluster has a different one.

# Set the hostnames according to the plan

# Run on master01

hostnamectl set-hostname master01

# Run on master02

hostnamectl set-hostname master02

# Run on master03

hostnamectl set-hostname master03

# Run on worker01

hostnamectl set-hostname worker01

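3.4 Edit the hosts file mappings (replace the IPs with those planned for each of your machines)

Add the following entries to the hosts file on every host so that the machines can reach each other by hostname. The vip entry is the virtual IP that keepalived manages in section 5.

# Run on all machines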

cat >> /etc/hosts << EOF

10.211.55.12 vip

10.211.55.13 master01

10.211.55.14 master02

10.211.55.15 master03

10.211.55.16 worker01

EOF
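Running the `cat >> /etc/hosts` block above a second time appends the same entries again. The following is a minimal sketch of an idempotent alternative, assuming a POSIX shell with awk; `add_host_entry` is a hypothetical helper name, not a standard tool.

```shell
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE only when NAME is not already mapped on a
# non-comment line. Hypothetical helper for illustration.
add_host_entry() (
    file=$1 ip=$2 name=$3
    # awk exits 0 when NAME already appears as a hostname field.
    if ! awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

For example, `add_host_entry /etc/hosts 10.211.55.12 vip` adds the virtual IP once and does nothing on later runs.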

3.5 Disable the firewall

For simplicity, the firewall is turned off entirely here.

# Stop and disable the firewall

systemctl stop firewalld && systemctl disable firewalld

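3.6 Disable the swap partition

Kubernetes aims to pack workloads onto nodes as tightly as possible, approaching 100% utilization, with every deployment pinned to CPU and memory limits. When the scheduler places a pod on a machine, that pod should not use swap, because swapping slows it down. Swap is therefore disabled mainly for performance reasons; by default the kubelet also refuses to start while swap is enabled.

# Turn off swap now and comment out the swap entry in /etc/fstab

swapoff -a && sed -ri 's/.*swap.*/#&/' /etc/fstab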
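The sed expression in this step prefixes every line that contains the word swap, so running it twice stacks extra `#` characters. As a sketch of a repeatable variant, the function below comments a line only when it is not already a comment and its filesystem type column is `swap`. The name `comment_swap_entries` is hypothetical, and the function takes the file path as an argument so it can be tried on a copy of /etc/fstab first.

```shell
# comment_swap_entries FILE
# Comment out active swap entries (3rd column == "swap") in an fstab-style
# file. Safe to run repeatedly. Hypothetical helper for illustration.
comment_swap_entries() (
    file=$1
    tmp=$(mktemp) || exit 1
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    exit "$rc"
)
```

A possible usage is `cp /etc/fstab /tmp/fstab.test && comment_swap_entries /tmp/fstab.test`, followed by a diff against the original before touching the real file.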

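3.7 Disable SELinux

SELinux is disabled so that containers can access the host file system.

# Disable SELinux permanently in the config file and switch to permissive mode for the current boot

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config && setenforce 0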

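3.8 Time synchronization

Synchronize the system clock on every node so that all hosts, and the pods running on them, agree on the time.

# Synchronize the time

yum install ntpdate -y && ntpdate time.windows.com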

3.9 Add the bridge filtering and kernel forwarding configuration file

# Create the k8s.conf file

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

EOF

# Load the br_netfilter module

modprobe br_netfilter

# Apply the settings

sysctl -p /etc/sysctl.d/k8s.conf

# Check that the module is loaded

lsmod | grep br_netfilter
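Before moving on, it can help to confirm that the file really sets the three networking keys. The sketch below parses a sysctl-style file and reports any of those keys that is not set to 1; `missing_sysctl_keys` is a hypothetical name, and the check only reads the file, not the live kernel values.

```shell
# missing_sysctl_keys FILE
# Print each required key that FILE does not set to 1; the exit status is 0
# only when nothing is missing. Hypothetical helper for illustration.
missing_sysctl_keys() (
    file=$1
    missing=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Strip blanks around "key = value" and keep the last value seen.
        value=$(awk -F= -v k="$key" '
            { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
            $1 == k { v = $2 }
            END { print v }' "$file")
        if [ "$value" != "1" ]; then
            echo "$key"
            missing=1
        fi
    done
    exit "$missing"
)
```

For instance, `missing_sysctl_keys /etc/sysctl.d/k8s.conf` prints nothing and returns 0 for the file created above.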

3.10 Install ipset and ipvsadm

# Install ipset and ipvsadm

yum -y install ipset ipvsadm

# Define how the IPVS modules are loaded by listing the modules that are needed

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Make the file executable, run it, and check that the modules are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

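3.11 Configure passwordless SSH login (run on master01)

ssh-keygen

# Press Enter at every prompt

Copy the key files to the other servers:

# Go to /root/.ssh

cd /root/.ssh

# List the files

ls

# Copy the public key to authorized_keys

cp id_rsa.pub authorized_keys

# Copy the directory to the other servers

for i in 14 15 16

do

scp -r /root/.ssh 10.211.55.$i:/root/

done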

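4. Deploy the etcd cluster (run on the master nodes)

4.1 Install etcd

# Install etcd

yum -y install etcd

4.2 Generate the etcd configuration files (master01)

# Create the file

vim etcd_install.sh

# Paste the following content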

etcd1=10.211.55.13

etcd2=10.211.55.14

etcd3=10.211.55.15

TOKEN=smartgo

ETCDHOSTS=($etcd1 $etcd2 $etcd3)

NAMES=("master01" "master02" "master03")

for i in "${!ETCDHOSTS[@]}"; do

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/$NAME.conf

# [member]

ETCD_NAME=$NAME

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://$HOST:2380"

ETCD_LISTEN_CLIENT_URLS="http://$HOST:2379,http://127.0.0.1:2379"

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$HOST:2380"

ETCD_INITIAL_CLUSTER="${NAMES[0]}=http://${ETCDHOSTS[0]}:2380,${NAMES[1]}=http://${ETCDHOSTS[1]}:2380,${NAMES[2]}=http://${ETCDHOSTS[2]}:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="$TOKEN"

ETCD_ADVERTISE_CLIENT_URLS="http://$HOST:2379"

EOF

done

ls /tmp/master*

scp /tmp/master02.conf $etcd2:/etc/etcd/etcd.conf

scp /tmp/master03.conf $etcd3:/etc/etcd/etcd.conf

cp /tmp/master01.conf /etc/etcd/etcd.conf

rm -f /tmp/master*.conf

# Run the script

sh etcd_install.sh
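The script hard-codes three members when it builds ETCD_INITIAL_CLUSTER. As a sketch of how that value is assembled, the function below builds the same comma-separated string from any number of `name=ip` pairs. `initial_cluster` is a hypothetical helper, and it assumes the plain-HTTP peer port 2380 used throughout this section.

```shell
# initial_cluster NAME=IP [NAME=IP ...]
# Print the ETCD_INITIAL_CLUSTER value for the given members.
# Hypothetical helper for illustration; assumes http peers on port 2380.
initial_cluster() (
    out=
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        out="${out:+$out,}$name=http://$ip:2380"
    done
    printf '%s\n' "$out"
)
```

Calling `initial_cluster master01=10.211.55.13 master02=10.211.55.14 master03=10.211.55.15` yields the value the script writes into each member's configuration file.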

4.3 Start etcd

# Enable and start etcd (run on all three master nodes)

systemctl enable --now etcd

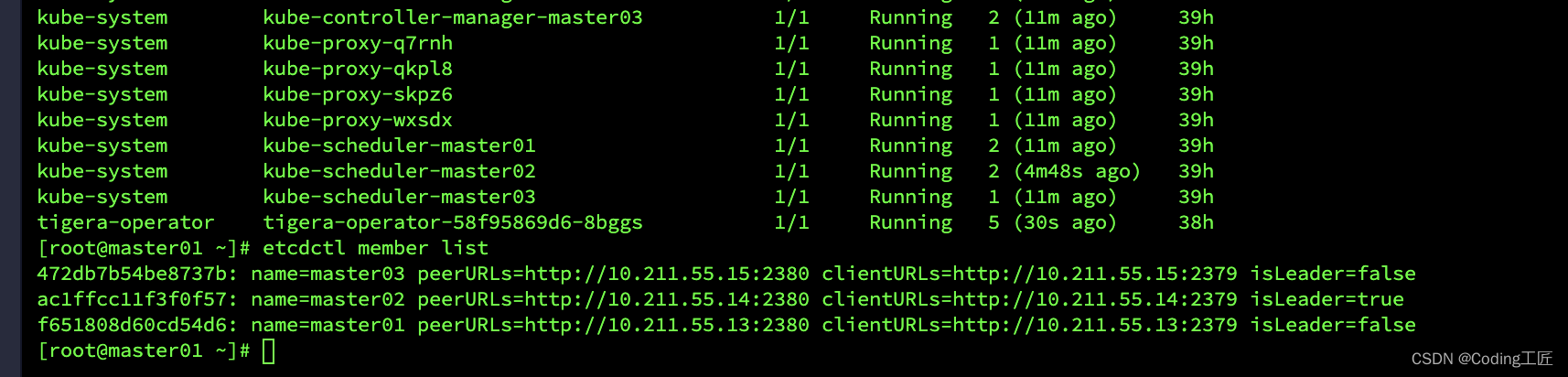

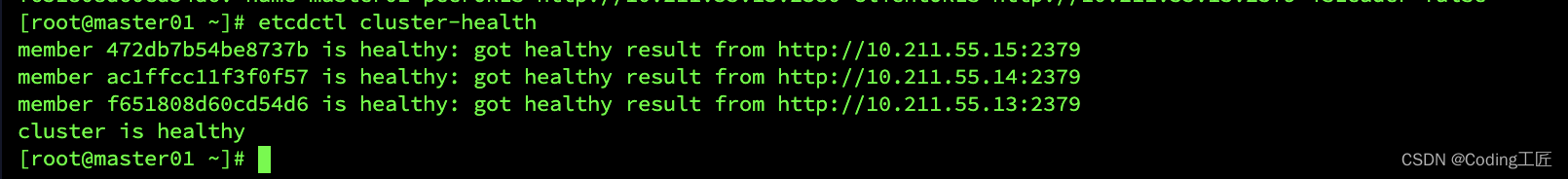

Check that etcd is healthy:

etcdctl member list

etcdctl cluster-health

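5. Install the load balancer: haproxy + keepalived (run on the master nodes)

5.1 How haproxy + keepalived high-availability load balancing works

Each HAProxy host also runs a Keepalived instance. The Keepalived instances compete for the same virtual IP (VIP), and every HAProxy tries to bind a port on that VIP. Only one Keepalived can hold the VIP at a time, and the HAProxy on that host is the current MASTER. Keepalived maintains a priority value for each instance, and the instance with the highest priority wins the VIP. Keepalived also checks the state of the local HAProxy periodically and adjusts the priority according to the result: a positive script weight raises it while the check passes, and a negative weight (as configured in 5.4) lowers it while the check fails.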
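The priority arithmetic can be sketched in a few lines. The function below is a hypothetical illustration of how a tracked script's weight changes an instance's effective priority; it models only the rule described above and is not keepalived code.

```shell
# effective_priority BASE WEIGHT CHECK_OK
# CHECK_OK is "yes" when the tracked script succeeded, anything else otherwise.
# Hypothetical model for illustration only.
effective_priority() (
    base=$1 weight=$2 ok=$3
    if [ "$weight" -lt 0 ] && [ "$ok" != "yes" ]; then
        echo $((base + weight))    # failing check lowers the priority
    elif [ "$weight" -gt 0 ] && [ "$ok" = "yes" ]; then
        echo $((base + weight))    # passing check raises the priority
    else
        echo "$base"
    fi
)
```

With the values used in 5.4 (priority 100 on master01, 99 on the backups, weight -5), a failing check on master01 gives `effective_priority 100 -5 no` = 95, which is below 99, so a backup takes over the VIP.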

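5.2 Install keepalived and haproxy

Install the high-availability load-balancing components keepalived and haproxy on the three master nodes.

# Install keepalived and haproxy

yum install haproxy keepalived -y

5.3 Configure haproxy

First back up the original haproxy configuration so it can be restored later, then create a new haproxy.cfg file in /etc/haproxy.

5.3.1 Create the haproxy configuration file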

cat > /etc/haproxy/haproxy.cfg << EOF

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# kubernetes apiserver frontend which proxys to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind *:16443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server master01 10.211.55.13:6443 check

server master02 10.211.55.14:6443 check

server master03 10.211.55.15:6443 check

#---------------------------------------------------------------------

# collection haproxy statistics message

#---------------------------------------------------------------------

listen stats

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /admin?stats

EOF

5.4 Configure keepalived

First back up the original keepalived configuration so it can be restored later, then create new keepalived.conf and check_apiserver.sh files in /etc/keepalived/.

5.4.1 Create the keepalived configuration file (master01)

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth0 # change to your network interface

mcast_src_ip 10.211.55.13 # change to this node's IP

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.211.55.12 # change to your virtual IP

}

track_script {

chk_apiserver

}

}

EOF

5.4.2 Create the keepalived configuration file (master02)

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to your network interface

mcast_src_ip 10.211.55.14 # change to this node's IP

virtual_router_id 51

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.211.55.12 # change to your virtual IP

}

track_script {

chk_apiserver

}

}

EOF

5.4.3 Create the keepalived configuration file (master03)

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0 # change to your network interface

mcast_src_ip 10.211.55.15 # change to this node's IP

virtual_router_id 51

priority 99

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.211.55.12 # change to your virtual IP

}

track_script {

chk_apiserver

}

}

EOF

5.4.4 Health check script

cat > /etc/keepalived/check_apiserver.sh <<"EOF"

#!/bin/bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

# Make the script executable

chmod +x /etc/keepalived/check_apiserver.sh
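The health check above is a retry loop: it probes for an haproxy process up to three times and stops keepalived only if every attempt fails. The same pattern can be sketched as a small reusable function. `retry` is a hypothetical helper, not something keepalived provides, and the delay is passed as an argument so the loop can be tried without waiting.

```shell
# retry ATTEMPTS DELAY COMMAND [ARGS...]
# Run COMMAND until it succeeds or ATTEMPTS is reached, sleeping DELAY
# seconds between tries. Returns 0 on the first success, 1 otherwise.
# Hypothetical helper for illustration.
retry() (
    attempts=$1 delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        "$@" && exit 0
        [ "$n" -lt "$attempts" ] && sleep "$delay"
        n=$((n + 1))
    done
    exit 1
)
```

A check script built on it could reduce to `retry 3 1 pgrep haproxy >/dev/null || /usr/bin/systemctl stop keepalived`, which keeps the original behavior of three probes one second apart.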

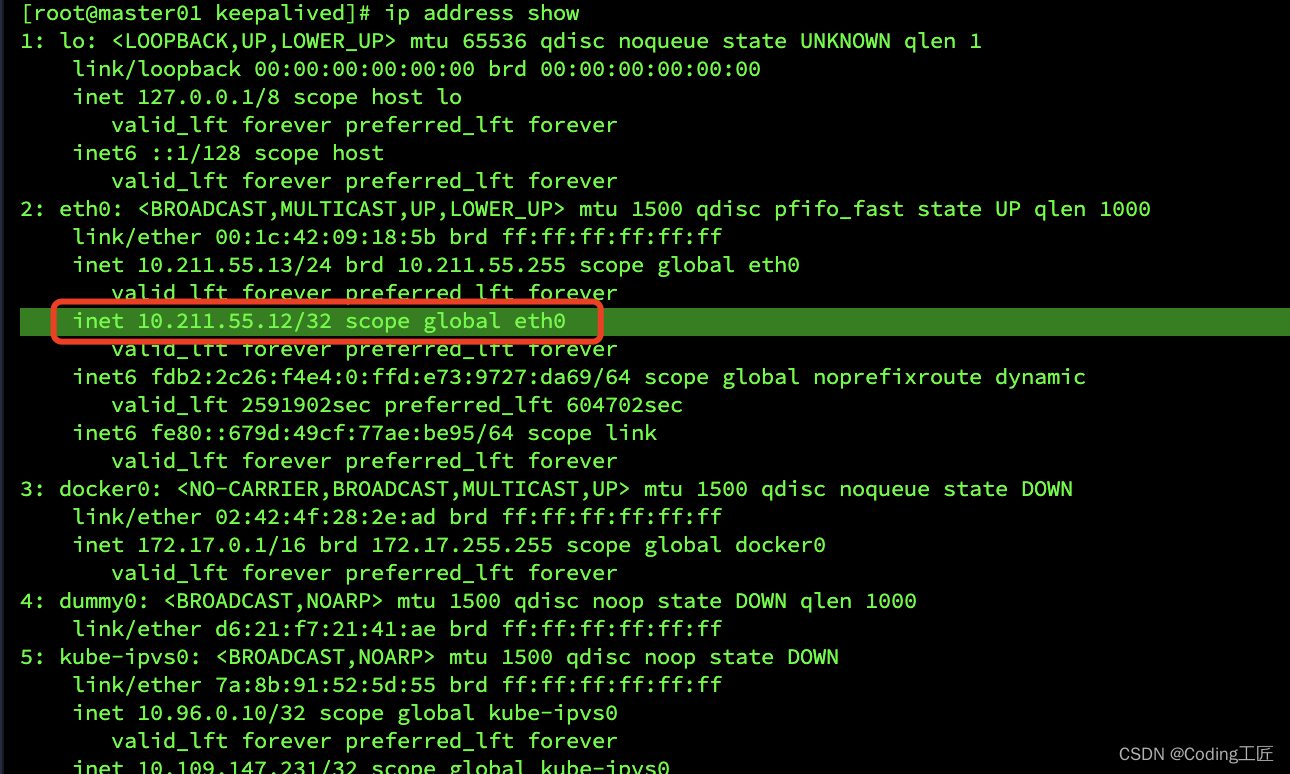

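5.6 Start the keepalived + haproxy services and verify

# Start

systemctl daemon-reload && systemctl enable --now haproxy && systemctl enable --now keepalived

# Verify: the virtual IP 10.211.55.12 should appear on the interface of the current MASTER

ip address show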

6. Install Docker (run on all nodes)

6.1 Install

# Download the Docker CE yum repo file

yum -y install wget && wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# Install, enable, and start Docker

yum -y install docker-ce && systemctl enable docker && systemctl start docker

6.2 Configure Docker

The "exec-opts": ["native.cgroupdriver=systemd"] entry sets Docker's cgroup driver to systemd.

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": [

"https://ebkn7ykm.mirror.aliyuncs.com",

"https://docker.mirrors.ustc.edu.cn",

"http://f1361db2.m.daocloud.io",

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://registry.cn-hangzhou.aliyuncs.com"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

6.3 Start Docker

sudo systemctl daemon-reload && sudo systemctl restart docker

7. Install cri-dockerd (run on all nodes)

7.1 Download and install cri-dockerd

# Create a working directory

mkdir /data/cri-dockerd -p

cd /data/cri-dockerd

# Download the cri-dockerd rpm package

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.3/cri-dockerd-0.3.3-3.el7.x86_64.rpm

# Install cri-dockerd

yum install -y cri-dockerd-0.3.3-3.el7.x86_64.rpm

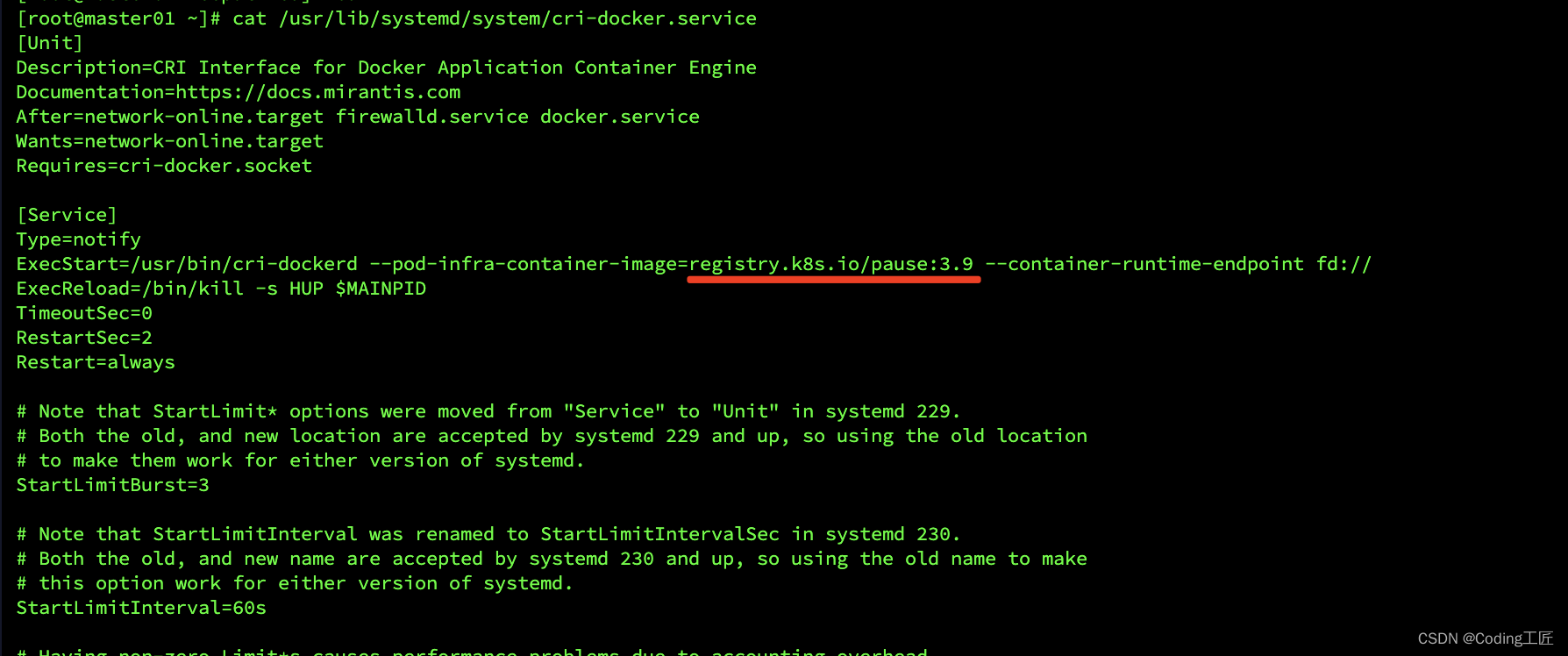

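7.2 Configure cri-dockerd

# Edit the unit file

vim /usr/lib/systemd/system/cri-docker.service

# Change line 10 (the ExecStart line) to

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.9 --container-runtime-endpoint fd://

7.3 Restart cri-dockerd

# Reload systemd so the edited unit file is picked up, then start and enable the service

systemctl daemon-reload && systemctl start cri-docker && systemctl enable cri-docker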

8. Install the Kubernetes cluster

8.1 Configure a China-based yum mirror (all nodes)

cat > /etc/yum.repos.d/k8s.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

8.2 Install kubeadm, kubectl, and kubelet (all nodes)

# Install the pinned versions

yum -y install kubeadm-1.27.2-0 kubelet-1.27.2-0 kubectl-1.27.2-0

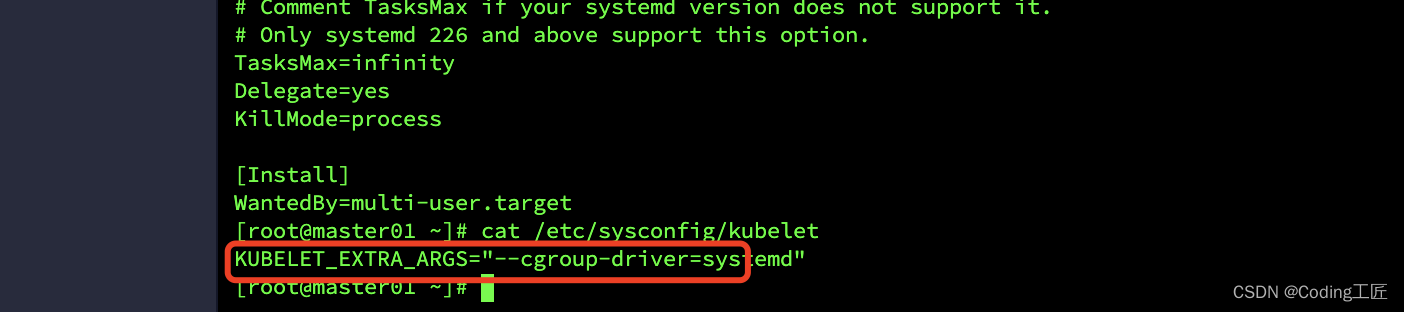

8.3 Configure kubelet (all nodes)

vim /etc/sysconfig/kubelet

# Delete the existing content and enter the following

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

# Enable kubelet at boot

systemctl enable kubelet

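8.4 Prepare the images (all nodes)

The upstream image registry is not directly reachable from mainland China without a proxy, so the images are pulled from a domestic mirror and then retagged.

# The quoted 'EOF' keeps the shell from expanding ${list} and $item while the file is being written

cat > alik8simages.sh << 'EOF'

#!/bin/bash

list='kube-apiserver:v1.27.2

kube-controller-manager:v1.27.2

kube-scheduler:v1.27.2

kube-proxy:v1.27.2

pause:3.9

coredns/coredns:v1.10.1

etcd:3.5.7-0'

for item in ${list}

do

# The Aliyun mirror publishes CoreDNS as coredns:<tag>, without the coredns/ prefix

src=registry.aliyuncs.com/google_containers/${item#coredns/}

docker pull $src && docker tag $src registry.k8s.io/$item && docker rmi $src

done

EOF

# Run the script

bash alik8simages.sh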

Initialize the first Kubernetes node (master01)

# Create kubeadm-config.yaml with the following content

vim kubeadm-config.yaml

---

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 10.211.55.13 # node IP

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///var/run/cri-dockerd.sock

---

apiVersion: kubeadm.k8s.io/v1beta3

kind: ClusterConfiguration

kubernetesVersion: 1.27.2

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16

  serviceSubnet: 10.96.0.0/12

scheduler: {}

apiServer:

  certSANs:

  - 10.211.55.12

controlPlaneEndpoint: "10.211.55.12:16443" # virtual IP

etcd:

  external:

    endpoints: # etcd cluster addresses

    - http://10.211.55.13:2379

    - http://10.211.55.14:2379

    - http://10.211.55.15:2379

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 0s

    enabled: true

  x509:

    clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 0s

    cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging:

  flushFrequency: 0

  options:

    json:

      infoBufferSize: "0"

  verbosity: 0

memorySwap: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

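Run the following command to initialize the node:

# Initialize

kubeadm init --config kubeadm-config.yaml --upload-certs --v=9

Initialization succeeded if the output looks like the following. Save it, because the join commands it contains are needed in the later steps.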

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 10.211.55.12:16443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5d53d0f711115fbc37817eeca72b1399a28d85ecd787c0660e74465a82293319 \
--control-plane --certificate-key 657a0df9aa44419ea3267fc5cbfc644a7889a7e4ecdc87663be87e774f09c976 \
--cri-socket unix:///var/run/cri-dockerd.sock

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.211.55.12:16443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5d53d0f711115fbc37817eeca72b1399a28d85ecd787c0660e74465a82293319 \
--cri-socket unix:///var/run/cri-dockerd.sock

Run the following commands to set up kubectl access:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

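8.5 Copy the certificates to the other master nodes (from master01 to master02 and master03)

# Run on master01

# Copy the certificates to the other master nodes

vim cp-k8s-cert.sh

# Paste the following content

for host in 14 15; do

scp -r /etc/kubernetes/pki 10.211.55.$host:/etc/kubernetes/

done

# Run the script

sh cp-k8s-cert.sh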

8.6 Delete the redundant certificates before joining the cluster (master02, master03)

# Run on master02 and master03

cd /etc/kubernetes/pki/

rm -rf api*

rm -rf front-proxy-client.*

ls

8.7 Join the cluster (master02, master03)

Note: omitting the trailing --cri-socket unix:///var/run/cri-dockerd.sock causes an error.

Use the command printed when your own master01 was initialized. The one below comes from my cluster, so be sure to replace it.

kubeadm join 10.211.55.12:16443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5d53d0f711115fbc37817eeca72b1399a28d85ecd787c0660e74465a82293319 \
--control-plane --certificate-key 657a0df9aa44419ea3267fc5cbfc644a7889a7e4ecdc87663be87e774f09c976 \
--cri-socket unix:///var/run/cri-dockerd.sock

After joining the cluster successfully, set up kubectl access:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

8.8 Check and verify the cluster status (master01)

kubectl get pods -n kube-system

8.9 Join the worker node to the cluster (worker01)

kubeadm join 10.211.55.12:16443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:5d53d0f711115fbc37817eeca72b1399a28d85ecd787c0660e74465a82293319 \
--cri-socket unix:///var/run/cri-dockerd.sock

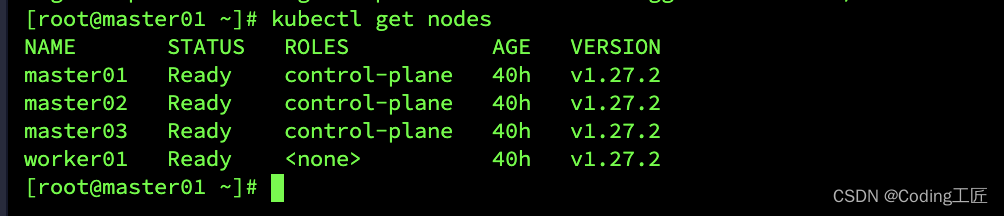

Check that the node joined the cluster with the following command:

kubectl get nodes
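The control-plane and worker join commands in this section differ only in the `--control-plane --certificate-key` pair. As a sketch, the hypothetical helper below assembles either form from its parts, so the pieces copied from your own `kubeadm init` output can be kept in one place. It only prints the command and assumes the cri-dockerd socket path used in this guide.

```shell
# join_command ENDPOINT TOKEN CA_HASH [CERTIFICATE_KEY]
# Print a kubeadm join command; passing CERTIFICATE_KEY produces the
# control-plane variant. Hypothetical helper for illustration.
join_command() (
    endpoint=$1 token=$2 hash=$3 cert_key=${4:-}
    cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $hash"
    if [ -n "$cert_key" ]; then
        cmd="$cmd --control-plane --certificate-key $cert_key"
    fi
    printf '%s\n' "$cmd --cri-socket unix:///var/run/cri-dockerd.sock"
)
```

For example, `join_command 10.211.55.12:16443 "$TOKEN" "$CA_HASH"` prints the worker form, and adding `"$CERT_KEY"` as a fourth argument prints the control-plane form; the three variable names are placeholders for the values from your own output.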

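9. Install the Calico network plugin

9.1 Set up GitHub acceleration

# Look up the IP address of raw.githubusercontent.com

ping raw.githubusercontent.com

# 64 bytes from raw.githubusercontent.com (185.199.108.133): icmp_seq=2 ttl=128 time=271 ms

# Edit /etc/hosts

vim /etc/hosts

# Add the following line (use the IP address that ping returned)

185.199.108.133 raw.githubusercontent.com

9.2 Deploy Calico (version 3.26.0)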

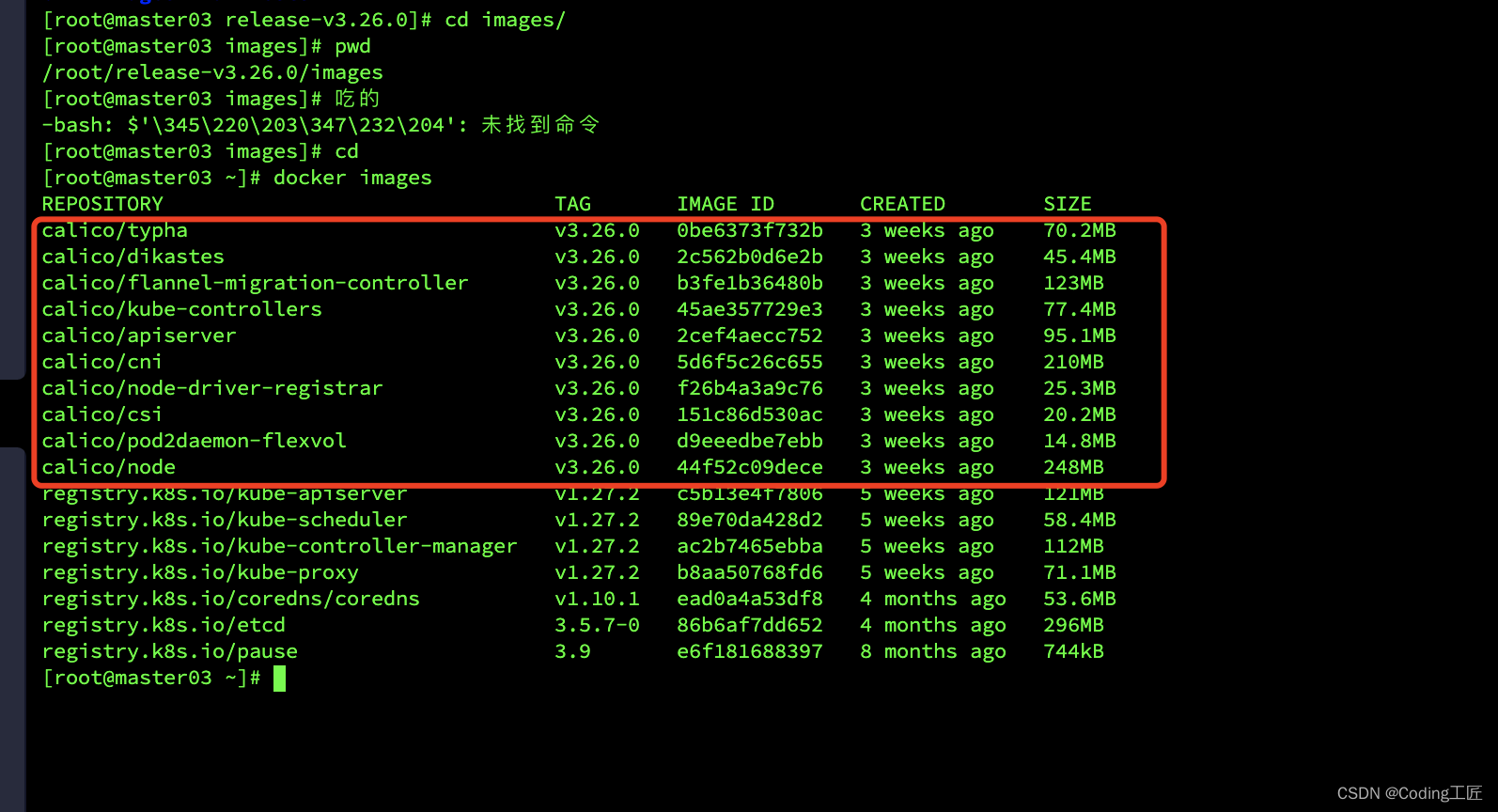

9.2.1 Prepare the Calico images in advance (required on all nodes)

Download the Calico release archive:

# Download

wget https://github.com/projectcalico/calico/releases/download/v3.26.0/release-v3.26.0.tgz

# Extract

tar zxvf release-v3.26.0.tgz

# Enter the images directory

cd release-v3.26.0/images

# Load the images

docker load -i calico-cni.tar && docker load -i calico-dikastes.tar && docker load -i calico-flannel-migration-controller.tar && docker load -i calico-kube-controllers.tar && docker load -i calico-node.tar && docker load -i calico-pod2daemon.tar && docker load -i calico-typha.tar

After the images are loaded on every node, the result looks like the figure below:

9.2.2 Deploy the Calico network plugin (master01)

# Install the Tigera operator

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/tigera-operator.yaml

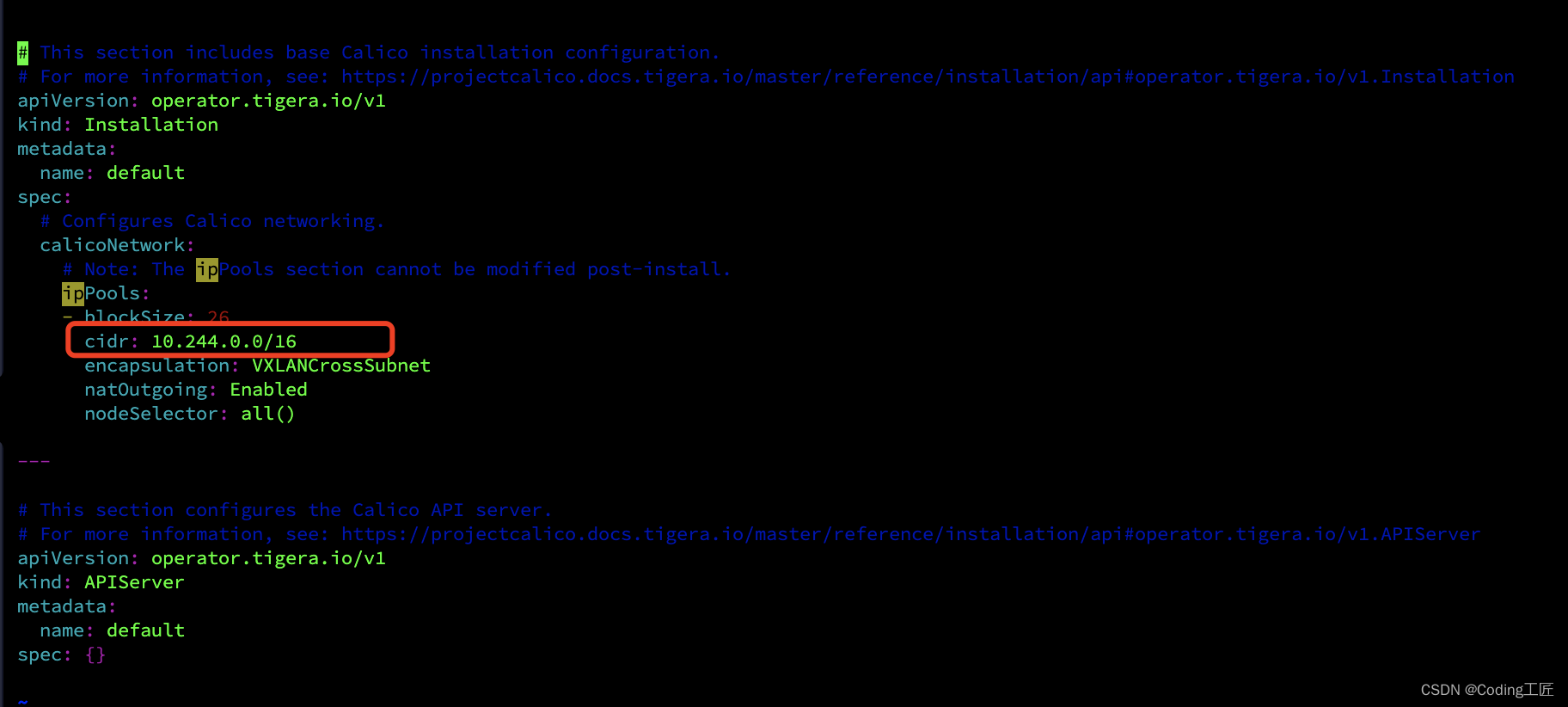

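Download the configuration file and edit it:

# Download the configuration file

wget https://raw.githubusercontent.com/projectcalico/calico/v3.26.0/manifests/custom-resources.yaml

# Edit the configuration file

vim custom-resources.yaml

Change the cidr on line 13 of the file so that it matches the podSubnet (10.244.0.0/16) in the kubeadm-config.yaml that was used to initialize master01 in section 8.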
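The same change can be made without opening an editor. The sketch below rewrites the value of every `cidr:` key in a file while keeping its indentation; `set_calico_cidr` is a hypothetical helper name, and it assumes the file contains the single `cidr:` line found in the downloaded custom-resources.yaml.

```shell
# set_calico_cidr FILE CIDR
# Replace the value of each "cidr:" key in FILE with CIDR, preserving the
# leading indentation. Hypothetical helper for illustration.
set_calico_cidr() (
    file=$1 cidr=$2
    tmp=$(mktemp) || exit 1
    # "|" is used as the sed delimiter because the CIDR contains "/".
    sed "s|^\([[:space:]]*cidr:\).*|\1 $cidr|" "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    exit "$rc"
)
```

With the podSubnet from this guide, the call would be `set_calico_cidr custom-resources.yaml 10.244.0.0/16`; running `grep cidr custom-resources.yaml` afterwards shows the result.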

After saving the change, apply the configuration file:

# Apply the configuration file

kubectl create -f custom-resources.yaml

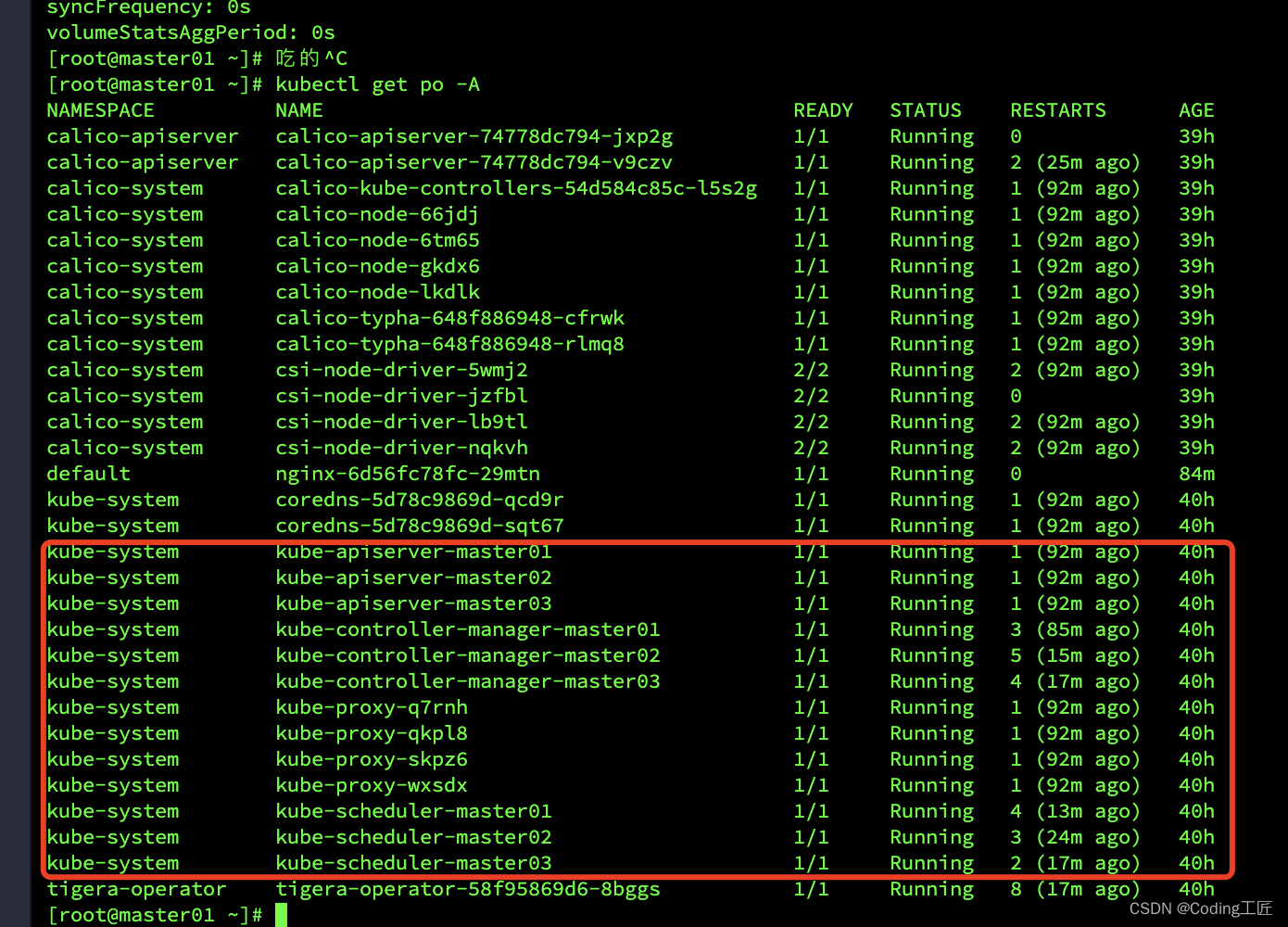

Once the deployment finishes, check the status of the cluster nodes:

kubectl get nodes

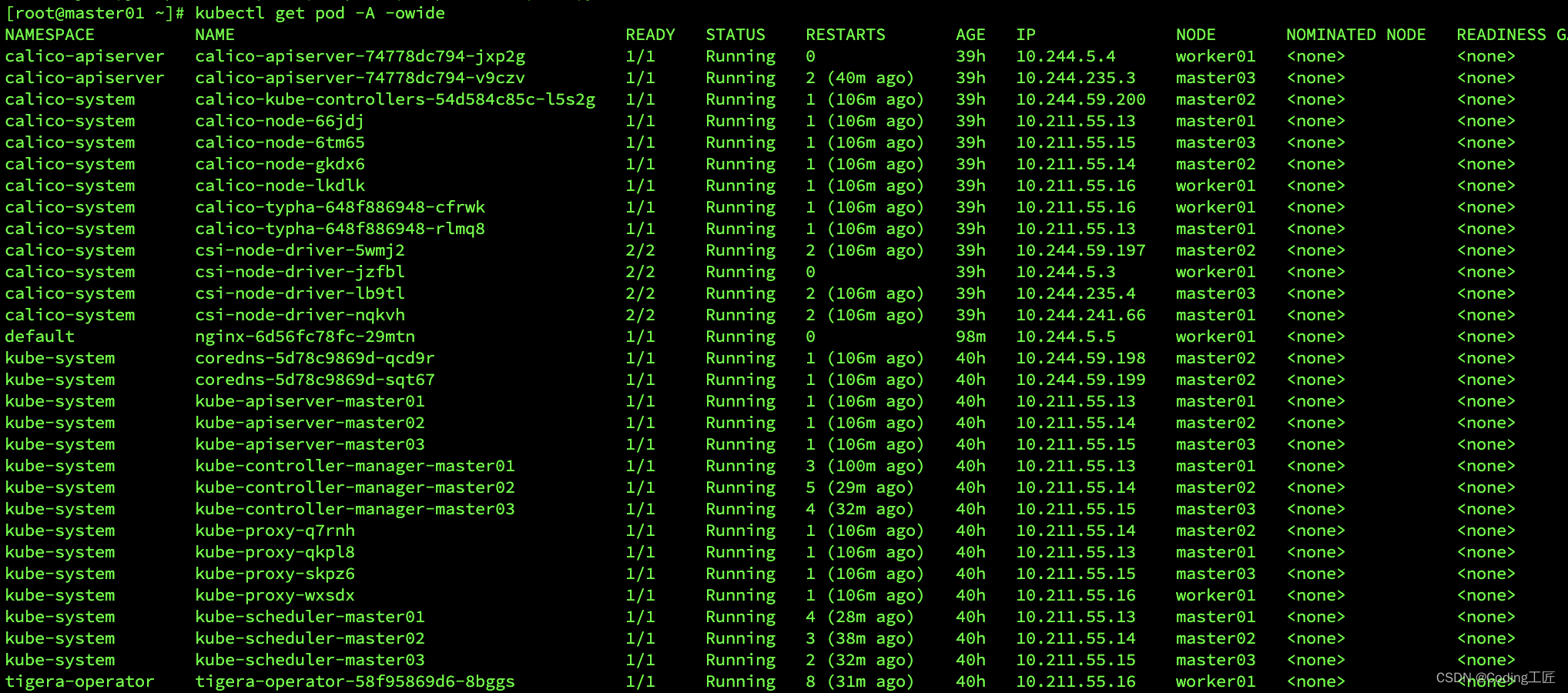

Check the status of all pods:

kubectl get po -A -owide

Once every pod is running normally, you can start deploying the services you want.

10. Create a service

10.1 Create an Nginx test service

# Create the deployment

kubectl create deployment nginx --image=nginx:1.14-alpine

# Create the service

kubectl expose deploy nginx --port=80 --target-port=80 --type=NodePort

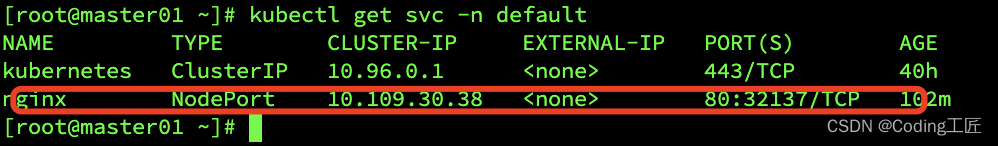

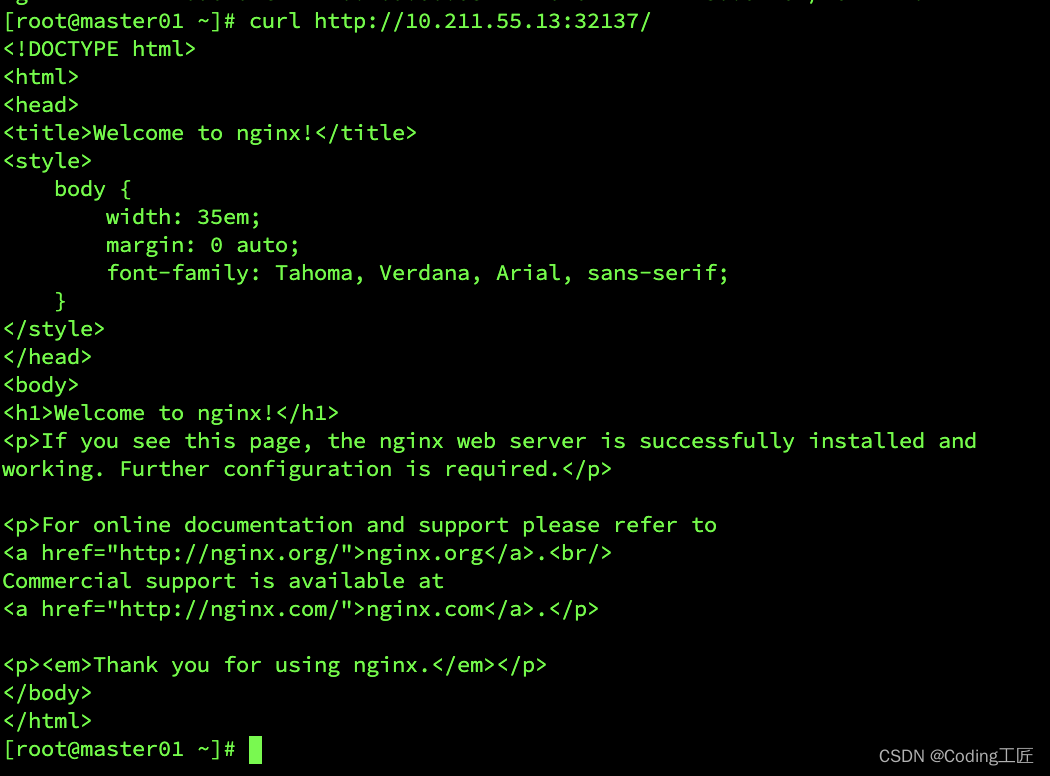

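View the nginx service that was just created:

kubectl get svc -n default

Access URL: http://10.211.55.13:32173 (the NodePort, 32173 here, is assigned by the cluster and will differ on yours)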
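The port in that URL comes from the PORT(S) column of `kubectl get svc`, which has the form `80:32173/TCP`. As a small sketch, the hypothetical helper below extracts the NodePort from such a value using only shell parameter expansion.

```shell
# node_port PORTS
# Print the NodePort from a PORT(S) value such as "80:32173/TCP".
# Hypothetical helper for illustration.
node_port() (
    ports=$1
    mapped=${ports#*:}               # drop the service port and the colon
    printf '%s\n' "${mapped%%/*}"    # drop the protocol suffix
)
```

For the service above, `node_port 80:32173/TCP` prints 32173. kubectl can also return the value directly with `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.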

That completes the installation of a highly available Kubernetes 1.27 cluster.