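Contents

- Introduction
- Technical Workflow
  - 1. Load the dependencies
  - 2. Initialize the training and validation generators
  - 3. Build the network architecture
  - 4. Compile and train the model
  - 5. Save the model weights
  - 6. Output the prediction results
- Complete Program
  - 1. train.py
  - 2. gui.py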

Introduction

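I plan to write a series of blog posts on public hands-on machine learning projects, starting with beginner-level ones.

This post covers a hands-on project: training a model to create your own emoji. The original project is: Emojify – Create your own emoji with Deep Learning.

Some links on that English page no longer open, so here I provide the files the program uses, along with an analysis of parts of the code, in the hope of helping you build a deep learning project from scratch.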

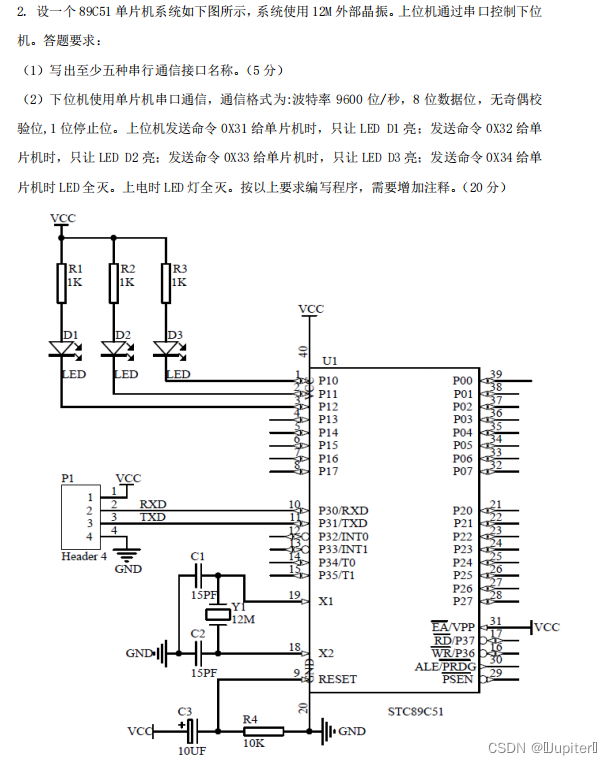

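Technical Workflow

Emojify – Create your own emoji with Deep Learning: the goal of this machine learning project is to classify a person's facial expression and map it to an emoji.

The program builds a convolutional neural network (CNN) to recognize facial expressions, then maps each recognized emotion to the corresponding emoji or avatar.

In the example image, the webcam on the left captures the face in real time, and the right side shows the detection result "surprised" mapped to its emoji.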

1. Load the dependencies

```python
import numpy as np
import cv2
from keras.models import Sequential
from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.optimizers import Adam
from keras.preprocessing.image import ImageDataGenerator
```

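Notes:

The deep learning network in this project is built with Keras, so Keras and TensorFlow must be installed beforehand. The versions I got working are:

- keras 2.10.0
- tensorflow 2.10.0

Install them from the command line with:

```bash
pip install keras==2.10.0
pip install tensorflow==2.10.0
```

Install any other packages as needed: if running the program reports that a package is missing, install that package (for example, `cv2` is provided by `opencv-python` and `PIL` by `Pillow`).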
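Rather than discovering missing packages one error at a time, you can check them all up front. The sketch below is a hypothetical helper (not part of the original project): it maps each import name used in train.py and gui.py to the pip package assumed to provide it and uses `importlib.util.find_spec` to list the ones that cannot be found.

```python
import importlib.util

# Import name -> pip package that provides it (assumed mapping for this project).
REQUIRED = {
    "numpy": "numpy",
    "cv2": "opencv-python",
    "keras": "keras==2.10.0",
    "tensorflow": "tensorflow==2.10.0",
    "PIL": "Pillow",
}


def missing_packages(required=REQUIRED):
    """Return the pip install targets whose import name cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("All required packages are importable.")
```

`find_spec` only locates the module without importing it, so the check stays fast even for TensorFlow.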

2. Initialize the training and validation generators

```python
train_dir = 'data/train'
val_dir = 'data/test'

# Scale pixel values from [0, 255] to [0, 1]
train_datagen = ImageDataGenerator(rescale=1./255)
val_datagen = ImageDataGenerator(rescale=1./255)

# Read 48x48 grayscale images; each subfolder of the directory is one class
train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(48, 48),
    batch_size=64,
    color_mode="grayscale",
    class_mode='categorical')   # one-hot labels, as categorical_crossentropy expects

validation_generator = val_datagen.flow_from_directory(
    val_dir,
    target_size=(48, 48),
    batch_size=64,
    color_mode="grayscale",
    class_mode='categorical')
```

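Notes:

- ImageDataGenerator (`from keras.preprocessing.image import ImageDataGenerator`): preprocesses images in batches; `rescale=1./255` scales pixel values from [0, 255] to [0, 1].
- flow_from_directory: takes a directory path and yields batches of normalized (optionally augmented) images together with their labels, treating each subfolder as one class. Parameters include the directory (e.g. `val_dir`), `target_size`, `color_mode`, `class_mode` (`'categorical'` produces one-hot labels) and the batch size `batch_size`.
  - batch_size: the number of samples used in one iteration. Setting batch_size to the full dataset size gives (batch) gradient descent; batch_size=1 gives stochastic gradient descent (SGD).
  - iteration: one iteration processes one batch and updates the parameters once.
  - epoch: one full pass over all training samples.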
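Since flow_from_directory derives labels from folder names, it is worth knowing which index each emotion receives. When `classes` is not passed, Keras numbers the subfolders in sorted (alphanumeric) order. The sketch below mimics that rule; the helper names are hypothetical, and the folder names in `EMOTION_FOLDERS` are an assumption about how the dataset under `data/train` is organized.

```python
import os


def class_indices(subdir_names):
    """Number class names the way flow_from_directory does by default:
    sorted subfolder names, starting from 0."""
    return {name: index for index, name in enumerate(sorted(subdir_names))}


def class_indices_from_directory(directory):
    """Apply class_indices to the immediate subfolders of `directory`."""
    names = [entry.name for entry in os.scandir(directory) if entry.is_dir()]
    return class_indices(names)


# Assumed subfolder names under data/train (one folder per emotion), in arbitrary order.
EMOTION_FOLDERS = ["surprised", "angry", "happy", "sad", "neutral", "fearful", "disgusted"]

if __name__ == "__main__":
    print(class_indices(EMOTION_FOLDERS))
```

With these folder names, the sorted order is angry, disgusted, fearful, happy, neutral, sad, surprised, which lines up with indices 0 to 6 in `emotion_dict` used later. If your folders are named differently, compare against `train_generator.class_indices` before relying on that dictionary.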

3. Build the network architecture

```python
emotion_model = Sequential()
# Input: one 48x48 grayscale image (a single channel)
emotion_model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(48, 48, 1)))  # -> 46x46x32
emotion_model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))    # -> 44x44x64
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))                        # -> 22x22x64
emotion_model.add(Dropout(0.25))
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))   # -> 20x20x128
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))                        # -> 10x10x128
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))   # -> 8x8x128
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))                        # -> 4x4x128
emotion_model.add(Dropout(0.25))
emotion_model.add(Flatten())                                             # -> 2048
emotion_model.add(Dense(1024, activation='relu'))
emotion_model.add(Dropout(0.5))
# Output: one probability per emotion class (7 classes)
emotion_model.add(Dense(7, activation='softmax'))
```

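- Sequential(): the sequential model (`from keras.models import Sequential`), as opposed to the functional model. A sequential model builds a deep neural network by stacking layers one after another.
- add(): appends a layer to the stack. The layers used here are Conv2D (2D convolution layer), MaxPooling2D (2D max-pooling layer), Dropout (randomly drops units during training to reduce overfitting), Flatten (reshapes the feature maps into a vector) and Dense (a fully connected layer; what is usually called an FC layer is named Dense in Keras). The final `Dense(7, activation='softmax')` outputs one probability per emotion class.

Functional model: Model(). It handles architectures that a plain stack of layers cannot express, such as multi-input and multi-output models, and is typically how networks like VGG and Inception (including pretrained versions of them) are built and reused.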
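A quick sanity check on the architecture above: the plain-Python sketch below traces the feature-map size through each layer under Keras' default settings (padding='valid' and stride 1 for Conv2D, stride equal to the pool size for MaxPooling2D) and counts the trainable parameters. The helper names are illustrative, not Keras API; the totals should agree with what `emotion_model.summary()` reports.

```python
def conv_out(size, kernel=3):
    """Conv2D with padding='valid' and stride 1 loses kernel - 1 pixels per side pair."""
    return size - kernel + 1


def pool_out(size, pool=2):
    """MaxPooling2D with padding='valid' and strides=pool_size."""
    return size // pool


# Layers from Section 3 that change the shape or hold weights (Dropout does neither).
PLAN = [("conv", 32), ("conv", 64), ("pool", None),
        ("conv", 128), ("pool", None),
        ("conv", 128), ("pool", None)]


def trace_emotion_model(input_size=48, in_channels=1, hidden=1024, num_classes=7):
    size, channels, params, layers = input_size, in_channels, 0, []
    for kind, filters in PLAN:
        if kind == "conv":
            params += 3 * 3 * channels * filters + filters  # 3x3 kernels + biases
            size, channels = conv_out(size), filters
        else:
            size = pool_out(size)
        layers.append((kind, size, channels))
    flat = size * size * channels                   # length of the Flatten output
    params += flat * hidden + hidden                # Dense(1024)
    params += hidden * num_classes + num_classes    # Dense(7, softmax)
    return layers, flat, params


if __name__ == "__main__":
    layers, flat, params = trace_emotion_model()
    for kind, size, channels in layers:
        print(f"{kind:4s} -> {size}x{size}x{channels}")
    print("Flatten:", flat, "Total params:", params)
```

The flattened vector has 4 × 4 × 128 = 2048 elements, and most of the roughly 2.3 million parameters sit in the first Dense layer (2048 × 1024 weights), which is why that layer is followed by the strongest Dropout (0.5).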

4. Compile and train the model

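```python
# decay is accepted by the Keras 2.10 optimizer pinned above; newer Keras releases reject it
emotion_model.compile(loss='categorical_crossentropy',
                      optimizer=Adam(learning_rate=0.0001, decay=1e-6),
                      metrics=['accuracy'])

# fit() accepts generators directly; fit_generator() is deprecated in TensorFlow 2.x
emotion_model_info = emotion_model.fit(
    train_generator,
    steps_per_epoch=28709 // 64,      # 28709 training images, batch size 64
    epochs=50,
    validation_data=validation_generator,
    validation_steps=7178 // 64)      # 7178 validation (test) images
```

- compile(): configures the model for training. Parameters: loss, a string naming the loss function (categorical_crossentropy matches the one-hot labels from `class_mode='categorical'`); optimizer, the optimization algorithm; metrics, a list of metrics used to evaluate the model.
- fit() (written as fit_generator() in older Keras code): feeds the data into the finished model for training and returns a History object (stored here in emotion_model_info) that records the loss and metric values during training. Parameters: the training generator; steps_per_epoch, the number of batches drawn from the generator per epoch; epochs, the number of passes over the training data; validation_data, the validation data (here a generator); validation_steps, the number of validation batches per epoch.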
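The step counts in the training call are plain integer arithmetic. The sketch below (hypothetical helper names) shows what `28709 // 64` and `7178 // 64` evaluate to and compares them with the ceiling division needed to cover every image once per epoch.

```python
import math


def steps_per_epoch(num_samples, batch_size):
    """Floor division, as in the training call: a trailing partial batch
    is not counted as its own step."""
    return num_samples // batch_size


def batches_to_cover(num_samples, batch_size):
    """Ceiling division: the number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


TRAIN_IMAGES, TEST_IMAGES, BATCH, EPOCHS = 28709, 7178, 64, 50

if __name__ == "__main__":
    print("train steps:", steps_per_epoch(TRAIN_IMAGES, BATCH), "vs", batches_to_cover(TRAIN_IMAGES, BATCH))
    print("val steps:  ", steps_per_epoch(TEST_IMAGES, BATCH), "vs", batches_to_cover(TEST_IMAGES, BATCH))
    print("parameter updates over all epochs:", steps_per_epoch(TRAIN_IMAGES, BATCH) * EPOCHS)
```

With floor division each "epoch" is 448 training batches rather than 449, so it is slightly shorter than a full pass; the generator keeps cycling, so no image is skipped permanently. In Keras 2.x, `len(train_generator)` returns the ceiling value, so `steps_per_epoch=len(train_generator)` is an alternative that avoids hard-coding the dataset size.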

5. Save the model weights

```python
emotion_model.save_weights('emotion_model.h5')
```

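- save_weights: saves the model's weights (not its architecture) to emotion_model.h5. To reuse them, rebuild the same network and call load_weights, as the complete program below does.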

6. Output the prediction results

```python
# Assumes emotion_model has been built (Section 3) and trained or loaded
cv2.ocl.setUseOpenCL(False)

emotion_dict = {0: "Angry", 1: "Disgusted", 2: "Fearful", 3: "Happy", 4: "Neutral", 5: "Sad", 6: "Surprised"}

# Load the Haar cascade face detector once, outside the loop
bounding_box = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# start the webcam feed
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # check before using frame: it is None when the read fails
        break
    frame = cv2.resize(frame, (300, 300))

    # Detect faces with the Haar cascade and draw a bounding box around each one
    gray_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    num_faces = bounding_box.detectMultiScale(gray_frame, scaleFactor=1.3, minNeighbors=5)

    for (x, y, w, h) in num_faces:
        cv2.rectangle(frame, (x, y-50), (x+w, y+h+10), (255, 0, 0), 2)
        roi_gray_frame = gray_frame[y:y + h, x:x + w]
        # Resize to 48x48, scale to [0, 1] like the training data, shape (1, 48, 48, 1)
        cropped_img = np.expand_dims(np.expand_dims(cv2.resize(roi_gray_frame, (48, 48)) / 255.0, -1), 0)
        emotion_prediction = emotion_model.predict(cropped_img, verbose=0)
        maxindex = int(np.argmax(emotion_prediction))
        cv2.putText(frame, emotion_dict[maxindex], (x+20, y-60), cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2, cv2.LINE_AA)

    cv2.imshow('Video', cv2.resize(frame, (300, 300), interpolation=cv2.INTER_CUBIC))
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```
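The line that builds `cropped_img` packs several preprocessing steps into one expression. The sketch below spells them out as two hypothetical helpers, assuming the face crop has already been resized to 48x48 with `cv2.resize`. Because training used `rescale=1./255`, the crop is scaled the same way before prediction; the result is then decoded with argmax into an emotion name.

```python
import numpy as np

EMOTIONS = {0: "Angry", 1: "Disgusted", 2: "Fearful", 3: "Happy",
            4: "Neutral", 5: "Sad", 6: "Surprised"}


def to_model_input(face_48x48):
    """Turn a 48x48 grayscale uint8 crop into a batch of one model input."""
    face = np.asarray(face_48x48, dtype=np.float32) / 255.0  # same scaling as rescale=1./255
    face = np.expand_dims(face, -1)    # (48, 48) -> (48, 48, 1): add the channel axis
    return np.expand_dims(face, 0)     # (48, 48, 1) -> (1, 48, 48, 1): add the batch axis


def decode_prediction(probabilities, labels=EMOTIONS):
    """Return the label of the class with the highest softmax probability."""
    return labels[int(np.argmax(probabilities))]


if __name__ == "__main__":
    fake_face = np.random.randint(0, 256, size=(48, 48), dtype=np.uint8)
    x = to_model_input(fake_face)
    print(x.shape, x.min() >= 0.0, x.max() <= 1.0)
    print(decode_prediction(np.array([[0.1, 0.0, 0.0, 0.7, 0.1, 0.05, 0.05]])))
```

In the webcam loop, `to_model_input(cv2.resize(roi_gray_frame, (48, 48)))` would produce the array passed to `emotion_model.predict`.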

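This step uses OpenCV's Haar cascade XML file to detect face bounding boxes in the webcam feed and then predicts the emotion of each face.

- cv2.ocl.setUseOpenCL(False): disables OpenCL acceleration.
- cv2.VideoCapture(): an argument of 0 opens the default camera (for example a laptop's built-in webcam); a file path opens that video file instead.
- cv2.CascadeClassifier(): loads the Haar cascade face classifier. This step often fails because the installed OpenCV package may not include the file. To make debugging easier, I have compressed and uploaded it: Haar cascade face classifier .xml file. After downloading, unzip it into the default cv2.data.haarcascades directory. cv2.data.haarcascades holds the path of the cv2 data folder; by default it is `**\anaconda3\lib\site-packages\cv2\data`.
- detectMultiScale: returns one (x, y, w, h) rectangle per detected face. Parameters include scaleFactor, how much the image is shrunk at each scale of the search; minNeighbors, how many overlapping candidate detections a region needs before it is kept as a face; minSize, the minimum object size; maxSize, the maximum object size, and so on. Tuning minNeighbors, minSize and maxSize helps filter out false detections.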
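Since a missing cascade file is a common failure, it helps to check for it explicitly. Note that `cv2.CascadeClassifier` does not raise when the file is missing; it returns an empty classifier, which later fails in `detectMultiScale`. The sketch below uses two hypothetical helpers: `find_cascade` searches a list of folders, and `load_face_detector` tries `cv2.data.haarcascades` plus any extra folders (assumed here to include the current directory, where a downloaded copy might be placed) and then verifies the result with `CascadeClassifier.empty()`.

```python
import os

CASCADE_FILE = "haarcascade_frontalface_default.xml"


def find_cascade(search_dirs, filename=CASCADE_FILE):
    """Return the first existing path to `filename` in `search_dirs`, or None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, filename)
        if os.path.isfile(candidate):
            return candidate
    return None


def load_face_detector(extra_dirs=(".",)):
    """Locate and load the Haar cascade, failing loudly instead of silently."""
    import cv2  # imported here so find_cascade can be used without OpenCV installed

    path = find_cascade([cv2.data.haarcascades, *extra_dirs])
    if path is None:
        raise FileNotFoundError(f"{CASCADE_FILE} not found; place it in one of the searched folders")
    detector = cv2.CascadeClassifier(path)
    if detector.empty():
        raise RuntimeError(f"failed to load the cascade from {path}")
    return detector
```

With this, `bounding_box = load_face_detector()` could replace the direct `cv2.CascadeClassifier(...)` call and report a clear error when the file is missing.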

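Complete Program

train.py: the facial emotion training code. With the training code in Sections 4 and 5 commented out and a call that loads the saved weights added, the same script can be used to test the results in real time.

gui.py: a GUI window that provides an interactive interface.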
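Commenting code in and out works, but it is easy to forget which state the script is in. One alternative is a command-line switch. In the sketch below, the `--mode` and `--weights` options are hypothetical and not part of the original project; train.py would call `parse_args()` once and branch on `args.mode`.

```python
import argparse


def parse_args(argv=None):
    """Parse command-line options; argv=None reads sys.argv."""
    parser = argparse.ArgumentParser(
        description="Emojify: train the emotion model or run the webcam demo")
    parser.add_argument("--mode", choices=["train", "webcam"], default="webcam",
                        help="train: run Sections 4-5; webcam: load the weights and run Section 6")
    parser.add_argument("--weights", default="emotion_model.h5",
                        help="file the weights are saved to or loaded from")
    return parser.parse_args(argv)

# Intended use inside train.py (sketch):
#     args = parse_args()
#     if args.mode == "train":
#         ...compile, fit, then emotion_model.save_weights(args.weights)
#     else:
#         emotion_model.load_weights(args.weights)
#         ...webcam loop from Section 6
```

Running `python train.py --mode train` would then train and save, while plain `python train.py` would go straight to the webcam demo.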

1. train.py

```python
"""
Emojify--create your own emoji with deep learning
"""

# 1. Imports
import numpy as np
import cv2
from keras.models import Sequential
from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D
from keras.optimizers import Adam
from keras.preprocessing.image import ImageDataGenerator

# 2. Initialize the training and validation generators
train_dir = 'data/train'
val_dir = 'data/test'

train_datagen = ImageDataGenerator(rescale=1./255)
val_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(48, 48),
    batch_size=64,
    color_mode="grayscale",
    class_mode='categorical')

validation_generator = val_datagen.flow_from_directory(
    val_dir,
    target_size=(48, 48),
    batch_size=64,
    color_mode="grayscale",
    class_mode='categorical')

# 3. Build convolution network architecture
emotion_model = Sequential()
emotion_model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(48, 48, 1)))
emotion_model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Dropout(0.25))
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Dropout(0.25))
emotion_model.add(Flatten())
emotion_model.add(Dense(1024, activation='relu'))
emotion_model.add(Dropout(0.5))
emotion_model.add(Dense(7, activation='softmax'))

# 4. Compile and train the model (uncomment sections 4 and 5 to retrain)
# emotion_model.compile(loss='categorical_crossentropy',
#                       optimizer=Adam(learning_rate=0.0001, decay=1e-6),
#                       metrics=['accuracy'])
# emotion_model_info = emotion_model.fit(
#     train_generator,
#     steps_per_epoch=28709 // 64,
#     epochs=50,
#     validation_data=validation_generator,
#     validation_steps=7178 // 64)

# 5. Save the model weights
# emotion_model.save_weights('emotion_model.h5')

# 6. Using openCV haarcascade xml detect the bounding boxes of face in the webcam and predict the emotions
# Load the trained emotion-recognition weights
emotion_model.load_weights('emotion_model.h5')

cv2.ocl.setUseOpenCL(False)

emotion_dict = {0: "Angry", 1: "Disgusted", 2: "Fearful", 3: "Happy", 4: "Neutral", 5: "Sad", 6: "Surprised"}

# Load the Haar cascade face detector once, outside the loop
bounding_box = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

# start the webcam feed
cap = cv2.VideoCapture(0)

while True:
    ret, frame = cap.read()
    if not ret:  # frame is None when the read fails
        break
    frame = cv2.resize(frame, (300, 300))

    # Detect faces with the Haar cascade and draw a bounding box around each one
    gray_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    num_faces = bounding_box.detectMultiScale(gray_frame, scaleFactor=1.3, minNeighbors=5)

    for (x, y, w, h) in num_faces:
        cv2.rectangle(frame, (x, y-50), (x+w, y+h+10), (255, 0, 0), 2)
        roi_gray_frame = gray_frame[y:y + h, x:x + w]
        # Scale to [0, 1] like the training data (rescale=1./255)
        cropped_img = np.expand_dims(np.expand_dims(cv2.resize(roi_gray_frame, (48, 48)) / 255.0, -1), 0)
        emotion_prediction = emotion_model.predict(cropped_img, verbose=0)
        maxindex = int(np.argmax(emotion_prediction))
        cv2.putText(frame, emotion_dict[maxindex], (x+20, y-60), cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2, cv2.LINE_AA)

    cv2.imshow('Video', cv2.resize(frame, (300, 300), interpolation=cv2.INTER_CUBIC))
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

2. gui.py

```python
"""
Code for GUI and mapping with emojis
"""
import tkinter as tk
from tkinter import *
# Import PIL after the tkinter star import: tkinter also defines a name
# Image, and this later import must win so that Image.open() is PIL's.
from PIL import Image, ImageTk

import cv2
import numpy as np
from keras.models import Sequential
from keras.layers import Conv2D, Dense, Dropout, Flatten, MaxPooling2D

# Same architecture as in train.py, so that the saved weights fit
emotion_model = Sequential()
emotion_model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(48, 48, 1)))
emotion_model.add(Conv2D(64, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Dropout(0.25))
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Conv2D(128, kernel_size=(3, 3), activation='relu'))
emotion_model.add(MaxPooling2D(pool_size=(2, 2)))
emotion_model.add(Dropout(0.25))
emotion_model.add(Flatten())
emotion_model.add(Dense(1024, activation='relu'))
emotion_model.add(Dropout(0.5))
emotion_model.add(Dense(7, activation='softmax'))
emotion_model.load_weights('emotion_model.h5')

cv2.ocl.setUseOpenCL(False)

emotion_dict = {0: " Angry ", 1: "Disgusted", 2: " Fearful ", 3: " Happy ", 4: " Neutral ", 5: " Sad ", 6: "Surprised"}

# One emoji per index 0-6 in emotion_dict ("surpriced.png" is spelled as in the
# original code; adjust it to match the file in your emojis folder)
emoji_dist = {0: "./emojis/angry.png", 1: "./emojis/disgusted.png", 2: "./emojis/fearful.png",
              3: "./emojis/happy.png", 4: "./emojis/neutral.png", 5: "./emojis/sad.png",
              6: "./emojis/surpriced.png"}

last_frame1 = np.zeros((480, 640, 3), dtype=np.uint8)
show_text = [0]

# Open the camera and load the face detector once, not on every refresh
cap1 = cv2.VideoCapture(0)
if not cap1.isOpened():
    print("cant open the camera1")
bounding_box = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')


def show_vid():
    global last_frame1
    flag1, frame1 = cap1.read()
    if not flag1:  # frame1 is None when the read fails
        print("Major error!")
        return
    frame1 = cv2.resize(frame1, (600, 500))
    gray_frame = cv2.cvtColor(frame1, cv2.COLOR_BGR2GRAY)
    num_faces = bounding_box.detectMultiScale(gray_frame, scaleFactor=1.3, minNeighbors=5)
    for (x, y, w, h) in num_faces:
        cv2.rectangle(frame1, (x, y-50), (x+w, y+h+10), (255, 0, 0), 2)
        roi_gray_frame = gray_frame[y:y + h, x:x + w]
        # Scale to [0, 1] like the training data (rescale=1./255)
        cropped_img = np.expand_dims(np.expand_dims(cv2.resize(roi_gray_frame, (48, 48)) / 255.0, -1), 0)
        prediction = emotion_model.predict(cropped_img, verbose=0)
        show_text[0] = int(np.argmax(prediction))

    last_frame1 = frame1.copy()
    pic = cv2.cvtColor(last_frame1, cv2.COLOR_BGR2RGB)
    imgtk = ImageTk.PhotoImage(image=Image.fromarray(pic))
    lmain.imgtk = imgtk  # keep a reference so Tk does not discard the image
    lmain.configure(image=imgtk)
    lmain.after(10, show_vid)  # schedule the next video frame


def show_vid2():
    frame2 = cv2.imread(emoji_dist[show_text[0]])
    pic2 = cv2.cvtColor(frame2, cv2.COLOR_BGR2RGB)
    imgtk2 = ImageTk.PhotoImage(image=Image.fromarray(pic2))  # use the RGB copy
    lmain2.imgtk2 = imgtk2
    lmain3.configure(text=emotion_dict[show_text[0]], font=('arial', 45, 'bold'))
    lmain2.configure(image=imgtk2)
    lmain2.after(10, show_vid2)  # keep the emoji in sync with the latest prediction


if __name__ == '__main__':
    root = tk.Tk()
    img = ImageTk.PhotoImage(Image.open("logo.png"))
    heading = Label(root, image=img, bg='black')
    heading.pack()
    heading2 = Label(root, text="Photo to Emoji", pady=20, font=('arial', 45, 'bold'), bg='black', fg='#CDCDCD')
    heading2.pack()

    lmain = tk.Label(master=root, padx=50, bd=10)
    lmain2 = tk.Label(master=root, bd=10)
    lmain3 = tk.Label(master=root, bd=10, fg="#CDCDCD", bg='black')
    lmain.place(x=50, y=250)
    lmain3.place(x=960, y=250)
    lmain2.place(x=900, y=350)

    root.title("Photo To Emoji")
    root.geometry("1400x900+100+10")
    root['bg'] = 'black'
    Button(root, text='Quit', fg="red", command=root.destroy, font=('arial', 25, 'bold')).pack(side=BOTTOM)

    show_vid()
    show_vid2()
    root.mainloop()
    cap1.release()  # free the camera once the window is closed
```
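A pitfall worth knowing in the emoji mapping: Python accepts a dict literal with a repeated key and silently keeps only the last value, so a hand-typed path table can end up missing an index and raise KeyError only when that emotion is predicted. The sketch below uses hypothetical helpers to derive one path per entry of the emotion dictionary and to list any uncovered indices; the `./emojis` folder and lowercase `.png` names are assumptions based on the paths in gui.py.

```python
import os

EMOTIONS = {0: "Angry", 1: "Disgusted", 2: "Fearful", 3: "Happy",
            4: "Neutral", 5: "Sad", 6: "Surprised"}


def build_emoji_paths(emotions=EMOTIONS, folder="./emojis", overrides=None):
    """Derive one emoji path per emotion index from its label;
    `overrides` lets individual files keep a different name."""
    paths = {index: os.path.join(folder, name.strip().lower() + ".png")
             for index, name in emotions.items()}
    paths.update(overrides or {})
    return paths


def missing_indices(emotions, emoji_paths):
    """Emotion indices with no emoji path (these would raise KeyError)."""
    return sorted(set(emotions) - set(emoji_paths))


# A hand-written literal with key 2 typed twice: only the last value survives.
typo_paths = {0: "./emojis/angry.png", 2: "./emojis/disgusted.png", 2: "./emojis/fearful.png"}

if __name__ == "__main__":
    print(missing_indices(EMOTIONS, typo_paths))
    paths = build_emoji_paths(overrides={6: "./emojis/surpriced.png"})
    print(missing_indices(EMOTIONS, paths))
```

Running a check like `missing_indices` once at startup turns a crash that appears only for one facial expression into an immediate, obvious error.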

Output of gui.py:

If you have any questions, feel free to point them out and discuss them.
