Deploying YOLOv8 on the Horizon Sunrise X3 Pi

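- Overall workflow

- 1. Export the ONNX model

- Export

- Verify the ONNX model with YOLOV8_onnxruntime.py

- utils.py

- 2. Convert to a bin model on the development machine

- 2.1 Prepare the calibration images

- 2.2 The YAML file required for conversion

- 2.3 Run the conversion

- 3. Verify **quantized_model.onnx on the development machine

- 4. Run the bin model on the board

- Resource links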

Overall workflow

1. Export the ONNX model

Export

Export the ONNX model with the YOLOv8 GitHub repository (ultralytics). Be sure to set opset to 11.

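from ultralytics import YOLO

# Build the model from its YAML definition, then load the pretrained weights.
# The second assignment replaces the first, so only the .pt line is strictly needed.
model = YOLO('yolov8s.yaml')
model = YOLO('yolov8s.pt')

# Note: set opset=11
success = model.export(format='onnx', opset=11)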

This generates yolov8s.onnx in the directory the script is run from.

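Verify the ONNX model with YOLOV8_onnxruntime.py

Use YOLOV8_onnxruntime.py to check that the exported ONNX model works. If it runs correctly, it writes result.jpg with the detected objects drawn on the image.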

import time

import cv2
import numpy as np
import onnxruntime

from utils import xywh2xyxy, nms, draw_detections


class YOLOv8:

    def __init__(self, path, conf_thres=0.7, iou_thres=0.5):
        self.conf_threshold = conf_thres
        self.iou_threshold = iou_thres

        # Initialize model
        self.initialize_model(path)

    def __call__(self, image):
        return self.detect_objects(image)

    def initialize_model(self, path):
        self.session = onnxruntime.InferenceSession(path,
                                                    providers=['CUDAExecutionProvider',
                                                               'CPUExecutionProvider'])
        # Get model info
        self.get_input_details()
        self.get_output_details()

    def detect_objects(self, image):
        input_tensor = self.prepare_input(image)

        # Perform inference on the image
        outputs = self.inference(input_tensor)

        self.boxes, self.scores, self.class_ids = self.process_output(outputs)

        return self.boxes, self.scores, self.class_ids

    def prepare_input(self, image):
        self.img_height, self.img_width = image.shape[:2]

        input_img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

        # Resize input image
        input_img = cv2.resize(input_img, (self.input_width, self.input_height))

        # Scale input pixel values to 0 to 1
        input_img = input_img / 255.0
        # HWC -> CHW, then add the batch dimension (NCHW)
        input_img = input_img.transpose(2, 0, 1)
        input_tensor = input_img[np.newaxis, :, :, :].astype(np.float32)

        return input_tensor

    def inference(self, input_tensor):
        start = time.perf_counter()
        outputs = self.session.run(self.output_names, {self.input_names[0]: input_tensor})
        print(outputs[0].shape)
        # print(f"Inference time: {(time.perf_counter() - start)*1000:.2f} ms")
        return outputs

    def process_output(self, output):
        # Drop the batch axis and put one candidate per row
        predictions = np.squeeze(output[0]).T

        # Filter out object confidence scores below threshold
        scores = np.max(predictions[:, 4:], axis=1)
        predictions = predictions[scores > self.conf_threshold, :]
        scores = scores[scores > self.conf_threshold]

        if len(scores) == 0:
            return [], [], []

        # Get the class with the highest confidence
        class_ids = np.argmax(predictions[:, 4:], axis=1)

        # Get bounding boxes for each object
        boxes = self.extract_boxes(predictions)

        # Apply non-maxima suppression to suppress weak, overlapping bounding boxes
        indices = nms(boxes, scores, self.iou_threshold)
        print("bbox len :", len(indices))

        return boxes[indices], scores[indices], class_ids[indices]

    def extract_boxes(self, predictions):
        # Extract boxes from predictions
        boxes = predictions[:, :4]

        # Scale boxes to original image dimensions
        boxes = self.rescale_boxes(boxes)

        # Convert boxes to xyxy format
        boxes = xywh2xyxy(boxes)

        return boxes

    def rescale_boxes(self, boxes):
        # Rescale boxes to original image dimensions
        input_shape = np.array([self.input_width, self.input_height, self.input_width, self.input_height])
        boxes = np.divide(boxes, input_shape, dtype=np.float32)
        boxes *= np.array([self.img_width, self.img_height, self.img_width, self.img_height])
        return boxes

    def draw_detections(self, image, draw_scores=True, mask_alpha=0.4):
        return draw_detections(image, self.boxes, self.scores,
                               self.class_ids, mask_alpha)

    def get_input_details(self):
        model_inputs = self.session.get_inputs()
        self.input_names = [model_inputs[i].name for i in range(len(model_inputs))]

        # The exported model takes NCHW input
        self.input_shape = model_inputs[0].shape
        self.input_height = self.input_shape[2]
        self.input_width = self.input_shape[3]

    def get_output_details(self):
        model_outputs = self.session.get_outputs()
        self.output_names = [model_outputs[i].name for i in range(len(model_outputs))]


if __name__ == '__main__':
    model_path = "yolov8s.onnx"
    # model_path = "model_output/horizon_x3_original_float_model.onnx"

    # Initialize YOLOv8 object detector
    yolov8_detector = YOLOv8(model_path, conf_thres=0.5, iou_thres=0.5)

    # img_url = "https://live.staticflickr.com/13/19041780_d6fd803de0_3k.jpg"
    # img = imread_from_url(img_url)
    img = cv2.imread('1121687586719_.pic.jpg')

    # Detect Objects
    yolov8_detector(img)

    # Draw detections
    combined_img = yolov8_detector.draw_detections(img)
    # cv2.namedWindow("Output", cv2.WINDOW_NORMAL)
    # cv2.imshow("Output", combined_img)
    # cv2.waitKey(0)
    cv2.imwrite('result.jpg', combined_img)
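To make the post-processing in process_output easier to follow, here is a minimal NumPy sketch on a synthetic tensor. It assumes the usual YOLOv8 detection output of shape (1, 84, 8400) for a 640×640 input with 80 classes (4 box values plus 80 class scores per candidate). The random data, the planted detection, and the 1280×720 image size are illustrative and are not real model output.

```python
import numpy as np

# Synthetic stand-in for outputs[0]; the shape (1, 84, 8400) is an assumption
# (4 box values + 80 class scores for each of 8400 candidates).
rng = np.random.default_rng(0)
output = rng.uniform(0.0, 0.1, size=(1, 84, 8400)).astype(np.float32)

# Plant one confident detection: candidate 42, class 16, box (cx, cy, w, h).
output[0, :4, 42] = [320.0, 320.0, 100.0, 50.0]
output[0, 4 + 16, 42] = 0.9

# Same steps as process_output: drop the batch axis, put candidates on rows.
predictions = np.squeeze(output).T           # (8400, 84)
scores = np.max(predictions[:, 4:], axis=1)  # best class score per candidate
keep = scores > 0.5
predictions, scores = predictions[keep], scores[keep]
class_ids = np.argmax(predictions[:, 4:], axis=1)

# Rescale from the 640x640 network input to a hypothetical 1280x720 image.
boxes = predictions[:, :4] / np.array([640, 640, 640, 640], dtype=np.float32)
boxes = boxes * np.array([1280, 720, 1280, 720], dtype=np.float32)

print(predictions.shape, class_ids, boxes)
```

Only the planted candidate survives the threshold, and its box is still in (cx, cy, w, h) form, which is why the script calls xywh2xyxy before running NMS.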

YOLOV8_onnxruntime.py imports utils.py, whose code is as follows:

utils.py

import numpy as np
import cv2

class_names = ['person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',
               'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
               'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',
               'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',
               'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',
               'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',
               'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard',
               'cell phone', 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase',
               'scissors', 'teddy bear', 'hair drier', 'toothbrush']

# Create a list of colors for each class where each color is a tuple of 3 values
rng = np.random.default_rng(3)
colors = rng.uniform(0, 255, size=(len(class_names), 3))


def nms(boxes, scores, iou_threshold):
    # Sort by score, highest first
    sorted_indices = np.argsort(scores)[::-1]

    keep_boxes = []
    while sorted_indices.size > 0:
        # Pick the box with the highest remaining score
        box_id = sorted_indices[0]
        keep_boxes.append(box_id)

        # Compute IoU of the picked box with the rest
        ious = compute_iou(boxes[box_id, :], boxes[sorted_indices[1:], :])

        # Remove boxes with IoU over the threshold
        keep_indices = np.where(ious < iou_threshold)[0]

        # print(keep_indices.shape, sorted_indices.shape)
        # +1 because ious was computed against sorted_indices[1:]
        sorted_indices = sorted_indices[keep_indices + 1]

    return keep_boxes


def compute_iou(box, boxes):
    # Compute xmin, ymin, xmax, ymax of the intersection rectangles
    xmin = np.maximum(box[0], boxes[:, 0])
    ymin = np.maximum(box[1], boxes[:, 1])
    xmax = np.minimum(box[2], boxes[:, 2])
    ymax = np.minimum(box[3], boxes[:, 3])

    # Compute intersection area
    intersection_area = np.maximum(0, xmax - xmin) * np.maximum(0, ymax - ymin)

    # Compute union area
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    boxes_area = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    union_area = box_area + boxes_area - intersection_area

    # Compute IoU
    iou = intersection_area / union_area

    return iou


def xywh2xyxy(x):
    # Convert bounding box (x, y, w, h) to bounding box (x1, y1, x2, y2)
    y = np.copy(x)
    y[..., 0] = x[..., 0] - x[..., 2] / 2
    y[..., 1] = x[..., 1] - x[..., 3] / 2
    y[..., 2] = x[..., 0] + x[..., 2] / 2
    y[..., 3] = x[..., 1] + x[..., 3] / 2
    return y


def draw_detections(image, boxes, scores, class_ids, mask_alpha=0.3):
    mask_img = image.copy()
    det_img = image.copy()

    img_height, img_width = image.shape[:2]
    size = min([img_height, img_width]) * 0.0006
    text_thickness = int(min([img_height, img_width]) * 0.001)

    # Draw bounding boxes and labels of detections
    for box, score, class_id in zip(boxes, scores, class_ids):
        color = colors[class_id]

        x1, y1, x2, y2 = box.astype(int)

        # Draw rectangle
        cv2.rectangle(det_img, (x1, y1), (x2, y2), color, 2)

        # Draw fill rectangle in mask image
        cv2.rectangle(mask_img, (x1, y1), (x2, y2), color, -1)

        label = class_names[class_id]
        caption = f'{label} {int(score * 100)}%'
        (tw, th), _ = cv2.getTextSize(text=caption, fontFace=cv2.FONT_HERSHEY_SIMPLEX,
                                      fontScale=size, thickness=text_thickness)
        th = int(th * 1.2)

        cv2.rectangle(det_img, (x1, y1),
                      (x1 + tw, y1 - th), color, -1)
        cv2.rectangle(mask_img, (x1, y1),
                      (x1 + tw, y1 - th), color, -1)
        cv2.putText(det_img, caption, (x1, y1),
                    cv2.FONT_HERSHEY_SIMPLEX, size, (255, 255, 255), text_thickness, cv2.LINE_AA)
        cv2.putText(mask_img, caption, (x1, y1),
                    cv2.FONT_HERSHEY_SIMPLEX, size, (255, 255, 255), text_thickness, cv2.LINE_AA)

    return cv2.addWeighted(mask_img, mask_alpha, det_img, 1 - mask_alpha, 0)


def draw_comparison(img1, img2, name1, name2, fontsize=2.6, text_thickness=3):
    (tw, th), _ = cv2.getTextSize(text=name1, fontFace=cv2.FONT_HERSHEY_DUPLEX,
                                  fontScale=fontsize, thickness=text_thickness)
    x1 = img1.shape[1] // 3
    y1 = th
    offset = th // 5
    cv2.rectangle(img1, (x1 - offset * 2, y1 + offset),
                  (x1 + tw + offset * 2, y1 - th - offset), (0, 115, 255), -1)
    cv2.putText(img1, name1,
                (x1, y1),
                cv2.FONT_HERSHEY_DUPLEX, fontsize,
                (255, 255, 255), text_thickness)

    (tw, th), _ = cv2.getTextSize(text=name2, fontFace=cv2.FONT_HERSHEY_DUPLEX,
                                  fontScale=fontsize, thickness=text_thickness)
    x1 = img2.shape[1] // 3
    y1 = th
    offset = th // 5
    cv2.rectangle(img2, (x1 - offset * 2, y1 + offset),
                  (x1 + tw + offset * 2, y1 - th - offset), (94, 23, 235), -1)
    cv2.putText(img2, name2,
                (x1, y1),
                cv2.FONT_HERSHEY_DUPLEX, fontsize,
                (255, 255, 255), text_thickness)

    combined_img = cv2.hconcat([img1, img2])
    if combined_img.shape[1] > 3840:
        combined_img = cv2.resize(combined_img, (3840, 2160))

    return combined_img
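A small worked example may help show what the greedy NMS in utils.py does. The sketch below is a compact, self-contained restatement of the same idea rather than the utils.py code itself; the function names and the three boxes are made up for illustration.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    # Intersection rectangle between `box` and every row of `boxes` (xyxy format).
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.maximum(0, x2 - x1) * np.maximum(0, y2 - y1)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def greedy_nms(boxes, scores, iou_threshold):
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        # Compare the winner with the remaining boxes and drop heavy overlaps.
        ious = iou_one_to_many(boxes[best], boxes[order[1:]])
        order = order[1:][ious < iou_threshold]
    return keep

boxes = np.array([
    [0, 0, 100, 100],      # A
    [10, 10, 110, 110],    # B, overlaps A heavily
    [200, 200, 300, 300],  # C, disjoint from both
], dtype=np.float32)
scores = np.array([0.9, 0.8, 0.7])

kept = greedy_nms(boxes, scores, iou_threshold=0.5)
print(kept)
```

A and B share a 90×90 intersection, so their IoU is 8100 / 11900 ≈ 0.68, which is above the 0.5 threshold. B is therefore suppressed and the kept indices are A and C.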

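2. Convert to a bin model on the development machine

2.1 Prepare the calibration images

For simplicity, this uses the 50 RGB sample images from the official Horizon tutorial. They are located at horizon_model_convert_sample/04_detection/03_yolov5s/mapper/calibration_data_rgb_f32, which should be included in the archive listed as item 3 under Resource links. If the directory is missing, generate it by following section 6.2.3.2 of the user manual (item 2 under Resource links).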
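Before converting, it can be worth checking that the calibration files have the size the model expects. The sketch below assumes each file is a raw float32 dump whose element count matches the model input (3×640×640 for the default export above). Both that layout and the helper names are assumptions for illustration, and the demo runs on a temporary directory with a tiny shape.

```python
import os
import tempfile

def expected_nbytes(shape, bytes_per_element=4):
    """Size in bytes of one raw float32 sample with the given shape."""
    n = 1
    for dim in shape:
        n *= dim
    return n * bytes_per_element

def find_size_mismatches(cal_dir, shape):
    """Return names of files in cal_dir whose size differs from the expected one."""
    want = expected_nbytes(shape)
    bad = []
    for name in sorted(os.listdir(cal_dir)):
        path = os.path.join(cal_dir, name)
        if os.path.isfile(path) and os.path.getsize(path) != want:
            bad.append(name)
    return bad

# Demo on a temporary directory with a tiny hypothetical shape (3, 4, 4).
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "good.rgb"), "wb") as f:
        f.write(b"\x00" * expected_nbytes((3, 4, 4)))
    with open(os.path.join(tmp, "short.rgb"), "wb") as f:
        f.write(b"\x00" * 10)
    mismatches = find_size_mismatches(tmp, (3, 4, 4))

print(expected_nbytes((3, 640, 640)))  # bytes per 3x640x640 float32 sample
print(mismatches)
```

On the real data, calling find_size_mismatches('./calibration_data_rgb_f32', (3, 640, 640)) should return an empty list if the assumption about the file layout holds.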

2.2 The YAML file required for conversion

Start from the ONNX model quantization YAML template and modify it. This is the YAML file I used for YOLOv8.

# Model conversion parameters
model_parameters:
  # Required
  # ONNX float model file, e.g. onnx_model: './horizon_x3_onnx.onnx'
  onnx_model: './yolov8s.onnx'
  march: "bernoulli2"
  layer_out_dump: False
  working_dir: 'model_output'
  output_model_file_prefix: 'horizon_x3'

# Model input parameters
input_parameters:
  input_name: ""
  input_shape: ''
  input_type_rt: 'nv12'
  input_layout_rt: 'NHWC'
  # Required
  # Data type used when training the original float model
  input_type_train: 'rgb'
  # Required
  # Data layout used when training the original float model; NHWC or NCHW
  input_layout_train: 'NCHW'
  # Required
  # Preprocessing method used in the original float model's training framework (configurable)
  norm_type: 'data_mean_and_scale'
  # Required
  # Mean subtracted from the image; per-channel values must be separated by spaces
  mean_value: 111.0 109.0 118.0
  # Required
  # Preprocessing scale factor; per-channel values must be separated by spaces. Formula: scale = 1/std
  scale_value: 0.0078125 0.001215 0.003680

# Quantization parameters
calibration_parameters:
  # Required; use the image path from 2.1
  cal_data_dir: './calibration_data_rgb_f32'
  cal_data_type: 'float32'
  calibration_type: 'default'

# Compiler parameters
compiler_parameters:
  compile_mode: 'latency'
  debug: False
  # core_num: 2
  optimize_level: 'O3'
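As a quick sanity check on mean_value and scale_value, the sketch below applies the data_mean_and_scale rule, taken here to be (pixel - mean) * scale as the comment scale = 1/std suggests, to one sample pixel. It then compares the result with the pixel / 255 scaling that YOLOV8_onnxruntime.py uses in section 1. The sample pixel is arbitrary, and the alternative values at the end (mean 0, scale 1/255) are an assumption about what would reproduce that script, not a setting taken from the configuration above.

```python
def mean_and_scale(pixel, mean, scale):
    """Per-channel normalization: (value - mean) * scale."""
    return [(p - m) * s for p, m, s in zip(pixel, mean, scale)]

pixel = [255.0, 128.0, 0.0]  # arbitrary RGB sample

# Values from the YAML above.
yaml_norm = mean_and_scale(pixel, [111.0, 109.0, 118.0],
                           [0.0078125, 0.001215, 0.003680])

# What the float-model script does: pixel / 255.
script_norm = [p / 255.0 for p in pixel]

# Hypothetical setting that reproduces pixel / 255: mean 0, scale 1/255.
matching = mean_and_scale(pixel, [0.0, 0.0, 0.0], [1 / 255.0] * 3)

print(yaml_norm)
print(script_norm)
print(matching)
```

If the first two results differ, as they do for this pixel, the converted model normalizes its input differently from the float-model script, so it is worth confirming which values the conversion should use.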

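2.3 Run the conversion

Run the official Horizon image with Docker, and set up the environment by following section 6.2.2 (environment installation) of the official user manual. The conversion command is:

hb_mapper makertbin --config yolov8_onnx_convert.yaml --model-type onnx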

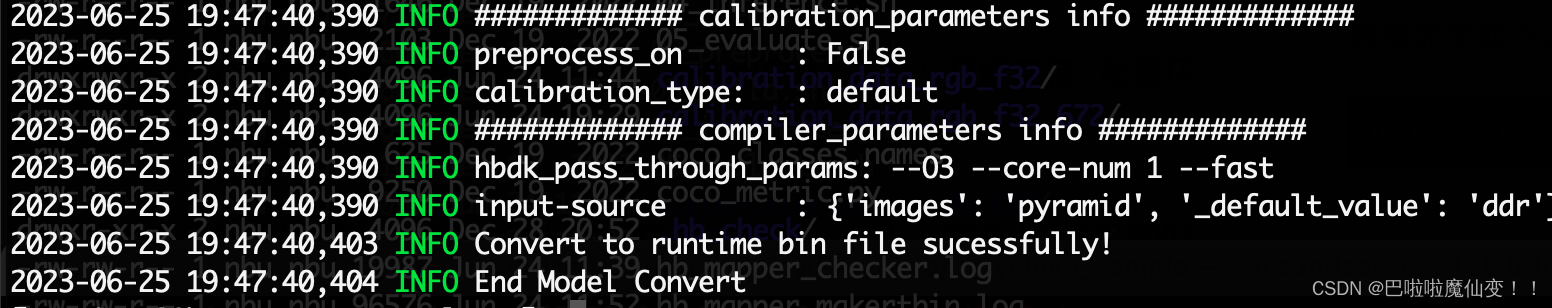

等待运行结束,如下界面表示运行成功。

The converted models are stored under model_output. There should be four different models.

- ***_original_float_model.onnx

- ***_optimized_float_model.onnx

- ***_quantized_model.onnx

- ***.bin
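A short script can confirm that all four files were produced. This is a minimal sketch that assumes the file names are built from output_model_file_prefix ('horizon_x3' in the YAML above) and that they sit directly in working_dir. The helper name is hypothetical, and the demo uses a temporary directory instead of a real conversion result.

```python
from pathlib import Path
import tempfile

def missing_outputs(working_dir, prefix):
    """Return the expected converter outputs that are absent from working_dir."""
    expected = [
        f"{prefix}_original_float_model.onnx",
        f"{prefix}_optimized_float_model.onnx",
        f"{prefix}_quantized_model.onnx",
        f"{prefix}.bin",
    ]
    root = Path(working_dir)
    return [name for name in expected if not (root / name).is_file()]

# Demo on a temporary directory where only two of the four files exist.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "horizon_x3.bin").touch()
    (Path(tmp) / "horizon_x3_quantized_model.onnx").touch()
    missing = missing_outputs(tmp, "horizon_x3")

print(missing)
```

After a real conversion, missing_outputs('model_output', 'horizon_x3') returning an empty list means all four models are present.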

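3. Verify **quantized_model.onnx on the development machine

The following three models can all be tested with YOLOv8_hb_onnxruntime.py:

1. ***_original_float_model.onnx

2. ***_optimized_float_model.onnx

3. ***_quantized_model.onnx

Two points to note:

- Environment. YOLOv8_hb_onnxruntime.py must be run in the horizon_bpu environment, which can be set up by following section 6.2.2 (environment installation) of the user manual. The YOLOV8_onnxruntime.py script from section 1 only needs the onnxruntime library.

- Input format. optimized_float_model.onnx and original_float_model.onnx take NCHW input. For quantized_model.onnx and ***.bin, the input format must be changed to NHWC.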
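The difference between the two layouts comes down to where the channel axis sits. The NumPy sketch below uses a synthetic 640×640 image (not a real frame) to show how the same HWC data becomes either an NHWC or an NCHW batch.

```python
import numpy as np

# Synthetic 640x640 RGB image in HWC order, standing in for the resized frame.
image = np.zeros((640, 640, 3), dtype=np.uint8)
image[..., 0] = 255  # make the first (R) channel distinguishable

# NHWC: just add the batch axis.
nhwc = image[np.newaxis, ...].astype(np.float32)

# NCHW: move channels in front of height and width, then add the batch axis.
nchw = image.transpose(2, 0, 1)[np.newaxis, ...].astype(np.float32)

print(nhwc.shape)
print(nchw.shape)
```

This is why the script for the quantized model skips the transpose(2, 0, 1) step and reads the height and width from positions 1 and 2 of the input shape instead of 2 and 3.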

The code of YOLOv8_hb_onnxruntime.py is as follows. A successful run produces result.jpg.

import time

import cv2
import numpy as np
from horizon_tc_ui import HB_ONNXRuntime

from utils import xywh2xyxy, nms, draw_detections


class YOLOv8:

    def __init__(self, path, conf_thres=0.7, iou_thres=0.5):
        self.conf_threshold = conf_thres
        self.iou_threshold = iou_thres

        # Initialize model
        self.initialize_model(path)

    def __call__(self, image):
        return self.detect_objects(image)

    def initialize_model(self, path):
        self.session = HB_ONNXRuntime(model_file=path)
        # self.session.set_dim_param(0, 0, '?')

        # Get model info
        self.get_input_details()
        self.get_output_details()

    def detect_objects(self, image):
        input_tensor = self.prepare_input(image)

        # Perform inference on the image
        outputs = self.inference(input_tensor)

        self.boxes, self.scores, self.class_ids = self.process_output(outputs)

        return self.boxes, self.scores, self.class_ids

    def prepare_input(self, image):
        self.img_height, self.img_width = image.shape[:2]

        input_img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

        # Resize input image
        input_img = cv2.resize(input_img, (self.input_width, self.input_height))

        # The 1/255 scaling and the HWC -> CHW transpose are disabled here:
        # the quantized model takes NHWC input
        # input_img = input_img / 255.0
        # input_img = input_img.transpose(2, 0, 1)
        input_tensor = input_img[np.newaxis, :, :, :].astype(np.float32)

        return input_tensor

    def inference(self, input_tensor):
        start = time.perf_counter()
        input_name = self.session.input_names[0]
        output_name = self.session.output_names
        outputs = self.session.run(output_name, {input_name: input_tensor}, input_offset=128)
        print(outputs[0].shape)
        print(f"Inference time: {(time.perf_counter() - start)*1000:.2f} ms")
        return outputs

    def process_output(self, output):
        # Drop the batch axis and put one candidate per row
        predictions = np.squeeze(output[0]).T

        # Filter out object confidence scores below threshold
        scores = np.max(predictions[:, 4:], axis=1)
        predictions = predictions[scores > self.conf_threshold, :]
        scores = scores[scores > self.conf_threshold]

        if len(scores) == 0:
            return [], [], []

        # Get the class with the highest confidence
        class_ids = np.argmax(predictions[:, 4:], axis=1)

        # Get bounding boxes for each object
        boxes = self.extract_boxes(predictions)

        # Apply non-maxima suppression to suppress weak, overlapping bounding boxes
        indices = nms(boxes, scores, self.iou_threshold)
        print("bbox len :", len(indices))

        return boxes[indices], scores[indices], class_ids[indices]

    def extract_boxes(self, predictions):
        # Extract boxes from predictions
        boxes = predictions[:, :4]

        # Scale boxes to original image dimensions
        boxes = self.rescale_boxes(boxes)

        # Convert boxes to xyxy format
        boxes = xywh2xyxy(boxes)

        return boxes

    def rescale_boxes(self, boxes):
        # Rescale boxes to original image dimensions
        input_shape = np.array([self.input_width, self.input_height, self.input_width, self.input_height])
        boxes = np.divide(boxes, input_shape, dtype=np.float32)
        boxes *= np.array([self.img_width, self.img_height, self.img_width, self.img_height])
        return boxes

    def draw_detections(self, image, draw_scores=True, mask_alpha=0.4):
        return draw_detections(image, self.boxes, self.scores,
                               self.class_ids, mask_alpha)

    def get_input_details(self):
        model_inputs = self.session.get_inputs()
        self.input_names = [model_inputs[i].name for i in range(len(model_inputs))]

        # NHWC layout: height and width are at positions 1 and 2
        self.input_shape = model_inputs[0].shape
        self.input_height = self.input_shape[1]
        self.input_width = self.input_shape[2]

    def get_output_details(self):
        model_outputs = self.session.get_outputs()
        self.output_names = [model_outputs[i].name for i in range(len(model_outputs))]


if __name__ == '__main__':
    # model_path = "yolov8n.onnx"
    model_path = "model_output/horizon_x3_quantized_model.onnx"

    # Initialize YOLOv8 object detector
    yolov8_detector = YOLOv8(model_path, conf_thres=0.5, iou_thres=0.5)

    # img_url = "https://live.staticflickr.com/13/19041780_d6fd803de0_3k.jpg"
    # img = imread_from_url(img_url)
    img = cv2.imread('1121687586719_.pic.jpg')

    # Detect Objects
    yolov8_detector(img)

    # Draw detections
    combined_img = yolov8_detector.draw_detections(img)
    # cv2.namedWindow("Output", cv2.WINDOW_NORMAL)
    # cv2.imshow("Output", combined_img)
    # cv2.waitKey(0)
    cv2.imwrite('result.jpg', combined_img)

4. Run the bin model on the board

Copy the **.bin model to the board and run it with the yolov8.py script below, after changing the bin file path in the code. Run the script with sudo:

sudo python3 yolov8.py

import time

import cv2
import numpy as np
from hobot_dnn import pyeasy_dnn as dnn

from utils import xywh2xyxy, nms, draw_detections


class YOLOv8:

    def __init__(self, path, conf_thres=0.7, iou_thres=0.5):
        self.conf_threshold = conf_thres
        self.iou_threshold = iou_thres

        # Initialize model
        self.initialize_model(path)

    def __call__(self, image):
        return self.detect_objects(image)

    def initialize_model(self, path):
        self.session = dnn.load(path)
        # self.session.set_dim_param(0, 0, '?')

        # Get model info
        self.get_input_details()
        self.get_output_details()

    def detect_objects(self, image):
        input_tensor = self.prepare_input(image)
        print(input_tensor.shape)

        # Perform inference on the image
        outputs = self.inference(input_tensor)

        self.boxes, self.scores, self.class_ids = self.process_output(outputs)

        return self.boxes, self.scores, self.class_ids

    def prepare_input(self, image):
        self.img_height, self.img_width = image.shape[:2]

        input_img = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

        # Resize input image
        input_img = cv2.resize(input_img, (640, 640))

        # No 1/255 scaling here; the bin model takes NV12 input
        # input_img = input_img / 255.0

        height, width = input_img.shape[0], input_img.shape[1]
        area = height * width

        # input_img is RGB at this point, so use the RGB variant of the conversion
        # (the original used COLOR_BGR2YUV_I420, which swaps the R and B channels)
        yuv420p = cv2.cvtColor(input_img, cv2.COLOR_RGB2YUV_I420).reshape((area * 3 // 2,))
        y = yuv420p[:area]

        # I420 stores U and V as separate planes; NV12 interleaves them as UVUV...
        uv_planar = yuv420p[area:].reshape((2, area // 4))
        uv_packed = uv_planar.transpose((1, 0)).reshape((area // 2,))

        nv12 = np.zeros_like(yuv420p)
        nv12[:height * width] = y
        nv12[height * width:] = uv_packed

        return nv12

    def inference(self, input_tensor):
        start = time.perf_counter()
        # outputs = self.session.run(output_name, {input_name: input_tensor},input_offset=128)
        outputs = self.session[0].forward(input_tensor)
        print(outputs[0].buffer.shape)
        print(f"Inference time: {(time.perf_counter() - start)*1000:.2f} ms")
        return outputs

    def process_output(self, output):
        # Drop the singleton axes and put one candidate per row
        predictions = np.squeeze(output[0].buffer).T

        # Filter out object confidence scores below threshold
        scores = np.max(predictions[:, 4:], axis=1)
        predictions = predictions[scores > self.conf_threshold, :]
        scores = scores[scores > self.conf_threshold]

        if len(scores) == 0:
            return [], [], []

        # Get the class with the highest confidence
        class_ids = np.argmax(predictions[:, 4:], axis=1)

        # Get bounding boxes for each object
        boxes = self.extract_boxes(predictions)

        # Apply non-maxima suppression to suppress weak, overlapping bounding boxes
        indices = nms(boxes, scores, self.iou_threshold)
        print("indices len :", len(indices))

        return boxes[indices], scores[indices], class_ids[indices]

    def extract_boxes(self, predictions):
        # Extract boxes from predictions
        boxes = predictions[:, :4]

        # Scale boxes to original image dimensions
        boxes = self.rescale_boxes(boxes)

        # Convert boxes to xyxy format
        boxes = xywh2xyxy(boxes)

        return boxes

    def rescale_boxes(self, boxes):
        # Rescale boxes to original image dimensions
        input_shape = np.array([self.input_width, self.input_height, self.input_width, self.input_height])
        boxes = np.divide(boxes, input_shape, dtype=np.float32)
        boxes *= np.array([self.img_width, self.img_height, self.img_width, self.img_height])
        return boxes

    def draw_detections(self, image, draw_scores=True, mask_alpha=0.4):
        return draw_detections(image, self.boxes, self.scores,
                               self.class_ids, mask_alpha)

    def get_input_details(self):
        # The input size is hard-coded to match the 640x640 export
        self.input_height = 640
        self.input_width = 640

    def get_output_details(self):
        pass


if __name__ == '__main__':
    model_path = "/userdata/horizon_x3.bin"

    # Initialize YOLOv8 object detector
    yolov8_detector = YOLOv8(model_path, conf_thres=0.5, iou_thres=0.5)

    # img_url = "https://live.staticflickr.com/13/19041780_d6fd803de0_3k.jpg"
    # img = imread_from_url(img_url)
    img = cv2.imread('WechatIMG112.jpeg')

    # Detect Objects
    yolov8_detector(img)

    # Draw detections
    combined_img = yolov8_detector.draw_detections(img)
    # cv2.namedWindow("Output", cv2.WINDOW_NORMAL)
    # cv2.imshow("Output", combined_img)
    # cv2.waitKey(0)
    cv2.imwrite('result.jpg', combined_img)
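The NV12 packing in prepare_input is easier to see on a tiny buffer. The sketch below isolates that step with plain NumPy on a made-up 4×4 frame. It assumes the input is a flat I420 buffer (the Y plane, then the U plane, then the V plane), and the function name is chosen for illustration.

```python
import numpy as np

def i420_to_nv12(i420, height, width):
    """Repack a flat I420 buffer (Y, then U, then V) into NV12 (Y, then UVUV...)."""
    area = height * width
    y = i420[:area]
    uv_planar = i420[area:].reshape((2, area // 4))      # row 0 = U, row 1 = V
    uv_packed = uv_planar.transpose((1, 0)).reshape(-1)  # U0 V0 U1 V1 ...
    return np.concatenate([y, uv_packed])

# Tiny 4x4 frame: 16 Y samples, 4 U samples, 4 V samples.
height, width = 4, 4
y_plane = np.arange(16, dtype=np.uint8)
u_plane = np.array([100, 101, 102, 103], dtype=np.uint8)
v_plane = np.array([200, 201, 202, 203], dtype=np.uint8)
i420 = np.concatenate([y_plane, u_plane, v_plane])

nv12 = i420_to_nv12(i420, height, width)
print(nv12.size)
print(nv12[16:].tolist())
```

The buffer keeps its size of height × width × 3 / 2 bytes (24 here). Only the chroma part changes, from two separate planes to interleaved U and V pairs.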

Running on the board, the yolov8n model has a latency of about 270 ms.

Running on the board, the yolov8s model has a latency of about 370 ms.
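For context, a per-frame latency converts to throughput as 1000 / latency_ms. The sketch below does that arithmetic for the two figures above and also shows one way to average several timed runs after a warm-up call. The function names and the dummy workload are hypothetical; on the board, the callable would wrap the model's forward call.

```python
import time

def fps_from_latency(latency_ms):
    """Frames per second for back-to-back, single-threaded inference."""
    return 1000.0 / latency_ms

def mean_latency_ms(fn, runs=10, warmup=1):
    """Average wall-clock time of fn() in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) * 1000.0 / runs

print(f"yolov8n: {fps_from_latency(270):.1f} FPS")
print(f"yolov8s: {fps_from_latency(370):.1f} FPS")

# Dummy workload standing in for a model forward pass.
measured = mean_latency_ms(lambda: sum(range(10000)), runs=5)
print(f"dummy workload: {measured:.3f} ms")
```

At these latencies the board processes roughly 3.7 frames per second with yolov8n and 2.7 with yolov8s, not counting pre-processing and post-processing on the CPU.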

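Resource links

1. Official resource center link (developer tools, Docker images, and so on)

2. Official user manual (instructions, environment setup tutorials, and so on)

3. Download for the official model conversion samples. If a file cannot be found elsewhere, look for it in this archive.

wget -c ftp://xj3ftp@vrftp.horizon.ai/model_convert_sample/horizon_model_convert_sample.tar.gz --ftp-password=xj3ftp@123$%

Download every file referenced in the official manual while you can. The manual's files have changed, and many file paths no longer match.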