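Preface:

Starting with version 1.24, Kubernetes removed dockershim from the kubelet, so clusters at 1.24 or later can no longer use docker-ce directly as the container runtime.

There are two reasonably clear solutions. One is to use containerd, a container runtime that supports the CRI standard natively. The other is to use cri-docker, an adapter that talks to the kubelet over CRI on one side and to the Docker API on the other, so that Kubernetes indirectly uses Docker as its container runtime. The drawback of this architecture is also obvious: the call chain is longer, so it is less efficient.

Even so, cri-docker clearly fits existing Docker habits better. In plain terms, it is simple to deploy and the learning cost stays low.

So if you only want to test or try out a newer Kubernetes version, cri-docker is the more suitable choice.

This article describes the deployment of cri-docker in detail and uses it to deploy a fairly recent Kubernetes cluster.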

Goal:

Use kubeadm to deploy a kubernetes-1.25.4 cluster, which is close to the latest version.

Environment:

One VMware virtual machine, IP address: 192.168.217.24

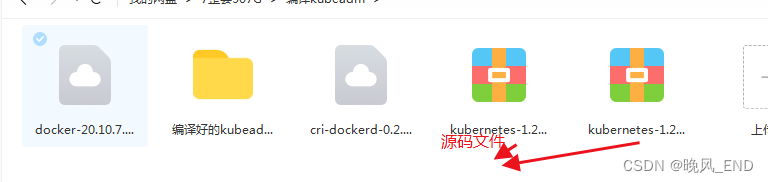

Software:

docker-ce-20.10.7

cri-docker-0.2.6

kubeadm-1.25.4

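Version notes:

Because the Kubernetes version to be installed is fairly new, the versions of all three packages are also fairly new. In practice, the cri-docker and docker-ce versions depend on each other to some extent: an earlier attempt with docker-ce-19.03 left cri-docker unable to install. It is best to stick to the fixed versions listed above; otherwise all sorts of unexpected problems can appear.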
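Since the version pairing matters, a quick comparison before going further can save time. The following is only a sketch: version_ge is a made-up helper name, it relies on the -V option of GNU sort, and treating 20.10.7 as the baseline is an assumption taken from the versions tested in this article, not a documented minimum.

```shell
# version_ge A B: succeeds when version A is greater than or equal to B.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example, assuming a running Docker daemon and 20.10.7 as the tested baseline:
#   current="$(docker version --format '{{.Server.Version}}')"
#   version_ge "$current" 20.10.7 || echo "docker-ce $current is older than the tested version" >&2
```

A plain string comparison would misorder versions such as 20.10.7 and 20.10.21, which is why version sort is used here.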

I.

Installing docker-ce

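There is not much to say here. There are usually two installation methods: RPM packages or static binaries.

This article uses the binary method. It needs no yum repository, works offline, and is quick and convenient.

Binary package download location: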

Index of linux/static/stable/x86_64/

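Upload the downloaded package to the server, extract it, and copy all files from the extracted directory to /usr/bin/. This is basic enough that the commands are not listed here.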
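For readers who want the step spelled out, here is a minimal sketch. The function name install_docker_static is made up for illustration, and it assumes the archive unpacks into a top-level docker/ directory, which is how the static packages are normally laid out.

```shell
# Hypothetical helper: unpack a Docker static tarball and copy its binaries.
# Assumes the archive contains a top-level docker/ directory.
install_docker_static() {
    tarball="$1"
    dest="${2:-/usr/bin}"
    workdir="$(mktemp -d)" || return 1
    tar -xzf "$tarball" -C "$workdir" || { rm -rf "$workdir"; return 1; }
    # install copies the files and sets the executable bits in one step
    install -m 0755 "$workdir"/docker/* "$dest"/ || { rm -rf "$workdir"; return 1; }
    rm -rf "$workdir"
}

# Example (run as root):
#   install_docker_static docker-20.10.7.tgz
```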

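Docker configuration file (a binary install does not create /etc/docker, so create it first):

mkdir -p /etc/docker

cat >/etc/docker/daemon.json <<EOF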

{

"registry-mirrors": ["http://bc437cce.m.daocloud.io"],

"exec-opts":["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF
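The exec-opts entry matters because kubeadm configures the kubelet for the systemd cgroup driver by default (since v1.22), and Docker needs to use the same driver. A small sketch for checking the file before starting the daemon follows; the function name daemon_json_uses_systemd is made up for illustration, and the check is a plain text match rather than a JSON parse.

```shell
# Hypothetical check: does this daemon.json request the systemd cgroup driver?
# Uses a simple text match, so it does not validate the JSON itself.
daemon_json_uses_systemd() {
    grep -q 'native\.cgroupdriver=systemd' "${1:-/etc/docker/daemon.json}"
}

# Example:
#   daemon_json_uses_systemd || echo "cgroup driver is not systemd" >&2
# Once dockerd is running, the effective value can be read with:
#   docker info --format '{{.CgroupDriver}}'
```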

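Docker's systemd unit file (the heredoc delimiter is quoted so that the shell does not expand $MAINPID while writing the file):

cat >/usr/lib/systemd/system/docker.service <<'EOF'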

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd --data-root=/var/lib/docker

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

Start the docker service:

systemctl daemon-reload && systemctl enable docker && systemctl start docker

II.

Installing and deploying cri-docker

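Download location:

https://github.com/Mirantis/cri-dockerd/tags

The archive cri-dockerd-0.2.6.amd64.tgz contains a single executable. After uploading the archive to the server, copy the executable to /usr/local/bin and make sure it has execute permission: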

[root@node4 ~]# ls -al /usr/local/bin/cri-dockerd

-rwxr-xr-x 1 zsk zsk 52335229 Sep 25 10:44 /usr/local/bin/cri-dockerd
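If the execute bit is missing, systemd cannot start the service. A small sketch for checking and fixing the permission is below; the helper name ensure_executable is made up for illustration.

```shell
# Hypothetical helper: make sure a file exists and is executable.
ensure_executable() {
    [ -f "$1" ] || { echo "$1: no such file" >&2; return 1; }
    # Only change the mode when the execute bit is actually missing
    [ -x "$1" ] || chmod 0755 "$1"
}

# Example (run as root):
#   ensure_executable /usr/local/bin/cri-dockerd
```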

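Deploying this service mainly involves two systemd unit files.

1.

The cri-docker service unit (the heredoc delimiter is quoted so that the shell does not expand $MAINPID while writing the file)

cat >/usr/lib/systemd/system/cri-docker.service <<'EOF'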

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

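EOF

2.

The cri-docker socket unit

This socket is the key piece through which the kubelet reaches Docker. Note the setting SocketGroup=root. Some people write SocketGroup=docker instead; if that group does not exist on the host, which is the case after a binary install that never created it, the unit will fail to start.

cat >/usr/lib/systemd/system/cri-docker.socket <<EOF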

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=root

[Install]

WantedBy=sockets.target

EOF
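Whether a given SocketGroup value is safe on a particular host can be checked before enabling the unit. This is only a sketch; group_exists is a made-up helper name, and it assumes getent is available, as it is on typical glibc-based distributions.

```shell
# Succeeds when the named group exists in the system's group database.
group_exists() {
    getent group "$1" >/dev/null 2>&1
}

# Example: warn before using SocketGroup=docker on a host without that group.
#   group_exists docker || echo "no docker group here; keep SocketGroup=root" >&2
```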

Start the service:

systemctl daemon-reload && systemctl enable cri-docker.socket

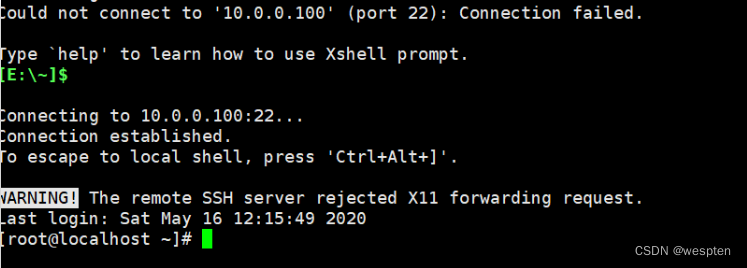

systemctl start cri-docker.socket cri-docker

A quick verification:

If you see one active, two green running states, and the file /var/run/cri-dockerd.sock, the service is working.

[root@node4 ~]# systemctl is-active cri-docker.socket

active

[root@node4 ~]# systemctl status cri-docker cri-docker.socket

● cri-docker.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/cri-docker.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2022-12-06 15:25:44 CST; 4h 27min ago

Docs: https://docs.mirantis.com

Main PID: 1256 (cri-dockerd)

CGroup: /system.slice/cri-docker.service

└─1256 /usr/local/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.7

Dec 06 19:52:22 node4 cri-dockerd[1256]: time="2022-12-06T19:52:22+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:27 node4 cri-dockerd[1256]: time="2022-12-06T19:52:27+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:32 node4 cri-dockerd[1256]: time="2022-12-06T19:52:32+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:37 node4 cri-dockerd[1256]: time="2022-12-06T19:52:37+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:42 node4 cri-dockerd[1256]: time="2022-12-06T19:52:42+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:47 node4 cri-dockerd[1256]: time="2022-12-06T19:52:47+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:52 node4 cri-dockerd[1256]: time="2022-12-06T19:52:52+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:52:57 node4 cri-dockerd[1256]: time="2022-12-06T19:52:57+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:53:02 node4 cri-dockerd[1256]: time="2022-12-06T19:53:02+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

Dec 06 19:53:07 node4 cri-dockerd[1256]: time="2022-12-06T19:53:07+08:00" level=info msg="Using CNI configuration file /etc/cni/net.d/10-flannel.conflist"

● cri-docker.socket - CRI Docker Socket for the API

Loaded: loaded (/usr/lib/systemd/system/cri-docker.socket; enabled; vendor preset: disabled)

Active: active (running) since Tue 2022-12-06 15:25:30 CST; 4h 27min ago

Listen: /run/cri-dockerd.sock (Stream)

Dec 06 15:25:30 node4 systemd[1]: Starting CRI Docker Socket for the API.

Dec 06 15:25:30 node4 systemd[1]: Listening on CRI Docker Socket for the API.

[root@node4 ~]# ls -al /var/run/cri-dockerd.sock

srwxr-xr-x 1 root root 0 Dec 6 15:25 /var/run/cri-dockerd.sock
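When this check is scripted, for example before running kubeadm, it can help to wait for the socket instead of testing it once. The sketch below is illustrative only; wait_for_socket is a made-up helper name and the 30 second default is an arbitrary choice.

```shell
# wait_for_socket PATH [TIMEOUT_SECONDS]
# Polls once per second until PATH is a Unix socket or the timeout expires.
wait_for_socket() {
    path="$1"
    remaining="${2:-30}"
    while [ "$remaining" -gt 0 ]; do
        [ -S "$path" ] && return 0
        sleep 1
        remaining=$((remaining - 1))
    done
    # Final check so a timeout of 0 still reports the current state
    [ -S "$path" ]
}

# Example:
#   wait_for_socket /var/run/cri-dockerd.sock 30 || echo "cri-dockerd socket not ready" >&2
```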

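III.

Rebuilding kubeadm

The reason for rebuilding kubeadm is to issue cluster certificates that are valid for 100 years, as described in this article: 云原生|kubernetes|kubeadm部署的集群的100年证书 (晚风_END's blog on CSDN).

That article provides a prebuilt kubeadm that can be downloaded directly from the network drive. If you prefer to do it yourself, follow the article to compile your own.

All related files are on Baidu Netdisk:

Link: https://pan.baidu.com/s/1ondEDX4caJ16ic-R6cqy_A?pwd=star

Extraction code: star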

IV.

Initializing the cluster with kubeadm

Install kubeadm, kubelet, and kubectl from the Aliyun mirror.

Add the Aliyun repository:

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

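Install kubeadm, kubelet, and kubectl:

yum install kubeadm-1.25.4 kubelet-1.25.4 kubectl-1.25.4 -y

Replace the installed kubeadm with the one built in step III, ready for cluster initialization:

cp -a kubeadm-1.25.4 /usr/bin/kubeadm

Command to initialize the cluster:

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.25.4 --pod-network-cidr=10.244.0.0/16 --cri-socket unix:///var/run/cri-dockerd.sock

Partial output log (captured from a run that passed the socket path without the unix:// scheme, which is what triggers the deprecation warning on the first line):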

W1206 15:26:21.940820 2162 initconfiguration.go:119] Usage of CRI endpoints without URL scheme is deprecated and can cause kubelet errors in the future. Automatically prepending scheme "unix" to the "criSocket" with value "/var/run/cri-dockerd.sock". Please update your configuration!

[init] Using Kubernetes version: v1.25.4

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local node4] and IPs [10.96.0.1 192.168.217.24]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost node4] and IPs [192.168.217.24 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost node4] and IPs [192.168.217.24 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

(middle part of the output omitted)

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.217.24:6443 --token bgkwcq.mgd8h263itfeiaia \

--discovery-token-ca-cert-hash sha256:686af8c137f05a4f0051f8f7d03aabaa72d272b338430f19ca8aaf0d13745a2d
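The deprecation warning on the first line of the init log appears when --cri-socket receives a bare path rather than a URL. A small sketch for normalizing the value in scripts is shown below; cri_socket_url is a made-up helper name, not part of kubeadm.

```shell
# Prepend unix:// to a bare socket path; leave values that already carry
# a URL scheme untouched.
cri_socket_url() {
    case "$1" in
        *://*) printf '%s\n' "$1" ;;
        *)     printf 'unix://%s\n' "$1" ;;
    esac
}

# Example:
#   kubeadm init --cri-socket "$(cri_socket_url /var/run/cri-dockerd.sock)" ...
```

Passing the result of this helper keeps init and join commands consistent whichever form the path was written in.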

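OK, the master node is now installed. When a worker node joins, the join command needs one extra parameter, --cri-socket:

kubeadm join 192.168.217.24:6443 --token bgkwcq.mgd8h263itfeiaia \
--discovery-token-ca-cert-hash sha256:686af8c137f05a4f0051f8f7d03aabaa72d272b338430f19ca8aaf0d13745a2d --cri-socket unix:///var/run/cri-dockerd.sock

Everything else is the same as with older Kubernetes clusters. Just note that network plugins, such as flannel, need a newer version.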
