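LightDB High Availability: Common Operations (Administration)

Operating system:

CentOS 7

Server IPs:

1. 192.168.121.112 (primary)

2. 192.168.121.113 (standby)

3. 192.168.121.114 (witness, optional)

1. Stop keepalived on the standby first. Run the following as root.

# 1. Get the PID of the keepalived process on the standby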

[root@localhost ~]# cat /var/run/keepalived.pid

7680

# 2. Kill the keepalived process

[root@localhost ~]# kill 7680

# 3. Confirm that the keepalived process no longer exists

[root@localhost ~]# ps aux | grep keepalived

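root 24464 0.0 0.0 112824 976 pts/1 S+ 20:27 0:00 grep --color=auto keepalived

- Restart the primary. Run the following as the lightdb user; the primary does not serve requests during the restart.

# 1. Pause ltclusterd to prevent automatic failover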

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service pause

NOTICE: node 1 (lightdbCluster1921681211125432) paused

NOTICE: node 2 (lightdbCluster1921681211135432) paused

NOTICE: node 4 (lightdbCluster1921681211145432) paused

# 2. Check the cluster status and confirm that the Paused? column shows yes for the primary

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | yes | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | yes | 0 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | yes | 0 second(s) ago
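The check in step 2 can be scripted instead of read by eye. The sketch below is a hypothetical helper (`all_paused` is not part of ltcluster). It assumes the `service status` table keeps the column order shown above, with `Paused?` as the eighth `|`-separated field.

```shell
#!/bin/sh
# Read "ltcluster ... service status" output on stdin and succeed only if
# every node row reports "yes" in the "Paused?" column (8th |-separated field).
all_paused() {
    awk -F'|' '
        $1 ~ /^ *[0-9]+ *$/ {      # data rows start with a numeric node ID
            rows++
            gsub(/ /, "", $8)      # strip the padding around the value
            if ($8 != "yes") bad++
        }
        END { exit (rows == 0 || bad > 0) ? 1 : 0 }
    '
}
```

One possible use is `ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status | all_paused && echo "all nodes paused"`. An empty or unparsable table counts as a failure, so the check does not pass silently.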

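# 3. Disconnect all clients and applications from the database first (otherwise the stop may fail), then stop the primary

# 3.1 Default mode: work on any connections that are still open is rolled back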

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop

waiting for server to shut down................... done

server stopped

# 3.2 If client connections exist, try smart mode, which waits for sessions to disconnect and fails if they do not

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m smart

waiting for server to shut down............................................................... failed

lt_ctl: server does not shut down

HINT: The "-m fast" option immediately disconnects sessions rather than

waiting for session-initiated disconnection.

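# 3.3 If the stop still fails, try fast mode first; use immediate mode only as a last resort, because it skips the clean shutdown and the database runs crash recovery at the next start

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m fast

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m immediate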
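The escalation in step 3 can be sketched as a small loop. This is an illustration only: `stop_with_escalation` and the `LT_CTL` override variable are hypothetical names, and the sketch assumes `lt_ctl` accepts the `-m smart`, `-m fast` and `-m immediate` modes used above and returns a non-zero exit status when the stop fails.

```shell
#!/bin/sh
# Try progressively more forceful shutdown modes and print the one that worked.
# LT_CTL may be overridden (for example with a stub); it defaults to lt_ctl.
stop_with_escalation() {
    datadir=$1
    for mode in smart fast immediate; do
        if "${LT_CTL:-lt_ctl}" -D "$datadir" stop -m "$mode" >/dev/null 2>&1; then
            echo "$mode"
            return 0
        fi
    done
    return 1
}
```

Calling `stop_with_escalation "$LTDATA"` prints the mode that succeeded. Each failed attempt blocks until `lt_ctl` itself gives up, so the loop can take a while before it reaches the next mode.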

# 4. Wait for the database to stop

# 5. Change database parameters or perform other maintenance

# 6. Start the primary

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA start

2023-01-10 20:46:30.865738T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: LightDB autoprewarm: prewarm dbnum=0

2023-01-10 20:46:30.869043T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: ltaudit extension initialized

waiting for server to start........2023-01-10 20:46:35.267239T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: redirecting log output to logging collector process

2023-01-10 20:46:35.267239T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,HINT: Future log output will appear in directory "log".

done

server started

# 7. Wait for startup to complete, then verify the cluster status

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | yes | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | yes | 1 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | yes | 1 second(s) ago

# 8. Resume the cluster by unpausing ltclusterd

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service unpause

NOTICE: node 1 (lightdbCluster1921681211125432) unpaused

NOTICE: node 2 (lightdbCluster1921681211135432) unpaused

NOTICE: node 4 (lightdbCluster1921681211145432) unpaused
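Every pause in this procedure needs a matching unpause, otherwise automatic failover stays disabled. A minimal sketch of a wrapper that pairs the two is shown below; `with_cluster_paused` and the `LTCLUSTER` override variable are hypothetical names, and only the `service pause` and `service unpause` subcommands from the steps above are used.

```shell
#!/bin/sh
# Pause ltclusterd, run the given maintenance command, then unpause again
# whether or not the command succeeded. Returns the command's exit status.
# LTCLUSTER may be overridden (for example with a stub); it defaults to ltcluster.
with_cluster_paused() {
    conf=$1
    shift
    "${LTCLUSTER:-ltcluster}" -f "$conf" service pause || return 1
    cmd_rc=0
    "$@" || cmd_rc=$?
    "${LTCLUSTER:-ltcluster}" -f "$conf" service unpause || return 1
    return "$cmd_rc"
}
```

For example, `with_cluster_paused "$conf" lt_ctl -D "$LTDATA" restart` would be one way to use it, assuming `lt_ctl` supports `restart`, which this document does not show. The sketch does not handle signals, so an interrupted run still needs a manual `service unpause`.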

# 9. Check the cluster status again

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | no | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | no | 0 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | no | 0 second(s) ago

- Restart the keepalived service on the standby

Open a new session as root and run the following, as prompted by the output above.

[root@localhost bin]# cd /usr/local/lightdb/lightdb-x/13.8-22.3/tools/bin

# Start the keepalived service (run on the standby server)

[root@localhost bin]# ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

# Check the processes

[root@localhost bin]# ps aux|grep keepalived

root 26657 0.0 0.0 48100 1008 ? Ss 20:55 0:00 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26658 0.1 0.0 48100 1844 ? S 20:55 0:00 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26659 0.0 0.0 48100 1364 ? S 20:55 0:00 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf
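A plain `ps aux | grep keepalived` also matches the `grep` process itself, as the earlier "process is gone" check shows. The sketch below counts only real keepalived processes by looking at the command column. `count_keepalived` is a hypothetical helper, and it assumes the standard `ps aux` layout in which the command is the eleventh field.

```shell
#!/bin/sh
# Count keepalived processes in "ps aux" output read from stdin.
# Field 11 is the command, so the "grep ... keepalived" line is not counted.
count_keepalived() {
    awk '$11 == "keepalived" || $11 ~ /\/keepalived$/ { n++ } END { print n + 0 }'
}
```

Run it as `ps aux | count_keepalived`. A result of 0 means keepalived is stopped; the startup above shows three processes.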

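Restarting the standby after a parameter change or for any other reason. Run the following on the standby.

# 1. Pause ltclusterd to prevent automatic failover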

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service pause

NOTICE: node 1 (lightdbCluster1921681211125432) paused

NOTICE: node 2 (lightdbCluster1921681211135432) paused

NOTICE: node 4 (lightdbCluster1921681211145432) paused

# 2. Check the cluster status and confirm that the Paused? column shows yes for the standby

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | yes | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | yes | 0 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | yes | 0 second(s) ago

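# 3. Disconnect all clients and applications from the database first (otherwise the stop may fail), then stop the standby

# 3.1 Default mode: work on any connections that are still open is rolled back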

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop

waiting for server to shut down................... done

server stopped

# 3.2 If client connections exist, try smart mode, which waits for sessions to disconnect and fails if they do not

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m smart

waiting for server to shut down............................................................... failed

lt_ctl: server does not shut down

HINT: The "-m fast" option immediately disconnects sessions rather than

waiting for session-initiated disconnection.

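# 3.3 If the stop still fails, try fast mode first; use immediate mode only as a last resort, because it skips the clean shutdown and the database runs crash recovery at the next start

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m fast

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop -m immediate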

# 4. Wait for the database to stop. Once the standby is down, you can check the cluster status from the primary host

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+----------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | yes | n/a

2 | lightdbCluster1921681211135432 | standby | - failed | ? lightdbCluster1921681211125432 | n/a | n/a | n/a | n/a

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | yes | 0 second(s) ago
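To pick out the stopped node without scanning the table, the status output can be filtered for rows whose Status column contains `failed`. This is a sketch under the same assumption about the table layout as the output above (Name in the second field, Status in the fourth); `failed_nodes` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the name of every node whose Status column contains "failed",
# reading "ltcluster ... service status" output from stdin.
failed_nodes() {
    awk -F'|' '
        $1 ~ /^ *[0-9]+ *$/ && $4 ~ /failed/ {
            gsub(/^ +| +$/, "", $2)    # trim padding around the node name
            print $2
        }
    '
}
```

With the table above as input it prints the standby's node name, and it prints nothing when every node is running.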

# 5. Change database parameters or perform other maintenance

# 6. Start the standby

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA start

2023-01-10 20:46:30.865738T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: LightDB autoprewarm: prewarm dbnum=0

2023-01-10 20:46:30.869043T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: ltaudit extension initialized

waiting for server to start........2023-01-10 20:46:35.267239T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,LOG: redirecting log output to logging collector process

2023-01-10 20:46:35.267239T,,,,,postmaster,,00000,2023-01-10 20:46:30 CST,0,72843,HINT: Future log output will appear in directory "log".

done

server started

# 7. Wait for startup to complete, then verify the cluster status

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | yes | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | yes | 1 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | yes | 1 second(s) ago

# 8. Resume the cluster by unpausing ltclusterd

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service unpause

NOTICE: node 1 (lightdbCluster1921681211125432) unpaused

NOTICE: node 2 (lightdbCluster1921681211135432) unpaused

NOTICE: node 4 (lightdbCluster1921681211145432) unpaused

# 9. Check the cluster status again

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | no | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 3727 | no | 0 second(s) ago

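4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | no | 0 second(s) ago

When the cluster is running normally, a primary/standby switchover can be completed automatically by running switchover on the standby.

# 1. Dry run on the standby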

[lightdb@localhost ~]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf standby switchover --siblings-follow --dry-run

NOTICE: checking switchover on node "lightdbCluster1921681211135432" (ID: 2) in --dry-run mode

INFO: SSH connection to host "192.168.121.112" succeeded

INFO: able to execute "ltcluster" on remote host "192.168.121.112"

INFO: all sibling nodes are reachable via SSH

INFO: 2 walsenders required, 10 available

INFO: demotion candidate is able to make replication connection to promotion candidate

INFO: 0 pending archive files

INFO: replication lag on this standby is 0 seconds

INFO: 1 replication slots required, 10 available

INFO: would pause ltclusterd on node "lightdbCluster1921681211125432" (ID 1)

INFO: would pause ltclusterd on node "lightdbCluster1921681211135432" (ID 2)

INFO: would pause ltclusterd on node "lightdbCluster1921681211145432" (ID 4)

NOTICE: local node "lightdbCluster1921681211135432" (ID: 2) would be promoted to primary; current primary "lightdbCluster1921681211125432" (ID: 1) would be demoted to standby

INFO: following shutdown command would be run on node "lightdbCluster1921681211125432":

"/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_ctl -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' -W -m fast stop"

INFO: parameter "shutdown_check_timeout" is set to 1800 seconds

INFO: prerequisites for executing STANDBY SWITCHOVER are met
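The dry run is only useful if the real switchover is skipped when it fails. A minimal sketch of that gate follows. `safe_switchover` and the `LTCLUSTER` override variable are hypothetical names, the flags are the ones used in this section, and the `2>&1` redirect assumes that ltcluster may write its INFO and NOTICE messages to stderr.

```shell
#!/bin/sh
# Run the switchover dry run first and continue with the real switchover
# only if the dry-run output confirms that the prerequisites are met.
# LTCLUSTER may be overridden (for example with a stub); it defaults to ltcluster.
safe_switchover() {
    conf=$1
    out=$("${LTCLUSTER:-ltcluster}" -f "$conf" standby switchover --siblings-follow --dry-run 2>&1)
    if ! printf '%s\n' "$out" | grep -q 'prerequisites for executing STANDBY SWITCHOVER are met'; then
        printf '%s\n' "$out" >&2    # show why the dry run did not pass
        return 1
    fi
    "${LTCLUSTER:-ltcluster}" -f "$conf" standby switchover --siblings-follow
}
```

Matching on the final confirmation line rather than on the exit status is a deliberate choice here, since this document does not show what exit status a failed dry run returns.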

# 2. If the output shows "prerequisites for executing STANDBY SWITCHOVER are met", the check passed and you can continue

# 3. Run the switchover for real on the standby host

[lightdb@localhost ~]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf standby switchover --siblings-follow

NOTICE: executing switchover on node "lightdbCluster1921681211135432" (ID: 2)

NOTICE: local node "lightdbCluster1921681211135432" (ID: 2) will be promoted to primary; current primary "lightdbCluster1921681211125432" (ID: 1) will be demoted to standby

NOTICE: stopping current primary node "lightdbCluster1921681211125432" (ID: 1)

NOTICE: issuing CHECKPOINT on node "lightdbCluster1921681211125432" (ID: 1)

DETAIL: executing server command "/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_ctl -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' -W -m fast stop"

INFO: checking for primary shutdown; 1 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 2 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 3 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 4 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 5 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 6 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 7 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 8 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 9 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 10 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 11 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 12 of 1800 attempts ("shutdown_check_timeout")

INFO: checking for primary shutdown; 13 of 1800 attempts ("shutdown_check_timeout")

NOTICE: current primary has been cleanly shut down at location 0/A0000028

NOTICE: promoting standby to primary

DETAIL: promoting server "lightdbCluster1921681211135432" (ID: 2) using pg_promote()

NOTICE: waiting up to 60 seconds (parameter "promote_check_timeout") for promotion to complete

NOTICE: STANDBY PROMOTE successful

DETAIL: server "lightdbCluster1921681211135432" (ID: 2) was successfully promoted to primary

INFO: local node 1 can attach to rejoin target node 2

DETAIL: local node's recovery point: 0/A0000028; rejoin target node's fork point: 0/A00000A0

INFO: creating replication slot as user "ltcluster"

NOTICE: setting node 1's upstream to node 2

WARNING: unable to ping "host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

NOTICE: starting server using "/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_ctl -w -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' start"

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

ERROR: connection to database failed

DETAIL:

could not connect to server: Connection refused

Is the server running on host "192.168.121.112" and accepting

TCP/IP connections on port 5432?

DETAIL: attempted to connect using:

user=ltcluster connect_timeout=2 dbname=ltcluster host=192.168.121.112 port=5432 fallback_application_name=ltcluster options=-csearch_path=

WARNING: unable to connect to local node to check replication slot status

HINT: execute "ltcluster node check" to check inactive slots and drop manually if necessary

NOTICE: NODE REJOIN successful

DETAIL: node 1 is now attached to node 2

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

INFO: waiting for node "lightdbCluster1921681211125432" (ID: 1) to connect to new primary; 1 of max 60 attempts (parameter "node_rejoin_timeout")

DETAIL: checking for record in node "lightdbCluster1921681211135432"'s "pg_stat_replication" table where "application_name" is "lightdbCluster1921681211125432"

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

WARNING: node "lightdbCluster1921681211125432" not found in "pg_stat_replication"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

INFO: waiting for node "lightdbCluster1921681211125432" (ID: 1) to connect to new primary; 6 of max 60 attempts (parameter "node_rejoin_timeout")

DETAIL: node "lightdbCluster1921681211135432" (ID: 1) is currrently attached to its upstream node in state "catchup"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

WARNING: node "lightdbCluster1921681211125432" attached in state "catchup"

INFO: waiting for node "lightdbCluster1921681211125432" (ID: 1) to connect to new primary; 11 of max 60 attempts (parameter "node_rejoin_timeout")

DETAIL: node "lightdbCluster1921681211135432" (ID: 1) is currrently attached to its upstream node in state "catchup"

NOTICE: node "lightdbCluster1921681211135432" (ID: 2) promoted to primary, node "lightdbCluster1921681211125432" (ID: 1) demoted to standby

NOTICE: executing STANDBY FOLLOW on 1 of 1 siblings

INFO: node 4 received notification to follow node 2

INFO: STANDBY FOLLOW successfully executed on all reachable sibling nodes

NOTICE: replication slot "ltcluster_slot_2" deleted on node 1

NOTICE: switchover was successful

DETAIL: node "lightdbCluster1921681211135432" is now primary and node "lightdbCluster1921681211125432" is attached as standby

NOTICE: STANDBY SWITCHOVER has completed successfully

# 4. Check the cluster status (node 2 is now the primary)

[lightdb@localhost ~]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | standby | running | lightdbCluster1921681211135432 | running | 4928 | no | 1 second(s) ago

2 | lightdbCluster1921681211135432 | primary | * running | | running | 3727 | no | n/a

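4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211135432 | running | 3525 | no | 0 second(s) ago

When the primary fails (for example, the host goes down), failover promotes the standby to the new primary automatically so that the cluster stays available. Because of the switchover performed above, the current primary is 192.168.121.113 and the standby is 192.168.121.112.

- Simulate a primary failure by stopping the lightdb process on the primary host (192.168.121.113)

# 1. Check the lightdb processes. If lightdb is still running, stop it in step 2 to simulate a primary failure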

[lightdb@localhost ~]$ ps aux|grep lightdb

# 2. Stop lightdb

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop

waiting for server to shut down......... done

server stopped

# 3. Check the status

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ERROR: connection to database failed

DETAIL:

could not connect to server: Connection refused

Is the server running on host "192.168.121.113" and accepting

TCP/IP connections on port 5432?

DETAIL: attempted to connect using:

user=ltcluster connect_timeout=2 dbname=ltcluster host=192.168.121.113 port=5432 fallback_application_name=ltcluster options=-csearch_path=

# 4. Check the status from the standby host (node 1 has been promoted to primary)

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | no | n/a

2 | lightdbCluster1921681211135432 | primary | - failed | ? | n/a | n/a | n/a | n/a

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | no | 0 second(s) ago

WARNING: following issues were detected

- unable to connect to node "lightdbCluster1921681211135432" (ID: 2)

HINT: execute with --verbose option to see connection error messages

- Confirm that ltclusterd is running on the failed host (the former primary, 192.168.121.113)

# 1. Check the ltclusterd process

[lightdb@localhost ltcluster]$ ps aux|grep ltcluster

lightdb 60183 0.0 0.0 116884 1624 ? Sl 22:47 0:00 /usr/local/lightdb/lightdb-x/13.8-22.3/bin/ltclusterd -d -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf -p /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltclusterd.pid

# 2. Confirm that ltclusterd is running; if it is not, start it with the following command

[lightdb@localhost ltcluster]$ /usr/local/lightdb/lightdb-x/13.8-22.3/bin/ltclusterd -d -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf -p /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltclusterd.pid

[2023-01-10 22:47:18] [NOTICE] redirecting logging output to "/usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster2023-01-10_224718.log"

3. Restart the lightdb database process on the failed host

# 1. Restart the lightdb database process

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA start

2023-01-10 23:02:16.502933T,,,,,postmaster,,00000,2023-01-10 23:02:16 CST,0,62485,LOG: LightDB autoprewarm: prewarm dbnum=0

waiting for server to start....2023-01-10 23:02:16.508863T,,,,,postmaster,,00000,2023-01-10 23:02:16 CST,0,62485,LOG: ltaudit extension initialized

...2023-01-10 23:02:20.135427T,,,,,postmaster,,00000,2023-01-10 23:02:16 CST,0,62485,LOG: redirecting log output to logging collector process

2023-01-10 23:02:20.135427T,,,,,postmaster,,00000,2023-01-10 23:02:16 CST,0,62485,HINT: Future log output will appear in directory "log".

done

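server started

4. Rejoin the cluster after recovery

A recovered database does not rejoin the cluster automatically. To bring it back, use node rejoin to restore it as a standby. To make it the primary again afterwards, follow the switchover procedure above.

# 1. Rejoin dry run (note: host is the IP address of the cluster's current primary)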

[lightdb@localhost ltcluster]$ ltcluster -f $LTHOME/etc/ltcluster/ltcluster.conf node rejoin -d 'host=192.168.121.112 port=5432 dbname=ltcluster user=ltcluster' --verbose --force-rewind --dry-run

NOTICE: using provided configuration file "/usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf"

INFO: replication slots in use, 1 free slots on node 10

INFO: replication connection to the rejoin target node was successful

INFO: local and rejoin target system identifiers match

DETAIL: system identifier is 7186927613994770615

NOTICE: lt_rewind execution required for this node to attach to rejoin target node 1

DETAIL: rejoin target server's timeline 3 forked off current database system timeline 2 before current recovery point 1/28

INFO: prerequisites for using lt_rewind are met

INFO: temporary archive directory "/tmp/ltcluster-config-archive-lightdbCluster1921681211135432" created

INFO: 0 files would have been copied to "/tmp/ltcluster-config-archive-lightdbCluster1921681211135432"

INFO: temporary archive directory "/tmp/ltcluster-config-archive-lightdbCluster1921681211135432" deleted

INFO: lt_rewind would now be executed

DETAIL: lt_rewind command is:

/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_rewind -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' --source-server='host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2'

INFO: prerequisites for executing NODE REJOIN are met

# 2. Wait and confirm that the dry run succeeded

# 3. Run the rejoin for real

[lightdb@localhost ltcluster]$ ltcluster -f $LTHOME/etc/ltcluster/ltcluster.conf node rejoin -d 'host=192.168.121.112 port=5432 dbname=ltcluster user=ltcluster' --verbose --force-rewind

NOTICE: using provided configuration file "/usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf"

NOTICE: lt_rewind execution required for this node to attach to rejoin target node 1

DETAIL: rejoin target server's timeline 3 forked off current database system timeline 2 before current recovery point 1/28

INFO: prerequisites for using lt_rewind are met

INFO: 0 files copied to "/tmp/ltcluster-config-archive-lightdbCluster1921681211135432"

NOTICE: executing lt_rewind

DETAIL: lt_rewind command is "/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_rewind -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' --source-server='host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2'"

NOTICE: 0 files copied to /usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster

INFO: directory "/tmp/ltcluster-config-archive-lightdbCluster1921681211135432" deleted

INFO: creating replication slot as user "ltcluster"

NOTICE: setting node 2's upstream to node 1

WARNING: unable to ping "host=192.168.121.113 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

NOTICE: starting server using "/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_ctl -w -D '/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster' start"

WARNING: unable to ping "host=192.168.121.113 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

INFO: waiting for node "lightdbCluster1921681211135432" (ID: 2) to respond to pings; 1 of max 60 attempts (parameter "node_rejoin_timeout")

WARNING: unable to ping "host=192.168.121.113 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

WARNING: unable to ping "host=192.168.121.113 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

WARNING: unable to ping "host=192.168.121.113 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

DETAIL: PQping() returned "PQPING_NO_RESPONSE"

INFO: node "lightdbCluster1921681211135432" (ID: 2) is pingable

INFO: node "lightdbCluster1921681211135432" (ID: 2) has attached to its upstream node

NOTICE: NODE REJOIN successful

DETAIL: node 2 is now attached to node 1

# 4. Check the cluster status (node 2 has rejoined the cluster)

[lightdb@localhost ltcluster]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+-------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | no | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 60183 | no | 1 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | no | 1 second(s) ago

# 5. Confirm that keepalived is running; start it if it is not

[lightdb@localhost ltcluster]$ ps aux | grep keepalived

root 26657 0.0 0.0 48100 1008 ? Ss 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26658 0.0 0.0 48100 1844 ? S 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26659 0.0 0.0 48100 1408 ? S 20:56 0:03 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

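lightdb 63836 0.0 0.0 112832 984 pts/1 S+ 23:32 0:00 grep --color=auto keepalived

5. If the rejoin above fails, re-clone the standby with the following steps

# 1. Confirm that the lightdb database is stopped; if it is not, stop it with the following command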

[lightdb@localhost ltcluster]$ lt_ctl -D $LTDATA stop

waiting for server to shut down.... done

server stopped

# 2. Check the cluster status

[lightdb@localhost data]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ERROR: connection to database failed

DETAIL:

could not connect to server: Connection refused

Is the server running on host "192.168.121.113" and accepting

TCP/IP connections on port 5432?

DETAIL: attempted to connect using:

user=ltcluster connect_timeout=2 dbname=ltcluster host=192.168.121.113 port=5432 fallback_application_name=ltcluster options=-csearch_path=

# 3. Empty the instance directory ($LTDATA/); back it up first if needed
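Step 3 gives no command, so here is one hedged way to do the backup and the cleanup in a single move. `backup_and_clear_datadir` is a hypothetical helper. It assumes the instance directory is an ordinary directory (not a mount point) and that the parent directory has room for the renamed copy.

```shell
#!/bin/sh
# Move the existing instance directory aside under a timestamped name and
# recreate it empty with owner-only permissions. Prints the backup path.
backup_and_clear_datadir() {
    datadir=${1%/}
    [ -d "$datadir" ] || { echo "not a directory: $datadir" >&2; return 1; }
    backup="${datadir}.bak.$(date +%Y%m%d%H%M%S)"
    # Refuse to continue if the target exists, so mv cannot nest directories
    [ ! -e "$backup" ] || { echo "backup already exists: $backup" >&2; return 1; }
    mv "$datadir" "$backup" || return 1
    mkdir -m 700 "$datadir" || return 1
    echo "$backup"
}
```

Call it as `backup_and_clear_datadir "$LTDATA"` after the database has been stopped. Renaming instead of deleting keeps the old files available until the re-cloned standby is confirmed healthy.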

# 4. Clone dry run

[lightdb@localhost data]$ ltcluster -h 192.168.121.112 -p 5432 -U ltcluster -d ltcluster -f $LTHOME/etc/ltcluster/ltcluster.conf standby clone --dry-run

NOTICE: destination directory "/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster" provided

INFO: connecting to source node

DETAIL: connection string is: host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster

DETAIL: current installation size is 83 MB

INFO: "ltcluster" extension is installed in database "ltcluster"

INFO: parameter "max_replication_slots" set to 10

INFO: parameter "max_wal_senders" set to 10

NOTICE: checking for available walsenders on the source node (2 required)

INFO: sufficient walsenders available on the source node

DETAIL: 2 required, 10 available

NOTICE: checking replication connections can be made to the source server (2 required)

INFO: required number of replication connections could be made to the source server

DETAIL: 2 replication connections required

INFO: replication slots will be created by user "ltcluster"

NOTICE: standby will attach to upstream node 1

HINT: consider using the -c/--fast-checkpoint option

INFO: all prerequisites for "standby clone" are met

# 5. Confirm that the output shows: all prerequisites for "standby clone" are met

# 6. Clone the instance directory (note: -h is the address of the primary server)

[lightdb@localhost data]$ ltcluster -h 192.168.121.112 -p 5432 -U ltcluster -d ltcluster -f $LTHOME/etc/ltcluster/ltcluster.conf standby clone -F

NOTICE: destination directory "/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster" provided

INFO: connecting to source node

DETAIL: connection string is: host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster

DETAIL: current installation size is 83 MB

NOTICE: checking for available walsenders on the source node (2 required)

NOTICE: checking replication connections can be made to the source server (2 required)

INFO: creating directory "/usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster"...

INFO: creating replication slot as user "ltcluster"

NOTICE: starting backup (using lt_basebackup)...

HINT: this may take some time; consider using the -c/--fast-checkpoint option

INFO: executing:

/usr/local/lightdb/lightdb-x/13.8-22.3/bin/lt_basebackup -l "ltcluster base backup" -D /usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster -h 192.168.121.112 -p 5432 -U ltcluster -X stream -S ltcluster_slot_2

NOTICE: standby clone (using lt_basebackup) complete

NOTICE: you can now start your LightDB server

HINT: for example: lt_ctl -D /usr/local/lightdb/lightdb-x/13.8-22.3/data/defaultCluster start

HINT: after starting the server, you need to re-register this standby with "ltcluster standby register --force" to update the existing node record

# 7. Start the database

[lightdb@localhost data]$ lt_ctl -D $LTDATA start

2023-01-11 00:02:19.037650T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: LightDB autoprewarm: prewarm dbnum=0

2023-01-11 00:02:19.040082T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: ltaudit extension initialized

waiting for server to start......2023-01-11 00:02:22.125889T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: redirecting log output to logging collector process

2023-01-11 00:02:22.125889T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,HINT: Future log output will appear in directory "log".

done

server started

# 8. Re-register the node as a standby

[lightdb@localhost data]$ ltcluster -f $LTHOME/etc/ltcluster/ltcluster.conf standby register -F

INFO: connecting to local node "lightdbCluster1921681211135432" (ID: 2)

INFO: connecting to primary database

INFO: standby registration complete

NOTICE: standby node "lightdbCluster1921681211135432" (ID: 2) successfully registered

# 9. Check the cluster status (node 2 has joined the cluster normally)

[lightdb@localhost data]$ ltcluster -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf service status

ID | Name | Role | Status | Upstream | ltclusterd | PID | Paused? | Upstream last seen

----+--------------------------------+---------+-----------+--------------------------------+------------+-------+---------+--------------------

1 | lightdbCluster1921681211125432 | primary | * running | | running | 4928 | no | n/a

2 | lightdbCluster1921681211135432 | standby | running | lightdbCluster1921681211125432 | running | 60183 | no | 0 second(s) ago

4 | lightdbCluster1921681211145432 | witness | * running | lightdbCluster1921681211125432 | running | 3525 | no | 0 second(s) ago

# 10. Confirm that keepalived is running; start it if it is not

[lightdb@localhost ltcluster]$ ps aux | grep keepalived

root 26657 0.0 0.0 48100 1008 ? Ss 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26658 0.0 0.0 48100 1844 ? S 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26659 0.0 0.0 48100 1408 ? S 20:56 0:03 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

To recover after a standby failure, follow these steps.

# 1. Confirm that ltclusterd is running; if it is not, start it with the following command

[lightdb@localhost ltcluster]$ /usr/local/lightdb/lightdb-x/13.8-22.3/bin/ltclusterd -d -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster.conf -p /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltclusterd.pid

[2023-01-10 22:47:18] [NOTICE] redirecting logging output to "/usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster2023-01-10_224718.log"

# 2. Start the lightdb database

[lightdb@localhost data]$ lt_ctl -D $LTDATA start

2023-01-11 00:02:19.037650T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: LightDB autoprewarm: prewarm dbnum=0

2023-01-11 00:02:19.040082T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: ltaudit extension initialized

waiting for server to start......2023-01-11 00:02:22.125889T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,LOG: redirecting log output to logging collector process

2023-01-11 00:02:22.125889T,,,,,postmaster,,00000,2023-01-11 00:02:19 CST,0,65044,HINT: Future log output will appear in directory "log".

done

server started

# 3. Confirm that keepalived is running; start it if it is not

[lightdb@localhost ltcluster]$ ps aux | grep keepalived

root 26657 0.0 0.0 48100 1008 ? Ss 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

root 26658 0.0 0.0 48100 1844 ? S 20:56 0:01 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

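root 26659 0.0 0.0 48100 1408 ? S 20:56 0:03 ./keepalived -f /usr/local/lightdb/lightdb-x/13.8-22.3/etc/keepalived/keepalived.conf

Different business scenarios require different levels of primary/standby consistency; the stronger the consistency, the greater the performance cost. Adjust synchronous_commit to select the level you need.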

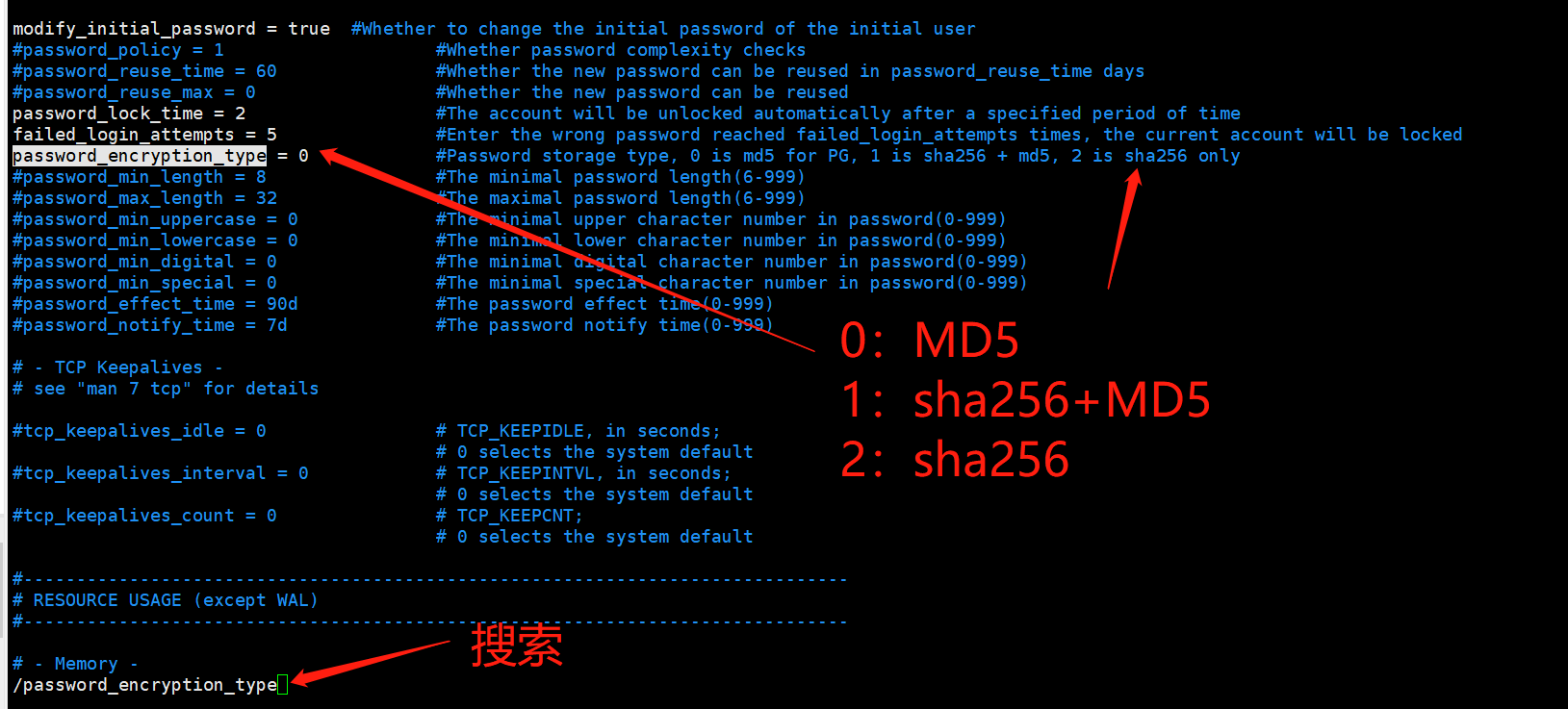

Edit the configuration parameters in $LTDATA/lightdb.conf

# Synchronous mode: set the following on the primary node

synchronous_commit = 'on'

synchronous_standby_names = '*'

# Asynchronous mode: set the following on the primary node

synchronous_commit = 'local'

synchronous_standby_names = ''
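Editing the file by hand works, but the change can also be scripted so that it is repeatable. The sketch below is a hypothetical helper (`set_conf_param` is not a LightDB tool) that rewrites every uncommented assignment of a key, or appends one if none exists. It assumes the `key = value` line format shown above.

```shell
#!/bin/sh
# Set "key = value" in a configuration file: rewrite every uncommented
# assignment of the key, or append a new line if the key is not set yet.
set_conf_param() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    if awk -v key="$key" -v value="$value" '
        $0 ~ "^[ \t]*" key "[ \t]*=" { print key " = " value; found = 1; next }
        { print }
        END { if (!found) print key " = " value }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"; then
        rc=0
    else
        rc=1
    fi
    rm -f "$tmp"
    return "$rc"
}
```

Switching to synchronous mode would then be `set_conf_param "$LTDATA/lightdb.conf" synchronous_commit "'on'"` followed by `set_conf_param "$LTDATA/lightdb.conf" synchronous_standby_names "'*'"`. Writing back with `cat` rather than `mv` keeps the file's existing owner and permissions.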

# Apply the changes

[lightdb@localhost defaultCluster]$ lt_ctl -D $LTDATA reload

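server signaled

ltcluster and lightdb run independently. If all three machines go down and reboot, neither the lightdb nor the ltclusterd processes are running afterwards.

To restore the cluster, start lightdb first and then ltclusterd. If ltclusterd is started before the database, it cannot connect, as its log shows: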

[lightdb@localhost ~]$ cat /usr/local/lightdb/lightdb-x/13.8-22.3/etc/ltcluster/ltcluster2023-01-12_103553.log

[2023-01-12 10:35:53] [NOTICE] ltclusterd (ltclusterd 5.2.1) starting up

[2023-01-12 10:35:53] [INFO] connecting to database "host=192.168.121.112 port=5432 user=ltcluster dbname=ltcluster connect_timeout=2"

[2023-01-12 10:35:53] [ERROR] connection to database failed

[2023-01-12 10:35:53] [DETAIL]

could not connect to server: Connection refused

Is the server running on host "192.168.121.112" and accepting

TCP/IP connections on port 5432?

[2023-01-12 10:35:53] [DETAIL] attempted to connect using:

user=ltcluster connect_timeout=2 dbname=ltcluster host=192.168.121.112 port=5432 fallback_application_name=ltcluster options=-csearch_path=
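The start order can be enforced with a small wrapper that launches ltclusterd only after the database start has succeeded. This is a sketch: `start_node_in_order` and the `LT_CTL` and `LTCLUSTERD` override variables are hypothetical names, and the commands and flags are the ones used earlier in this document.

```shell
#!/bin/sh
# Start the database first and start ltclusterd only if that succeeded.
# Arguments: data directory, ltcluster.conf path, ltclusterd pid file path.
# LT_CTL and LTCLUSTERD may be overridden (for example with stubs).
start_node_in_order() {
    datadir=$1 conf=$2 pidfile=$3
    if ! "${LT_CTL:-lt_ctl}" -D "$datadir" start; then
        echo "database did not start; ltclusterd not started" >&2
        return 1
    fi
    "${LTCLUSTERD:-ltclusterd}" -d -f "$conf" -p "$pidfile"
}
```

Run it on each node after a full outage, for example `start_node_in_order "$LTDATA" "$LTHOME/etc/ltcluster/ltcluster.conf" "$LTHOME/etc/ltcluster/ltclusterd.pid"`.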