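Table of Contents

- Disclaimer

- Target site

- Analyzing acw_sc__v2

- Python call test

- Side note: a brief look at risk control

- Recommended earlier reverse-engineering articles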

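Disclaimer

Everything in this article is for learning and discussion only. Commercial or illegal use is strictly prohibited, and the author bears no responsibility for any consequences arising from such use. If anything here infringes on your rights, message me privately and I will remove it immediately.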

Target site

aHR0cHM6Ly93ZS41MWpvYi5jb20vcGMvc2VhcmNoP2tleXdvcmQ9amF2YSZzZWFyY2hUeXBlPTImc29ydFR5cGU9MCZtZXRybz0=
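The target address above is given as a Base64 string. A minimal sketch of decoding it with the Python standard library, in case it helps; the names `ENCODED_TARGET` and `decode_target` are mine, not the author's:

```python
import base64
from urllib.parse import urlsplit, parse_qs

# Base64 string copied verbatim from the article
ENCODED_TARGET = (
    "aHR0cHM6Ly93ZS41MWpvYi5jb20vcGMvc2VhcmNoP2tleXdvcmQ9amF2YSZzZWFyY2hUeXBl"
    "PTImc29ydFR5cGU9MCZtZXRybz0="
)


def decode_target(encoded: str) -> str:
    """Decode a Base64-encoded URL into plain text."""
    return base64.b64decode(encoded).decode("utf-8")


target_url = decode_target(ENCODED_TARGET)
parts = urlsplit(target_url)
# keep_blank_values keeps parameters that are present but empty
query = parse_qs(parts.query, keep_blank_values=True)
print(parts.netloc, parts.path, query)
```

Splitting the decoded URL into host, path and query makes it easier to compare against the `Referer` header and the request parameters used in the test script.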

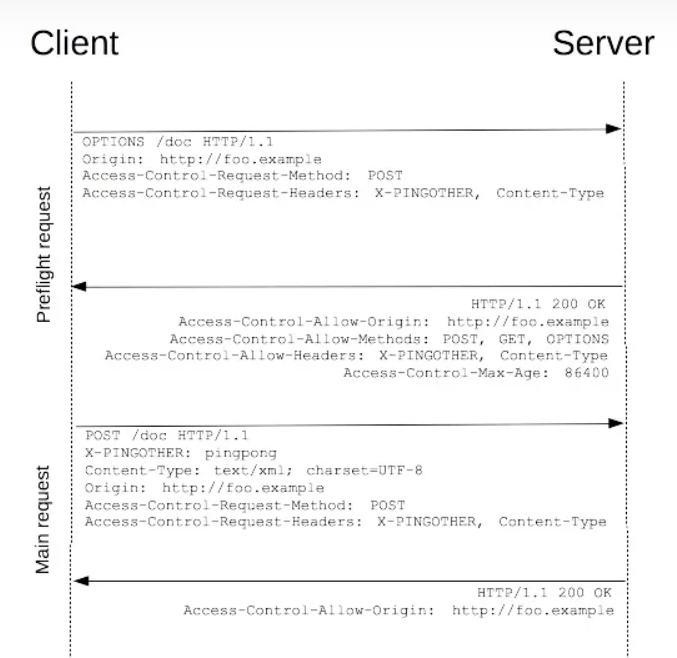

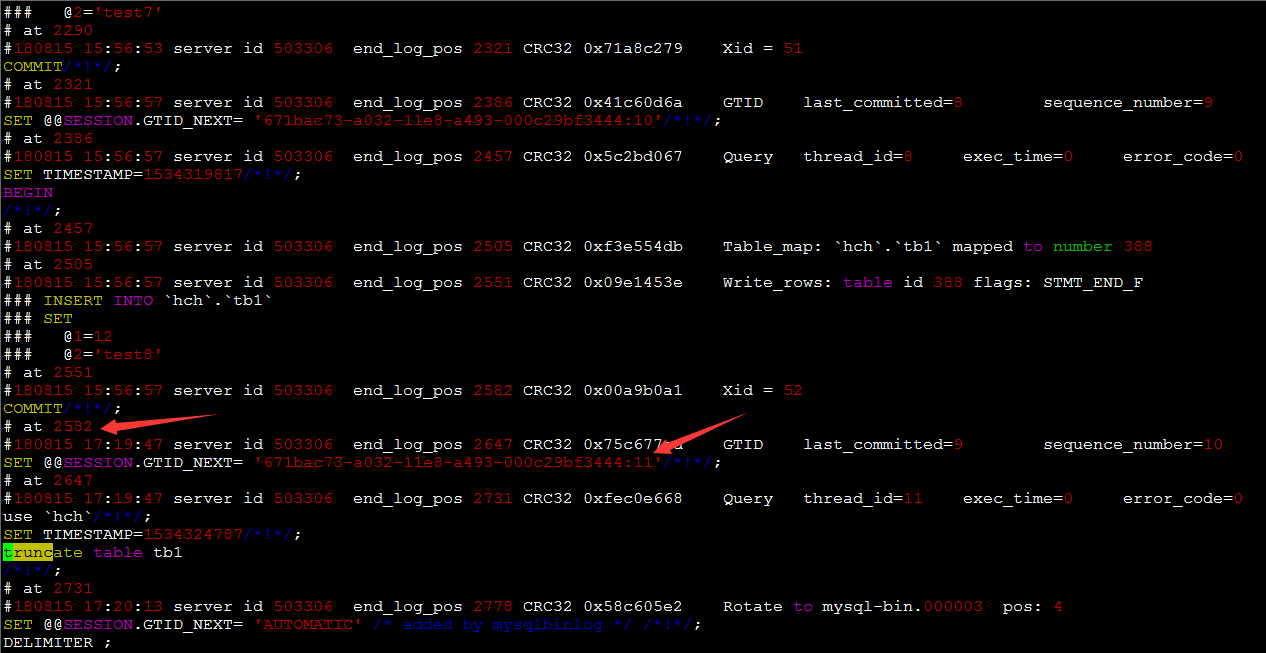

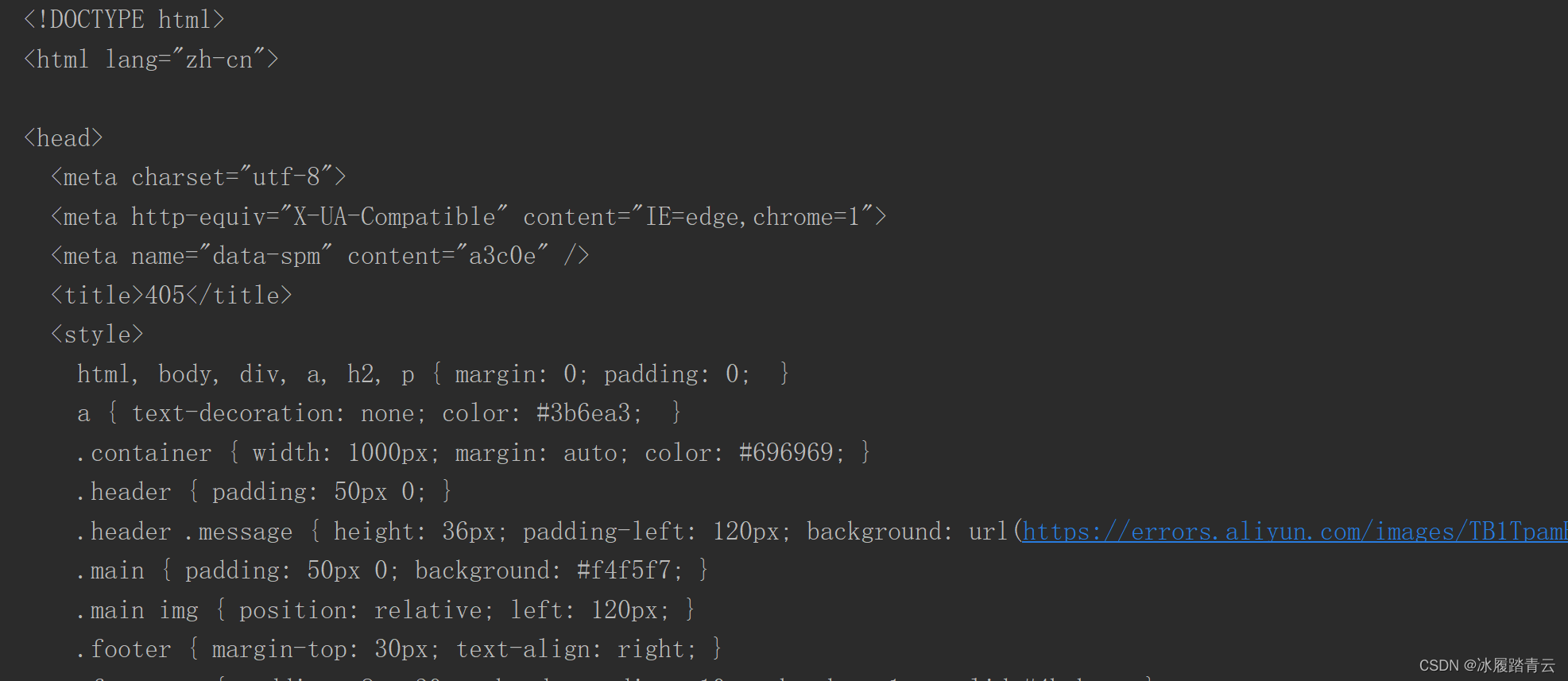

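Analyzing acw_sc__v2

The familiar acw_sc__v2 cookie shows up here. If you have not come across it before, I recommend two of my earlier articles:

Reverse-engineering acw_sc__v2, the Alibaba-family cookie

Xueqiu acw_sc__v2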

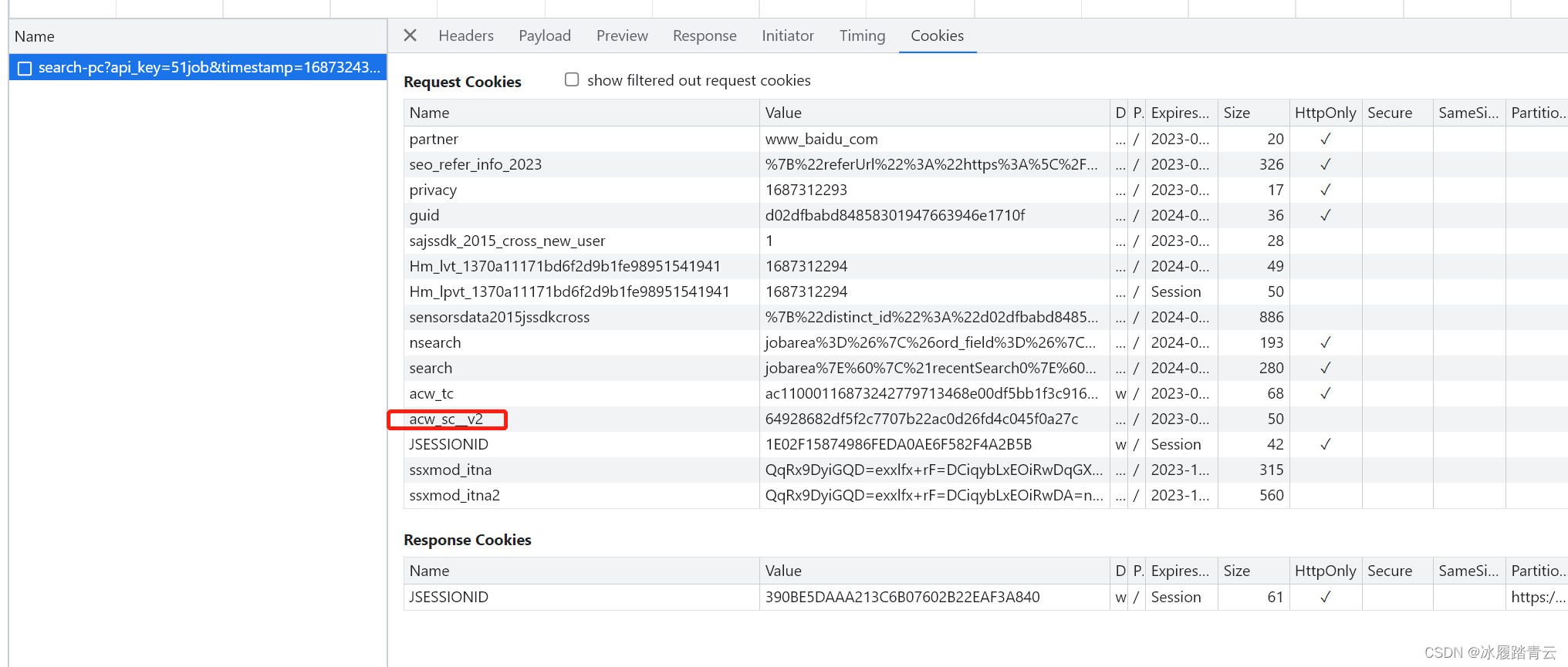

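As expected, the first request should be the one that sets acw_sc__v2.

Many sites share the same acw_sc__v2 algorithm, so we can reuse the code from those earlier articles as-is, or trace through it ourselves.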
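As a small illustration of that first step: the test script in this article pulls `arg1` out of the first response with the pattern `arg1='(.*?)';`. A sketch of wrapping that in a helper that also reports when no challenge is present; the helper name and the two sample bodies are hypothetical, made up for illustration:

```python
import re
from typing import Optional

# Same pattern as the article's test script: the challenge page assigns arg1 in inline JS
ARG1_PATTERN = re.compile(r"arg1='(.*?)';", re.S)


def extract_arg1(body: str) -> Optional[str]:
    """Return the arg1 value if the body looks like a challenge page, else None."""
    match = ARG1_PATTERN.search(body)
    return match.group(1) if match else None


# Hypothetical response bodies, for illustration only
sample_challenge = "<html><script>var arg1='FAA6CB46CF724D58FF82E5310687947623413114';</script></html>"
sample_normal = '{"status": "1"}'

print(extract_arg1(sample_challenge))
print(extract_arg1(sample_normal))
```

Returning `None` instead of indexing `[0]` directly lets the caller tell "no challenge was served" apart from a real failure.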

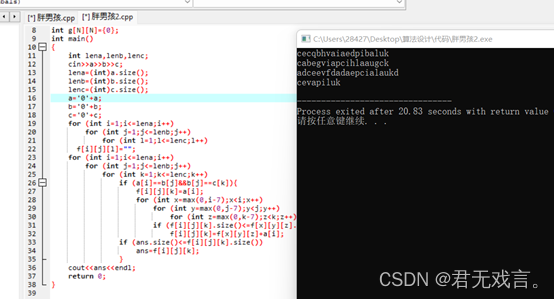

JS that generates acw_sc__v2:

// Stub the browser global so the script can run outside a browser (e.g. under Node)
window = {};
var arg3 = null;
var arg4 = null;
var arg5 = null;
var arg6 = null;
var arg7 = null;
var arg8 = null;
var arg9 = null;
var arg10 = null;

var l = function (arg1) {
    // Anti-headless check: loops forever if PhantomJS markers are present on window
    while (window["_phantom"] || window["__phantomas"]) {}
    // Fixed XOR key (hex string)
    var _0x5e8b26 = "3000176000856006061501533003690027800375";
    // hexXor: XOR two hex strings one byte (two hex digits) at a time
    String["prototype"]["hexXor"] = function (_0x4e08d8) {
        var _0x5a5d3b = "";
        for (var _0xe89588 = 0; _0xe89588 < this["length"] && _0xe89588 < _0x4e08d8["length"]; _0xe89588 += 2) {
            var _0x401af1 = parseInt(this["slice"](_0xe89588, _0xe89588 + 2), 16);
            var _0x105f59 = parseInt(_0x4e08d8["slice"](_0xe89588, _0xe89588 + 2), 16);
            var _0x189e2c = (_0x401af1 ^ _0x105f59)["toString"](16);
            // Left-pad single-digit results so every byte is two hex digits
            if (_0x189e2c["length"] == 1) {
                _0x189e2c = "0" + _0x189e2c;
            }
            _0x5a5d3b += _0x189e2c;
        }
        return _0x5a5d3b;
    };
    // unsbox: reorder the characters of the string according to a fixed permutation table
    String["prototype"]["unsbox"] = function () {
        var _0x4b082b = [15, 35, 29, 24, 33, 16, 1, 38, 10, 9, 19, 31, 40, 27, 22, 23, 25, 13, 6, 11, 39, 18, 20, 8, 14, 21, 32, 26, 2, 30, 7, 4, 17, 5, 3, 28, 34, 37, 12, 36];
        var _0x4da0dc = [];
        var _0x12605e = "";
        for (var _0x20a7bf = 0; _0x20a7bf < this["length"]; _0x20a7bf++) {
            var _0x385ee3 = this[_0x20a7bf];
            for (var _0x217721 = 0; _0x217721 < _0x4b082b["length"]; _0x217721++) {
                // The character at 1-based position i goes to the slot whose table entry equals i
                if (_0x4b082b[_0x217721] == _0x20a7bf + 1) {
                    _0x4da0dc[_0x217721] = _0x385ee3;
                }
            }
        }
        _0x12605e = _0x4da0dc["join"]("");
        return _0x12605e;
    };
    var _0x23a392 = arg1["unsbox"]();
    // arg2 is left as an implicit global, as in the original page script
    arg2 = _0x23a392["hexXor"](_0x5e8b26);
    console.log('arg2==>', arg2)
    // setTimeout("reload(arg2)", 2);
    return arg2
};

// var arg1 = "FAA6CB46CF724D58FF82E5310687947623413114";
// l(arg1)
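For readers who would rather not shell out to a JS runtime, the two steps in the script above (permute with `unsbox`, then `hexXor` against the fixed key) can be sketched in plain Python. This is my own port under the assumption that `arg1` is a hex string, as in the commented sample; the function names are mine, while the key and the permutation table are copied from the JS:

```python
# Constants copied from the JavaScript above
XOR_KEY = "3000176000856006061501533003690027800375"
PERMUTATION = [15, 35, 29, 24, 33, 16, 1, 38, 10, 9, 19, 31, 40, 27, 22, 23, 25, 13, 6, 11,
               39, 18, 20, 8, 14, 21, 32, 26, 2, 30, 7, 4, 17, 5, 3, 28, 34, 37, 12, 36]


def unsbox(value: str) -> str:
    """Output slot j receives the character at 1-based position PERMUTATION[j].

    Positions beyond the end of the input are skipped, matching the holes
    that Array.join() renders as empty strings in the JS version.
    """
    return "".join(value[pos - 1] for pos in PERMUTATION if pos <= len(value))


def hex_xor(left: str, right: str) -> str:
    """XOR two hex strings byte by byte, stopping at the shorter one."""
    out = []
    for i in range(0, min(len(left), len(right)), 2):
        byte = int(left[i:i + 2], 16) ^ int(right[i:i + 2], 16)
        out.append(format(byte, "02x"))  # always two lowercase hex digits
    return "".join(out)


def make_acw_sc_v2(arg1: str) -> str:
    """Permute arg1, then XOR it with the fixed key."""
    return hex_xor(unsbox(arg1), XOR_KEY)


# Sample arg1 taken from the commented-out line in the JS
sample_arg1 = "FAA6CB46CF724D58FF82E5310687947623413114"
cookie_value = make_acw_sc_v2(sample_arg1)
print(cookie_value)
```

Unlike the JS, `int(..., 16)` raises `ValueError` on non-hex input instead of producing `NaN`, which is usually the more useful failure mode in a crawler.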

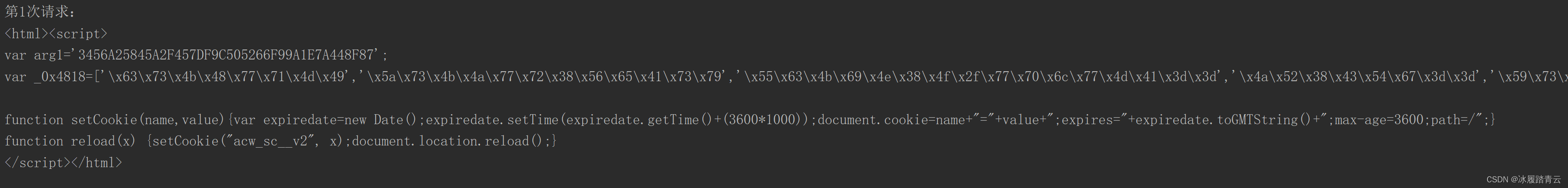

Python call test

# -*- coding: UTF-8 -*-
import re
import time
import uuid

import execjs
import requests

headers = {
    'Accept': 'application/json, text/plain, */*',
    'Accept-Language': 'zh',
    'Cache-Control': 'no-cache',
    'Connection': 'keep-alive',
    'From-Domain': '51job_web',
    'Pragma': 'no-cache',
    'Referer': 'https://we.51job.com/pc/search?keyword=java&searchType=2&sortType=0&metro=',
    'Sec-Fetch-Dest': 'empty',
    'Sec-Fetch-Mode': 'cors',
    'Sec-Fetch-Site': 'same-origin',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/114.0.0.0 Safari/537.36',
    'account-id': '',
    'partner': '',
    'property': '%7B%22partner%22%3A%22%22%2C%22webId%22%3A2%2C%22fromdomain%22%3A%2251job_web%22%2C%22frompageUrl%22%3A%22https%3A%2F%2Fwe.51job.com%2F%22%2C%22pageUrl%22%3A%22https%3A%2F%2Fwe.51job.com%2Fpc%2Fsearch%3Fkeyword%3Djava%26searchType%3D2%26sortType%3D0%26metro%3D%22%2C%22identityType%22%3A%22%22%2C%22userType%22%3A%22%22%2C%22isLogin%22%3A%22%E5%90%A6%22%2C%22accountid%22%3A%22%22%7D',
    'sec-ch-ua': '"Not.A/Brand";v="8", "Chromium";v="114", "Google Chrome";v="114"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'sign': '839932c059141791d8a003f0e6652e14facbf788a502df374fecf9c107d93b9e',
    'user-token': '',
    'uuid': '1687228791235576552',
}

params = {
    'api_key': '51job',
    'timestamp': '1687228791',
    'keyword': 'java',
    'searchType': '2',
    'function': '',
    'industry': '',
    'jobArea': '000000',
    'jobArea2': '',
    'landmark': '',
    'metro': '',
    'salary': '',
    'workYear': '',
    'degree': '',
    'companyType': '',
    'companySize': '',
    'jobType': '',
    'issueDate': '',
    'sortType': '0',
    'pageNum': '1',
    'requestId': '',
    'pageSize': '20',
    'source': '1',
    'accountId': '',
    'pageCode': 'sou|sou|soulb',
}

# Replace with your own proxy, or go without one; a single IP is probably rate-limited
proxies = {
    "http": "http://xxx",
    "https": "http://xxxx"
}

for i in range(1, 3):
    try:
        # cookie = {'guid': 'd02dfbabd84858301947663946e1710f'}
        session = requests.session()
        print("Request #%s:" % i)
        # First request: expected to return the challenge page that embeds arg1
        response = session.get('https://we.51job.com/api/job/search-pc', params=params, proxies=proxies, headers=headers)
        print(response.text[:300])
        # Raises IndexError (caught below) if the response contains no arg1
        arg1 = re.findall("arg1='(.*?)';", response.text, re.S)[0]
        print('arg1--->', arg1)
        # Random 32-character hex string used as the guid cookie
        guid = str(uuid.uuid4()).replace("-", "")
        cookie = {'guid': str(guid)}
        # 04.js holds the acw_sc__v2 generation script shown above
        with open('04.js', 'r', encoding='utf-8') as f:
            js = f.read()
        acw_sc__v2 = execjs.compile(js).call('l', arg1)
        print('acw_sc__v2-->', acw_sc__v2)
        cookie.update({"acw_sc__v2": acw_sc__v2})
        # cookie.update({"acw_sc__v3": "649257ebe376df87b3db6a94c1e5ad37f42f783b"})
        # Second request: send acw_sc__v2 and collect any cookies the server sets in return
        response2 = session.get('https://we.51job.com/api/job/search-pc', params=params, headers=headers, proxies=proxies, cookies=cookie)
        cookie.update(response2.cookies.get_dict())
        # Third request: fetch the data with the merged cookies
        response = session.get('https://we.51job.com/api/job/search-pc', params=params, headers=headers, proxies=proxies, cookies=cookie)
        print(response.text)
        time.sleep(0.5)
    except Exception as e:
        print(e)

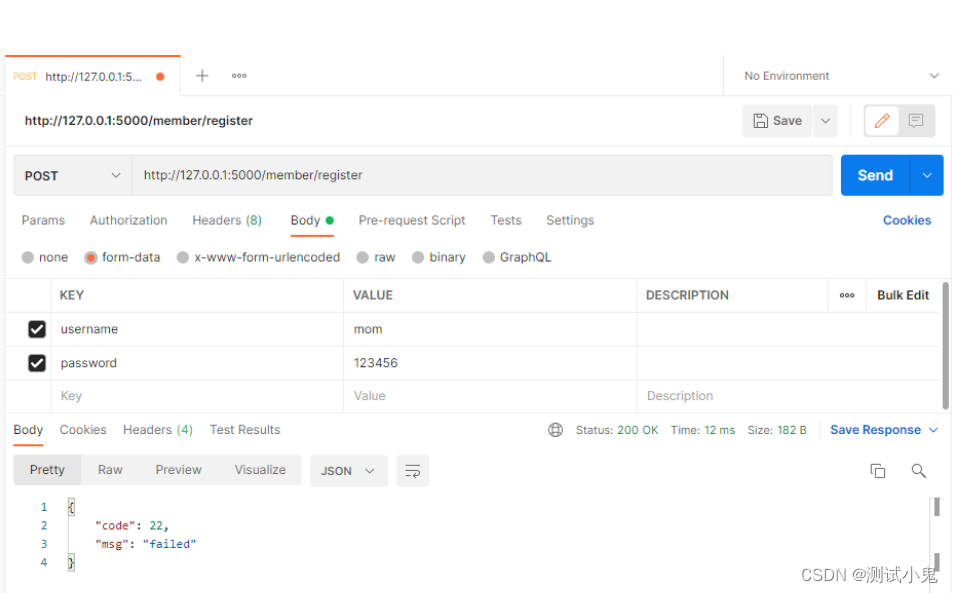

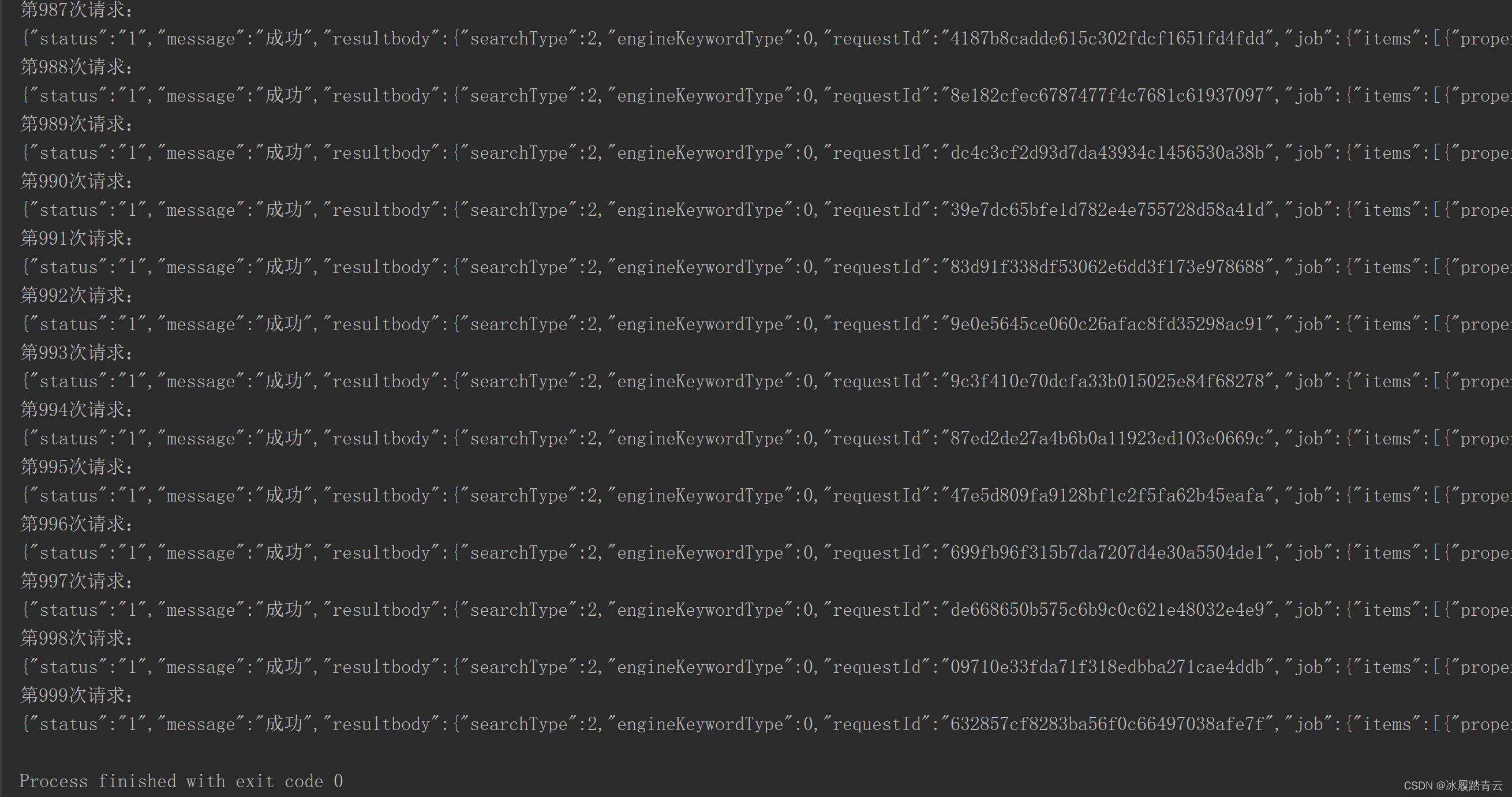

Test results:

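Side note: a brief look at risk control

I tested the risk control a little. With a single IP, sending too many requests with acw_sc__v2 triggers a slider CAPTCHA.

Passing the slider generates acw_sc__v3. With acw_sc__v3 carried in the cookie and still a single IP, 1000 requests went through successfully, but starting the crawler again after that returned a 405 response:

Opening the browser and refreshing then shows this:

In this situation the IP has been banned. After switching the browser to a different IP, the site was accessible again, which confirms that the restriction is on the IP.

Conclusion: for high request volumes, you still need to pile on IPs [doge, just kidding].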
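A rough sketch of what "piling on IPs" could look like: cycle through a proxy pool and move on whenever the server answers 405, the status observed above once an IP is banned. Everything here is an assumption for illustration: the proxy addresses are placeholders, and `fetch` stands in for whatever function sends the real request through a given proxy and returns the HTTP status code.

```python
import itertools
from typing import Callable, Optional, Sequence


def fetch_with_rotation(
    fetch: Callable[[str], int],
    proxy_pool: Sequence[str],
    max_attempts: int = 5,
) -> Optional[str]:
    """Try proxies round-robin until one is not rejected with HTTP 405.

    Returns the proxy that worked, or None if every attempt was rejected.
    """
    for attempt, proxy in enumerate(itertools.cycle(proxy_pool)):
        if attempt >= max_attempts:
            break
        if fetch(proxy) != 405:
            return proxy
    return None


# Hypothetical pool and a fake fetch that simulates one banned proxy
pool = ["http://proxy-a:8000", "http://proxy-b:8000"]
banned = {"http://proxy-a:8000"}


def fake_fetch(proxy: str) -> int:
    return 405 if proxy in banned else 200


working = fetch_with_rotation(fake_fetch, pool)
print(working)
```

In a real crawler, `fetch` would wrap the `session.get(...)` call from the test script and pass the proxy through the `proxies` argument; capping the attempts keeps a fully banned pool from looping forever.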

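Recommended earlier reverse-engineering articles

Reverse-engineering Bilibili w_rid

The latest X-s of a certain "Shu" app (updated 2023/5/23)

JS reverse engineering: Toutiao signature

JS reverse engineering: Douyin __ac_signature

JS reverse engineering: Taobao sign

JS reverse engineering: Zhihu jsvmp algorithm

JS reverse engineering: Endata (艺恩数据)

JS reverse engineering: NetEase Cloud Music

JS reverse engineering: Ocean Engine Xingtu (巨量星图) sign signature

JS reverse engineering: Ocean Engine Creative (巨量创意) signature

JS reverse engineering: Ocean Engine Insights (巨量算数) signature and data decryption

JS reverse engineering: Hanghangcha (行行查) data decryption

JS reverse engineering: Baidu Translate

JS reverse-engineering analysis: Youdao Translate

JS reverse engineering: Qiming Technology (企名科技)

JS reverse engineering: population mobility trends

JS reverse engineering series: Yuanrenxue crawler challenge, problem 1

JS reverse engineering series: Yuanrenxue crawler challenge, problem 2

JS reverse engineering series: Yuanrenxue crawler challenge, problem 3

JS reverse engineering series: Yuanrenxue crawler challenge, problem 4

JS reverse engineering series: Yuanrenxue crawler challenge, problem 5

JS reverse engineering series: Yuanrenxue crawler challenge, problem 6

JS reverse engineering series: Yuanrenxue crawler challenge, problem 7 (dynamic fonts, drifting with the wind)

JS reverse engineering series: Yuanrenxue crawler challenge, problem 12

JS reverse engineering series: Yuanrenxue crawler challenge, problem 13

JS reverse engineering series: Yuanrenxue crawler challenge, problem 15 (thorough preparation breeds complacency; the familiar arouses no suspicion)

JS reverse engineering series: Yuanrenxue crawler challenge, problem 17

JS reverse engineering: Yuanrenxue crawler challenge, problem 19 (Ura)

JS reverse engineering: Wangluozhe (网洛者) anti-crawler practice platform, problem 4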