Original link: Stable Diffusion: High-Resolution Image Synthesis with Latent Diffusion Models

High-Resolution Image Synthesis with Latent Diffusion Models

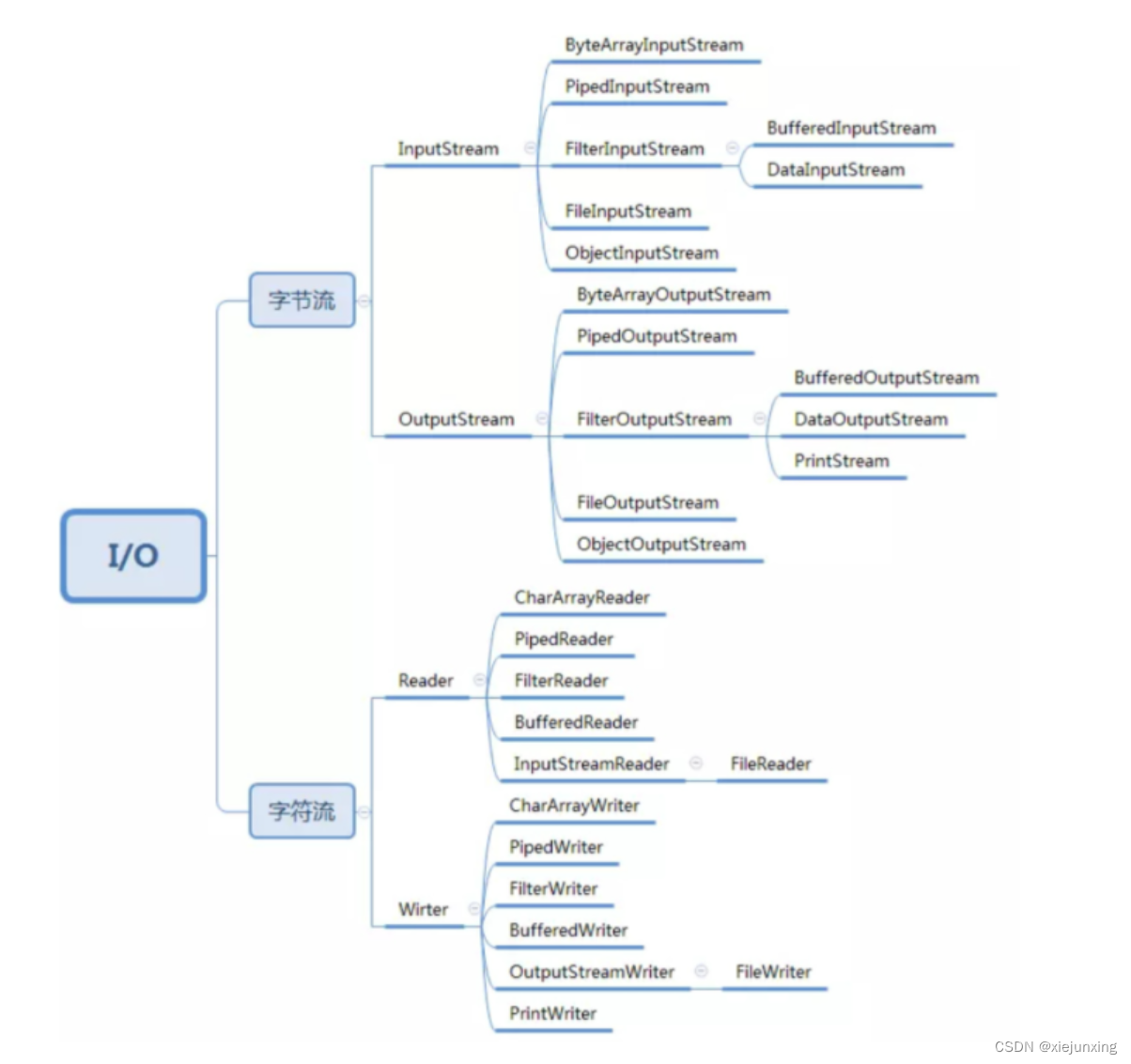

- 01 The shortcomings of the existing works?

- 02 What problem is addressed?

- 03 What are the keys to the solutions?

- 04 What are the main contributions?

- 05 Related works?

- 06 Method descriptions

- Perceptual Image Compression

- Latent Diffusion Models

- Conditioning Mechanisms

- 07 Results and Comparisons

- Image Generation with Latent Diffusion

- Conditional Latent Diffusion

- Transformer Encoders for LDMs

- Convolutional Sampling Beyond $256^2$

- Super-Resolution with Latent Diffusion

- Inpainting with Latent Diffusion

- 08 Ablation studies

- On Perceptual Compression Tradeoffs

- 09 How this work can be improved?

- 10 Conclusions

01 The shortcomings of the existing works?

- Since diffusion models (DMs) typically operate directly in pixel space, optimizing powerful DMs often consumes hundreds of GPU days, and inference is expensive due to sequential evaluations.

02 What problem is addressed?

- Reach a near-optimal point between complexity reduction and detail preservation, greatly boosting visual fidelity.

- LDMs achieve new state-of-the-art scores for image inpainting and class-conditional image synthesis, and highly competitive performance on various tasks, including unconditional image generation, text-to-image synthesis, and super-resolution, while significantly reducing computational requirements compared to pixel-based DMs.

03 What are the keys to the solutions?

- By introducing cross-attention layers into the model architecture, we turn diffusion models into powerful and flexible generators for general conditioning inputs such as text or bounding boxes and high-resolution synthesis becomes possible in a convolutional manner.

- First, we train an autoencoder which provides a lower-dimensional (and thereby efficient) representational space which is perceptually equivalent to the data space.

- For conditioning, we design an architecture that connects transformers to the DM’s UNet backbone [69] and enables arbitrary types of token-based conditioning mechanisms.

04 What are the main contributions?

- In contrast to purely transformer-based approaches [23, 64], our method scales more gracefully to higher-dimensional data and can thus (a) work on a compression level that provides more faithful and detailed reconstructions than previous work and (b) be efficiently applied to high-resolution synthesis of megapixel images.

- We achieve competitive performance on multiple tasks (unconditional image synthesis, inpainting, stochastic super-resolution) and datasets while significantly lowering computational costs.

- Our approach does not require a delicate weighting of reconstruction and generative abilities. This ensures extremely faithful reconstructions and requires very little regularization of the latent space.

- We find that for densely conditioned tasks such as super-resolution, inpainting and semantic synthesis, our model can be applied in a convolutional fashion and render large, consistent images of $\sim 1024^2$ px.

- We design a general-purpose conditioning mechanism based on cross-attention, enabling multi-modal training. We use it to train class-conditional, text-to-image and layout-to-image models.

- We release pretrained latent diffusion and autoencoding models at https://github.com/CompVis/latent-diffusion, which might be reusable for various tasks besides training of DMs.

05 Related works?

- Generative Models for Image Synthesis

- Diffusion Probabilistic Models (DM)

- Two-Stage Image Synthesis

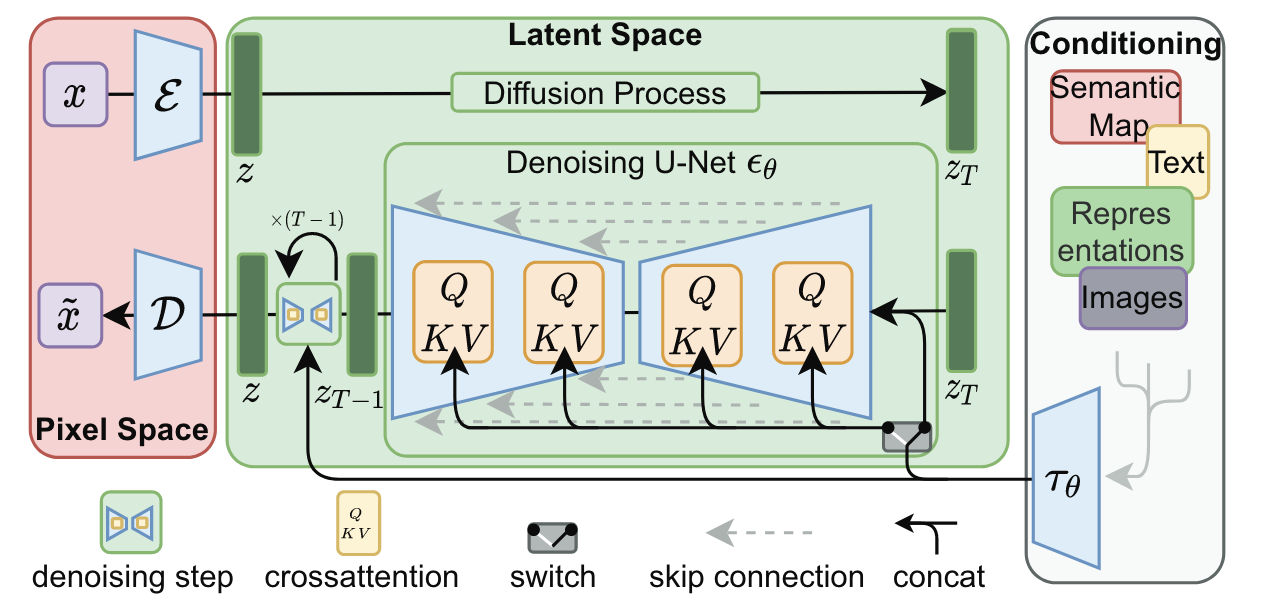

06 Method descriptions

We utilize an autoencoding model which learns a space that is perceptually equivalent to the image space, but offers significantly reduced computational complexity.

Such an approach offers several advantages:

- By leaving the high-dimensional image space, we obtain DMs which are computationally much more efficient because sampling is performed on a low-dimensional space.

- We exploit the inductive bias of DMs inherited from their UNet architecture [69], which makes them particularly effective for data with spatial structure and therefore alleviates the need for aggressive, quality-reducing compression levels as required by previous approaches [23, 64].

- Finally, we obtain general-purpose compression models whose latent space can be used to train multiple generative models and which can also be utilized for other downstream applications such as single-image CLIP-guided synthesis [25].

Perceptual Image Compression

Given an image $x \in \mathbb{R}^{H \times W \times 3}$ in RGB space, the encoder $\mathcal{E}$ encodes $x$ into a latent representation $z = \mathcal{E}(x)$, and the decoder $\mathcal{D}$ reconstructs the image from the latent, giving $\tilde{x} = \mathcal{D}(z) = \mathcal{D}(\mathcal{E}(x))$, where $z \in \mathbb{R}^{h \times w \times c}$.
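To make the shapes concrete, here is a minimal sketch of how the latent size follows from the image size. It assumes the encoder shrinks each spatial side by a downsampling factor `f = H/h = W/w` (the same `f` varied in the ablation section); the function names and the channel count `c = 4` are illustrative choices, not values taken from these notes.

```python
def latent_shape(height, width, f, c):
    """Return (h, w, c) for an encoder that downsamples each side by factor f."""
    if height % f or width % f:
        raise ValueError("height and width must be divisible by f")
    return height // f, width // f, c


def compression_ratio(height, width, f, c):
    """Ratio of RGB pixel values (H*W*3) to latent values (h*w*c)."""
    h, w, c = latent_shape(height, width, f, c)
    return (height * width * 3) / (h * w * c)


# c = 4 is an assumed, illustrative channel count.
for f in (1, 2, 4, 8, 16, 32):
    print(f, latent_shape(256, 256, f, 4), compression_ratio(256, 256, f, 4))
```

The number of spatial positions the diffusion model has to process drops by a factor of `f * f`, which is where the computational savings come from.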

Latent Diffusion Models

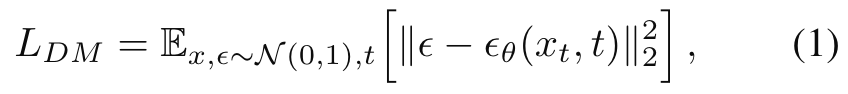

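The corresponding objective in DM can be simplified to:

$$L_{DM} = \mathbb{E}_{x,\, \epsilon \sim \mathcal{N}(0,1),\, t}\left[ \| \epsilon - \epsilon_\theta(x_t, t) \|_2^2 \right]$$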

Compared to the high-dimensional pixel space, latent space is more suitable for likelihood-based generative models, as they can now (i) focus on the important, semantic bits of the data and (ii) train in a lower dimensional, computationally much more efficient space.

Since the forward process is fixed, $z_t$ can be efficiently obtained from $\mathcal{E}$ during training, and samples from $p(z)$ can be decoded to image space with a single pass through $\mathcal{D}$.
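The training step in latent space can be sketched as follows. This is a minimal, hedged illustration rather than the authors' implementation: it assumes a noise-prediction objective of the form $\| \epsilon - \epsilon_\theta(z_t, t) \|_2^2$, a linear beta schedule with DDPM-style default endpoints, and a hypothetical `zero_model` standing in for the UNet. The latent `z0` is a made-up flattened vector playing the role of $z = \mathcal{E}(x)$.

```python
import math
import random


def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(z0, eps, alpha_bar_t):
    """Closed-form forward process: z_t = sqrt(a) * z0 + sqrt(1 - a) * eps."""
    a, b = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [a * z + b * e for z, e in zip(z0, eps)]


def ldm_loss(z0, eps_model, alpha_bars, rng):
    """One Monte Carlo sample of the squared noise-prediction error (mean over elements)."""
    t = rng.randrange(len(alpha_bars))
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    z_t = add_noise(z0, eps, alpha_bars[t])
    pred = eps_model(z_t, t)
    return sum((e - p) ** 2 for e, p in zip(eps, pred)) / len(z0)


def zero_model(z_t, t):
    """Hypothetical stand-in for the UNet: always predicts zero noise."""
    return [0.0] * len(z_t)


rng = random.Random(0)
z0 = [0.5, -1.0, 0.25, 2.0]  # made-up flattened latent, standing in for E(x)
alpha_bars = alpha_bar_schedule(1000)
print(ldm_loss(z0, zero_model, alpha_bars, rng))
```

Because `add_noise` is a closed-form expression, the encoder only has to run once per training image; no sequential simulation of the forward chain is needed.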

Conditioning Mechanisms

We turn DMs into more flexible conditional image generators by augmenting their underlying UNet backbone with the cross-attention mechanism [94], which is effective for learning attention-based models of various input modalities [34,35].

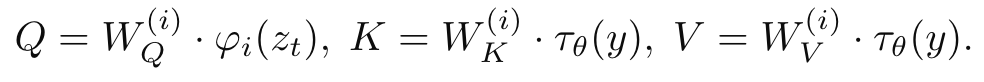

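To pre-process $y$ from various modalities (such as language prompts), we introduce a domain-specific encoder $\tau_\theta$ that projects $y$ to an intermediate representation $\tau_\theta(y) \in \mathbb{R}^{M \times d_\tau}$, which is then mapped to the intermediate layers of the UNet via a cross-attention layer implementing $\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d}}\right) \cdot V$, with:

$$Q = W_Q^{(i)} \cdot \varphi_i(z_t), \quad K = W_K^{(i)} \cdot \tau_\theta(y), \quad V = W_V^{(i)} \cdot \tau_\theta(y)$$

Here $\varphi_i(z_t)$ denotes a (flattened) intermediate representation of the UNet implementing $\epsilon_\theta$, and $W_Q^{(i)}$, $W_K^{(i)}$, $W_V^{(i)}$ are learnable projection matrices.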

See Fig. 3 for a visual depiction.

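Based on image-conditioning pairs, we then learn the conditional LDM via:

$$L_{LDM} := \mathbb{E}_{\mathcal{E}(x),\, y,\, \epsilon \sim \mathcal{N}(0,1),\, t}\left[ \| \epsilon - \epsilon_\theta(z_t, t, \tau_\theta(y)) \|_2^2 \right]$$

where both $\tau_\theta$ and $\epsilon_\theta$ are jointly optimized.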

This conditioning mechanism is flexible as $\tau_\theta$ can be parameterized with domain-specific experts, e.g. (unmasked) transformers [94] when $y$ are text prompts.
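A single-head cross-attention layer of this kind can be sketched in plain Python. The sketch assumes, following the paper, that queries are projected from the flattened UNet features and that keys and values are projected from the conditioning tokens $\tau_\theta(y)$. It uses a row-vector convention (features times weight matrix), and all sizes, helper names, and random weights are illustrative assumptions.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    """Gaussian random matrix, used here as stand-in data and weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def cross_attention(phi, cond, w_q, w_k, w_v):
    """phi: N x d_eps UNet features; cond: M x d_tau tokens from tau_theta(y)."""
    q = matmul(phi, w_q)   # N x d, queries come from the image latent
    k = matmul(cond, w_k)  # M x d, keys come from the conditioning
    v = matmul(cond, w_v)  # M x d, values come from the conditioning
    d = len(q[0])
    k_t = [list(col) for col in zip(*k)]  # d x M
    scores = matmul(q, k_t)               # N x M
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v), weights


rng = random.Random(0)
n, d_eps, m, d_tau, d = 6, 8, 3, 5, 4  # illustrative sizes only
phi = random_matrix(n, d_eps, rng)
cond = random_matrix(m, d_tau, rng)
out, weights = cross_attention(
    phi, cond,
    random_matrix(d_eps, d, rng),
    random_matrix(d_tau, d, rng),
    random_matrix(d_tau, d, rng),
)
print(len(out), len(out[0]))  # N rows, d columns
```

Each of the `N` spatial positions receives its own weighted mixture of the `M` conditioning tokens, so the output keeps the spatial layout of the UNet features regardless of how long the conditioning sequence is.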

07 Results and Comparisons

Experimental findings:

- LDMs trained in VQ-regularized latent spaces achieve better sample quality, even though the reconstruction capabilities of VQ-regularized first stage models slightly fall behind those of their continuous counterparts, cf. Tab. 8. Therefore, we evaluate VQ-regularized LDMs in the remainder of the paper, unless stated differently.

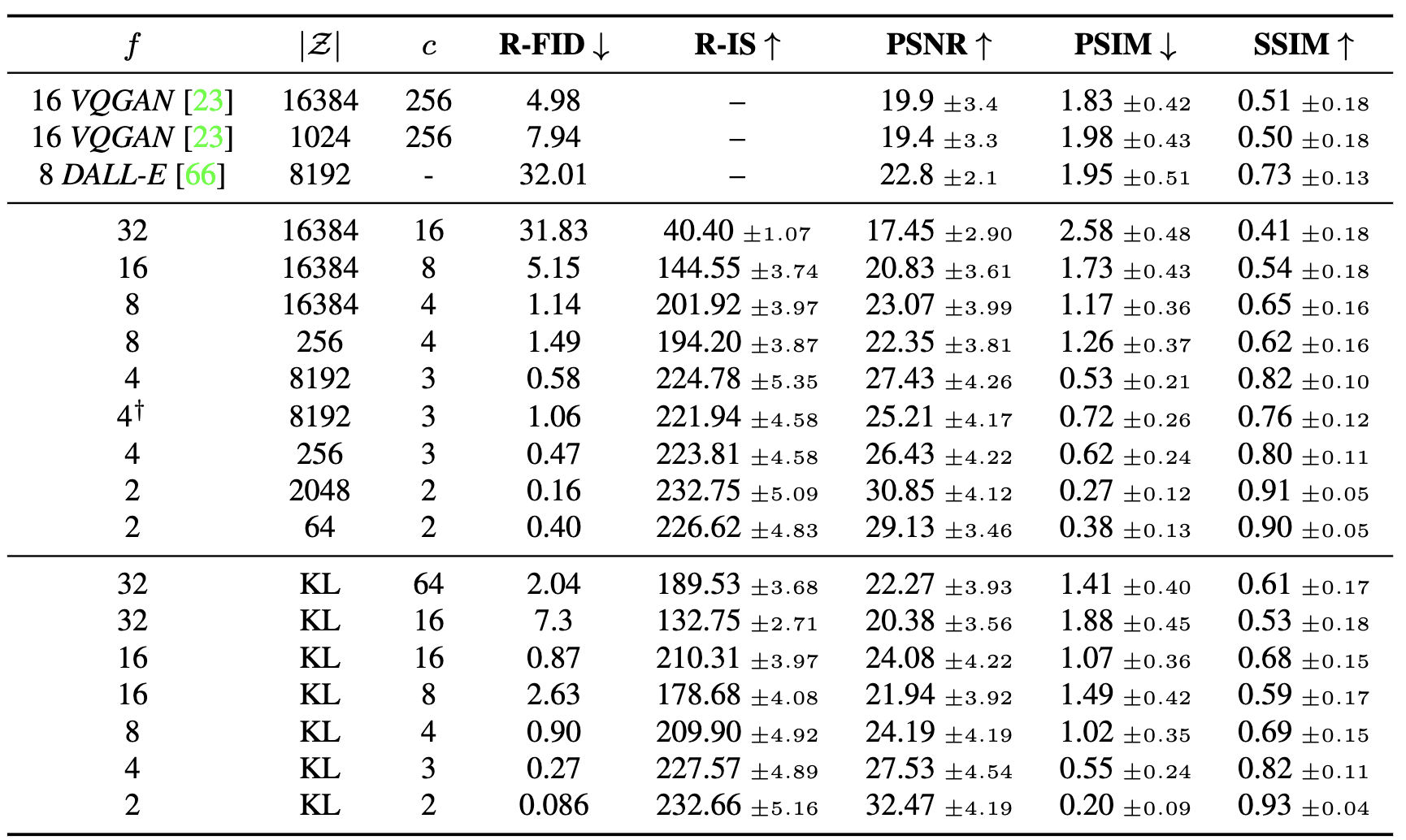

Image Generation with Latent Diffusion

- On CelebA-HQ, we report a new state-of-the-art FID of 5.11, outperforming previous likelihood-based models as well as GANs. We also outperform LSGM [93] where a latent diffusion model is trained jointly together with the first stage.

- We outperform prior diffusion-based approaches on all but the LSUN-Bedrooms dataset, where our score is close to ADM [15], despite utilizing half its parameters and requiring 4 times fewer training resources.

- LDMs consistently improve upon GAN-based methods in Precision and Recall, thus confirming the advantages of their mode-covering likelihood-based training objective over adversarial approaches.

In Fig. 4 we also show qualitative results on each dataset.

![Figure 4. Samples from LDMs trained on CelebAHQ [39], FFHQ [41], LSUN-Churches [102], LSUN-Bedrooms [102] and class-conditional ImageNet [12]](https://img-blog.csdnimg.cn/img_convert/ecdffbadb80145e65c30fc68988f70a7.png)

Conditional Latent Diffusion

Transformer Encoders for LDMs

For quantitative analysis, we follow prior work and evaluate text-to-image generation on the MS-COCO [51] validation set, where our model improves upon powerful AR [17, 66] and GAN-based [109] methods, cf. Tab. 2.

![Table 2. Evaluation of text-conditional image synthesis on the 256 × 256-sized MS-COCO [51] dataset](https://img-blog.csdnimg.cn/img_convert/12c06193207d745ed87d4d069f76dce2.png)

![Table 3. Comparison of a class-conditional ImageNet LDM with recent state-of-the-art methods for class-conditional image generation on ImageNet [12]](https://img-blog.csdnimg.cn/img_convert/f8789381561c757e523fae4703b628c1.png)

Convolutional Sampling Beyond $256^2$

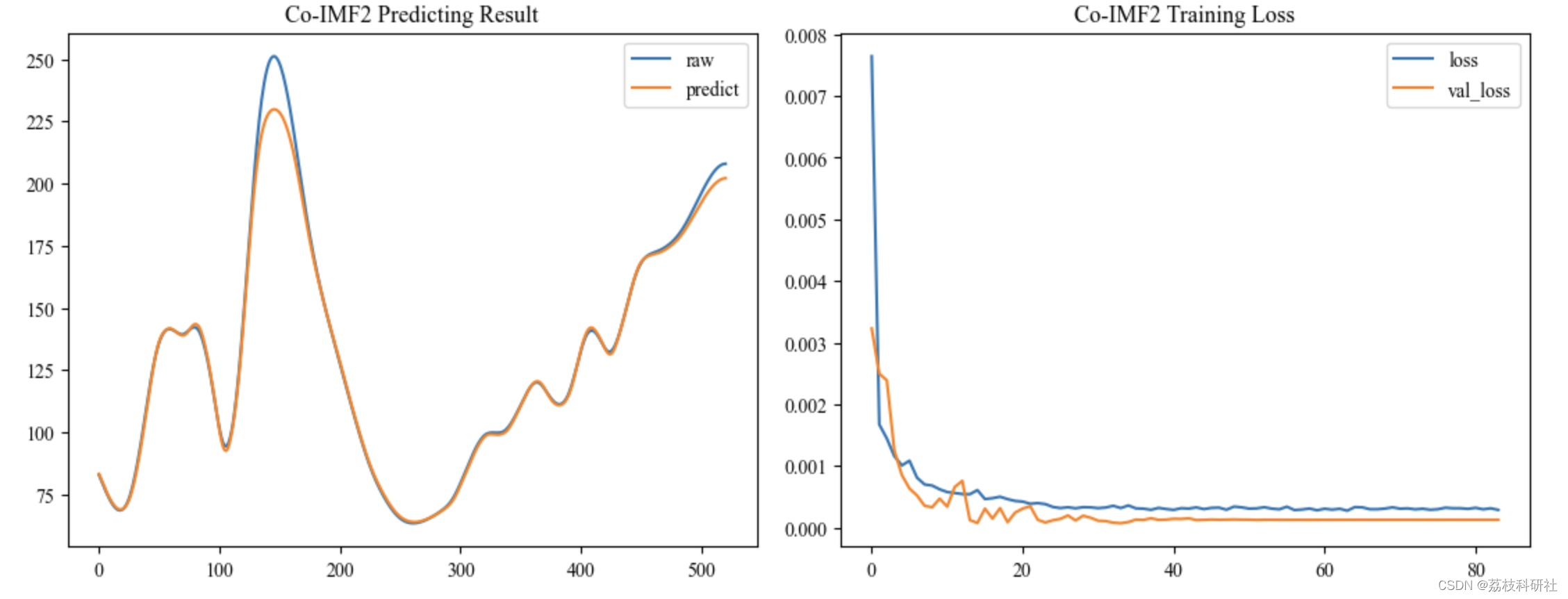

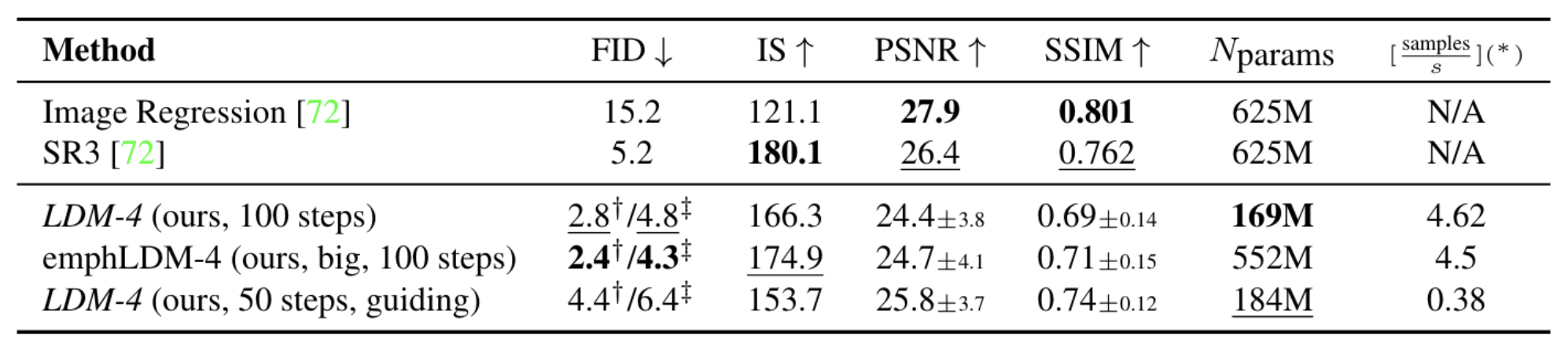

Super-Resolution with Latent Diffusion

Our qualitative and quantitative results (see Fig. 10 and Tab. 5) show competitive performance: LDM-SR outperforms SR3 in FID, while SR3 has a better IS.

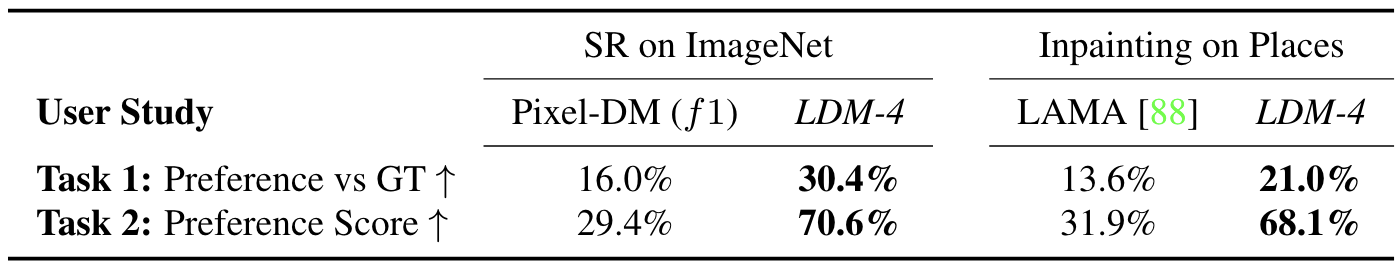

Further, we conduct a user study comparing the pixel-baseline with LDM-SR. The results in Tab. 4 affirm the good performance of LDM-SR. PSNR and SSIM can be pushed by using a post-hoc guiding mechanism [15] and we implement this image-based guider via a perceptual loss.

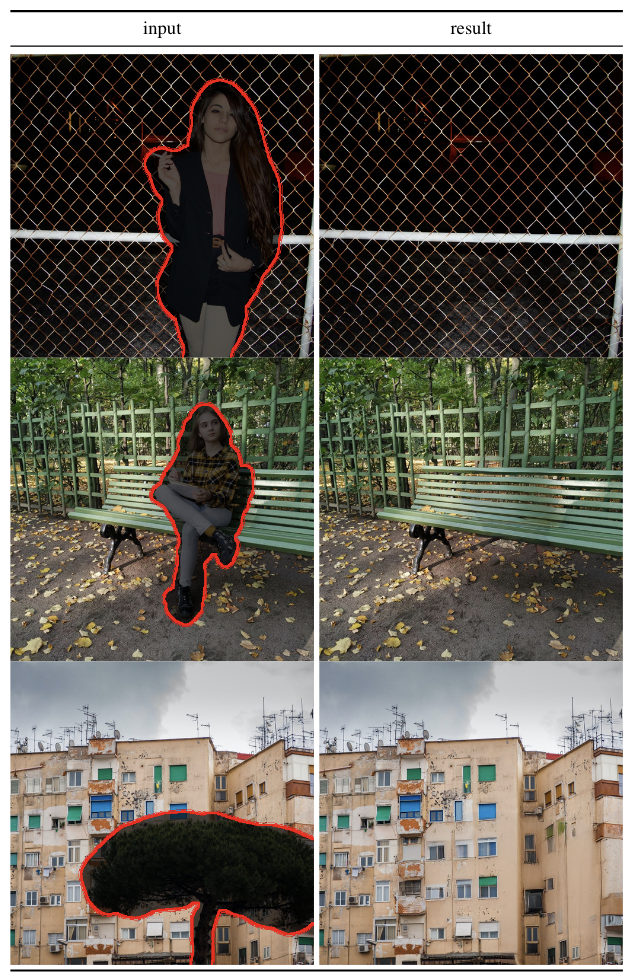

Inpainting with Latent Diffusion

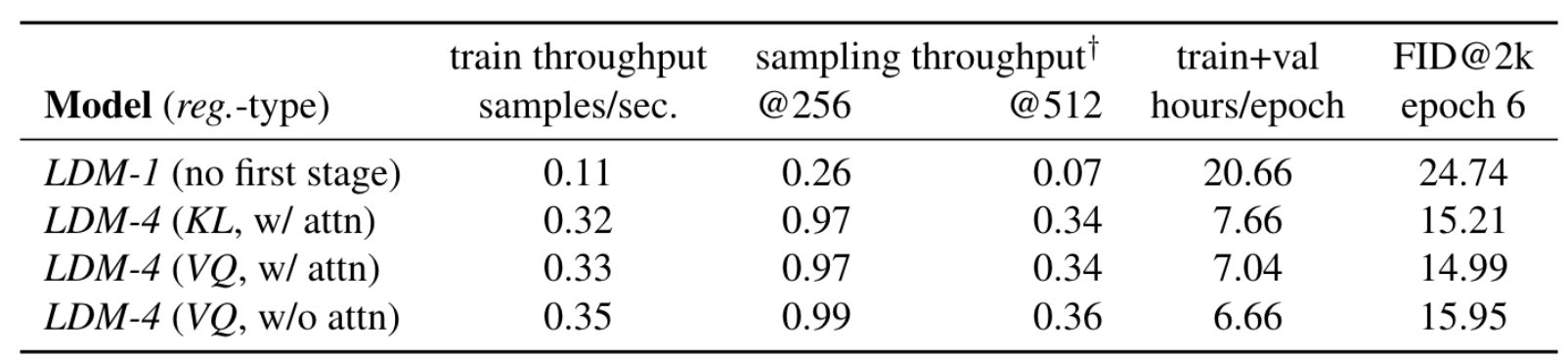

In particular, we compare the inpainting efficiency of LDM-1 (i.e. a pixel-based conditional DM) with LDM-4, for both KL and VQ regularizations, as well as VQ-LDM-4 without any attention in the first stage (see Tab. 8), where the latter reduces GPU memory for decoding at high resolutions.

Tab. 6 reports the training and sampling throughput at resolution $256^2$ and $512^2$, the total training time in hours per epoch and the FID score on the validation split after six epochs.

The comparison with other inpainting approaches in Tab. 7 shows that our model with attention improves the overall image quality as measured by FID over that of [88].

![Table 7. Comparison of inpainting performance on 30k crops of size 512 × 512 from test images of Places [108]](https://img-blog.csdnimg.cn/img_convert/31fd2221f838743532941177e6017169.png)

08 Ablation studies

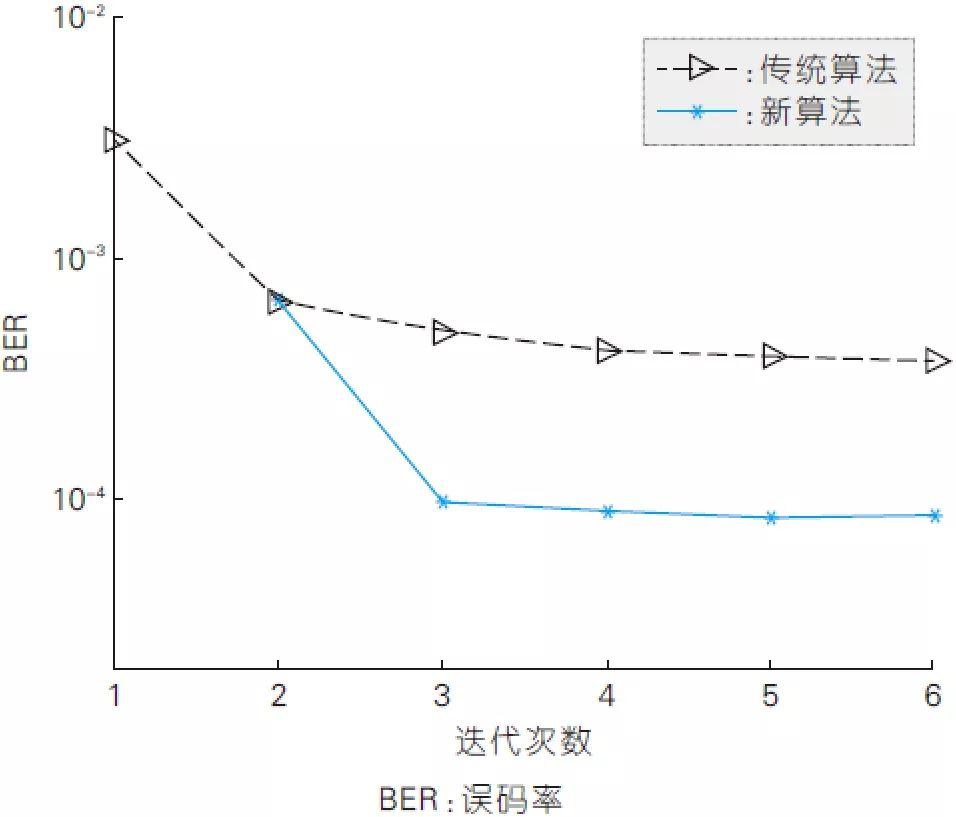

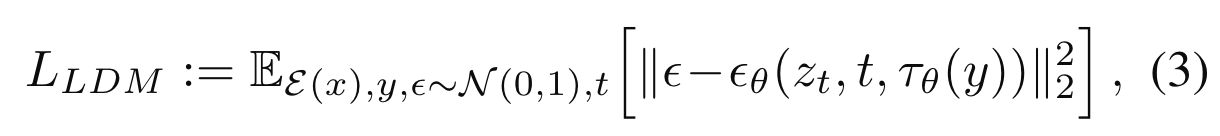

On Perceptual Compression Tradeoffs

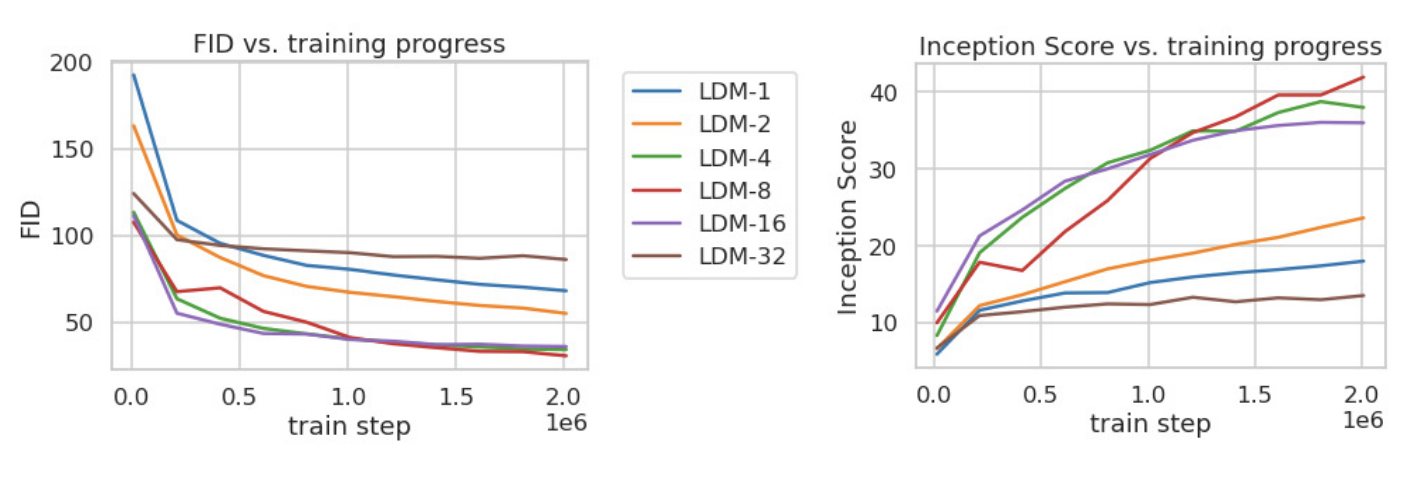

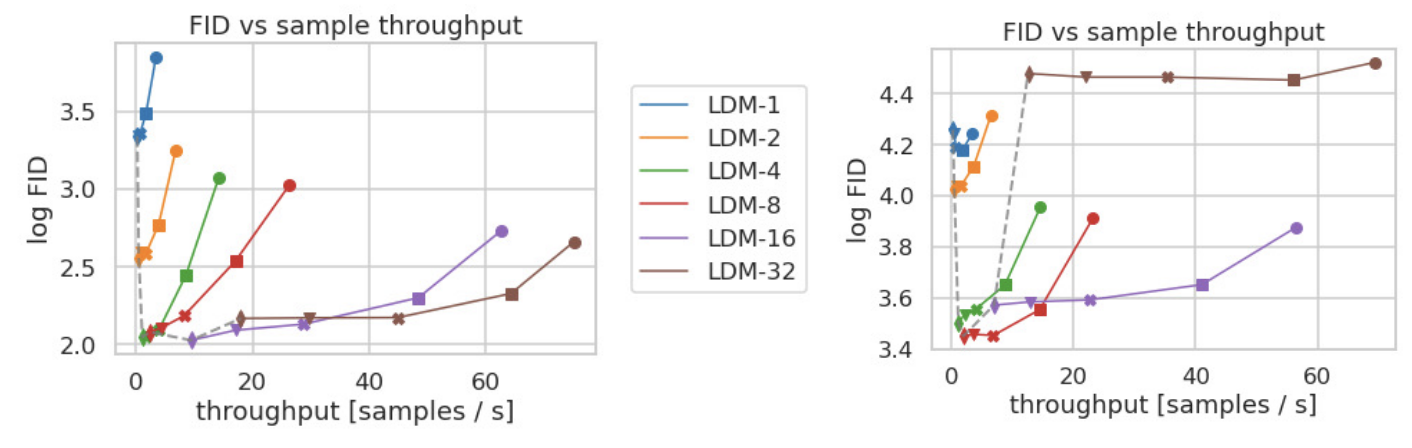

This section analyzes the behavior of our LDMs with different downsampling factors $f \in \{1, 2, 4, 8, 16, 32\}$.

Tab. 8 shows hyperparameters and reconstruction performance of the first stage models used for the LDMs compared in this section.

Fig. 6 shows sample quality as a function of training progress for 2M steps of class-conditional models on the ImageNet [12] dataset.

In Fig. 7, we compare models trained on CelebAHQ [39] and ImageNet in terms of sampling speed for different numbers of denoising steps with the DDIM sampler [84] and plot it against FID scores [29].
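For readers unfamiliar with how the number of denoising steps becomes a tunable knob, the following is a minimal sketch of a deterministic DDIM update (the $\eta = 0$ case), written for flattened latents as plain lists. It is an illustration under stated assumptions, not the code used for Fig. 7: the function names, the evenly spaced timestep selection, and the example values are all hypothetical.

```python
import math


def ddim_step(z_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0) from step t to an earlier step."""
    sqrt_a_t = math.sqrt(alpha_bar_t)
    sqrt_one_minus_a_t = math.sqrt(1.0 - alpha_bar_t)
    # Estimate of the clean latent implied by the predicted noise.
    z0_pred = [(z - sqrt_one_minus_a_t * e) / sqrt_a_t for z, e in zip(z_t, eps_pred)]
    sqrt_a_prev = math.sqrt(alpha_bar_prev)
    sqrt_one_minus_a_prev = math.sqrt(1.0 - alpha_bar_prev)
    # Re-noise the estimate to the noise level of the earlier step.
    return [sqrt_a_prev * z0 + sqrt_one_minus_a_prev * e
            for z0, e in zip(z0_pred, eps_pred)]


def timestep_subsequence(num_train_steps, num_sample_steps):
    """Evenly spaced subset of training timesteps, in descending order."""
    stride = num_train_steps // num_sample_steps
    return list(range(0, num_train_steps, stride))[:num_sample_steps][::-1]


# Example: sample with 50 network evaluations instead of 1000.
steps = timestep_subsequence(1000, 50)
print(len(steps), steps[:3])
```

Each call to `ddim_step` costs one evaluation of the noise-prediction network, so sampling time scales roughly linearly with the length of the timestep subsequence; fewer steps trade sample quality for speed.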

Complex datasets such as ImageNet require reduced compression rates to avoid reducing quality. In summary, LDM-4 and -8 offer the best conditions for achieving high-quality synthesis results.

09 How this work can be improved?

- While LDMs significantly reduce computational requirements compared to pixel-based approaches, their sequential sampling process is still slower than that of GANs.

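- The use of LDMs can be questionable when high precision is required: the reconstruction capability of the autoencoder can become a bottleneck for tasks that need fine-grained accuracy in pixel space.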

10 Conclusions

- We have presented latent diffusion models, a simple and efficient way to significantly improve both the training and sampling efficiency of denoising diffusion models without degrading their quality.

- Based on this and our cross-attention conditioning mechanism, our experiments could demonstrate favorable results compared to state-of-the-art methods across a wide range of conditional image synthesis tasks without task-specific architectures.
