(1) Base environment

Virtualization platform: VMware Workstation 12

Operating system: Red Hat Enterprise Linux 6.4, 64-bit

Database version: 11.2.0.4

Last login: Fri Jun 16 18:40:20 2023 from 192.168.186.1

[root@rhel64 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 6.4 (Santiago)

[root@rhel64 ~]# lsb_release -a

LSB Version: :base-4.0-amd64:base-4.0-noarch:core-4.0-amd64:core-4.0-noarch:graphics-4.0-amd64:graphics-4.0-noarch:printing-4.0-amd64:printing-4.0-noarch

Distributor ID: RedHatEnterpriseServer

Description: Red Hat Enterprise Linux Server release 6.4 (Santiago)

Release: 6.4

Codename: Santiago

[root@rhel64 ~]# uname -r

2.6.32-358.el6.x86_64

[root@rhel64 ~]#

Set the hostname and edit the hosts file

[root@rhel64 ~]# vi /etc/sysconfig/network

[root@rhel64 ~]# vi /etc/hosts

[root@rac1 ~]# tail /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=rac1

GATEWAY=192.168.182.2

[root@rac1 ~]# more /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public

192.168.186.128 rac1

192.168.186.129 rac2

#vip

192.168.186.108 rac1-vip

192.168.186.109 rac2-vip

#private

192.168.182.145 rac1-priv

192.168.182.147 rac2-priv

#scan-ip

192.168.186.120 scan-ip
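
Both nodes need the same public, VIP, private, and SCAN entries in their hosts file. A minimal sketch for checking that, assuming a hypothetical helper named missing_host_entries (it is not part of any Oracle tooling) that scans a hosts-format file for the names listed above:

```shell
# Hypothetical helper: print every name from the argument list that has no
# entry in the given hosts-format file. Comment lines are ignored.
missing_host_entries() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # Fields 2..NF of a non-comment line are the names bound to the address.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }
        ' "$hosts_file"; then
            echo "$name"
        fi
    done
}

# Example: run on each node; no output means every name is present.
# missing_host_entries /etc/hosts rac1 rac2 rac1-vip rac2-vip rac1-priv rac2-priv scan-ip
```

The check only looks at the file contents; it does not prove that the addresses are reachable.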

Disable the firewall and SELinux

[root@rac1 ~]# setenforce 0

setenforce: SELinux is disabled

[root@rac1 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled

[root@rac1 ~]# service iptables stop

[root@rac1 ~]# chkconfig iptables off

[root@rhel64 ~]# more /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of these two values:

# targeted - Targeted processes are protected,

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

[root@rhel64 ~]# service iptables status

iptables: Firewall is not running.

[root@rhel64 ~]#
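
setenforce 0 only changes the running mode, while the SELINUX= line in the configuration file decides what happens after a reboot. The following is a small sketch for reading that persistent setting; the function name selinux_config_mode is an assumption made for illustration:

```shell
# Hypothetical helper: print the SELINUX= value from an SELinux config file.
# Comment lines such as "# SELINUX= can take ..." and the SELINUXTYPE= line
# do not match the pattern, so only the real setting is printed.
selinux_config_mode() {
    sed -n 's/^[[:space:]]*SELINUX=[[:space:]]*//p' "$1" | tail -n 1
}

# Example: compare the persistent setting on both nodes.
# selinux_config_mode /etc/sysconfig/selinux
```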

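Kernel parameter changes (apply on both nodes)

Edit /etc/sysctl.conf. The block below assigns kernel.shmmax and kernel.shmall twice; sysctl applies the file from top to bottom, so the later values (1306910720 and 2097152) are the ones that take effect. Run sysctl -p afterwards to load the new settings without a reboot.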

[root@rac2 ~]# cat <<EOF >> /etc/sysctl.conf

> # Append at the end of the file

>

> kernel.msgmnb = 65536

> kernel.msgmax = 65536

> kernel.shmmax = 68719476736

> kernel.shmall = 4294967296

> fs.aio-max-nr = 1048576

> fs.file-max = 6815744

> kernel.shmall = 2097152

> kernel.shmmax = 1306910720

> kernel.shmmni = 4096

> kernel.sem = 250 32000 100 128

> net.ipv4.ip_local_port_range = 9000 65500

> net.core.rmem_default = 262144

> net.core.rmem_max = 4194304

> net.core.wmem_default = 262144

> net.core.wmem_max = 1048586

> net.ipv4.tcp_wmem = 262144 262144 262144

> net.ipv4.tcp_rmem = 4194304 4194304 4194304

> EOF

[root@rac2 ~]# tail -n 20 /etc/sysctl.conf

kernel.shmall = 4294967296

# Append at the end of the file

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.shmall = 2097152

kernel.shmmax = 1306910720

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304

[root@rac2 ~]# tail /etc/sysctl.conf

kernel.shmmax = 1306910720

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304
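
Because the appended block sets kernel.shmmax and kernel.shmall more than once, it helps to see which value actually wins. A minimal sketch, assuming two hypothetical helper functions (effective_sysctl and duplicate_sysctl_keys) that only read the file and never change kernel settings:

```shell
# Hypothetical helper: print the value that wins for one key in a
# sysctl.conf-style file. Later assignments override earlier ones, so the
# last match is the effective value.
effective_sysctl() {
    conf=$1
    key=$2
    awk -F= -v k="$key" '
        /^[[:space:]]*#/ { next }
        {
            name = $1
            gsub(/[[:space:]]/, "", name)
            if (name == k) {
                val = $2
                sub(/^[[:space:]]+/, "", val)
                sub(/[[:space:]]+$/, "", val)
            }
        }
        END { if (val != "") print val }
    ' "$conf"
}

# Hypothetical helper: list the keys that are assigned more than once.
duplicate_sysctl_keys() {
    awk -F= '
        /^[[:space:]]*#/ || NF < 2 { next }
        { name = $1; gsub(/[[:space:]]/, "", name); count[name]++ }
        END { for (n in count) if (count[n] > 1) print n }
    ' "$1" | sort
}

# Example:
# duplicate_sysctl_keys /etc/sysctl.conf
# effective_sysctl /etc/sysctl.conf kernel.shmmax
```

The value the kernel is really using can still be confirmed with sysctl kernel.shmmax after running sysctl -p.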

Edit /etc/security/limits.conf

[root@rac1 ~]# cat <<EOF >>/etc/security/limits.conf

> grid soft nproc 2047

> grid hard nproc 16384

> grid soft nofile 1024

> grid hard nofile 65536

> oracle soft nproc 2047

> oracle hard nproc 16384

> oracle soft nofile 1024

> oracle hard nofile 65536

> EOF

[root@rac1 ~]# cat /etc/security/limits.conf
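
To confirm that the eight entries landed in the file, a small lookup can help. This is a sketch only; limits_value is a hypothetical helper that reads a limits.conf-style file and prints the value configured for a given user, type (soft or hard), and item:

```shell
# Hypothetical helper: print the value configured for USER TYPE ITEM in a
# limits.conf-style file. If the entry appears more than once, the last
# occurrence is printed. Comment lines are ignored.
limits_value() {
    awk -v user="$2" -v type="$3" -v item="$4" '
        /^[[:space:]]*#/ { next }
        $1 == user && $2 == type && $3 == item { v = $4 }
        END { if (v != "") print v }
    ' "$1"
}

# Example:
# limits_value /etc/security/limits.conf grid hard nofile
```

After logging in again as grid or oracle, ulimit -Sn and ulimit -Hn show the soft and hard open-file limits that were actually applied to the session.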

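Edit /etc/pam.d/login and add the line "session required pam_limits.so" at the end, so that the limits above are applied at login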

[root@rac1 ~]# vi /etc/pam.d/login

[root@rac1 ~]# more /etc/pam.d/login

#%PAM-1.0

auth [user_unknown=ignore success=ok ignore=ignore default=bad] pam_securetty.so

auth include system-auth

account required pam_nologin.so

account include system-auth

password include system-auth

# pam_selinux.so close should be the first session rule

session required pam_selinux.so close

session required pam_loginuid.so

session optional pam_console.so

# pam_selinux.so open should only be followed by sessions to be executed in the user context

session required pam_selinux.so open

session required pam_namespace.so

session optional pam_keyinit.so force revoke

session include system-auth

-session optional pam_ck_connector.so

session required pam_limits.so
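
The pam_limits.so line was added by hand with vi. When the step has to be repeated on the second node, an idempotent append avoids duplicate lines. A minimal sketch, where ensure_line is a hypothetical helper name:

```shell
# Hypothetical helper: append a line to a file only if an identical line is
# not already present, so that re-running the step is harmless.
# The comparison is exact; a line that differs only in spacing counts as
# a different line.
ensure_line() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as root, on both nodes):
# ensure_line /etc/pam.d/login 'session required pam_limits.so'
```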

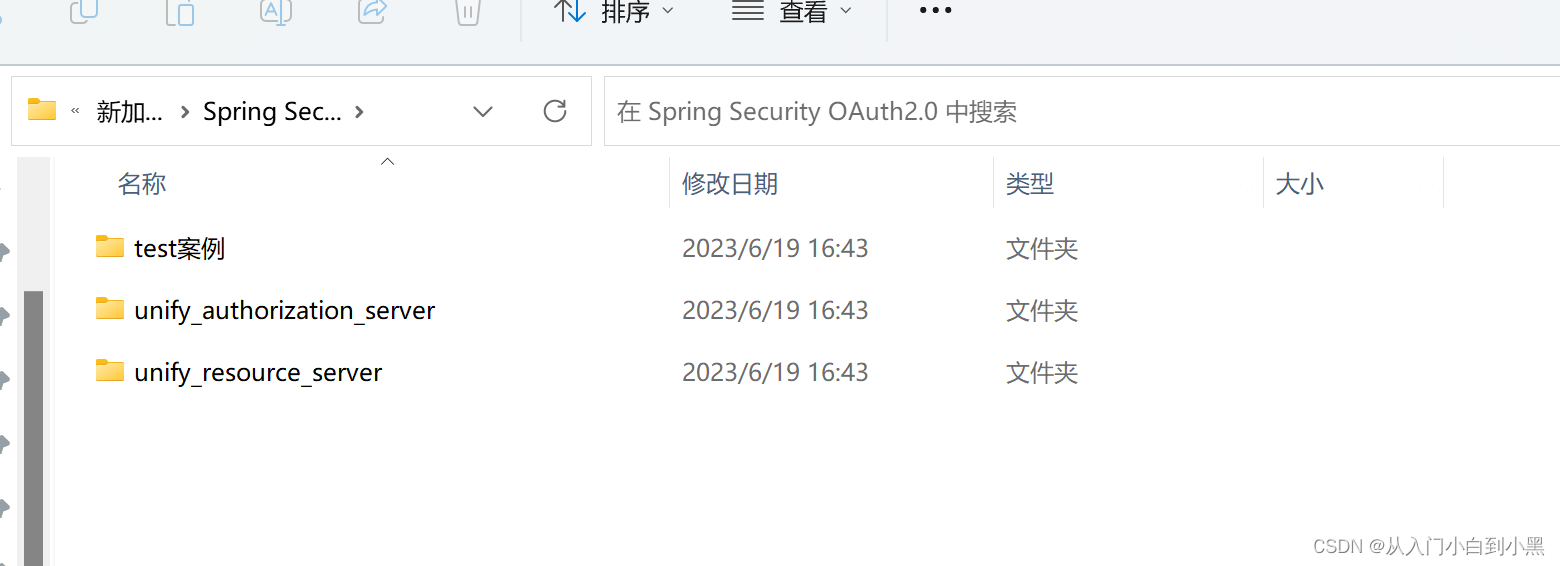

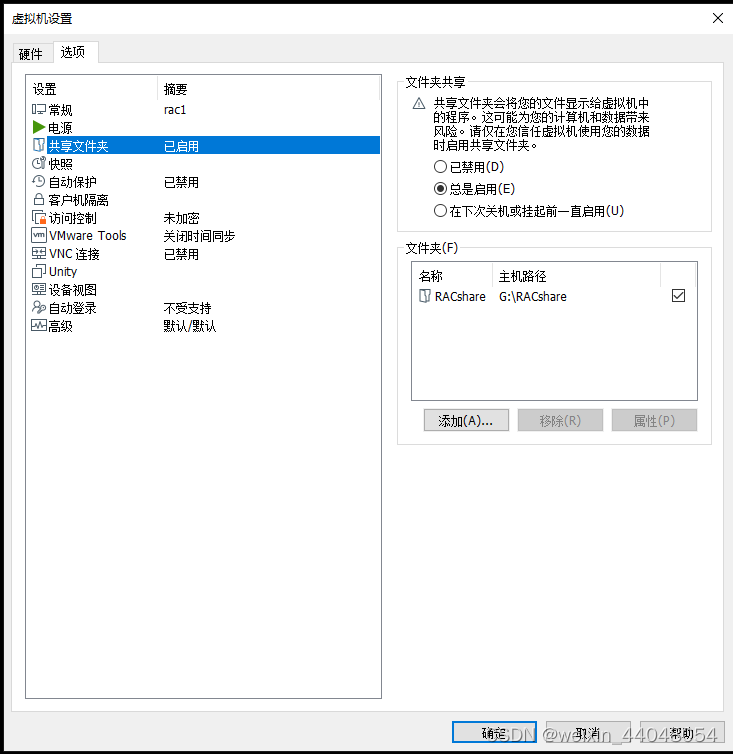

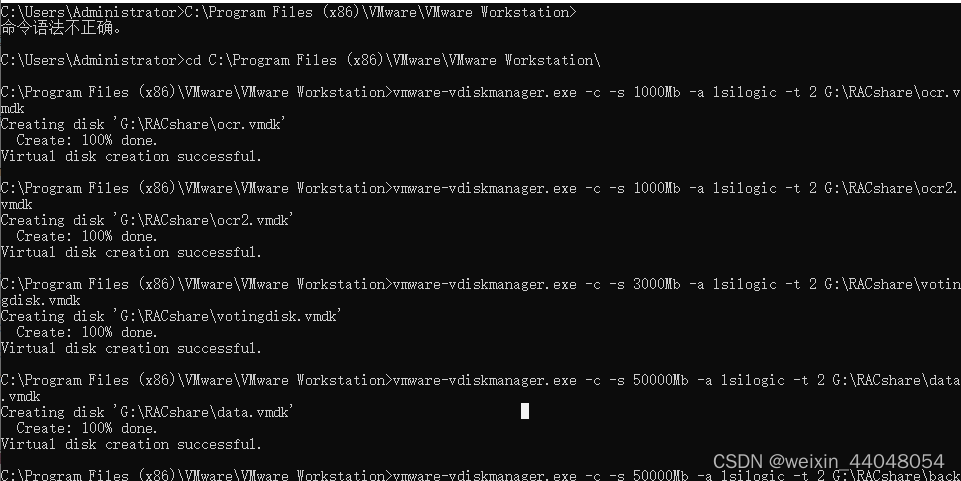

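(2.3) Create the shared disks

Run on both nodes

In the virtual machine settings, open Options > Shared Folders, enable shared folders, and create a shared folder named RACshare on drive G:.

The cluster uses ASM on shared storage, and it also needs shared space for the registry disk (OCR) and the voting disk. Shared disks are created in VMware as follows:

Open a cmd prompt in the VMware installation directory: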

C:\Program Files (x86)\VMware\VMware Workstation>

vmware-vdiskmanager.exe -c -s 1000Mb -a lsilogic -t 2 G:\RACshare\ocr.vmdk

vmware-vdiskmanager.exe -c -s 1000Mb -a lsilogic -t 2 G:\RACshare\ocr2.vmdk

vmware-vdiskmanager.exe -c -s 3000Mb -a lsilogic -t 2 G:\RACshare\votingdisk.vmdk

vmware-vdiskmanager.exe -c -s 50000Mb -a lsilogic -t 2 G:\RACshare\data.vmdk

vmware-vdiskmanager.exe -c -s 50000Mb -a lsilogic -t 2 G:\RACshare\backup.vmdk

Edit the .vmx configuration file in the RAC1 virtual machine directory:

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedBus = "virtual"

scsi1:1.present = "TRUE"

scsi1:1.mode = "independent-persistent"

scsi1:1.filename = "G:\RACshare\ocr.vmdk"

scsi1:1.deviceType = "plainDisk"

scsi1:2.present = "TRUE"

scsi1:2.mode = "independent-persistent"

scsi1:2.filename = "G:\RACshare\votingdisk.vmdk"

scsi1:2.deviceType = "plainDisk"

scsi1:3.present = "TRUE"

scsi1:3.mode = "independent-persistent"

scsi1:3.filename = "G:\RACshare\data.vmdk"

scsi1:3.deviceType = "plainDisk"

scsi1:4.present = "TRUE"

scsi1:4.mode = "independent-persistent"

scsi1:4.filename = "G:\RACshare\backup.vmdk"

scsi1:4.deviceType = "plainDisk"

scsi1:5.present = "TRUE"

scsi1:5.mode = "independent-persistent"

scsi1:5.filename = "G:\RACshare\ocr2.vmdk"

scsi1:5.deviceType = "plainDisk"

disk.locking = "false"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.DataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

Edit the .vmx configuration file of RAC2:

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedBus = "virtual"

scsi1:1.present = "TRUE"

scsi1:1.mode = "independent-persistent"

scsi1:1.filename = "G:\RACshare\ocr.vmdk"

scsi1:1.deviceType = "disk"

scsi1:2.present = "TRUE"

scsi1:2.mode = "independent-persistent"

scsi1:2.filename = "G:\RACshare\votingdisk.vmdk"

scsi1:2.deviceType = "disk"

scsi1:3.present = "TRUE"

scsi1:3.mode = "independent-persistent"

scsi1:3.filename = "G:\RACshare\data.vmdk"

scsi1:3.deviceType = "disk"

scsi1:4.present = "TRUE"

scsi1:4.mode = "independent-persistent"

scsi1:4.filename = "G:\RACshare\backup.vmdk"

scsi1:4.deviceType = "disk"

scsi1:5.present = "TRUE"

scsi1:5.mode = "independent-persistent"

scsi1:5.filename = "G:\RACshare\ocr2.vmdk"

scsi1:5.deviceType = "disk"

disk.locking = "false"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.DataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"
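
Each shared disk contributes the same four lines to the .vmx file, differing only in the slot number and the file name. The per-disk stanzas can be generated rather than typed; the sketch below assumes a hypothetical helper named vmx_shared_disk and defaults the deviceType to "disk", which is simply the value used in the RAC2 listing and not a recommendation:

```shell
# Hypothetical helper: print the four .vmx lines for one shared disk.
# $1 = slot on SCSI controller 1, $2 = path to the .vmdk file,
# $3 = deviceType value (optional; "disk" is an assumed default).
vmx_shared_disk() {
    disk_slot=$1
    vmdk_path=$2
    dev_type=${3:-disk}
    printf 'scsi1:%s.present = "TRUE"\n' "$disk_slot"
    printf 'scsi1:%s.mode = "independent-persistent"\n' "$disk_slot"
    printf 'scsi1:%s.filename = "%s"\n' "$disk_slot" "$vmdk_path"
    printf 'scsi1:%s.deviceType = "%s"\n' "$disk_slot" "$dev_type"
}

# Print the stanzas for the five disks in the same slot order as above.
n=1
for name in ocr votingdisk data backup ocr2; do
    vmx_shared_disk "$n" "G:\\RACshare\\$name.vmdk"
    n=$((n + 1))
done
```

The controller-level lines (scsi1.present, scsi1.sharedBus, disk.locking, and the diskLib settings) are not generated here and still have to be added once per virtual machine.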

Partition and format the disks; running this on one node is sufficient

[root@rac2 ~]# fdisk -l /dev/sd*

Disk /dev/sda: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00006046

Device Boot Start End Blocks Id System

/dev/sda1 * 1 64 512000 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 64 10444 83373056 8e Linux LVM

Disk /dev/sda1: 524 MB, 524288000 bytes

255 heads, 63 sectors/track, 63 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sda2: 85.4 GB, 85374009344 bytes

255 heads, 63 sectors/track, 10379 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdb: 1048 MB, 1048576000 bytes

64 heads, 32 sectors/track, 1000 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdc: 3145 MB, 3145728000 bytes

255 heads, 63 sectors/track, 382 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdd: 52.4 GB, 52428800000 bytes

255 heads, 63 sectors/track, 6374 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sde: 52.4 GB, 52428800000 bytes

255 heads, 63 sectors/track, 6374 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdf: 1048 MB, 1048576000 bytes

64 heads, 32 sectors/track, 1000 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

[root@rac2 ~]# fdisk /dev/sdb

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0x56133267.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-1000, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-1000, default 1000):

Using default value 1000

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@rac2 ~]# fdisk /dev/sdc

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xb8b02994.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-382, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-382, default 382):

Using default value 382

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@rac2 ~]# fdisk /dev/sdd

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0x9fb0516a.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-6374, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-6374, default 6374):

Using default value 6374

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@rac2 ~]# fdisk /dev/sde

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0xd22cfa59.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-6374, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-6374, default 6374):

Using default value 6374

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@rac2 ~]# fdisk /dev/sdf

Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel

Building a new DOS disklabel with disk identifier 0x7007e43f.

Changes will remain in memory only, until you decide to write them.

After that, of course, the previous content won't be recoverable.

Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

WARNING: DOS-compatible mode is deprecated. It's strongly recommended to

switch off the mode (command 'c') and change display units to

sectors (command 'u').

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-1000, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-1000, default 1000):

Using default value 1000

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
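
The five fdisk sessions above all use the same answers: n, p, 1, two defaults, then w. As a rough sketch, those keystrokes can be produced by a function and piped into fdisk; fdisk_single_partition_answers is a hypothetical name, and the piped form is an assumption about how this fdisk version reads its input, so it should be tried on a scratch disk first:

```shell
# Hypothetical helper: print the keystrokes used in the sessions above:
# n (new), p (primary), 1 (partition number), two empty lines to accept the
# default first and last cylinder, then w (write the table).
fdisk_single_partition_answers() {
    printf 'n\np\n1\n\n\nw\n'
}

# Example (destructive; run as root on ONE node only, after checking the
# device names with fdisk -l):
# for disk in /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf; do
#     fdisk_single_partition_answers | fdisk "$disk"
# done
```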

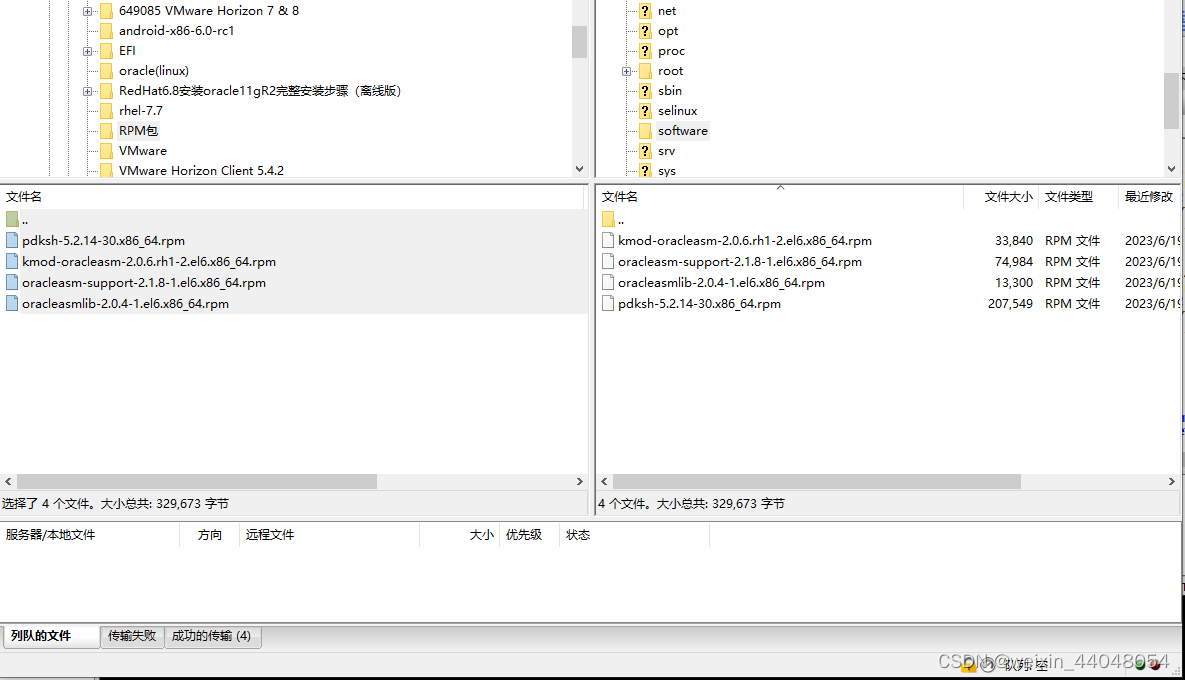

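Upload the ASMLib installation packages with Xftp. As the session below shows, oracleasmlib only installs after oracleasm-support and kmod-oracleasm are in place.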

[root@rac1 /]# mkdir software

[root@rac1 /]# ls

bin dev home lib64 media mnt opt root selinux srv tmp usr

boot etc lib lost+found misc net proc sbin software sys u01 var

[root@rac1 /]# cd software/

[root@rac1 software]# ls

kmod-oracleasm-2.0.6.rh1-2.el6.x86_64.rpm

oracleasmlib-2.0.4-1.el6.x86_64.rpm

oracleasm-support-2.1.8-1.el6.x86_64.rpm

pdksh-5.2.14-30.x86_64.rpm

[root@rac1 software]# rpm -ivh oracleasmlib-2.0.4-1.el6.x86_64.rpm

warning: oracleasmlib-2.0.4-1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

error: Failed dependencies:

oracleasm >= 1.0.4 is needed by oracleasmlib-2.0.4-1.el6.x86_64

[root@rac1 software]# rpm -ivh oracleasm-support-2.1.8-1.el6.x86_64.rpm

warning: oracleasm-support-2.1.8-1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:oracleasm-support ########################################### [100%]

[root@rac1 software]# rpm -ivh oracleasmlib-2.0.4-1.el6.x86_64.rpm

warning: oracleasmlib-2.0.4-1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

error: Failed dependencies:

oracleasm >= 1.0.4 is needed by oracleasmlib-2.0.4-1.el6.x86_64

[root@rac1 software]# rpm -ivh kmod-oracleasm-2.0.6.rh1-2.el6.x86_64.rpm

Preparing... ########################################### [100%]

1:kmod-oracleasm ########################################### [100%]

[root@rac1 software]# rpm -ivh oracleasmlib-2.0.4-1.el6.x86_64.rpm

warning: oracleasmlib-2.0.4-1.el6.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ########################################### [100%]

1:oracleasmlib ########################################### [100%]

[root@rac1 software]# rpm -qa |grep oracleasm

oracleasm-support-2.1.8-1.el6.x86_64

oracleasmlib-2.0.4-1.el6.x86_64

kmod-oracleasm-2.0.6.rh1-2.el6.x86_64

[root@rac1 software]#

Configure a local yum repository beforehand (here the installation media mounted at /mnt/cdrom)

[root@rac2 ~]# vi /etc/yum.repos.d/rhel-source.repo

[root@rac2 ~]# more /etc/yum.repos.d/rhel-source.repo

[rhel64]

name=rhel64

baseurl=file:///mnt/cdrom/Server

gpgcheck=0

[root@rac1 software]# mkdir /mnt/cdrom

[root@rac1 software]# mount /dev/sr0 /mnt/cdrom/

mount: block device /dev/sr0 is write-protected, mounting read-only

[root@rac1 software]#

Install the dependency packages (run on both nodes)

yum install -y binutils-*

yum install -y compat-libstdc++-*

yum install -y elfutils-libelf-*

yum install -y elfutils-libelf-devel-*

yum install -y elfutils-libelf-devel-static-*

yum install -y gcc-*

yum install -y gcc-c++-*

yum install -y glibc-*

yum install -y glibc-common-*

yum install -y glibc-devel-*

yum install -y glibc-headers-*

yum install -y kernel-headers-*

yum install -y ksh-*

yum install -y libaio-*

yum install -y libaio-devel-*

yum install -y libgcc-*

yum install -y libgomp-*

yum install -y libstdc++-*

yum install -y libstdc++-devel-*

yum install -y make-*

yum install -y sysstat-*

yum install -y compat-libcap*
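
After the yum runs, it is worth checking that nothing was silently skipped. A minimal sketch, assuming a hypothetical helper missing_packages that takes the query command as its first argument (so that it can be used with rpm -q on the real system):

```shell
# Hypothetical helper: print the packages from the argument list that the
# query command reports as not installed. The query command is passed as
# the first argument, for example "rpm -q", which exits non-zero for a
# package that is not installed.
missing_packages() {
    query=$1
    shift
    for pkg in "$@"; do
        $query "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}

# Example (on both nodes); no output means everything is installed:
# missing_packages "rpm -q" binutils gcc gcc-c++ glibc glibc-devel ksh \
#     libaio libaio-devel libgcc libstdc++ libstdc++-devel make sysstat
```

The package list in the example is abbreviated from the yum commands above and is not a complete prerequisite list.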

(2.5) Create the oracle and grid users

(2.5.1) Create the users, groups, and directories (run on both nodes)

[root@rac1 software]# /usr/sbin/groupadd -g 1010 oinstall

[root@rac1 software]# /usr/sbin/groupadd -g 1020 asmadmin

[root@rac1 software]# /usr/sbin/groupadd -g 1021 asmdba

[root@rac1 software]# /usr/sbin/groupadd -g 1022 asmoper

[root@rac1 software]# /usr/sbin/groupadd -g 1031 dba

[root@rac1 software]# /usr/sbin/groupadd -g 1032 oper

[root@rac1 software]# useradd -u 1100 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid

[root@rac1 software]# useradd -u 1101 -g oinstall -G dba,asmdba,oper oracle

[root@rac1 software]# mkdir -p /u01/app/11.2.0/grid

[root@rac1 software]# mkdir -p /u01/app/grid

[root@rac1 software]# mkdir /u01/app/oracle

[root@rac1 software]# chown -R grid:oinstall /u01

[root@rac1 software]# chown oracle:oinstall /u01/app/oracle

[root@rac1 software]# chmod -R 775 /u01/

[root@rac1 software]#
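
Both nodes must end up with the same users and group memberships. A small sketch for checking the memberships, assuming a hypothetical helper missing_groups that compares the output of id -Gn against the groups passed to useradd above:

```shell
# Hypothetical helper: $1 is the space-separated group list printed by
# `id -Gn USER`; the remaining arguments are the groups the user should
# belong to. Every required group that is absent is printed.
missing_groups() {
    have=" $1 "
    shift
    for g in "$@"; do
        case "$have" in
            *" $g "*) ;;
            *) echo "$g" ;;
        esac
    done
}

# Example (on both nodes); no output means the memberships match:
# missing_groups "$(id -Gn grid)" oinstall asmadmin asmdba asmoper oper dba
# missing_groups "$(id -Gn oracle)" oinstall dba asmdba oper
```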

(2.5.2) Configure the grid user's environment variables (run on both nodes)

[root@rac1 software]# su - grid

[grid@rac1 ~]$ vi .bash_profile

[grid@rac1 ~]$ more .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

# Additions

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=+ASM1 # on node 2, change this to +ASM2

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/11.2.0/grid

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022

[grid@rac1 ~]$
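
The only difference between the two nodes' grid profiles is the ORACLE_SID value. One way to keep a single profile for both nodes is to derive the SID from the hostname. This is a sketch under the naming used in this guide (rac1 and rac2); asm_sid_for_host is a hypothetical helper:

```shell
# Hypothetical helper: map a node's short hostname to its ASM instance name,
# following the convention used in this guide (rac1 -> +ASM1, rac2 -> +ASM2).
asm_sid_for_host() {
    case "$1" in
        rac1) echo "+ASM1" ;;
        rac2) echo "+ASM2" ;;
        *) echo "unknown host: $1" >&2; return 1 ;;
    esac
}

# In .bash_profile, this could replace the hard-coded value:
# ORACLE_SID=$(asm_sid_for_host "$(hostname -s)") && export ORACLE_SID
```

Hard-coding the value per node, as done above, works just as well; the function only removes one manual edit.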

(2.5.3) Configure the oracle user's environment variables (run on both nodes)

[root@rac1 software]# su - oracle

[oracle@rac1 ~]$ more .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

# Additions

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_SID=standby1

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/11.2.0/db_1

export TNS_ADMIN=$ORACLE_HOME/network/admin

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022

[oracle@rac1 ~]$

Configure SSH user equivalence for the oracle and grid users. Run the following on every node, as each of the two users:

ssh-keygen -t rsa

ssh-keygen -t dsa

Then, as each user on node 1, collect the public keys of both nodes into authorized_keys and copy the file to node 2:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

ssh rac2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

scp ~/.ssh/authorized_keys rac2:~/.ssh/authorized_keys

Verify user equivalence for grid on every node; each command should print the date without asking for a password:

ssh rac1 date

ssh rac2 date

Repeat the same steps for the oracle user.
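
The verification can be looped over all node names. A minimal sketch, assuming a hypothetical helper failed_equivalence; BatchMode=yes makes ssh fail instead of prompting, so a host that still asks for a password (or whose host key has not been accepted yet) is reported:

```shell
# Hypothetical helper: try a non-interactive `ssh HOST date` for every host
# and print the hosts for which it fails. BatchMode=yes disables password
# and host-key prompts; ConnectTimeout=5 keeps an unreachable host from
# blocking the loop for long.
failed_equivalence() {
    for host in "$@"; do
        ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1 \
            || echo "$host"
    done
}

# Example (run as grid and again as oracle, on every node);
# no output means equivalence works for all listed hosts:
# failed_equivalence rac1 rac2
```

Because host-key prompts also count as failures in batch mode, each host has to be contacted interactively once, as in the sessions that follow, before the loop reports a clean result.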

[root@rac1 ~]# su - grid

[grid@rac1 ~]$ ssh-keygen -r rsa

rsa IN SSHFP 1 1 165dbb864d3f30767048b5af5888a4168f1429fa

rsa IN SSHFP 2 1 8927ec05309df9059cafbc1e170eefad13af16a0

[grid@rac1 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_rsa):

Created directory '/home/grid/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_rsa.

Your public key has been saved in /home/grid/.ssh/id_rsa.pub.

The key fingerprint is:

a2:cd:a6:90:c5:2e:67:b7:f5:25:1f:c1:91:08:d4:6b grid@rac1

The key's randomart image is:

+--[ RSA 2048]----+

| .o. |

| ... . |

| ..o |

| . E. . |

| o . S. o |

| + + . . |

| + = = . . o |

| = + o . + . |

| . . . . |

+-----------------+

[grid@rac1 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_dsa.

Your public key has been saved in /home/grid/.ssh/id_dsa.pub.

The key fingerprint is:

46:5b:49:d4:5a:4b:7d:25:32:88:dd:2b:83:60:77:c6 grid@rac1

The key's randomart image is:

+--[ DSA 1024]----+

| =o+o.. o|

| o o.E.=o...|

| . o.+o+ o . |

| ..o+ o |

| S o |

| . |

| |

| |

| |

+-----------------+

[grid@rac1 ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[grid@rac1 ~]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[grid@rac1 ~]$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac2 (192.168.182.147)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.182.147' (RSA) to the list of known hosts.

grid@rac2's password:

cat: /home/grid/.ssh/id_rsa.pub: No such file or directory

[grid@rac1 ~]$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

grid@rac2's password:

cat: /home/grid/.ssh/id_rsa.pub: No such file or directory

[grid@rac1 ~]$ cd ~/.ssh/

[grid@rac1 .ssh]$ ls

authorized_keys id_dsa id_dsa.pub id_rsa id_rsa.pub known_hosts

[grid@rac1 .ssh]$ ll

total 24

-rw-r--r-- 1 grid oinstall 990 Jun 19 23:23 authorized_keys

-rw------- 1 grid oinstall 672 Jun 19 23:22 id_dsa

-rw-r--r-- 1 grid oinstall 599 Jun 19 23:22 id_dsa.pub

-rw------- 1 grid oinstall 1671 Jun 19 23:21 id_rsa

-rw-r--r-- 1 grid oinstall 391 Jun 19 23:21 id_rsa.pub

-rw-r--r-- 1 grid oinstall 402 Jun 19 23:24 known_hosts

[grid@rac1 .ssh]$ pwd

/home/grid/.ssh

Run on the second node

total 0

[root@rac2 .ssh]# su - grid

[grid@rac2 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_rsa):

Created directory '/home/grid/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_rsa.

Your public key has been saved in /home/grid/.ssh/id_rsa.pub.

The key fingerprint is:

42:dc:0a:c3:cc:9e:84:82:76:f6:9b:77:ae:8b:a5:fd grid@rac2

The key's randomart image is:

+--[ RSA 2048]----+

| |

|. = . . |

|o..oB o . |

|..oo.= . |

| o.o S |

| o. |

| o o . |

| * o |

| o ++E |

+-----------------+

[grid@rac2 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_dsa.

Your public key has been saved in /home/grid/.ssh/id_dsa.pub.

The key fingerprint is:

21:0f:45:cf:78:25:4a:e4:53:e9:1f:6a:c8:92:1a:cd grid@rac2

The key's randomart image is:

+--[ DSA 1024]----+

| o= o.. |

| + *.o |

| o *.+ |

| + +. . |

| o oS. o . |

| . E o o . |

| o . . |

| . |

| |

+-----------------+

[grid@rac2 ~]$ ssh rac2 date

The authenticity of host 'rac2 (192.168.182.147)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.182.147' (RSA) to the list of known hosts.

Mon Jun 19 23:34:37 CST 2023

[grid@rac2 ~]$ ssh rac1 date

The authenticity of host 'rac1 (192.168.182.145)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.182.145' (RSA) to the list of known hosts.

Mon Jun 19 23:34:43 CST 2023

[grid@rac2 ~]$

Run on the first node again

[root@rac1 ~]# cd /root/.ssh/

[root@rac1 .ssh]# ls

[root@rac1 .ssh]# ll

total 0

[root@rac1 .ssh]# ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac2 (192.168.182.147)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.182.147' (RSA) to the list of known hosts.

root@rac2's password:

[root@rac1 .ssh]# su - grid

[grid@rac1 ~]$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

grid@rac2's password:

[grid@rac1 ~]$ ssh rac2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

grid@rac2's password:

[grid@rac1 ~]$ scp ~/.ssh/authorized_keys rac2:~/.ssh/authorized_keys

grid@rac2's password:

authorized_keys 100% 1980 1.9KB/s 00:00

[grid@rac1 ~]$ ssh rac1 date

The authenticity of host 'rac1 (192.168.182.145)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.182.145' (RSA) to the list of known hosts.

Mon Jun 19 23:34:09 CST 2023

[grid@rac1 ~]$ ssh rac2 date

Mon Jun 19 23:34:51 CST 2023

[grid@rac1 ~]$

Configure oracle user equivalence on node 1

[root@rac1 .ssh]# su - oracle

[oracle@rac1 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Created directory '/home/oracle/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

50:a0:06:3f:61:bc:b1:57:6b:de:7e:32:17:7d:d1:12 oracle@rac1

The key's randomart image is:

+--[ RSA 2048]----+

| ..o ... |

| +oo .. E |

| =+.. . ..|

| .o...o ...|

| . oS. . ..|

| . . . . .|

| . . . |

| + o |

| = |

+-----------------+

[oracle@rac1 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_dsa.

Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.

The key fingerprint is:

50:47:d7:3c:a1:2c:ee:dd:9a:14:af:93:67:31:25:24 oracle@rac1

The key's randomart image is:

+--[ DSA 1024]----+

| ..o .o.. |

| . . oE.= |

| . . oo . |

| . . . . .|

| S . . o |

| . . +o |

| . o.oo |

| .o+o |

| ++ |

+-----------------+

[oracle@rac1 ~]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[oracle@rac1 ~]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[oracle@rac1 ~]$ cd .ssh/

[oracle@rac1 .ssh]$ ls

authorized_keys id_dsa id_dsa.pub id_rsa id_rsa.pub

[oracle@rac1 .ssh]$ cat authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA3sKVtz8FWb8APE7k0k02MnUddGEJbeeQ2Cmarf7u6gqPSh8aJB6loj8W6Wy9FzFuBqImBcfKOIIDoPd8suMYbFNFFPpmvTCg0kaBd1MobIevQN91jBolRbU5sK6YgWdCOkw4cd9pFWvgv5iDsZ/NS6LZnovTCnEPjBJKXJMF3r7XzzNk48oc8GSDqrRuGFW15tQESq1oIxaTKdP0uQ1kjjbWyqMs3V4AbiFWNCyyGvgYtsTvpdT5zu/OSzEG+JKq9603+WRzT1G/zrswrm5a1nJLlrGHwIghjlKkmEUjcMLt0TCI1ikbkf9HGs6AeY1wDmGw8VU7PHcJV6QCMZgbHw== oracle@rac1

ssh-dss AAAAB3NzaC1kc3MAAACBAMBZDFXiIAx852S1ABPTjgzlx7NpRw8+uGvdcGSZrLhd0B19JsQxdluRh6Qq0cugMERBwrJiSQ7YENx1/A4AYdXOA9bmuzBE+20upgkeWt8TxltztF+MSuMyOSkjT/Mt0c6Mg+fBmWSU8LSwWNynYGA/wazoGmyURJIh0KuBHSZTAAAAFQC6wRKzNQwbOD1NXdKQQc80QcxlWQAAAIEAu5Kl3hwBLA04W197t9cy7G2HlQmWCnITW6iZ5orQ4zQk2UmR7n8NAEmjHrQjWSLNsoKQEETffA2STE3uBfBLebRFu7kaOuvUVTCjhajekktiaBe9b02hF1Kbn6x9IJ2vPzERy0+WuRc1LtuqQKyM3PCOXS5J/e2IKXbdFyPc4wIAAACAe7Iye9e8O9a3um3lmXSQTGiBuH1ph2t6SMc7hXae9SneWE+BSiOJ+8WldslLLDUq/OPr3uuNisfKybgLQKShzk9hxyhBWGlXRwLqRP9u/fBoNmRXnP06TYfF1Ph8yvqWsoI80Lf6GUcYEJDlmlNbVlOs7KdC9BNNbC9S4McuASo= oracle@rac1

[oracle@rac1 .ssh]$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac2 (192.168.182.147)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.182.147' (RSA) to the list of known hosts.

oracle@rac2's password:

[oracle@rac1 .ssh]$ ssh rac2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

oracle@rac2's password:

[oracle@rac1 .ssh]$ scp ~/.ssh/authorized_keys tbrac2:~/.ssh/authorized_keys

ssh: Could not resolve hostname tbrac2: Temporary failure in name resolution

lost connection

[oracle@rac1 .ssh]$ scp ~/.ssh/authorized_keys rac2:~/.ssh/authorized_keys

oracle@rac2's password:

authorized_keys 100% 1988 1.9KB/s 00:00

[oracle@rac1 .ssh]$ ssh rac1 date

The authenticity of host 'rac1 (192.168.182.145)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.182.145' (RSA) to the list of known hosts.

Mon Jun 19 23:49:51 CST 2023

[oracle@rac1 .ssh]$ ssh rac2 date

Mon Jun 19 23:49:53 CST 2023

[oracle@rac1 .ssh]$

Configure equivalence on node 2

[grid@rac2 ~]$ su - oracle

Password:

[oracle@rac2 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_rsa):

Created directory '/home/oracle/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_rsa.

Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.

The key fingerprint is:

16:59:b3:df:e7:f4:2d:8a:da:f6:58:9d:95:cf:60:09 oracle@rac2

The key's randomart image is:

+--[ RSA 2048]----+

| o |

| o o |

| o . E |

| . . o ..|

| S . =.+|

| . o O+|

| . + *|

| ..+ . . |

| .o+.o |

+-----------------+

[oracle@rac2 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/oracle/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/oracle/.ssh/id_dsa.

Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.

The key fingerprint is:

87:d5:d7:53:f3:fe:0d:90:aa:62:4e:5e:91:92:b1:ed oracle@rac2

The key's randomart image is:

+--[ DSA 1024]----+

| ..|

| . . .+|

| . . + ..o|

| = + . o ..|

| + S o . .|

| o + .o|

| + E o|

| = o |

| o |

+-----------------+

[oracle@rac2 ~]$ ssh rac1 date

The authenticity of host 'rac1 (192.168.182.145)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.182.145' (RSA) to the list of known hosts.

Mon Jun 19 23:50:04 CST 2023

[oracle@rac2 ~]$ ssh rac2 date

The authenticity of host 'rac2 (192.168.182.147)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.182.147' (RSA) to the list of known hosts.

Mon Jun 19 23:50:09 CST 2023

[oracle@rac2 ~]$ ssh rac2 date

Mon Jun 19 23:50:11 CST 2023

[oracle@rac2 ~]$ ssh rac1 date

Mon Jun 19 23:50:15 CST 2023

[oracle@rac2 ~]$

Upload the Oracle installation packages

[root@rac1 software]# ls

grid

kmod-oracleasm-2.0.6.rh1-2.el6.x86_64.rpm

oracleasmlib-2.0.4-1.el6.x86_64.rpm

oracleasm-support-2.1.8-1.el6.x86_64.rpm

p13390677_112040_Linux-x86-64_1of7.zip

p13390677_112040_Linux-x86-64_2of7.zip

p13390677_112040_Linux-x86-64_3of7.zip

pdksh-5.2.14-30.x86_64.rpm

[root@rac1 software]# unzip p13390677_112040_Linux-x86-64_3of7.zip

[root@rac1 software]# chown -R grid:oinstall grid/

[root@rac1 software]# chmod -R 775 grid/

[root@rac1 software]#

Use the runcluvfy script to check the cluster installation prerequisites

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n tbrac1,tbrac2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

PRVF-6006 : Unable to reach any of the nodes

PRKN-1034 : Failed to retrieve IP address of host "tbrac1"PRKN-1034 : Failed to retrieve IP address of host "tbrac2"

Pre-check for cluster services setup was unsuccessful on all the nodes.

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "rac1"

Destination Node Reachable?

------------------------------------ ------------------------

rac2 yes

rac1 yes

Result: Node reachability check passed from node "rac1"

Checking user equivalence...

Check: User equivalence for user "grid"

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Result: User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Verification of the hosts config file successful

Interface information for node "rac2"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth1 192.168.186.129 192.168.186.0 0.0.0.0 192.168.186.1 00:0C:29:EC:39:AB 1500

eth2 192.168.182.147 192.168.182.0 0.0.0.0 192.168.186.1 00:0C:29:EC:39:B5 1500

Interface information for node "rac1"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

eth1 192.168.186.128 192.168.186.0 0.0.0.0 192.168.186.1 00:0C:29:51:B8:59 1500

eth2 192.168.182.145 192.168.182.0 0.0.0.0 192.168.186.1 00:0C:29:51:B8:63 1500

Check: Node connectivity of subnet "192.168.186.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2[192.168.186.129] rac1[192.168.186.128] yes

Result: Node connectivity passed for subnet "192.168.186.0" with node(s) rac2,rac1

Check: TCP connectivity of subnet "192.168.186.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1:192.168.186.128 rac2:192.168.186.129 passed

Result: TCP connectivity check passed for subnet "192.168.186.0"

Check: Node connectivity of subnet "192.168.182.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac2[192.168.182.147] rac1[192.168.182.145] yes

Result: Node connectivity passed for subnet "192.168.182.0" with node(s) rac2,rac1

Check: TCP connectivity of subnet "192.168.182.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1:192.168.182.145 rac2:192.168.182.147 passed

Result: TCP connectivity check passed for subnet "192.168.182.0"

Interfaces found on subnet "192.168.186.0" that are likely candidates for VIP are:

rac2 eth1:192.168.186.129

rac1 eth1:192.168.186.128

Interfaces found on subnet "192.168.182.0" that are likely candidates for a private interconnect are:

rac2 eth2:192.168.182.147

rac1 eth2:192.168.182.145

Checking subnet mask consistency...

Subnet mask consistency check passed for subnet "192.168.186.0".

Subnet mask consistency check passed for subnet "192.168.182.0".

Subnet mask consistency check passed.

Result: Node connectivity check passed

Checking multicast communication...

Checking subnet "192.168.186.0" for multicast communication with multicast group "230.0.1.0"...

Check of subnet "192.168.186.0" for multicast communication with multicast group "230.0.1.0" passed.

Checking subnet "192.168.182.0" for multicast communication with multicast group "230.0.1.0"...

Check of subnet "192.168.182.0" for multicast communication with multicast group "230.0.1.0" passed.

Check of multicast communication passed.

Checking ASMLib configuration.

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Result: Check for ASMLib configuration passed.

Check: Total memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 3.1137GB (3264920.0KB) 1.5GB (1572864.0KB) passed

rac1 3.1137GB (3264920.0KB) 1.5GB (1572864.0KB) passed

Result: Total memory check passed

Check: Available memory

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 2.9323GB (3074748.0KB) 50MB (51200.0KB) passed

rac1 2.7971GB (2932960.0KB) 50MB (51200.0KB) passed

Result: Available memory check passed

Check: Swap space

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 7.9062GB (8290296.0KB) 3.1137GB (3264920.0KB) passed

rac1 7.9062GB (8290296.0KB) 3.1137GB (3264920.0KB) passed

Result: Swap space check passed

Check: Free disk space for "rac2:/tmp"

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/tmp rac2 / 15.0566GB 1GB passed

Result: Free disk space check passed for "rac2:/tmp"

Check: Free disk space for "rac1:/tmp"

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/tmp rac1 / 10.0817GB 1GB passed

Result: Free disk space check passed for "rac1:/tmp"

Check: User existence for "grid"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists(1100)

rac1 passed exists(1100)

Checking for multiple users with UID value 1100

Result: Check for multiple users with UID value 1100 passed

Result: User existence check passed for "grid"

Check: Group existence for "oinstall"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists

rac1 passed exists

Result: Group existence check passed for "oinstall"

Check: Group existence for "dba"

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed exists

rac1 passed exists

Result: Group existence check passed for "dba"

Check: Membership of user "grid" in group "oinstall" [as Primary]

Node Name User Exists Group Exists User in Group Primary Status

---------------- ------------ ------------ ------------ ------------ ------------

rac2 yes yes yes yes passed

rac1 yes yes yes yes passed

Result: Membership check for user "grid" in group "oinstall" [as Primary] passed

Check: Membership of user "grid" in group "dba"

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac2 yes yes yes passed

rac1 yes yes yes passed

Result: Membership check for user "grid" in group "dba" passed

Check: Run level

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

rac2 5 3,5 passed

rac1 5 3,5 passed

Result: Run level check passed

Check: Hard limits for "maximum open file descriptors"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 hard 65536 65536 passed

rac1 hard 65536 65536 passed

Result: Hard limits check passed for "maximum open file descriptors"

Check: Soft limits for "maximum open file descriptors"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 soft 1024 1024 passed

rac1 soft 1024 1024 passed

Result: Soft limits check passed for "maximum open file descriptors"

Check: Hard limits for "maximum user processes"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 hard 16384 16384 passed

rac1 hard 16384 16384 passed

Result: Hard limits check passed for "maximum user processes"

Check: Soft limits for "maximum user processes"

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac2 soft 2047 2047 passed

rac1 soft 2047 2047 passed

Result: Soft limits check passed for "maximum user processes"

Check: System architecture

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 x86_64 x86_64 passed

rac1 x86_64 x86_64 passed

Result: System architecture check passed

Check: Kernel version

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 2.6.32-358.el6.x86_64 2.6.9 passed

rac1 2.6.32-358.el6.x86_64 2.6.9 passed

Result: Kernel version check passed

Check: Kernel parameter for "semmsl"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 250 250 250 passed

rac1 250 250 250 passed

Result: Kernel parameter check passed for "semmsl"

Check: Kernel parameter for "semmns"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 32000 32000 32000 passed

rac1 32000 32000 32000 passed

Result: Kernel parameter check passed for "semmns"

Check: Kernel parameter for "semopm"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 100 100 100 passed

rac1 100 100 100 passed

Result: Kernel parameter check passed for "semopm"

Check: Kernel parameter for "semmni"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 128 128 128 passed

rac1 128 128 128 passed

Result: Kernel parameter check passed for "semmni"

Check: Kernel parameter for "shmmax"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 1306910720 1306910720 1671639040 failed Current value incorrect. Configured value incorrect.

rac1 1306910720 1306910720 1671639040 failed Current value incorrect. Configured value incorrect.

Result: Kernel parameter check failed for "shmmax"

Check: Kernel parameter for "shmmni"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 4096 4096 4096 passed

rac1 4096 4096 4096 passed

Result: Kernel parameter check passed for "shmmni"

Check: Kernel parameter for "shmall"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 2097152 2097152 2097152 passed

rac1 2097152 2097152 2097152 passed

Result: Kernel parameter check passed for "shmall"

Check: Kernel parameter for "file-max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 6815744 6815744 6815744 passed

rac1 6815744 6815744 6815744 passed

Result: Kernel parameter check passed for "file-max"

Check: Kernel parameter for "ip_local_port_range"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 between 9000.0 & 65500.0 between 9000.0 & 65500.0 between 9000.0 & 65500.0 passed

rac1 between 9000.0 & 65500.0 between 9000.0 & 65500.0 between 9000.0 & 65500.0 passed

Result: Kernel parameter check passed for "ip_local_port_range"

Check: Kernel parameter for "rmem_default"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 262144 262144 262144 passed

rac1 262144 262144 262144 passed

Result: Kernel parameter check passed for "rmem_default"

Check: Kernel parameter for "rmem_max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 4194304 4194304 4194304 passed

rac1 4194304 4194304 4194304 passed

Result: Kernel parameter check passed for "rmem_max"

Check: Kernel parameter for "wmem_default"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 262144 262144 262144 passed

rac1 262144 262144 262144 passed

Result: Kernel parameter check passed for "wmem_default"

Check: Kernel parameter for "wmem_max"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 1048586 1048586 1048576 passed

rac1 1048586 1048586 1048576 passed

Result: Kernel parameter check passed for "wmem_max"

Check: Kernel parameter for "aio-max-nr"

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac2 1048576 1048576 1048576 passed

rac1 1048576 1048576 1048576 passed

Result: Kernel parameter check passed for "aio-max-nr"

Check: Package existence for "make"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 make-3.81-20.el6 make-3.80 passed

rac1 make-3.81-20.el6 make-3.80 passed

Result: Package existence check passed for "make"

Check: Package existence for "binutils"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 binutils-2.20.51.0.2-5.36.el6 binutils-2.15.92.0.2 passed

rac1 binutils-2.20.51.0.2-5.36.el6 binutils-2.15.92.0.2 passed

Result: Package existence check passed for "binutils"

Check: Package existence for "gcc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 gcc(x86_64)-4.4.7-3.el6 gcc(x86_64)-3.4.6 passed

rac1 gcc(x86_64)-4.4.7-3.el6 gcc(x86_64)-3.4.6 passed

Result: Package existence check passed for "gcc(x86_64)"

Check: Package existence for "libaio(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.105 passed

rac1 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.105 passed

Result: Package existence check passed for "libaio(x86_64)"

Check: Package existence for "glibc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc(x86_64)-2.12-1.107.el6 glibc(x86_64)-2.3.4-2.41 passed

rac1 glibc(x86_64)-2.12-1.107.el6 glibc(x86_64)-2.3.4-2.41 passed

Result: Package existence check passed for "glibc(x86_64)"

Check: Package existence for "compat-libstdc++-33(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

rac1 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed

Result: Package existence check passed for "compat-libstdc++-33(x86_64)"

Check: Package existence for "elfutils-libelf(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 elfutils-libelf(x86_64)-0.152-1.el6 elfutils-libelf(x86_64)-0.97 passed

rac1 elfutils-libelf(x86_64)-0.152-1.el6 elfutils-libelf(x86_64)-0.97 passed

Result: Package existence check passed for "elfutils-libelf(x86_64)"

Check: Package existence for "elfutils-libelf-devel"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 elfutils-libelf-devel-0.152-1.el6 elfutils-libelf-devel-0.97 passed

rac1 elfutils-libelf-devel-0.152-1.el6 elfutils-libelf-devel-0.97 passed

Result: Package existence check passed for "elfutils-libelf-devel"

Check: Package existence for "glibc-common"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-common-2.12-1.107.el6 glibc-common-2.3.4 passed

rac1 glibc-common-2.12-1.107.el6 glibc-common-2.3.4 passed

Result: Package existence check passed for "glibc-common"

Check: Package existence for "glibc-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-devel(x86_64)-2.12-1.107.el6 glibc-devel(x86_64)-2.3.4 passed

rac1 glibc-devel(x86_64)-2.12-1.107.el6 glibc-devel(x86_64)-2.3.4 passed

Result: Package existence check passed for "glibc-devel(x86_64)"

Check: Package existence for "glibc-headers"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 glibc-headers-2.12-1.107.el6 glibc-headers-2.3.4 passed

rac1 glibc-headers-2.12-1.107.el6 glibc-headers-2.3.4 passed

Result: Package existence check passed for "glibc-headers"

Check: Package existence for "gcc-c++(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 gcc-c++(x86_64)-4.4.7-3.el6 gcc-c++(x86_64)-3.4.6 passed

rac1 gcc-c++(x86_64)-4.4.7-3.el6 gcc-c++(x86_64)-3.4.6 passed

Result: Package existence check passed for "gcc-c++(x86_64)"

Check: Package existence for "libaio-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.105 passed

rac1 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.105 passed

Result: Package existence check passed for "libaio-devel(x86_64)"

Check: Package existence for "libgcc(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libgcc(x86_64)-4.4.7-3.el6 libgcc(x86_64)-3.4.6 passed

rac1 libgcc(x86_64)-4.4.7-3.el6 libgcc(x86_64)-3.4.6 passed

Result: Package existence check passed for "libgcc(x86_64)"

Check: Package existence for "libstdc++(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libstdc++(x86_64)-4.4.7-3.el6 libstdc++(x86_64)-3.4.6 passed

rac1 libstdc++(x86_64)-4.4.7-3.el6 libstdc++(x86_64)-3.4.6 passed

Result: Package existence check passed for "libstdc++(x86_64)"

Check: Package existence for "libstdc++-devel(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 libstdc++-devel(x86_64)-4.4.7-3.el6 libstdc++-devel(x86_64)-3.4.6 passed

rac1 libstdc++-devel(x86_64)-4.4.7-3.el6 libstdc++-devel(x86_64)-3.4.6 passed

Result: Package existence check passed for "libstdc++-devel(x86_64)"

Check: Package existence for "sysstat"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 sysstat-9.0.4-20.el6 sysstat-5.0.5 passed

rac1 sysstat-9.0.4-20.el6 sysstat-5.0.5 passed

Result: Package existence check passed for "sysstat"

Check: Package existence for "pdksh"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 missing pdksh-5.2.14 failed

rac1 missing pdksh-5.2.14 failed

Result: Package existence check failed for "pdksh"

Check: Package existence for "expat(x86_64)"

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac2 expat(x86_64)-2.0.1-11.el6_2 expat(x86_64)-1.95.7 passed

rac1 expat(x86_64)-2.0.1-11.el6_2 expat(x86_64)-1.95.7 passed

Result: Package existence check passed for "expat(x86_64)"

Checking for multiple users with UID value 0

Result: Check for multiple users with UID value 0 passed

Check: Current group ID

Result: Current group ID check passed

Starting check for consistency of primary group of root user

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac1 passed

Check for consistency of root user's primary group passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

NTP Configuration file check started...

The NTP configuration file "/etc/ntp.conf" is available on all nodes

NTP Configuration file check passed

No NTP Daemons or Services were found to be running

PRVF-5507 : NTP daemon or service is not running on any node but NTP configuration file exists on the following node(s):

rac2,rac1

Result: Clock synchronization check using Network Time Protocol(NTP) failed

Checking Core file name pattern consistency...

Core file name pattern consistency check passed.

Checking to make sure user "grid" is not in "root" group

Node Name Status Comment

------------ ------------------------ ------------------------

rac2 passed does not exist

rac1 passed does not exist

Result: User "grid" is not part of "root" group. Check passed

Check default user file creation mask

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

rac2 0022 0022 passed

rac1 0022 0022 passed

Result: Default user file creation mask check passed

Checking consistency of file "/etc/resolv.conf" across nodes

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

rac2 failed

rac1 failed

PRVF-5636 : The DNS response time for an unreachable node exceeded "15000" ms on following nodes: rac2,rac1

File "/etc/resolv.conf" is not consistent across nodes

Check: Time zone consistency

Result: Time zone consistency check passed

Pre-check for cluster services setup was unsuccessful on all the nodes.

[grid@rac1 grid]$
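
The verbose report is long, and the few failures are easy to miss among the passing checks. A minimal sketch for pulling them out, assuming the report was saved to a file or is piped in; the function name `cluvfy_failures` is made up for this example and is not part of cluvfy:

```shell
# Print only the failing "Result:" lines and the PRVx-nnnn error lines
# from a saved cluvfy report (file arguments, or stdin when none given).
cluvfy_failures() {
    grep -E '^(Result: .* failed|PRV[A-Z]-[0-9]+)' "$@"
}
```

Usage could look like `./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose | cluvfy_failures`. Note that grep exits with a non-zero status when no line matches, which here means nothing failed.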

The check reports errors in three places.

Check: Kernel parameter for "shmmax": edit /etc/sysctl.conf. The original setting kernel.shmmax = 1306910720 is too low; cluvfy requires 1671639040.

[root@rac1 software]# vi /etc/sysctl.conf^C

[root@rac1 software]# tail /etc/sysctl.conf -n 10

kernel.shmall = 2097152

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048586

net.ipv4.tcp_wmem = 262144 262144 262144

net.ipv4.tcp_rmem = 4194304 4194304 4194304
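
In the report above, the required shmmax value (1671639040) is exactly half of the total memory cluvfy measured (3264920 KB). A small sketch for computing that figure on a node, assuming the same half-of-RAM rule applies; the helper names are invented for this example:

```shell
# Read MemTotal (in KB) from /proc/meminfo, or from a file given as $1.
mem_total_kb() {
    awk '/^MemTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Half of physical memory in bytes, given MemTotal in KB.
shmmax_from_kb() {
    echo $(( $1 * 1024 / 2 ))
}
```

For example, `echo "kernel.shmmax = $(shmmax_from_kb "$(mem_total_kb)")"` prints a line that can go into /etc/sysctl.conf on each node, after which `sysctl -p` as root loads it.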

Check: Package existence for "pdksh": remove ksh, then install the pdksh package.

[root@rac1 software]# rpm -ivh pdksh-5.2.14-30.x86_64.rpm

warning: pdksh-5.2.14-30.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 4f2a6fd2: NOKEY

error: Failed dependencies:

pdksh conflicts with ksh-20100621-19.el6.x86_64

[root@rac1 software]# rpm -e ksh

[root@rac1 software]# rpm -ivh pdksh-5.2.14-30.x86_64.rpm

warning: pdksh-5.2.14-30.x86_64.rpm: Header V3 DSA/SHA1 Signature, key ID 4f2a6fd2: NOKEY

Preparing... ########################################### [100%]

1:pdksh ########################################### [100%]

[root@rac1 software]#

NTP Configuration file check started...

The NTP configuration file "/etc/ntp.conf" is available on all nodes

NTP Configuration file check passed

No NTP Daemons or Services were found to be running

PRVF-5507 : NTP daemon or service is not running on any node but NTP configuration file exists on the following node(s):

rac2,rac1

Result: Clock synchronization check using Network Time Protocol(NTP) failed

Disable NTP time synchronization

[grid@rac1 grid]$ exit

logout

[root@rac1 software]# serivce ntpd status

-bash: serivce: command not found

[root@rac1 software]# service ntpd status

ntpd is stopped

[root@rac1 software]# mv /etc/ntp.conf /etc/ntp.conf.bak

[root@rac1 software]# chkconfig ntpd off
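
PRVF-5507 names both rac2 and rac1, so the configuration file has to be moved aside on each node, not only on rac1 as shown. A quick sketch for confirming nothing is left behind; the function name is hypothetical:

```shell
# Print every path given that still exists; print nothing when all are gone.
ntp_conf_leftovers() {
    for f in "$@"; do
        if [ -e "$f" ]; then
            echo "$f"
        fi
    done
}
```

Running `ntp_conf_leftovers /etc/ntp.conf` as root on each node should print nothing once the file has been renamed.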

If DNS is not configured, the /etc/resolv.conf check fails. This does not affect the installation and can simply be ignored.

Checking the file "/etc/resolv.conf" to make sure only one of domain and search entries is defined

File "/etc/resolv.conf" does not have both domain and search entries defined

Checking if domain entry in file "/etc/resolv.conf" is consistent across the nodes...

domain entry in file "/etc/resolv.conf" is consistent across nodes

Checking if search entry in file "/etc/resolv.conf" is consistent across the nodes...

search entry in file "/etc/resolv.conf" is consistent across nodes

Checking DNS response time for an unreachable node

Node Name Status

------------------------------------ ------------------------

rac2 failed

rac1 failed

PRVF-5636 : The DNS response time for an unreachable node exceeded "15000" ms on following nodes: rac2,rac1

File "/etc/resolv.conf" is not consistent across nodes

Check: Time zone consistency

Result: Time zone consistency check passed

Run the following on both node 1 and node 2

[root@rac1 software]# oracleasm configure -i

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting <ENTER> without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: grid

Default group to own the driver interface []: dba

Start Oracle ASM library driver on boot (y/n) [n]: y

Scan for Oracle ASM disks on boot (y/n) [y]: y

Writing Oracle ASM library driver configuration: done

[root@rac1 software]#

Load the oracleasm kernel module

[root@rac1 dev]# oracleasm init

Creating /dev/oracleasm mount point: /dev/oracleasm

Loading module "oracleasm": oracleasm

Mounting ASMlib driver filesystem: /dev/oracleasm

Once the steps above succeed, create the ASM disks as root on node rac1 (they only need to be created on node 1)

[root@rac1 dev]# oracleasm createdisk CRS01 /dev/sdb1

Writing disk header: done

Instantiating disk: done

[root@rac1 dev]# oracleasm createdisk CRS02 /dev/sdf1

Writing disk header: done

Instantiating disk: done

[root@rac1 dev]# oracleasm createdisk DATA01 /dev/sdd1

Writing disk header: done

Instantiating disk: done

[root@rac1 dev]# oracleasm createdisk DATA02 /dev/sde1

Writing disk header: done

Instantiating disk: done

[root@rac1 dev]# oracleasm createdisk FRA01 /dev/sdc1

Writing disk header: done

Instantiating disk: done

[root@rac1 dev]#
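
The five createdisk calls follow one pattern, so the label-to-partition mapping can be kept in one place. A dry-run sketch that only prints the commands for review; the function name and the `LABEL=DEVICE` argument format are assumptions made for this example:

```shell
# Print (do not run) one "oracleasm createdisk" command per LABEL=DEVICE pair.
asm_createdisk_cmds() {
    for pair in "$@"; do
        label=${pair%%=*}     # text before the first '='
        device=${pair#*=}     # text after the first '='
        echo "oracleasm createdisk $label $device"
    done
}
```

With the mapping used above: `asm_createdisk_cmds CRS01=/dev/sdb1 CRS02=/dev/sdf1 DATA01=/dev/sdd1 DATA02=/dev/sde1 FRA01=/dev/sdc1`. Check the printed device names against the actual partitions before running anything as root, since createdisk writes a header to the device.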

After one scan, node 2 discovers these five disks

[root@rac2 software]# oracleasm scandisks

Reloading disk partitions: done

Cleaning any stale ASM disks...

Scanning system for ASM disks...

Instantiating disk "CRS01"

Instantiating disk "FRA01"

Instantiating disk "DATA01"

Instantiating disk "DATA02"

Instantiating disk "CRS02"

[root@rac2 software]#
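
To confirm that every label is visible on a node, the output of `oracleasm listdisks` (one label per line) can be compared with the expected list. A minimal sketch, with a made-up function name:

```shell
# Read "oracleasm listdisks" output on stdin and print each expected
# label (given as arguments) that is not present.
asm_missing_labels() {
    found=$(cat)
    for label in "$@"; do
        printf '%s\n' "$found" | grep -qxF -- "$label" || echo "$label"
    done
}
```

For example, `oracleasm listdisks | asm_missing_labels CRS01 CRS02 DATA01 DATA02 FRA01` prints nothing when all five disks were found.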

Confirm the SSH trust relationship again

[root@rac1 dev]# su - oracle

[oracle@rac1 ~]$ ssh rac2 date

Tue Jun 20 01:08:08 CST 2023

[oracle@rac1 ~]$ ssh rac2-priv date

The authenticity of host 'rac2-priv (192.168.186.129)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2-priv,192.168.186.129' (RSA) to the list of known hosts.

Tue Jun 20 01:08:19 CST 2023

[oracle@rac1 ~]$ ssh rac1-priv date

The authenticity of host 'rac1-priv (192.168.186.128)' can't be established.

RSA key fingerprint is 67:81:77:3b:06:ba:b8:12:79:9c:6f:1c:93:e8:e5:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1-priv,192.168.186.128' (RSA) to the list of known hosts.

Tue Jun 20 01:08:29 CST 2023

[oracle@rac1 ~]$
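
Each host name has to be accepted once so that later logins never stop at a prompt. A sketch that checks this for a list of names without hanging, assuming the standard OpenSSH client; the function name is invented for this example:

```shell
# Try a non-interactive login to every host given and report the result.
# Returns non-zero if any login failed.
check_ssh_equiv() {
    rc=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password
        # or for host key confirmation.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            rc=1
        fi
    done
    return $rc
}
```

Run it as both grid and oracle on each node, for example `check_ssh_equiv rac1 rac2 rac1-priv rac2-priv`. Any name reported as FAILED still needs a manual `ssh <name> date` to accept the host key, or a fix to the authorized_keys setup.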

Pre-installation checks

Install the cvuqdisk package on both nodes; it is in the rpm directory of the grid installation package

[root@rac1 dev]# cd /software/grid/rpm/

[root@rac1 rpm]# ls

cvuqdisk-1.0.9-1.rpm

[root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.7-1.rpm

error: open of cvuqdisk-1.0.7-1.rpm failed: No such file or directory

[root@rac1 rpm]# ls

cvuqdisk-1.0.9-1.rpm

[root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

[root@rac1 rpm]# scp cvuqdisk-1.0.9-1.rpm rac2:/software

root@rac2's password:

Permission denied, please try again.

root@rac2's password:

cvuqdisk-1.0.9-1.rpm 100% 8288 8.1KB/s 00:00

[root@rac1 rpm]#

[root@rac2 software]# cd /software/

[root@rac2 software]# ls

cvuqdisk-1.0.9-1.rpm

kmod-oracleasm-2.0.6.rh1-2.el6.x86_64.rpm

oracleasmlib-2.0.4-1.el6.x86_64.rpm

oracleasm-support-2.1.8-1.el6.x86_64.rpm

pdksh-5.2.14-30.x86_64.rpm

[root@rac2 software]# rpm -ivh cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

Using default group oinstall to install package

1:cvuqdisk ########################################### [100%]

[root@rac2 software]#

Set the environment variable on node 1

[root@rac1 rpm]# export DISPLAY=192.168.182.1:0.0

[root@rac1 rpm]# su - grid

[grid@rac1 ~]$ export DISPLAY=192.168.182.1:0.0

[grid@rac1 ~]$ cd /software/grid/

[grid@rac1 grid]$ ls

install readme.html response rpm runcluvfy.sh runInstaller sshsetup stage welcome.html

[grid@rac1 grid]$ ./runInstaller

Starting Oracle Universal Installer...

Checking Temp space: must be greater than 120 MB. Actual 9630 MB Passed

Checking swap space: must be greater than 150 MB. Actual 8095 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2023-06-20_01-25-47AM. Please wait ...[grid@rac1 grid]$

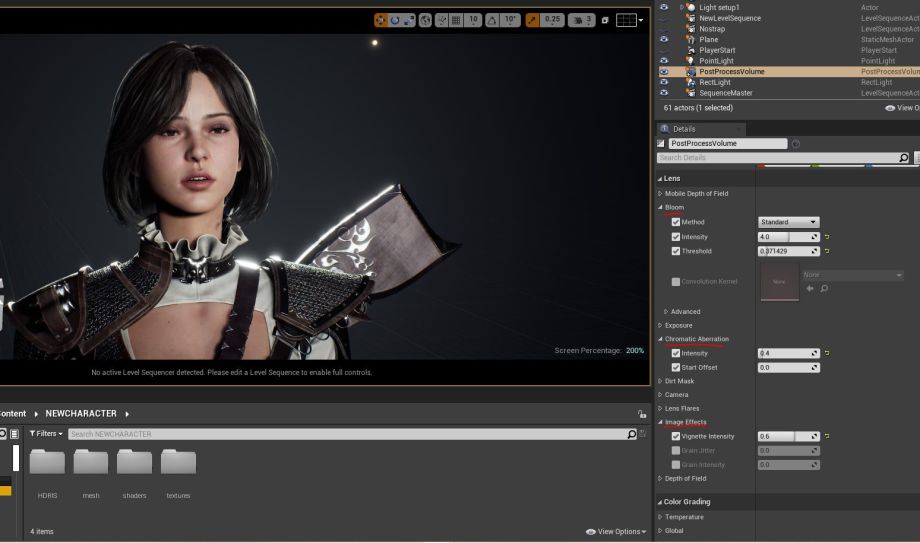

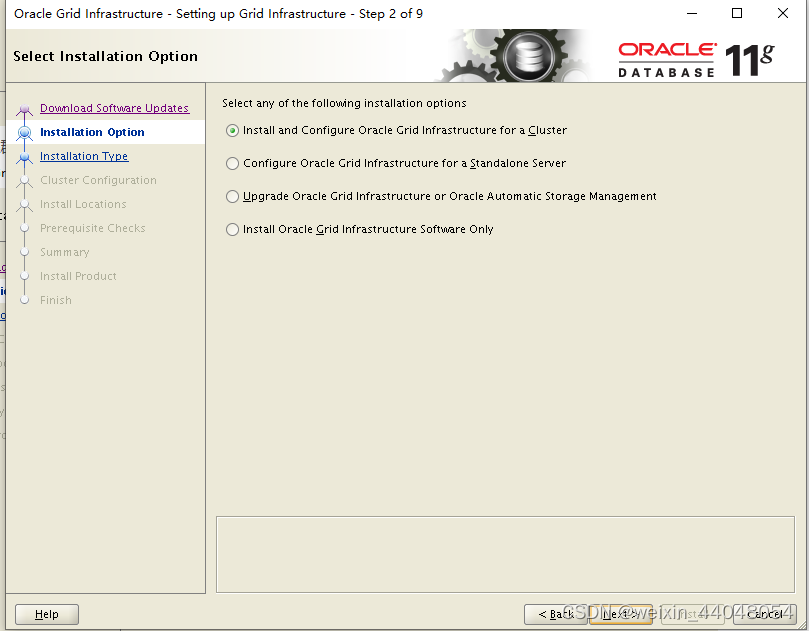

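Switch to the grid user and run the script to start the graphical installer

Select Skip software updates, then click Next

Choose to install and configure Grid Infrastructure for a cluster, then click Next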

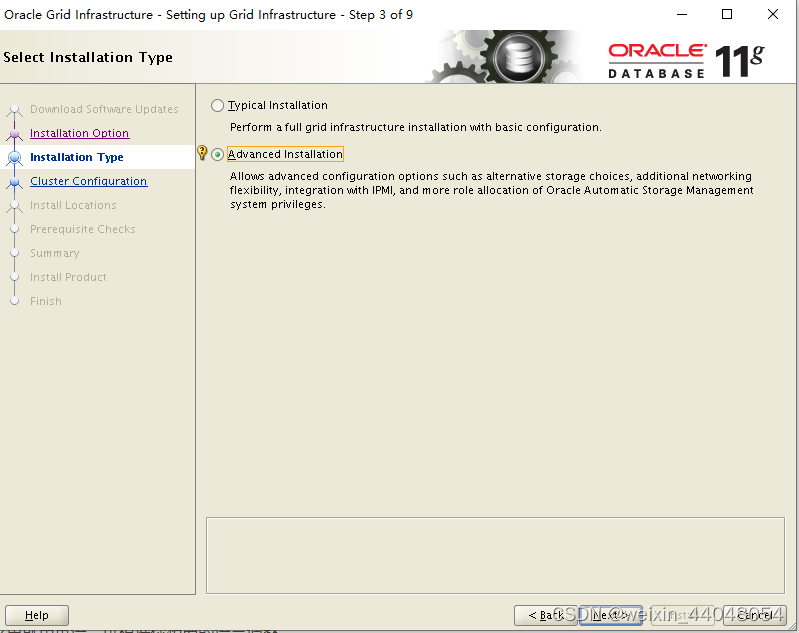

Select Advanced Installation, then click Next

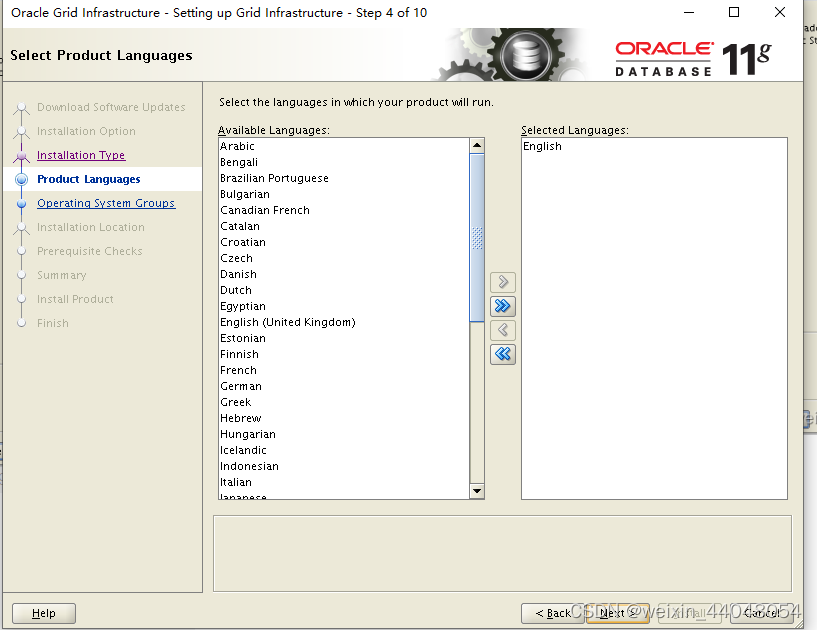

Select the language. English is used here; adjust to whichever language you want

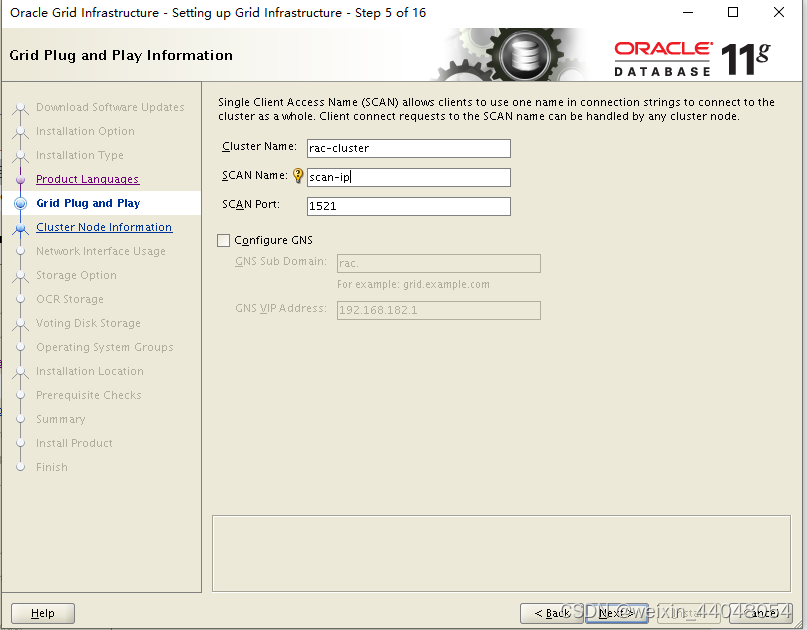

Choose your own cluster name here. The SCAN name must match the scan-ip alias in /etc/hosts. Keep the default port, leave GNS unchecked, then click Next
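
Before typing the SCAN name into the installer, it is worth confirming that the same alias resolves on both nodes. A small sketch that looks a name up directly in a hosts file; the function name is hypothetical, and `getent hosts <name>` is a simpler alternative when only the live resolver matters:

```shell
# Print the IP address of the first hosts-file entry that lists the name
# as a host name or alias. $2 is the file to search (default /etc/hosts).
hosts_ip_for() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[ \t]*#/ { next }    # skip comment lines such as "#scan-ip"
        { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
    ' "$file"
}
```

With the hosts file from the beginning of this article, `hosts_ip_for scan-ip` should print 192.168.186.120 on both rac1 and rac2. An empty result means the alias is missing.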

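The screen lists only the first node, rac1. Click "Add" to add the second node, rac2, filling in the names as set in /etc/hosts, then click Next. If SSH trust between node 1 and node 2 is not configured correctly, the next step reports an error

This step is detected automatically by the system and needs no changes; click Next

For storage, select ASM, then click Next

Create an ASM disk group, name it ORC, select the three disks below, then click Next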