Author: 我是咖啡哥 Source: https://tidb.net/blog/f614b200

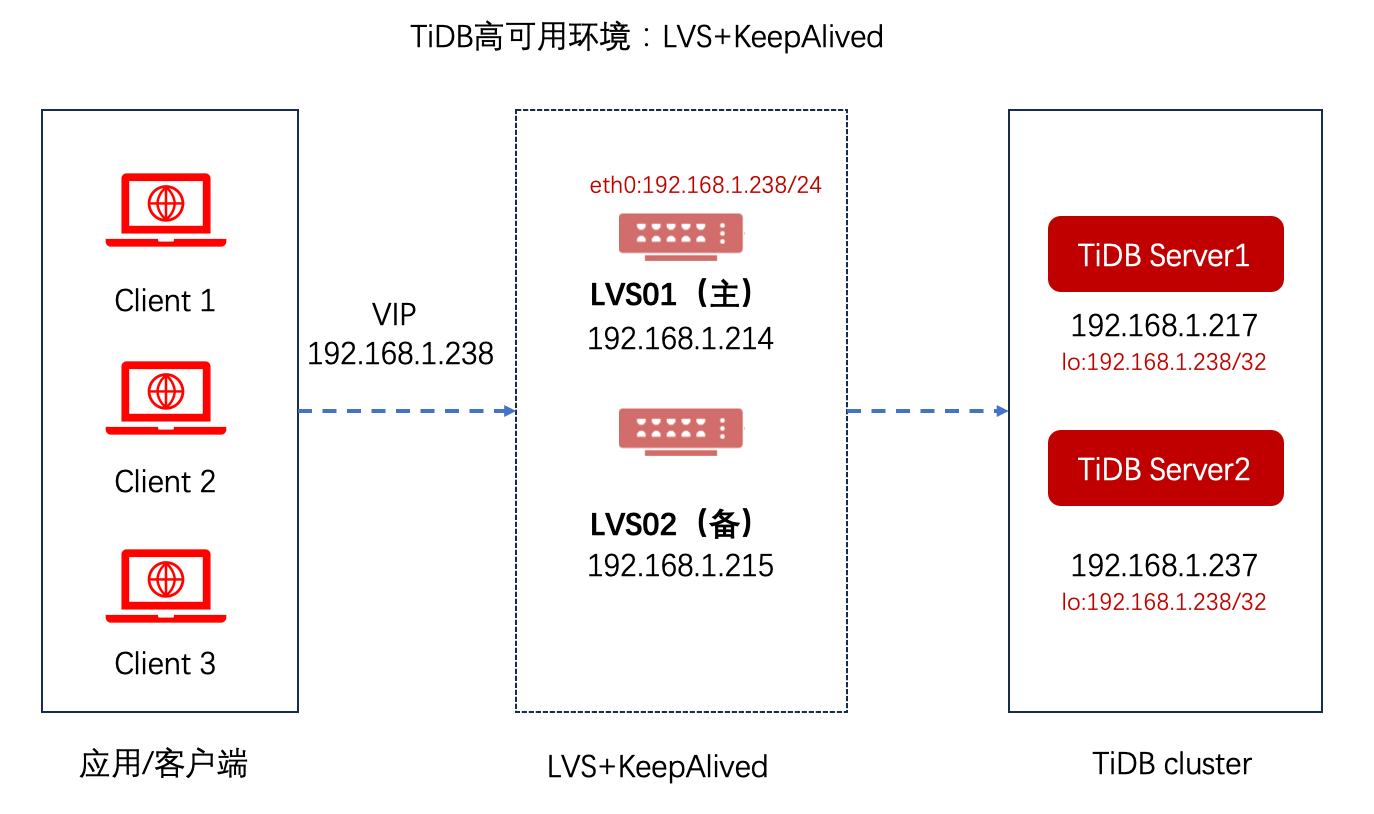

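Yesterday I published an article on building a load-balanced TiDB environment with HAProxy + Keepalived (KP). Today we repeat the experiment with LVS + Keepalived.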

Environment

TiDB version: v7.1.0

HAProxy version: 2.6.2

OS: CentOS 7.9

VIP: 192.168.1.238

TiDB Server IPs: 192.168.1.217, 192.168.1.237

LVS + Keepalived nodes: 192.168.1.214, 192.168.1.215

1. Install LVS (ipvsadm) and Keepalived on both LVS nodes

sudo -i

yum -y install keepalived ipvsadm

yum -y install 'popt*' libnl-devel gcc

2. On each TiDB server, bind the LVS VIP to the lo interface

Note that the VIP is added with a /32 mask, not /24.

/sbin/ip addr add 192.168.1.238/32 dev lo

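Also on each TiDB server, edit /etc/rc.local (vi /etc/rc.local) and add the following lines so that the VIP is bound again automatically after the host reboots. The two arp_* settings keep the TiDB server from answering ARP requests for the VIP or using it as the source address in its own ARP requests, so that only the active LVS node owns the VIP on the LAN, as DR mode requires.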

# start_tidb_vip

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

/sbin/ip addr add 192.168.1.238/32 dev lo
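The rc.local lines above fail with "File exists" if the VIP is already bound when they run a second time. A minimal sketch of an idempotent variant follows. The helper name `real_server_cmds` is hypothetical and not part of the original setup; it only prints the commands, so nothing changes until the output is piped to `sh` as root. `sysctl -w` is used here as an equivalent of writing to /proc/sys.

```shell
#!/bin/sh
# Sketch only: print the commands a DR-mode real server (TiDB server) needs.
# real_server_cmds is a hypothetical helper name, not from the original article.
VIP="${VIP:-192.168.1.238}"

real_server_cmds() {
    vip="$1"
    # Same effect as the two echo lines written to /proc/sys in rc.local.
    echo "sysctl -w net.ipv4.conf.all.arp_ignore=1"
    echo "sysctl -w net.ipv4.conf.all.arp_announce=2"
    # Add the VIP to lo only when it is not already present.
    echo "ip -4 addr show dev lo | grep -qF 'inet ${vip}/32' || ip addr add ${vip}/32 dev lo"
}

# Dry run: show what would be executed.
real_server_cmds "$VIP"
```

To apply it, something like `sh this_script.sh | sudo sh` would work. Reviewing the printed commands first is the point of the dry-run design.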

3. Configure the primary Keepalived node

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs_tidb_217

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 238

priority 100

advert_int 1

nopreempt

virtual_ipaddress {

192.168.1.238/24 dev eth0

}

}

virtual_server 192.168.1.238 4000 {

delay_loop 1

lb_algo wlc

lb_kind DR

persistence_timeout 0

protocol TCP

real_server 192.168.1.217 4000 {

weight 1

TCP_CHECK {

connect_timeout 2

nb_get_retry 3

delay_before_retry 2

connect_port 4000

}

}

real_server 192.168.1.237 4000 {

weight 1

TCP_CHECK {

connect_timeout 2

nb_get_retry 3

delay_before_retry 2

connect_port 4000

}

}

}

4. Configure the backup Keepalived node

vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lvs_tidb_237

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 238

priority 90

advert_int 1

nopreempt

virtual_ipaddress {

192.168.1.238/24 dev eth0

}

}

virtual_server 192.168.1.238 4000 {

delay_loop 1

lb_algo wlc

lb_kind DR

persistence_timeout 0

protocol TCP

real_server 192.168.1.217 4000 {

weight 1

TCP_CHECK {

connect_timeout 2

nb_get_retry 3

delay_before_retry 2

connect_port 4000

}

}

real_server 192.168.1.237 4000 {

weight 1

TCP_CHECK {

connect_timeout 2

nb_get_retry 3

delay_before_retry 2

connect_port 4000

}

}

}

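Notes:

(1) Within one cluster, the two configuration files differ only in router_id and priority; everything else is identical.

(2) The LVS VIP does not need to be added by hand: Keepalived brings it up when it starts. If you do add it manually, you must also run arping yourself to announce it.

(3) LVS + Keepalived clusters on the same network segment must use different virtual_router_id values, so change virtual_router_id by hand when you deploy more than one LVS cluster.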
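Since only router_id and priority differ between the two nodes, both files can be generated from one template. The sketch below is one way to do that. `render_keepalived_conf` is a hypothetical helper name, the values are copied from the configurations above, and the indentation is added only for readability.

```shell
#!/bin/sh
# Sketch only: render keepalived.conf for one LVS node.
# Usage: render_keepalived_conf ROUTER_ID PRIORITY   (hypothetical helper)
render_keepalived_conf() {
    router_id="$1"
    priority="$2"
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 238
    priority ${priority}
    advert_int 1
    nopreempt
    virtual_ipaddress {
        192.168.1.238/24 dev eth0
    }
}
virtual_server 192.168.1.238 4000 {
    delay_loop 1
    lb_algo wlc
    lb_kind DR
    persistence_timeout 0
    protocol TCP
EOF
    # One real_server block per TiDB server.
    for rs in 192.168.1.217 192.168.1.237; do
        cat <<EOF
    real_server ${rs} 4000 {
        weight 1
        TCP_CHECK {
            connect_timeout 2
            nb_get_retry 3
            delay_before_retry 2
            connect_port 4000
        }
    }
EOF
    done
    echo "}"
}

# Print the primary node's file; the backup would use: lvs_tidb_237 90
render_keepalived_conf lvs_tidb_217 100
```

Redirecting the output to /etc/keepalived/keepalived.conf on each node keeps the two files from drifting apart.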

5. Start LVS and Keepalived

ipvsadm --save > /etc/sysconfig/ipvsadm

systemctl start ipvsadm

systemctl start keepalived.service

6. Test LVS: from a server that is neither an LVS node nor a TiDB server, connect through the LVS VIP

mysql -uroot -p -h192.168.1.238 -P4000

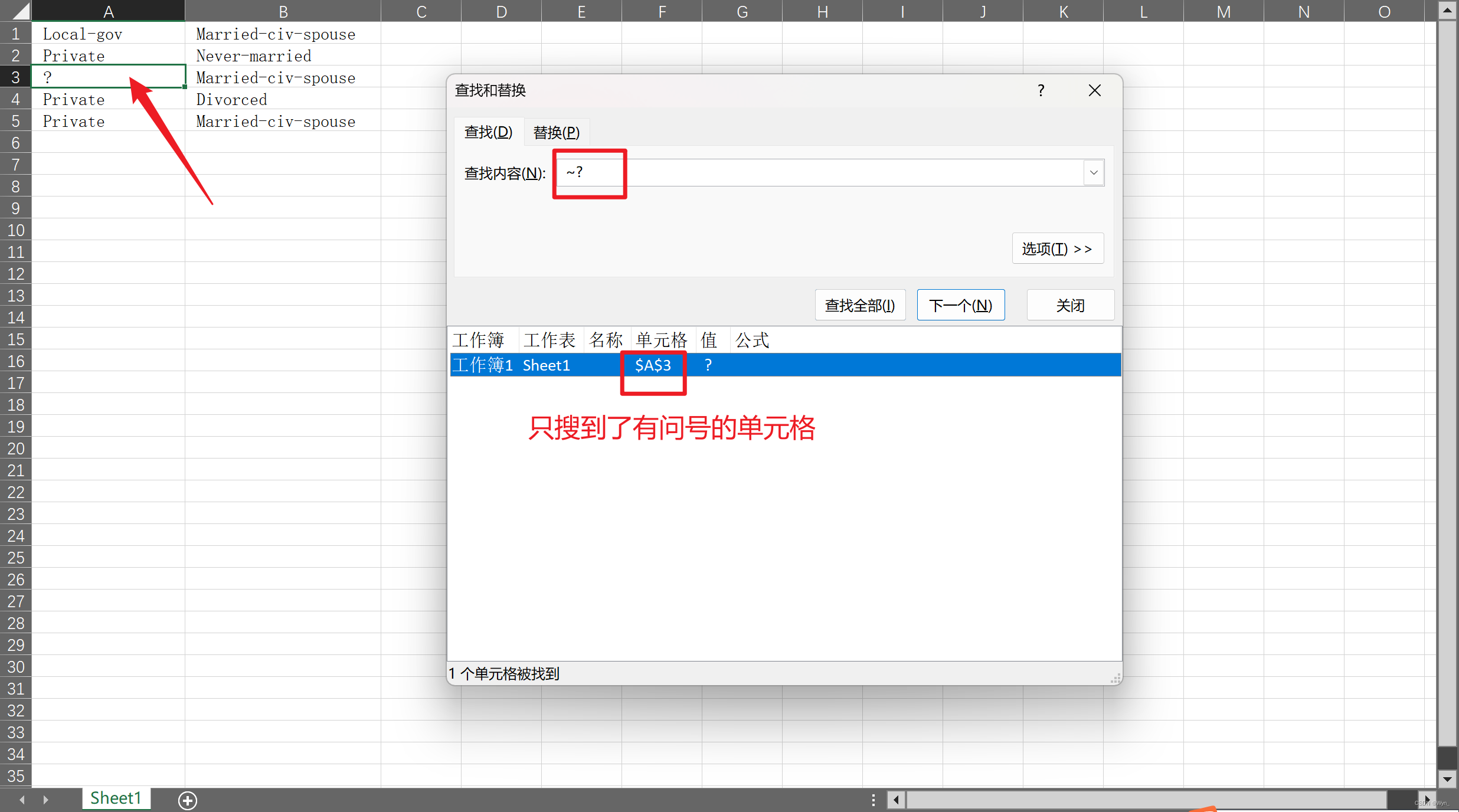

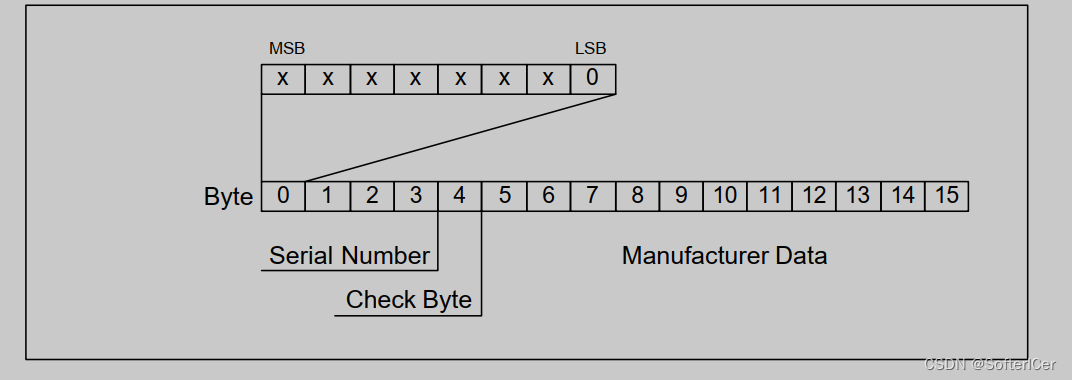

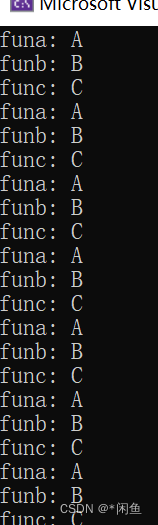

After connecting, LVS shows the following:

sudo ipvsadm -Ln

After disconnecting, it shows the following:

sudo ipvsadm -Ln
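When comparing the table before and after a connection, the columns of interest are ActiveConn and InActConn for each real server. A small sketch that extracts just those columns is shown below. `conn_summary` is a hypothetical helper, and it assumes the usual `ipvsadm -Ln` layout in which real-server rows start with `->`.

```shell
#!/bin/sh
# Sketch only: summarise per-real-server connection counts from `ipvsadm -Ln`.
# conn_summary is a hypothetical helper; it reads ipvsadm output on stdin.
conn_summary() {
    # Real-server rows start with "->"; the header row has a non-numeric Weight column.
    awk '$1 == "->" && $4 ~ /^[0-9]+$/ {
        printf "%s active=%s inactive=%s\n", $2, $5, $6
    }'
}

# Only query the kernel table when ipvsadm is installed (it needs root).
if command -v ipvsadm >/dev/null 2>&1; then
    ipvsadm -Ln 2>/dev/null | conn_summary
fi
```

Run it as root on the LVS node that currently holds the VIP, once with the mysql session open and once after closing it.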

7. Test LVS high availability

Restart Keepalived on the primary LVS node and verify that the VIP moves to the backup:

systemctl restart keepalived.service

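Once that works, run the same command on the backup LVS node and verify that the VIP moves back to the primary (with nopreempt set, it does not fail back on its own):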

systemctl restart keepalived.service
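To see where the VIP sits after each restart, a check along the following lines can be run on both LVS nodes. This is a sketch: `holds_vip` is a hypothetical helper, and the interface name eth0 is taken from the configuration above.

```shell
#!/bin/sh
# Sketch only: report whether this LVS node currently holds the VIP.
VIP="${VIP:-192.168.1.238}"
IFACE="${IFACE:-eth0}"

# holds_vip VIP: reads `ip -4 -o addr show` output on stdin (hypothetical helper).
# The trailing "/" keeps 192.168.1.23 from matching 192.168.1.238.
holds_vip() {
    grep -qF "inet $1/"
}

if ip -4 -o addr show dev "$IFACE" 2>/dev/null | holds_vip "$VIP"; then
    echo "$(uname -n): holds VIP $VIP"
else
    echo "$(uname -n): does not hold VIP $VIP"
fi
```

Exactly one of the two nodes should report that it holds the VIP at any time. If both or neither do, check that VRRP advertisements can pass between the nodes.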

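8. Request sudo privileges for the tidb user

If you want to manage LVS and Keepalived as the tidb user, that user needs the corresponding sudo privileges.

(1) sudo privileges for the tidb user on the LVS nodes

sudo ipvsadm

sudo systemctl status/start/stop/restart/reload keepalived.service

Nodes: LVS + Keepalived hosts 192.168.1.214, 192.168.1.215

(2) sudo privileges for the tidb user on the TiDB server nodes

Full access to sudo /sbin/ip and sudo /sbin/arping

Nodes: TiDB Server hosts 192.168.1.217, 192.168.1.237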
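The privileges above could be written as sudoers entries roughly as follows. This is a sketch under assumptions: the paths /sbin/ipvsadm and /usr/bin/systemctl are guesses for CentOS 7 (only /sbin/ip and /sbin/arping come from the text above), and the helper names are hypothetical. Confirm the paths with `command -v` on your hosts.

```shell
#!/bin/sh
# Sketch only: print sudoers entries for the tidb user.
# /sbin/ipvsadm and /usr/bin/systemctl are assumed paths; verify before use.

# Entries for the LVS + Keepalived nodes.
lvs_sudoers() {
    echo "tidb ALL=(root) /sbin/ipvsadm"
    for action in status start stop restart reload; do
        echo "tidb ALL=(root) /usr/bin/systemctl ${action} keepalived.service"
    done
}

# Entries for the TiDB server nodes (any arguments allowed).
tidb_server_sudoers() {
    echo "tidb ALL=(root) /sbin/ip, /sbin/arping"
}

echo "# LVS nodes"
lvs_sudoers
echo "# TiDB server nodes"
tidb_server_sudoers
```

A common way to install such entries is a file under /etc/sudoers.d/ that is first checked with `visudo -cf FILE`, since a syntax error in sudoers can lock out sudo entirely.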