Installing DataX on Windows 10 (JMzz's blog, CSDN)

DataX/userGuid.md at master · alibaba/DataX · GitHub

Prerequisites:

1. JDK (1.8 or later; 1.8 recommended)

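2. ① Python (Python 2.7.x recommended)

② Python 3.x.x: download the replacement package below

With Python 3, the three files under the DataX bin directory must be replaced.

Replacement files: Baidu Netdisk, extraction code: re42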

3. Apache Maven 3.x (only needed to compile DataX from source; not needed for the prebuilt package)
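You can check both prerequisites with one script before going further. This is a minimal sketch and is not part of DataX. The helper names `java_feature_version` and `jdk_ok` are my own, and the script assumes `java` is on PATH. It accepts both the old `1.8.0_xxx` numbering and the newer `17.0.x` style.

```python
import re
import subprocess
import sys


def java_feature_version(version_output):
    """Extract the Java feature release (8, 11, 17, ...) from `java -version` text."""
    match = re.search(r'version "([^"]+)"', version_output)
    if match is None:
        raise ValueError("no version string found in: %r" % version_output)
    parts = match.group(1).split(".")
    major = int(re.match(r"\d+", parts[0]).group())
    if major == 1 and len(parts) > 1:
        # Legacy numbering used up to Java 8: "1.8.0_172" means Java 8.
        return int(re.match(r"\d+", parts[1]).group())
    return major


def jdk_ok(version_output):
    # The prerequisite asks for JDK 1.8 (Java 8) or later.
    return java_feature_version(version_output) >= 8


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    try:
        # `java -version` writes its banner to stderr, not stdout.
        banner = subprocess.run(["java", "-version"], capture_output=True, text=True).stderr
    except FileNotFoundError:
        print("java not found on PATH")
    else:
        print("JDK OK" if jdk_ok(banner) else "JDK older than 1.8")
```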

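Installing Python is not covered here; download and install it yourself.

1. Download and extract

Note: I used Python 3.0.X and did not replace any Python files.

Install directory:

E:\DATAX\datax

Run all scripts from E:\DATAX\datax\bin.

Open cmd and run: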

e:

cd E:\DATAX\datax\bin

2. Self-check script

python datax.py ../job/job.json
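You may prefer to launch jobs from a script instead of typing them in cmd. In that case the working directory matters, because `../job/job.json` is relative to `bin`. This is a minimal sketch that assumes DataX is unpacked in the directory you pass as the argument. `datax_command` and `run_datax` are hypothetical helper names.

```python
import os
import subprocess
import sys


def datax_command(job_file):
    # Same as typing "python datax.py ../job/job.json" inside the bin directory,
    # using whichever Python interpreter runs this script.
    return [sys.executable, "datax.py", job_file]


def run_datax(datax_home, job_file="../job/job.json"):
    bin_dir = os.path.join(datax_home, "bin")
    # cwd=bin_dir makes the relative path ../job/... resolve exactly as it
    # does after "cd E:\DATAX\datax\bin" in cmd.
    completed = subprocess.run(datax_command(job_file), cwd=bin_dir)
    return completed.returncode


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python run_selfcheck.py E:\DATAX\datax
    sys.exit(run_datax(sys.argv[1]))
```

Passing `E:\DATAX\datax` as the argument is equivalent to the `cd` and `python datax.py ../job/job.json` steps above. The script exits with the return code of the `datax.py` process.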

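3. Practice example: read data from a stream and print it to the console

Step 1: Create the job configuration file (JSON format)

You can print a configuration template with: python datax.py -r {YOUR_READER} -w {YOUR_WRITER}

For example: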

python datax.py -r streamreader -w streamwriter

Output:

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.

Please refer to the streamreader document:
https://github.com/alibaba/DataX/blob/master/streamreader/doc/streamreader.md

Please refer to the streamwriter document:
https://github.com/alibaba/DataX/blob/master/streamwriter/doc/streamwriter.md

Please save the following configuration as a json file and use
python {DATAX_HOME}/bin/datax.py {JSON_FILE_NAME}.json
to run the job.

{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "streamreader",
                    "parameter": {
                        "column": [],
                        "sliceRecordCount": ""
                    }
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {
                        "encoding": "",
                        "print": true
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": ""
            }
        }
    }
}
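The template leaves several fields empty on purpose: `column`, `sliceRecordCount`, `encoding` and `channel`. The sketch below walks a job config and lists every value that is still an empty string or an empty list, so you can spot a forgotten field before running. `find_placeholders` is a hypothetical name. Which empty fields are actually required depends on each plugin's documentation.

```python
# The template printed above, as a Python dict (true -> True).
TEMPLATE = {
    "job": {
        "content": [{
            "reader": {"name": "streamreader",
                       "parameter": {"column": [], "sliceRecordCount": ""}},
            "writer": {"name": "streamwriter",
                       "parameter": {"encoding": "", "print": True}},
        }],
        "setting": {"speed": {"channel": ""}},
    }
}


def find_placeholders(node, path="$"):
    """Yield JSON-path-like strings for values that are still "" or []."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_placeholders(value, f"{path}.{key}")
    elif isinstance(node, list):
        if not node:
            yield path
        for index, item in enumerate(node):
            yield from find_placeholders(item, f"{path}[{index}]")
    elif isinstance(node, str) and node == "":
        yield path


if __name__ == "__main__":
    for p in find_placeholders(TEMPLATE):
        print("still empty:", p)
```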

Filling in the template gives the following JSON:

stream2stream.json

{
    "job": {
        "content": [
            {
                "reader": {
                    "name": "streamreader",
                    "parameter": {
                        "sliceRecordCount": 10,
                        "column": [
                            {
                                "type": "long",
                                "value": "10"
                            },
                            {
                                "type": "string",
                                "value": "hello,你好,世界-DataX"
                            }
                        ]
                    }
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {
                        "encoding": "UTF-8",
                        "print": true
                    }
                }
            }
        ],
        "setting": {
            "speed": {
                "channel": 5
            }
        }
    }
}
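You can also generate the same job from code, which avoids hand-editing mistakes. This is a minimal sketch. `stream2stream_job`, `save_job` and `load_job` are hypothetical names. The sketch assumes DataX reads the job file as UTF-8, so it writes the file explicitly in UTF-8 to keep `你好` and `世界` intact.

```python
import json
import os
import sys
import tempfile
from pathlib import Path


def stream2stream_job(slice_record_count=10, channel=5):
    """Build the same structure as stream2stream.json."""
    return {
        "job": {
            "content": [{
                "reader": {
                    "name": "streamreader",
                    "parameter": {
                        "sliceRecordCount": slice_record_count,
                        "column": [
                            {"type": "long", "value": "10"},
                            {"type": "string", "value": "hello,你好,世界-DataX"},
                        ],
                    },
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {"encoding": "UTF-8", "print": True},
                },
            }],
            "setting": {"speed": {"channel": channel}},
        }
    }


def save_job(job, path):
    path = Path(path)
    # ensure_ascii=False keeps the Chinese text readable in the file;
    # encoding="utf-8" avoids the Windows default code page (often GBK).
    path.write_text(json.dumps(job, ensure_ascii=False, indent=4), encoding="utf-8")
    return path


def load_job(path):
    return json.loads(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    default = os.path.join(tempfile.gettempdir(), "stream2stream.json")
    target = sys.argv[1] if len(sys.argv) > 1 else default
    print("wrote", save_job(stream2stream_job(), target))
```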

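Step 2: Start DataX

The generic form from the official guide is:

$ cd {YOUR_DATAX_DIR_BIN}

$ python datax.py ./stream2stream.json

Since I saved the file in the job directory, I ran:

python datax.py ../job/stream2stream.json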

When the sync finishes, the log looks like this:

DataX (DATAX-OPENSOURCE-3.0), From Alibaba !
Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.
2023-03-16 13:52:50.773 [main] INFO MessageSource - JVM TimeZone: GMT+08:00, Locale: zh_CN
2023-03-16 13:52:50.776 [main] INFO MessageSource - use Locale: zh_CN timeZone: sun.util.calendar.ZoneInfo[id="GMT+08:00",offset=28800000,dstSavings=0,useDaylight=false,transitions=0,lastRule=null]
2023-03-16 13:52:50.786 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl
2023-03-16 13:52:50.791 [main] INFO Engine - the machine info =>
osInfo: Oracle Corporation 1.8 25.172-b11
jvmInfo: Windows 10 amd64 10.0
cpu num: 8
totalPhysicalMemory: -0.00G
freePhysicalMemory: -0.00G
maxFileDescriptorCount: -1
currentOpenFileDescriptorCount: -1
GC Names [PS MarkSweep, PS Scavenge]
MEMORY_NAME | allocation_size | init_size
PS Eden Space | 256.00MB | 256.00MB
Code Cache | 240.00MB | 2.44MB
Compressed Class Space | 1,024.00MB | 0.00MB
PS Survivor Space | 42.50MB | 42.50MB
PS Old Gen | 683.00MB | 683.00MB
Metaspace | -0.00MB | 0.00MB
2023-03-16 13:52:50.815 [main] INFO Engine -
{
    "content":[
        {
            "reader":{
                "name":"streamreader",
                "parameter":{
                    "column":[
                        {
                            "type":"long",
                            "value":"10"
                        },
                        {
                            "type":"string",
                            "value":"hello,你好,世界-DataX"
                        }
                    ],
                    "sliceRecordCount":10
                }
            },
            "writer":{
                "name":"streamwriter",
                "parameter":{
                    "encoding":"UTF-8",
                    "print":true
                }
            }
        }
    ],
    "setting":{
        "speed":{
            "channel":5
        }
    }
}
2023-03-16 13:52:50.833 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
2023-03-16 13:52:50.835 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2023-03-16 13:52:50.835 [main] INFO JobContainer - DataX jobContainer starts job.
2023-03-16 13:52:50.837 [main] INFO JobContainer - Set jobId = 0
2023-03-16 13:52:50.855 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
2023-03-16 13:52:50.856 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do prepare work .
2023-03-16 13:52:50.857 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do prepare work .
2023-03-16 13:52:50.857 [job-0] INFO JobContainer - jobContainer starts to do split ...
2023-03-16 13:52:50.858 [job-0] INFO JobContainer - Job set Channel-Number to 5 channels.
2023-03-16 13:52:50.859 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] splits to [5] tasks.
2023-03-16 13:52:50.859 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] splits to [5] tasks.
2023-03-16 13:52:50.880 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
2023-03-16 13:52:50.889 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2023-03-16 13:52:50.892 [job-0] INFO JobContainer - Running by standalone Mode.
2023-03-16 13:52:50.900 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [5] channels for [5] tasks.
2023-03-16 13:52:50.905 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.
2023-03-16 13:52:50.906 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
2023-03-16 13:52:50.916 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[2] attemptCount[1] is started
2023-03-16 13:52:50.919 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2023-03-16 13:52:50.923 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[3] attemptCount[1] is started
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
2023-03-16 13:52:50.929 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] attemptCount[1] is started
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
2023-03-16 13:52:50.933 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[4] attemptCount[1] is started
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
10 hello,你好,世界-DataX
2023-03-16 13:52:51.049 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[130]ms
2023-03-16 13:52:51.049 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] is successed, used[120]ms
2023-03-16 13:52:51.050 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[2] is successed, used[135]ms
2023-03-16 13:52:51.052 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[3] is successed, used[129]ms
2023-03-16 13:52:51.052 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[4] is successed, used[119]ms
2023-03-16 13:52:51.053 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.
2023-03-16 13:53:00.918 [job-0] INFO StandAloneJobContainerCommunicator - Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.008s | Percentage 100.00%
2023-03-16 13:53:00.919 [job-0] INFO AbstractScheduler - Scheduler accomplished all tasks.
2023-03-16 13:53:00.923 [job-0] INFO JobContainer - DataX Writer.Job [streamwriter] do post work.
2023-03-16 13:53:00.923 [job-0] INFO JobContainer - DataX Reader.Job [streamreader] do post work.
2023-03-16 13:53:00.923 [job-0] INFO JobContainer - DataX jobId [0] completed successfully.
2023-03-16 13:53:00.924 [job-0] INFO HookInvoker - No hook invoked, because base dir not exists or is a file: E:\DATAX\datax\hook
2023-03-16 13:53:00.925 [job-0] INFO JobContainer -
[total cpu info] =>
averageCpu | maxDeltaCpu | minDeltaCpu
-1.00% | -1.00% | -1.00%
[total gc info] =>
NAME | totalGCCount | maxDeltaGCCount | minDeltaGCCount | totalGCTime | maxDeltaGCTime | minDeltaGCTime
PS MarkSweep | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
PS Scavenge | 0 | 0 | 0 | 0.000s | 0.000s | 0.000s
2023-03-16 13:53:00.925 [job-0] INFO JobContainer - PerfTrace not enable!
2023-03-16 13:53:00.926 [job-0] INFO StandAloneJobContainerCommunicator - Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s | All Task WaitReaderTime 0.008s | Percentage 100.00%
2023-03-16 13:53:00.927 [job-0] INFO JobContainer -
任务启动时刻 : 2023-03-16 13:52:50
任务结束时刻 : 2023-03-16 13:53:00
任务总计耗时 : 10s
任务平均流量 : 95B/s
记录写入速度 : 5rec/s
读出记录总数 : 50
读写失败总数 : 0
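The numbers in the log follow from the config. `channel` 5 gave 5 tasks, and each task printed `sliceRecordCount` = 10 rows. That makes 50 records read (读出记录总数, total records read) and 0 failures (读写失败总数, total read/write failures).

The sketch below parses the English summary line and checks it against the expected count. The regular expression was written against the log line shown above. It is an assumption about that line's format, not a documented DataX interface. `parse_summary`, `expected_stream_records` and `job_looks_ok` are hypothetical names.

```python
import re

SUMMARY_RE = re.compile(
    r"Total (?P<records>\d+) records, (?P<bytes>\d+) bytes"
    r".*?Error (?P<error_records>\d+) records, (?P<error_bytes>\d+) bytes"
)


def parse_summary(line):
    """Return the counters from a 'Total ... | Error ...' log line, or None."""
    match = SUMMARY_RE.search(line)
    if match is None:
        return None
    return {name: int(value) for name, value in match.groupdict().items()}


def expected_stream_records(slice_record_count, channel):
    # In this example each of the `channel` tasks emits sliceRecordCount rows.
    return slice_record_count * channel


def job_looks_ok(summary, expected_records):
    return summary["records"] == expected_records and summary["error_records"] == 0


if __name__ == "__main__":
    line = ("Total 50 records, 950 bytes | Speed 95B/s, 5 records/s | "
            "Error 0 records, 0 bytes | All Task WaitWriterTime 0.000s")
    summary = parse_summary(line)
    print(summary, job_looks_ok(summary, expected_stream_records(10, 5)))
```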

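4. Real-world configuration

Only the MySQL configurations were tested here; the other plugins still need further study.

For the configuration format of each plugin, see https://github.com/alibaba/DataX/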

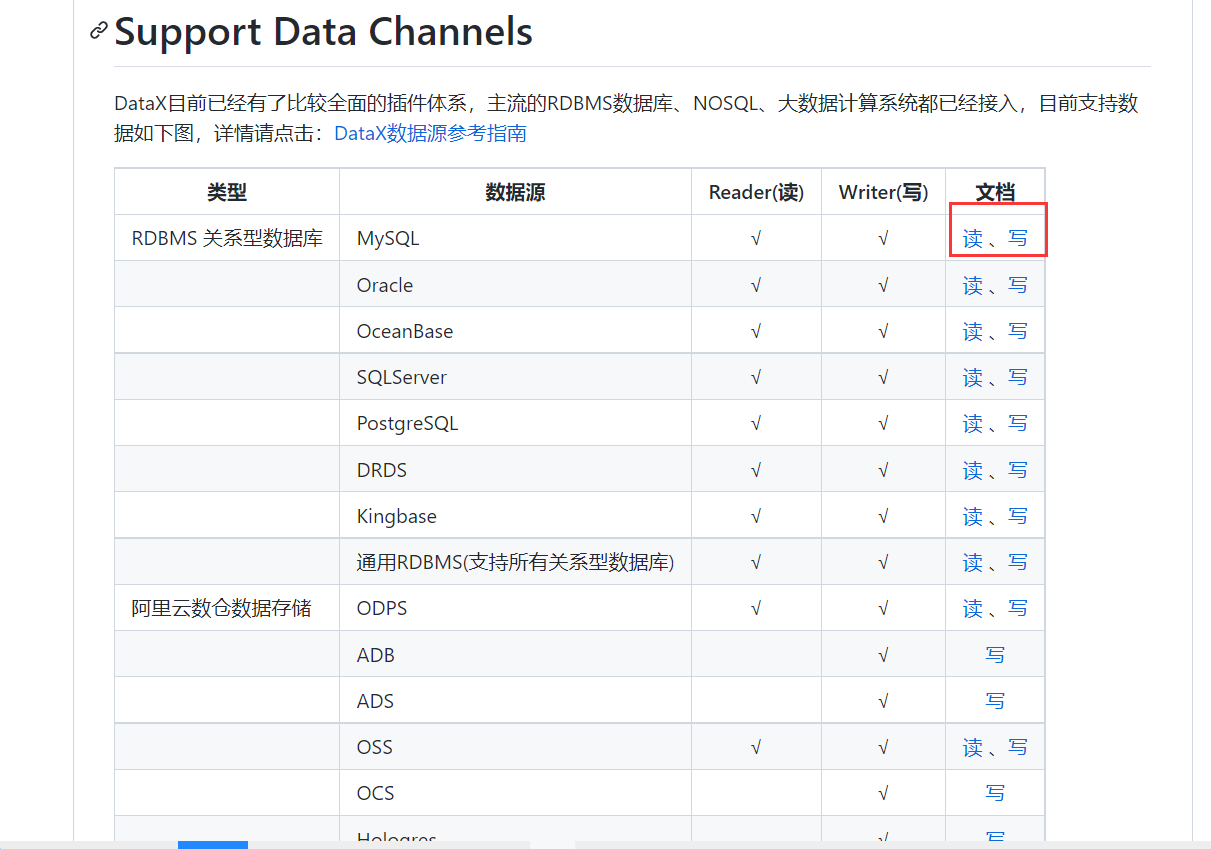

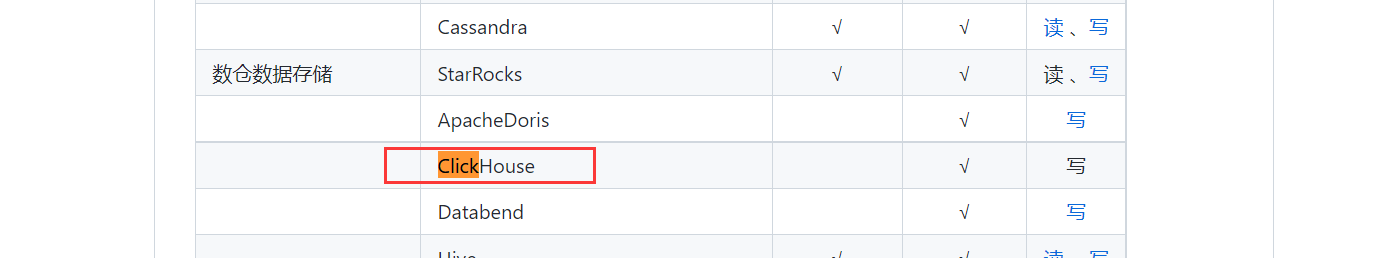

Since I am learning ClickHouse at the same time, note that the plugin list shows ClickHouse is supported as a write target.

1. mysqlreader

DataX/mysqlreader/doc/mysqlreader.md at master · alibaba/DataX · GitHub

The table + column mode and the querySql mode conflict with each other; use only one of them.
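According to the official mysqlreader doc, `table` and `column` are ignored once `querySql` is set. Mixing the two modes therefore silently drops part of the config. A stricter pre-flight check can reject the mix outright. `reader_mode` below is a hypothetical helper, not something DataX ships.

```python
def reader_mode(parameter):
    """Classify a mysqlreader `parameter` block as 'table' or 'querySql' mode.

    Raises ValueError when both modes or neither are configured.
    """
    connections = parameter.get("connection", [])
    has_query = any(conn.get("querySql") for conn in connections)
    has_table = any(conn.get("table") for conn in connections) or bool(parameter.get("column"))
    if has_query and has_table:
        raise ValueError("table/column and querySql are mutually exclusive")
    if not (has_query or has_table):
        raise ValueError("configure either table + column or querySql")
    return "querySql" if has_query else "table"
```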

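Key points:

jdbcUrl can list multiple addresses; they are checked in order until a usable one is found.

table can list multiple tables, but you must make sure they all share the same schema (mysqlreader does not check this). table must be placed inside the connection block.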
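One way to picture the jdbcUrl behaviour is "try each address in order and keep the first one that answers". The sketch below uses a plain TCP connect as a stand-in for whatever check DataX performs internally, so treat it as an illustration only. `host_port`, `tcp_reachable` and `first_reachable` are hypothetical names.

```python
import socket
from urllib.parse import urlsplit


def host_port(jdbc_url):
    """Return (host, port) from a URL like jdbc:mysql://172.16.0.101:3306/db?..."""
    if not jdbc_url.startswith("jdbc:"):
        raise ValueError("not a JDBC URL: " + jdbc_url)
    parts = urlsplit(jdbc_url[len("jdbc:"):])  # parse the "mysql://..." part
    return parts.hostname, parts.port or 3306  # 3306 is MySQL's default port


def tcp_reachable(host, port, timeout=2.0):
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def first_reachable(jdbc_urls, probe=tcp_reachable):
    # Check candidates in the configured order and stop at the first success.
    for url in jdbc_urls:
        if probe(*host_port(url)):
            return url
    raise ConnectionError("none of the jdbcUrl entries is reachable")
```

The `probe` parameter makes it easy to swap in a real database login check later, or a fake one in tests.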

1. A job that extracts data from a MySQL database and prints it locally

Using table + column mode

mysql2stream1.json

{
    "job": {
        "setting": {
            "speed": {
                "channel": 3
            },
            "errorLimit": {
                "record": 0,
                "percentage": 0.02
            }
        },
        "content": [
            {
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "username": "root",
                        "password": "sa",
                        "column": [
                            "ryxm",
                            "rysfz"
                        ],
                        "splitPk": "id",
                        "connection": [
                            {
                                "table": [
                                    "sys_czry"
                                ],
                                "jdbcUrl": [
                                    "jdbc:mysql://172.16.0.101:3306/qyjx_v3.1?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowMultiQueries=true&rewriteBatchedStatements=true"
                                ]
                            }
                        ]
                    }
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {
                        "print": true
                    }
                }
            }
        ]
    }
}
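`splitPk` is what lets `channel: 3` actually read in parallel. The reader cuts the table into ranges on that column, and each range becomes a task. The official doc recommends using the primary key and supports only integer columns.

The sketch below shows the general idea of range splitting and assumes `id` is an integer key. DataX's real split count and its handling of NULL keys differ. `split_ranges` and `where_clauses` are hypothetical helpers.

```python
def split_ranges(min_pk, max_pk, parts):
    """Split the closed integer range [min_pk, max_pk] into at most `parts` contiguous ranges."""
    if parts < 1 or max_pk < min_pk:
        raise ValueError("need parts >= 1 and min_pk <= max_pk")
    total = max_pk - min_pk + 1
    parts = min(parts, total)  # never produce empty ranges
    size, extra = divmod(total, parts)
    ranges = []
    start = min_pk
    for i in range(parts):
        # The first `extra` ranges take one extra key so sizes differ by at most 1.
        end = start + size - 1 + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end + 1
    return ranges


def where_clauses(pk, ranges):
    # One WHERE condition per task, e.g. "id >= 1 AND id <= 4".
    return [f"{pk} >= {lo} AND {pk} <= {hi}" for lo, hi in ranges]


if __name__ == "__main__":
    # Hypothetical key range; in practice it would come from SELECT MIN(id), MAX(id).
    for clause in where_clauses("id", split_ranges(1, 10, 3)):
        print(clause)
```

With the key range 1 to 10 and 3 parts, this prints three conditions covering ids 1 to 4, 5 to 7 and 8 to 10.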

python datax.py ../job/mysql2stream1.json

2. A job that runs a custom SQL query and prints the result locally

Using querySql mode

mysql2stream2.json

{
    "job": {
        "setting": {
            "speed": {
                "channel": 1
            }
        },
        "content": [
            {
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "username": "root",
                        "password": "sa",
                        "connection": [
                            {
                                "querySql": [
                                    "SELECT ryxm,rysfz,rygh from sys_czry;"
                                ],
                                "jdbcUrl": [
                                    "jdbc:mysql://172.16.0.101:3306/qyjx_v3.1?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowMultiQueries=true&rewriteBatchedStatements=true"
                                ]
                            }
                        ]
                    }
                },
                "writer": {
                    "name": "streamwriter",
                    "parameter": {
                        "print": true,
                        "encoding": "UTF-8"
                    }
                }
            }
        ]
    }
}
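The jdbcUrl query strings are long and easy to mistype, for example with a doubled `&` or an unescaped `+`. One option is to build them from a dict. `urlencode` escapes `+` as `%2B`, which is why the timezone appears as `serverTimezone=GMT%2B8`. `mysql_jdbc_url` is a hypothetical helper; the option names are the MySQL Connector/J parameters already used in this config.

```python
from urllib.parse import urlencode


def mysql_jdbc_url(host, port, database, options=None):
    """Build jdbc:mysql://host:port/database?k=v&k=v from a dict of options."""
    base = f"jdbc:mysql://{host}:{port}/{database}"
    if not options:
        return base
    # urlencode joins pairs with a single "&" and percent-encodes "+" as %2B.
    return base + "?" + urlencode(options)


if __name__ == "__main__":
    print(mysql_jdbc_url("172.16.0.101", 3306, "qyjx_v3.1", {
        "useUnicode": "true",
        "characterEncoding": "utf8",
        "useSSL": "false",
        "serverTimezone": "GMT+8",
    }))
```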

python datax.py ../job/mysql2stream2.json

2. mysqlwriter

DataX/mysqlwriter/doc/mysqlwriter.md at master · alibaba/DataX · GitHub

1. Generate data in memory and import it into MySQL

stream2mysql1.json

{
    "job": {
        "setting": {
            "speed": {
                "channel": 1
            }
        },
        "content": [
            {
                "reader": {
                    "name": "streamreader",
                    "parameter": {
                        "column": [
                            {
                                "value": "DataX",
                                "type": "string"
                            }
                        ],
                        "sliceRecordCount": 1000
                    }
                },
                "writer": {
                    "name": "mysqlwriter",
                    "parameter": {
                        "writeMode": "insert",
                        "username": "root",
                        "password": "root",
                        "column": [
                            "name"
                        ],
                        "session": [
                            "set session sql_mode='ANSI'"
                        ],
                        "preSql": [
                            "delete from test"
                        ],
                        "connection": [
                            {
                                "jdbcUrl": "jdbc:mysql://127.0.0.1:3306/datax?useUnicode=true&characterEncoding=gbk",
                                "table": [
                                    "test"
                                ]
                            }
                        ]
                    }
                }
            }
        ]
    }
}
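`writeMode` selects the SQL statement mysqlwriter uses. Per the official doc, `insert` means `INSERT INTO`, `replace` means `REPLACE INTO`, and `update` means `INSERT ... ON DUPLICATE KEY UPDATE`. `preSql` runs before writing (here it empties `test`), and `session` is executed on each connection.

The function below only prints the statement shape for each mode. It is an illustration, not DataX's own SQL builder, and `write_statement` is a hypothetical name.

```python
def write_statement(table, columns, write_mode="insert"):
    """Return the statement shape for a mysqlwriter writeMode (illustration only)."""
    cols = ", ".join(columns)
    values = ", ".join("?" for _ in columns)  # JDBC-style placeholders
    if write_mode == "insert":
        return f"INSERT INTO {table} ({cols}) VALUES ({values})"
    if write_mode == "replace":
        return f"REPLACE INTO {table} ({cols}) VALUES ({values})"
    if write_mode == "update":
        updates = ", ".join(f"{c}=VALUES({c})" for c in columns)
        return f"INSERT INTO {table} ({cols}) VALUES ({values}) ON DUPLICATE KEY UPDATE {updates}"
    raise ValueError("writeMode must be insert, replace or update")


if __name__ == "__main__":
    for mode in ("insert", "replace", "update"):
        print(mode, "->", write_statement("test", ["name"], mode))
```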

python datax.py ../job/stream2mysql1.json

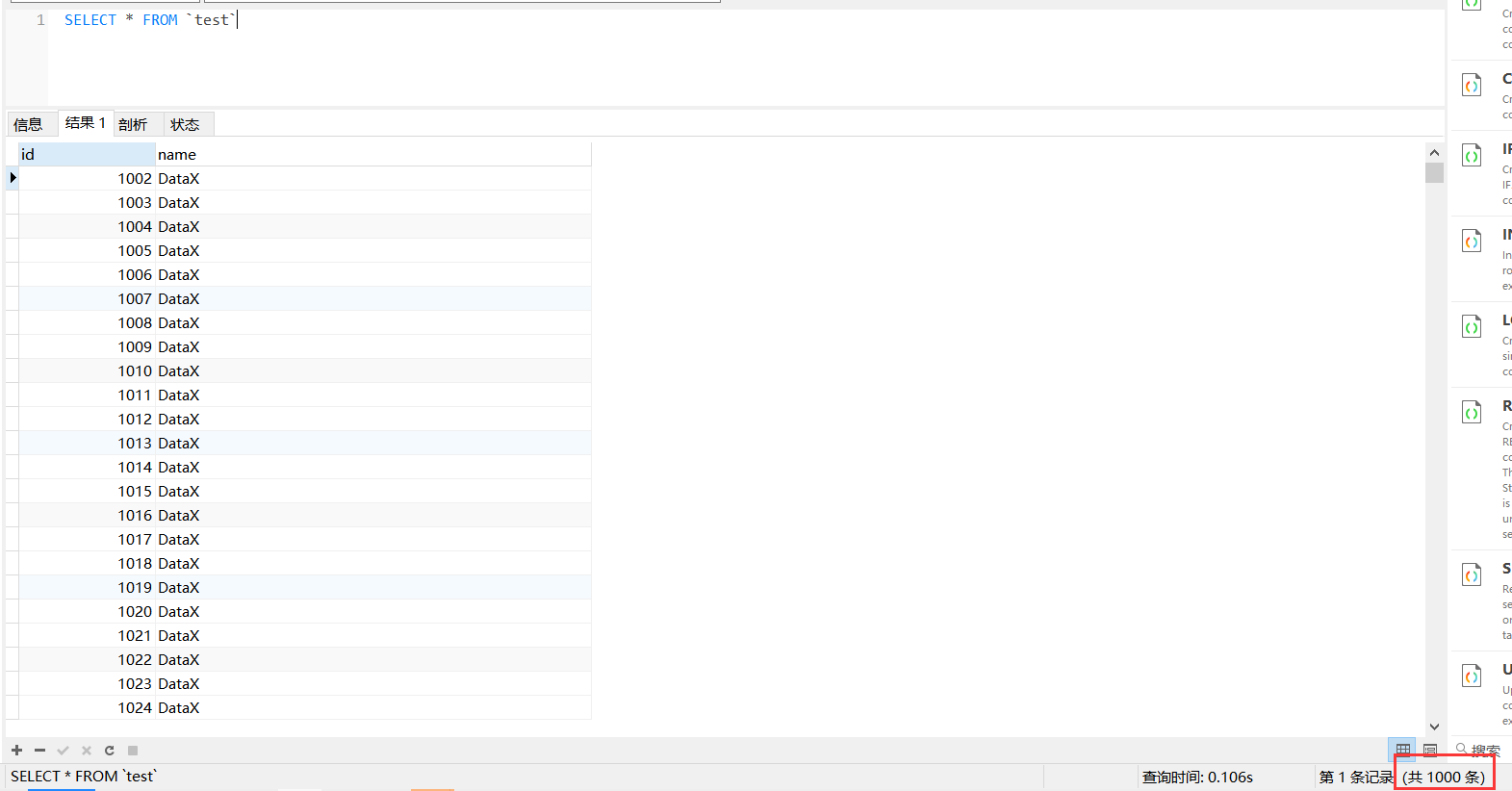

Result:

2. Read data from MySQL (server 1) and import it into MySQL (local)

This simulates a cross-server, cross-database setup.

mysql2mysql.json

{
    "job": {
        "setting": {
            "speed": {
                "channel": 1
            }
        },
        "content": [
            {
                "reader": {
                    "name": "mysqlreader",
                    "parameter": {
                        "username": "root",
                        "password": "sa",
                        "connection": [
                            {
                                "querySql": [
                                    "SELECT ryxm,rysfz from sys_czry;"
                                ],
                                "jdbcUrl": [
                                    "jdbc:mysql://172.16.0.101:3306/qyjx_v3.1?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=GMT%2B8&nullCatalogMeansCurrent=true&allowMultiQueries=true&rewriteBatchedStatements=true"
                                ]
                            }
                        ]
                    }
                },
                "writer": {
                    "name": "mysqlwriter",
                    "parameter": {
                        "writeMode": "insert",
                        "username": "root",
                        "password": "root",
                        "column": [
                            "name", "rysfz"
                        ],
                        "session": [
                            "set session sql_mode='ANSI'"
                        ],
                        "preSql": [
                            "delete from test"
                        ],
                        "connection": [
                            {
                                "jdbcUrl": "jdbc:mysql://127.0.0.1:3306/datax?useUnicode=true&characterEncoding=gbk",
                                "table": [
                                    "test"
                                ]
                            }
                        ]
                    }
                }
            }
        ]
    }
}
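Reader and writer columns are matched by position, not by name. `ryxm` from the query lands in `name`, and `rysfz` lands in `rysfz`. A quick sanity check is that both sides list the same number of columns.

`select_columns` is deliberately naive: it only handles a plain `SELECT a,b FROM ...` list. Like `map_columns`, it is a hypothetical helper.

```python
import re


def select_columns(query_sql):
    """Naively extract the column list of a simple 'SELECT a,b FROM t' query."""
    match = re.match(r"\s*select\s+(.*?)\s+from\s", query_sql, re.IGNORECASE | re.DOTALL)
    if match is None:
        raise ValueError("not a simple SELECT ... FROM query")
    return [col.strip() for col in match.group(1).split(",")]


def map_columns(reader_columns, writer_columns):
    # Records travel as ordered lists of values, so the i-th reader
    # column is written to the i-th writer column.
    if len(reader_columns) != len(writer_columns):
        raise ValueError(f"reader has {len(reader_columns)} columns, writer has {len(writer_columns)}")
    return list(zip(reader_columns, writer_columns))


if __name__ == "__main__":
    pairs = map_columns(select_columns("SELECT ryxm,rysfz from sys_czry;"), ["name", "rysfz"])
    for src, dst in pairs:
        print(src, "->", dst)
```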

python datax.py ../job/mysql2mysql.json

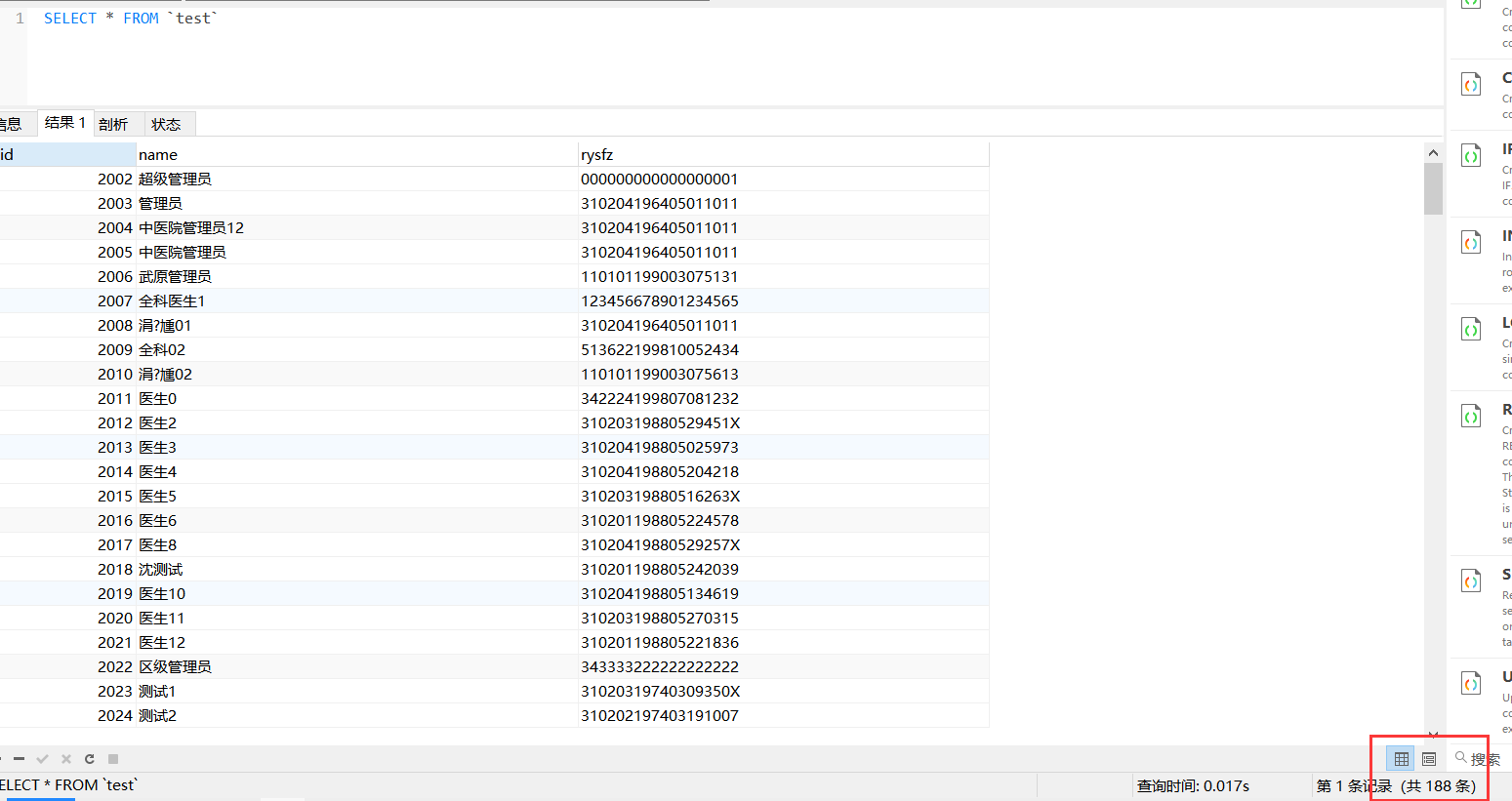

Result:

Errors:

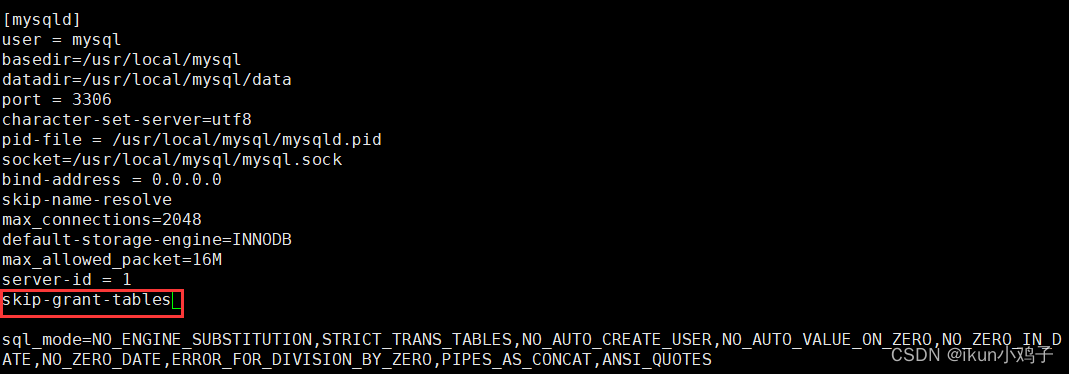

1. If the console output is garbled

Run CHCP 65001 first (this switches the cmd code page to UTF-8).
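The garbling usually comes from a code-page mismatch. cmd on Chinese-locale Windows defaults to code page 936 (GBK), and `chcp 65001` switches the console to UTF-8. The sketch reproduces the effect by decoding UTF-8 bytes as GBK. It assumes the DataX output is UTF-8 and is only a demonstration; `as_seen_in_console` is a hypothetical name.

```python
def as_seen_in_console(text, console_encoding="gbk"):
    """Show how UTF-8 output looks when a console decodes it with another code page."""
    utf8_bytes = text.encode("utf-8")  # what the program writes
    return utf8_bytes.decode(console_encoding, errors="replace")  # what the console shows


if __name__ == "__main__":
    sample = "hello,你好,世界-DataX"
    # ascii() keeps this demo printable on any console.
    print("code page 936 (GBK):", ascii(as_seen_in_console(sample)))
    print("code page 65001 (UTF-8):", ascii(as_seen_in_console(sample, "utf-8")))
```

Pure ASCII text such as `DataX` looks the same under both code pages, which is why only the Chinese parts of the log appear garbled.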
