initialize

forward()

- https://github1s.com/NVIDIA/FasterTransformer/blob/v1.0/fastertransformer/cuda/open_attention.h#L149-L217

- 使用cuBLAS库执行矩阵乘法运算,并对cublasGemmEx()进行三个单独的调用。这些操作包括将属性核与输入张量相乘,并添加偏差项,从而生成查询、键和值矩阵。

在这些矩阵相乘之后,该函数使用sqrtf()函数计算缩放因子,并将查询、键和值矩阵以及该缩放器传递给另一个名为multiHeadAttr_nofuse_kernelLauncher()的函数。此函数可能会使用额外的计算将多头注意力应用于查询、键和值矩阵,以生成输出矩阵param_.attr_out。

最后,forward()函数捕获执行过程中抛出的任何运行时错误,并重新抛出它们。

cublasGemmEx *3

check_cuda_error(cublasGemmEx(param_.cublas_handle,

CUBLAS_OP_N, CUBLAS_OP_N,

n, m, k,

&alpha,

param_.attr_kernel_Q, AType_, n,

param_.from_tensor, BType_, k,

&beta,

query_buf_, CType_, n,

computeType_,

static_cast<cublasGemmAlgo_t>(cublasAlgo_[0])));

check_cuda_error(cublasGemmEx(param_.cublas_handle,

CUBLAS_OP_N, CUBLAS_OP_N,

n, m, k,

&alpha,

param_.attr_kernel_K, AType_, n,

param_.to_tensor, BType_, k,

&beta,

key_buf_, CType_, n,

computeType_,

static_cast<cublasGemmAlgo_t>(cublasAlgo_[0])));

check_cuda_error(cublasGemmEx(param_.cublas_handle,

CUBLAS_OP_N, CUBLAS_OP_N,

n, m, k,

&alpha,

param_.attr_kernel_V, AType_, n,

param_.to_tensor, BType_, k,

&beta,

value_buf_, CType_, n,

computeType_,

static_cast<cublasGemmAlgo_t>(cublasAlgo_[0])));

cublasGemmEx

cublasGemmEx is a function from the NVIDIA cuBLAS library that performs a generalized matrix multiplication operation (GEMM) on two matrices A and B, and accumulates the result into a third matrix C.

The “Ex” suffix in the function name indicates that this is an extended version of the basic cublasGemm function, which allows for more advanced features such as data type casting, tensor operations, and tensor cores support.

The function signature for cublasGemmEx is as follows:

cublasStatus_t cublasGemmEx(cublasHandle_t handle,

cublasOperation_t transa,

cublasOperation_t transb,

int m,

int n,

int k,

const void *alpha,

const void *A,

cudaDataType_t Atype,

int lda,

const void *B,

cudaDataType_t Btype,

int ldb,

const void *beta,

void *C,

cudaDataType_t Ctype,

int ldc,

cudaDataType_t computeType,

cublasGemmAlgo_t algo);

Here’s a brief overview of the input parameters:

handle: A handle to the cuBLAS library context.transaandtransb: Transpose operation to be performed on matrices A and B respectively before the GEMM operation.m,n, andk: The dimensions of matrices A, B, and C, respectively.alpha: Scalar used to scale the product of matrices A and B.A,B, andC: Pointers to the device memory storing the matrices A, B, and C.Atype,Btype, andCtype: Data types of matrices A, B, and C, respectively.lda,ldb, andldc: The leading dimensions of matrices A, B, and C, respectively.beta: Scalar used to scale matrix C before accumulation.computeType: The data type used for intermediate computations.algo: The algorithm used for the GEMM operation.

The function returns a cublasStatus_t value indicating whether the operation was successful or if an error occurred.

multiHeadAttr_nofuse_kernelLauncher

declaration in “open_attention.h”

void multiHeadAttr_nofuse_kernelLauncher(

cudaStream_t stream,

cublasHandle_t handle,

DataType_* Q,

const DataType_* bias_Q,

DataType_* K,

const DataType_* bias_K,

DataType_* V,

const DataType_* bias_V,

const DataType_* attr_mask,

DataType_* dst,

const int batch_size,

const int seq_len,

const int head_num,

const int size_per_head,

const DataType_ scaler);

define in “open_attention.cu”

- 定义了一个模板函数和两个特化模板。程序编译时会匹配然后编译。

template void OpenMultiHeadAttention<OperationType::FP32>::multiHeadAttr_nofuse_kernelLauncher(

cudaStream_t stream,

cublasHandle_t handle,

float* Q,

const float* bias_Q,

float* K,

const float* bias_K,

float* V,

const float* bias_V,

const float* attr_mask,

float* dst,

const int batch_size,

const int seq_len,

const int head_num,

const int size_per_head,

const float scaler);

template void OpenMultiHeadAttention<OperationType::HALF>::multiHeadAttr_nofuse_kernelLauncher(

cudaStream_t stream,

cublasHandle_t handle,

__half* Q,

const __half* bias_Q,

__half* K,

const __half* bias_K,

__half* V,

const __half* bias_V,

const __half* attr_mask,

__half* dst,

const int batch_size,

const int seq_len,

const int head_num,

const int size_per_head,

const __half scaler);

}//namespace cuda

template<OperationType OpType_>

void OpenMultiHeadAttention<OpType_>::multiHeadAttr_nofuse_kernelLauncher(

cudaStream_t stream,

cublasHandle_t cublas_handle,

DataType_* Q,

const DataType_* bias_Q,

DataType_* K,

const DataType_* bias_K,

DataType_* V,

const DataType_* bias_V,

const DataType_* attr_mask,

DataType_* dst,

const int batch_size,

const int seq_len,

const int head_num,

const int size_per_head,

const DataType_ scaler)

{

int m = batch_size * seq_len;

int k = head_num * size_per_head;

dim3 grid;

dim3 block;

if(OpType_ == OperationType::FP32)

{

const int word_per_block = 1;

assert(k <= 1024);

assert(m / word_per_block * 3 <= 65536);

dim3 grid(m / word_per_block * 3);

dim3 block(k);

add_QKV_bias<DataType_><<<grid, block, 0, stream>>>(Q, bias_Q, K, bias_K, V, bias_V, q_buf_, k_buf_, v_buf_,

batch_size, seq_len, head_num, size_per_head, word_per_block);

}

else

{

const int word_per_block = 1;

grid.x = batch_size * seq_len / word_per_block;

block.x = head_num * size_per_head * word_per_block / 2;

add_QKV_bias<DataType_><<<grid, block, 0, stream>>>(Q, bias_Q, K, bias_K, V, bias_V, q_buf_, k_buf_,

v_buf_, batch_size, seq_len, head_num, size_per_head / 2, word_per_block);

}

DataType_ alpha = (DataType_)1.0f, beta = (DataType_)0.0f;

check_cuda_error(cublasGemmStridedBatchedEx(cublas_handle,

CUBLAS_OP_T, CUBLAS_OP_N,

seq_len, seq_len, size_per_head,

&alpha,

k_buf_, AType_, size_per_head, seq_len * size_per_head,

q_buf_, BType_, size_per_head, seq_len * size_per_head,

&beta,

qk_buf_, CType_, seq_len, seq_len * seq_len,

batch_size * head_num,

computeType_,

static_cast<cublasGemmAlgo_t>(cublasAlgo_[1])));

if(seq_len <= 32)

block.x = 32;

else if(seq_len > 32 && seq_len <= 64)

block.x = 64;

else if(seq_len > 64 && seq_len <= 128)

block.x = 128;

else if(seq_len > 128 && seq_len <= 256)

block.x = 256;

else if(seq_len > 256 && seq_len <= 512)

block.x = 512;

else

block.x = 1024;

if(batch_size * head_num <= 120)

{

grid.x = batch_size * head_num * seq_len;

softmax_kernel_v2<DataType_><<<grid, block, 0, stream>>>(qk_buf_, attr_mask, batch_size, head_num, seq_len, scaler);

}

else

{

grid.x = batch_size * head_num;

softmax_kernel<DataType_><<<grid, block, 0, stream>>>(qk_buf_, attr_mask, batch_size, head_num, seq_len, scaler);

}

check_cuda_error(cublasGemmStridedBatchedEx(cublas_handle,

CUBLAS_OP_N, CUBLAS_OP_N,

size_per_head, seq_len, seq_len,

&alpha,

v_buf_, AType_, size_per_head, seq_len * size_per_head,

qk_buf_, BType_, seq_len, seq_len * seq_len,

&beta,

transpose_dst_, CType_, size_per_head, seq_len * size_per_head,

batch_size * head_num,

computeType_,

static_cast<cublasGemmAlgo_t>(cublasAlgo_[2])));

/* for half2 only */

if(OpType_ == OperationType::HALF)

{

const int seq_per_block = 4;

grid.x = batch_size * head_num * seq_len / seq_per_block;

block.x = seq_per_block * size_per_head / 2;

assert(grid.x * seq_per_block == batch_size * head_num * seq_len);

transpose<DataType_><<<grid, block, 0, stream>>>(transpose_dst_, dst,

batch_size, seq_len, head_num, size_per_head / 2);

}

else

{

const int seq_per_block = 1;

grid.x = batch_size * head_num * seq_len / seq_per_block;

block.x = seq_per_block * size_per_head;

transpose<DataType_><<<grid, block, 0, stream>>>(transpose_dst_, dst,

batch_size, seq_len, head_num, size_per_head);

}

}

cublasGemmTridedBatchedEx

cublasGemmTridedBatchedEx是cuBLAS库中的一个函数,用于执行跨步分批矩阵乘法。它获取多组输入矩阵,并对每组并行执行相同的矩阵乘法运算,将结果存储回内存。函数名称中的“Ex”表示这是基本“cublasGemmTriedBatched”函数的扩展版本,其中包括用于指定数据类型、比例因子和其他设置的附加选项。

在深度学习的背景下,“cublasGemmTridedBatchedEx”通常用于在Transformer神经网络中执行多头自注意操作。此操作涉及将查询、键和值矩阵集相乘以产生注意力分数,注意力分数用于对值进行加权并计算最终输出。有效地执行这些矩阵乘法对于在大型Transformer模型中实现高性能至关重要。

Attention & Transformer

- FlashAttention:一种具有 IO 感知,且兼具快速、内存高效的新型注意力算法(但非传统attention的近似方法,是数学等价的)

https://www.bilibili.com/video/BV1SW4y1X7kh/?

https://zhuanlan.zhihu.com/p/618533434

CG

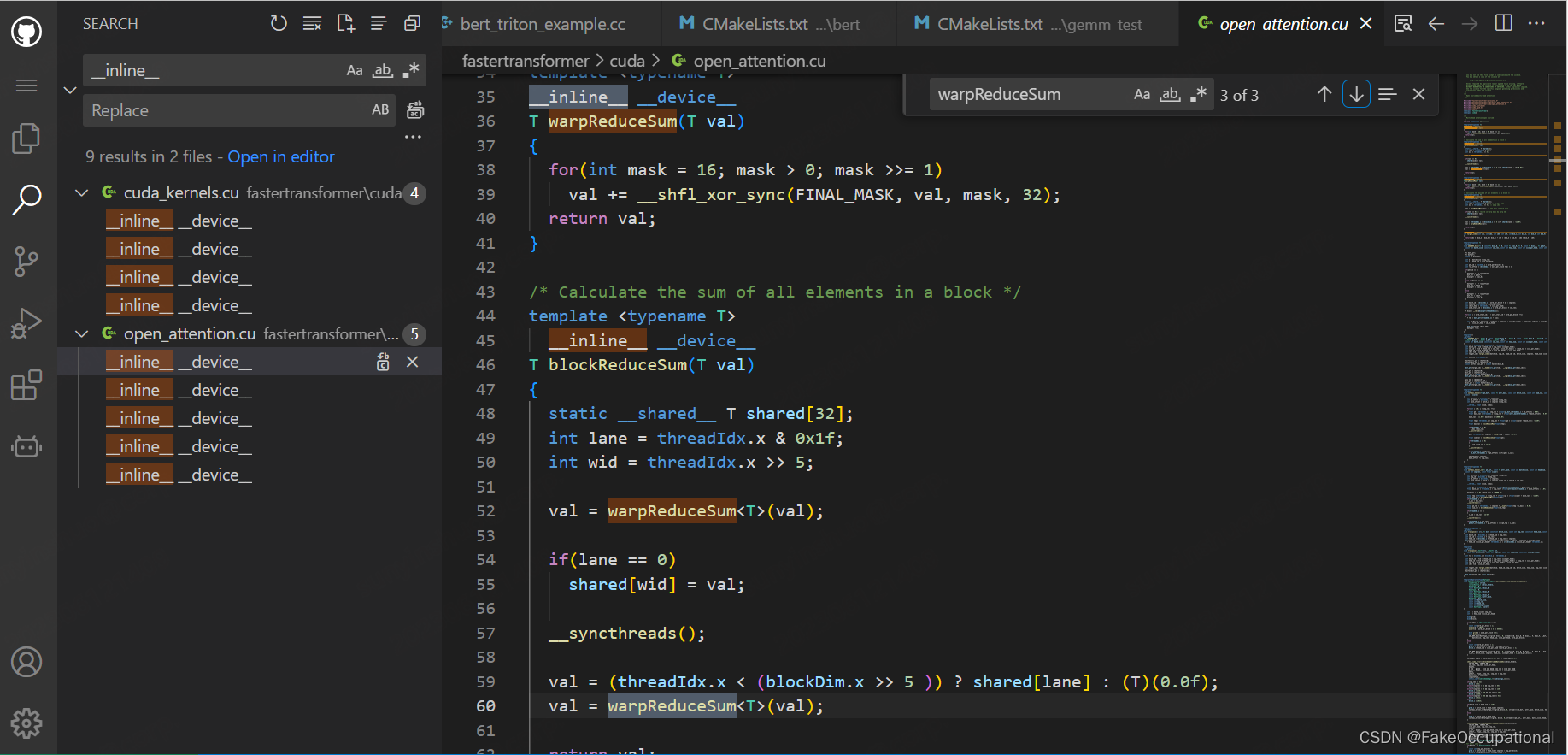

cuda inline

- 您可能会注意到的第一件事是__inline__声明。这可能是不必要的。它告诉编译器 将此函数的整个代码放在该点 调用它的位置,而不是导致跳转发生。 这使得编译后的代码运行得更快。另一方面 NVCC知道使许多(也许是大多数)设备功能内联 已经没有问了,所以我们的要求可能是多余的。 我把它放进去,这样我就可以谈论它了。如果函数 更长,也许默认情况下它不会内联,但是 如果您编写该函数主要是为了可读性,则 使其内联可能对您很重要。在这种情况下, 您可以获得更具可读性的代码,而不会牺牲速度来跳转。

layernorm

-

https://github1s.com/NVIDIA/FasterTransformer/blob/v1.0/fastertransformer/bert_encoder_transformer.h#L208-L281 中调用了add_bias_input_layernorm_kernelLauncher方法。

-

一个帮助理解multiHeadAttr_nofuse_kernelLauncher的例子

#include <stdio.h>

#include <iostream>

#include <typeinfo>

// using OperationType = int;

enum class OperationType{FP32, HALF};

template<OperationType op>class First{};

template< OperationType N,template<OperationType>class XXX >

class Second;

template< OperationType N,template<OperationType>class XXX >

class Second{

public:

XXX<N> b;

Second(){

std::cout<<"NNN";

}

};

// template<template<OperationType> class MultiHeadAttention_>

// class BertEncoderTransformerTraits<OperationType::FP32, MultiHeadAttention_>

template< template<OperationType>class XXX >

class Second<OperationType::FP32,XXX>{

public:

XXX<OperationType::FP32> b;

Second(){

std::cout<<"SSSSS";

}

};

// template< template<OperationType>class XXX >

// class Second<OperationType::HALF,XXX>{

// public:

// XXX<OperationType::HALF> b;

// Second(){

// std::cout<<"HALF";

// }

// };

int main()

{

printf("Hello World\n");

// Second<OperationType::FP32,First> *second = new Second<OperationType::FP32,First>();

Second<OperationType::HALF,First> *second = new Second<OperationType::HALF,First>();

return 0;

}