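Contents

I. Introduction

II. NFS service and nfs-provisioner configuration

1. Install the NFS client on the K8S servers

2. Install and configure the NFS server

3. Create PVs dynamically with nfs-provisioner (file modified)

III. Hadoop configuration files

1. # cat hadoop.yaml

2. # cat hadoop-datanode.yaml

3. # cat yarn-node.yaml

4. Apply the files and check

5. Connectivity check

IV. Errors and fixes

1. NFS error

2. NFS error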

I. Introduction

The base environment is a K8S cluster set up with kubeasz (https://github.com/easzlab/kubeasz).

K8S setup example: https://blog.csdn.net/zhangxueleishamo/article/details/108670578

NFS is used as the storage backend.

II. NFS service and nfs-provisioner configuration

1. Install the NFS client on the K8S servers

yum -y install nfs-utils

2. Install and configure the NFS server

yum -y install nfs-utils rpcbind nfs-server

# cat /etc/exports

/data/hadoop *(rw,no_root_squash,no_all_squash,sync)

### Directory creation and permission setup are not covered here

systemctl start rpcbind
systemctl enable rpcbind
systemctl start nfs
systemctl enable nfs

3. Create PVs dynamically with nfs-provisioner (file modified)

# cat nfs-provisioner.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: dev
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: dev
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: dev
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          #image: quay.io/external_storage/nfs-client-provisioner:latest
          image: jmgao1983/nfs-client-provisioner:latest
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              # Provisioner name; the StorageClass below refers to it
              value: nfs-storage
            - name: NFS_SERVER
              value: 10.2.1.190
            - name: NFS_PATH
              value: /data/hadoop
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.2.1.190
            path: /data/hadoop
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: nfs-storage
volumeBindingMode: Immediate
reclaimPolicy: Delete

### Apply the file, then check the ServiceAccount and StorageClass

# kubectl apply -f nfs-provisioner.yaml
# kubectl get sa,sc -n dev
NAME                                    SECRETS   AGE
serviceaccount/nfs-client-provisioner   1         47m

NAME                                                PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
storageclass.storage.k8s.io/nfs-storage (default)   nfs-storage   Delete          Immediate           false                  45m
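Before deploying Hadoop it can be worth confirming that the StorageClass really provisions volumes. The sketch below is not part of the original setup: the function name render_test_pvc and the claim name test-claim are made up for illustration, while the namespace dev and the StorageClass nfs-storage come from the manifest above.

```shell
#!/bin/sh
# Print a throwaway PVC manifest that requests 1Mi from a StorageClass.
# render_test_pvc and the claim name "test-claim" are hypothetical names.
render_test_pvc() {
  namespace="${1:-dev}"
  storage_class="${2:-nfs-storage}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  namespace: ${namespace}
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ${storage_class}
  resources:
    requests:
      storage: 1Mi
EOF
}

# Usage (needs a working cluster):
#   render_test_pvc dev nfs-storage | kubectl apply -f -
#   kubectl get pvc test-claim -n dev      # STATUS should become Bound
#   kubectl delete pvc test-claim -n dev   # clean up afterwards
```

If the claim stays Pending, the provisioner pod log (kubectl logs deployment/nfs-client-provisioner -n dev) is the first place to look.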

III. Hadoop configuration files

1. # cat hadoop.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-hadoop-conf
  namespace: dev
data:
  HDFS_MASTER_SERVICE: hadoop-hdfs-master
  HDOOP_YARN_MASTER: hadoop-yarn-master
---
apiVersion: v1
kind: Service
metadata:
  name: hadoop-hdfs-master
  namespace: dev
spec:
  type: NodePort
  selector:
    name: hdfs-master
  ports:
    - name: rpc
      port: 9000
      targetPort: 9000
    - name: http
      port: 50070
      targetPort: 50070
      nodePort: 32007
---
apiVersion: v1
kind: Service
metadata:
  name: hadoop-yarn-master
  namespace: dev
spec:
  type: NodePort
  selector:
    name: yarn-master
  ports:
    - name: "8030"
      port: 8030
    - name: "8031"
      port: 8031
    - name: "8032"
      port: 8032
    - name: http
      port: 8088
      targetPort: 8088
      nodePort: 32088
---
apiVersion: v1
kind: Service
metadata:
  name: yarn-node
  namespace: dev
spec:
  clusterIP: None
  selector:
    name: yarn-node
  ports:
    - port: 8040

2. # cat hadoop-datanode.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hdfs-master
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      name: hdfs-master
  template:
    metadata:
      labels:
        name: hdfs-master
    spec:
      containers:
        - name: hdfs-master
          image: kubeguide/hadoop:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9000
            - containerPort: 50070
          env:
            - name: HADOOP_NODE_TYPE
              value: namenode
            - name: HDFS_MASTER_SERVICE
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDFS_MASTER_SERVICE
            - name: HDOOP_YARN_MASTER
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDOOP_YARN_MASTER
      restartPolicy: Always
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: hadoop-datanode
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      name: hadoop-datanode
  serviceName: hadoop-datanode
  template:
    metadata:
      labels:
        name: hadoop-datanode
    spec:
      containers:
        - name: hadoop-datanode
          image: kubeguide/hadoop:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9000
            - containerPort: 50070
          volumeMounts:
            - name: data
              mountPath: /root/hdfs/
              subPath: hdfs
            - name: data
              mountPath: /usr/local/hadoop/logs/
              subPath: logs
          env:
            - name: HADOOP_NODE_TYPE
              value: datanode
            - name: HDFS_MASTER_SERVICE
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDFS_MASTER_SERVICE
            - name: HDOOP_YARN_MASTER
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDOOP_YARN_MASTER
      restartPolicy: Always
  volumeClaimTemplates:
    - metadata:
        name: data
        namespace: dev
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 2Gi
        storageClassName: "nfs-storage"

3. # cat yarn-node.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: yarn-master
  namespace: dev
spec:
  replicas: 1
  selector:
    matchLabels:
      name: yarn-master
  template:
    metadata:
      labels:
        name: yarn-master
    spec:
      containers:
        - name: yarn-master
          image: kubeguide/hadoop:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9000
            - containerPort: 50070
          env:
            - name: HADOOP_NODE_TYPE
              value: resourceman
            - name: HDFS_MASTER_SERVICE
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDFS_MASTER_SERVICE
            - name: HDOOP_YARN_MASTER
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDOOP_YARN_MASTER
      restartPolicy: Always
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: yarn-node
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      name: yarn-node
  serviceName: yarn-node
  template:
    metadata:
      labels:
        name: yarn-node
    spec:
      containers:
        - name: yarn-node
          image: kubeguide/hadoop:latest
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8040
            - containerPort: 8041
            - containerPort: 8042
          volumeMounts:
            - name: yarn-data
              mountPath: /root/hdfs/
              subPath: hdfs
            - name: yarn-data
              mountPath: /usr/local/hadoop/logs/
              subPath: logs
          env:
            - name: HADOOP_NODE_TYPE
              value: yarnnode
            - name: HDFS_MASTER_SERVICE
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDFS_MASTER_SERVICE
            - name: HDOOP_YARN_MASTER
              valueFrom:
                configMapKeyRef:
                  name: kube-hadoop-conf
                  key: HDOOP_YARN_MASTER
      restartPolicy: Always
  volumeClaimTemplates:
    - metadata:
        name: yarn-data
        namespace: dev
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 2Gi
        storageClassName: "nfs-storage"

4. Apply the files and check

kubectl apply -f hadoop.yaml
kubectl apply -f hadoop-datanode.yaml
kubectl apply -f yarn-node.yaml

# kubectl get pv,pvc -n dev
NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                        STORAGECLASS   REASON   AGE
persistentvolume/pvc-2bf83ccf-85eb-43d7-8d49-10a2617c1bde   2Gi        RWX            Delete           Bound    dev/data-hadoop-datanode-0   nfs-storage             34m
persistentvolume/pvc-5ecff2b2-ea9d-4d6f-851b-0ab2cecbbe54   2Gi        RWX            Delete           Bound    dev/yarn-data-yarn-node-1    nfs-storage             32m
persistentvolume/pvc-91132f6d-a3e1-4938-b8d7-674d6b0656a8   2Gi        RWX            Delete           Bound    dev/data-hadoop-datanode-2   nfs-storage             34m
persistentvolume/pvc-a44adf12-2505-4133-ab57-99a61c4d4476   2Gi        RWX            Delete           Bound    dev/data-hadoop-datanode-1   nfs-storage             34m
persistentvolume/pvc-c4bf1e26-936f-46f6-8529-98d2699a916e   2Gi        RWX            Delete           Bound    dev/yarn-data-yarn-node-2    nfs-storage             32m
persistentvolume/pvc-e6d360be-2f72-4c47-a99b-fee79ca5e03b   2Gi        RWX            Delete           Bound    dev/yarn-data-yarn-node-0    nfs-storage             32m

NAME                                           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/data-hadoop-datanode-0   Bound    pvc-2bf83ccf-85eb-43d7-8d49-10a2617c1bde   2Gi        RWX            nfs-storage    39m
persistentvolumeclaim/data-hadoop-datanode-1   Bound    pvc-a44adf12-2505-4133-ab57-99a61c4d4476   2Gi        RWX            nfs-storage    34m
persistentvolumeclaim/data-hadoop-datanode-2   Bound    pvc-91132f6d-a3e1-4938-b8d7-674d6b0656a8   2Gi        RWX            nfs-storage    34m
persistentvolumeclaim/yarn-data-yarn-node-0    Bound    pvc-e6d360be-2f72-4c47-a99b-fee79ca5e03b   2Gi        RWX            nfs-storage    32m
persistentvolumeclaim/yarn-data-yarn-node-1    Bound    pvc-5ecff2b2-ea9d-4d6f-851b-0ab2cecbbe54   2Gi        RWX            nfs-storage    32m
persistentvolumeclaim/yarn-data-yarn-node-2    Bound    pvc-c4bf1e26-936f-46f6-8529-98d2699a916e   2Gi        RWX            nfs-storage    32m

# kubectl get all -n dev -o wide
NAME                                         READY   STATUS    RESTARTS   AGE   IP            NODE         NOMINATED NODE   READINESS GATES
pod/hadoop-datanode-0                        1/1     Running   0          40m   172.20.4.65   10.2.1.194   <none>           <none>
pod/hadoop-datanode-1                        1/1     Running   0          35m   172.20.4.66   10.2.1.194   <none>           <none>
pod/hadoop-datanode-2                        1/1     Running   0          35m   172.20.4.67   10.2.1.194   <none>           <none>
pod/hdfs-master-5946bb8ff4-lt5mp             1/1     Running   0          40m   172.20.4.64   10.2.1.194   <none>           <none>
pod/nfs-client-provisioner-8ccc8b867-ndssr   1/1     Running   0          52m   172.20.4.63   10.2.1.194   <none>           <none>
pod/yarn-master-559c766d4c-jzz4s             1/1     Running   0          33m   172.20.4.68   10.2.1.194   <none>           <none>
pod/yarn-node-0                              1/1     Running   0          33m   172.20.4.69   10.2.1.194   <none>           <none>
pod/yarn-node-1                              1/1     Running   0          33m   172.20.4.70   10.2.1.194   <none>           <none>
pod/yarn-node-2                              1/1     Running   0          33m   172.20.4.71   10.2.1.194   <none>           <none>

NAME                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                                                       AGE   SELECTOR
service/hadoop-hdfs-master   NodePort    10.68.193.79    <none>        9000:26007/TCP,50070:32007/TCP                                40m   name=hdfs-master
service/hadoop-yarn-master   NodePort    10.68.243.133   <none>        8030:34657/TCP,8031:35352/TCP,8032:33633/TCP,8088:32088/TCP   40m   name=yarn-master
service/yarn-node            ClusterIP   None            <none>        8040/TCP                                                      40m   name=yarn-node

NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS               IMAGES                                    SELECTOR
deployment.apps/hdfs-master              1/1     1            1           40m   hdfs-master              kubeguide/hadoop:latest                   name=hdfs-master
deployment.apps/nfs-client-provisioner   1/1     1            1           52m   nfs-client-provisioner   jmgao1983/nfs-client-provisioner:latest   app=nfs-client-provisioner
deployment.apps/yarn-master              1/1     1            1           33m   yarn-master              kubeguide/hadoop:latest                   name=yarn-master

NAME                                               DESIRED   CURRENT   READY   AGE   CONTAINERS               IMAGES                                    SELECTOR
replicaset.apps/hdfs-master-5946bb8ff4             1         1         1       40m   hdfs-master              kubeguide/hadoop:latest                   name=hdfs-master,pod-template-hash=5946bb8ff4
replicaset.apps/nfs-client-provisioner-8ccc8b867   1         1         1       52m   nfs-client-provisioner   jmgao1983/nfs-client-provisioner:latest   app=nfs-client-provisioner,pod-template-hash=8ccc8b867
replicaset.apps/yarn-master-559c766d4c             1         1         1       33m   yarn-master              kubeguide/hadoop:latest                   name=yarn-master,pod-template-hash=559c766d4c

NAME                               READY   AGE   CONTAINERS        IMAGES
statefulset.apps/hadoop-datanode   3/3     40m   hadoop-datanode   kubeguide/hadoop:latest
statefulset.apps/yarn-node         3/3     33m   yarn-node         kubeguide/hadoop:latest

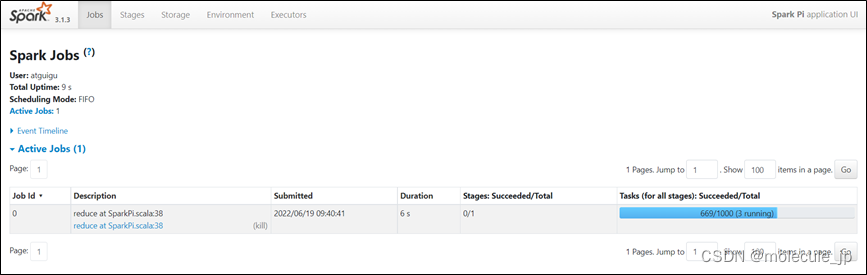

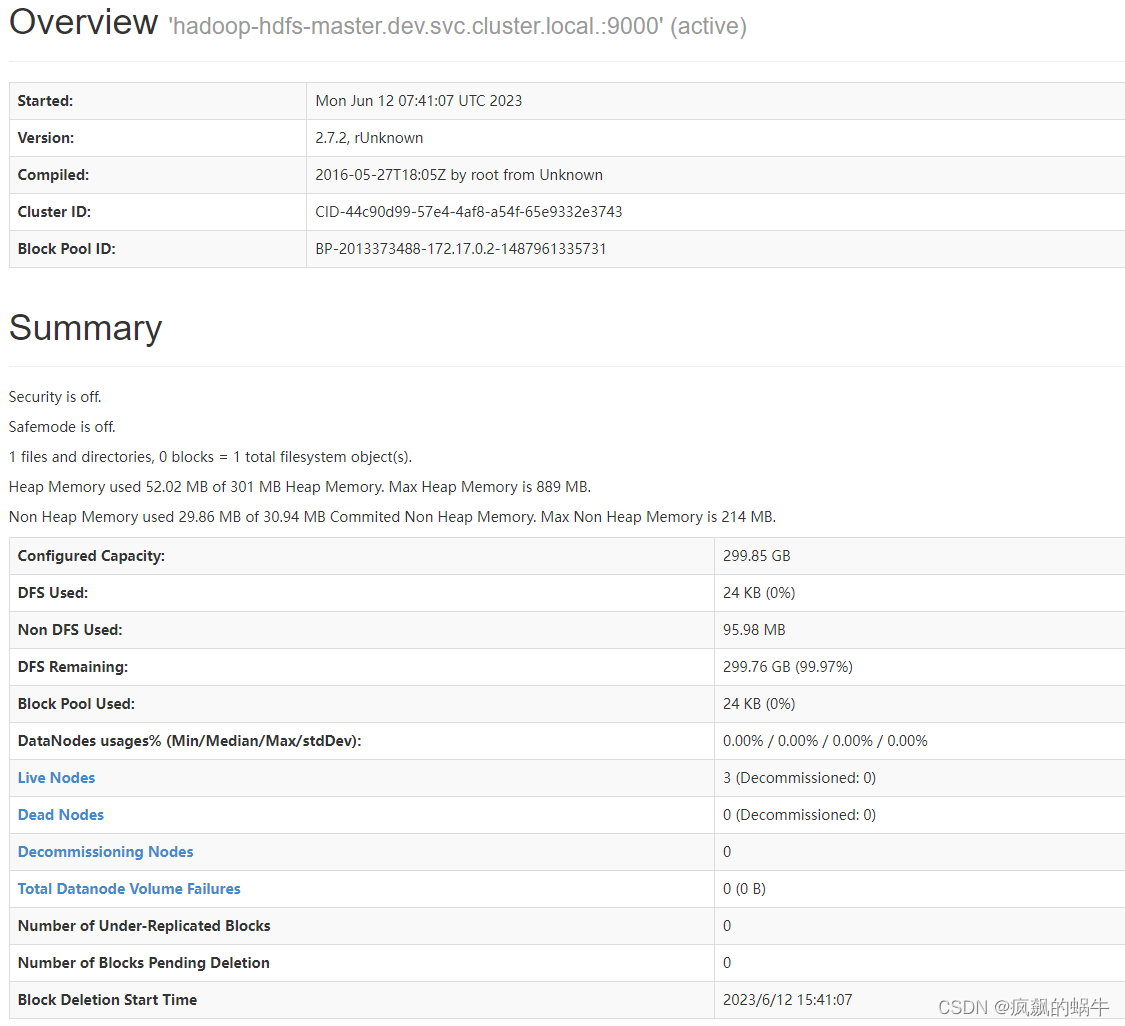

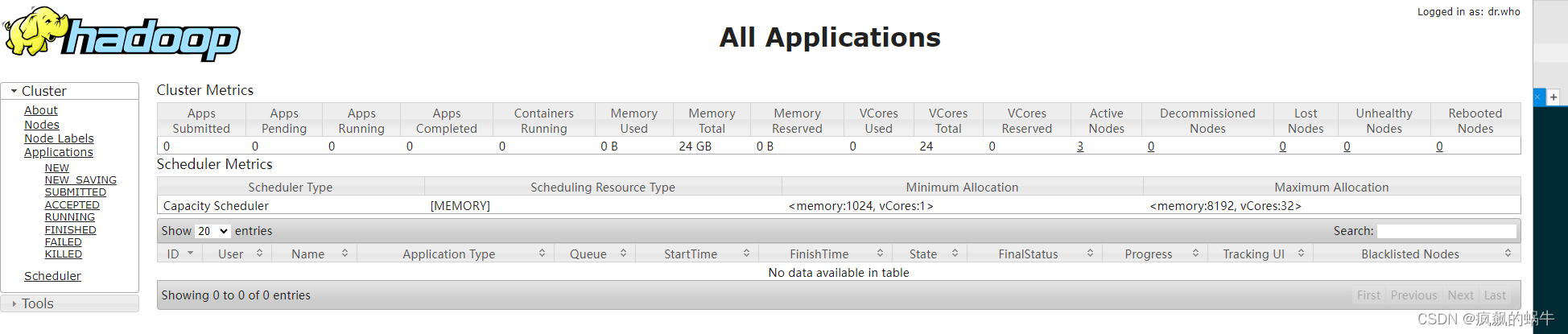

Open http://ip:32007 (HDFS) and http://ip:32088 (YARN) in a browser, where ip is the address of any cluster node, to see the Hadoop management UIs.

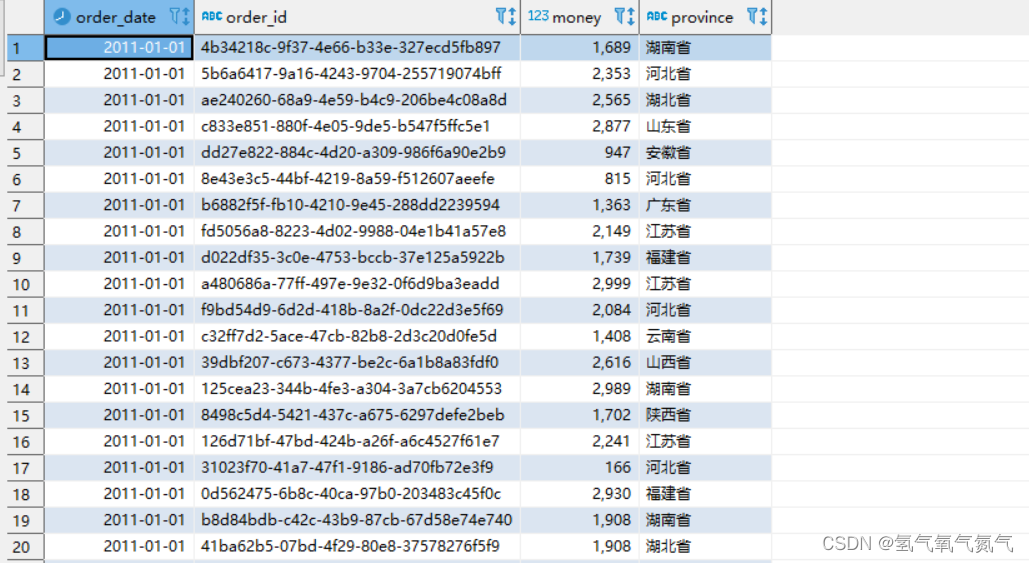

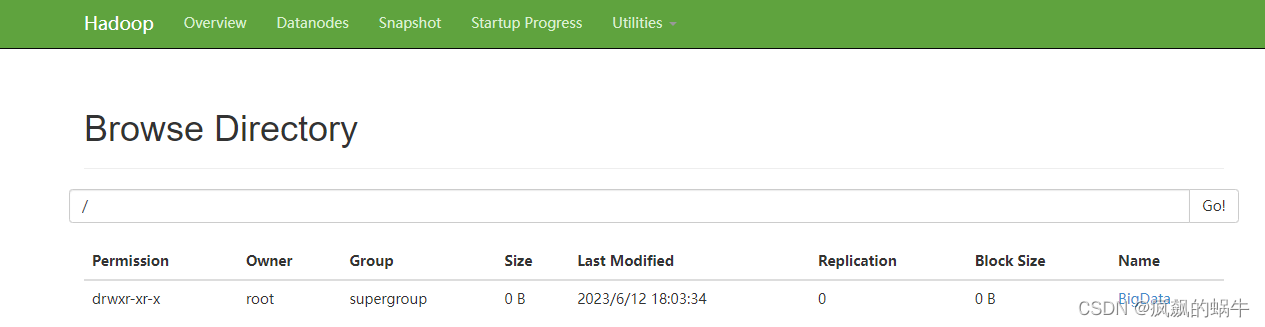
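The same check can be scripted instead of done in a browser. This is a minimal sketch under assumptions: check_ui is a hypothetical helper name, NODE_IP is a placeholder the reader has to set, and only the two NodePorts (32007, 32088) come from the manifests above.

```shell
#!/bin/sh
# Return 0 when the URL answers with an HTTP status in the 2xx/3xx range.
# check_ui is a hypothetical helper name.
check_ui() {
  url="$1"
  # -s: silent, -L: follow redirects, -o /dev/null: drop the body,
  # -w '%{http_code}': print only the final status code ("000" on failure)
  code="$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$url")"
  [ "$code" -ge 200 ] 2>/dev/null && [ "$code" -lt 400 ]
}

# Usage (NODE_IP is the address of any cluster node):
#   check_ui "http://${NODE_IP}:32007" && echo "HDFS UI reachable"
#   check_ui "http://${NODE_IP}:32088" && echo "YARN UI reachable"
```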

5. Connectivity check

Create a directory on HDFS:

# kubectl exec -it hdfs-master-5946bb8ff4-lt5mp -n dev -- /bin/bash
# hdfs dfs -mkdir /BigData

Then check in the Hadoop web UI that the new directory is listed.
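The pod name above changes every time the Deployment is recreated, so a label selector is more convenient for repeated checks. A rough sketch, assuming the hdfs command is on the PATH for non-interactive exec in the kubeguide/hadoop image (if it is not, wrap the command in bash -lc); exec_in_pod is a hypothetical helper name, and the label name=hdfs-master comes from hadoop-datanode.yaml.

```shell
#!/bin/sh
# Run a command inside the first pod that matches a label selector.
# exec_in_pod is a hypothetical helper name.
exec_in_pod() {
  namespace="$1"
  selector="$2"
  shift 2
  # Resolve the current pod name instead of hard-coding it
  pod="$(kubectl get pods -n "$namespace" -l "$selector" \
        -o jsonpath='{.items[0].metadata.name}')" || return 1
  [ -n "$pod" ] || { echo "no pod matches $selector" >&2; return 1; }
  kubectl exec -n "$namespace" "$pod" -- "$@"
}

# Usage:
#   exec_in_pod dev name=hdfs-master hdfs dfs -mkdir -p /BigData
#   exec_in_pod dev name=hdfs-master hdfs dfs -ls /
```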

四、报错&解决

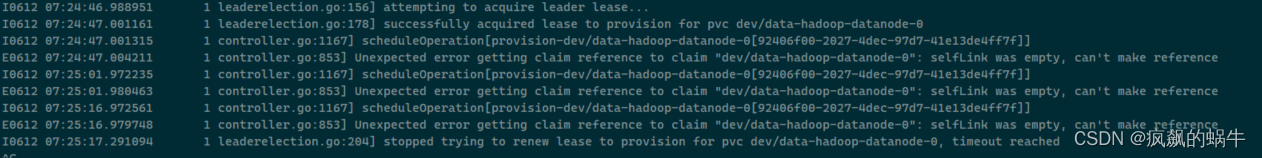

1、nfs报错

Unexpected error getting claim reference to claim "dev/data-hadoop-datanode-0": selfLink was empty, can't make reference

问题原因: 没有权限创建

解决:

1、chmod 777 /data/hadoop #配置nfs共享文件权限,方便测试就777了

2、修改nfs-provisioner.yaml的rules

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["list", "watch", "create", "update", "patch"]

- apiGroups: [""]

resources: ["services"]

verbs: ["get"]

- apiGroups: ["extensions"]

resources: ["podsecuritypolicies"]

resourceNames: ["nfs-provisioner"]

verbs: ["use"]

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

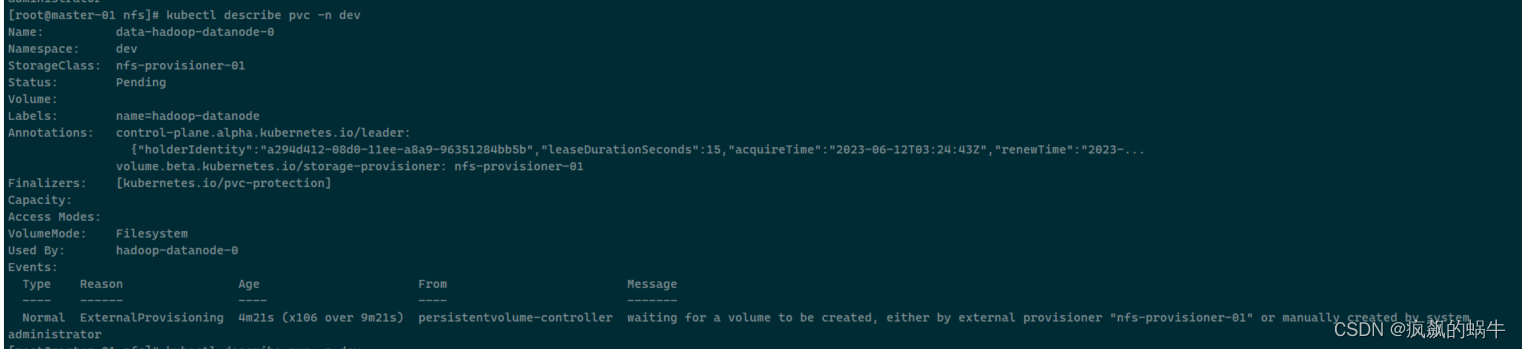

2. NFS error

persistentvolume-controller waiting for a volume to be created, either by external provisioner "nfs-provisioner" or manually created by system administrator

Cause: selfLink. Kubernetes 1.20 disabled selfLink by default:

https://github.com/kubernetes/kubernetes/pull/94397

Fix:

1. In the three Hadoop files, set the namespace to the same one the NFS provisioner uses.

2. Edit the kube-apiserver.yaml configuration file and add the following:

apiVersion: v1
-----
- --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
- --feature-gates=RemoveSelfLink=false # add this line

This cluster was set up with kubeasz, where that file does not exist, so the kube-apiserver service unit file has to be edited directly on every master.

# cat /etc/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
ExecStart=/opt/kube/bin/kube-apiserver \
  --advertise-address=10.2.1.190 \
  --allow-privileged=true \
  --anonymous-auth=false \
  --api-audiences=api,istio-ca \
  --authorization-mode=Node,RBAC \
  --token-auth-file=/etc/kubernetes/ssl/basic-auth.csv \
  --bind-address=10.2.1.190 \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --endpoint-reconciler-type=lease \
  --etcd-cafile=/etc/kubernetes/ssl/ca.pem \
  --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \
  --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \
  --etcd-servers=https://10.2.1.190:2379,https://10.2.1.191:2379,https://10.2.1.192:2379 \
  --kubelet-certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/admin.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/admin-key.pem \
  --service-account-issuer=kubernetes.default.svc \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca.pem \
  --service-cluster-ip-range=10.68.0.0/16 \
  --service-node-port-range=20000-40000 \
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --feature-gates=RemoveSelfLink=false \
  --requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --requestheader-allowed-names= \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --proxy-client-cert-file=/etc/kubernetes/ssl/aggregator-proxy.pem \
  --proxy-client-key-file=/etc/kubernetes/ssl/aggregator-proxy-key.pem \
  --enable-aggregator-routing=true \
  --v=2
Restart=always
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

The --feature-gates=RemoveSelfLink=false line is the one that was added. Keep the backslash as the last character on that line; a trailing comment after it would break the line continuation.

Restart the service: systemctl daemon-reload && systemctl restart kube-apiserver

Ideally, reboot the whole server afterwards.
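After the restart it is worth checking that the running kube-apiserver process really carries the flag. A small sketch; has_selflink_gate is a hypothetical helper name and the ps invocation assumes a Linux procps ps. As far as I know the RemoveSelfLink gate can only be switched off on Kubernetes 1.20 to 1.23, so on newer clusters this workaround does not apply and a provisioner image that does not depend on selfLink is needed instead.

```shell
#!/bin/sh
# Succeed when a command line contains the RemoveSelfLink=false feature gate.
# has_selflink_gate is a hypothetical helper name.
has_selflink_gate() {
  case "$1" in
    *RemoveSelfLink=false*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a master node:
#   cmdline="$(ps -o args= -C kube-apiserver)"
#   if has_selflink_gate "$cmdline"; then echo "gate active"; else echo "gate missing"; fi
```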