[Check-in] Apple Leaf Disease Classification and Building Change Detection Data Mining Competition

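Table of Contents

- [Check-in] Apple Leaf Disease Classification and Building Change Detection Data Mining Competition

- Task 1: Data Visualization for Both Competition Problems

- Task 2: Apple Disease Data Loading and Data Augmentation

- Task 3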

Task 1: Data Visualization for Both Competition Problems

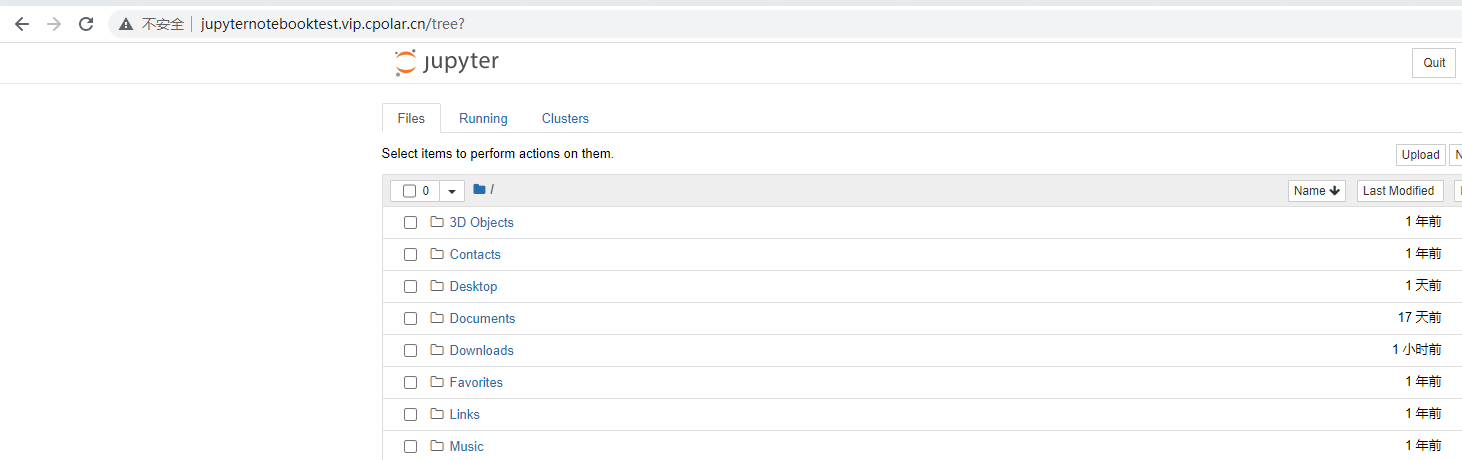

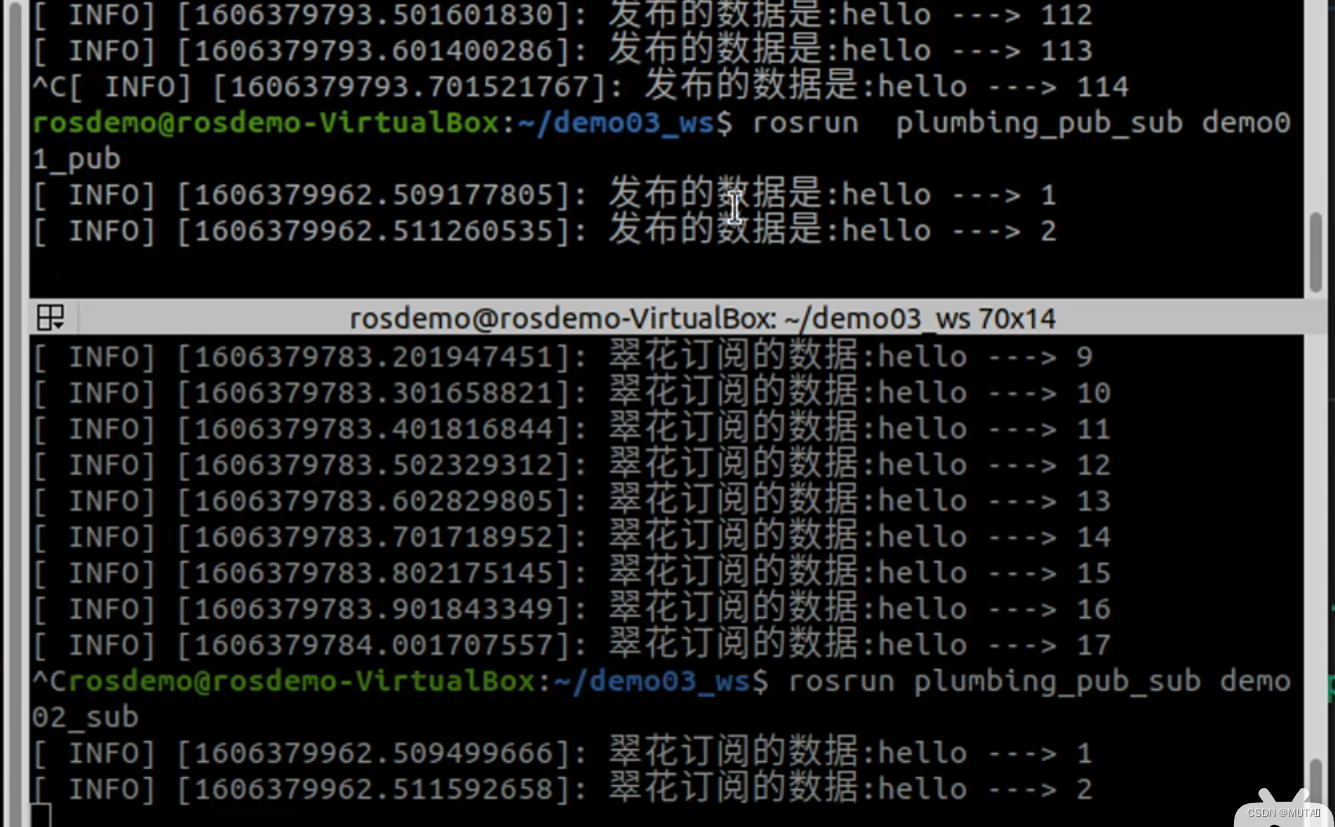

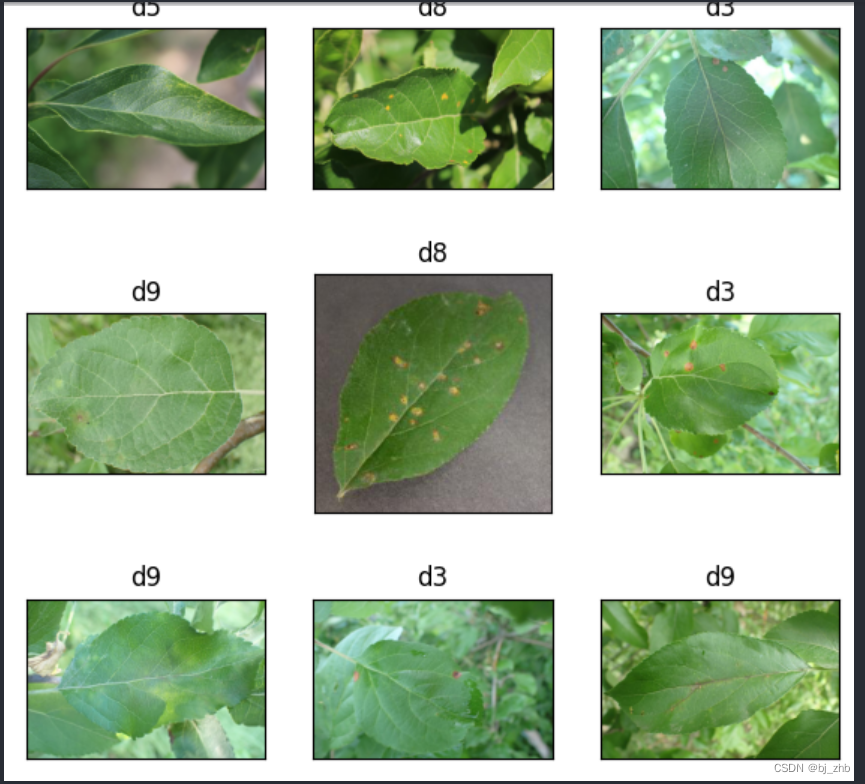

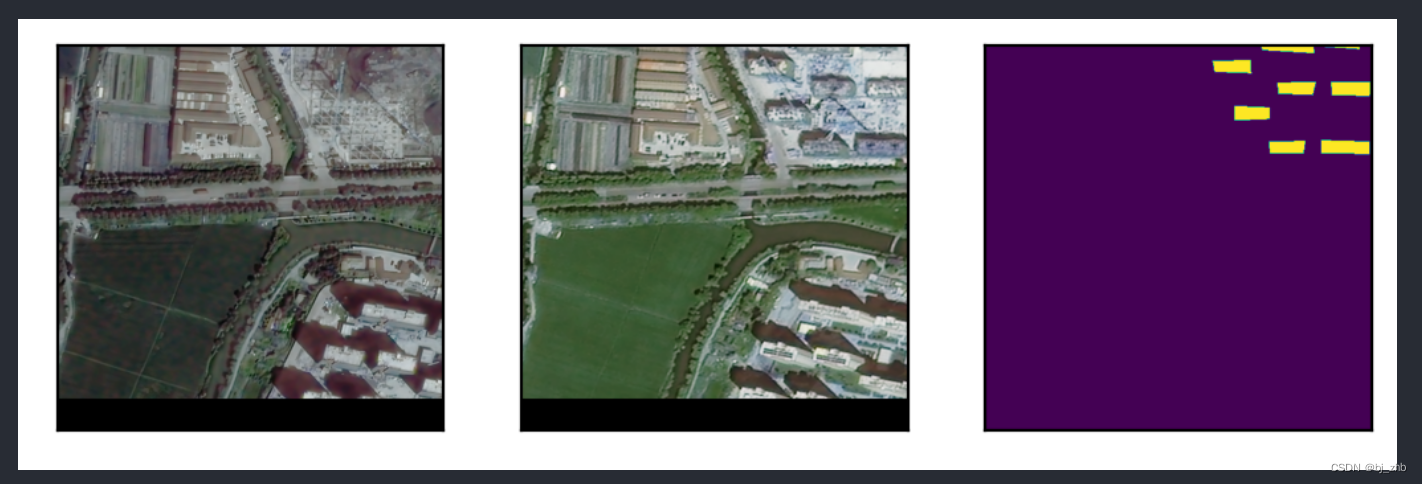

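In this task, participants visualize the data for both competition problems. For the apple disease data, they can display images of diseased apple leaves together with their labels. The building change detection dataset consists of high-resolution satellite remote-sensing images from the "Jilin-1" (吉林一号) satellite; participants display these images and visualize the building changes they contain.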
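Beyond showing the two images and the label side by side, changes are often easier to spot when the change mask is blended onto one of the images. A minimal NumPy sketch, assuming the mask is a 2-D array whose non-zero pixels mark changed buildings; `overlay_change_mask` is a hypothetical helper, not part of the competition kit.

```python
import numpy as np


def overlay_change_mask(image_rgb, mask, color=(255, 0, 0), alpha=0.5):
    """Blend `color` into `image_rgb` wherever `mask` is non-zero.

    image_rgb: uint8 array of shape (H, W, 3)
    mask:      array of shape (H, W); any non-zero value marks a changed pixel
    """
    if image_rgb.shape[:2] != mask.shape:
        raise ValueError("image and mask must have the same height and width")
    out = image_rgb.astype(np.float32)
    changed = mask != 0
    # Only changed pixels are blended; unchanged pixels keep their original values.
    out[changed] = (1 - alpha) * out[changed] + alpha * np.asarray(color, dtype=np.float32)
    return np.clip(out, 0, 255).astype(np.uint8)
```

With the arrays loaded in the code below, something like `plt.imshow(overlay_change_mask(cv2.cvtColor(img2, cv2.COLOR_BGR2RGB), label[:, :, 1]))` would highlight the changed regions in red.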

import os, sys, glob, argparse
import pandas as pd
import numpy as np
from tqdm import tqdm
%matplotlib inline
import matplotlib.pyplot as plt
import cv2
from PIL import Image
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold

import torch
torch.manual_seed(0)
torch.backends.cudnn.deterministic = False
torch.backends.cudnn.benchmark = True
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.datasets as datasets
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.autograd import Variable
from torch.utils.data.dataset import Dataset

# Training images live in ./train/<class folder>/<image>, test images in ./test/<image>
train_path = glob.glob('./train/*/*')
test_path = glob.glob('./test/*')

np.random.shuffle(train_path)
np.random.shuffle(test_path)

# Show 9 random training images in a 3x3 grid, titled with their class folder name
plt.figure(figsize=(7, 7))
for idx in range(9):
    plt.subplot(3, 3, idx + 1)
    plt.imshow(Image.open(train_path[idx]))
    plt.xticks([]); plt.yticks([])
    plt.title(train_path[idx].split('/')[-2])

import os, sys, glob, argparse
import numpy as np
import pandas as pd
import cv2
import matplotlib.pyplot as plt
from tqdm import tqdm
from PIL import Image
from sklearn.model_selection import train_test_split, StratifiedKFold, KFold

import torch
torch.manual_seed(0)
torch.backends.cudnn.deterministic = False
torch.backends.cudnn.benchmark = True
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.datasets as datasets
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.autograd import Variable
from torch.utils.data.dataset import Dataset

# 初赛训练集 / 初赛测试集 = preliminary-round training / test set.
# Image1 and Image2 are paired images; pairs are matched by sorted file order.
train_tiff1 = glob.glob('./初赛训练集/Image1/*')
train_tiff2 = glob.glob('./初赛训练集/Image2/*')
# Note: labels are read from './train', a different root than the images
train_label = glob.glob('./train/label1/*')
train_tiff1.sort()
train_tiff2.sort()
train_label.sort()

test_tiff1 = glob.glob('./初赛测试集/Image1/*')
test_tiff2 = glob.glob('./初赛测试集/Image2/*')
test_tiff1.sort()
test_tiff2.sort()

idx = 20
# cv2.imread returns BGR; convert to RGB so plt.imshow shows the true colors
img1 = cv2.cvtColor(cv2.imread(train_tiff1[idx]), cv2.COLOR_BGR2RGB)
img2 = cv2.cvtColor(cv2.imread(train_tiff2[idx]), cv2.COLOR_BGR2RGB)
label = cv2.imread(train_label[idx])

plt.figure(dpi=200)
plt.subplot(131)
plt.imshow(img1)
plt.xticks([]); plt.yticks([])
plt.subplot(132)
plt.imshow(img2)
plt.xticks([]); plt.yticks([])
plt.subplot(133)
# Show one channel of the label image, scaled up so the mask is visible
plt.imshow(label[:, :, 1] * 128)
plt.xticks([]); plt.yticks([])

The approach is simple: read the images with cv2.imread or PIL's Image.open and display them with plt.imshow, e.g. plt.imshow(Image.open(train_path[idx])).
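One caveat when mixing the two readers: cv2.imread returns channels in BGR order, while plt.imshow and PIL use RGB, so an OpenCV image passed straight to plt.imshow has red and blue swapped. A minimal NumPy sketch of the conversion; `bgr_to_rgb` is an illustrative name, and for 3-channel input it has the same effect as `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)`.

```python
import numpy as np


def bgr_to_rgb(img):
    """Reverse the channel axis of an (H, W, 3) image: BGR <-> RGB."""
    if img.ndim != 3 or img.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) array")
    # img[..., ::-1] is a reversed view; ascontiguousarray copies it into
    # contiguous memory, which some OpenCV functions require.
    return np.ascontiguousarray(img[..., ::-1])
```

The function returns a new array, so the original BGR image (for example one stored in a cache) is left unchanged.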

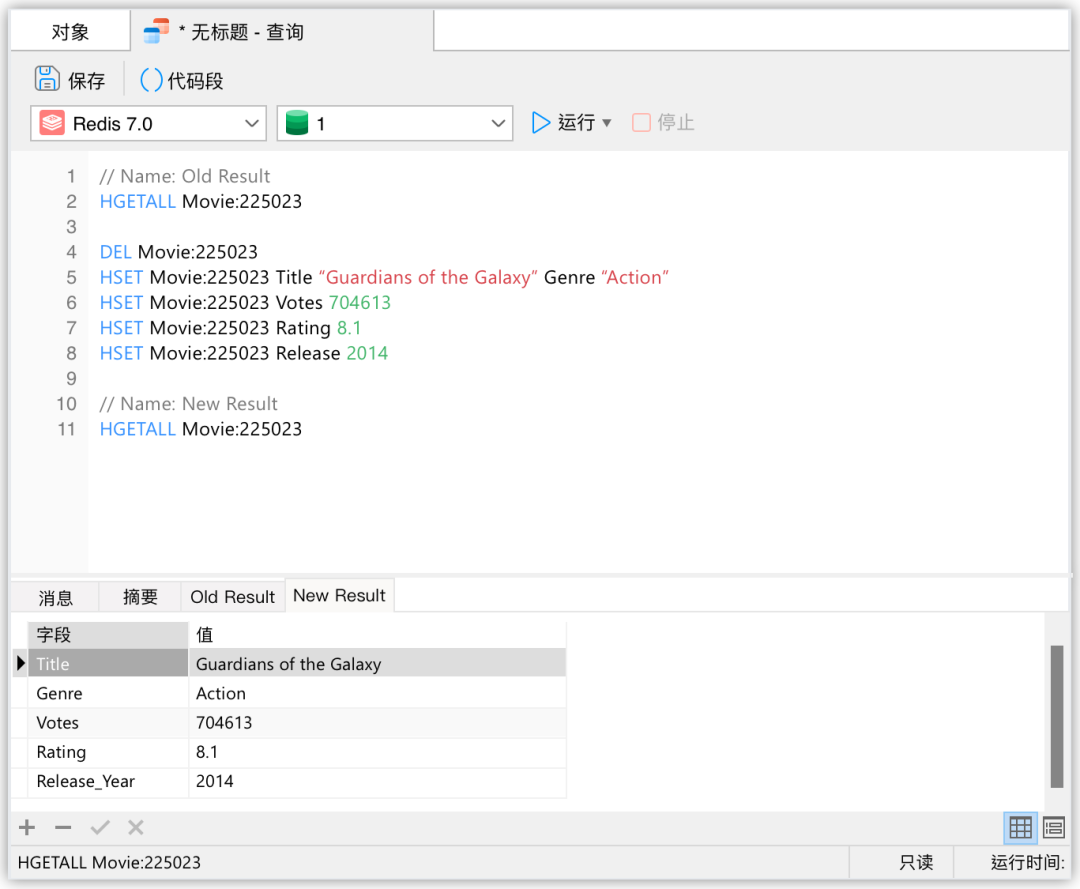

Task 2: Apple Disease Data Loading and Data Augmentation

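In the data loading stage, participants write code to read and process the provided images. In the data augmentation stage, they can apply image-processing techniques such as rotation, scaling, flipping and brightness adjustment to increase the diversity and effective size of the dataset.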

Step 1: Load the dataset with OpenCV or PIL (already done in Task 1).
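Each training image's label comes from its parent folder name (d1 to d9), which is what the dataset class that follows does with `split('/')[-2]`. A small standard-library sketch of that mapping plus a per-class count, useful for checking class balance before training. It assumes the `./train/<class>/<image>` layout used in Task 1; `CLASSES`, `label_from_path` and `class_counts` are illustrative names.

```python
from collections import Counter
from pathlib import PurePosixPath

CLASSES = ['d1', 'd2', 'd3', 'd4', 'd5', 'd6', 'd7', 'd8', 'd9']


def label_from_path(path):
    """Map './train/d3/xxx.jpg' to class index 2; paths outside d1..d9 map to -1."""
    parent = PurePosixPath(path).parent.name
    return CLASSES.index(parent) if parent in CLASSES else -1


def class_counts(paths):
    """Count how many images fall into each class index."""
    return Counter(label_from_path(p) for p in paths)
```

Calling `class_counts(train_path)` on the list from Task 1 gives a quick view of how balanced the nine disease classes are.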

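Step 2: Implement data augmentation for the image classification task with torchvision or OpenCV. The code below uses albumentations, an augmentation library that operates on OpenCV-style NumPy arrays.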
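Rotation, flipping and brightness adjustment reduce to simple array operations. A minimal NumPy-only sketch, meant as an illustration rather than a replacement for the albumentations pipeline that follows. `random_augment` and its `brightness_range` default are hypothetical choices. Scaling is left out because it needs an interpolating resize such as `cv2.resize`.

```python
import numpy as np


def random_augment(img, rng, brightness_range=(0.8, 1.2)):
    """Apply a random 90-degree rotation, a random horizontal flip and a
    random brightness scale to a uint8 (H, W, C) image."""
    # Rotate by 0, 90, 180 or 270 degrees in the height-width plane.
    img = np.rot90(img, k=int(rng.integers(4)), axes=(0, 1))
    # Flip left-right with probability 0.5.
    if rng.random() < 0.5:
        img = img[:, ::-1]
    # Scale pixel intensities, then clip back into the valid uint8 range.
    factor = rng.uniform(*brightness_range)
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)
```

Passing an explicit `np.random.default_rng(seed)` makes the augmentation reproducible. This is the same idea behind `torch.manual_seed(0)` in Task 1.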

import cv2
import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset

# Module-level cache: path -> decoded image. It grows without bound, and each
# DataLoader worker process keeps its own copy.
DATA_CACHE = {}

CLASSES = ['d1', 'd2', 'd3', 'd4', 'd5', 'd6', 'd7', 'd8', 'd9']


class XunFeiDataset(Dataset):
    def __init__(self, img_path, transform=None):
        self.img_path = img_path
        self.transform = transform  # an albumentations transform, or None

    def __getitem__(self, index):
        path = self.img_path[index]
        if path in DATA_CACHE:
            img = DATA_CACHE[path]
        else:
            # cv2 loads BGR; convert to RGB to match the ImageNet mean/std in Normalize
            img = cv2.cvtColor(cv2.imread(path), cv2.COLOR_BGR2RGB)
            DATA_CACHE[path] = img

        if self.transform is not None:
            img = self.transform(image=img)['image']

        # The class is the parent folder name (d1 to d9); test images get -1
        folder = path.split('/')[-2]
        label = CLASSES.index(folder) if folder in CLASSES else -1

        img = img.transpose([2, 0, 1])  # HWC -> CHW (NumPy transpose)
        return img, torch.from_numpy(np.array(label))

    def __len__(self):
        return len(self.img_path)
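The module-level `DATA_CACHE` above keeps every decoded image indefinitely. If memory becomes a problem, one option is a bounded least-recently-used cache from the standard library. A sketch under that assumption; `make_cached_loader` is a hypothetical helper.

```python
from functools import lru_cache


def make_cached_loader(load_fn, maxsize=2048):
    """Wrap an image-loading function in a bounded LRU cache.

    Unlike an unbounded dict, lru_cache evicts the least recently used
    entry once `maxsize` distinct paths are stored, capping memory use.
    """
    return lru_cache(maxsize=maxsize)(load_fn)
```

For example, `cached_imread = make_cached_loader(cv2.imread)` could replace the dictionary lookup in `__getitem__`. As with `DATA_CACHE`, the returned arrays are shared between calls, so they should not be modified in place.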

import argparse

import albumentations as A
import torch
import torchvision.transforms as transforms

from mydatasets.xunfeidataset import XunFeiDataset


def get_loader(args, train_path, test_path):
    # The last 1000 (shuffled) training images are held out for validation
    train_loader = torch.utils.data.DataLoader(
        XunFeiDataset(
            train_path[:-1000],
            A.Compose([
                A.RandomRotate90(),
                A.Resize(256, 256),
                A.RandomCrop(224, 224),
                A.HorizontalFlip(p=0.5),
                # Contrast-only jitter; A.RandomContrast is deprecated in favour of RandomBrightnessContrast
                A.RandomBrightnessContrast(brightness_limit=0, p=0.5),
                A.RandomBrightnessContrast(p=0.5),
                A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
            ])),
        batch_size=args.batch_size,
        shuffle=True,
        num_workers=args.num_workers,
        pin_memory=True)

    val_loader = torch.utils.data.DataLoader(
        XunFeiDataset(
            train_path[-1000:],
            A.Compose([
                A.Resize(256, 256),
                A.RandomCrop(224, 224),  # A.CenterCrop(224, 224) would make validation deterministic
                # A.HorizontalFlip(p=0.5),
                # A.RandomContrast(p=0.5),
                A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
            ])),
        batch_size=args.batch_size,
        shuffle=False,
        num_workers=args.num_workers,
        pin_memory=True)

    # Random crop/flip/contrast at test time only pay off when several passes are
    # averaged (test-time augmentation); for a single pass, consider A.CenterCrop
    test_loader = torch.utils.data.DataLoader(
        XunFeiDataset(
            test_path,
            A.Compose([
                A.Resize(256, 256),
                A.RandomCrop(224, 224),
                A.HorizontalFlip(p=0.5),
                A.RandomBrightnessContrast(brightness_limit=0, p=0.5),
                A.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
            ])),
        batch_size=args.batch_size,
        shuffle=False,
        num_workers=args.num_workers,
        pin_memory=True)

    return train_loader, val_loader, test_loader
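`get_loader` holds out the last 1000 shuffled training paths for validation, so the class mix of that hold-out is left to chance. A standard-library sketch of a per-class (stratified) hold-out as an alternative. It is not what the original code does, and `stratified_holdout` is a hypothetical helper. scikit-learn's `train_test_split(..., stratify=labels)`, already imported in Task 1, does the same job.

```python
import random
from collections import defaultdict


def stratified_holdout(paths, label_fn, val_fraction=0.1, seed=0):
    """Split paths into (train, val) so each class keeps roughly the same
    proportion in both parts."""
    by_class = defaultdict(list)
    for p in paths:
        by_class[label_fn(p)].append(p)
    rng = random.Random(seed)
    train, val = [], []
    for label in sorted(by_class):
        items = sorted(by_class[label])
        rng.shuffle(items)
        n_val = int(round(len(items) * val_fraction))
        val.extend(items[:n_val])
        train.extend(items[n_val:])
    return train, val
```

For example, `train_paths, val_paths = stratified_holdout(train_path, lambda p: p.split('/')[-2])` reuses the folder-name labelling from the dataset class.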

Step 4: Implement Mixup data augmentation.
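Written out, the two functions that follow compute the quantities below. Here λ is `lam`, π is the random permutation `index`, ℓ is `criterion` and f is the model; these symbols are introduced only for illustration. When α ≤ 0 the code sets λ = 1, which means no mixing.

```latex
\lambda \sim \mathrm{Beta}(\alpha, \alpha), \qquad
\tilde{x}_i = \lambda\, x_i + (1 - \lambda)\, x_{\pi(i)}, \qquad
\mathcal{L} = \lambda\, \ell\big(f(\tilde{x}), y\big) + (1 - \lambda)\, \ell\big(f(\tilde{x}), y_{\pi}\big)
```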

import numpy as np
import torch


def mixup_data(x, y, alpha=1.0, use_cuda=True):
    '''Returns mixed inputs, pairs of targets, and lambda'''
    # lam ~ Beta(alpha, alpha); alpha <= 0 disables mixing (lam = 1)
    if alpha > 0:
        lam = np.random.beta(alpha, alpha)
    else:
        lam = 1

    batch_size = x.size()[0]
    # A random permutation pairs each sample with another sample from the same batch
    if use_cuda:
        index = torch.randperm(batch_size).cuda()
    else:
        index = torch.randperm(batch_size)

    mixed_x = lam * x + (1 - lam) * x[index, :]
    y_a, y_b = y, y[index]
    return mixed_x, y_a, y_b, lam


def mixup_criterion(criterion, pred, y_a, y_b, lam):
    # lam-weighted combination of the losses against both sets of labels
    return lam * criterion(pred, y_a) + (1 - lam) * criterion(pred, y_b)
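`mixup_criterion` mixes two losses rather than two labels. For cross-entropy the result is the same as training against the soft target λ·onehot(y_a) + (1 − λ)·onehot(y_b), because cross-entropy is linear in the target distribution. A NumPy check of that identity with made-up logits; the 9 classes mirror d1 to d9, and the helper names are illustrative.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable row-wise log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def cross_entropy(logits, targets):
    """Mean cross-entropy for integer class targets."""
    return -log_softmax(logits)[np.arange(len(targets)), targets].mean()


def soft_cross_entropy(logits, soft_targets):
    """Mean cross-entropy against probability-vector targets."""
    return -(soft_targets * log_softmax(logits)).sum(axis=1).mean()


rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 9))  # 4 samples, 9 disease classes
y_a = np.array([0, 3, 5, 8])
y_b = y_a[rng.permutation(4)]
lam = 0.3

mixed_loss = lam * cross_entropy(logits, y_a) + (1 - lam) * cross_entropy(logits, y_b)
soft = lam * np.eye(9)[y_a] + (1 - lam) * np.eye(9)[y_b]
print(np.isclose(mixed_loss, soft_cross_entropy(logits, soft)))  # True
```

In a training loop the original helpers combine as `inputs, y_a, y_b, lam = mixup_data(x, y)` followed by `loss = mixup_criterion(criterion, model(inputs), y_a, y_b, lam)`, where `model` and `criterion` stand for the user's network and loss (for example `nn.CrossEntropyLoss()`).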