目录

前言

课题背景和意义

实现技术思路

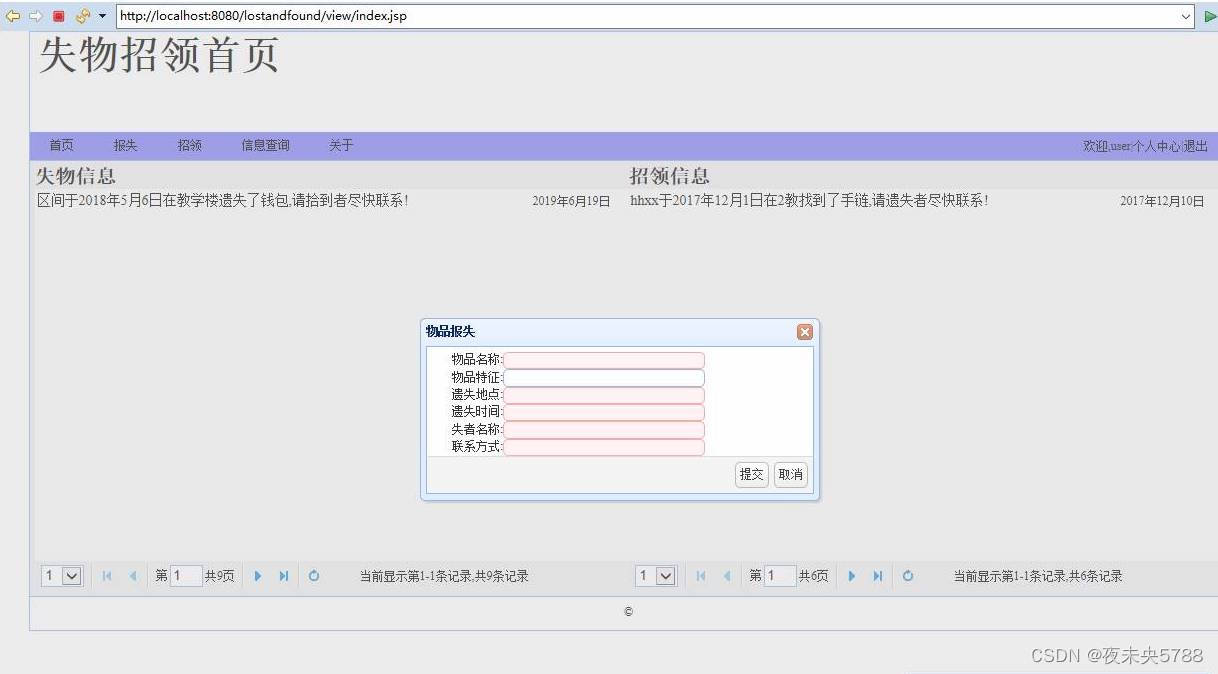

实现效果图样例

前言

📅大四是整个大学期间最忙碌的时光,一边要忙着备考或实习为毕业后面临的就业升学做准备,一边要为毕业设计耗费大量精力。近几年各个学校要求的毕设项目越来越难,有不少课题是研究生级别难度的,对本科同学来说是充满挑战。为帮助大家顺利通过和节省时间与精力投入到更重要的就业和考试中去,学长分享优质的选题经验和毕设项目与技术思路。

🚀对毕设有任何疑问都可以问学长哦!

大家好,这里是海浪学长毕设专题,本次分享的课题是

🎯基于机器视觉的深蹲检测识别

课题背景和意义

深蹲是一项健身运动,是练大腿肌肉的王牌动作,坚持做还会起到减肥的作用。深蹲被认为是增强腿部和臀部力量和围度,以及发展核心力量(core strength)必不可少的练习。深蹲时,有明确阶段和大幅度变化的基本运动,可以通过机器视觉技术来实现对深蹲的检测识别。

实现技术思路

需要的库文件

import io

from PIL import ImageFont

from PIL import ImageDraw

import csv

import cv2

from matplotlib import pyplot as plt

import numpy as np

import os

from PIL import Image

import sys

import tqdm

from mediapipe.python.solutions import drawing_utils as mp_drawing

from mediapipe.python.solutions import pose as mp_pose

技术步骤

- 收集目标练习的图像样本并对其进行姿势预测,

- 将获得的姿态标志转换为适合 k-NN 分类器的数据,并使用这些数据形成训练集,

- 执行分类本身,然后进行重复计数。

训练样本

为了建立一个好的分类器,应该为训练集收集适当的样本:理论上每个练习的每个最终状态大约有几百个样本,收集的样本涵盖不同的摄像机角度、环境条件、身体形状和运动变化,这一点很重要,能做到这样最好。但实际中如果嫌麻烦的话每种状态15-25张左右都可以,然后拍摄角度要注意多样化,最好每隔15度拍摄一张。

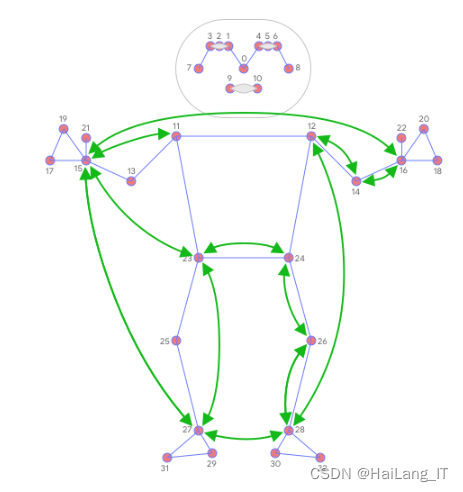

获取归一化的landmarks

要将样本转换为 k-NN 分类器训练集,我们可以在给定图像上运行 BlazePose 模型,并将预测的landmarks转储到 CSV 文件中。此外,Pose Classification Colab (Extended)通过针对整个训练集对每个样本进行分类,提供了有用的工具来查找异常值(例如,错误预测的姿势)和代表性不足的类别(例如,不覆盖所有摄像机角度)。

# 人体姿态编码模块

class FullBodyPoseEmbedder(object):

"""Converts 3D pose landmarks into 3D embedding."""

def __init__(self, torso_size_multiplier=2.5):

# Multiplier to apply to the torso to get minimal body size.

self._torso_size_multiplier = torso_size_multiplier

# Names of the landmarks as they appear in the prediction.

self._landmark_names = [

'nose',

'left_eye_inner', 'left_eye', 'left_eye_outer',

'right_eye_inner', 'right_eye', 'right_eye_outer',

'left_ear', 'right_ear',

'mouth_left', 'mouth_right',

'left_shoulder', 'right_shoulder',

'left_elbow', 'right_elbow',

'left_wrist', 'right_wrist',

'left_pinky_1', 'right_pinky_1',

'left_index_1', 'right_index_1',

'left_thumb_2', 'right_thumb_2',

'left_hip', 'right_hip',

'left_knee', 'right_knee',

'left_ankle', 'right_ankle',

'left_heel', 'right_heel',

'left_foot_index', 'right_foot_index',

]

def __call__(self, landmarks):

"""Normalizes pose landmarks and converts to embedding

Args:

landmarks - NumPy array with 3D landmarks of shape (N, 3).

Result:

Numpy array with pose embedding of shape (M, 3) where `M` is the number of

pairwise distances defined in `_get_pose_distance_embedding`.

"""

assert landmarks.shape[0] == len(self._landmark_names), 'Unexpected number of landmarks: {}'.format(

landmarks.shape[0])

# Get pose landmarks.

landmarks = np.copy(landmarks)

# Normalize landmarks.

landmarks = self._normalize_pose_landmarks(landmarks)

# Get embedding.

embedding = self._get_pose_distance_embedding(landmarks)

return embedding

def _normalize_pose_landmarks(self, landmarks):

"""Normalizes landmarks translation and scale."""

landmarks = np.copy(landmarks)

# Normalize translation.

pose_center = self._get_pose_center(landmarks)

landmarks -= pose_center

# Normalize scale.

pose_size = self._get_pose_size(landmarks, self._torso_size_multiplier)

landmarks /= pose_size

# Multiplication by 100 is not required, but makes it eaasier to debug.

landmarks *= 100

return landmarks

def _get_pose_size(self, landmarks, torso_size_multiplier):

"""Calculates pose size.

It is the maximum of two values:

* Torso size multiplied by `torso_size_multiplier`

* Maximum distance from pose center to any pose landmark

"""

# This approach uses only 2D landmarks to compute pose size.

landmarks = landmarks[:, :2]

# Hips center.

left_hip = landmarks[self._landmark_names.index('left_hip')]

right_hip = landmarks[self._landmark_names.index('right_hip')]

hips = (left_hip + right_hip) * 0.5

# Shoulders center.

left_shoulder = landmarks[self._landmark_names.index('left_shoulder')]

right_shoulder = landmarks[self._landmark_names.index('right_shoulder')]

shoulders = (left_shoulder + right_shoulder) * 0.5

# Torso size as the minimum body size.

torso_size = np.linalg.norm(shoulders - hips)

# Max dist to pose center.

pose_center = self._get_pose_center(landmarks)

max_dist = np.max(np.linalg.norm(landmarks - pose_center, axis=1))

return max(torso_size * torso_size_multiplier, max_dist)

def _get_pose_distance_embedding(self, landmarks):

"""Converts pose landmarks into 3D embedding.

We use several pairwise 3D distances to form pose embedding. All distances

include X and Y components with sign. We differnt types of pairs to cover

different pose classes. Feel free to remove some or add new.

Args:

landmarks - NumPy array with 3D landmarks of shape (N, 3).

Result:

Numpy array with pose embedding of shape (M, 3) where `M` is the number of

pairwise distances.

"""

embedding = np.array([

# One joint.

self._get_distance(

self._get_average_by_names(landmarks, 'left_hip', 'right_hip'),

self._get_average_by_names(landmarks, 'left_shoulder', 'right_shoulder')),

self._get_distance_by_names(landmarks, 'left_shoulder', 'left_elbow'),

self._get_distance_by_names(landmarks, 'right_shoulder', 'right_elbow'),

self._get_distance_by_names(landmarks, 'left_elbow', 'left_wrist'),

self._get_distance_by_names(landmarks, 'right_elbow', 'right_wrist'),

self._get_distance_by_names(landmarks, 'left_hip', 'left_knee'),

self._get_distance_by_names(landmarks, 'right_hip', 'right_knee'),

self._get_distance_by_names(landmarks, 'left_knee', 'left_ankle'),

self._get_distance_by_names(landmarks, 'right_knee', 'right_ankle'),

# Two joints.

self._get_distance_by_names(landmarks, 'left_shoulder', 'left_wrist'),

self._get_distance_by_names(landmarks, 'right_shoulder', 'right_wrist'),

self._get_distance_by_names(landmarks, 'left_hip', 'left_ankle'),

self._get_distance_by_names(landmarks, 'right_hip', 'right_ankle'),

# Four joints.

self._get_distance_by_names(landmarks, 'left_hip', 'left_wrist'),

self._get_distance_by_names(landmarks, 'right_hip', 'right_wrist'),

# Five joints.

self._get_distance_by_names(landmarks, 'left_shoulder', 'left_ankle'),

self._get_distance_by_names(landmarks, 'right_shoulder', 'right_ankle'),

self._get_distance_by_names(landmarks, 'left_hip', 'left_wrist'),

self._get_distance_by_names(landmarks, 'right_hip', 'right_wrist'),

# Cross body.

self._get_distance_by_names(landmarks, 'left_elbow', 'right_elbow'),

self._get_distance_by_names(landmarks, 'left_knee', 'right_knee'),

self._get_distance_by_names(landmarks, 'left_wrist', 'right_wrist'),

self._get_distance_by_names(landmarks, 'left_ankle', 'right_ankle'),

# Body bent direction.

# self._get_distance(

# self._get_average_by_names(landmarks, 'left_wrist', 'left_ankle'),

# landmarks[self._landmark_names.index('left_hip')]),

# self._get_distance(

# self._get_average_by_names(landmarks, 'right_wrist', 'right_ankle'),

# landmarks[self._landmark_names.index('right_hip')]),

])

return embedding

def _get_average_by_names(self, landmarks, name_from, name_to):

lmk_from = landmarks[self._landmark_names.index(name_from)]

lmk_to = landmarks[self._landmark_names.index(name_to)]

return (lmk_from + lmk_to) * 0.5

def _get_distance_by_names(self, landmarks, name_from, name_to):

lmk_from = landmarks[self._landmark_names.index(name_from)]

lmk_to = landmarks[self._landmark_names.index(name_to)]

return self._get_distance(lmk_from, lmk_to)

def _get_distance(self, lmk_from, lmk_to):

return lmk_to - lmk_from

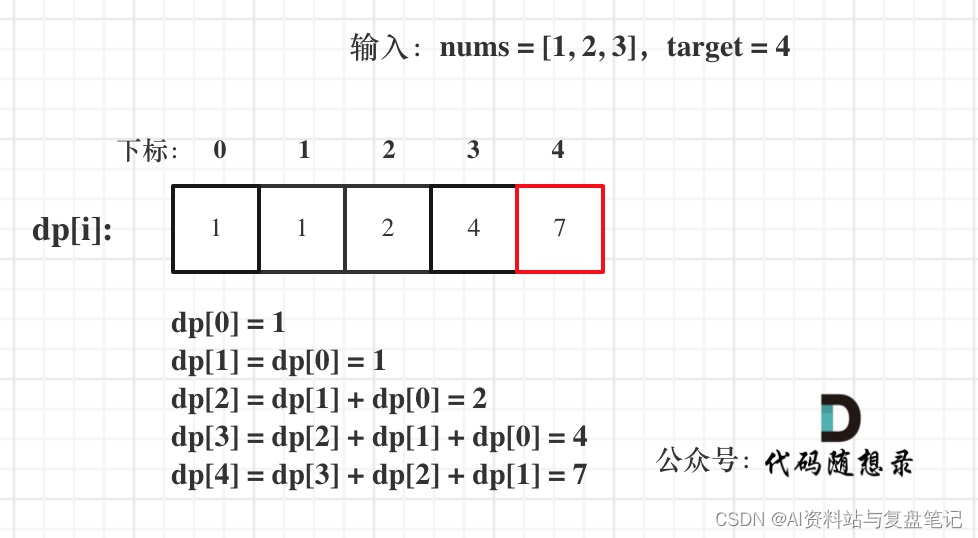

使用KNN算法分类

用于姿势分类的 k-NN 算法需要每个样本的特征向量表示和一个度量来计算两个这样的向量之间的距离,以找到最接近目标的姿势样本。

为了将姿势标志转换为特征向量,我们使用预定义的姿势关节列表之间的成对距离,例如手腕和肩膀、脚踝和臀部以及两个手腕之间的距离。由于该算法依赖于距离,因此在转换之前所有姿势都被归一化以具有相同的躯干尺寸和垂直躯干方向。

可以根据运动的特点选择所要计算的距离对(例如,引体向上可能更加关注上半身的距离对)。

为了获得更好的分类结果,使用不同的距离度量调用了两次 k-NN 搜索:

首先,为了过滤掉与目标样本几乎相同但在特征向量中只有几个不同值的样本(这意味着不同的弯曲关节和其他姿势类),使用最小坐标距离作为距离度量,

然后使用平均坐标距离在第一次搜索中找到最近的姿势簇。

最后,我们应用指数移动平均(EMA) 平滑来平衡来自姿势预测或分类的任何噪声。为此,我们不仅搜索最近的姿势簇,而且计算每个姿势簇的概率,并将其用于随着时间的推移进行平滑处理。

# 姿态分类结果平滑

class EMADictSmoothing(object):

"""Smoothes pose classification."""

def __init__(self, window_size=10, alpha=0.2):

self._window_size = window_size

self._alpha = alpha

self._data_in_window = []

def __call__(self, data):

"""Smoothes given pose classification.

Smoothing is done by computing Exponential Moving Average for every pose

class observed in the given time window. Missed pose classes arre replaced

with 0.

Args:

data: Dictionary with pose classification. Sample:

{

'pushups_down': 8,

'pushups_up': 2,

}

Result:

Dictionary in the same format but with smoothed and float instead of

integer values. Sample:

{

'pushups_down': 8.3,

'pushups_up': 1.7,

}

"""

# Add new data to the beginning of the window for simpler code.

self._data_in_window.insert(0, data)

self._data_in_window = self._data_in_window[:self._window_size]

# Get all keys.

keys = set([key for data in self._data_in_window for key, _ in data.items()])

# Get smoothed values.

smoothed_data = dict()

for key in keys:

factor = 1.0

top_sum = 0.0

bottom_sum = 0.0

for data in self._data_in_window:

value = data[key] if key in data else 0.0

top_sum += factor * value

bottom_sum += factor

# Update factor.

factor *= (1.0 - self._alpha)

smoothed_data[key] = top_sum / bottom_sum

return smoothed_data

计数器计数

为了计算重复次数,该算法监控目标姿势类别的概率。深蹲的“向上”和“向下”终端状态:

当“下”位姿类的概率第一次通过某个阈值时,算法标记进入“下”位姿类。

一旦概率下降到阈值以下(即起身超过一定高度),算法就会标记“向下”姿势类别,退出并增加计数器。

为了避免概率在阈值附近波动(例如,当用户在“向上”和“向下”状态之间暂停时)导致幻像计数的情况,用于检测何时退出状态的阈值实际上略低于用于检测状态退出的阈值。

实现效果图样例

我是海浪学长,创作不易,欢迎点赞、关注、收藏、留言。

毕设帮助,疑难解答,欢迎打扰!

![[附源码]计算机毕业设计基于web的羽毛球管理系统](https://img-blog.csdnimg.cn/7cc5ce16928743089ff4026b83d88d33.png)

![Leetcode 1687. 从仓库到码头运输箱子 [四种解法] 动态规划 从朴素出发详细剖析优化步骤](https://img-blog.csdnimg.cn/b9898ac88fb8438da1284d8913fb7e49.png)