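Background

Platform: NVIDIA Jetson AGX Xavier board

This post records how I wrote a C++ program to test the Python implementation of the monocular 3D object detector DEVIANT (https://blog.csdn.net/qq_39523365/article/details/130982966?spm=1001.2014.3001.5501). The code is as follows:

File structure

Code: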

test.cpp

#include <opencv2/opencv.hpp>

#include <iostream>

#include <time.h>

#include "LD_Detection.h"

#include <torch/torch.h>

#include <sys/timeb.h>

LD_Detection * InitDetection(int m_ichannel, int m_iWidth, int m_iHeight) {

// Directory that contains the Python script

// LD_Detection only stores the raw const char* pointers, so the strings are static to stay valid after this function returns

static const std::string application_dir = std::string("/home/nvidia/sd/DEVIANT") + "/code/tools/";

const char *m_cPyDir = application_dir.c_str();

static const std::string m_cPyFile = "demo_inference_zs";

static const std::string m_cPyFunction = "detect_online";

LD_Detection * m_pLD_Detection = new LD_Detection(m_ichannel, m_iWidth, m_iHeight, m_cPyDir, m_cPyFile.c_str(), m_cPyFunction.c_str() );

std::cout << "*****************" << std::endl;

return m_pLD_Detection;

}

int main()

{

printf("##########%d***",torch::cuda::is_available());

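// Open the input with OpenCV (a camera or a video file; any way of getting images works)

//cv::VideoCapture cap = cv::VideoCapture(0);

cv::VideoCapture cap;

cap.open("/home/nvidia/sd/128G/test/data/video/0320/result2.avi");

// Set the frame width and height. The exact size matters little for the model, because the Python script

// resizes every frame to its fixed network input size (768x512 in demo_inference_zs.py).

// It must, however, match the width and height passed to InitDetection below.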

cap.set(cv::CAP_PROP_FRAME_WIDTH, 1920);

cap.set(cv::CAP_PROP_FRAME_HEIGHT, 1080);

std::string videoname = "/home/nvidia/sd/128G/test/data/video/0320/result.avi";

int videoFps = cap.get(cv::CAP_PROP_FPS);

int myFourCC = cv::VideoWriter::fourcc('m', 'p', '4', 'v');

cv::VideoWriter g_writer = cv::VideoWriter(videoname, myFourCC, videoFps, cv::Size(cap.get(cv::CAP_PROP_FRAME_WIDTH), cap.get(cv::CAP_PROP_FRAME_HEIGHT)));

LD_Detection * m_pLD_Detection = InitDetection(0,1920,1080);

// sleep(5);

cv::Mat frame;

int count = 0;

while (cap.isOpened())

{

// Read one frame

cap.read(frame);

if (frame.empty())

{

std::cout << "Read frame failed!" << std::endl;

break;

}

std::cout << "------------------------" << std::endl;

// Run detection: one call is enough to get the prediction

// Note: clock() measures the CPU time used by the process, not wall-clock time

clock_t start = clock();

//void LD_Detection::startDetect(int camChan, unsigned char *image)

m_pLD_Detection->startDetect(count, frame.data);

clock_t ends = clock();

// std::cout << start << std::endl;

// std::cout << ends << std::endl;

std::cout <<"Running Time : "<<(double)(ends - start) / CLOCKS_PER_SEC << " s" << std::endl;

// Write the frame to the output video

g_writer << frame;

// if(count++ > 2000)

// {

// break;

// }

//cv::imshow("", frame);

//if (cv::waitKey(1) == 27) break;

}

g_writer.release();

return 0;

}

LD_Detection.cpp

// #include <numpy/arrayobject.h>

#include </home/nvidia/sd/miniforge-pypy3/envs/centerpoint/include/python3.6m/Python.h>

#include </home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages/numpy/core/include/numpy/arrayobject.h>

// #include </usr/include/python2.7/Python.h>

// #include </usr/lib/python2.7/dist-packages/numpy/core/include/numpy/arrayobject.h>

//#include <json.h>

//#include <writer.h>

//#include <reader.h>

//#include <value.h>

#include "LD_Detection.h"

#include <thread>

// #include <QPainter>

// #include <QThread>

PyObject *pModule = nullptr;

PyObject *pDict = nullptr;

PyObject *pFunc = nullptr;

PyObject *pFunc1 = nullptr;

// PyObject *pFuncrelease = nullptr;

PyThreadState *pyThreadState = nullptr;

// LD_Detection::LD_Detection(QObject *parent, int camCount, int width, int

// height, const char *pyDir, const char *pyFile, const char *pyFunction) :

// QObject(parent)

// {

// // Python脚本所在路径(绝对路径)

// m_cPyDir = pyDir;

// // Python脚本文件名

// m_cPyFile = pyFile;

// // Python脚本中要运行的函数名

// m_cPyFunction = pyFunction;

//

// m_iCamCount = camCount;

// m_iCamWidth = width;

// m_iCamHeight = height;

// InitPython();

// ImportPyModule();

// m_bDetection = false;

//

// m_pDetectionthread = nullptr;

// }

LD_Detection::LD_Detection(int camChan, int width, int height,

const char *pyDir, const char *pyFile,

const char *pyFunction) {

// Python脚本所在路径(绝对路径)

m_cPyDir = pyDir;

// Python脚本文件名

m_cPyFile = pyFile;

// Python脚本中要运行的函数名

m_cPyFunction = pyFunction;

m_iCamChan = camChan;

m_iCamWidth = width;

m_iCamHeight = height;

InitPython();

ImportPyModule();

m_bDetection = false;

std::cout << m_iCamWidth <<"-----startDetect----- " <<m_iCamHeight << std::endl;

// std::string videoname = "/Leador_storage/zs/result/result.avi";

// int myFourCC = cv::VideoWriter::fourcc('m', 'p', '4', 'v');

// g_writer = cv::VideoWriter(videoname, myFourCC, 20, cv::Size(m_iCamWidth, m_iCamHeight));

}

LD_Detection::~LD_Detection() {

ReleasePython();

// g_writer.release();

}

bool LD_Detection::InitPython() {

// qDebug() << "-----Initialize Python-----" << endl;

Py_Initialize();

if (!Py_IsInitialized()) {

// qDebug() << "【error】: Python is not initialized" << endl;

std::cout << "Python is not initialized";

return false;

}

PyEval_InitThreads();

PyRun_SimpleString("import sys");

PyRun_SimpleString("print(sys.version)");

PyRun_SimpleString("print(sys.executable)");

std::cout << "-----------000-------------" << std::endl;

std::cout << "-----------111-------------" << std::endl;

import_array();

std::cout << "-----------222-------------" << std::endl;

if (PyErr_Occurred()) {

std::cerr << "【error】: Failed to import numpy Python module" << std::endl;

return false;

}

assert(PyArray_API);

pyThreadState = PyEval_SaveThread();

return true;

}

bool LD_Detection::ReleasePython() {

// PyObject_CallObject(pFuncrelease, NULL);

PyEval_RestoreThread(pyThreadState);

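// pDict comes from PyModule_GetDict, which returns a borrowed reference, so it must not be DECREF'ed

// Py_XDECREF tolerates NULL in case the import or the attribute lookup failed earlier

Py_XDECREF(pFunc);

Py_XDECREF(pFunc1);

Py_XDECREF(pModule);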

Py_Finalize();

return true;

}

void LD_Detection::ImportPyModule() {

PyGILState_STATE state = PyGILState_Ensure();

// qDebug() << "-----Add python file path-----" << endl;

std::cout << "-----Add python file path-----" << m_cPyDir << std::endl;

char tempPath[256] = {};

PyRun_SimpleString("import sys");

// snprintf keeps the command inside the 256-byte buffer even for a long path

snprintf(tempPath, sizeof(tempPath), "sys.path.append('%s')", m_cPyDir);

PyRun_SimpleString(tempPath);

PyRun_SimpleString("print('curr sys.path: ', sys.path)");

PyRun_SimpleString("print(sys.version)");

PyRun_SimpleString("print(sys.executable)");

std::cout << "PyRun_SimpleString " << tempPath << std::endl;

// qDebug() << "-----Add python file-----" << endl;

std::cout << "-----Add python file----- " << m_cPyFile << std::endl;

pModule = PyImport_ImportModule(m_cPyFile);

std::cout << "-----Add zs file----- " << m_cPyFile << std::endl;

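if (!pModule) {

// Print the Python traceback: an exception raised while importing the script also ends up here

PyErr_Print();

std::cout << "-----Can't find python file-----" << m_cPyFile << std::endl;

// Release the GIL on every exit path, otherwise later PyGILState_Ensure calls can block

PyGILState_Release(state);

return;

}

pDict = PyModule_GetDict(pModule);

if (!pDict) {

PyGILState_Release(state);

return;

}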

// qDebug() << "-----Get python function-----" << endl;

std::cout << "-----Get python function----- "

<< " pModule " << pModule << " m_cPyFunction " << m_cPyFunction<< endl;

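pFunc1 = PyObject_GetAttrString(pModule, "print_version");

// Guard against a missing function or a failed call before reading the result

PyObject *pValue_result = pFunc1 ? PyObject_CallObject(pFunc1, NULL) : nullptr;

if (!pValue_result) PyErr_Print();

long result = pValue_result ? PyLong_AsLong(pValue_result) : -1;

Py_XDECREF(pValue_result);

std::cout << "-----zssssssss----- " << result << endl;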

// pFuncrelease = PyObject_GetAttrString(pModule, "release_resource");

pFunc = PyObject_GetAttrString(pModule, m_cPyFunction);

// pFunc = PyObject_GetAttrString(pModule, "detect_online");

// PyObject_CallObject(pFunc, NULL);

std::cout << "-----Add python function-----"<< m_cPyFunction << endl;

if (!pFunc || !PyCallable_Check(pFunc)) {

std::cout << "-----Can't find python function----- " << pFunc << std::endl;

// Release the GIL before leaving early

PyGILState_Release(state);

return;

}

PyGILState_Release(state);

}

void LD_Detection::slotStartDetection() {

m_bDetection = true;

// Start the detection worker thread

m_pDetectionthread =

new std::thread(&LD_Detection::StartDetectionSingle, this);

}

void LD_Detection::slotStopDetection() {

m_bDetection = false;

if (m_pDetectionthread != nullptr) {

m_pDetectionthread->join();

delete m_pDetectionthread;

m_pDetectionthread = nullptr;

}

}

void LD_Detection::setParam(int nChan, unsigned char *image) {

m_iChan = nChan;

m_VideoOrgImage = image;

}

void LD_Detection::setParamMulti(unsigned char *images[]) {

for (int i = 0; i < m_iCamCount; i++) {

m_VideoOrgImages[i] = images[i];

}

}

// Run detection on a single image passed in directly

void LD_Detection::startDetect(int camChan, unsigned char *image) {

PyGILState_STATE state = PyGILState_Ensure();

PyObject *pArgs = PyTuple_New(2);

cv::Mat temp = cv::Mat(m_iCamHeight, m_iCamWidth, CV_8UC3, image, 0);

// std::cout << "-----startDetect----- " << m_iCamWidth << std::endl;

npy_intp Dims[3] = {temp.rows, temp.cols, temp.channels()};

PyObject *image_channel = Py_BuildValue("i", camChan);

PyObject *image_array =

PyArray_SimpleNewFromData(3, Dims, NPY_UBYTE, temp.data);

PyTuple_SetItem(pArgs, 0, image_channel);

PyTuple_SetItem(pArgs, 1, image_array);

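PyObject *pReturnValue = PyObject_CallObject(pFunc, pArgs);

// pArgs owns image_channel and image_array (PyTuple_SetItem steals the references), so one DECREF frees all three

Py_DECREF(pArgs);

// A NULL return means the Python call raised an exception; print it instead of dereferencing NULL

if (!pReturnValue) PyErr_Print();

if (pReturnValue && PyList_Check(pReturnValue)) {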

int SizeOfObjects = PyList_Size(pReturnValue);

std::cout << "Size of return list: " << SizeOfObjects << std::endl;

sign_objects.clear();

for (int i = 0; i < SizeOfObjects; i++) {

PyObject *pObject = PyList_GetItem(pReturnValue, i);

int SizeOfObject = PyList_Size(pObject);

std::cout << "Size of return sublist: " << SizeOfObject << std::endl;

struct SignObject object;

PyArg_Parse(PyList_GetItem(pObject, 2), "f", &object.x_min);

PyArg_Parse(PyList_GetItem(pObject, 3), "f", &object.y_min);

PyArg_Parse(PyList_GetItem(pObject, 4), "f", &object.x_max);

PyArg_Parse(PyList_GetItem(pObject, 5), "f", &object.y_max);

PyArg_Parse(PyList_GetItem(pObject, 13), "f", &object.confidence);

PyArg_Parse(PyList_GetItem(pObject, 0), "i", &object.clas);

// PyArg_Parse(PyList_GetItem(pObject, 6), "s", &object.clas_name);

std::cout << "Object's x_min: " << object.x_min << std::endl;

std::cout << "Object's y_min: " << object.y_min << std::endl;

std::cout << "Object's x_max: " << object.x_max << std::endl;

std::cout << "Object's y_max: " << object.y_max << std::endl;

std::cout << "Object's confidence: " << object.confidence << std::endl;

std::cout << "Object's class id: " << object.clas << std::endl;

sign_objects.push_back(object);

cv::Point point_min((int)object.x_min, (int)object.y_min),

point_max((int)object.x_max, (int)object.y_max);

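cv::Scalar colorRectangle(255, 0, 0);

int thicknessRectangle = 3;

// The boxes are expressed in the 768x512 resolution used by the Python script, so the image is resized

// once before drawing. The resize allocates a new buffer for temp; the caller's frame is left untouched.

if (temp.cols != 768 || temp.rows != 512) cv::resize(temp, temp, cv::Size(768, 512));

cv::rectangle(temp, point_min, point_max, colorRectangle,

thicknessRectangle);

cv::imwrite("test.jpg", temp);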

// cv::imshow("test", temp);

// g_writer<<temp;

}

}

// Drop the reference to the returned list (Py_XDECREF tolerates NULL)

Py_XDECREF(pReturnValue);

PyGILState_Release(state);

}

// Run detection on several images passed in directly

void LD_Detection::startDetection(unsigned char *images[]) {

PyGILState_STATE state = PyGILState_Ensure();

PyObject *pArgImages = PyTuple_New(m_iCamChan);

for (int i = 0; i < m_iCamChan; i++) {

cv::Mat temp = cv::Mat(m_iCamHeight, m_iCamWidth, CV_8UC3, images[i], 0);

npy_intp Dims[3] = {temp.rows, temp.cols, temp.channels()};

PyObject *image_array =

PyArray_SimpleNewFromData(3, Dims, NPY_UBYTE, temp.data);

PyTuple_SetItem(pArgImages, i, image_array);

}

PyObject *pArgs = PyTuple_New(1);

PyTuple_SetItem(pArgs, 0, pArgImages);

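// Keep the result so that it can be released; NULL means the Python call raised

PyObject *pResult = PyObject_CallObject(pFunc, pArgs);

if (!pResult) PyErr_Print();

Py_XDECREF(pResult);

Py_DECREF(pArgs);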

PyGILState_Release(state);

// QThread::msleep(1);

}

// Multi-camera detection

void LD_Detection::StartDetectionMulti() {

// qDebug() << "-----Video data to python function-----" << endl;

while (m_bDetection) {

PyGILState_STATE state = PyGILState_Ensure();

PyObject *pArgImages = PyTuple_New(m_iCamCount);

for (int i = 0; i < m_iCamCount; i++) {

cv::Mat temp =

cv::Mat(m_iCamHeight, m_iCamWidth, CV_8UC3, m_VideoOrgImages[i], 0);

npy_intp Dims[3] = {temp.rows, temp.cols, temp.channels()};

PyObject *image_array =

PyArray_SimpleNewFromData(3, Dims, NPY_UBYTE, temp.data);

PyTuple_SetItem(pArgImages, i, image_array);

}

PyObject *pArgs = PyTuple_New(1);

PyTuple_SetItem(pArgs, 0, pArgImages);

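// Keep the result so that it can be released; NULL means the Python call raised

PyObject *pResult = PyObject_CallObject(pFunc, pArgs);

if (!pResult) PyErr_Print();

Py_XDECREF(pResult);

Py_DECREF(pArgs);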

PyGILState_Release(state);

// QThread::msleep(1);

std::chrono::milliseconds dura(2);

std::this_thread::sleep_for(dura);

}

}

// Single Camera detection

void LD_Detection::StartDetectionSingle() {

// qDebug() << "-----Video data to python function-----" << endl;

int count = 0;

while (m_bDetection) {

PyGILState_STATE state = PyGILState_Ensure();

PyObject *pArgs = PyTuple_New(2);

PyObject *pReturnValue;

cv::Mat temp =

cv::Mat(m_iCamHeight, m_iCamWidth, CV_8UC3, m_VideoOrgImage, 0);

// qDebug() << "CVImage exist: " << temp.empty() << endl;

// cv::cvtColor(temp, *image, cv::COLOR_BGR2RGB);

// QString title = QString("test%1").arg(m_iChan);

// cv::imshow(title.toStdString(), *image);

npy_intp Dims[3] = {temp.rows, temp.cols, temp.channels()};

PyObject *image_channel = Py_BuildValue("i", count);

PyObject *image_array =

PyArray_SimpleNewFromData(3, Dims, NPY_UBYTE, temp.data);

PyTuple_SetItem(pArgs, 0, image_channel);

PyTuple_SetItem(pArgs, 1, image_array);

// PyObject_CallObject(pFunc, pArgs);

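pReturnValue = PyObject_CallObject(pFunc, pArgs);

// pArgs owns both arguments (PyTuple_SetItem steals the references)

Py_DECREF(pArgs);

// A NULL return means the Python call raised an exception

if (!pReturnValue) PyErr_Print();

if (pReturnValue && PyList_Check(pReturnValue)) {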

int SizeOfObjects = PyList_Size(pReturnValue);

// qDebug() << "Size of return list" << SizeOfObjects << endl;

sign_objects.clear();

for (int i = 0; i < SizeOfObjects; i++) {

PyObject *pObject = PyList_GetItem(pReturnValue, i);

int SizeOfObject = PyList_Size(pObject);

// for(int j = 0; j < pObject; j ++)

// {

// float item = 0.0;

// PyObject *pItem = PyList_GetItem(pObject, j);

// PyArg_Parse(pItem, "f", &item);

// qDebug() << "Data: " << item << " ";

// }

struct SignObject object;

// x_min .. y_max are float members of SignObject, so they are parsed with "f" ("f" also accepts Python ints)

PyArg_Parse(PyList_GetItem(pObject, 0), "f", &object.x_min);

PyArg_Parse(PyList_GetItem(pObject, 1), "f", &object.y_min);

PyArg_Parse(PyList_GetItem(pObject, 2), "f", &object.x_max);

PyArg_Parse(PyList_GetItem(pObject, 3), "f", &object.y_max);

PyArg_Parse(PyList_GetItem(pObject, 4), "f", &object.confidence);

PyArg_Parse(PyList_GetItem(pObject, 5), "i", &object.clas);

PyArg_Parse(PyList_GetItem(pObject, 6), "s", &object.clas_name);

// qDebug() << "Object's x_min: " << object.x_min;

// qDebug() << "Object's y_min: " << object.y_min;

// qDebug() << "Object's x_max: " << object.x_max;

// qDebug() << "Object's y_max: " << object.y_max;

// qDebug() << "Object's confidence: " << object.confidence;

// qDebug() << "Object's class name: " << object.clas_name;

sign_objects.push_back(object);

cv::Point point_min(object.x_min, object.y_min),

point_max(object.x_max, object.y_max);

cv::Scalar colorRectangle(255, 0, 0);

int thicknessRectangle = 3;

cv::rectangle(temp, point_min, point_max, colorRectangle,

thicknessRectangle);

}

// QString title = QString("test%1").arg(m_iChan);

// cv::imshow(title.toStdString(), temp);

// qDebug() << "Number of objects in cur image: " << sign_objects.size()

// << endl;

}

count++;

// Drop the reference to the returned list (Py_XDECREF tolerates NULL)

Py_XDECREF(pReturnValue);

PyGILState_Release(state);

// QThread::msleep(1);

std::chrono::milliseconds dura(1);

std::this_thread::sleep_for(dura);

}

}
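
The parsing loop in startDetect reads fixed positions of every returned row: index 0 as an integer class id, indices 2 to 5 as the 2D box, and index 13 as the confidence. It also only proceeds when PyList_Check succeeds, which is true for Python lists but not for NumPy arrays. As a minimal sketch of how the Python side could guarantee that shape, the hypothetical helper `to_cpp_rows` below (it is not part of DEVIANT) converts an iterable of detection rows into plain nested lists. It assumes that `dets` holds one numeric row per detected object.

```python
# Column layout read by LD_Detection::startDetect (taken from the C++ code above).
CLS_IDX = 0
BBOX_IDX = (2, 3, 4, 5)  # x_min, y_min, x_max, y_max
SCORE_IDX = 13
MIN_ROW_LEN = SCORE_IDX + 1


def to_cpp_rows(dets):
    """Convert an iterable of numeric rows into a list of plain Python lists.

    Hypothetical helper: every value becomes a Python float, except the class
    id at CLS_IDX, which becomes an int because the C++ side parses it with "i".
    """
    rows = []
    for det in dets:
        row = [float(value) for value in det]
        if len(row) < MIN_ROW_LEN:
            raise ValueError(
                "expected at least %d values per row, got %d" % (MIN_ROW_LEN, len(row))
            )
        row[CLS_IDX] = int(row[CLS_IDX])
        rows.append(row)
    return rows
```

Under the assumption above about the shape of `dets`, `detect_online` could end with `return to_cpp_rows(dets)` so that the C++ loop always receives a list of lists.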

LD_Detection.h

#ifndef LD_DETECTION_H

#define LD_DETECTION_H

#include <iostream>

// #include <QObject>

// #include <QDebug>

// #include <QImage>

// #include <QVector>

#include <opencv2/highgui.hpp>

#include <opencv2/imgcodecs.hpp>

#include <opencv2/opencv.hpp>

#include <thread>

#include <numpy/arrayobject.h>

#define MAX_CAMER_NUM 8

using namespace std;

class LD_Detection {

public:

struct SignObject {

float x_min;

float y_min;

float x_max;

float y_max;

float confidence;

int clas;

char *clas_name;

};

std::vector<SignObject> sign_objects;

public:

// LD_Detection(int camCount, int width, int height, const char *pyDir,

// const char *pyFile, const char *pyFunction);

LD_Detection(int camChan, int width, int height, const char *pyDir,

const char *pyFile, const char *pyFunction);

~LD_Detection();

void setParam(int nChan, unsigned char *image);

void setParamMulti(unsigned char *images[]);

void startDetect(int camChan, unsigned char *image);

void startDetection(unsigned char *images[]);

void StartDetectionSingle();

void StartDetectionMulti();

void RunDetection(bool bDetection);

private:

bool InitPython();

bool ReleasePython();

void ImportPyModule();

private:

int m_iCamChan = 0;

int m_iCamCount = 0;

int m_iCamWidth = 0;

int m_iCamHeight = 0;

const char *m_cPyDir;

const char *m_cPyFile;

const char *m_cPyFunction;

unsigned char *m_VideoOrgImage;

unsigned char *m_VideoOrgImages[MAX_CAMER_NUM];

int m_iChan = 0;

// Initialized to nullptr because slotStopDetection tests the pointer before joining

std::thread *m_pDetectionthread = nullptr;

bool m_bDetection;

cv::VideoWriter g_writer;

void slotStartDetection();

void slotStopDetection();

};

#endif // LD_DETECTION_H

CMakeLists.txt

#1. CMake version: minimum required version of CMake

cmake_minimum_required(VERSION 3.2)

#2. Project name, usually the same as the name of the project folder

PROJECT(TEST)

option(USE_PYTORCH "Set ON to switch the detection mode to USE_PYTORCH" ON)

option(NO_Encrtyption "Set ON for test mode: no license is required and the model is unencrypted" OFF)

set(op_cnt 0)

if (USE_PYTORCH)

math(EXPR op_cnt ${op_cnt}+1)

message("Using RefineDet detection 2")

endif()

#3. Header file search paths

INCLUDE_DIRECTORIES(

${CMAKE_CURRENT_SOURCE_DIR}

${CMAKE_CURRENT_SOURCE_DIR}/include

)

#set(OpenCV_DIR /usr/local/share/OpenCV)

find_package (OpenCV REQUIRED NO_CMAKE_FIND_ROOT_PATH)

if(OpenCV_FOUND)

INCLUDE_DIRECTORIES(${OpenCV_INCLUDE_DIRS})

message(STATUS "OpenCV library status:")

message(STATUS " version: ${OpenCV_VERSION}")

message(STATUS " libraries: ${OpenCV_LIBS}")

message(STATUS " include path: ${OpenCV_INCLUDE_DIRS}")

endif()

if(USE_PYTORCH)

IF(${CMAKE_SYSTEM_PROCESSOR} STREQUAL "aarch64")

MESSAGE(STATUS "Now is aarch64 OS's.")

#Set library path

set(CMAKE_PREFIX_PATH /home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages/torch)

ELSE()

MESSAGE(STATUS "Now is UNIX-like PC OS's.")

#Set library path

#set(CMAKE_PREFIX_PATH /home/he/miniconda/envs/py36/lib/python3.6/site-packages/torch)

#set(TensorRT_DIR /home/he/work/TensorRT/tensorrt_v5.1_x64_release)

#if(DEFINED TensorRT_DIR)

# include_directories("${TensorRT_DIR}/include" )

# link_directories("${TensorRT_DIR}/lib")

# link_directories("/usr/local/cuda/lib64")

#endif(DEFINED TensorRT_DIR)

ENDIF()

endif()

message("${ALGORITHM_SDK_INCLUDE_DIR}")

find_package (Torch REQUIRED NO_CMAKE_FIND_ROOT_PATH)

#4. Source directories of the app

#AUX_SOURCE_DIRECTORY(libosa/src DIR_SRCS)

AUX_SOURCE_DIRECTORY(src DIR_SRCS)

AUX_SOURCE_DIRECTORY(test TEST_SRCS)

SET(CMAKE_BUILD_TYPE RELEASE) #DEBUG RELEASE

# Select the compiler

SET(CMAKE_C_COMPILER gcc)

SET(CMAKE_CXX_COMPILER g++)

if(CMAKE_COMPILER_IS_GNUCXX)

#add_compile_options(-std=c++11)

#message(STATUS "optional:-std=c++11")

endif(CMAKE_COMPILER_IS_GNUCXX)

#5. Collect the source files. Every source file used by the build must be listed here; otherwise the build may succeed, but all kinds of problems show up at run time

SET(PROC_ALL_FILES

${DIR_SRCS}

)

set(TEST_APP Test_app)

# cuda

include_directories("/usr/local/cuda/include"

/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/include/python3.6m

/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages/numpy/core/include/numpy

)

link_directories("/usr/local/cuda/lib64")

link_directories(BEFORE "/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib")

link_directories(BEFORE "/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages/numpy/core")

link_directories(BEFORE "/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/config-3.6m-aarch64-linux-gnu")

#6. Add the build targets

#ADD_EXECUTABLE(${PROJECT_NAME} ${PROC_ALL_FILES}) # build as an executable

add_library(${PROJECT_NAME} SHARED ${PROC_ALL_FILES}) # build as a shared library

add_executable(${TEST_APP} ${TEST_SRCS})

FILE(GLOB_RECURSE LIBS_CUDA /usr/local/cuda/lib64)

FILE(GLOB_RECURSE LIBS_BOOST_SYS /usr/lib/x86_64-linux-gnu/libboost_system.so)

#7. Link libraries: add the libraries the targets need. For example, to use libm.so (naming rule: lib + name + .so), add the name of that library

TARGET_LINK_LIBRARIES(${PROJECT_NAME} ${OpenCV_LIBS})

TARGET_LINK_LIBRARIES(${PROJECT_NAME} ${TORCH_LIBRARIES} pthread)

TARGET_LINK_LIBRARIES(${PROJECT_NAME} -ldl -lcudart -lcublas -lcurand -lcudnn)

TARGET_LINK_LIBRARIES(${TEST_APP} ${OpenCV_LIBS})

TARGET_LINK_LIBRARIES(${TEST_APP} ${TORCH_LIBRARIES} pthread)

TARGET_LINK_LIBRARIES(${TEST_APP} ${PROJECT_NAME} )

#TARGET_LINK_LIBRARIES(${TEST_APP} -ldl)

TARGET_LINK_LIBRARIES(${TEST_APP} -ldl /home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/libpython3.6m.so)

1.txt

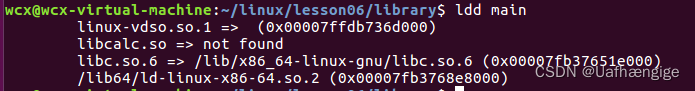

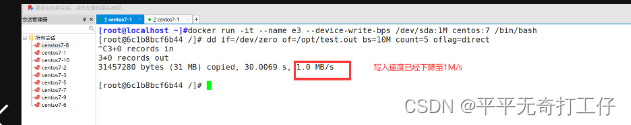

export LD_LIBRARY_PATH=/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib:/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages/numpy/core:/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/config-3.6m-aarch64-linux-gnu:$LD_LIBRARY_PATH

On the NVIDIA board, confirm that the installed torch build actually has CUDA support.

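link_directories lists the directories of the libraries that the code links against at build time. It does not cover the libraries that those libraries depend on in turn. When such a transitive dependency is resolved to the wrong file, LD_LIBRARY_PATH can be used to specify where libraries are looked up when the program runs: LD_LIBRARY_PATH is the path the program searches for shared libraries at run time.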
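
One way to check what the run-time loader can actually resolve is to try loading a library from a Python process started in the same shell, after the `export` line above. This is only a sketch: `can_load` is a hypothetical helper name, and it relies on `ctypes.CDLL` raising `OSError` when the loader cannot open the library.

```python
import ctypes


def can_load(library_name):
    """Return True if the dynamic loader can open library_name in this process."""
    try:
        ctypes.CDLL(library_name)
    except OSError:
        return False
    return True


if __name__ == "__main__":
    # Library name taken from the CMakeLists.txt above; adjust as needed.
    print("libpython3.6m.so loadable:", can_load("libpython3.6m.so"))
```

LD_LIBRARY_PATH is read when a process starts, so it has to be exported before the process is launched, not from inside it.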

Locating a crash with gdb: 1. gdb ./Test_app; 2. r (run the program); 3. where or bt (print the backtrace)
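
The gdb backtrace shows the C and C++ frames. When the crash happens while the embedded interpreter is running the script, the standard `faulthandler` module can additionally dump the Python-level traceback on fatal signals such as SIGSEGV. A minimal sketch, assuming it is called near the top of demo_inference_zs.py; `enable_crash_traces` and the log path are hypothetical names:

```python
import faulthandler


def enable_crash_traces(log_path):
    """Write Python tracebacks of all threads to log_path on a fatal signal."""
    log_file = open(log_path, "w")
    faulthandler.enable(file=log_file, all_threads=True)
    # The file must stay open for as long as the handler is enabled.
    return log_file


if __name__ == "__main__":
    crash_log = enable_crash_traces("python_crash.log")
    print("faulthandler enabled:", faulthandler.is_enabled())
```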

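After the path of the ROS code has been changed, the workspace has to be rebuilt with catkin_make. Specifically, run "catkin_make clean" first, or delete the build and devel folders.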

ros::Publisher pub_Monocular_result;

pub_Monocular_result =nh_.advertise<gmsl_pub::Detection_result>( "/Detection_result", 1 );

gmsl_pub::Detection_result image_resultMsg;

image_resultMsg.header.stamp = ros::Time::now();

image_resultMsg.header.frame_id = "camera_2";

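// Message fields to fill in before publishing (the values of size and ob_result are left out in these notes):

// uint32 size: number of detected objects

// uint32 origin_flag = 2: source of the detection algorithm

// gmsl_pub::Object_result[] ob_result: array of Object_result.msg entries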

pub_Monocular_result.publish( image_resultMsg );

The Python file that is called is as follows:

demo_inference_zs.py

import os

import sys

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

ROOT_DIR = os.path.dirname(BASE_DIR)

sys.path.append(ROOT_DIR)

sys.path.append('/home/nvidia/sd/DEVIANT/code/tools')

sys.path.append('/home/nvidia/sd/miniforge-pypy3/envs/centerpoint/lib/python3.6/site-packages')

import yaml

import logging

import argparse

import numpy as np

print(np.__path__)

import torch

import time

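import random

# cv2 and PIL.Image are used directly in detect_online, so they are imported explicitly

import cv2

from PIL import Image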

from lib.helpers.dataloader_helper import build_dataloader

from lib.helpers.model_helper import build_model

from lib.helpers.optimizer_helper import build_optimizer

from lib.helpers.scheduler_helper import build_lr_scheduler

from lib.helpers.trainer_helper import Trainer

from lib.helpers.tester_helper import Tester

from lib.datasets.kitti_utils import get_affine_transform

from lib.datasets.kitti_utils import Calibration

from lib.datasets.kitti_utils import compute_box_3d

from lib.datasets.waymo import affine_transform

from lib.helpers.save_helper import load_checkpoint

from lib.helpers.decode_helper import extract_dets_from_outputs

from lib.helpers.decode_helper import decode_detections

from lib.helpers.rpn_util import *

from datetime import datetime


def print_version():

print(torch.__version__)

print(torch.__path__)

return 100

def create_logger(log_file):

log_format = '%(asctime)s %(levelname)5s %(message)s'

logging.basicConfig(level=logging.INFO, format=log_format, filename=log_file)

console = logging.StreamHandler()

console.setLevel(logging.INFO)

console.setFormatter(logging.Formatter(log_format))

logging.getLogger().addHandler(console)

return logging.getLogger(__name__)

def init_torch(rng_seed, cuda_seed):

"""

Initializes the seeds for ALL potential randomness, including torch, numpy, and random packages.

Args:

rng_seed (int): the shared random seed to use for numpy and random

cuda_seed (int): the random seed to use for pytorch's torch.cuda.manual_seed_all function

"""

# seed everything

os.environ['PYTHONHASHSEED'] = str(rng_seed)

torch.manual_seed(rng_seed)

np.random.seed(rng_seed)

random.seed(rng_seed)

torch.cuda.manual_seed(cuda_seed)

torch.cuda.manual_seed_all(cuda_seed)

# make the code deterministic

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = False

def pretty_print(name, input, val_width=40, key_width=0):

"""

This function creates a formatted string from a given dictionary input.

It may not support all data types, but can probably be extended.

Args:

name (str): name of the variable root

input (dict): dictionary to print

val_width (int): the width of the right hand side values

key_width (int): the minimum key width, (always auto-defaults to the longest key!)

Example:

pretty_str = pretty_print('conf', conf.__dict__)

pretty_str = pretty_print('conf', {'key1': 'example', 'key2': [1,2,3,4,5], 'key3': np.random.rand(4,4)})

print(pretty_str)

or

logging.info(pretty_str)

"""

pretty_str = name + ': {\n'

for key in input.keys(): key_width = max(key_width, len(str(key)) + 4)

for key in input.keys():

val = input[key]

# round values to 3 decimals..

if type(val) == np.ndarray: val = np.round(val, 3).tolist()

# difficult formatting

val_str = str(val)

if len(val_str) > val_width:

# val_str = pprint.pformat(val, width=val_width, compact=True)

val_str = val_str.replace('\n', '\n{tab}')

tab = ('{0:' + str(4 + key_width) + '}').format('')

val_str = val_str.replace('{tab}', tab)

# more difficult formatting

format_str = '{0:' + str(4) + '}{1:' + str(key_width) + '} {2:' + str(val_width) + '}\n'

pretty_str += format_str.format('', key + ':', val_str)

# close root object

pretty_str += '}'

return pretty_str

def np2tuple(n):

return (int(n[0]), int(n[1]))

# def main():

# load cfg

config = "/home/nvidia/sd/DEVIANT/code/experiments/run_test.yaml"

cfg = yaml.load(open(config, 'r'), Loader=yaml.Loader)

exp_parent_dir = os.path.join(cfg['trainer']['log_dir'], os.path.basename(config).split(".")[0])

cfg['trainer']['log_dir'] = exp_parent_dir

logger_dir = os.path.join(exp_parent_dir, "log")

os.makedirs(exp_parent_dir, exist_ok=True)

os.makedirs(logger_dir, exist_ok=True)

timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")

logger = create_logger(os.path.join(logger_dir, timestamp))

pretty = pretty_print('conf', cfg)

logging.info(pretty)

# init torch

init_torch(rng_seed= cfg['random_seed']-3, cuda_seed= cfg['random_seed'])

## mean size per class, in h, w, l order

# Ped [1.7431 0.8494 0.911 ]

# Car [1.8032 2.1036 4.8104]

# Cyc [1.7336 0.823 1.753 ]

# Sign [0.6523 0.6208 0.1254]

cls_mean_size = np.array([[1.7431, 0.8494, 0.9110],

[1.8032, 2.1036, 4.8104],

[1.7336, 0.8230, 1.7530],

[0.6523, 0.6208, 0.1254]])

# build model

model = build_model(cfg,cls_mean_size)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

print(cfg['tester']['resume_model'])

load_checkpoint(model = model,

optimizer = None,

filename = cfg['tester']['resume_model'],

logger=logger,

map_location= device)

model.to(device)

torch.set_grad_enabled(False)

model.eval()

calibs = np.array([9.5749393532104671e+02/1920.0*768.0*0.9, 0., (1.0143950223349893e+03)/1920.0*768.0, 0.,

0., 9.5697913744394737e+02/1080.0*512.0*0.9, 5.4154074349276050e+02/1080.0*512.0, 0.,

0.0, 0.0, 1.0, 0.], dtype=np.float32)

calibsP2=calibs.reshape(3, 4)

calibs = torch.from_numpy(calibsP2).unsqueeze(0).to(device)

resolution = np.array([768, 512])

downsample = 4

# coord_ranges = np.array([center-crop_size/2,center+crop_size/2]).astype(np.float32)

coord_ranges = np.array([np.array([0, 0]),resolution]).astype(np.float32)

coord_ranges = torch.from_numpy(coord_ranges).unsqueeze(0).to(device)

features_size = resolution // downsample

# Entry point called from C++

def detect_online(frame_chan, frame):

print("image channel :", frame_chan)

img = frame

index = np.array([frame_chan])

#img = img[0:1080, int(150-1):int(1920-150-1)]

# img = cv2.resize(img, (768, 512))

img_cv_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

img = Image.fromarray(img_cv_rgb)

img_size = np.array(img.size)

center = img_size / 2

crop_size = img_size

info = {'img_id': index,

'img_size': resolution,

'bbox_downsample_ratio': resolution / features_size}

# add affine transformation for 2d images.

trans, trans_inv = get_affine_transform(center, crop_size, 0, resolution, inv=1)

img = img.transform(tuple(resolution.tolist()),

method=Image.AFFINE,

data=tuple(trans_inv.reshape(-1).tolist()),

resample=Image.BILINEAR)

# image encoding

img = np.array(img).astype(np.float32) / 255.0

mean = np.array([0.485, 0.456, 0.406], dtype=np.float32)

std = np.array([0.229, 0.224, 0.225], dtype=np.float32)

img = (img - mean) / std

img = img.transpose(2, 0, 1) # C * H * W

inputs = img

inputs = torch.from_numpy(inputs)

inputs = inputs.unsqueeze(0)

inputs = inputs.to(device)

# the outputs of centernet

outputs = model(inputs,coord_ranges,calibs,K=50,mode='test')

print(np.__path__)

dets = extract_dets_from_outputs(outputs=outputs, K=50)

dets = dets.detach().cpu().numpy()

calibs1 = Calibration(calibsP2)

dets = decode_detections(dets = dets,

info = info,

calibs = calibs1,

cls_mean_size=cls_mean_size,

threshold = cfg['tester']['threshold'])

print(dets)

# img = cv2.imread(img_file, cv2.IMREAD_COLOR)

# # img = cv2.resize(img, (768, 512))

# for i in range(len(dets)):

# #cv2.rectangle(img, (int(dets[i][2]), int(dets[i][3])), (int(dets[i][4]), int(dets[i][5])), (0, 0, 255), 1)

# corners_2d,corners_3d=compute_box_3d(dets[i][6], dets[i][7], dets[i][8], dets[i][12],

# (dets[i][9], dets[i][10], dets[i][11]), calibs)

# for i in range(corners_2d.shape[0]):

# corners_2d[i] = affine_transform(corners_2d[i], trans_inv)

# cv2.line(img,np2tuple(corners_2d[0]),np2tuple(corners_2d[1]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[1]),np2tuple(corners_2d[2]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[2]),np2tuple(corners_2d[3]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[3]),np2tuple(corners_2d[0]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[4]),np2tuple(corners_2d[5]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[5]),np2tuple(corners_2d[6]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[6]),np2tuple(corners_2d[7]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[7]),np2tuple(corners_2d[4]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[5]),np2tuple(corners_2d[1]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[4]),np2tuple(corners_2d[0]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[6]),np2tuple(corners_2d[2]),(255,255,0),1,cv2.LINE_4)

# cv2.line(img,np2tuple(corners_2d[7]),np2tuple(corners_2d[3]),(255,255,0),1,cv2.LINE_4)

# cv2.imwrite("3.jpg", img)

return dets

if __name__ == '__main__':

img_file = "./code/tools/img/result2_00000575.jpg"

frame = cv2.imread(img_file, cv2.IMREAD_COLOR)

frame_chan = 0

detect_online(frame_chan, frame)
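
The `calibs` array in the script rescales the camera intrinsics from the 1920x1080 source image to the 768x512 network input: the x terms are multiplied by 768/1920 and the y terms by 512/1080. The sketch below spells out that arithmetic with a hypothetical helper, `scale_intrinsics`. The script also multiplies both focal lengths by 0.9 without explaining why, so the sketch exposes that factor as an optional `focal_scale` parameter (an assumption for illustration) that defaults to 1.0.

```python
def scale_intrinsics(fx, fy, cx, cy, src_size, dst_size, focal_scale=1.0):
    """Return a 3x4 projection matrix (nested lists) for a resized image.

    src_size and dst_size are (width, height) tuples. focal_scale is a
    hypothetical knob for an extra focal-length factor such as the 0.9
    used in the script.
    """
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    return [
        [fx * dst_w / src_w * focal_scale, 0.0, cx * dst_w / src_w, 0.0],
        [0.0, fy * dst_h / src_h * focal_scale, cy * dst_h / src_h, 0.0],
        [0.0, 0.0, 1.0, 0.0],
    ]


if __name__ == "__main__":
    # Intrinsics copied from the calibs array in the script.
    p2 = scale_intrinsics(9.5749393532104671e+02, 9.5697913744394737e+02,
                          1.0143950223349893e+03, 5.4154074349276050e+02,
                          (1920, 1080), (768, 512), focal_scale=0.9)
    for row in p2:
        print(row)
```

This assumes a plain resize of the full frame, which matches the script as long as the crop is the whole image (`crop_size = img_size`).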