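Installing a Highly Available Kubernetes Cluster from Binaries (Production Grade)

This document applies to Kubernetes 1.23.

Nodes

Etcd Cluster

etcd is a key-value database; Kubernetes stores its cluster state, and every change to it, in etcd.

For a large cluster, install etcd on dedicated machines, separate from the master nodes.

Master nodes

A master node runs several key components.

All API requests to the cluster pass through kube-apiserver.

kube-controller-manager runs the cluster's controllers.

kube-scheduler is the scheduler.

In production, running node (worker) components on the master nodes is not recommended.

Note: the Kubernetes documentation added an anti-racism statement, and master in labels was replaced with control-plane.

K8s Service CIDR: 10.96.0.0/12

K8s Pod CIDR: 172.168.0.0/12

On all nodes

Set the hostname, populate the hosts file, synchronize time, set up passwordless SSH login, install git, and install Docker.

Configure limits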

[root@k8s-master01 ~]# ulimit -SHn 65535

[root@k8s-master01 ~]# cat >> /etc/security/limits.conf <<EOF

* soft nofile 65536

* hard nofile 131072

* soft nproc 655360

* hard nproc 655360

* soft memlock unlimited

* hard memlock unlimited

EOF
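
A soft limit can never exceed its hard limit, and a mismatch is easy to introduce when editing limits.conf by hand. The following is a minimal sketch of a sanity check, assuming bash and awk are available; the function name check_limits is hypothetical and not part of any tool used in this document.

```shell
# check_limits: read limits.conf-style lines on stdin and print every
# "<domain> <item>" pair whose numeric soft limit exceeds its hard limit.
# Non-numeric values such as "unlimited" are skipped.
check_limits() {
  awk '
    $1 ~ /^#/ || NF < 4 { next }
    $4 !~ /^[0-9]+$/    { next }
    $2 == "soft" { soft[$1 " " $3] = $4 }
    $2 == "hard" { hard[$1 " " $3] = $4 }
    END {
      for (k in soft)
        if (k in hard && soft[k] + 0 > hard[k] + 0)
          print k ": soft " soft[k] " > hard " hard[k]
    }'
}
```

It could be run as `check_limits < /etc/security/limits.conf`; no output means no soft limit is above its hard limit.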

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do

> scp /etc/docker/daemon.json $NODE:/etc/docker/daemon.json;

> done

# Distribute the Docker configuration file to all nodes

Basic Docker configuration:

https://blog.csdn.net/llllyh812/article/details/124264385

Master nodes

[root@k8s-master01 ~]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin/ kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

# When the files inside an archive sit under several directory levels and you do not want those levels after extraction, use the --strip-components option to drop them.

[root@k8s-master01 ~]# tar -zxvf etcd-v3.5.5-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin/ etcd-v3.5.5-linux-amd64/etcd{,ctl}

etcd-v3.5.5-linux-amd64/etcdctl

etcd-v3.5.5-linux-amd64/etcd

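# A binary install simply means placing each component's executable in the right directory; once that is done, the component is installed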
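
To see what --strip-components does in isolation, here is a small self-contained sketch that builds a throwaway archive in a temporary directory and extracts a single file from it. The directory and file names (demo/server/bin/tool) are made up for illustration and only mimic the layout of the release tarballs.

```shell
# Build a throwaway archive that mimics a release tarball layout.
workdir=$(mktemp -d)
mkdir -p "$workdir/src/demo/server/bin" "$workdir/dest"
echo "placeholder" > "$workdir/src/demo/server/bin/tool"
tar -czf "$workdir/demo.tar.gz" -C "$workdir/src" demo

# Strip the three leading path components (demo/server/bin) so that
# only the file itself lands in the destination directory.
tar -xzf "$workdir/demo.tar.gz" --strip-components=3 -C "$workdir/dest" demo/server/bin/tool

# The destination now holds the file directly, without a demo/ subtree.
ls "$workdir/dest"
```

Without --strip-components=3 the same command would recreate demo/server/bin/ under the destination directory.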

[root@k8s-master01 ~]# MasterNodes='k8s-master02 k8s-master03'

[root@k8s-master01 ~]# WorkNodes='k8s-node01 k8s-node02'

[root@k8s-master01 ~]# for NODE in $MasterNodes; do echo $NODE;scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

k8s-master02

kubelet 100% 118MB 52.1MB/s 00:02

kubectl 100% 44MB 48.4MB/s 00:00

kube-apiserver 100% 125MB 51.9MB/s 00:02

kube-controller-manager 100% 116MB 45.8MB/s 00:02

kube-scheduler 100% 47MB 45.2MB/s 00:01

kube-proxy 100% 42MB 53.1MB/s 00:00

etcd 100% 23MB 48.6MB/s 00:00

etcdctl 100% 17MB 53.0MB/s 00:00

k8s-master03

kubelet 100% 118MB 51.6MB/s 00:02

kubectl 100% 44MB 46.8MB/s 00:00

kube-apiserver 100% 125MB 46.8MB/s 00:02

kube-controller-manager 100% 116MB 45.2MB/s 00:02

kube-scheduler 100% 47MB 50.6MB/s 00:00

kube-proxy 100% 42MB 52.3MB/s 00:00

etcd 100% 23MB 53.5MB/s 00:00

etcdctl 100% 17MB 47.9MB/s 00:00

[root@k8s-master01 ~]# for NODE in $WorkNodes; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/; done

kubelet 100% 118MB 42.3MB/s 00:02

kube-proxy 100% 42MB 54.8MB/s 00:00

kubelet 100% 118MB 47.1MB/s 00:02

kube-proxy 100% 42MB 53.1MB/s 00:00

# Copy the components to the other nodes

Switch branch

On all nodes

[root@k8s-master01 ~]# mkdir -p /opt/cni/bin

[root@k8s-master01 ~]# cd /opt/cni/bin/

# [root@k8s-master01 bin]# git clone https://github.com/forbearing/k8s-ha-install.git

# The repository is large; copying only the folders you need is enough

[root@k8s-master01 bin]# mv /root/pki.zip /opt/cni/bin/

[root@k8s-master01 bin]# unzip pki.zip

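... (not written yet)

Generate certificates (important)

This is the most critical part of a binary install: one wrong step breaks everything, so verify that every step is correct.

The CSR (certificate signing request) files used to generate the certificates define fields such as domain names, company, and organizational unit.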

https://github.com/cloudflare/cfssl/releases

[root@k8s-master01 ~]# mv cfssl_1.6.3_linux_amd64 /usr/local/bin/cfssl

[root@k8s-master01 ~]# mv cfssljson_1.6.3_linux_amd64 /usr/local/bin/cfssljson

[root@k8s-master01 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

# Download the certificate generation tools on master01

[root@k8s-master01 ~]# mkdir -p /etc/etcd/ssl

# Create the etcd certificate directory on all nodes

[root@k8s-master01 ~]# mkdir -p /etc/kubernetes/pki

# Create the Kubernetes directories on all nodes

[root@k8s-master01 ~]# cd /opt/cni/bin/pki/

[root@k8s-master01 pki]# cfssl gencert -initca etcd-ca-csr.json |cfssljson -bare /etc/etcd/ssl/etcd-ca

2022/10/24 19:19:51 [INFO] generating a new CA key and certificate from CSR

2022/10/24 19:19:51 [INFO] generate received request

2022/10/24 19:19:51 [INFO] received CSR

2022/10/24 19:19:51 [INFO] generating key: rsa-2048

2022/10/24 19:19:52 [INFO] encoded CSR

2022/10/24 19:19:52 [INFO] signed certificate with serial number 141154029411162894054001938470301605874823228651

[root@k8s-master01 pki]# ls /etc/etcd/ssl

etcd-ca.csr etcd-ca-key.pem etcd-ca.pem

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,172.20.251.107,172.20.251.108,172.20.251.109 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

2022/10/24 19:24:45 [INFO] generate received request

2022/10/24 19:24:45 [INFO] received CSR

2022/10/24 19:24:45 [INFO] generating key: rsa-2048

2022/10/24 19:24:45 [INFO] encoded CSR

2022/10/24 19:24:45 [INFO] signed certificate with serial number 223282157701235776819254846371243906472259526809

[root@k8s-master01 pki]# ls /etc/etcd/ssl/

etcd-ca.csr etcd-ca-key.pem etcd-ca.pem etcd.csr etcd-key.pem etcd.pem

# Generate the etcd CA certificate and key, then the etcd certificate signed by that CA

[root@k8s-master01 pki]# for NODE in $MasterNodes; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

etcd-ca-key.pem 100% 1675 214.7KB/s 00:00

etcd-ca.pem 100% 1318 128.3KB/s 00:00

etcd-key.pem 100% 1679 139.5KB/s 00:00

etcd.pem 100% 1464 97.7KB/s 00:00

etcd-ca-key.pem 100% 1675 87.8KB/s 00:00

etcd-ca.pem 100% 1318 659.6KB/s 00:00

etcd-key.pem 100% 1679 861.1KB/s 00:00

etcd.pem 100% 1464 1.1MB/s 00:00

# Copy the certificates to the other master nodes

Generate the Kubernetes certificates on Master01

[root@k8s-master01 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

2022/10/24 20:08:22 [INFO] generating a new CA key and certificate from CSR

2022/10/24 20:08:22 [INFO] generate received request

2022/10/24 20:08:22 [INFO] received CSR

2022/10/24 20:08:22 [INFO] generating key: rsa-2048

2022/10/24 20:08:22 [INFO] encoded CSR

2022/10/24 20:08:22 [INFO] signed certificate with serial number 462801853285240841018202532125246688421494972307

# Generate the root CA certificate

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,172.20.251.200,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,172.20.251.107,172.20.251.108,172.20.251.109 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

2022/10/24 20:12:12 [INFO] generate received request

2022/10/24 20:12:12 [INFO] received CSR

2022/10/24 20:12:12 [INFO] generating key: rsa-2048

2022/10/24 20:12:12 [INFO] encoded CSR

2022/10/24 20:12:12 [INFO] signed certificate with serial number 690736382497214625223151514350842894019030617359

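# 10.96.0.1 is the first address of the K8s Service CIDR; if you change the Service CIDR, replace it with the first address of your own range

# You can reserve a few extra IP addresses or domain names

# Generate the apiserver certificate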

[root@k8s-master01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

2022/10/24 20:15:35 [INFO] generating a new CA key and certificate from CSR

2022/10/24 20:15:35 [INFO] generate received request

2022/10/24 20:15:35 [INFO] received CSR

2022/10/24 20:15:35 [INFO] generating key: rsa-2048

2022/10/24 20:15:35 [INFO] encoded CSR

2022/10/24 20:15:35 [INFO] signed certificate with serial number 168281383231764970339015684919770971594394785122

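# Generate the CA for the apiserver aggregation layer (the requestheader-client-xxx settings)

# Requests are validated against the certificates you configure.

# requestheader-allowed-xxx: the aggregator checks whether the request header is allowed

# It is fine not to understand this yet; it should become clear once you have covered the related Kubernetes concepts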

[root@k8s-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

2022/10/24 20:33:22 [INFO] generate received request

2022/10/24 20:33:22 [INFO] received CSR

2022/10/24 20:33:22 [INFO] generating key: rsa-2048

2022/10/24 20:33:23 [INFO] encoded CSR

2022/10/24 20:33:23 [INFO] signed certificate with serial number 323590969709332384506410320558260817204509478345

2022/10/24 20:33:23 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# The apiserver certificates are complete

# Generate the controller-manager certificate the same way

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

2022/10/24 20:36:46 [INFO] generate received request

2022/10/24 20:36:46 [INFO] received CSR

2022/10/24 20:36:46 [INFO] generating key: rsa-2048

2022/10/24 20:36:46 [INFO] encoded CSR

2022/10/24 20:36:46 [INFO] signed certificate with serial number 108710809086222047459631230378556745824189906638

2022/10/24 20:36:46 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

# No separate CA is generated for controller-manager; its certificate is signed by the root CA created earlier

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://172.20.251.107:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Cluster "kubernetes" set.

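# set-cluster: defines a cluster entry; in an HA setup --server normally points at the load-balanced address (the VIP and the HAProxy port)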

[root@k8s-master01 pki]# kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Context "system:kube-controller-manager@kubernetes" created.

# set-context: defines a context entry, which ties a cluster to a user

[root@k8s-master01 ~]# kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

User "system:kube-controller-manager" set.

# set-credentials: defines a user entry

[root@k8s-master01 ~]# kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

Switched to context "system:kube-controller-manager@kubernetes".

# use-context: makes the given context the default

# The controller-manager certificate and kubeconfig are complete

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

2022/10/25 07:33:39 [INFO] generate received request

2022/10/25 07:33:39 [INFO] received CSR

2022/10/25 07:33:39 [INFO] generating key: rsa-2048

2022/10/25 07:33:39 [INFO] encoded CSR

2022/10/25 07:33:39 [INFO] signed certificate with serial number 61172664806377914143954086942177408078631179977

2022/10/25 07:33:39 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://172.20.251.107:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

User "system:kube-scheduler" set.

[root@k8s-master01 pki]# kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Context "system:kube-scheduler@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Switched to context "system:kube-scheduler@kubernetes".

# Generate the scheduler certificate and kubeconfig, following the same steps as for controller-manager

[root@k8s-master01 pki]# cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

2022/10/25 07:43:32 [INFO] generate received request

2022/10/25 07:43:32 [INFO] received CSR

2022/10/25 07:43:32 [INFO] generating key: rsa-2048

2022/10/25 07:43:32 [INFO] encoded CSR

2022/10/25 07:43:32 [INFO] signed certificate with serial number 77202050989431331897938308725922479340036299536

2022/10/25 07:43:32 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-master01 pki]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://172.20.251.107:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 pki]# kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

User "kubernetes-admin" set.

[root@k8s-master01 pki]# kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

Context "kubernetes-admin@kubernetes" created.

[root@k8s-master01 pki]# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

Switched to context "kubernetes-admin@kubernetes".

# Generate the admin certificate and kubeconfig in the same way
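
The same four kubectl config steps (set-cluster, set-credentials, set-context, use-context) are repeated for controller-manager, scheduler, and admin. As a sketch only, they could be wrapped in one helper. The function name make_kubeconfig and its argument order are hypothetical; the kubectl subcommands and flags are the ones already used above, and the PKI directory is assumed to be /etc/kubernetes/pki as in this document.

```shell
# Hypothetical helper: build one kubeconfig from a client certificate pair.
# Arguments: <user> <cert-basename> <kubeconfig-path> <apiserver-url>
make_kubeconfig() {
  local user="$1" cert="$2" out="$3" server="$4"
  local pki=/etc/kubernetes/pki   # assumption: same PKI directory as above

  kubectl config set-cluster kubernetes \
    --certificate-authority="${pki}/ca.pem" --embed-certs=true \
    --server="${server}" --kubeconfig="${out}" &&
  kubectl config set-credentials "${user}" \
    --client-certificate="${pki}/${cert}.pem" \
    --client-key="${pki}/${cert}-key.pem" \
    --embed-certs=true --kubeconfig="${out}" &&
  kubectl config set-context "${user}@kubernetes" \
    --cluster=kubernetes --user="${user}" --kubeconfig="${out}" &&
  kubectl config use-context "${user}@kubernetes" --kubeconfig="${out}"
}

# Example calls (commented out so that defining the helper has no side effects):
# make_kubeconfig system:kube-scheduler scheduler /etc/kubernetes/scheduler.kubeconfig https://172.20.251.107:8443
# make_kubeconfig kubernetes-admin admin /etc/kubernetes/admin.kubeconfig https://172.20.251.107:8443
```

Chaining the steps with && stops at the first failing command, so a half-written kubeconfig is easier to notice.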

Every certificate is generated from a .json file in the same way, so how does Kubernetes tell scheduler and admin apart?

[root@k8s-master01 pki]# cat admin-csr.json

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

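# admin -> system:masters (CN is the user name, O is the group)

# ClusterRole: cluster-admin --> ClusterRoleBinding --> system:masters

# A ClusterRole is a cluster-wide role: in effect a set of permissions for operating on the cluster

# Every user in the system:masters group therefore has permission to operate the cluster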

[root@k8s-master01 pki]# cat scheduler-csr.json

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

# Same idea as above
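
With client certificate authentication, Kubernetes takes the user name from the certificate's CN field and the groups from its O fields, which is how the two CSR files above end up as different identities. The sketch below extracts those two values from a subject string. The function name subject_identity is hypothetical, and the parsing is deliberately simplified: it assumes a comma-separated RFC 2253 style subject with no escaped commas inside values.

```shell
# subject_identity "CN=admin,O=system:masters,..." prints the user name (CN)
# and the groups (O) found in the subject string.
subject_identity() {
  local subject="$1" user="" groups="" field
  local -a fields

  # Drop the "subject=" prefix that openssl prints, plus one optional space.
  subject="${subject#subject=}"
  subject="${subject# }"

  IFS=',' read -r -a fields <<< "$subject"
  for field in "${fields[@]}"; do
    case "$field" in
      CN=*) user="${field#CN=}" ;;
      O=*)  groups="${groups:+$groups,}${field#O=}" ;;
    esac
  done
  echo "user=$user groups=$groups"
}

subject_identity "CN=admin,OU=Kubernetes-manual,O=system:masters,L=Beijing,ST=Beijing,C=CN"
subject_identity "CN=system:kube-scheduler,OU=Kubernetes-manual,O=system:kube-scheduler,L=Beijing,ST=Beijing,C=CN"
```

For a real certificate, the subject string can be obtained with `openssl x509 -in /etc/kubernetes/pki/admin.pem -noout -subject -nameopt RFC2253` and passed to the function.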

# kubelet certificates do not need to be created by hand; they can be issued automatically

[root@k8s-master01 pki]# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.........................................................+++++

.......................+++++

e is 65537 (0x010001)

# Create the ServiceAccount key -> secret

# Used later to generate tokens

[root@k8s-master01 pki]# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

writing RSA key

[root@k8s-master01 pki]# for NODE in k8s-master02 k8s-master03; do

for FILE in `ls /etc/kubernetes/pki | grep -v etcd`; do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

...

[root@k8s-master01 pki]# cat ca-config.json

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

# Configuration file for the CA

# Certificate generation is now complete

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/

admin.csr apiserver.pem controller-manager-key.pem front-proxy-client.csr scheduler.csr

admin-key.pem ca.csr controller-manager.pem front-proxy-client-key.pem scheduler-key.pem

admin.pem ca-key.pem front-proxy-ca.csr front-proxy-client.pem scheduler.pem

apiserver.csr ca.pem front-proxy-ca-key.pem sa.key

apiserver-key.pem controller-manager.csr front-proxy-ca.pem sa.pub

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/|wc -l

23

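# List the certificate files

Kubernetes system component configuration

etcd configuration

The etcd configuration is largely the same on every node; remember to change the host name and IP addresses in each master node's etcd configuration.

Always run an odd number of etcd members, never an even number. etcd needs a majority (quorum) of members to make progress, so an even member count adds no fault tolerance over the next lower odd count, and a partition into two equal halves leaves neither side with a quorum.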
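
The arithmetic behind the odd-member rule is short enough to compute directly. This sketch prints the quorum size and the number of member failures a cluster can survive for a few cluster sizes; the function names quorum and tolerance are made up for illustration.

```shell
# quorum N: votes needed for a majority in an N-member cluster.
quorum() { echo $(( $1 / 2 + 1 )); }

# tolerance N: members that can fail while a majority still remains.
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(tolerance "$n")"
done
```

Three and four members both tolerate exactly one failure, so the fourth member only raises the quorum without improving availability.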

Master01 node

[root@k8s-master01 ~]# unzip conf.zip

[root@k8s-master01 ~]# cp conf/etcd/etcd.config.yaml 1.txt

[root@k8s-master01 ~]# cat 1.txt

name: k8s-master01

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://172.20.251.107:2380'

listen-client-urls: 'https://172.20.251.107:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://172.20.251.107:2380'

advertise-client-urls: 'https://172.20.251.107:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://172.20.251.107:2380,k8s-master02=https://172.20.251.108:2380,k8s-master03=https://172.20.251.109:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

[root@k8s-master01 ~]# cp 1.txt /etc/etcd/etcd.config.yaml

cp: overwrite '/etc/etcd/etcd.config.yaml'? y

[root@k8s-master01 ~]# scp /etc/etcd/etcd.config.yaml k8s-master02:/root/1.txt

etcd.config.yaml 100% 1457 183.6KB/s 00:00

[root@k8s-master01 ~]# scp /etc/etcd/etcd.config.yaml k8s-master03:/root/1.txt

etcd.config.yaml 100% 1457 48.2KB/s 00:00

# Copy the etcd configuration file to the other master nodes

[root@k8s-master01 ~]# cp conf/etcd/etcd.service /usr/lib/systemd/system/etcd.service

cp: overwrite '/usr/lib/systemd/system/etcd.service'? y

[root@k8s-master01 ~]# cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yaml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

[root@k8s-master01 ~]# scp conf/etcd/etcd.service k8s-master02:/usr/lib/systemd/system/etcd.service

etcd.service 100% 307 26.1KB/s 00:00

[root@k8s-master01 ~]# scp conf/etcd/etcd.service k8s-master03:/usr/lib/systemd/system/etcd.service

etcd.service 100% 307 21.4KB/s 00:00

# Create the etcd service on every master node and start it

[root@k8s-master01 ~]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master01 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl start etcd && systemctl enable etcd

Created symlink /etc/systemd/system/etcd3.service → /usr/lib/systemd/system/etcd.service.

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /usr/lib/systemd/system/etcd.service.

# On every master node, create the etcd certificate directory and start etcd

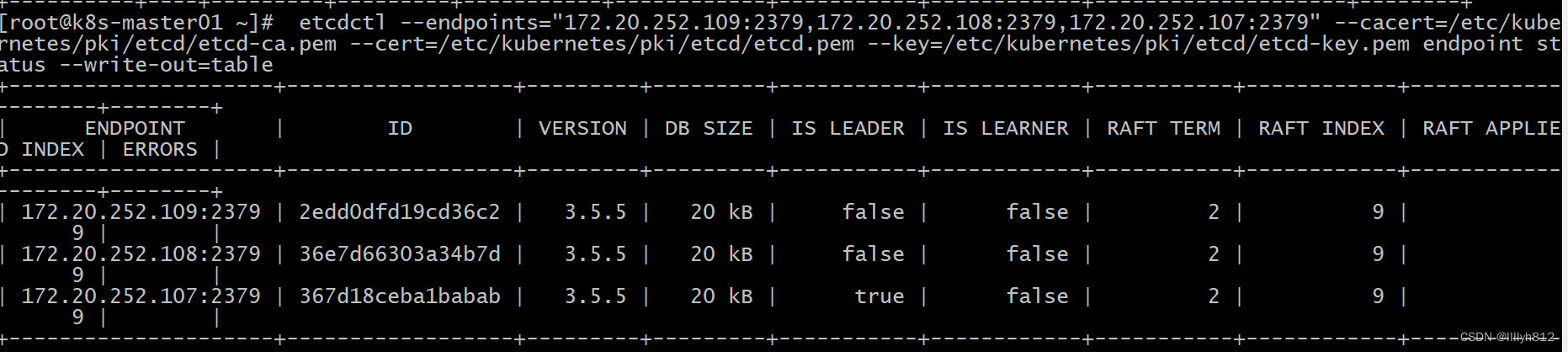

[root@k8s-master01 ~]# export ETCDCTL_API=3

[root@k8s-master01 ~]# etcdctl --endpoints="172.20.251.109:2379,172.20.251.108:2379,172.20.251.107:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 172.20.251.109:2379 | 8c97fe1a4121e196 | 3.5.5 | 20 kB | true | false | 2 | 8 | 8 | |

| 172.20.251.108:2379 | 5bbeaed610eda0eb | 3.5.5 | 20 kB | false | false | 2 | 8 | 8 | |

| 172.20.251.107:2379 | 5389bd6821125460 | 3.5.5 | 20 kB | false | false | 2 | 8 | 8 | |

+---------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

# Check the etcd status

Master02 node

[root@k8s-master02 ~]# cat 1.txt

name: k8s-master02

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://172.20.251.108:2380'

listen-client-urls: 'https://172.20.251.108:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://172.20.251.108:2380'

advertise-client-urls: 'https://172.20.251.108:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://172.20.251.107:2380,k8s-master02=https://172.20.251.108:2380,k8s-master03=https://172.20.251.109:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

[root@k8s-master02 ~]# cp 1.txt /etc/etcd/etcd.config.yaml

[root@k8s-master02 ~]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master02 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl start etcd && systemctl enable etcd

Created symlink /etc/systemd/system/etcd3.service → /usr/lib/systemd/system/etcd.service.

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /usr/lib/systemd/system/etcd.service.

# On every master node, create the etcd certificate directory and start etcd

Master03 node

[root@k8s-master03 ~]# cat 1.txt

name: k8s-master03

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://172.20.251.109:2380'

listen-client-urls: 'https://172.20.251.109:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://172.20.251.109:2380'

advertise-client-urls: 'https://172.20.251.109:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://172.20.251.107:2380,k8s-master02=https://172.20.251.108:2380,k8s-master03=https://172.20.251.109:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

[root@k8s-master03 ~]# cp 1.txt /etc/etcd/etcd.config.yaml

cp: overwrite '/etc/etcd/etcd.config.yaml'? y

[root@k8s-master03 ~]# mkdir /etc/kubernetes/pki/etcd

[root@k8s-master03 ~]# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl start etcd && systemctl enable etcd

Created symlink /etc/systemd/system/etcd3.service → /usr/lib/systemd/system/etcd.service.

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /usr/lib/systemd/system/etcd.service.

# On every master node, create the etcd certificate directory and start etcd

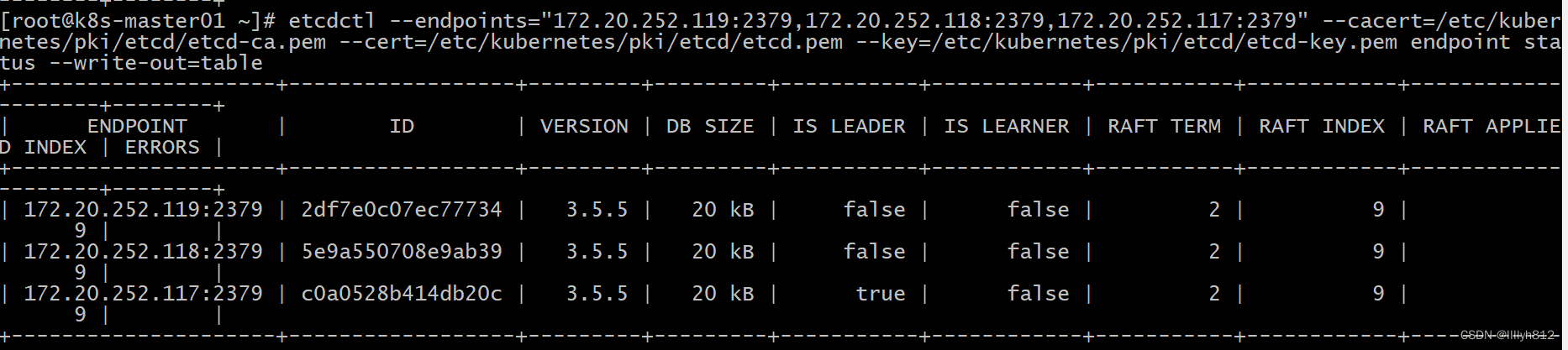

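The environment changed because the cloud platform was reinstalled.

New environment

High-availability configuration

All master nodes

[root@k8s-master01 ~]# dnf -y install keepalived haproxy

# Install haproxy and keepalived on every master node

Master01 node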

[root@k8s-master01 ~]# mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak

[root@k8s-master01 ~]# cp conf/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg

[root@k8s-master01 ~]# cat /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 172.20.252.107:6443 check

server k8s-master02 172.20.252.108:6443 check

server k8s-master03 172.20.252.109:6443 check

[root@k8s-master01 ~]# scp /etc/haproxy/haproxy.cfg k8s-master02:/etc/haproxy/haproxy.cfg

haproxy.cfg 100% 820 1.9MB/s 00:00

[root@k8s-master01 ~]# scp /etc/haproxy/haproxy.cfg k8s-master03:/etc/haproxy/haproxy.cfg

haproxy.cfg 100% 820 1.6MB/s 00:00

# Configure haproxy on every master node

[root@k8s-master01 ~]# mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

[root@k8s-master01 ~]# cp conf/keepalived/keepalived.conf /etc/keepalived/keepalived.conf

[root@k8s-master01 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip 172.20.252.107

virtual_router_id 51

priority 101

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8S_PASS

}

virtual_ipaddress {

172.20.252.200

}

track_script {

chk_apiserver

} }

[root@k8s-master01 ~]# scp /etc/keepalived/keepalived.conf k8s-master02:/etc/keepalived/keepalived.conf

keepalived.conf 100% 555 575.6KB/s 00:00

[root@k8s-master01 ~]# scp /etc/keepalived/keepalived.conf k8s-master03:/etc/keepalived/keepalived.conf

keepalived.conf 100% 555 763.8KB/s 00:00

[root@k8s-master01 ~]# cp conf/keepalived/check_apiserver.sh /etc/keepalived/check_apiserver.sh

[root@k8s-master01 ~]# cat /etc/keepalived/check_apiserver.sh

#!/usr/bin/env bash

err=0

for k in $(seq 1 3)

do

check_code=$(pgrep haproxy)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

systemctl stop keepalived

exit 1

else

exit 0

fi

[root@k8s-master01 ~]# scp /etc/keepalived/check_apiserver.sh k8s-master02:/etc/keepalived/

check_apiserver.sh 100% 355 199.5KB/s 00:00

[root@k8s-master01 ~]# scp /etc/keepalived/check_apiserver.sh k8s-master03:/etc/keepalived/

check_apiserver.sh 100% 355 38.3KB/s 00:00

[root@k8s-master01 ~]# chmod +x /etc/keepalived/check_apiserver.sh

# Configure keepalived and write the health-check script

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@k8s-master01 ~]# systemctl enable --now keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

# Start the services and enable them at boot

[root@k8s-master01 ~]# tail /var/log/messages

Oct 27 01:33:56 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:33:56 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:33:56 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:33:56 k8s-master01 NetworkManager[1542]: <info> [1666834436.8400] policy: set-hostname: current hostname was changed outside NetworkManager: 'k8s-master01'

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: (VI_1) Sending/queueing gratuitous ARPs on eth0 for 172.20.252.200

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

Oct 27 01:34:01 k8s-master01 Keepalived_vrrp[19149]: Sending gratuitous ARP on eth0 for 172.20.252.200

# Check the log

Master02 node

[root@k8s-master02 ~]# chmod +x /etc/keepalived/check_apiserver.sh

# Add execute permission

[root@k8s-master02 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 172.20.252.108

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8S_PASS

}

virtual_ipaddress {

172.20.252.200

}

track_script {

chk_apiserver

}

}

# Edit the keepalived configuration file

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl enable --now haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@k8s-master02 ~]# systemctl enable --now keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

# Start the services and enable them at boot

[root@k8s-master02 ~]# dnf -y install telnet

[root@k8s-master02 ~]# telnet 172.20.252.200 8443

Trying 172.20.252.200...

Connected to 172.20.252.200.

Escape character is '^]'.

# If the line with the bracket appears, the connection works

Connection closed by foreign host.

# Use telnet to test that the keepalived VIP is reachable
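If telnet is not installed, the same reachability test can be approximated with bash alone. This is a minimal sketch that assumes bash was built with `/dev/tcp` support and that coreutils `timeout` is available; `port_open` is a hypothetical helper name.

```shell
# port_open HOST PORT: succeed if a TCP connection can be opened within 3 seconds.
# Uses bash's /dev/tcp pseudo-device, so the inner command is run with bash explicitly.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example (not run here):
#   port_open 172.20.252.200 8443 && echo "VIP port reachable"
```

Unlike the interactive telnet session, this returns an exit status, which makes it easier to use in a loop over all masters.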

Master03 node

[root@k8s-master03 ~]# chmod +x /etc/keepalived/check_apiserver.sh

# Add execute permission

[root@k8s-master03 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 172.20.252.109

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8S_PASS

}

virtual_ipaddress {

172.20.252.200

}

track_script {

chk_apiserver

}

}

# Edit the keepalived configuration file

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl enable --now haproxy

Created symlink /etc/systemd/system/multi-user.target.wants/haproxy.service → /usr/lib/systemd/system/haproxy.service.

[root@k8s-master03 ~]# systemctl enable --now keepalived

Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.

# Start the services and enable them at boot

Environment change 2

Kubernetes component configuration

All Master nodes

[root@k8s-master01 ~]# mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

# Create the required directories on all nodes

Master01 node


[root@k8s-master01 ~]# cp conf/k8s/v1.23/kube-apiserver.service kube-apiserver.txt

[root@k8s-master01 ~]# cat kube-apiserver.txt

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--advertise-address=172.20.252.200 \

--allow-privileged=true \

--authorization-mode=Node,RBAC \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--enable-bootstrap-token-auth=true \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://172.20.252.117:2379,https://172.20.252.118:2379,https://172.20.252.119:2379 \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=front-proxy-client \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--secure-port=6443 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-cluster-ip-range=10.96.0.0/12 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--v=2

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

[root@k8s-master01 ~]# cp kube-apiserver.txt /usr/lib/systemd/system/kube-apiserver.service

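# Create kube-apiserver.service on all Master nodes

# If this is not an HA cluster, change 172.20.252.200 to master01's address. Note that the listings for master02 and master03 later in this section set --advertise-address to the node's own IP (172.20.252.118 and 172.20.252.119), so the copied file is edited on each node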

[root@k8s-master01 ~]# scp kube-apiserver.txt k8s-master02:/usr/lib/systemd/system/kube-apiserver.service

kube-apiserver.txt 100% 1915 57.9KB/s 00:00

[root@k8s-master01 ~]# scp kube-apiserver.txt k8s-master03:/usr/lib/systemd/system/kube-apiserver.service

kube-apiserver.txt 100% 1915 812.3KB/s 00:00
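The copied unit file still carries the --advertise-address from master01, while the master02 and master03 listings further down show per-node values. One way to make that edit explicit is sketched below; `set_advertise_address` is a hypothetical helper, and the ssh pipeline in the comment is only one possible way to deliver the result.

```shell
# set_advertise_address IP: rewrite the --advertise-address flag of a unit file
# read from stdin and print the result on stdout.
# (Hypothetical helper, for illustration only.)
set_advertise_address() {
    sed -E "s/(--advertise-address=)[^ ]+/\1$1/"
}

# Example (not run here):
#   set_advertise_address 172.20.252.118 < kube-apiserver.txt \
#     | ssh k8s-master02 'cat > /usr/lib/systemd/system/kube-apiserver.service'
```

Every other flag in the unit file is identical across the three masters, so only this one substitution is needed per node.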

[root@k8s-master01 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

Created symlink /etc/systemd/system/multi-user.target.wants/kube-apiserver.service → /usr/lib/systemd/system/kube-apiserver.service.

# Start kube-apiserver on all Master nodes

[root@k8s-master01 ~]# tail -f /var/log/messages

...

[root@k8s-master01 ~]# systemctl status kube-apiserver.service

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2022-10-28 09:32:16 CST; 1min 11s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 16024 (kube-apiserver)

Tasks: 16 (limit: 101390)

Memory: 198.1M

...

I1028 09:32:34.623463 16024 controller.go:611] quota admission added>

Oct 28 09:32:34 k8s-master01.novalocal kube-apiserver[16024]: E1028 09:32:34.923027 16024 controller.go:228] unable to sync kubern>

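# This error appears because the hostname was not set; set it and restart the service

# Check the kube-apiserver status

# Lines starting with I are informational; lines starting with E are errors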
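The I/E prefix is the severity letter of the klog header (severity, month and day, then the time). A small filter along these lines can pull only error and fatal entries out of a longer log. It is a sketch: `klog_errors` is a made-up name, and the pattern assumes the standard klog header layout.

```shell
# klog_errors: read log lines on stdin and print those whose klog header
# (severity letter + mmdd + hh:mm:ss.micros) marks an error (E) or fatal (F) entry.
klog_errors() {
    grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]+ '
}

# Example (not run here):
#   journalctl -u kube-apiserver --no-pager | klog_errors
```

Matching the full header rather than a bare leading "E" avoids false hits on ordinary words in the message text.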

[root@k8s-master01 ~]# cp conf/k8s/v1.23/kube-controller-manager.service kube-controller-manager.txt

[root@k8s-master01 ~]# cat kube-controller-manager.txt

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--allocate-node-cidrs=true \

--authentication-kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--bind-address=127.0.0.1 \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-cidr=172.16.0.0/12 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--controllers=*,bootstrapsigner,tokencleaner \

--cluster-signing-duration=876000h0m0s \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--service-cluster-ip-range=10.96.0.0/12 \

--use-service-account-credentials=true \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

[root@k8s-master01 ~]# cp kube-controller-manager.txt /usr/lib/systemd/system/kube-controller-manager.service

[root@k8s-master01 ~]# scp kube-controller-manager.txt k8s-master02:/usr/lib/systemd/system/kube-controller-manager.service

kube-controller-manager.txt 100% 1200 51.3KB/s 00:00

[root@k8s-master01 ~]# scp kube-controller-manager.txt k8s-master03:/usr/lib/systemd/system/kube-controller-manager.service

kube-controller-manager.txt 100% 1200 4.7KB/s 00:00

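# Configure kube-controller-manager.service on all Master nodes

# Note: this document uses 172.16.0.0/12 as the k8s Pod CIDR. It must not overlap with the host network or the k8s Service CIDR; change it if it does. Be aware that 172.16.0.0/12 spans 172.16.0.0 to 172.31.255.255 and therefore contains the 172.20.252.x host addresses used in this walkthrough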
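The overlap rule can be checked mechanically before the flag is written into the unit file. The sketch below uses plain shell arithmetic; the function names are hypothetical, and the /24 prefix for the host network is an assumption, since the host netmask is not shown in this document.

```shell
# ip_to_int A.B.C.D: print the address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1            # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_range NET/LEN: print "first last" addresses of the block as integers.
cidr_range() {
    len=${1#*/}
    base=$(ip_to_int "${1%/*}")
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    echo "$(( base & mask )) $(( (base & mask) | (4294967295 ^ mask) ))"
}

# cidrs_overlap A B: succeed if the two blocks share at least one address.
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")   # $1,$2 = range A; $3,$4 = range B
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

POD_CIDR=172.16.0.0/12
SERVICE_CIDR=10.96.0.0/12
HOST_CIDR=172.20.252.0/24   # assumption: the host prefix length is not given in the text

cidrs_overlap "$POD_CIDR" "$SERVICE_CIDR" && echo "Pod/Service overlap" || echo "Pod/Service OK"
cidrs_overlap "$POD_CIDR" "$HOST_CIDR" && echo "Pod/host overlap" || echo "Pod/host OK"
```

With the values used in this walkthrough, the Pod and Service ranges are disjoint, while the Pod range and the assumed host range overlap.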

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# The kubeconfig file the controller manager uses to communicate with the apiserver

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kube-controller-manager

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /usr/lib/systemd/system/kube-controller-manager.service.

# Start kube-controller-manager on all Master nodes

[root@k8s-master01 ~]# systemctl status kube-controller-manager.service

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2022-10-28 10:05:56 CST; 1min 17s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 19071 (kube-controller)

Tasks: 7 (limit: 101390)

Memory: 24.8M

# Check the kube-controller-manager status

[root@k8s-master01 ~]# cp conf/k8s/v1.23/kube-scheduler.service kube-scheduler.txt

[root@k8s-master01 ~]# cp kube-scheduler.txt /usr/lib/systemd/system/kube-scheduler.service

[root@k8s-master01 ~]# scp kube-scheduler.txt k8s-master02:/usr/lib/systemd/system/kube-scheduler.service

kube-scheduler.txt 100% 501 195.0KB/s 00:00

[root@k8s-master01 ~]# scp kube-scheduler.txt k8s-master03:/usr/lib/systemd/system/kube-scheduler.service

kube-scheduler.txt 100% 501 326.2KB/s 00:00

# Configure kube-scheduler.service on all Master nodes

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl enable --now kube-scheduler

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /usr/lib/systemd/system/kube-scheduler.service.

# Start kube-scheduler.service on all Master nodes
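The same enable commands are repeated by hand on master02 and master03 below. Since passwordless ssh was set up earlier, they could also be driven from master01. This is a sketch: `on_masters` and the `RUNNER` variable are hypothetical names, and it assumes the unit files on each node have already been adjusted.

```shell
MASTERS="k8s-master02 k8s-master03"

# on_masters CMD...: run CMD on each host listed in $MASTERS.
# RUNNER defaults to ssh; set RUNNER=echo to preview the commands instead.
on_masters() {
    for node in $MASTERS; do
        "${RUNNER:-ssh}" "$node" "$@"
    done
}

# Example (not run here):
#   on_masters systemctl daemon-reload
#   on_masters systemctl enable --now kube-apiserver kube-controller-manager kube-scheduler
```

Running it once with RUNNER=echo is a cheap way to confirm the host list and command line before anything is changed on the remote nodes.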

Master02 node

[root@k8s-master02 ~]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--advertise-address=172.20.252.118 \

--allow-privileged=true \

--authorization-mode=Node,RBAC \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--enable-bootstrap-token-auth=true \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://172.20.252.117:2379,https://172.20.252.118:2379,https://172.20.252.119:2379 \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=front-proxy-client \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--secure-port=6443 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-cluster-ip-range=10.96.0.0/12 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--v=2

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

[root@k8s-master02 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

Created symlink /etc/systemd/system/multi-user.target.wants/kube-apiserver.service → /usr/lib/systemd/system/kube-apiserver.service.

# Start kube-apiserver on all Master nodes

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl enable --now kube-controller-manager

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /usr/lib/systemd/system/kube-controller-manager.service.

# Start kube-controller-manager on all Master nodes

[root@k8s-master02 ~]# systemctl daemon-reload

[root@k8s-master02 ~]# systemctl enable --now kube-scheduler

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /usr/lib/systemd/system/kube-scheduler.service.

# Start kube-scheduler.service on all Master nodes

Master03 node

[root@k8s-master03 ~]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--advertise-address=172.20.252.119 \

--allow-privileged=true \

--authorization-mode=Node,RBAC \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--enable-bootstrap-token-auth=true \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://172.20.252.117:2379,https://172.20.252.118:2379,https://172.20.252.119:2379 \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=front-proxy-client \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--secure-port=6443 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-cluster-ip-range=10.96.0.0/12 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--v=2

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

[root@k8s-master03 ~]# systemctl daemon-reload && systemctl enable --now kube-apiserver

Created symlink /etc/systemd/system/multi-user.target.wants/kube-apiserver.service → /usr/lib/systemd/system/kube-apiserver.service.

# Start kube-apiserver on all Master nodes

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl enable --now kube-controller-manager

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /usr/lib/systemd/system/kube-controller-manager.service.

# Start kube-controller-manager on all Master nodes

[root@k8s-master03 ~]# systemctl daemon-reload

[root@k8s-master03 ~]# systemctl enable --now kube-scheduler

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /usr/lib/systemd/system/kube-scheduler.service.

# Start kube-scheduler.service on all Master nodes

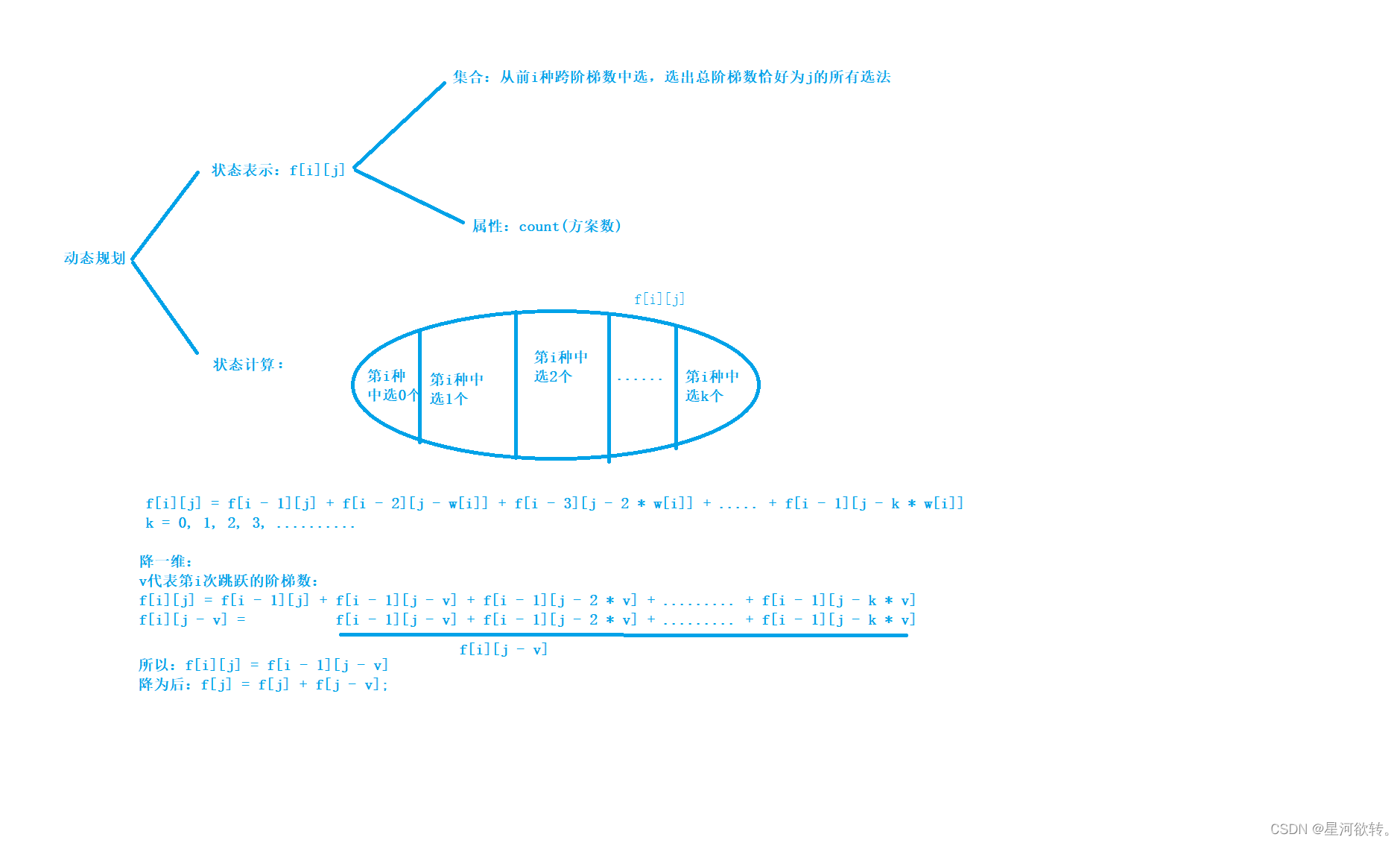

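TLS Bootstrapping configuration

The component that issues certificates automatically

Why not generate the kubelet certificates by hand?

Because the k8s master nodes are fixed while worker nodes come and go often, managing their certificates manually would be tedious

Master01 node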

[root@k8s-master01 ~]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true --server=https://172.20.252.200:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Cluster "kubernetes" set.

# Note: if this is not an HA cluster, change 172.20.252.200:8443 to master01's address, and change 8443 to the apiserver port (6443 by default)

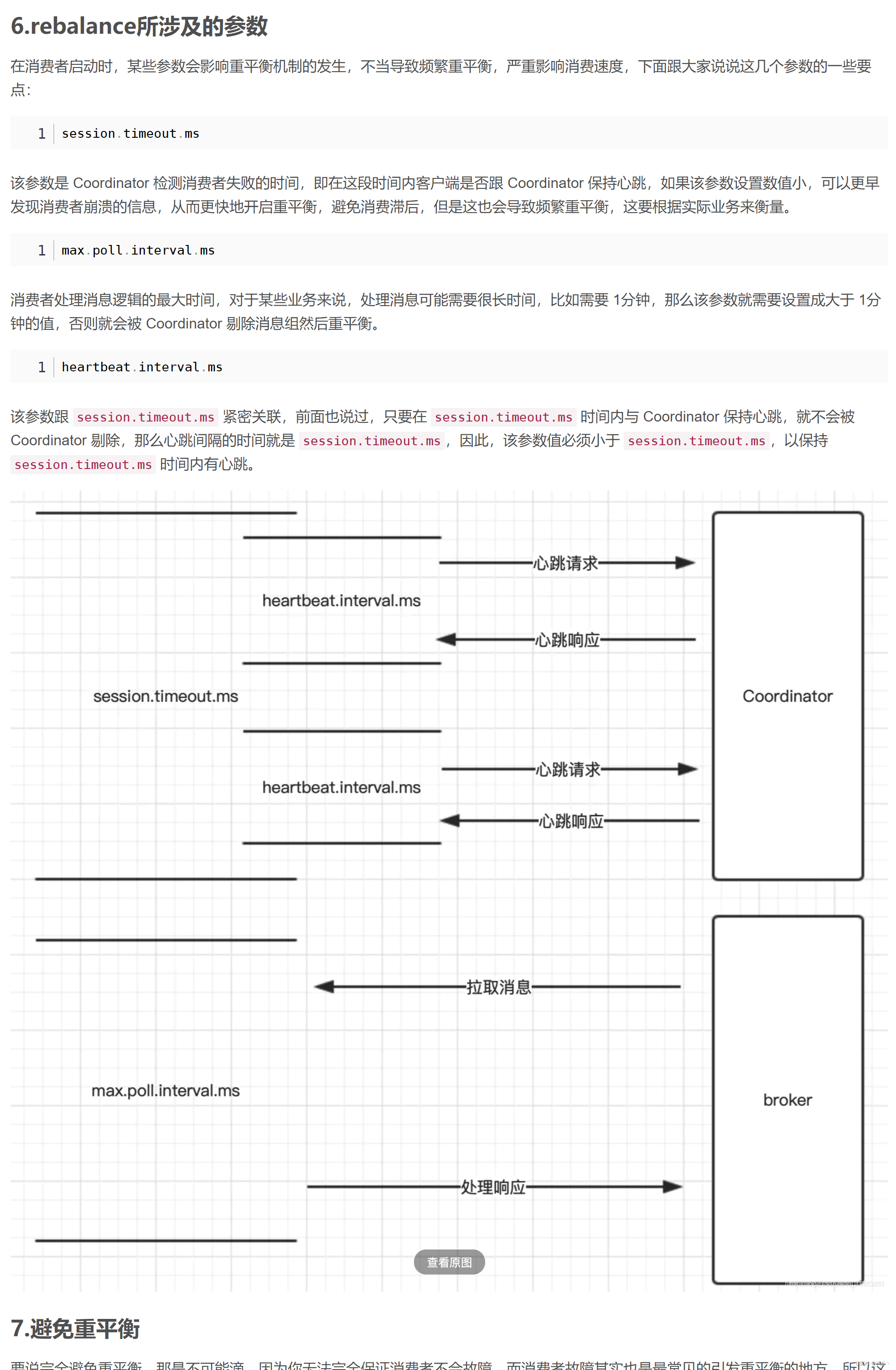

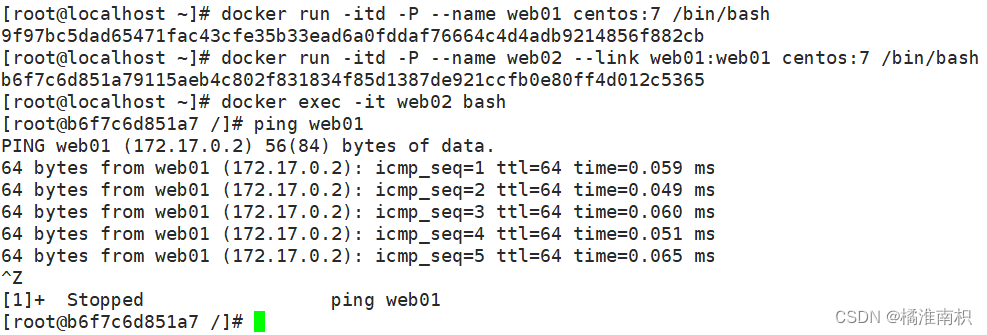

[root@k8s-master01 ~]# kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

User "tls-bootstrap-token-user" set.

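# If you change token-id and token-secret in bootstrap.secret.yaml, the strings highlighted in the bootstrap.secret.yaml screenshot must stay consistent with each other and keep the same length (6 characters for token-id, 16 for token-secret), and the --token value in the previous command must match them in the form token-id.token-secret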

[Image unavailable: bootstrap.secret.yaml.png]
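Since a mismatched token only shows up later as a failed kubelet bootstrap, it may be worth checking the format up front. The sketch below assumes the usual bootstrap token layout of 6 characters, a dot, then 16 characters, all lowercase letters or digits; `valid_bootstrap_token` is a hypothetical helper name.

```shell
# valid_bootstrap_token TOKEN: succeed if TOKEN looks like <6 chars>.<16 chars>
# made of lowercase letters and digits.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

TOKEN="c8ad9c.2e4d610cf3e7426e"   # the value passed to --token above
if valid_bootstrap_token "$TOKEN"; then
    # The part before the dot is token-id, the part after it is token-secret.
    echo "token-id=${TOKEN%%.*} token-secret=${TOKEN#*.}"
else
    echo "unexpected token format: $TOKEN" >&2
fi
```

The two printed halves are the values that have to appear as token-id and token-secret in bootstrap.secret.yaml.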

[root@k8s-master01 ~]# kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes \

--user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Context "tls-bootstrap-token-user@kubernetes" created.

[root@k8s-master01 ~]# kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Switched to context "tls-bootstrap-token-user@kubernetes".

# bootstrap-kubelet.kubeconfig is the file kubelet uses to request a certificate from the apiserver

# Create the TLS Bootstrapping kubeconfig on Master01

[root@k8s-master01 bootstrap]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# No kubeconfig is in place yet, so the query fails

[root@k8s-master01 ~]# mkdir -p /root/.kube; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

# Create the .kube directory and copy the admin kubeconfig into it so that kubectl can operate the cluster

[root@k8s-master01 bootstrap]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

# Apply the yaml file with kubectl

[root@k8s-master01 bootstrap]# kubectl get nodes

No resources found

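# Queries work now

# kubectl only needs to be available on one node

# It can even live on a machine outside the cluster, as long as that machine can reach the cluster

Node configuration

Master01 node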

[root@k8s-master01 ~]# cd /etc/kubernetes/

[root@k8s-master01 kubernetes]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do

ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

done

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done

# Copy the certificates to the other nodes

Kubelet configuration

All nodes

[root@k8s-master01 ~]# mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

# Create the required directories on all nodes

[root@k8s-master01 ~]# cp conf/k8s/v1.23/kubelet.service /usr/lib/systemd/system/kubelet.service

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do

> scp /usr/lib/systemd/system/kubelet.service $NODE:/usr/lib/systemd/system/kubelet.service; done

# Configure kubelet.service on all nodes

[root@k8s-master01 ~]# cp conf/k8s/v1.23/10-kubelet.conf /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do

scp /etc/systemd/system/kubelet.service.d/10-kubelet.conf $NODE:/etc/systemd/system/kubelet.service.d/10-kubelet.conf;

done

# Configure the kubelet.service drop-in file on all nodes

[root@k8s-master01 ~]# cp conf/k8s/v1.23/kubelet-conf.yaml kubelet-conf.yaml

[root@k8s-master01 ~]# cat kubelet-conf.yaml

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

# Remember to adjust this value

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging:

flushFrequency: 0

options:

json:

infoBufferSize: "0"

verbosity: 0

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

resolvConf: #resolvConf#

rotateCertificates: true

runtimeRequestTimeout: 0s

shutdownGracePeriod: 0s

shutdownGracePeriodCriticalPods: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

allowedUnsafeSysctls:

- "net.core*"

- "net.ipv4.*"

#kubeReserved:

# cpu: "1"

# memory: 1Gi

# ephemeral-storage: 10Gi

#systemReserved:

# cpu: "1"

# memory: 1Gi

# ephemeral-storage: 10Gi

[root@k8s-master01 ~]# cp kubelet-conf.yaml /etc/kubernetes/kubelet-conf.yaml

[root@k8s-master01 ~]# for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do

> scp /etc/kubernetes/kubelet-conf.yaml $NODE:/etc/kubernetes/kubelet-conf.yaml;

> done

# Create the kubelet configuration file on all nodes

# Note: if you changed the k8s Service CIDR, update clusterDNS: in kubelet-conf.yaml to the tenth address of the Service CIDR, for example 10.96.0.10
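The "tenth address" rule can be computed rather than counted by hand. A minimal sketch in plain shell arithmetic follows; `nth_ip` is a hypothetical helper and performs no bounds checking against the size of the block.

```shell
# nth_ip NET/LEN N: print the N-th address of the block, counting from the
# network address (so N=10 in 10.96.0.0/12 is 10.96.0.10).
nth_ip() {
    cidr=$1; n=$2
    len=${cidr#*/}
    old_ifs=$IFS; IFS=.
    set -- ${cidr%/*}     # split the dotted quad into $1..$4
    IFS=$old_ifs
    base=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    addr=$(( (base & mask) + n ))
    echo "$(( (addr >> 24) & 255 )).$(( (addr >> 16) & 255 )).$(( (addr >> 8) & 255 )).$(( addr & 255 ))"
}

nth_ip 10.96.0.0/12 10
```

For the Service CIDR used in this document the call prints 10.96.0.10, which is the clusterDNS value in kubelet-conf.yaml.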

[root@k8s-master01 ~]# systemctl daemon-reload

[root@k8s-master01 ~]# systemctl restart docker

[root@k8s-master01 ~]# systemctl enable --now kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

# Start kubelet on all nodes

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady <none> 7m17s v1.23.13

k8s-master02 NotReady <none> 7m18s v1.23.13

k8s-master03 NotReady <none> 7m18s v1.23.13

k8s-node01 NotReady <none> 7m18s v1.23.13

k8s-node02 NotReady <none> 7m17s v1.23.13

[root@k8s-master01 ~]# tail /var/log/messages

...

k8s-master01 kubelet[140700]: I1030 15:32:24.237004 140700 cni.go:240] "Unable to update cni config" err="no networks found in /etc/cni/net.d

...

# Check the log

# This is because Calico has not been installed yet

kube-proxy configuration

Master01 node

[root@k8s-master01 ~]# yum -y install conntrack-tools

[root@k8s-master01 ~]# kubectl -n kube-system create serviceaccount kube-proxy

serviceaccount/kube-proxy created

[root@k8s-master01 ~]# kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

clusterrolebinding.rbac.authorization.k8s.io/system:kube-proxy created

[root@k8s-master01 ~]# SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

[root@k8s-master01 ~]# JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

> --output=jsonpath='{.data.token}'|base64 -d)

[root@k8s-master01 ~]# mkdir k8s-ha-install

[root@k8s-master01 ~]# cd k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# PKI_DIR=/etc/kubernetes/pki

[root@k8s-master01 k8s-ha-install]# K8S_DIR=/etc/kubernetes

[root@k8s-master01 k8s-ha-install]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://172.20.252.200:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

Cluster "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

User "kubernetes" set.

[root@k8s-master01 k8s-ha-install]# kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Context "kubernetes" created.

[root@k8s-master01 k8s-ha-install]# kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Switched to context "kubernetes".

# Note: if this is not an HA cluster, change 172.20.252.200:8443 to master01's address, and change 8443 to the apiserver port (6443 by default)

[root@k8s-master01 k8s-ha-install]# mkdir kube-proxy

[root@k8s-master01 k8s-ha-install]# cat > kube-proxy/kube-proxy.conf <<EOF

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/logs \\

--config=/etc/kubernetes/kube-proxy.yaml"

EOF

[root@k8s-master01 k8s-ha-install]# cp /root/conf/k8s/v1.23/kube-proxy.yaml kube-proxy/

[root@k8s-master01 k8s-ha-install]# sed -ri "s/#POD_NETWORK_CIDR#/172.16.0.0\/12/g" kube-proxy/kube-proxy.yaml

[root@k8s-master01 k8s-ha-install]# sed -ri "s/#KUBE_PROXY_MODE#/ipvs/g" kube-proxy/kube-proxy.yaml

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-master01 k8s-master02 k8s-master03; do

scp ${K8S_DIR}/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

scp kube-proxy/kube-proxy.yaml $NODE:/etc/kubernetes/kube-proxy.yaml

scp /root/conf/k8s/v1.23/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

done

[root@k8s-master01 k8s-ha-install]# for NODE in k8s-node01 k8s-node02; do

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

scp kube-proxy/kube-proxy.conf $NODE:/etc/kubernetes/kube-proxy.conf

scp kube-proxy/kube-proxy.yaml $NODE:/etc/kubernetes/kube-proxy.yaml

scp /root/conf/k8s/v1.23/kube-proxy.service $NODE:/usr/lib/systemd/system/kube-proxy.service

done

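# From Master01, send the kube-proxy systemd service file and its configuration to the other nodes

# If you changed the cluster Pod CIDR, change the clusterCIDR: 172.16.0.0/12 parameter in kube-proxy/kube-proxy.yaml to your Pod CIDR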
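The two sed commands above fill the #POD_NETWORK_CIDR# and #KUBE_PROXY_MODE# markers in the template. A sketch that performs both substitutions and then checks for leftover markers is shown below. The helper names are hypothetical, and the real template may contain other fields that are not shown in this document.

```shell
# render_kube_proxy POD_CIDR MODE: fill the two placeholders in a template read
# from stdin. '|' is the sed delimiter, so the '/' in the CIDR needs no escaping.
render_kube_proxy() {
    sed -e "s|#POD_NETWORK_CIDR#|$1|g" -e "s|#KUBE_PROXY_MODE#|$2|g"
}

# has_placeholders: succeed if any #UPPER_CASE# marker is still present on stdin.
has_placeholders() {
    grep -Eq '#[A-Z_]+#'
}

# Example (not run here):
#   render_kube_proxy 172.16.0.0/12 ipvs < /root/conf/k8s/v1.23/kube-proxy.yaml > kube-proxy/kube-proxy.yaml
#   has_placeholders < kube-proxy/kube-proxy.yaml && echo "unfilled placeholder remains"
```

Checking for leftovers before copying the file out is cheaper than debugging a kube-proxy that fails to parse its config on five nodes.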

All nodes

[root@k8s-master01 ~]# systemctl enable --now kube-proxy

Created symlink /etc/systemd/system/multi-user.target.wants/kube-proxy.service → /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-master01 k8s-ha-install]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2022-10-30 22:38:06 CST; 4s ago

...

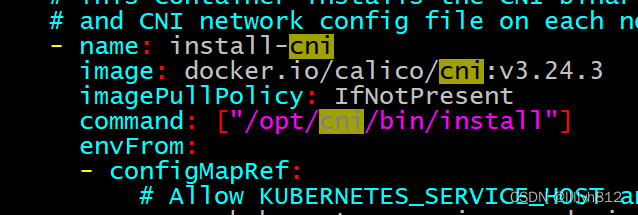

Calico installation

Master01 node

[root@k8s-master01 ~]# cd k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# mkdir calico

[root@k8s-master01 k8s-ha-install]# cd calico/

[root@k8s-master01 calico]# curl https://raw.githubusercontent.com/projectcalico/calico/v3.24.3/manifests/calico-etcd.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 21088 100 21088 0 0 77245 0 --:--:-- --:--:-- --:--:-- 76963

[root@k8s-master01 calico]# sed -ri 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://172.20.252.117:2379,https://172.20.252.118:2379,https://172.20.252.119:2379"#g' calico-etcd.yaml

[root@k8s-master01 calico]# ETCD_CA=`cat /etc/kubernetes/pki/etcd/etcd-ca.pem | base64 | tr -d '\n'`

[root@k8s-master01 calico]# ETCD_CERT=`cat /etc/kubernetes/pki/etcd/etcd.pem | base64 | tr -d '\n'`

[root@k8s-master01 calico]# ETCD_KEY=`cat /etc/kubernetes/pki/etcd/etcd-key.pem | base64 | tr -d '\n'`

[root@k8s-master01 calico]# sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

[root@k8s-master01 calico]# sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: ""#etcd_key: "/calico-secrets/etcd-key"#g' calico-etcd.yaml

# Modify the locations above in calico-etcd.yaml

[root@k8s-master01 calico]# POD_SUBNET="172.16.0.0/12"

[root@k8s-master01 calico]# sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "192.168.0.0/16"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml

# Change this to your own Pod CIDR

[root@k8s-master01 calico]# kubectl apply -f calico-etcd.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

secret/calico-etcd-secrets created

configmap/calico-config created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

# Apply calico-etcd.yaml only once, from a single node

# Note: images are pulled from docker.io by default, which can be very slow on a poor network

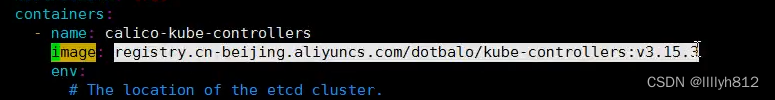

If the network is poor, consider changing image: to the Alibaba Cloud image registry

[root@k8s-master01 calico]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6c86f69969-8mjqk 1/1 Running 0 13m

calico-node-5cr6v 1/1 Running 2 (10m ago) 13m

calico-node-9ss4w 1/1 Running 1 (10m ago) 13m

calico-node-d4jbp 1/1 Running 1 (10m ago) 13m

calico-node-dqssq 1/1 Running 0 13m

calico-node-tnhjj 1/1 Running 0 13m

# If a container's status is abnormal, use kubectl describe or kubectl logs to inspect it

[root@k8s-master01 calico]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready <none> 18h v1.23.13

k8s-master02 Ready <none> 18h v1.23.13

k8s-master03 Ready <none> 18h v1.23.13

k8s-node01 Ready <none> 18h v1.23.13

k8s-node02 Ready <none> 18h v1.23.13

# Verify that all nodes are in the Ready state
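On a larger cluster it is easier to list only the nodes that are not yet Ready than to read the whole table. A small sketch over the `kubectl get nodes` output shown above follows; `not_ready_nodes` is a made-up name, and the filter also reports nodes with compound states such as Ready,SchedulingDisabled, which may or may not be what you want.

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the names
# of nodes whose STATUS column is not exactly "Ready". The header row is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example (not run here):
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready, which is the state expected once the calico-node pods are running.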

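# I personally recommend Calico over Flannel for networking

Install CoreDNS

Workloads in Kubernetes reach each other through Services

How does a Service name get resolved to an IP address? That is the job of CoreDNS

Master01 node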

[root@k8s-master01 ~]# cd /root/k8s-ha-install/

[root@k8s-master01 k8s-ha-install]# mkdir CoreDNS

[root@k8s-master01 k8s-ha-install]# cd CoreDNS/

[root@k8s-master01 CoreDNS]# curl https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed -o coredns.yaml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4304 100 4304 0 0 6998 0 --:--:-- --:--:-- --:--:-- 6987

# If the network is poor, you can also copy the file directly from GitHub

[root@k8s-master01 CoreDNS]# sed -i "s#clusterIP: CLUSTER_DNS_IP#clusterIP: 10.96.0.10#g" coredns.yaml

# If you changed the k8s Service CIDR, set the CoreDNS Service IP to the tenth IP of the Service CIDR

[root@k8s-master01 CoreDNS]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

# Install CoreDNS

Troubleshooting

The following attempt was based on a wrong diagnosis

[root@k8s-master01 CoreDNS]# kubectl get pods -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-7875fc54bb-v6tsj 0/1 ImagePullBackOff 0 3m52s

# Check the status: the image pull failed

[root@k8s-master01 CoreDNS]# docker import /root/coredns_1.9.4_linux_amd64.tgz coredns/coredns:1.9.4

sha256:98855711be7d3e4fe0ae337809191d2a4075702e2c00059f276e5c44da9b2e22

# Download the archive from the official site, upload it to the Master01 node, and import it as an image with docker import

[root@k8s-master01 CoreDNS]# for NODE in k8s-master02 k8s-master03; do

> scp /root/coredns_1.9.4_linux_amd64.tgz $NODE:/root/coredns_1.9.4_linux_amd64.tgz

> done

coredns_1.9.4_linux_amd64.tgz 100% 14MB 108.1MB/s 00:00

coredns_1.9.4_linux_amd64.tgz 100% 14MB 61.2MB/s 00:00

# Transfer it to the other Master nodes

[root@k8s-master02 ~]# docker import /root/coredns_1.9.4_linux_amd64.tgz coredns/coredns:1.9.4

sha256:462c1fe56dcaca89d4b75bff0151c638da1aa59457ba49f8ac68552aa3a92203

[root@k8s-master03 ~]# docker import /root/coredns_1.9.4_linux_amd64.tgz coredns/coredns:1.9.4

sha256:c6f17bff4594222312b30b322a7e02e0a78adde6fa082352054bc27734698f69

# Import the image on the remaining Master nodes

[root@k8s-master01 CoreDNS]# kubectl delete -f coredns.yaml

serviceaccount "coredns" deleted

clusterrole.rbac.authorization.k8s.io "system:coredns" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:coredns" deleted

configmap "coredns" deleted

deployment.apps "coredns" deleted

service "kube-dns" deleted

[root@k8s-master01 CoreDNS]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

# Reinstall CoreDNS

[root@k8s-master01 CoreDNS]# kubectl describe pod coredns-7875fc54bb-gtt7p -n kube-system

Warning Failed 2m32s (x4 over 3m27s) kubelet Error: failed to start container "coredns": Error response from daemon: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "-conf": executable file not found in $PATH: unknown

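# Another error: docker import turns a plain tarball into an image with no ENTRYPOINT or CMD, so the pod's first argument (-conf) is treated as the executable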

[root@k8s-master01 CoreDNS]# kubectl delete -f coredns.yaml

serviceaccount "coredns" deleted

clusterrole.rbac.authorization.k8s.io "system:coredns" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:coredns" deleted

configmap "coredns" deleted

deployment.apps "coredns" deleted

service "kube-dns" deleted

# Delete CoreDNS

[root@k8s-master01 CoreDNS]# docker rmi coredns/coredns:1.9.4

Untagged: coredns/coredns:1.9.4

Deleted: sha256:802f9bb655d0cfcf0141de41c996d659450294b00e54d1eff2e44a90564071ca

Deleted: sha256:1b0937bab1d24d9e264d6adf4e61ffb576981150a9d8bf16c44eea3c79344f43

[root@k8s-master02 ~]# docker rmi coredns/coredns:1.9.4

Untagged: coredns/coredns:1.9.4

Deleted: sha256:462c1fe56dcaca89d4b75bff0151c638da1aa59457ba49f8ac68552aa3a92203

Deleted: sha256:1b0937bab1d24d9e264d6adf4e61ffb576981150a9d8bf16c44eea3c79344f43

[root@k8s-master03 ~]# docker rmi coredns/coredns:1.9.4

Untagged: coredns/coredns:1.9.4

Deleted: sha256:c6f17bff4594222312b30b322a7e02e0a78adde6fa082352054bc27734698f69

Deleted: sha256:1b0937bab1d24d9e264d6adf4e61ffb576981150a9d8bf16c44eea3c79344f43

The correct solution follows

[root@k8s-master01 ~]# docker pull coredns/coredns:1.9.4

1.9.4: Pulling from coredns/coredns

c6824c7a0594: Pull complete

8f16f0bc6a9b: Pull complete

Digest: sha256:b82e294de6be763f73ae71266c8f5466e7e03c69f3a1de96efd570284d35bb18

Status: Downloaded newer image for coredns/coredns:1.9.4

docker.io/coredns/coredns:1.9.4

# Pull the CoreDNS image in advance on all nodes

Another error appears
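The pre-pull on every node can be driven from k8s-master01 over the passwordless SSH configured earlier. The following is a minimal sketch, not the method used in this walkthrough: `pull_on_nodes` and `DRY_RUN` are hypothetical names introduced here, and the node names are the ones used elsewhere in this document. With `DRY_RUN=1` the commands are only printed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull one image on every listed node over SSH.
# Usage: pull_on_nodes IMAGE NODE...
# With DRY_RUN=1 the ssh commands are printed instead of executed.
pull_on_nodes() {
  local image=$1
  shift
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh $node docker pull $image"
    else
      ssh "$node" docker pull "$image"
    fi
  done
}

# Dry run: show what would be executed for the other four nodes.
DRY_RUN=1 pull_on_nodes coredns/coredns:1.9.4 \
  k8s-master02 k8s-master03 k8s-node01 k8s-node02
```

Dropping `DRY_RUN=1` runs the pulls for real; the same helper could be reused for any other image that has to be present on all nodes.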

[root@k8s-master01 ~]# cd k8s-ha-install/CoreDNS/

[root@k8s-master01 CoreDNS]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

# Install CoreDNS again

[root@k8s-master01 CoreDNS]# kubectl -n kube-system logs -f -p coredns-7875fc54bb-mhpgx

/etc/coredns/Corefile:18 - Error during parsing: Unknown directive '}STUBDOMAINS'

[root@k8s-master01 CoreDNS]# sed -i "s/kubernetes CLUSTER_DOMAIN REVERSE_CIDRS/kubernetes cluster.local in-addr.arpa ip6.arpa/g" coredns.yaml

[root@k8s-master01 CoreDNS]# sed -i "s#forward . UPSTREAMNAMESERVER#forward . /etc/resolv.conf#g" coredns.yaml

[root@k8s-master01 CoreDNS]# sed -i "s/STUBDOMAINS//g" coredns.yaml
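The three substitutions above replace template placeholders that CoreDNS cannot parse. As a sketch, they can be tried on a scratch file first, followed by a check that no placeholder is left. The Corefile fragment below is an abbreviated stand-in whose layout is assumed, and `render_corefile` and `leftover_placeholders` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Abbreviated stand-in for the Corefile section of coredns.yaml (assumed layout).
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
.:53 {
    kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {
      fallthrough in-addr.arpa ip6.arpa
    }
    forward . UPSTREAMNAMESERVER {
      max_concurrent 1000
    }
}STUBDOMAINS
EOF

# The same three substitutions as above, applied in a single sed call (GNU sed -i).
render_corefile() {
  sed -i \
    -e 's/kubernetes CLUSTER_DOMAIN REVERSE_CIDRS/kubernetes cluster.local in-addr.arpa ip6.arpa/g' \
    -e 's#forward \. UPSTREAMNAMESERVER#forward . /etc/resolv.conf#g' \
    -e 's/STUBDOMAINS//g' "$1"
}

# Print every line that still contains a template placeholder.
# Exit status 0 means at least one placeholder is left.
leftover_placeholders() {
  grep -nE 'CLUSTER_DOMAIN|REVERSE_CIDRS|UPSTREAMNAMESERVER|STUBDOMAINS' "$1"
}

render_corefile "$tmp"
if leftover_placeholders "$tmp"; then
  echo "placeholders remain" >&2
else
  echo "no placeholders left"
fi
rm -f "$tmp"
```

Running `leftover_placeholders coredns.yaml` before `kubectl create` would catch this class of error without waiting for the Pod to crash.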

[root@k8s-master01 CoreDNS]# kubectl delete -f coredns.yaml

serviceaccount "coredns" deleted

clusterrole.rbac.authorization.k8s.io "system:coredns" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:coredns" deleted

configmap "coredns" deleted

deployment.apps "coredns" deleted

service "kube-dns" deleted

[root@k8s-master01 CoreDNS]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

# Install CoreDNS again

[root@k8s-master01 CoreDNS]# kubectl get pods -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-7875fc54bb-l7cl9 1/1 Running 0 109s

# The Pod is Running

[root@k8s-master01 CoreDNS]# kubectl describe pod coredns-7875fc54bb-l7cl9 -n kube-system |tail -n1

Warning Unhealthy 108s (x6 over 2m15s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 503

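# There still seems to be a warning. The event was last seen 108s ago, around the time the Pod started, and the Pod now shows 1/1 Running, so it is left alone for now as long as it has no impact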
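To tell a startup-time probe warning from a Pod that stays unready, the READY column can be checked mechanically. This is a minimal sketch; `not_ready_pods` is a hypothetical helper that reads the table printed by `kubectl get pods` on stdin and assumes the default column layout shown above.

```shell
#!/usr/bin/env bash
# Reads `kubectl get pods` table output on stdin and prints the name of every
# pod whose READY column (ready/total) is not fully ready.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Demo with the output captured above: nothing is printed because the pod is 1/1.
not_ready_pods <<'EOF'
NAME READY STATUS RESTARTS AGE
coredns-7875fc54bb-l7cl9 1/1 Running 0 109s
EOF
```

Against a live cluster this would be used as `kubectl get pods -n kube-system -l k8s-app=kube-dns | not_ready_pods`; empty output means every listed Pod is ready.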

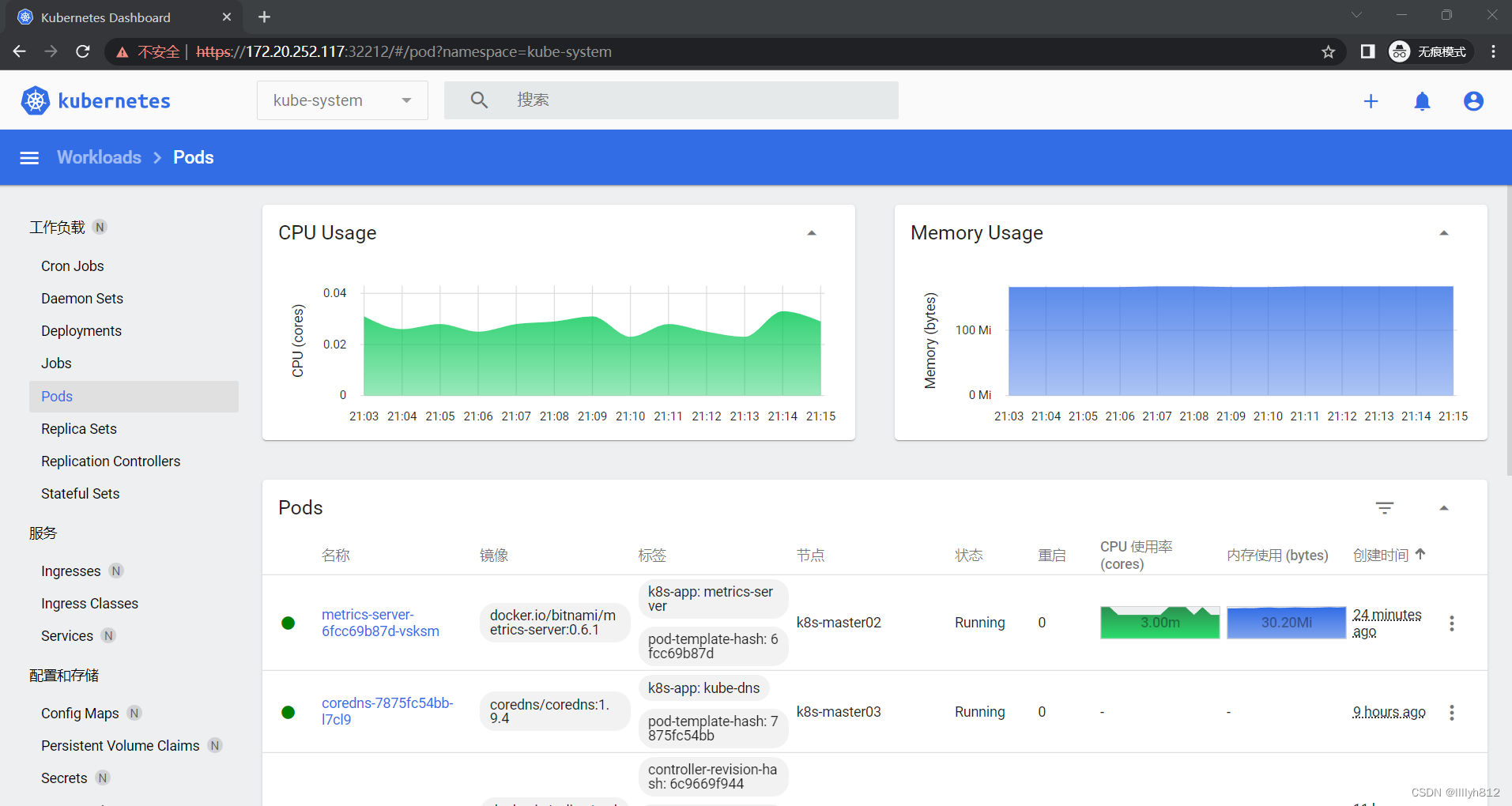

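Install Metrics Server

In recent Kubernetes versions, resource usage is collected through Metrics Server, which exposes the CPU and memory usage of nodes and Pods (the data that kubectl top reads)

Master01 node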

[root@k8s-master01 CoreDNS]# cd ..

[root@k8s-master01 k8s-ha-install]# mkdir metrics-server-0.6.1

[root@k8s-master01 k8s-ha-install]# cd metrics-server-0.6.1/

[root@k8s-master01 metrics-server-0.6.1]# cp /root/components.yaml ./

[root@k8s-master01 metrics-server-0.6.1]# kubectl create -f .

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Install Metrics Server

An error appears: another image pull failure

[root@k8s-master01 metrics-server-0.6.1]# kubectl top node

Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

[root@k8s-master01 metrics-server-0.6.1]# kubectl describe pod metrics-server-847dcc659d-jpsbj -n kube-system |tail -n1

Normal BackOff 3m34s (x578 over 143m) kubelet Back-off pulling image "k8s.gcr.io/metrics-server/metrics-server:v0.6.1"

# The image pull failed
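Pull failures like this one can be anticipated by listing the images a manifest references before creating it, so they can be pulled or mirrored first. A minimal sketch, assuming each image is declared on its own `image:` line; `manifest_images` is a hypothetical helper and the fragment is an abbreviated stand-in for components.yaml.

```shell
#!/usr/bin/env bash
# Print the unique container images referenced by a Kubernetes manifest.
# Handles both "image: x" and "- image: x" lines; surrounding quotes are dropped.
manifest_images() {
  awk '
    $1 == "image:"              { print $2 }
    $1 == "-" && $2 == "image:" { print $3 }
  ' "$1" | tr -d "\"'" | sort -u
}

# Demo on an abbreviated fragment (a stand-in for components.yaml).
demo=$(mktemp)
cat > "$demo" <<'EOF'
      containers:
      - args:
        - --cert-dir=/tmp
        image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
        imagePullPolicy: IfNotPresent
EOF
manifest_images "$demo"
rm -f "$demo"
```

On the real file, `manifest_images components.yaml` gives the list of images to check for reachability from the nodes.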

[root@k8s-master01 metrics-server-0.6.1]# kubectl delete -f .

serviceaccount "metrics-server" deleted

clusterrole.rbac.authorization.k8s.io "system:aggregated-metrics-reader" deleted

clusterrole.rbac.authorization.k8s.io "system:metrics-server" deleted

rolebinding.rbac.authorization.k8s.io "metrics-server-auth-reader" deleted

clusterrolebinding.rbac.authorization.k8s.io "metrics-server:system:auth-delegator" deleted

clusterrolebinding.rbac.authorization.k8s.io "system:metrics-server" deleted

service "metrics-server" deleted

deployment.apps "metrics-server" deleted

apiservice.apiregistration.k8s.io "v1beta1.metrics.k8s.io" deleted

# Delete Metrics Server

[root@k8s-master01 metrics-server-0.6.1]# vim components.yaml

...

- --kubelet-insecure-tls

image: docker.io/bitnami/metrics-server:0.6.1

...

# Add the flag that skips kubelet TLS verification and change the image source
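The same two edits can be made without an interactive editor. The sketch below is one possible approach, not what was done here: it assumes the container arguments include a `--metric-resolution=` line after which the new flag can be placed, and `patch_metrics_server` is a hypothetical helper name. It writes the patched manifest to stdout and leaves the input file untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a patched copy of the Metrics Server manifest.
#  - swaps the k8s.gcr.io image for the Docker Hub image used above
#  - adds --kubelet-insecure-tls after the (assumed) --metric-resolution argument
patch_metrics_server() {
  awk '
    # Replace the image reference in place.
    $1 == "image:" && $2 == "k8s.gcr.io/metrics-server/metrics-server:v0.6.1" {
      sub(/k8s\.gcr\.io\/metrics-server\/metrics-server:v0\.6\.1/, "docker.io/bitnami/metrics-server:0.6.1")
    }
    { print }
    # Re-emit the matched argument line as the new flag, keeping its indentation.
    /- --metric-resolution=/ {
      sub(/- --metric-resolution=.*/, "- --kubelet-insecure-tls")
      print
    }
  ' "$1"
}

# Demo on an abbreviated fragment (a stand-in for components.yaml).
demo=$(mktemp)
cat > "$demo" <<'EOF'
      - args:
        - --cert-dir=/tmp
        - --metric-resolution=15s
        image: k8s.gcr.io/metrics-server/metrics-server:v0.6.1
EOF
patch_metrics_server "$demo"
rm -f "$demo"
```

The helper is not idempotent: running it on an already patched file adds the flag a second time, so it is best applied once to the original manifest, for example `patch_metrics_server components.yaml > components.patched.yaml`.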

[root@k8s-master01 metrics-server-0.6.1]# docker pull bitnami/metrics-server:0.6.1

0.6.1: Pulling from bitnami/metrics-server

1d8866550bdd: Pull complete

5dc6be563c2f: Pull complete

Digest: sha256:660be90d36504f10867e5c1cc541dadca13f96c72e5c7d959fd66e3a05c44ff8

Status: Downloaded newer image for bitnami/metrics-server:0.6.1

docker.io/bitnami/metrics-server:0.6.1

# Pull the image in advance on all nodes

[root@k8s-master01 metrics-server-0.6.1]# kubectl create -f .

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Install Metrics Server

[root@k8s-master01 metrics-server-0.6.1]# kubectl get pods -n kube-system|tail -n1

metrics-server-7bcff67dcd-mxfwk 1/1 Running 1 (4h13m ago) 4h13m

# The Pod is Running

Problem: metrics are not available for the other nodes

[root@k8s-master01 metrics-server-0.6.1]# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-node02 65m 1% 966Mi 6%

k8s-master01 <unknown> <unknown> <unknown> <unknown>

k8s-master02 <unknown> <unknown> <unknown> <unknown>

k8s-master03 <unknown> <unknown> <unknown> <unknown>

k8s-node01 <unknown> <unknown> <unknown> <unknown>

[root@k8s-master01 metrics-server-0.6.1]# kubectl logs metrics-server-7bcff67dcd-mxfwk -n kube-system|tail -n2

Failed probe" probe="metric-storage-ready" err="no metrics to serve"

E1031 12:19:40.567512 1 scraper.go:140] "Failed to scrape node" err="Get \"https://172.20.252.119:10250/metrics/resource\": context deadline exceeded" node="k8s-master03"

# Root cause not identified yet
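As a starting point for narrowing this down, the Metrics Server log can be reduced to the set of nodes whose kubelet could not be scraped. A "context deadline exceeded" on port 10250 usually points at connectivity between the Metrics Server Pod and that node's kubelet, though that is only a hypothesis here. `failed_scrape_nodes` is a hypothetical helper; it relies on the `node="..."` field seen in the log line above.

```shell
#!/usr/bin/env bash
# Reads Metrics Server log lines on stdin and prints each node that failed
# to be scraped, once per node.
failed_scrape_nodes() {
  grep 'Failed to scrape node' | grep -o 'node="[^"]*"' | cut -d'"' -f2 | sort -u
}

# Demo with the log line captured above.
failed_scrape_nodes <<'EOF'
E1031 12:19:40.567512 1 scraper.go:140] "Failed to scrape node" err="Get \"https://172.20.252.119:10250/metrics/resource\": context deadline exceeded" node="k8s-master03"
EOF
```

Against the live Pod this would be `kubectl logs metrics-server-7bcff67dcd-mxfwk -n kube-system | failed_scrape_nodes`; comparing the result with the node the Pod runs on shows whether only cross-node scrapes fail.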

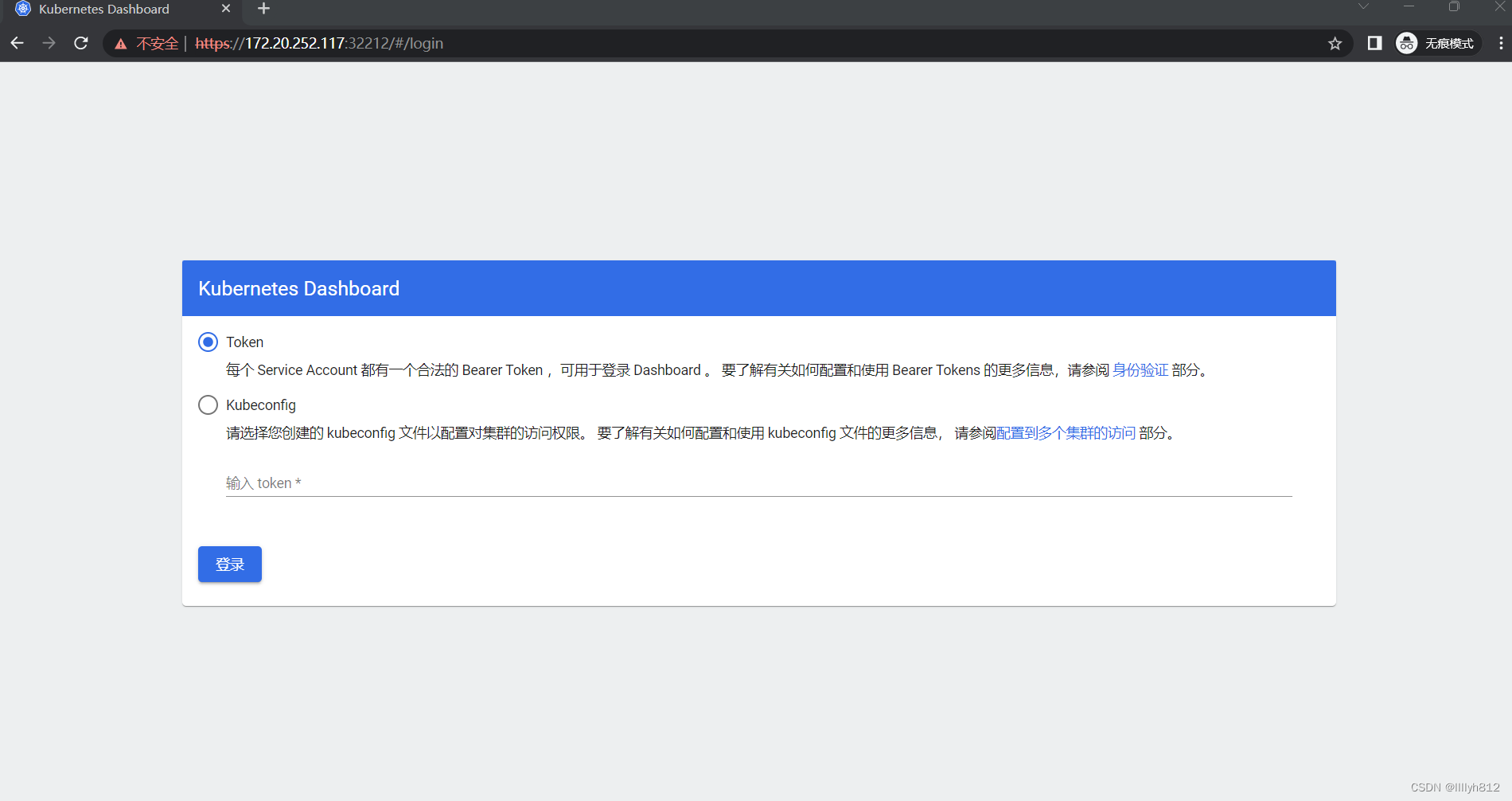

Install Dashboard

Master01 node

[root@k8s-master01 metrics-server-0.6.1]# cd ..

[root@k8s-master01 k8s-ha-install]# mkdir dashboard

[root@k8s-master01 k8s-ha-install]# cd dashboard/

[root@k8s-master01 dashboard]# curl https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 7621 100 7621 0 0 11945 0 --:--:-- --:--:-- --:--:-- 11945

# Download the YAML manifest

[root@k8s-master01 dashboard]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created