Contents

- Problems with POS Tagging

- Probabilistic Model of HMM

- Two Assumptions of HMM

- Training HMM

- Making Predictions using HMM (Decoding)

- Viterbi Algorithm

- HMMs in Practice

- Generative vs. Discriminative Taggers

Problems with POS Tagging

- Exponentially many combinations: $|Tags|^M$ for a sentence of length $M$
- Tag sequences have different lengths
- Tagging is a sentence-level task, but as humans we decompose it into small word-level tasks
- Solution:
  - Define a model that decomposes the process into individual word-level steps, but that still takes the whole sequence into account when learning and predicting
  - This is called sequence labelling, or structured prediction

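Probabilistic Model of HMM

- Goal: obtain the best tag sequence $t$ for a sentence $w$

The formulation:

$$\hat{t} = \arg\max_{t} P(t \mid w)$$

Applying Bayes' rule:

$$\hat{t} = \arg\max_{t} \frac{P(w \mid t)\,P(t)}{P(w)} = \arg\max_{t} P(w \mid t)\,P(t)$$

$P(w)$ does not depend on $t$, so it can be dropped from the maximization.

Decomposing the elements:

The probability of a word depends only on its tag:

$$P(w \mid t) = \prod_{i=1}^{M} P(w_i \mid t_i)$$

The probability of a tag depends only on the previous tag:

$$P(t) = \prod_{i=1}^{M} P(t_i \mid t_{i-1})$$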
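As a rough illustration of this decomposition, the sketch below scores one tag sequence as a product of emission and transition probabilities. The tables `emission` and `transition`, the helper `joint_probability`, and all numbers are made-up toy values for illustration, not estimates from any corpus.

```python
# Toy (made-up) parameters; "<s>" marks the start of the sentence.
transition = {  # transition[(previous_tag, tag)] = P(tag | previous_tag)
    ("<s>", "MD"): 0.3, ("<s>", "NN"): 0.7,
    ("MD", "VB"): 0.8, ("MD", "NN"): 0.2,
    ("NN", "VB"): 0.4, ("NN", "NN"): 0.6,
}
emission = {  # emission[(tag, word)] = P(word | tag)
    ("MD", "can"): 0.5, ("NN", "can"): 0.1,
    ("VB", "play"): 0.2, ("NN", "play"): 0.05,
}


def joint_probability(words, tags):
    """Return P(w | t) P(t) = prod_i P(w_i | t_i) P(t_i | t_{i-1})."""
    prob, prev = 1.0, "<s>"
    for word, tag in zip(words, tags):
        # Unseen pairs get probability 0 here; a real tagger would smooth.
        prob *= emission.get((tag, word), 0.0) * transition.get((prev, tag), 0.0)
        prev = tag
    return prob


print(joint_probability(["can", "play"], ["MD", "VB"]))
print(joint_probability(["can", "play"], ["NN", "NN"]))
```

With these toy numbers the MD VB sequence scores higher than NN NN, which is the comparison the argmax over tag sequences performs.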

Two Assumptions of HMM

- Output independence: an observed event (word) depends only on the hidden state (tag) -> $P(w \mid t) = \prod_{i=1}^{M} P(w_i \mid t_i)$
- Markov assumption: the current state (tag) depends only on the previous state -> $P(t) = \prod_{i=1}^{M} P(t_i \mid t_{i-1})$

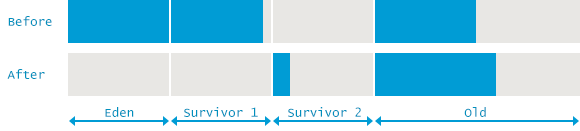

Training HMM

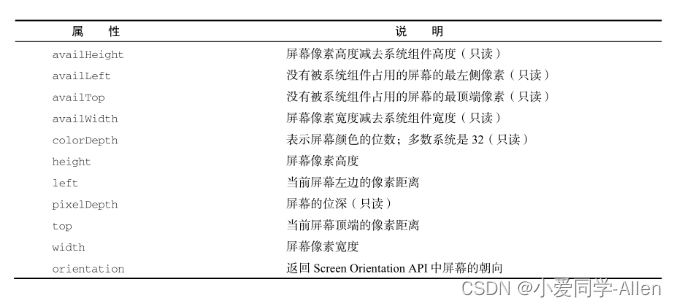

- Parameters are individual probabilities:
  - Emission probabilities (O): $P(w_i \mid t_i)$
  - Transition probabilities (A): $P(t_i \mid t_{i-1})$
- Training uses Maximum Likelihood Estimation (MLE), done by simply counting how often each word occurs with each tag and how often each tag follows another. E.g.
  - $P(w_i \mid t_i) = \dfrac{count(t_i, w_i)}{count(t_i)}$
  - $P(t_i \mid t_{i-1}) = \dfrac{count(t_{i-1}, t_i)}{count(t_{i-1})}$
- The tag for the first word:
  - Assume there is a `<s>` symbol at the start of the sentence
  - E.g. $P(t_1 \mid \text{<s>}) = \dfrac{count(\text{<s>}, t_1)}{count(\text{<s>})}$
- Unseen `(word, tag)` and `(tag, previous_tag)` combinations: apply smoothing techniques

Output:

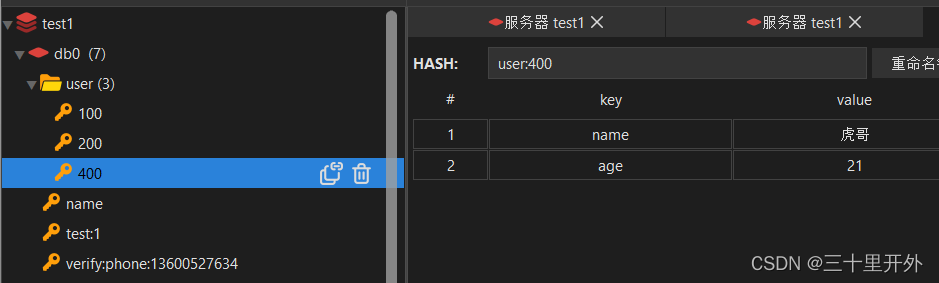

- Transition matrix $A$

- Emission (observation) matrix $O$
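The counting behind MLE training can be sketched as follows. The toy `corpus`, the counter names, and the helpers `p_emission` and `p_transition` are hypothetical and only meant to show the idea; no smoothing is applied, so unseen combinations get probability zero.

```python
from collections import Counter

# Hypothetical toy tagged corpus: each sentence is a list of (word, tag) pairs.
corpus = [
    [("the", "DT"), ("dog", "NN"), ("can", "MD"), ("play", "VB")],
    [("the", "DT"), ("can", "NN"), ("fell", "VB")],
]

tag_counts = Counter()         # count(t), including the "<s>" start symbol
emission_counts = Counter()    # count(t, w)
transition_counts = Counter()  # count(t_prev, t)

for sentence in corpus:
    prev = "<s>"
    tag_counts[prev] += 1
    for word, tag in sentence:
        tag_counts[tag] += 1
        emission_counts[(tag, word)] += 1
        transition_counts[(prev, tag)] += 1
        prev = tag


def p_emission(word, tag):
    """MLE estimate of P(word | tag); assumes the tag occurs in the corpus."""
    return emission_counts[(tag, word)] / tag_counts[tag]


def p_transition(tag, prev):
    """MLE estimate of P(tag | prev); assumes prev occurs in the corpus."""
    return transition_counts[(prev, tag)] / tag_counts[prev]


print(p_emission("can", "NN"), p_transition("NN", "DT"), p_emission("play", "NN"))
```

The last value printed is zero because `play` never appears as `NN` in the toy corpus, which is exactly the case smoothing is meant to handle. Note that this sketch has no end-of-sentence symbol, so the transition estimates for sentence-final tags do not sum to one.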

Making Predictions using HMM (Decoding)

- Simple idea: for each word, take the tag that maximizes $P(w_i \mid t_i)\,P(t_i \mid t_{i-1})$. Do it left-to-right, greedily.
- However, this is wrong. The goal is to find $\arg\max_{t} P(w \mid t)\,P(t)$, not to maximize the individual $P(w_i \mid t_i)\,P(t_i \mid t_{i-1})$ terms.
- Correct way: consider all possible tag combinations, evaluate them, and take the max.
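To see why the greedy approach can fail, the sketch below compares it with exhaustive search on a two-word sentence. The probabilities in `start`, `trans`, and `emit` are made up and deliberately chosen so that the locally best first tag leads to a worse overall sequence; the function names are hypothetical.

```python
from itertools import product

TAGS = ["NN", "VB"]
start = {"NN": 0.6, "VB": 0.4}                      # P(tag | <s>)
trans = {("NN", "NN"): 0.9, ("NN", "VB"): 0.1,      # trans[(prev, tag)] = P(tag | prev)
         ("VB", "NN"): 0.1, ("VB", "VB"): 0.9}
emit = {("NN", "can"): 0.5, ("VB", "can"): 0.5,     # emit[(tag, word)] = P(word | tag)
        ("NN", "play"): 0.1, ("VB", "play"): 0.6}


def step(prev, tag, word):
    """P(word | tag) * P(tag | prev), using the start distribution when prev is None."""
    p_tag = start[tag] if prev is None else trans[(prev, tag)]
    return p_tag * emit[(tag, word)]


def score(words, tags):
    """Joint score of a full tag sequence."""
    prob, prev = 1.0, None
    for word, tag in zip(words, tags):
        prob *= step(prev, tag, word)
        prev = tag
    return prob


def greedy(words):
    """Pick the locally best tag for each word, left to right."""
    tags = []
    for word in words:
        prev = tags[-1] if tags else None
        tags.append(max(TAGS, key=lambda tag: step(prev, tag, word)))
    return tuple(tags)


def exhaustive(words):
    """Evaluate all |Tags|^M sequences and keep the best one."""
    return max(product(TAGS, repeat=len(words)), key=lambda tags: score(words, tags))


words = ["can", "play"]
print(greedy(words), score(words, greedy(words)))
print(exhaustive(words), score(words, exhaustive(words)))
```

Exhaustive search finds the better sequence here, but it enumerates every combination, so it is only feasible for very short sentences.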

Viterbi Algorithm

- Use dynamic programming. We can still proceed sequentially, but need to be careful.
- POS tag: `can play`
- Best tag for `can` is: $\arg\max_{t} P(\text{can} \mid t)\,P(t \mid \text{<s>})$
- Suppose the best tag for `can` is `NN`. To get the tag for `play`, we could take $\arg\max_{t} P(\text{play} \mid t)\,P(t \mid \text{NN})$, but this is wrong.
- Instead, we keep track of the scores for each tag for `can` and check them against the different tags for `play`.
- E.g. the score for tagging `play` as $t$ is $s(t \mid \text{play}) = P(\text{play} \mid t)\,\max_{t'} P(t \mid t')\,s(t' \mid \text{can})$, where $s(t' \mid \text{can}) = P(\text{can} \mid t')\,P(t' \mid \text{<s>})$
- Complexity: $O(T^2 N)$, where $T$ is the size of the tagset and $N$ is the length of the sequence. The table is a $T \times N$ matrix, and each cell performs $T$ operations.
- The Viterbi algorithm works because of the independence assumptions that decompose the problem.

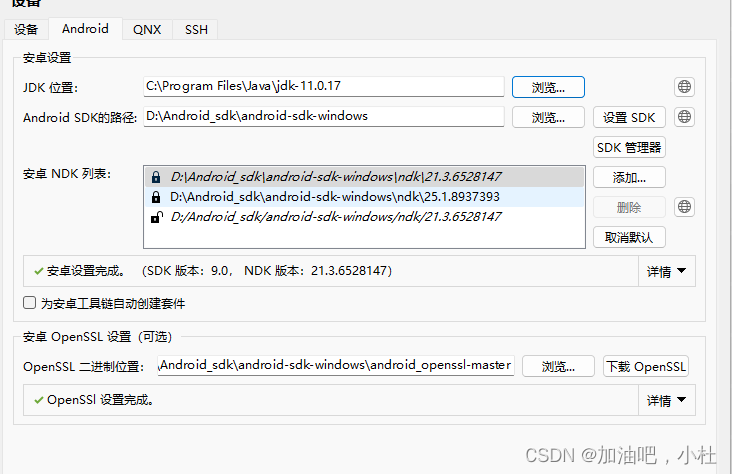

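Code (Python):

    import numpy as np

    def viterbi(w, pi, A, O):
        # w: word indices; pi[t] = P(t | <s>); A[t_last, t] = P(t | t_last); O[word, t] = P(word | t)
        M, T = len(w), len(pi)
        alpha = np.zeros((M, T))            # best score of any path ending in tag t at position i
        back = np.zeros((M, T), dtype=int)  # backpointer to the best previous tag
        for t in range(T):
            alpha[0, t] = pi[t] * O[w[0], t]
        for i in range(1, M):
            for t_i in range(T):
                for t_last in range(T):
                    s = alpha[i-1, t_last] * A[t_last, t_i] * O[w[i], t_i]
                    if s > alpha[i, t_i]:
                        alpha[i, t_i] = s
                        back[i, t_i] = t_last
        tags = [int(np.argmax(alpha[M-1, :]))]  # best final tag
        for i in range(M-1, 0, -1):             # follow backpointers to recover the path
            tags.append(int(back[i, tags[-1]]))
        return tags[::-1]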

- Good practices:

  - Work with log probabilities to prevent underflow

  - Vectorization (use matrix-vector operations)
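Both practices can be combined in one routine. The following is a minimal sketch, assuming a non-empty observation sequence and strictly positive (smoothed) probabilities so that the logarithms are finite. The function name `viterbi_log`, the array layout, and the toy numbers are assumptions made for this example.

```python
import numpy as np


def viterbi_log(obs, log_pi, log_A, log_O):
    """Viterbi in log space.

    obs: list of word indices; log_pi: shape (T,);
    log_A[t_prev, t]: shape (T, T); log_O[word, t]: shape (V, T).
    """
    M, T = len(obs), len(log_pi)
    alpha = np.empty((M, T))            # best log score of a path ending in each tag
    back = np.zeros((M, T), dtype=int)  # backpointers
    alpha[0] = log_pi + log_O[obs[0]]
    for i in range(1, M):
        # scores[t_prev, t] = alpha[i-1, t_prev] + log_A[t_prev, t], built by broadcasting
        scores = alpha[i - 1][:, None] + log_A
        back[i] = scores.argmax(axis=0)
        alpha[i] = scores.max(axis=0) + log_O[obs[i]]
    tags = [int(alpha[-1].argmax())]
    for i in range(M - 1, 0, -1):       # follow backpointers from the last position
        tags.append(int(back[i, tags[-1]]))
    return tags[::-1], float(alpha[-1].max())


# Made-up toy model with 2 tags and 2 words.
pi = np.array([0.6, 0.4])
A = np.array([[0.9, 0.1], [0.1, 0.9]])
O = np.array([[0.5, 0.5], [0.1, 0.6]])  # rows are words, columns are tags
path, log_prob = viterbi_log([0, 1], np.log(pi), np.log(A), np.log(O))
print(path, np.exp(log_prob))
```

Sums of logs replace products of probabilities, so long sentences no longer underflow, and the inner loop over previous tags becomes a single broadcasted array operation.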

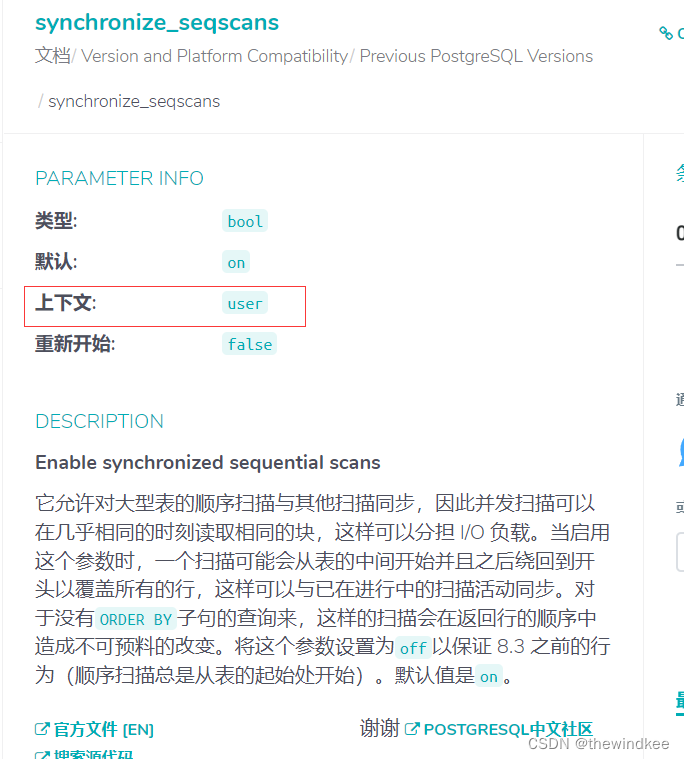

HMMs in Practice

- The examples so far are based on tag bigrams; this is called a first-order HMM
- State-of-the-art models use tag trigrams; this is called a second-order HMM
  - $P(t) = \prod_{i=1}^{M} P(t_i \mid t_{i-1}, t_{i-2})$
  - Viterbi is now $O(T^3 N)$
- Need to deal with sparsity: some tag trigram sequences might not be present in the training data

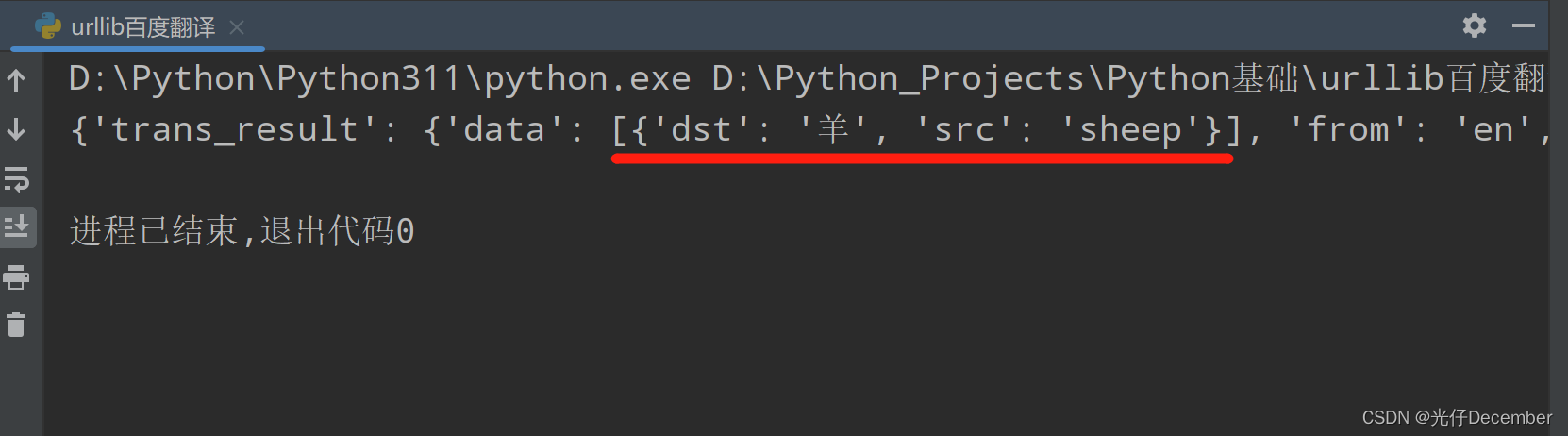

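- Use interpolation: $P(t_i \mid t_{i-1}, t_{i-2}) = \lambda_3 \hat{P}(t_i \mid t_{i-1}, t_{i-2}) + \lambda_2 \hat{P}(t_i \mid t_{i-1}) + \lambda_1 \hat{P}(t_i)$, where $\lambda_1 + \lambda_2 + \lambda_3 = 1$
- With additional features, an HMM model can reach 96.5% accuracy on the Penn Treebank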
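Interpolation mixes trigram, bigram, and unigram tag estimates so that an unseen trigram still receives some probability. A minimal sketch follows; the function name, the dictionary layout, and the weights in `lambdas` are hypothetical, and in practice the weights would be tuned on held-out data.

```python
def interpolated_trigram(t, t_prev, t_prev2, unigram, bigram, trigram,
                         lambdas=(0.1, 0.3, 0.6)):
    """lambda_1 * P(t) + lambda_2 * P(t | t_prev) + lambda_3 * P(t | t_prev, t_prev2)."""
    l1, l2, l3 = lambdas
    if abs(l1 + l2 + l3 - 1.0) > 1e-9:
        raise ValueError("interpolation weights must sum to 1")
    return (l1 * unigram.get(t, 0.0)
            + l2 * bigram.get((t_prev, t), 0.0)
            + l3 * trigram.get((t_prev2, t_prev, t), 0.0))


# Made-up estimates: the trigram (VB, DT, NN) was never seen in training.
unigram = {"NN": 0.4}
bigram = {("DT", "NN"): 0.7}
trigram = {}
p = interpolated_trigram("NN", "DT", "VB", unigram, bigram, trigram)
print(p)
```

Even though the trigram estimate is zero in this toy case, the interpolated probability stays positive because the bigram and unigram terms contribute.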

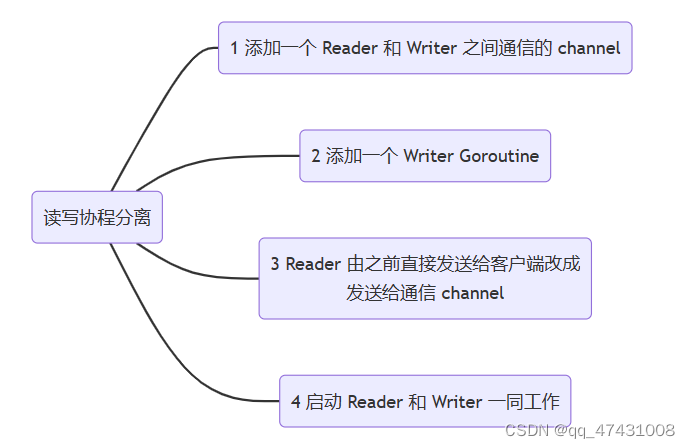

Generative vs. Discriminative Taggers

- HMM is generative: it models the joint probability $P(w, t) = P(w \mid t)\,P(t)$
  - A trained HMM can generate data (sentences)
  - Allows for unsupervised HMMs: learn the model without any tagged data
- Discriminative models describe $P(t \mid w)$ directly
  - Support richer feature sets, and generally give better accuracy when trained on large supervised datasets
  - E.g. Maximum Entropy Markov Model (MEMM), Conditional Random Field (CRF)
  - Most deep learning models of sequences are discriminative
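Because an HMM is generative, sampling from it is straightforward: draw a tag from the transition distribution, then draw a word from that tag's emission distribution, and repeat. The sketch below illustrates this with made-up distributions; `sample_sentence` and the nested-dictionary layout are assumptions made for the example.

```python
import random


def sample_sentence(start, trans, emit, length, seed=0):
    """Sample (words, tags) of a fixed length from a first-order HMM."""
    rng = random.Random(seed)

    def draw(dist):
        # dist maps outcome -> probability
        outcomes = list(dist)
        return rng.choices(outcomes, weights=[dist[o] for o in outcomes])[0]

    words, tags = [], []
    dist = start                        # P(tag | <s>) for the first position
    for _ in range(length):
        tag = draw(dist)
        tags.append(tag)
        words.append(draw(emit[tag]))   # P(word | tag)
        dist = trans[tag]               # P(next tag | tag)
    return words, tags


# Made-up toy model.
start = {"DT": 0.8, "NN": 0.2}
trans = {"DT": {"NN": 1.0},
         "NN": {"VB": 0.7, "NN": 0.3},
         "VB": {"DT": 0.6, "NN": 0.4}}
emit = {"DT": {"the": 0.7, "a": 0.3},
        "NN": {"dog": 0.5, "can": 0.5},
        "VB": {"plays": 0.6, "fell": 0.4}}

words, tags = sample_sentence(start, trans, emit, length=4)
print(list(zip(words, tags)))
```

A discriminative tagger models only $P(t \mid w)$, so it has no distribution over words to sample from in this way.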