Docker Networking

I. Introduction to Docker networking

Networking is one of the more important Docker fundamentals, and it takes hands-on experimentation to understand it well.

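After the Docker service is installed and started, the host has three network interfaces, of which docker0 is the one Docker creates by default: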

[root@k8s-m1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:4a:64 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.140/24 brd 192.168.2.255 scope global ens32

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:36:92:47:11 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.1/16 brd 172.16.255.255 scope global docker0

valid_lft forever preferred_lft forever

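Here, lo (127.0.0.1) is the loopback interface. We usually run ping 127.0.0.1 to check whether the local TCP/IP stack is working. If that ping fails, the local TCP/IP stack is almost certainly at fault and the machine cannot reach any network; in practice this is rare. (This is part of the standard network troubleshooting sequence and may come up in interviews.)

ens32 (eth0 on some systems) carries 192.168.2.140, the private IP assigned to this host.

docker0 (172.16.0.1) is the Docker bridge. Containers attach to it through veth pairs, so it can be thought of as a virtual switch (corrections are welcome if you see it differently).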

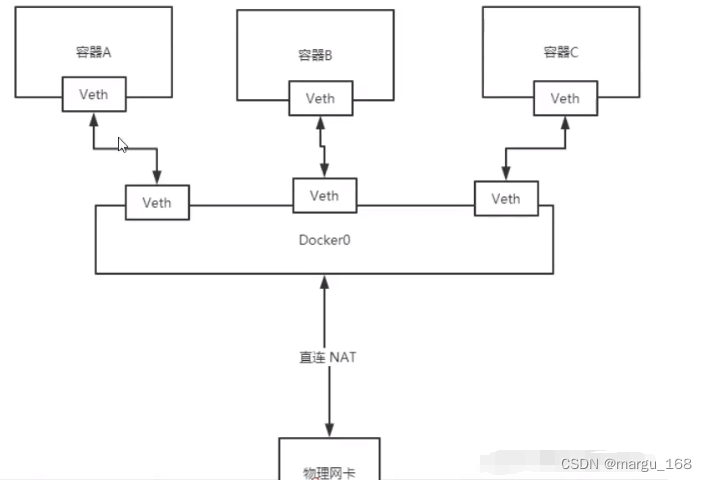

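As covered in the earlier posts, Docker is built on Linux kernel features (cgroups and namespaces). There are six namespaces: user, uts, mount, ipc, pid, and net; the net namespace isolates network devices and the protocol stack. The Linux kernel can emulate layer 2 and layer 3 devices, and docker0 on the host is a layer 2 virtual device with switching capability, implemented in software (similar to a switch). Container network interfaces come in pairs, like the two ends of a network cable: one end sits inside the container and the other is attached to the docker0 bridge. The brctl tool can be used to attach an interface to a bridge in this way.

Below we use the ip command to work with network namespaces and simulate container-to-container communication. (When namespaces are managed with the ip command, only the network namespace is isolated; all other namespaces remain shared.)

# Add network namespaces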

[root@k8s-m1 ~]# ip netns add ns1

[root@k8s-m1 ~]# ip netns add ns2

[root@k8s-m1 ~]# ip netns ls

ns2

ns1

# List the interfaces in each network namespace

[root@k8s-m1 ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

[root@k8s-m1 ~]# ip netns exec ns2 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

# Create a veth pair

[root@k8s-m1 ~]# ip link add name veth1.1 type veth peer name veth1.2

[root@k8s-m1 ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:89:4a:64 brd ff:ff:ff:ff:ff:ff

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:36:92:47:11 brd ff:ff:ff:ff:ff:ff

412: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ae:76:6f:85:4d:58 brd ff:ff:ff:ff:ff:ff

413: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 06:3b:b5:ad:20:dd brd ff:ff:ff:ff:ff:ff

# veth1.1's peer is veth1.2 and vice versa. Both interfaces currently live on the physical host and are down. Now move veth1.2 into the ns1 namespace

[root@k8s-m1 ~]# ip link set dev veth1.2 netns ns1

[root@k8s-m1 ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:50:56:89:4a:64 brd ff:ff:ff:ff:ff:ff

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:36:92:47:11 brd ff:ff:ff:ff:ff:ff

4: dummy0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1

413: veth1.1@if412: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 06:3b:b5:ad:20:dd brd ff:ff:ff:ff:ff:ff link-netnsid 2

# A virtual interface can belong to only one network namespace. Since veth1.2 was moved into ns1, only veth1.1 remains on the host, and ns1 now contains veth1.2, as shown below.

[root@k8s-m1 ~]# ip netns exec ns1 ip a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

412: veth1.2@if413: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ae:76:6f:85:4d:58 brd ff:ff:ff:ff:ff:ff link-netnsid 0

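# An interface can also be renamed. Here veth1.2 is renamed to eth0 inside ns1, which is why the later ifconfig command in ns1 refers to eth0: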

[root@k8s-m1 ~]# ip netns exec ns1 ip link set dev veth1.2 name eth0

# Assign an address and bring the interface up so the host can talk to ns1

[root@k8s-m1 ~]# ifconfig veth1.1 10.0.0.1/24 up

# Check the interface after bringing it up, then test

[root@k8s-m1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:4a:64 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.140/24 brd 192.168.2.255 scope global ens32

valid_lft forever preferred_lft forever

inet 192.168.2.250/24 scope global secondary ens32

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:36:92:47:11 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.1/16 brd 172.16.255.255 scope global docker0

valid_lft forever preferred_lft forever

413: veth1.1@if412: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state LOWERLAYERDOWN group default qlen 1000

link/ether 06:3b:b5:ad:20:dd brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet 10.0.0.1/24 brd 10.0.0.255 scope global veth1.1

valid_lft forever preferred_lft forever

[root@k8s-m1 ~]# ip netns exec ns1 ifconfig eth0 10.0.0.2/24 up

[root@k8s-m1 ~]# ping 10.0.0.2

PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.199 ms

^C

--- 10.0.0.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.199/0.199/0.199/0.000 ms

# Next, move veth1.1 into the ns2 network namespace and get ns1 and ns2 talking to each other

[root@k8s-m1 ~]# ip link set dev veth1.1 netns ns2

[root@k8s-m1 ~]# ip netns exec ns2 ifconfig veth1.1 10.0.0.3/24 up

# Note: the interface in ns1 now has IP 10.0.0.2 and the one in ns2 has 10.0.0.3

[root@k8s-m1 ~]# ip netns exec ns2 ping 10.0.0.2

PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.080 ms

64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.062 ms

[root@k8s-m1 ~]# ip netns exec ns1 ping 10.0.0.3

PING 10.0.0.3 (10.0.0.3) 56(84) bytes of data.

64 bytes from 10.0.0.3: icmp_seq=1 ttl=64 time=0.151 ms

64 bytes from 10.0.0.3: icmp_seq=2 ttl=64 time=0.046 ms
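The steps above can be collected into one small script. The following is a minimal sketch, not the exact procedure used in this post: it uses ip addr and ip link in place of ifconfig, skips the intermediate host-to-ns1 test and the rename to eth0, and introduces a helper named run and an APPLY variable that are assumptions of this sketch. By default it only prints the commands; set APPLY=1 and run it as root to execute them.

```bash
# Print each command by default; execute it only when APPLY=1 (needs root).
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

veth_demo() {
  run ip netns add ns1
  run ip netns add ns2
  # Create the pair on the host, then move one end into each namespace.
  run ip link add name veth1.1 type veth peer name veth1.2
  run ip link set dev veth1.2 netns ns1
  run ip link set dev veth1.1 netns ns2
  # Address both ends and bring them up.
  run ip netns exec ns1 ip addr add 10.0.0.2/24 dev veth1.2
  run ip netns exec ns2 ip addr add 10.0.0.3/24 dev veth1.1
  run ip netns exec ns1 ip link set dev veth1.2 up
  run ip netns exec ns2 ip link set dev veth1.1 up
  # ns2 should now be able to reach ns1.
  run ip netns exec ns2 ping -c 2 10.0.0.2
}

veth_cleanup() {
  # Deleting a namespace also destroys the veth end inside it.
  run ip netns del ns1
  run ip netns del ns2
}

veth_demo
veth_cleanup
```

Keeping the dry run as the default makes it safe to read the plan before touching the host's network configuration.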

II. Docker network types

Docker supports several network types. Three are provided by default after installation:

[root@k8s-m1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

fe2b16d142c1 bridge bridge local

a3ba9b8f4349 host host local

b3903c998a45 none null local

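1) none network

No network is configured. Pass --network=none when creating the container to use it. This is a closed network, suited to applications with high security requirements that do not need network access.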

docker run -it --name docker-none --net=none centos /bin/bash

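2) host network

The container shares the host's network: it uses the host's interfaces directly and lives in the same network namespace. If port 80 on the host is already in use, the container cannot use it. Pass --network=host to use the host network.

Characteristics of the host network

The host's network is shared, so there is no network isolation and no performance loss (no forwarding), and network problems are easy to troubleshoot. On the other hand, ports are harder to manage and port conflicts must be taken into account.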

docker run -it --name docker-host --net=host centos /bin/bash

[root@k8s-m1 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:50:56:89:4a:64 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.140/24 brd 192.168.2.255 scope global ens32

valid_lft forever preferred_lft forever

inet 192.168.2.250/24 scope global secondary ens32

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:36:92:47:11 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.1/16 brd 172.16.255.255 scope global docker0

valid_lft forever preferred_lft forever

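Inside the container, all of the host's interfaces are visible, and the hostname is that of the Docker host.

3) bridge network

Docker creates a bridge named docker0 at install time. This is the default network mode; any other network must be selected with --network. With docker run -p, Docker adds DNAT rules to iptables to implement port forwarding; they can be viewed with iptables -t nat -vnL. Before the new container is started, brctl show gives: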

[root@k8s-m1 ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.024236924711 no veth4dc0206

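When no containers are running, no interfaces are attached to docker0. (The veth4dc0206 listed above presumably belongs to a container that was already running on this host.)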

[root@k8s-m1 ~]# docker run -dit --name docker-bridge centos /bin/bash

260781f94aa25e90f788d5760da0780754a9be4462ce0e4c1ff88ceedf10dd76

[root@k8s-m1 ~]# brctl show

docker0 8000.024236924711 no veth4dc0206

vethf5a75fb

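After the container starts, a new interface, vethf5a75fb, is attached to docker0. It is the host-side virtual interface for this container:

Enter the container and look at its network configuration: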

[root@k8s-m1 ~]# docker exec -it 26 /bin/bash

[root@260781f94aa2 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

416: eth0@if417: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:10:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.0.2/16 brd 172.16.255.255 scope global eth0

valid_lft forever preferred_lft forever

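The container has an interface named eth0@if417, which is not the same as the one attached to docker0. Why?

The two interfaces are in fact a veth pair. A veth pair is a special kind of network device that always comes in pairs; think of it as two NICs joined by a virtual cable. One end (eth0@if417) is in the container and the other end (vethf5a75fb) is on the docker0 bridge, which is what it means for vethf5a75fb to be attached to docker0.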
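The @if417 suffix is the interface index of the peer, so the host end of the pair can be found by looking up index 417 on the host. A minimal sketch, assuming the one-line-per-interface format printed by ip -o link; the function name ifname_by_index is made up for this example:

```bash
# Read `ip -o link` output on stdin and print the name of the interface
# whose index equals $1, with any "@ifN" peer suffix removed.
ifname_by_index() {
  awk -v idx="$1" -F': ' '$1 == idx { name = $2; sub(/@.*/, "", name); print name }'
}
```

On the host, running ip -o link | ifname_by_index 417 should print the name of the veth attached to docker0 for this container.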

[root@k8s-m1 ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "69c88fd63b5337dcd13ef57e84f3d24849308684bf773adf3a0c8c1fff3793e3",

"Created": "2023-05-22T21:37:27.731395691+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.16.0.0/16",

"Gateway": "172.16.0.1"

}

]

},

.....

"Containers": {

"260781f94aa25e90f788d5760da0780754a9be4462ce0e4c1ff88ceedf10dd76": {

"Name": "docker-bridge",

"EndpointID": "9af942133a39d79cc1309dc8e7843c32c824325faf5ddde1ef59922fa908692d",

"MacAddress": "02:42:ac:10:00:02",

"IPv4Address": "172.16.0.2/16",

"IPv6Address": ""

},

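We can also see that the docker-bridge container has IP 172.16.0.2/16, and that the bridge network is configured with subnet 172.16.0.0/16 and gateway 172.16.0.1 (the gateway is docker0). When a container is created without specifying a network mode, Docker automatically assigns it an IP from 172.16.0.0/16. All containers started without an explicit network go through docker0; Docker gives each one an available IP address by default, and these addresses can reach one another. The interaction model is: each container's eth0 connects through its veth peer to docker0, which forwards traffic between them.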
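One detail in the output above: the container's MAC address 02:42:ac:10:00:02 is the prefix 02:42 followed by the four bytes of 172.16.0.2 in hexadecimal. The sketch below reproduces that mapping. It only describes what the output in this post shows; whether a given Docker release derives MACs this way is an assumption to verify, and the function name ipv4_to_docker_mac is made up for this example.

```bash
# Build a MAC of the form 02:42:aa:bb:cc:dd from a dotted IPv4 address a.b.c.d.
ipv4_to_docker_mac() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  printf '02:42:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
}

ipv4_to_docker_mac 172.16.0.2    # prints 02:42:ac:10:00:02
```

Comparing the result with the MacAddress field of docker network inspect is a quick way to tell which container an address on the bridge belongs to.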

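4) other container

The container uses another container's network stack to reach other networks, which amounts to sharing it (selected with --network=container:<name or ID>). The two containers share only the network namespace; their other namespaces stay separate.

5) overlay network

Overlay networks are mainly used for container communication across multiple hosts. Their characteristics: cross-host communication, no port management needed, and no need to worry about IP conflicts. A Docker overlay network needs a key-value store to hold network state, including Network, Endpoint, IP, and so on. Consul, Etcd, and ZooKeeper are all key-value stores that Docker supports (this applies to overlay networks set up without swarm mode on older Docker releases; swarm mode keeps this state itself). Overlay networking is relatively complex and will be covered separately.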

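III. Linking containers

In a microservice deployment, a service's IP address may change when it restarts, while the registry identifies each service uniquely by its service name. We therefore want to wire containers together by container name, and --link can do this.

First, without --link, containers cannot reach each other by name. Below, centos1 can ping centos2's IP directly but cannot ping centos2 by name:

# Start two containers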

[root@k8s-m1 ~]# docker run -id --name centos1 centos /bin/bash

d66fd0ddc6e319adc0041799e7a4b636369a6cf7517b11cd3258518227786434

[root@k8s-m1 ~]# docker run -id --name centos2 centos /bin/bash

7fc4ca29683e352cb41cac5f65492fc4d8d897f03ad48af7d2ea3253110d9a3b

# Look up the container IPs (alternatively, enter each container and run ip a)

[root@k8s-m1 ~]# docker inspect --format='{{.Name}}-{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' $(docker ps -aq)

/centos2-172.16.0.4

/centos1-172.16.0.3

[root@k8s-m1 ~]# docker exec -it d6 /bin/bash

[root@d66fd0ddc6e3 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

420: eth0@if421: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:10:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.16.0.3/16 brd 172.16.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@d66fd0ddc6e3 /]# ping 172.16.0.4

PING 172.16.0.4 (172.16.0.4) 56(84) bytes of data.

64 bytes from 172.16.0.4: icmp_seq=1 ttl=64 time=0.207 ms

[root@d66fd0ddc6e3 /]# ping centos2

ping: centos2: Name or service not known

With the --link option, a container can be reached by name. centos03 is linked to centos1, so centos03 can ping centos1 by its container name, but the reverse does not work: centos1 cannot ping centos03 by name.

[root@k8s-m1 ~]# docker run -id --name centos03 --link centos1 centos /bin/bash

d12dd215e6af476862119de18ec2ce25ea5b2c47ae23188b46c978ebed971978

[root@k8s-m1 ~]# docker exec -it centos1 ping centos03

ping: centos03: Name or service not known

[root@k8s-m1 ~]# docker exec -it centos03 ping centos1

PING centos1 (172.16.0.3) 56(84) bytes of data.

64 bytes from centos1 (172.16.0.3): icmp_seq=1 ttl=64 time=0.122 ms

64 bytes from centos1 (172.16.0.3): icmp_seq=2 ttl=64 time=0.080 ms

^C

--- centos1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.080/0.101/0.122/0.021 ms

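--link works by adding an entry to the hosts file of the centos03 container that maps the name of the linked container, centos1, to its IP address. Because the default docker0 bridge does not support access by container name, and the mapping only works in one direction, --link is no longer the recommended way to connect containers; user-defined networks are preferred.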
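The hosts-file mechanism can be mimicked with a few lines of shell. This is a rough sketch of a name lookup against a hosts-format file, not how the system resolver is actually implemented; the function name hosts_lookup and the sample entry are assumptions made for illustration.

```bash
# Print the IP that a hosts-format file (default /etc/hosts) maps NAME to.
# Usage: hosts_lookup NAME [FILE]
hosts_lookup() {
  name=$1
  file=${2:-/etc/hosts}
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }    # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
  ' "$file"
}

# Illustrative entry of the kind --link would add for centos1.
sample=$(mktemp)
printf '127.0.0.1\tlocalhost\n172.16.0.3\tcentos1 d66fd0ddc6e3\n' > "$sample"
hosts_lookup centos1 "$sample"    # prints 172.16.0.3
rm -f "$sample"
```

Running cat /etc/hosts inside the linking container shows the real entry. Since only that container's hosts file is modified, the lookup works in one direction only.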

IV. User-defined networks

The docker network command and its commonly used subcommands:

[root@k8s-m1 ~]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

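Usage (note that the 192.168.0.0/16 subnet used here contains the host's own LAN address 192.168.2.140; on a real system choose a range that does not overlap the host network):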

[root@k8s-m1 ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 net-test

0dff816653c09a42f25ba5e355d37aa647676c8c1cc6e183a37c0176c041e69b

[root@k8s-m1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

aef5eac497ff auth_default bridge local

69c88fd63b53 bridge bridge local

85211ff12918 host host local

0dff816653c0 net-test bridge local

c0c03af40894 none null local

# Inspect the newly created net-test network:

[root@k8s-m1 ~]# docker network inspect net-test

[

{

"Name": "net-test",

"Id": "0dff816653c09a42f25ba5e355d37aa647676c8c1cc6e183a37c0176c041e69b",

"Created": "2023-06-02T14:10:50.091092339+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

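Containers created on the same user-defined network can reach each other both by container IP and by container name:

# Create two containers on the same user-defined network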

[root@k8s-m1 ~]# docker run -d --name centos-nettest-01 --net net-test -it centos /bin/bash

1274f17fb1a06c4c956ee608a3ead9faa2bc3cd7d151395202bf3383433c246c

[root@k8s-m1 ~]# docker run -d --name centos-nettest-02 --net net-test -it centos /bin/bash

3a27a41ee07bce3b5f5dcf5917c864d167cd78790ff11d8b9ac8950d27b95673

[root@k8s-m1 ~]# docker exec -it centos-nettest-01 ping centos-nettest-02

PING centos-nettest-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from centos-nettest-02.net-test (192.168.0.3): icmp_seq=1 ttl=64 time=0.138 ms

64 bytes from centos-nettest-02.net-test (192.168.0.3): icmp_seq=2 ttl=64 time=0.108 ms

64 bytes from centos-nettest-02.net-test (192.168.0.3): icmp_seq=3 ttl=64 time=0.104 ms

[root@k8s-m1 ~]# docker exec -it centos-nettest-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.113 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.075 ms

^C

--- 192.168.0.3 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.075/0.094/0.113/0.019 ms
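Name resolution on a user-defined network is handled by Docker's embedded DNS server, which containers on such networks reach at 127.0.0.11. One way to confirm this is to look at the container's resolv.conf. A minimal sketch; the function name uses_embedded_dns is made up for this example:

```bash
# Succeed if the given resolv.conf (default /etc/resolv.conf) lists
# Docker's embedded DNS server, 127.0.0.11, as a nameserver.
uses_embedded_dns() {
  file=${1:-/etc/resolv.conf}
  grep -Eq '^[[:space:]]*nameserver[[:space:]]+127\.0\.0\.11([[:space:]]|$)' "$file"
}
```

For example, save the output of docker exec centos-nettest-01 cat /etc/resolv.conf to a file and pass that file to uses_embedded_dns. A container on the default bridge is expected to fail the same check, which matches the name lookup failure seen earlier with centos1 and centos2.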

V. Connecting containers across networks

Without the connect command, containers on different networks cannot reach each other.

[root@k8s-m1 ~]# docker inspect --format='{{.Name}}-{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' $(docker ps -aq)

/centos-nettest-02-192.168.0.3

/centos-nettest-01-192.168.0.2

/centos03-172.16.0.5

/centos2-172.16.0.4

/centos1-172.16.0.3

[root@k8s-m1 ~]# docker exec -it centos-nettest-01 ping 172.16.0.3

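PING 172.16.0.3 (172.16.0.3) 56(84) bytes of data. (hangs with no reply)

To let containers on different Docker networks talk to each other, attach the container to the network the other container is on with docker network connect.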

docker network connect net-test centos1

[root@k8s-m1 ~]# docker network inspect net-test

[

{

"Name": "net-test",

"Id": "0dff816653c09a42f25ba5e355d37aa647676c8c1cc6e183a37c0176c041e69b",

"Created": "2023-06-02T14:10:50.091092339+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"1274f17fb1a06c4c956ee608a3ead9faa2bc3cd7d151395202bf3383433c246c": {

"Name": "centos-nettest-01",

"EndpointID": "7130e74e57ebdf9cd5bc6832f1d9f03870eaf7bbed1686b606ef06652a2a55da",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"3a27a41ee07bce3b5f5dcf5917c864d167cd78790ff11d8b9ac8950d27b95673": {

"Name": "centos-nettest-02",

"EndpointID": "2d23097207b0955236b439dceea505f5e87beafd7d2e45d1025bd01a8c041a85",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"d66fd0ddc6e319adc0041799e7a4b636369a6cf7517b11cd3258518227786434": {

"Name": "centos1",

"EndpointID": "8c749edb2dfb38882811ea12c3886a4adc7500a7aca44ebde1480079bd54dbd2",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@k8s-m1 ~]# docker inspect --format='{{.Name}}-{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' $(docker ps -aq)

/centos-nettest-02-192.168.0.3

/centos-nettest-01-192.168.0.2

/centos03-172.16.0.5

/centos2-172.16.0.4

/centos1-172.16.0.3192.168.0.4

......

[root@k8s-m1 ~]# docker exec -it centos-nettest-01 ping centos1

PING centos1 (192.168.0.4) 56(84) bytes of data.

64 bytes from centos1.net-test (192.168.0.4): icmp_seq=1 ttl=64 time=0.309 ms

64 bytes from centos1.net-test (192.168.0.4): icmp_seq=2 ttl=64 time=0.111 ms

^C

--- centos1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.111/0.210/0.309/0.099 ms

[root@k8s-m1 ~]# docker exec -it centos-nettest-01 ping 192.168.0.4

PING 192.168.0.4 (192.168.0.4) 56(84) bytes of data.

64 bytes from 192.168.0.4: icmp_seq=1 ttl=64 time=0.201 ms

64 bytes from 192.168.0.4: icmp_seq=2 ttl=64 time=0.112 ms

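As shown above, the command assigns an additional IP address, 192.168.0.4, to the centos1 container. (In the docker inspect output, 172.16.0.3 and 192.168.0.4 appear run together because the format template prints each network's address with no separator.) Containers on the net-test network can then reach centos1 by name or by the new IP address.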
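To see at a glance which of the two networks an address belongs to, a small subnet check helps. A minimal sketch in plain shell arithmetic; the function names ip_to_int and in_cidr are made up for this example. Note the last call: the host's own address 192.168.2.140 also falls inside 192.168.0.0/16, so a subnet that does not overlap the host LAN would be a safer choice for net-test on a real system.

```bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 lies inside the CIDR block $2 (for example 192.168.0.0/16).
in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

in_cidr 192.168.0.4 192.168.0.0/16 && echo "192.168.0.4 is on net-test"
in_cidr 172.16.0.3 192.168.0.0/16 || echo "172.16.0.3 is not on net-test"
in_cidr 192.168.2.140 192.168.0.0/16 && echo "host address overlaps net-test"
```

All three messages are printed: centos1's new address is inside the net-test subnet, its original bridge address is not, and the host's LAN address overlaps the subnet chosen for net-test.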