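👨🎓 Personal homepage: 研学社's blog

💥💥💞💞 Welcome to this blog ❤️❤️💥💥

🏆 About this blog: 🌞🌞🌞 The posts aim to be rigorous in reasoning and clear in logic, for the readers' convenience.

⛳️ Motto: On a journey of a hundred miles, ninety is only the halfway point.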

📋📋📋 The contents of this post are as follows: 🎁🎁🎁

Contents

💥1 Overview

📚2 Results

2.1 DAGsvm

2.2 SVMtrial

🌈3 MATLAB Code Implementation

🎉4 References

💥1 Overview

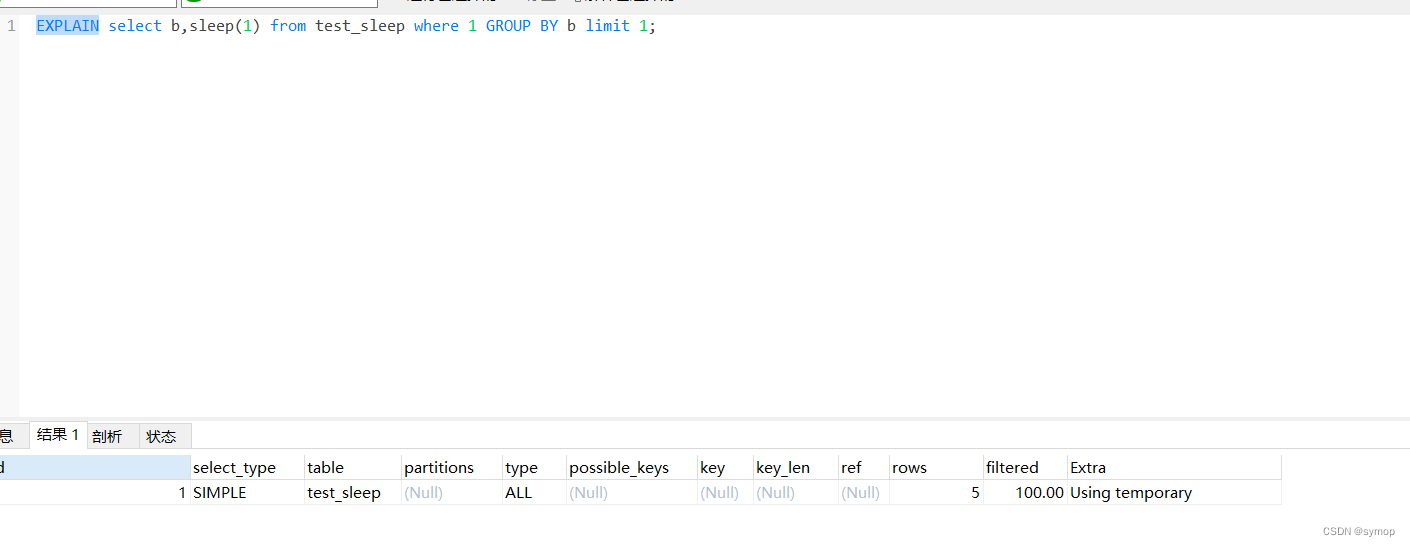

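This post aims to help visualize the classifier that is learned when a nonlinear C-SVM is trained to classify two-dimensional data (2 features) into 2 or more classes. C = Inf gives a hard-margin classifier, while C < Inf gives a 1-norm soft-margin classifier.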
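For reference, here is the textbook dual of the 1-norm soft-margin SVM (as presented in [2]), which shows where C enters. Every dual variable is capped at C, so setting C = Inf removes the cap and leaves the hard-margin problem. This is the standard formulation; the exact form coded in SVMtrial.m and DAGsvm.m is not shown in this post.

$$
\max_{\mathbf a}\ \sum_{i=1}^{N} a_i - \frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N} a_i a_j y_i y_j K(\mathbf x_i, \mathbf x_j)
\quad \text{s.t.} \quad 0 \le a_i \le C, \qquad \sum_{i=1}^{N} a_i y_i = 0
$$

The resulting classifier is $f(\mathbf x) = \sum_{i=1}^{N} a_i y_i K(\mathbf x_i, \mathbf x) + b$, and a point is assigned the label $\operatorname{sign} f(\mathbf x)$.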

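MATLAB's quadprog is used to solve for the dual variables a, with the solver set to the interior-point method. A Gaussian radial basis function (RBF) kernel produces the nonlinear decision boundaries.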
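quadprog minimizes ½aᵀHa + fᵀa subject to linear constraints and bounds (`quadprog(H,f,A,b,Aeq,beq,lb,ub,...)`), so the dual maximization has to be negated before it is handed to the solver. The Python sketch below only builds those inputs for a toy dataset. It writes the kernel as exp(-γ‖u − v‖²); how the post's kernel width `kw` maps onto `gamma` is not shown in the excerpt, so that parameterization is an assumption.

```python
import math

def rbf(u, v, gamma):
    """Gaussian RBF kernel exp(-gamma * ||u - v||^2) (assumed parameterization)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))

def dual_qp_inputs(X, y, C, gamma):
    """Arrays one would pass to quadprog(H, f, [], [], Aeq, beq, lb, ub).

    quadprog minimizes 0.5*a'*H*a + f'*a, so the dual objective is negated:
    H(i,j) = y_i*y_j*K(x_i,x_j), f = -1, Aeq*a = beq encodes sum_i a_i*y_i = 0,
    and lb <= a <= ub encodes 0 <= a_i <= C.
    """
    n = len(X)
    H = [[y[i] * y[j] * rbf(X[i], X[j], gamma) for j in range(n)] for i in range(n)]
    f = [-1.0] * n
    Aeq = [[float(yi) for yi in y]]
    beq = [0.0]
    lb = [0.0] * n
    ub = [float(C)] * n  # C = inf leaves the a_i unbounded above (hard margin)
    return H, f, Aeq, beq, lb, ub

# Toy data: two (+1) points near the origin and one (-1) point far away.
X = [(0.0, 0.0), (1.0, 0.0), (3.0, 3.0)]
y = [1, 1, -1]
H, f, Aeq, beq, lb, ub = dual_qp_inputs(X, y, C=math.inf, gamma=0.5)
print(H[0][1])  # y0*y1*exp(-0.5 * 1), about 0.6065
```

In MATLAB, the interior-point choice would go through the `options` argument of quadprog; the sketch stops at building the matrices.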

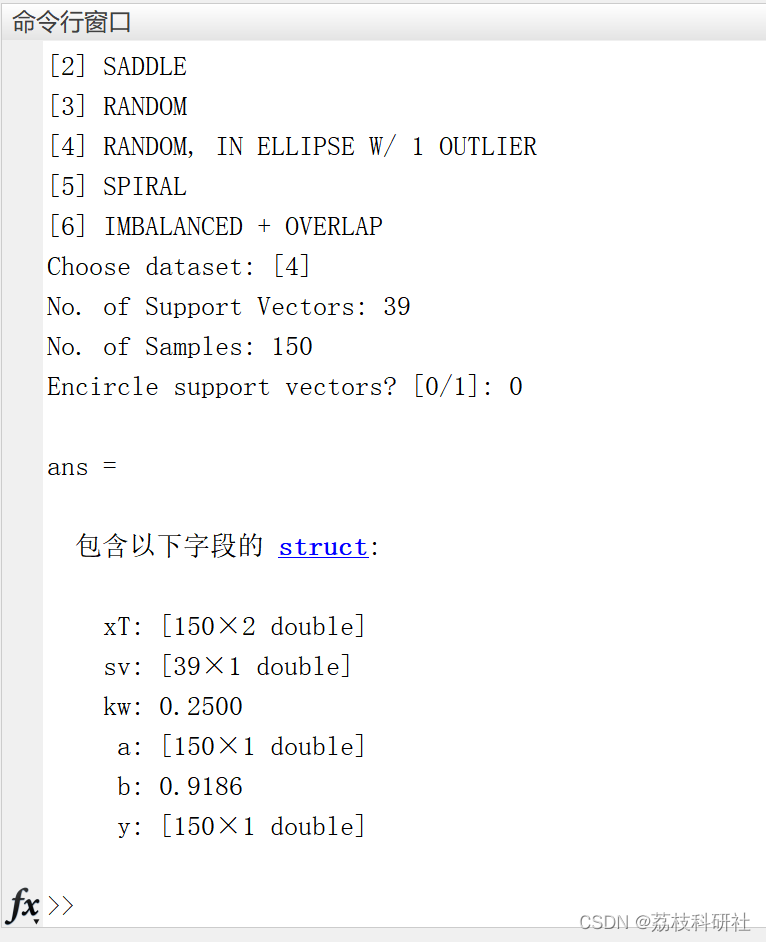

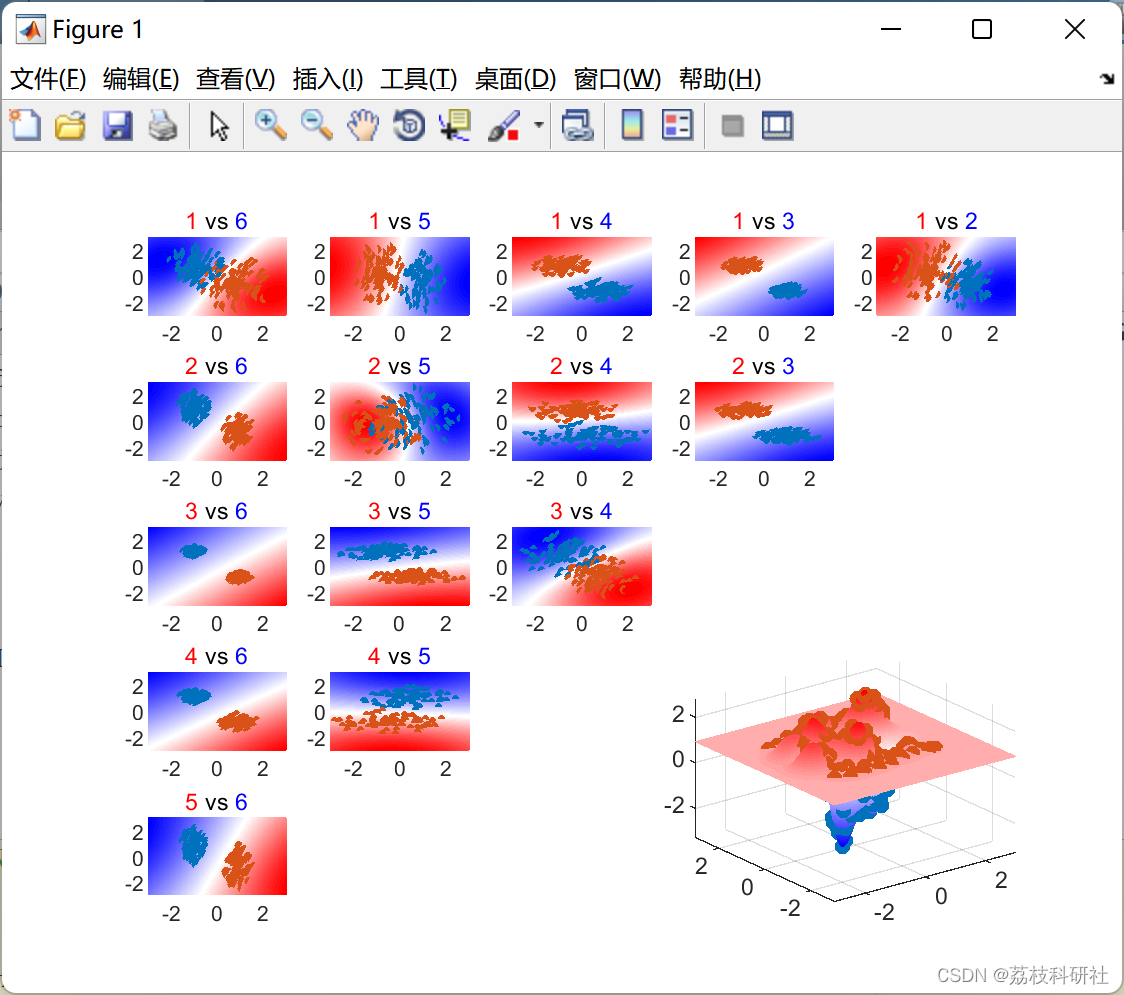

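In the binary classification file (SVMtrial.m), six different training sets are available. The outputs are a 3D mesh plot of the classifier and the number of support vectors.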
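The reported number of support vectors is presumably the count of training points whose dual variable a_i is nonzero. Because an interior-point solution never returns exact zeros, the Python sketch below assumes a small threshold `tol`; it also separates "free" support vectors (0 < a_i < C), which lie on the margin and are the usual choice for computing the bias b. The helper names are hypothetical, and the dual values in the example were solved by hand for this two-point toy problem.

```python
import math

def rbf(u, v, gamma):
    """Gaussian RBF kernel exp(-gamma * ||u - v||^2) (assumed parameterization)."""
    return math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(u, v)))

def split_support_vectors(a, C, tol=1e-6):
    """Support vectors have a_i > tol; free SVs additionally have a_i < C - tol."""
    sv = [i for i, ai in enumerate(a) if ai > tol]
    free = [i for i in sv if a[i] < C - tol]  # with C = inf every SV is free
    return sv, free

def bias(X, y, a, free, gamma):
    """Average b = y_s - sum_i a_i*y_i*K(x_i, x_s) over the free support vectors."""
    vals = [y[s] - sum(a[i] * y[i] * rbf(X[i], X[s], gamma) for i in range(len(X)))
            for s in free]
    return sum(vals) / len(vals)

def decision(x, X, y, a, b, gamma):
    """f(x) = sum_i a_i*y_i*K(x_i, x) + b; the predicted class is sign(f(x))."""
    return sum(a[i] * y[i] * rbf(X[i], x, gamma) for i in range(len(X))) + b

# Two points, gamma = 0.25, so K(x1, x2) = exp(-1). Maximizing the dual with
# a1 = a2 = a gives 2a - a^2 * (1 - exp(-1)), i.e. a = 1 / (1 - exp(-1)).
X, y, gamma = [(0.0, 0.0), (2.0, 0.0)], [1, -1], 0.25
a = [1 / (1 - math.exp(-1))] * 2
sv, free = split_support_vectors(a, C=math.inf)
b = bias(X, y, a, free, gamma)
print(len(sv), decision((0.0, 0.0), X, y, a, b, gamma))
```

Both training points end up exactly on the margin (f = +1 and f = -1), which is what a hard-margin solution with two support vectors should give.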

Datasets for binary classification:

(1) Typical

(2) Saddle

(3) Random

(4) Random, elliptical, with 1 outlier

(5) Spiral

(6) Imbalanced + overlapping

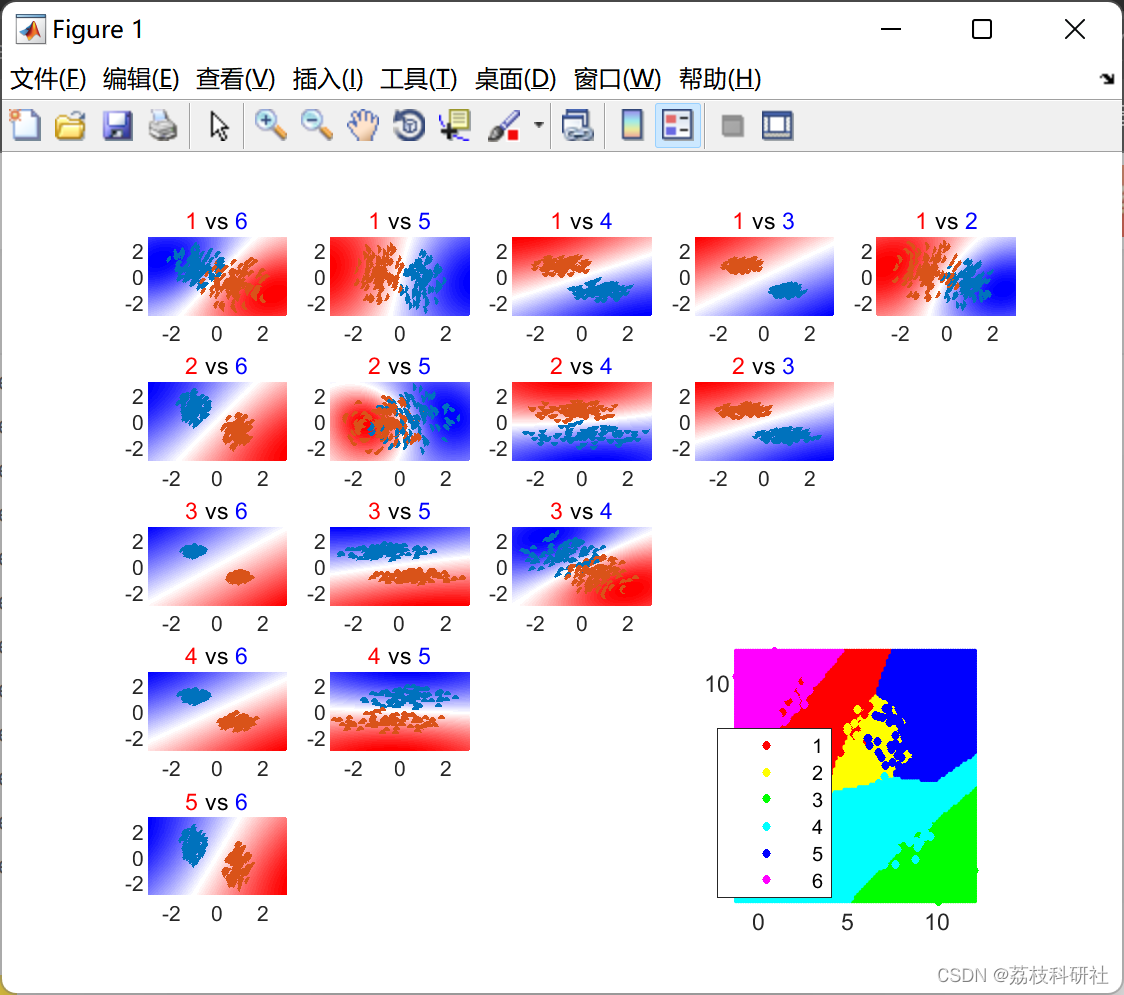

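In the multi-class classification file (DAGsvm.m), five different training sets are available. The outputs are 3D mesh plots of the K(K-1)/2 binary classifiers, a plot of the training set, and a list of misclassified training samples. You can also let the code estimate the RBF kernel width following [4]. I used the DAG-SVM algorithm from [3] for multi-class classification, so the output mesh plots are arranged as a directed acyclic graph (DAG).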
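The DAG from [3] needs only K-1 of the K(K-1)/2 binary classifiers per test sample: keep a list of candidate classes, let the classifier for the first and last candidates eliminate one of them, and repeat until a single class remains. The Python sketch below shows that evaluation order. `decide` and `nearest` are hypothetical stand-ins for the trained pairwise SVMs; the sign convention (the lower class index is +1, the higher one is -1) follows the training loop in the code excerpt of Section 3.

```python
def dag_predict(x, K, decide):
    """Classify one sample with a decision DAG over classes 1..K.

    decide(i, j, x) plays the role of the binary classifier for classes i < j:
    a positive value means class i (+1) wins, otherwise class j (-1) wins.
    """
    candidates = list(range(1, K + 1))
    while len(candidates) > 1:
        i, j = candidates[0], candidates[-1]  # test first vs last remaining class
        if decide(i, j, x) > 0:
            candidates.pop()     # "not j": eliminate the last class
        else:
            candidates.pop(0)    # "not i": eliminate the first class
    return candidates[0]

# Hypothetical pairwise classifier on a 1-D input: the class index closer to x wins.
def nearest(i, j, x):
    return abs(x - j) - abs(x - i)

print([dag_predict(v, 6, nearest) for v in (0.4, 3.2, 5.9)])  # [1, 3, 6]
```

Each loop iteration removes exactly one candidate, which is why a prediction costs K-1 kernel expansions instead of the K(K-1)/2 that one-vs-one voting would evaluate.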

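Datasets for multi-class classification:

(1) (3 classes) Fisher iris - petals

(2) (4 classes) Fan with 4 arms

(3) (6 classes) Random circles

(4) (5 classes) Southeast Asian map

(5) (7 classes) Rainbow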

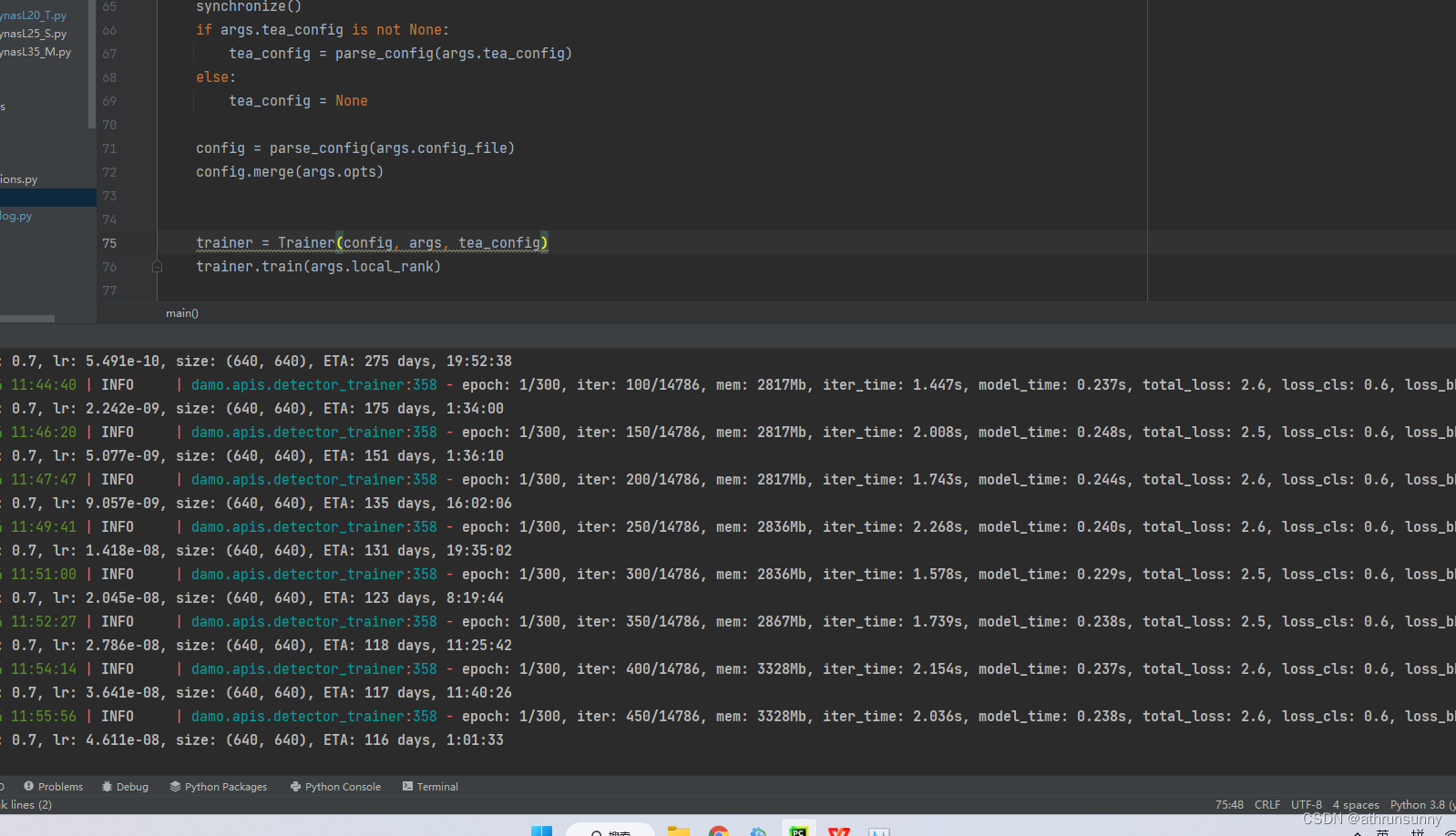

📚2 Results

2.1 DAGsvm

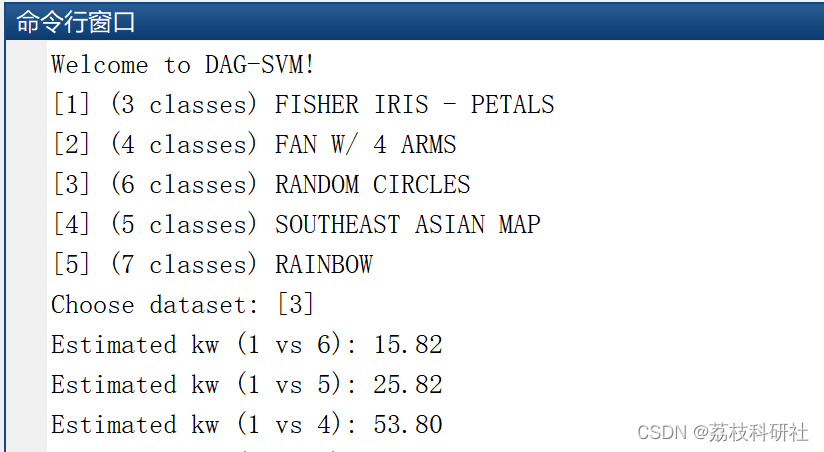

In the Command Window, choose the dataset you need:

```text
Welcome to DAG-SVM!
[1] (3 classes) FISHER IRIS - PETALS
[2] (4 classes) FAN W/ 4 ARMS
[3] (6 classes) RANDOM CIRCLES
[4] (5 classes) SOUTHEAST ASIAN MAP
[5] (7 classes) RAINBOW
Choose dataset: [3]
Estimated kw (1 vs 6): 15.82
Estimated kw (1 vs 5): 25.82
Estimated kw (1 vs 4): 53.80
Estimated kw (1 vs 3): 108.89
Estimated kw (1 vs 2): 14.45
Estimated kw (2 vs 6): 48.03
Estimated kw (2 vs 5): 7.49
Estimated kw (2 vs 4): 28.20
Estimated kw (2 vs 3): 64.32
Estimated kw (3 vs 6): 180.89
Estimated kw (3 vs 5): 66.41
Estimated kw (3 vs 4): 15.35
Estimated kw (4 vs 6): 104.68
Estimated kw (4 vs 5): 35.06
Estimated kw (5 vs 6): 67.53
```
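The log prints one estimated kw per class pair. The post does not show how DAGsvm.m computes it beyond citing [4]; kernlab's `sigest`, described there, bases its estimate on the 0.1 and 0.9 quantiles of ‖x − x′‖² over sampled data points and returns reciprocals as candidate values of σ in exp(-σ‖x − x′‖²). Assuming instead that kw divides the squared distance, as in exp(-‖x − x′‖²/kw), a median-heuristic version simply returns the median squared distance of the pooled samples of the two classes. The Python sketch below does that over all pairs (no random sampling, so it is deterministic); the function name and kernel form are assumptions, not the author's estimator.

```python
import statistics
from itertools import combinations

def estimate_kernel_width(points):
    """Median heuristic (assumed form, not the author's code): the median of the
    nonzero squared pairwise distances, so that for exp(-||x - z||^2 / kw) a
    typical pair of points has kernel value exp(-1)."""
    d2 = [sum((p - q) ** 2 for p, q in zip(u, v)) for u, v in combinations(points, 2)]
    d2 = [d for d in d2 if d > 0]  # duplicate points carry no scale information
    return statistics.median(d2)

# Hypothetical usage for a "1 vs 6" classifier: pool the samples of both classes.
class1 = [(0.0, 0.0), (1.0, 0.0)]
class6 = [(0.0, 2.0)]
print(estimate_kernel_width(class1 + class6))  # squared distances 1, 4, 5 -> 4.0
```

Estimating kw per pair, as the log does, lets each binary classifier adapt to the spread of its own two classes rather than sharing one global width.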

2.2 SVMtrial

The other results are not listed one by one here.

🌈3 MATLAB Code Implementation

Partial code:

```matlab
% Excerpt from DAGsvm.m. nargin only works inside a function, so this code
% presumably runs in the body of the file's main function.
clc; fprintf('Welcome to DAG-SVM!\n');
if nargin == 0
    fprintf('[1] (3 classes) FISHER IRIS - PETALS\n');
    fprintf('[2] (4 classes) FAN W/ 4 ARMS\n');
    fprintf('[3] (6 classes) RANDOM CIRCLES\n');
    fprintf('[4] (5 classes) SOUTHEAST ASIAN MAP\n');
    fprintf('[5] (7 classes) RAINBOW\n');
    ch = input('Choose dataset: '); % Let the user choose
    switch ch
        case 1 % Set 1: FISHER IRIS (petals data only)
            load fisheriris meas species;
            x = meas(:,3:4); % x = [length, width]
            y = zeros(length(species),1);
            y(strcmp(species,'setosa') == 1) = 1;
            y(strcmp(species,'versicolor') == 1) = 2;
            y(strcmp(species,'virginica') == 1) = 3;
            xt = x; yt = y; % Use the training set as the test set
            C = 10;  % Recommended box constraint
            kw = -1; % Let the code estimate kw
        case 2 % Set 2: FAN W/ 4 ARMS
            load fan x;
            y = x(:,3); x = x(:,1:2);
            xt = x; yt = y; % Use the training set as the test set
            C = Inf; % Recommended box constraint (hard margin)
            kw = 10; % Recommended kernel width
        case 3 % Set 3: RANDOM CIRCLES
            % 6 classes x 100 points, each a disc of random radius at a random centre
            x = zeros(600,2); y = ones(length(x),1);
            for j = 1:6
                ind = (1:100) + 100*(j-1);
                y(ind) = j; rd = 2*(rand + 0.5);
                t = 2*pi*rand(1,100);
                r = rd*rand(1,100);
                x(ind,1) = r.*cos(t) + 10*rand;
                x(ind,2) = r.*sin(t) + 10*rand;
            end
            xt = x; yt = y; % Use the training set as the test set
            C = 1;   % Recommended box constraint
            kw = -1; % Let the code estimate kw
        case 4 % Set 4: SOUTHEAST ASIAN MAP
            load SEasia x country;
            y = x(:,3); x = x(:,1:2); % x = [Vsg, Vsl]
            xt = x; yt = y; % Use the training set as the test set
            C = 1e3; % Recommended box constraint
            kw = 10; % Recommended kernel width
        case 5 % Set 5: RAINBOW
            % 7 bands separated by shifted sinusoidal curves (class 1 at the bottom)
            x = 5*(2*rand(500,2)-1);
            y = ones(length(x),1);
            for j = 6:-1:1
                y(x(:,2) + 2*j - 7 > 0.5*(x(:,1) ...
                    + sin(2*x(:,1)))) = 8 - j;
            end
            xt = x; yt = y; % Use the training set as the test set
            C = Inf;  % Recommended box constraint (hard margin)
            kw = 0.5; % Recommended kernel width
    end
end

%% SVM TRAINING FOR MULTI-CLASS CLASSIFICATION
% See Platt et al. [3]
K = length(unique(y)); % number of classes
CL = cell(K); c = 1;   % classifiers [K x K]
for j = 1:(K-1)
    for k = K:-1:(j+1)
        xPos = x(y == j,:); xNeg = x(y == k,:); % (+) and (-) samples
        pN = size(xPos,1); nN = size(xNeg,1);   % No. of samples
        Y = [ones(pN,1); -ones(nN,1)];          % Assign (+1) and (-1)
        % ... (the rest of the training loop is not included in this excerpt)
    end
end
```
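The nested loops in the excerpt visit the class pairs in a fixed order, and class j always plays the +1 role against class k. The Python sketch below mirrors that bookkeeping; the function names are hypothetical. For K = 6 it produces the same 15 pairs, in the same order, as the "Estimated kw" lines of the run log for dataset [3].

```python
def pairwise_order(K):
    """Class pairs in the order of `for j = 1:(K-1)` / `for k = K:-1:(j+1)`."""
    return [(j, k) for j in range(1, K) for k in range(K, j, -1)]

def binary_subproblem(x, y, j, k):
    """Stack class-j samples (label +1) on top of class-k samples (label -1),
    mirroring xPos, xNeg and Y = [ones(pN,1); -ones(nN,1)] in the excerpt."""
    pos = [xi for xi, yi in zip(x, y) if yi == j]
    neg = [xi for xi, yi in zip(x, y) if yi == k]
    return pos + neg, [1] * len(pos) + [-1] * len(neg)

pairs = pairwise_order(6)
print(len(pairs), pairs[:6])  # 15 [(1, 6), (1, 5), (1, 4), (1, 3), (1, 2), (2, 6)]
```

The count is always K(K-1)/2, one binary classifier per node of the DAG.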

🎉4 References

Some of the theory comes from online sources; in case of infringement, please contact me for removal.

[1] Andrew Ng, "Machine Learning", Coursera.

[2] N. Cristianini and J. Shawe-Taylor, "An Introduction to Support Vector Machines", Cambridge University Press, 2000.

[3] J. Platt et al., "Large Margin DAGs for Multiclass Classification", Advances in NIPS, 2000.

[4] A. Karatzoglou et al., "Support Vector Machines in R", Journal of Statistical Software, 15(9), 2006.

[5] Eyo et al., "Development of a Real-Time Objective Flow Regime Identifier Using Kernel Methods", IEEE Trans. on Cybernetics, DOI 10.1109/TCYB.2019.2910257.
