Deep Learning Notes on Recurrent Neural Networks: Backpropagation in the GRU

Introduction

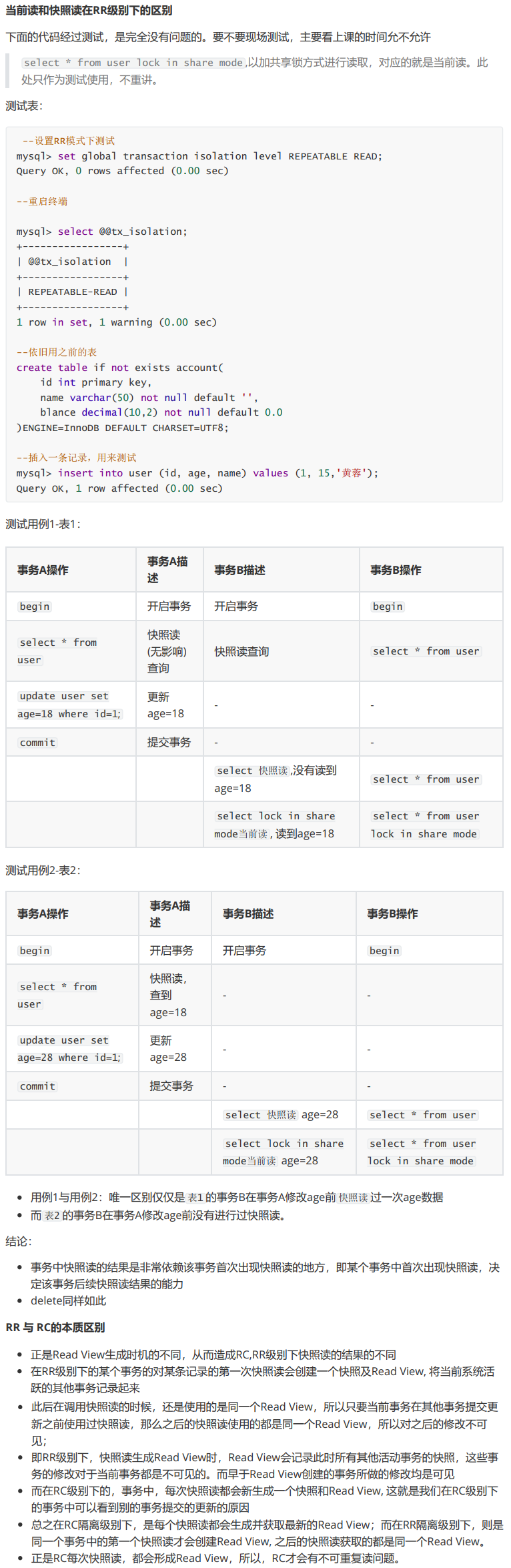

The previous section introduced the gated recurrent unit (Gated Recurrent Unit, $\text{GRU}$). In this section we follow the format used for $\text{LSTM}$ backpropagation and examine the backpropagation process of $\text{GRU}$.

Review: the forward pass of $\text{GRU}$

The forward computation of $\text{GRU}$ is as follows:

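To prepare for the backpropagation that follows, the computation is broken into finer steps, where $\widetilde{\mathcal Z}^{(t)}$ and $\widetilde{r}^{(t)}$ denote the linear (pre-activation) parts of the update gate and the reset gate, respectively.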

$$
\begin{cases}
\begin{aligned}
& \widetilde{\mathcal Z}^{(t)} = \mathcal W_{\mathcal H \Rightarrow \mathcal Z} \cdot h^{(t-1)} + \mathcal W_{\mathcal X \Rightarrow \mathcal Z} \cdot x^{(t)} + b_{\mathcal Z} \\
& \mathcal Z^{(t)} = \sigma(\widetilde{\mathcal Z}^{(t)}) \\
& \widetilde{r}^{(t)} = \mathcal W_{\mathcal H \Rightarrow r} \cdot h^{(t-1)} + \mathcal W_{\mathcal X \Rightarrow r} \cdot x^{(t)} + b_{r} \\
& r^{(t)} = \sigma(\widetilde{r}^{(t)}) \\
& \widetilde{h}^{(t)} = \text{Tanh} \left[\mathcal W_{\mathcal H \Rightarrow \widetilde{\mathcal H}} \cdot (r^{(t)} * h^{(t-1)}) + \mathcal W_{\mathcal X \Rightarrow \widetilde{\mathcal H}} \cdot x^{(t)} + b_{\widetilde{\mathcal H}}\right] \\
& h^{(t)} = (1 -\mathcal Z^{(t)}) * h^{(t-1)} + \mathcal Z^{(t)} * \widetilde{h}^{(t)}
\end{aligned}
\end{cases}
$$
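The six forward equations can be sketched in NumPy as below. This is a minimal illustration, not a reference implementation: the function names (`gru_step`, `init_params`), the dictionary keys, the sizes, and the random initialization are all assumptions made for the example.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(h_prev, x, p):
    """One GRU forward step, following the six equations in order."""
    z_lin = p["W_hz"] @ h_prev + p["W_xz"] @ x + p["b_z"]   # update gate, linear part
    z = sigmoid(z_lin)                                       # update gate
    r_lin = p["W_hr"] @ h_prev + p["W_xr"] @ x + p["b_r"]   # reset gate, linear part
    r = sigmoid(r_lin)                                       # reset gate
    h_cand = np.tanh(p["W_hh"] @ (r * h_prev) + p["W_xh"] @ x + p["b_h"])  # candidate state
    h = (1.0 - z) * h_prev + z * h_cand                      # new hidden state
    return h, (z_lin, z, r_lin, r, h_cand)

def init_params(n_h, n_x, seed=0):
    """Hypothetical initializer: small random weights, zero biases."""
    rng = np.random.default_rng(seed)
    p = {}
    for gate in ("z", "r", "h"):
        p[f"W_h{gate}"] = rng.normal(scale=0.5, size=(n_h, n_h))
        p[f"W_x{gate}"] = rng.normal(scale=0.5, size=(n_h, n_x))
        p[f"b_{gate}"] = np.zeros(n_h)
    return p

params = init_params(n_h=4, n_x=3)
h0 = np.zeros(4)
x1 = np.ones(3)
h1, cache = gru_step(h0, x1, params)
```

The intermediate values are returned in `cache` because the backward pass needs exactly these quantities ($\mathcal Z^{(t)}$, $r^{(t)}$, $\widetilde{h}^{(t)}$) again.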

Scenario setup

The equations above only describe how $\text{GRU}$ iterates the sequence state $h^{(t)}\ (t=1,2,\cdots,\mathcal T)$. The output features at each time step and the loss function are the same as in a plain recurrent neural network:

- A $\text{Softmax}$ activation is used, and its output serves as the model's prediction at time step $t$:

  $$
  \begin{cases} \mathcal C^{(t)} = \mathcal W_{\mathcal H \Rightarrow \mathcal C} \cdot h^{(t)} + b_{h} \\ \hat y^{(t)} = \text{Softmax}(\mathcal C^{(t)}) \end{cases}
  $$

- The deviation between the prediction $\hat y^{(t)}$ at time step $t$ and the true distribution $y^{(t)}$ is measured with cross-entropy ($\text{CrossEntropy}$), where $n_{\mathcal Y}$ is the dimension of the predicted/true distribution:

  $$
  \mathcal L^{(t)} = \mathcal L \left[\hat y^{(t)},y^{(t)}\right] = - \sum_{j=1}^{n_\mathcal Y} y_j^{(t)} \log \left[\hat y_j^{(t)}\right]
  $$

- The sum of the cross-entropy values over all time steps forms the complete loss function $\mathcal L$:

  $$
  \begin{aligned} \mathcal L & = \sum_{t=1}^{\mathcal T} \mathcal L^{(t)}\\ & = \sum_{t=1}^{\mathcal T} \mathcal L \left[\hat y^{(t)},y^{(t)}\right] \end{aligned}
  $$
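The output layer and the summed loss can be sketched as follows. The hidden states here are random placeholders rather than the output of a real GRU, and the names `step_loss`, `total_loss`, `W_hc`, and `b_c` are assumptions chosen for the example.

```python
import numpy as np

def softmax(c):
    e = np.exp(c - np.max(c))   # shift by the max for numerical stability
    return e / e.sum()

def step_loss(h, y, W_hc, b_c):
    """Cross-entropy L^(t) between Softmax(W_hc h + b_c) and the target y."""
    y_hat = softmax(W_hc @ h + b_c)
    return -np.sum(y * np.log(y_hat)), y_hat

def total_loss(hs, ys, W_hc, b_c):
    """L = sum over all time steps of L^(t)."""
    return sum(step_loss(h, y, W_hc, b_c)[0] for h, y in zip(hs, ys))

rng = np.random.default_rng(0)
W_hc = rng.normal(size=(3, 4))                   # n_Y = 3 classes, hidden size 4
b_c = np.zeros(3)
hs = [rng.normal(size=4) for _ in range(5)]      # placeholder hidden states, T = 5
ys = [np.eye(3)[t % 3] for t in range(5)]        # one-hot targets
L = total_loss(hs, ys, W_hc, b_c)
_, y_hat_first = step_loss(hs[0], ys[0], W_hc, b_c)
```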

Backpropagation

Backpropagation at time step $\mathcal T$

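Take the update-gate gradient $\begin{aligned}\frac{\partial \mathcal L}{\partial \mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}}\end{aligned}$ at time step $\mathcal T$ as an example, where $\mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}$ is the weight $\mathcal W_{\mathcal H \Rightarrow \mathcal Z}$ as it is applied at step $\mathcal T$: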

- Compute the gradient $\begin{aligned}\frac{\partial \mathcal L}{\partial \mathcal L^{(\mathcal T)}}\end{aligned}$. Only the term $\mathcal L^{(\mathcal T)}$ contributes a gradient; all other terms are treated as constants.

  $$
  \frac{\partial \mathcal L}{\partial \mathcal L^{(\mathcal T)}} = \frac{\partial}{\partial \mathcal L^{(\mathcal T)}} \left[\sum_{t=1}^{\mathcal T} \mathcal L^{(t)}\right] = 0 + 0 + \cdots + 1 = 1
  $$

- Compute the gradient $\begin{aligned}\frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal C^{(\mathcal T)}}\end{aligned}$. For the gradient of the $\text{Softmax}$ activation combined with cross-entropy, see the section on recurrent neural networks and the backpropagation of the $\text{Softmax}$ function; it is not repeated here.

  $$
  \begin{aligned} & \begin{cases} \mathcal L^{(\mathcal T)} = -\sum_{j=1}^{n_{\mathcal Y}}y_j^{(\mathcal T)} \log \left[\hat y_j^{(\mathcal T)}\right] \\ \hat y^{(\mathcal T)} = \text{Softmax}[\mathcal C^{(\mathcal T)}] \end{cases} \\ & \Rightarrow \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal C^{(\mathcal T)}} = \hat y^{(\mathcal T)} - y^{(\mathcal T)} \end{aligned}
  $$

- Next, compute the gradient $\begin{aligned}\frac{\partial \mathcal C^{(\mathcal T)}}{\partial h^{(\mathcal T)}}\end{aligned}$:

  $$
  \frac{\partial \mathcal C^{(\mathcal T)}}{\partial h^{(\mathcal T)}} = \frac{\partial}{\partial h^{(\mathcal T)}} \left[\mathcal W_{\mathcal H \Rightarrow \mathcal C} \cdot h^{(\mathcal T)} + b_{h}\right] = \mathcal W_{\mathcal H \Rightarrow \mathcal C}
  $$

At this point, the gradient $\begin{aligned}\frac{\partial \mathcal L}{\partial h^{(\mathcal T)}}\end{aligned}$ can be written as:

$$
\begin{aligned} \frac{\partial \mathcal L}{\partial h^{(\mathcal T)}} & = \frac{\partial \mathcal L}{\partial \mathcal L^{(\mathcal T)}} \cdot \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal C^{(\mathcal T)}} \cdot \frac{\partial \mathcal C^{(\mathcal T)}}{\partial h^{(\mathcal T)}} \\ & = 1 \cdot \left[\mathcal W_{\mathcal H \Rightarrow \mathcal C}\right]^T \cdot (\hat y^{(\mathcal T)} - y^{(\mathcal T)}) \end{aligned}
$$
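The result $\left[\mathcal W_{\mathcal H \Rightarrow \mathcal C}\right]^T \cdot (\hat y^{(\mathcal T)} - y^{(\mathcal T)})$ can be checked against a finite-difference estimate. The sketch below assumes a one-hot target (so that $\sum_j y_j = 1$) and uses random weights; all names are hypothetical.

```python
import numpy as np

def softmax(c):
    e = np.exp(c - np.max(c))
    return e / e.sum()

def loss_from_h(h, y, W_hc, b_c):
    y_hat = softmax(W_hc @ h + b_c)
    return -np.sum(y * np.log(y_hat))

def grad_h_analytic(h, y, W_hc, b_c):
    """dL/dh = W_hc^T (y_hat - y), as derived in the text."""
    y_hat = softmax(W_hc @ h + b_c)
    return W_hc.T @ (y_hat - y)

def grad_h_numeric(h, y, W_hc, b_c, eps=1e-6):
    """Central finite differences, one coordinate of h at a time."""
    g = np.zeros_like(h)
    for i in range(h.size):
        d = np.zeros_like(h)
        d[i] = eps
        g[i] = (loss_from_h(h + d, y, W_hc, b_c)
                - loss_from_h(h - d, y, W_hc, b_c)) / (2 * eps)
    return g

rng = np.random.default_rng(1)
W_hc, b_c = rng.normal(size=(3, 4)), rng.normal(size=3)
h_T, y_T = rng.normal(size=4), np.array([0.0, 1.0, 0.0])
g_a = grad_h_analytic(h_T, y_T, W_hc, b_c)
g_n = grad_h_numeric(h_T, y_T, W_hc, b_c)
```

The two vectors `g_a` and `g_n` should agree up to finite-difference error.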

Observation: starting from $h^{(\mathcal T)}$, which propagation paths lead from $h^{(\mathcal T)}$ to $\mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}$?

There is exactly one, and its forward computation path is:

$$
\begin{cases} \begin{aligned} & h^{(t)} = (1 -\mathcal Z^{(t)}) * h^{(t-1)} + \mathcal Z^{(t)} * \widetilde{h}^{(t)} \\ & \mathcal Z^{(t)} = \sigma(\widetilde{\mathcal Z}^{(t)}) \\ & \widetilde{\mathcal Z}^{(t)} = \mathcal W_{\mathcal H \Rightarrow \mathcal Z} \cdot h^{(t-1)} + \mathcal W_{\mathcal X \Rightarrow \mathcal Z} \cdot x^{(t)} + b_{\mathcal Z} \end{aligned} \end{cases}
$$

The corresponding backpropagation result is:

$$
\begin{aligned} \frac{\partial h^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}} & = \frac{\partial h^{(\mathcal T)}}{\partial \mathcal Z^{(\mathcal T)}} \cdot \frac{\partial \mathcal Z^{(\mathcal T)}}{\partial \widetilde{\mathcal Z}^{(\mathcal T)}} \cdot \frac{\partial \widetilde{\mathcal Z}^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}} \\ & = \left[\widetilde{h}^{(\mathcal T)} - h^{(\mathcal T - 1)}\right] \cdot \left[\text{Sigmoid}(\widetilde{\mathcal Z}^{(\mathcal T)})\right]' \cdot h^{(\mathcal T - 1)} \end{aligned}
$$

Finally, the backpropagation result for $\begin{aligned}\frac{\partial \mathcal L}{\partial \mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}}\end{aligned}$ is given below. The main purpose here is to describe the backpropagation path; the full expansion is not repeated in later derivations.

$$
\begin{aligned} \frac{\partial \mathcal L}{\partial \mathcal W_{h^{(\mathcal T)} \Rightarrow \mathcal Z^{(\mathcal T)}}} & = \frac{\partial \mathcal L}{\partial h^{(\mathcal T)}} \cdot \frac{\partial h^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T)}\Rightarrow \mathcal Z^{(\mathcal T)}}} \\ & = \left\{\left[\mathcal W_{\mathcal H \Rightarrow \mathcal C}\right]^T \cdot (\hat y^{(\mathcal T)} - y^{(\mathcal T)})\right\} \cdot \left\{ \left[\widetilde{h}^{(\mathcal T)} - h^{(\mathcal T - 1)}\right] \cdot \left[\text{Sigmoid}(\widetilde{\mathcal Z}^{(\mathcal T)})\right]' \cdot h^{(\mathcal T - 1)}\right\} \end{aligned}
$$
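The chain above is written schematically. In matrix form, the factors $\frac{\partial \mathcal L}{\partial h^{(\mathcal T)}}$, $\widetilde{h}^{(\mathcal T)} - h^{(\mathcal T - 1)}$ and the Sigmoid derivative combine elementwise, and the last factor becomes an outer product with $h^{(\mathcal T - 1)}$. The sketch below checks this reading numerically for the last time step only. Biases are omitted for brevity, and all names, sizes, and random values are assumptions.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def softmax(c):
    e = np.exp(c - np.max(c))
    return e / e.sum()

def last_step_loss(W_hz, h_prev, x, y, p):
    """Forward pass of step T and its loss L^(T); biases omitted (assumption)."""
    z = sigmoid(W_hz @ h_prev + p["W_xz"] @ x)
    r = sigmoid(p["W_hr"] @ h_prev + p["W_xr"] @ x)
    h_cand = np.tanh(p["W_hh"] @ (r * h_prev) + p["W_xh"] @ x)
    h = (1 - z) * h_prev + z * h_cand
    y_hat = softmax(p["W_hc"] @ h)
    return -np.sum(y * np.log(y_hat)), (z, h_cand, y_hat)

rng = np.random.default_rng(2)
n_h, n_x, n_y = 4, 3, 3
shapes = {"W_xz": (n_h, n_x), "W_hr": (n_h, n_h), "W_xr": (n_h, n_x),
          "W_hh": (n_h, n_h), "W_xh": (n_h, n_x), "W_hc": (n_y, n_h)}
p = {name: rng.normal(scale=0.5, size=shape) for name, shape in shapes.items()}
W_hz = rng.normal(scale=0.5, size=(n_h, n_h))
h_prev, x = rng.normal(size=n_h), rng.normal(size=n_x)
y = np.array([1.0, 0.0, 0.0])

# Analytic gradient: elementwise chain, then outer product with h^(T-1).
_, (z, h_cand, y_hat) = last_step_loss(W_hz, h_prev, x, y, p)
dL_dh = p["W_hc"].T @ (y_hat - y)
dL_dzlin = dL_dh * (h_cand - h_prev) * z * (1 - z)   # sigma'(a) = z (1 - z)
dW_analytic = np.outer(dL_dzlin, h_prev)

# Finite-difference gradient, one weight entry at a time.
eps = 1e-6
dW_numeric = np.zeros_like(W_hz)
for i in range(n_h):
    for j in range(n_h):
        E = np.zeros_like(W_hz)
        E[i, j] = eps
        dW_numeric[i, j] = (last_step_loss(W_hz + E, h_prev, x, y, p)[0]
                            - last_step_loss(W_hz - E, h_prev, x, y, p)[0]) / (2 * eps)
```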

Backpropagation paths at time step $\mathcal T - 1$

For the update-gate gradient $\begin{aligned} \frac{\partial \mathcal L}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned}$ at time step $\mathcal T - 1$, the paths fall into two main classes:

Class 1: as at time step $\mathcal T$, the gradient passes directly from the corresponding $\mathcal L^{(\mathcal T - 1)}$ to $\mathcal W_{h^{(\mathcal T - 1)}\Rightarrow \mathcal Z^{(\mathcal T - 1)}}$. This path has the same form as the path at time step $\mathcal T$ described above; simply replace the superscript $\mathcal T$ with $\mathcal T - 1$.

$$
\begin{aligned} \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} & = \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned}
$$

Class 2: looking again, $\mathcal W_{h^{(\mathcal T - 1)}\Rightarrow \mathcal Z^{(\mathcal T - 1)}}$ appears only in $\mathcal Z^{(\mathcal T - 1)}$, and $\mathcal Z^{(\mathcal T - 1)}$ appears only in $h^{(\mathcal T - 1)}$. Therefore we only need to find all paths that reach $h^{(\mathcal T - 1)}$; each of them then passes its gradient to $\mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}$ through $\begin{aligned}\frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}}\end{aligned}$.

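The Class 1 path is one such case, except that its gradient comes directly from the current time step $\mathcal T - 1$. For Class 2 we focus on the gradient information passed down from time step $\mathcal T$.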

Going from time step $\mathcal T \Rightarrow \mathcal T - 1$, there are $4$ gradient paths to $h^{(\mathcal T - 1)}$:

- Path 1: through the $h^{(\mathcal T - 1)}$ term inside $h^{(\mathcal T)}$ at time step $\mathcal T$.

  $$
  \begin{cases} \text{Forward : } h^{(\mathcal T)} = (1 -\mathcal Z^{(\mathcal T)}) * h^{(\mathcal T -1)} + \mathcal Z^{(\mathcal T)} * \widetilde{h}^{(\mathcal T)} \\ \quad \\ \text{Backward : }\begin{aligned} \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \Rightarrow \frac{\partial \mathcal L^{(\mathcal T)}}{\partial h^{(\mathcal T)}} \cdot \frac{\partial h^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned} \end{cases}
  $$

- Path 2: through $\mathcal Z^{(\mathcal T)}$ inside $h^{(\mathcal T)}$ at time step $\mathcal T$, and from there to $h^{(\mathcal T-1)}$.

  $$
  \begin{cases} \text{Forward : } \begin{cases} h^{(\mathcal T)} = (1 -\mathcal Z^{(\mathcal T)}) * h^{(\mathcal T -1)} + \mathcal Z^{(\mathcal T)} * \widetilde{h}^{(\mathcal T)} \\ \mathcal Z^{(\mathcal T)} = \sigma \left[\mathcal W_{\mathcal H \Rightarrow \mathcal Z} \cdot h^{(\mathcal T -1)} + \mathcal W_{\mathcal X \Rightarrow \mathcal Z} \cdot x^{(\mathcal T)} + b_{\mathcal Z}\right] \end{cases} \\ \quad \\ \text{Backward : } \begin{aligned} \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \Rightarrow \frac{\partial \mathcal L^{(\mathcal T)}}{\partial h^{(\mathcal T)}} \cdot \frac{\partial h^{(\mathcal T)}}{\partial \mathcal Z^{(\mathcal T)}} \cdot \frac{\partial \mathcal Z^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned} \end{cases}
  $$

- Path 3: through $\widetilde{h}^{(\mathcal T)}$ inside $h^{(\mathcal T)}$ at time step $\mathcal T$, and from there to $h^{(\mathcal T - 1)}$.

  $$
  \begin{cases} \text{Forward : } \begin{cases} h^{(\mathcal T)} = (1 -\mathcal Z^{(\mathcal T)}) * h^{(\mathcal T -1)} + \mathcal Z^{(\mathcal T)} * \widetilde{h}^{(\mathcal T)} \\ \widetilde{h}^{(\mathcal T)} = \text{Tanh} \left[\mathcal W_{\mathcal H \Rightarrow \widetilde{\mathcal H}} \cdot (r^{(\mathcal T)} * h^{(\mathcal T -1)}) + \mathcal W_{\mathcal X \Rightarrow \widetilde{\mathcal H}} \cdot x^{(\mathcal T)} + b_{\widetilde{\mathcal H}}\right] \end{cases}\\ \quad \\ \text{Backward : } \begin{aligned} \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \Rightarrow \frac{\partial \mathcal L^{(\mathcal T)}}{\partial h^{(\mathcal T)}} \cdot \frac{\partial h^{(\mathcal T)}}{\partial \widetilde{h}^{(\mathcal T)}} \cdot \frac{\partial \widetilde{h}^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned} \end{cases}
  $$

- Path 4: similar to Path 3, except that the gradient passes from $\widetilde{h}^{(\mathcal T)}$ through $r^{(\mathcal T)}$ to $h^{(\mathcal T - 1)}$.

  $$
  \begin{cases} \text{Forward : } \begin{cases} h^{(\mathcal T)} = (1 -\mathcal Z^{(\mathcal T)}) * h^{(\mathcal T -1)} + \mathcal Z^{(\mathcal T)} * \widetilde{h}^{(\mathcal T)} \\ \widetilde{h}^{(\mathcal T)} = \text{Tanh} \left[\mathcal W_{\mathcal H \Rightarrow \widetilde{\mathcal H}} \cdot (r^{(\mathcal T)} * h^{(\mathcal T -1)}) + \mathcal W_{\mathcal X \Rightarrow \widetilde{\mathcal H}} \cdot x^{(\mathcal T)} + b_{\widetilde{\mathcal H}}\right] \\ r^{(\mathcal T)} = \sigma(\widetilde{r}^{(\mathcal T)}) \\ \widetilde{r}^{(\mathcal T)} = \mathcal W_{\mathcal H \Rightarrow r} \cdot h^{(\mathcal T -1)} + \mathcal W_{\mathcal X \Rightarrow r} \cdot x^{(\mathcal T)} + b_{r} \end{cases}\\ \quad \\ \text{Backward : } \begin{aligned} \frac{\partial \mathcal L^{(\mathcal T)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \Rightarrow \frac{\partial \mathcal L^{(\mathcal T)}}{\partial h^{(\mathcal T)}} \cdot \frac{\partial h^{(\mathcal T)}}{\partial \widetilde{h}^{(\mathcal T)}} \cdot \frac{\partial \widetilde{h}^{(\mathcal T)}}{\partial r^{(\mathcal T)}} \cdot \frac{\partial r^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 1)} \Rightarrow \mathcal Z^{(\mathcal T - 1)}}} \end{aligned} \end{cases}
  $$
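The four paths listed above each contribute one term to the Jacobian $\frac{\partial h^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}}$, and their sum should equal the full Jacobian. The sketch below writes each contribution as a matrix and compares the sum with a finite-difference Jacobian. Biases are omitted, and the names, sizes, and random values are assumptions for illustration.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(h_prev, x, p):
    z = sigmoid(p["W_hz"] @ h_prev + p["W_xz"] @ x)
    r = sigmoid(p["W_hr"] @ h_prev + p["W_xr"] @ x)
    h_cand = np.tanh(p["W_hh"] @ (r * h_prev) + p["W_xh"] @ x)
    return (1 - z) * h_prev + z * h_cand, (z, r, h_cand)

def four_path_jacobians(h_prev, x, p):
    """Contribution of each path to dh^(T)/dh^(T-1); entry [i, j] = dh_i/dh_prev_j."""
    _, (z, r, h_cand) = gru_step(h_prev, x, p)
    d_tanh = z * (1 - h_cand ** 2)                    # dh/dh_cand times tanh'
    path1 = np.diag(1 - z)                            # direct h^(T-1) term
    path2 = np.diag((h_cand - h_prev) * z * (1 - z)) @ p["W_hz"]   # via Z^(T)
    path3 = np.diag(d_tanh) @ p["W_hh"] @ np.diag(r)               # via h_cand, r held fixed
    path4 = (np.diag(d_tanh) @ p["W_hh"]
             @ np.diag(h_prev * r * (1 - r)) @ p["W_hr"])          # via h_cand, then r^(T)
    return path1, path2, path3, path4

def numeric_jacobian(h_prev, x, p, eps=1e-6):
    n = h_prev.size
    J = np.zeros((n, n))
    for j in range(n):
        d = np.zeros(n)
        d[j] = eps
        J[:, j] = (gru_step(h_prev + d, x, p)[0]
                   - gru_step(h_prev - d, x, p)[0]) / (2 * eps)
    return J

rng = np.random.default_rng(3)
n_h, n_x = 4, 3
p = {}
for g in ("z", "r", "h"):
    p[f"W_h{g}"] = rng.normal(scale=0.5, size=(n_h, n_h))
    p[f"W_x{g}"] = rng.normal(scale=0.5, size=(n_h, n_x))
h_prev, x = rng.normal(size=n_h), rng.normal(size=n_x)

paths = four_path_jacobians(h_prev, x, p)
J_sum = sum(paths)
J_num = numeric_jacobian(h_prev, x, p)
```

If a path were missing or counted twice, `J_sum` and `J_num` would disagree, so the comparison doubles as a check that the list of four paths is complete.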

At this point, all $5$ paths for $\mathcal T \Rightarrow \mathcal T - 1$ have been found:

- $1$ is the path belonging to time step $\mathcal T - 1$ itself;

- the remaining $4$ are propagation paths from $\mathcal T \Rightarrow \mathcal T - 1$.
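Putting the five paths together, the gradient reaching $h^{(\mathcal T - 1)}$ is the Class 1 term plus the Class 2 term pushed back through $\frac{\partial h^{(\mathcal T)}}{\partial h^{(\mathcal T - 1)}}$, and the weight gradient follows from one more local step. The sketch below checks this for a two-step sequence, treating the update-gate weight used at step $\mathcal T - 1$ as a separate copy (`W_hz_first`, a hypothetical name). Biases are omitted and all values are random assumptions.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def softmax(c):
    e = np.exp(c - np.max(c))
    return e / e.sum()

def gru_step(h_prev, x, W_hz, p):
    z = sigmoid(W_hz @ h_prev + p["W_xz"] @ x)
    r = sigmoid(p["W_hr"] @ h_prev + p["W_xr"] @ x)
    h_cand = np.tanh(p["W_hh"] @ (r * h_prev) + p["W_xh"] @ x)
    return (1 - z) * h_prev + z * h_cand, z, r, h_cand

def two_step_loss(W_hz_first, h0, xs, ys, p):
    """L^(T-1) + L^(T); W_hz_first is used only at step T-1."""
    h1, z1, _, hc1 = gru_step(h0, xs[0], W_hz_first, p)
    h2, z2, r2, hc2 = gru_step(h1, xs[1], p["W_hz"], p)
    yh1, yh2 = softmax(p["W_hc"] @ h1), softmax(p["W_hc"] @ h2)
    loss = -np.sum(ys[0] * np.log(yh1)) - np.sum(ys[1] * np.log(yh2))
    return loss, (h1, z1, hc1, z2, r2, hc2, yh1, yh2)

rng = np.random.default_rng(4)
n_h, n_x, n_y = 4, 3, 3
shapes = {"W_hz": (n_h, n_h), "W_xz": (n_h, n_x), "W_hr": (n_h, n_h),
          "W_xr": (n_h, n_x), "W_hh": (n_h, n_h), "W_xh": (n_h, n_x),
          "W_hc": (n_y, n_h)}
p = {name: rng.normal(scale=0.5, size=shape) for name, shape in shapes.items()}
h0 = rng.normal(size=n_h)
xs = [rng.normal(size=n_x) for _ in range(2)]
ys = [np.eye(n_y)[0], np.eye(n_y)[2]]

_, (h1, z1, hc1, z2, r2, hc2, yh1, yh2) = two_step_loss(p["W_hz"], h0, xs, ys, p)

g_class1 = p["W_hc"].T @ (yh1 - ys[0])          # L^(T-1) -> h^(T-1)
g_hT = p["W_hc"].T @ (yh2 - ys[1])              # L^(T)   -> h^(T)
d_tanh = z2 * (1 - hc2 ** 2)
J = (np.diag(1 - z2)                                                   # path 1
     + np.diag((hc2 - h1) * z2 * (1 - z2)) @ p["W_hz"]                 # path 2
     + np.diag(d_tanh) @ p["W_hh"] @ np.diag(r2)                       # path 3
     + np.diag(d_tanh) @ p["W_hh"] @ np.diag(h1 * r2 * (1 - r2)) @ p["W_hr"])  # path 4
g_class2 = J.T @ g_hT                           # L^(T) -> h^(T) -> h^(T-1)
local = (hc1 - h0) * z1 * (1 - z1)              # dh^(T-1)/dZ~^(T-1), elementwise
dW_analytic = np.outer((g_class1 + g_class2) * local, h0)

eps = 1e-6
dW_numeric = np.zeros((n_h, n_h))
for i in range(n_h):
    for j in range(n_h):
        E = np.zeros((n_h, n_h))
        E[i, j] = eps
        dW_numeric[i, j] = (two_step_loss(p["W_hz"] + E, h0, xs, ys, p)[0]
                            - two_step_loss(p["W_hz"] - E, h0, xs, ys, p)[0]) / (2 * eps)
```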

Backpropagation paths at time step $\mathcal T - 2$

Going one step further, observe the pattern in the number of propagation paths:

- The first path is still the gradient passed directly from $\mathcal L^{(\mathcal T - 2)}$ to $\mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}$:

  $$
  \begin{aligned} \frac{\partial \mathcal L^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} = \frac{\partial \mathcal L^{(\mathcal T - 2)}}{\partial h^{(\mathcal T - 2)}} \cdot \frac{\partial h^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} \end{aligned}
  $$

- There are $4$ paths that start from $\mathcal L^{(\mathcal T - 1)}$ and pass from $\mathcal T - 1 \Rightarrow \mathcal T - 2$:

  $$
  \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} = \begin{cases} \begin{aligned} & \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 2)}} \cdot \frac{\partial h^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} \\ & \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \mathcal Z^{(\mathcal T - 1)}} \cdot \frac{\partial \mathcal Z^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 2)}} \cdot \frac{\partial h^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}}\\ & \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \widetilde{h}^{(\mathcal T - 1)}} \cdot \frac{\partial \widetilde{h}^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 2)}} \cdot \frac{\partial h^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} \\ & \frac{\partial \mathcal L^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 1)}} \cdot \frac{\partial h^{(\mathcal T - 1)}}{\partial \widetilde{h}^{(\mathcal T - 1)}} \cdot \frac{\partial \widetilde{h}^{(\mathcal T - 1)}}{\partial r^{(\mathcal T - 1)}} \cdot \frac{\partial r^{(\mathcal T - 1)}}{\partial h^{(\mathcal T - 2)}} \cdot \frac{\partial h^{(\mathcal T - 2)}}{\partial \mathcal W_{h^{(\mathcal T - 2)} \Rightarrow \mathcal Z^{(\mathcal T - 2)}}} \end{aligned} \end{cases}
  $$

- There are $4 \times 4$ paths that start from $\mathcal L^{(\mathcal T)}$ and pass from $\mathcal T \Rightarrow \mathcal T- 2$.

These are not written out here; it would be too tedious.

This is only the number of paths for $\mathcal T \Rightarrow \mathcal T - 2$. At time step $\mathcal T - 3$, there are $4 \times 4 \times 4 = 64$ gradient paths associated with $\mathcal L^{(\mathcal T)}$, and so on.
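The counting rule can be reproduced mechanically from the dependency structure of a single step. The sketch below encodes which quantities each node of one GRU step depends on (read off the forward equations) and counts the distinct routes from $h^{(t)}$ back to $h^{(t-1)}$. The graph encoding and the function names are assumptions made for this illustration.

```python
# Dependencies inside one GRU step: node -> nodes it is computed from.
# Inputs x^(t) and weights are left out because they do not lead to h^(t-1).
STEP_EDGES = {
    "h": ["h_prev", "z", "h_cand"],
    "z": ["h_prev"],
    "h_cand": ["h_prev", "r"],
    "r": ["h_prev"],
}

def paths_to_h_prev(node="h"):
    """Number of distinct dependency paths from `node` to h_prev within one step."""
    if node == "h_prev":
        return 1
    return sum(paths_to_h_prev(dep) for dep in STEP_EDGES[node])

def paths_from_last_loss(k):
    """Paths from L^(T) down to h^(T-k): one factor per step crossed."""
    return paths_to_h_prev() ** k

def total_paths(k):
    """Paths reaching h^(T-k) from every loss term L^(T-k), ..., L^(T)."""
    return sum(paths_from_last_loss(j) for j in range(k + 1))

per_step = paths_to_h_prev()
counts = [paths_from_last_loss(k) for k in range(4)]
```

Under this encoding a single step has 4 routes, so `paths_from_last_loss` grows as $4^k$, and `total_paths(1)` recovers the $5$ paths counted for $\mathcal T - 1$.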

Summary

Compared with the backpropagation paths of $\text{LSTM}$: in $\text{LSTM}$, the paths passed from $\mathcal T \Rightarrow \mathcal T - 2$ alone number $24$, whereas $\text{GRU}$ has only $16$. This greatly reduces the number of backpropagation paths and lowers the time and space complexity.

In addition, $\text{GRU}$ has fewer model parameters to update than $\text{LSTM}$, which lowers the risk of overfitting ($\text{OverFitting}$).

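Its mechanism for suppressing vanishing gradients follows the same idea as $\text{LSTM}$. First, as backpropagation goes deeper, the number of gradient paths still grows exponentially, though on a clearly smaller scale than in $\text{LSTM}$. Second, the update gate and the reset gate themselves still take part in the gradient computation, which adjusts how the gradient is distributed across its components.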
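One way to see the gates taking part in the gradient is to look at $\frac{\partial h^{(t)}}{\partial h^{(t-1)}}$ under extreme update-gate values. Every path other than the direct one carries a factor of $\mathcal Z^{(t)}$ or $\mathcal Z^{(t)}(1 - \mathcal Z^{(t)})$, so when the update gate is close to $0$ the Jacobian is close to the identity and the gradient passes through almost unchanged. The sketch below forces this case with a large negative bias; the bias values, sizes, and names are assumptions chosen only to make the effect visible.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(h_prev, x, p, b_z):
    z = sigmoid(p["W_hz"] @ h_prev + p["W_xz"] @ x + b_z)
    r = sigmoid(p["W_hr"] @ h_prev + p["W_xr"] @ x)
    h_cand = np.tanh(p["W_hh"] @ (r * h_prev) + p["W_xh"] @ x)
    return (1 - z) * h_prev + z * h_cand

def jacobian(h_prev, x, p, b_z, eps=1e-6):
    """Finite-difference estimate of dh^(t)/dh^(t-1)."""
    n = h_prev.size
    J = np.zeros((n, n))
    for j in range(n):
        d = np.zeros(n)
        d[j] = eps
        J[:, j] = (gru_step(h_prev + d, x, p, b_z)
                   - gru_step(h_prev - d, x, p, b_z)) / (2 * eps)
    return J

rng = np.random.default_rng(5)
n_h, n_x = 4, 3
p = {}
for g in ("z", "r", "h"):
    p[f"W_h{g}"] = rng.normal(scale=0.5, size=(n_h, n_h))
    p[f"W_x{g}"] = rng.normal(scale=0.5, size=(n_h, n_x))
h_prev, x = rng.normal(size=n_h), rng.normal(size=n_x)

J_closed = jacobian(h_prev, x, p, b_z=-20.0)   # Z ~ 0: previous state is copied
J_open = jacobian(h_prev, x, p, b_z=20.0)      # Z ~ 1: previous state is overwritten
dev_closed = np.max(np.abs(J_closed - np.eye(n_h)))
dev_open = np.max(np.abs(J_open - np.eye(n_h)))
```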

Related references:

GRU Recurrent Neural Networks: A Lightweight Version of LSTM