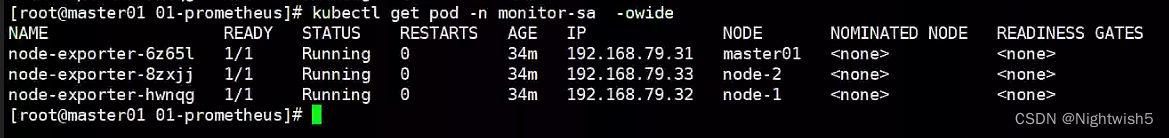

Environment

k8s v1.18.0

192.168.79.31 master

192.168.79.32 node-1

192.168.79.33 node-2

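1. Monitoring Kubernetes with Prometheus

1.1 Installing and configuring the node-exporter component

node-exporter collects monitoring metrics from machines (physical machines, virtual machines, cloud hosts, and so on). The metrics it can collect include CPU, memory, disk, network, and file counts.

Installation: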

kubectl create

docker load -i node-exporter.tar.gz

node-exporter.yaml

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: monitor-sa
      labels:
        name: node-exporter
    spec:
      selector:
        matchLabels:
          name: node-exporter
      template:
        metadata:
          labels:
            name: node-exporter
        spec:
          hostPID: true
          hostIPC: true
          hostNetwork: true
          containers:
          - name: node-exporter
            image: prom/node-exporter:v0.16.0
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9100
            resources:
              requests:
                cpu: 0.15
            securityContext:
              privileged: true
            args:
            - --path.procfs  # path where the host's (node's) /proc is mounted
            - /host/proc
            - --path.sysfs  # path where the host's (node's) /sys is mounted
            - /host/sys
            - --collector.filesystem.ignored-mount-points  # regex of filesystem mount points to skip
            - '^/(sys|proc|dev|host|etc)($|/)'
            volumeMounts:
            - name: dev
              mountPath: /host/dev
            - name: proc
              mountPath: /host/proc
            - name: sys
              mountPath: /host/sys
            - name: rootfs
              mountPath: /rootfs
          tolerations:
          - key: "node-role.kubernetes.io/master"
            operator: "Exists"
            effect: "NoSchedule"
          volumes:
          - name: proc
            hostPath:
              path: /proc
          - name: dev
            hostPath:
              path: /dev
          - name: sys
            hostPath:
              path: /sys
          - name: rootfs
            hostPath:
              path: /

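Explanation:

With hostNetwork, hostIPC, and hostPID all set to true, every container in this Pod uses the host's network directly, communicates with the host over IPC (inter-process communication), and can see all processes running on the host.

Because hostNetwork: true is set, node-exporter listens on port 9100 of the host itself, so every node exposes port 9100 without any Service being created.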

1.2 Collecting data with node-exporter

curl http://<host-ip>:9100/metrics

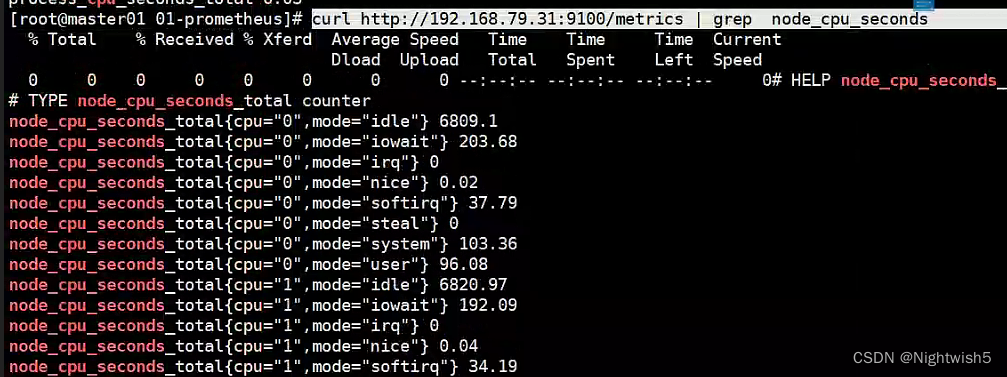

curl http://192.168.79.31:9100/metrics | grep node_cpu_seconds

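#HELP: explains what the metric means. Here it is the time, in seconds, that the node's CPUs spent in each mode.

#TYPE: states the metric's data type. Here it is counter.

node_cpu_seconds_total{cpu="0",mode="idle"}:

The total time cpu0 has spent in idle mode. CPU time only ever increases, and the TYPE line confirms that this metric is a counter. A counter only records values that increase.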
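
Because a counter only grows, the raw value is rarely useful by itself; what matters is how much it grew between two scrapes. The sketch below is only an illustration of that idea, not how Prometheus implements it. The function name and the sample values are made up, and it assumes a single CPU core and treats a decrease as a counter reset (for example after a reboot).

```python
def cpu_busy_fraction(idle_start, idle_end, interval_seconds):
    """Estimate how busy one CPU core was between two scrapes.

    idle_start / idle_end are two readings of
    node_cpu_seconds_total{cpu="0",mode="idle"} taken interval_seconds apart.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")

    idle_delta = idle_end - idle_start
    if idle_delta < 0:
        # Assumption: a drop means the counter restarted from zero,
        # so everything counted since the reset is the increase.
        idle_delta = idle_end

    idle_fraction = idle_delta / interval_seconds
    # Clamp to [0, 1] to absorb small timing jitter between scrapes.
    return max(0.0, min(1.0, 1.0 - idle_fraction))


if __name__ == "__main__":
    # Hypothetical readings 15 s apart: the core was idle for 12 of them.
    print(round(cpu_busy_fraction(1000.0, 1012.0, 15.0), 3))
```

In Prometheus itself the same idea is normally expressed with a rate over the counter rather than computed by hand.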

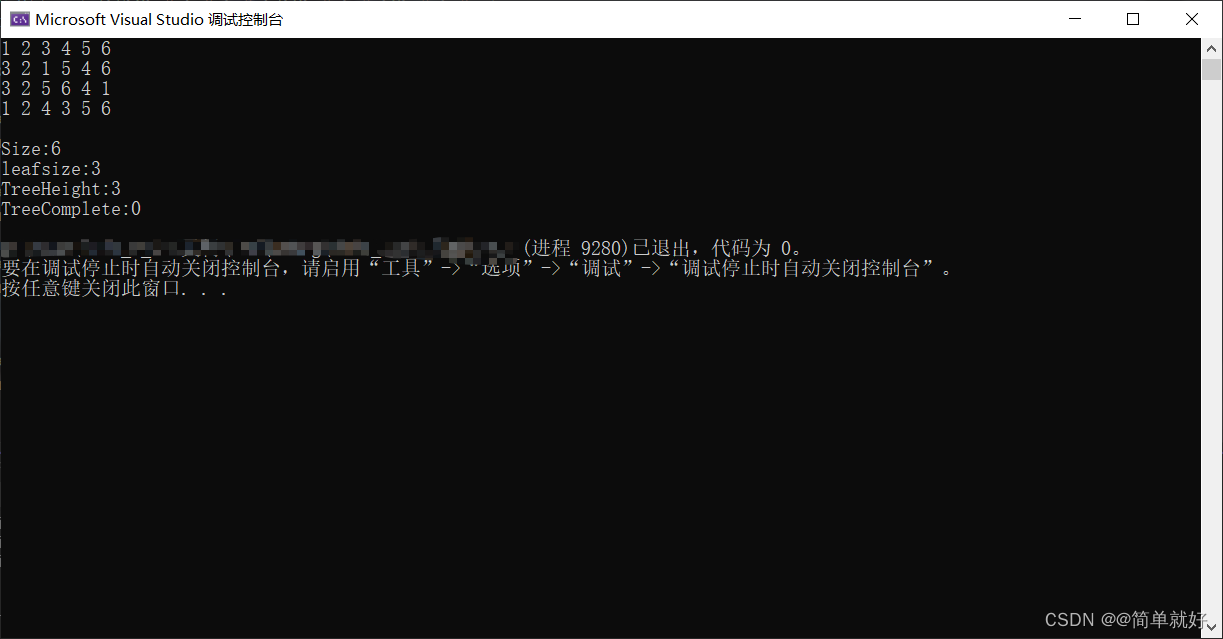

[root@master01 01-prometheus]# curl http://192.168.79.31:9100/metrics | grep node_load

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 95166 100 95166 0 0 13.0M 0 --:--:-- --:--:-- --:--:-- 15.1M

# HELP node_load1 1m load average.

# TYPE node_load1 gauge

node_load1 0.28

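node_load1 reflects the host's load over the last minute. System load changes with resource usage, so node_load1 describes the current state and its value can go up or down. The TYPE comment shows that this metric is a gauge.

A gauge is a metric whose value can both increase and decrease.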
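
To make the HELP / TYPE / sample structure of the /metrics output concrete, here is a small sketch that splits such text into metric types and samples. It is a simplified reader written for these notes, not a full parser of the exposition format: it ignores timestamps and does not unpack the label set. The function name is hypothetical, and the node_cpu_seconds_total value in the sample text is invented.

```python
import re

# metric name, optional {label="value",...} block, then the sample value
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{.*\})?\s+(\S+)')


def parse_metrics(text):
    """Return ({metric_name: type}, [(name, raw_labels, value), ...])."""
    types = {}
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("# TYPE"):
            # e.g. "# TYPE node_load1 gauge"
            _, _, name, metric_type = line.split(None, 3)
            types[name] = metric_type
        elif line.startswith("#"):
            continue  # HELP lines and other comments
        else:
            match = SAMPLE_RE.match(line)
            if match:
                name, labels, value = match.groups()
                samples.append((name, labels or "", float(value)))
    return types, samples


if __name__ == "__main__":
    sample_text = """
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.28
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 1234.5
"""
    types, samples = parse_metrics(sample_text)
    for name, labels, value in samples:
        print(name, labels, value, "type:", types.get(name))
```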

2. Installing and configuring the Prometheus server

2.1 Create a service account and grant it RBAC permissions

kubectl create serviceaccount monitor -n monitor-sa

kubectl create clusterrolebinding monitor-clusterrolebinding -n monitor-sa --clusterrole=cluster-admin --serviceaccount=monitor-sa:monitor

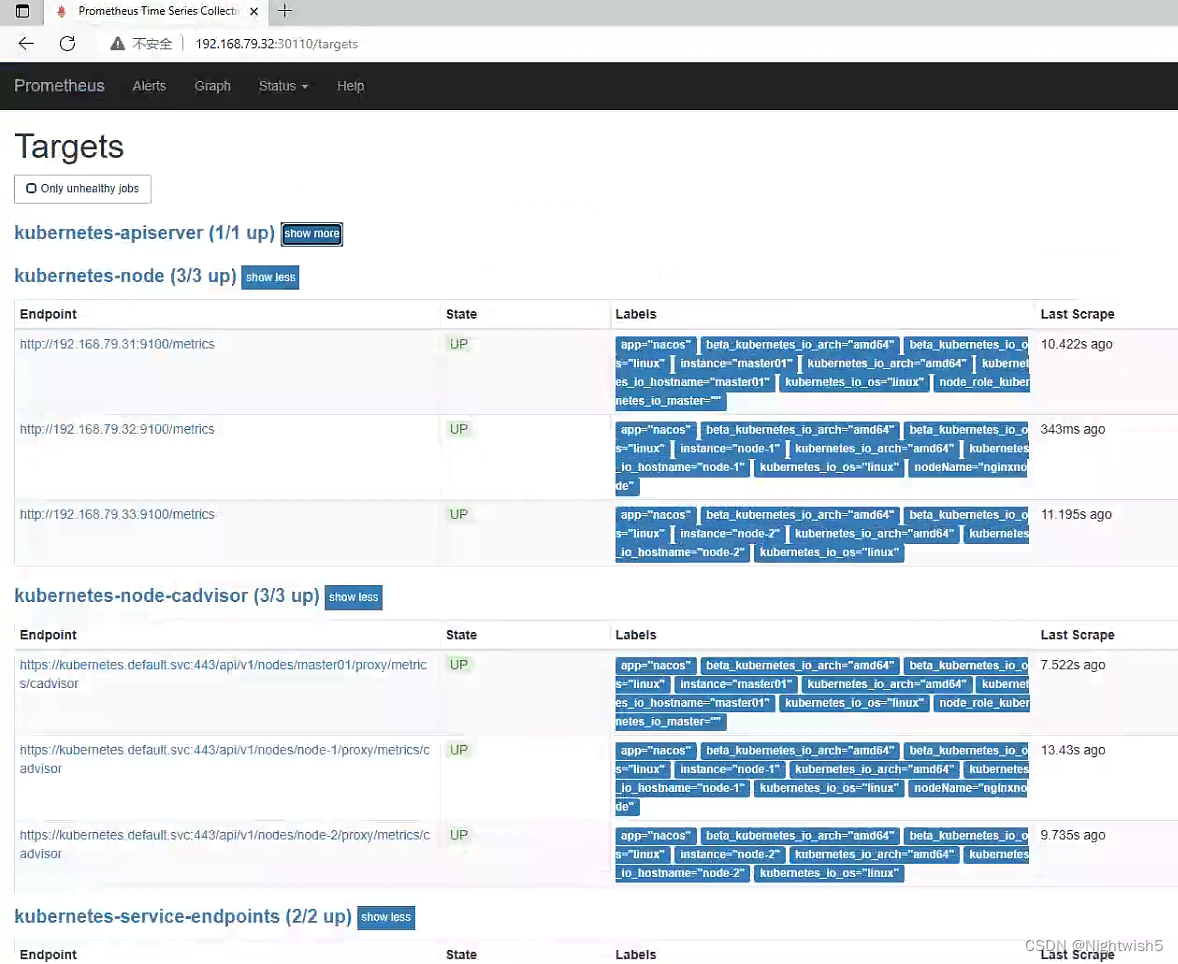

Monitored jobs:

job_name:

kubernetes-node

kubernetes-node-cadvisor

kubernetes-apiserver

kubernetes-service-endpoints

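Upload prometheus-2-2-1.tar.gz to node-1 and load it there with docker load -i prometheus-2-2-1.tar.gz. prometheus-dp.yaml pins the Pod to a node with nodeName, so adjust this to your own environment.

Also create the data directory on that node: mkdir /data && chmod 777 /data/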

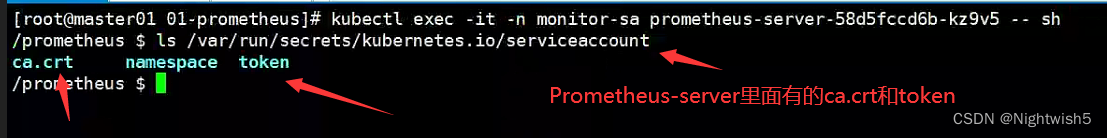

#Additional notes:

#View the token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep default | awk '{print $1}')

#Export the ca.crt certificate

kubectl config view --raw=true | grep -oP "(?<=certificate-authority-data:\s).+" | base64 -d > /usr/local/share/ca-certificates/kubernetes.crt

update-ca-certificates

#Set these according to your setup: the two files live in different places on a cluster deployed from binaries and on one deployed with kubeadm.

#/etc/kubernetes/pki/ca.crt

#/etc/kubernetes/pki/token

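The note above is wrong. At the time I had misunderstood and assumed these were the ca.crt and token files on the master, node-1, and node-2 hosts, which is a little embarrassing.

With those host paths in the config, Prometheus reports errors:

On the Prometheus Targets page, kubernetes-apiserver fails with: Get https://192.168.79.31:6443/metrics: x509: certificate signed by unknown authority

and

Get https://192.168.79.31:6443/metrics: unable to read bearer token file /etc/kubernetes/pki/token: open /etc/kubernetes/pki/token: no such file or directory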
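
The ca_file and bearer_token_file paths are read inside the Prometheus container, where Kubernetes mounts the service account credentials under /var/run/secrets/kubernetes.io/serviceaccount. A quick way to catch the errors above is to check that both files exist from inside the Pod. The helper below is a hypothetical sketch for that check; the function name is my own, and it only tests that the files are present, not that the certificate or token is valid.

```python
from pathlib import Path

# Standard in-Pod mount point for service account credentials.
SA_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"


def missing_credentials(sa_dir=SA_DIR, names=("ca.crt", "token")):
    """Return the credential file names that are not present in sa_dir."""
    base = Path(sa_dir)
    return [name for name in names if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_credentials()
    if missing:
        print("missing in", SA_DIR, ":", ", ".join(missing))
    else:
        print("ca.crt and token are both present")
```

Run on a node instead of inside the Pod, this will normally report both files as missing, which is the same confusion described in the note above.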

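For a detailed explanation of the config below, see page 19 of 《Prometheus构建企业级监控系统》 (Building an Enterprise-Grade Monitoring System with Prometheus).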

2.2 prometheus-cfg.yaml

    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus-config
      namespace: monitor-sa
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          scrape_timeout: 10s
          evaluation_interval: 1m
        scrape_configs:
        - job_name: 'kubernetes-node'
          kubernetes_sd_configs:
          - role: node
          relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:9100'
            target_label: __address__
            action: replace
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        - job_name: 'kubernetes-node-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-apiserver'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
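
The relabel_configs rules are the hardest part of this config to read, so here is a rough Python imitation of the two actions it relies on most, replace and keep. This is a sketch for building intuition only: Prometheus uses RE2 rather than Python's re module, and the function names and the Pod IP below are invented. It does mirror two documented behaviours: the source label values are joined with ";" and the regex must match the whole joined string.

```python
import re


def relabel_replace(source_values, regex, replacement, separator=";"):
    """Imitate action: replace. Returns the new label value, or None when
    the regex does not match (Prometheus then leaves the label unchanged)."""
    value = separator.join(source_values)
    match = re.fullmatch(regex, value)  # relabel regexes are fully anchored
    if match is None:
        return None
    # Translate $1 / ${1} style references into Python's \g<1> form.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    return match.expand(template)


def relabel_keep(source_values, regex, separator=";"):
    """Imitate action: keep. True means the target is kept."""
    return re.fullmatch(regex, separator.join(source_values)) is not None


if __name__ == "__main__":
    # kubernetes-node: point the kubelet address at node-exporter's port.
    print(relabel_replace(["192.168.79.31:10250"], r"(.*):10250", "${1}:9100"))
    # kubernetes-node-cadvisor: build the API server proxy path for a node.
    print(relabel_replace(["node-1"], r"(.+)",
                          "/api/v1/nodes/${1}/proxy/metrics/cadvisor"))
    # kubernetes-service-endpoints: swap in the annotated port
    # (10.244.1.5 is a made-up Pod IP).
    print(relabel_replace(["10.244.1.5:8080", "9153"],
                          r"([^:]+)(?::\d+)?;(\d+)", "$1:$2"))
    # kubernetes-apiserver: only the default/kubernetes/https endpoint is kept.
    print(relabel_keep(["default", "kubernetes", "https"],
                       "default;kubernetes;https"))
```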

2.3 prometheus-deploy.yaml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus-server
      namespace: monitor-sa
      labels:
        app: prometheus
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
          component: server
        #matchExpressions:
        #- {key: app, operator: In, values: [prometheus]}
        #- {key: component, operator: In, values: [server]}
      template:
        metadata:
          labels:
            app: prometheus
            component: server
          annotations:
            prometheus.io/scrape: 'false'
        spec:
          nodeName: node-1
          serviceAccountName: monitor
          containers:
          - name: prometheus
            image: prom/prometheus:v2.2.1
            imagePullPolicy: IfNotPresent
            command:
            - prometheus
            - --config.file=/etc/prometheus/prometheus.yml
            - --storage.tsdb.path=/prometheus
            - --storage.tsdb.retention=720h
            - --web.enable-lifecycle
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: /etc/prometheus/prometheus.yml
              name: prometheus-config
              subPath: prometheus.yml
            - mountPath: /prometheus/
              name: prometheus-storage-volume
          volumes:
          - name: prometheus-config
            configMap:
              name: prometheus-config
              items:
              - key: prometheus.yml
                path: prometheus.yml
                mode: 0644
          - name: prometheus-storage-volume
            hostPath:
              path: /data
              type: Directory

2.4 prometheus-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: prometheus
      namespace: monitor-sa
      labels:
        app: prometheus
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        protocol: TCP
      selector:
        app: prometheus
        component: server

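2.5 Accessing the Prometheus server

Open http://192.168.79.32:32732/graph and check the Status --> Targets page. The Service does not set a fixed nodePort, so 32732 is simply the port assigned in this environment; look up your own with kubectl get svc -n monitor-sa.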
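
The Targets page can also be checked from a script. The sketch below assumes the Prometheus HTTP API exposes /api/v1/targets with an activeTargets list carrying labels.job and health fields, as in the versions I have used; please verify this against the API documentation for your release. The function names are my own, and the base URL is the NodePort address from this environment.

```python
import json
from urllib.request import urlopen


def fetch_targets(base_url, timeout=5.0):
    """Fetch the raw targets payload from a Prometheus server."""
    with urlopen(f"{base_url}/api/v1/targets", timeout=timeout) as resp:
        return json.load(resp)


def summarize_targets(payload):
    """Count active targets per job and health state,
    e.g. {"kubernetes-node": {"up": 3}}."""
    summary = {}
    for target in payload.get("data", {}).get("activeTargets", []):
        job = target.get("labels", {}).get("job", "unknown")
        health = target.get("health", "unknown")
        counts = summary.setdefault(job, {})
        counts[health] = counts.get(health, 0) + 1
    return summary


if __name__ == "__main__":
    # Address taken from this environment; replace with your node IP and NodePort.
    payload = fetch_targets("http://192.168.79.32:32732")
    for job, counts in sorted(summarize_targets(payload).items()):
        print(job, counts)
```

Any job that shows a "down" count is worth opening on the Targets page, where the last scrape error is displayed next to the target.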