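I recently started studying NLP and, following a video by the video creator 手写CV, wrote an N-gram NLP model, which is more or less the "hello world" of the field. I then added a few lines of code to turn it into a very simple input method.

Project notes:

You can create your own dataset; just load a txt file.

Word suggestion: enter two words and the model predicts (suggests) the third one [the word-suggestion feature of an input method].

My NLP study notes are collected in the blog below [continuously updated]:

NLP (Natural Language Processing) Study Notes - 爱吃肉的鹏's blog - CSDN blog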

Contents

Dataset

N-gram network architecture

Model training

Input method test

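Dataset

My dataset is very, very simple and meant only for learning. It is a plain txt file. Chinese text also works, but only if the words are separated by spaces, because the loader splits the text on whitespace.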
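The loader (NLP_Sentence below) tokenizes with Python's str.split(), which only splits on whitespace. A minimal sketch of what that means for Chinese text, next to a character-level alternative; tokenize_chars is a hypothetical helper, not part of the original project, and it assumes single characters are acceptable as tokens:

```python
def tokenize_whitespace(text):
    # What NLP_Sentence does: split on any run of whitespace
    return text.split()


def tokenize_chars(text):
    # Hypothetical alternative: every non-whitespace character becomes one token
    return [ch for ch in text if not ch.isspace()]


text = "我爱自然语言处理"
print(tokenize_whitespace(text))  # ['我爱自然语言处理'] -> the whole sentence is one "word"
print(tokenize_chars(text))       # ['我', '爱', '自', '然', '语', '言', '处', '理']
```

With character tokens the model would predict the next character from the two preceding characters; pre-segmenting the text into space-separated words would keep the word-level behaviour instead.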

For the dataset I used a passage that ChatGPT wrote for me:

Introduction: Artificial Intelligence (AI) is revolutionizing the world as we know it. With its ability to mimic human intelligence and perform tasks that were once deemed impossible for machines, AI has become a driving force behind innovation across various industries. In this article, we will delve into the fascinating world of AI, exploring its applications, challenges, and the potential it holds for the future. Section 1: Understanding AI. Artificial Intelligence refers to the development of computer systems that can perform tasks that typically require human intelligence. These tasks include speech recognition, decision-making, problem-solving, and even visual perception. AI systems are designed to learn from data, adapt to new information, and improve their performance over time. Machine Learning, a subset of AI, enables computers to learn from experience and make predictions or take actions without explicit programming. Section 2: Applications of AI. AI has found extensive applications in various fields. In healthcare, AI is being used to assist in medical diagnosis, drug discovery, and personalized treatment plans. In finance, AI algorithms analyze vast amounts of data to detect fraud, predict market trends, and optimize investment strategies. AI-powered virtual assistants like Siri and Alexa have become ubiquitous, enhancing our daily lives with voice recognition and natural language processing capabilities. Section 3: Challenges and Ethical Considerations. While AI brings immense potential, it also poses significant challenges and ethical considerations. One concern is job displacement, as AI and automation may replace certain human roles. Striking a balance between technological advancement and employment opportunities becomes crucial. Additionally, AI systems must be developed with transparency and accountability to ensure they make fair and unbiased decisions. 
Ethical dilemmas arise when AI is used in areas like facial recognition, privacy invasion, or autonomous weapons. Section 4: The Future of AI. The future of AI holds boundless possibilities. Advancements in AI research, such as Deep Learning and Neural Networks, continue to push the boundaries of what machines can achieve. AI is expected to revolutionize transportation with autonomous vehicles, transform manufacturing through robotics, and enhance customer experiences with personalized recommendations. However, it is vital to address concerns like data privacy, algorithmic bias, and regulations to foster responsible AI development. Section 5: NLP and AI Training. Natural Language Processing (NLP), a branch of AI, focuses on the interaction between computers and human language. NLP enables machines to understand, interpret, and generate human language, facilitating applications like language translation, sentiment analysis, and chatbots. Training NLP networks requires vast amounts of text data, annotated with corresponding labels or outcomes. The collected data is used to train models using algorithms like recurrent neural networks (RNNs) or transformer models like GPT-3. Conclusion: Artificial Intelligence is reshaping our world in unprecedented ways, with endless opportunities and challenges. As we continue to explore the realms of AI, it is crucial to ensure responsible development, ethical considerations, and regulations that safeguard the well-being of society. With ongoing advancements, AI has the potential to augment human capabilities and revolutionize industries, opening doors to a future where machines and humans collaborate harmoniously.

```python
# NLP_dataset.py

def NLP_Sentence(file_path):
    # Earlier test text, kept for reference:
    # test_sentence = """When forty winters shall besiege thy brow,
    # And dig deep trenches in thy beauty's field,
    # Thy youth's proud livery so gazed on now,
    # Will be a totter'd weed of small worth held:
    # Then being asked, where all thy beauty lies,
    # Where all the treasure of thy lusty days;
    # To say, within thine own deep sunken eyes,
    # Were an all-eating shame, and thriftless praise.
    # How much more praise deserv'd thy beauty's use,
    # If thou couldst answer 'This fair child of mine
    # Shall sum my count, and make my old excuse,'
    # Proving his beauty by succession thine!
    # This were to be new made when thou art old,
    # And see thy blood warm when thou feel'st it cold.""".split()  # split into words on whitespace
    # print(type(test_sentence))
    with open(file_path, 'r', encoding='utf-8') as f:
        test_sentence = f.read()
    test_sentence = test_sentence.split()  # split into words on any whitespace
    print(test_sentence)
    return test_sentence


def build_dataset(test_sentence):
    # Build the samples: ((word_i, word_i+1), word_i+2)
    trigram = [((test_sentence[i], test_sentence[i + 1]), test_sentence[i + 2])
               for i in range(len(test_sentence) - 2)]
    # set() removes duplicate words, but its iteration order for strings changes between
    # Python processes (hash randomization); sorting keeps word_to_idx identical in the
    # training script and the inference script
    vocb = sorted(set(test_sentence))
    # Encode each word as an integer index; nn.Embedding needs indices to look up word vectors
    word_to_idx = {word: i for i, word in enumerate(vocb)}
    # Decoding: the inverse mapping, index -> word
    idx_to_word = {i: word for word, i in word_to_idx.items()}
    return word_to_idx, idx_to_word, trigram
```

NLP_Sentence reads the dataset txt file and splits it into words on whitespace. build_dataset processes the data: trigram groups every three consecutive words, with the first two words as the input and the third word as the label. By analogy with CV, the first two words are the features and the third word is the label, so this is just a simple classification task over the vocabulary.

vocb: the vocabulary, i.e. the words with duplicates removed (sorted, so the order is the same in every run).

word_to_idx: maps each word to an index (encoding).

idx_to_word: maps an index back to its word (decoding).

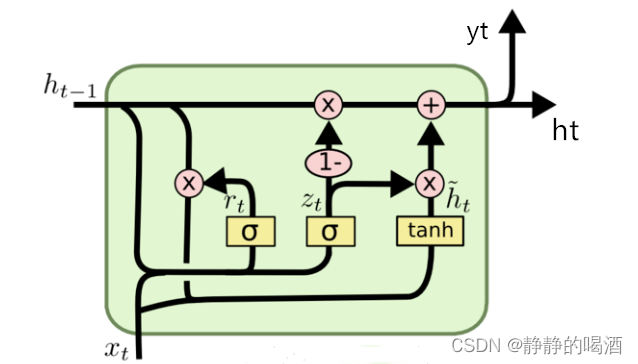
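To see what build_dataset returns without a data file, here is a toy run on a six-word sentence. It is a stand-alone restatement of the function above (with the sorted vocabulary, which is what makes the printed indices reproducible), not a separate part of the project:

```python
def build_dataset(words):
    # Every window of three consecutive words: (first two words) -> third word
    trigram = [((words[i], words[i + 1]), words[i + 2]) for i in range(len(words) - 2)]
    # sorted() gives the same word -> index mapping in every run
    vocb = sorted(set(words))
    word_to_idx = {word: i for i, word in enumerate(vocb)}
    idx_to_word = {i: word for word, i in word_to_idx.items()}
    return word_to_idx, idx_to_word, trigram


words = "the cat sat on the mat".split()
word_to_idx, idx_to_word, trigram = build_dataset(words)
print(trigram[0])    # (('the', 'cat'), 'sat')
print(len(trigram))  # 4
print(word_to_idx)   # {'cat': 0, 'mat': 1, 'on': 2, 'sat': 3, 'the': 4}
# The first sample as the model sees it: input indices and label index
print([word_to_idx[w] for w in trigram[0][0]], word_to_idx[trigram[0][1]])  # [4, 0] 3
```

Six words give 6 - 2 = 4 samples; "the" occurs twice in the text but only once in the vocabulary.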

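N-gram network architecture

vocb_size: the vocabulary size (number of distinct words)

context_size: the length of the context window (how many preceding words the model sees)

n_dim: the dimension of each word embedding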
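For the default CONTEXT_SIZE = 2, one forward pass and its loss can be written as below. The notation is mine: $E$ is the nn.Embedding weight ($V \times n_{\text{dim}}$, with $V$ = vocb_size) and $E_w$ its row for word index $w$; $[\,\cdot\,;\,\cdot\,]$ is concatenation (what emb.view(1, -1) does); $W_1, \mathbf{b}_1$ and $W_2, \mathbf{b}_2$ are the parameters of linear1 ($128 \times 2n_{\text{dim}}$) and linear2 ($V \times 128$); $y$ is the label index, and $\mathcal{L}$ is what nn.NLLLoss returns for one sample.

$$
\begin{aligned}
\mathbf{e} &= [\,E_{w_1};\ E_{w_2}\,] \in \mathbb{R}^{2 n_{\text{dim}}} \\
\mathbf{h} &= \mathrm{ReLU}(W_1 \mathbf{e} + \mathbf{b}_1) \in \mathbb{R}^{128} \\
\mathbf{z} &= W_2 \mathbf{h} + \mathbf{b}_2 \in \mathbb{R}^{V} \\
\log p_k &= z_k - \log \sum_{j=1}^{V} e^{z_j}, \qquad k = 1, \dots, V \\
\mathcal{L} &= -\log p_y
\end{aligned}
$$

With n_dim = 10 the input to linear1 has 20 values, which is why a context of a different length breaks the model.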

```python
# NLP_model.py
import torch.nn as nn
import torch.nn.functional as F


# Define the N-gram model
class NgramModel(nn.Module):
    def __init__(self, vocb_size, context_size, n_dim):
        super(NgramModel, self).__init__()
        self.n_word = vocb_size  # vocabulary size after removing duplicates (97 words in my earlier test)
        self.context_size = context_size
        self.n_dim = n_dim
        self.embedding = nn.Embedding(self.n_word, self.n_dim)  # e.g. (97, 10)
        # Each input is context_size words of n_dim values, i.e. context_size * n_dim (= 20) features
        self.linear1 = nn.Linear(self.context_size * self.n_dim, 128)  # 20 -> 128
        self.linear2 = nn.Linear(128, self.n_word)  # 128 -> vocabulary size, one score per word

    def forward(self, x):
        emb = self.embedding(x)  # (context_size, n_dim)
        emb = emb.view(1, -1)  # flatten into a single row: (1, context_size * n_dim)
        out = self.linear1(emb)
        out = F.relu(out)
        out = self.linear2(out)
        # Log-probability of each vocabulary word being the next word (dim=1 is the vocabulary axis)
        log_prob = F.log_softmax(out, dim=1)
        return log_prob
```

Model training

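CONTEXT_SIZE is the length of the context window. It defaults to 2, meaning the next word is predicted from the two words before it. If you want to change it for testing, you have to retrain the model; otherwise you get a dimension mismatch error.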
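Note that build_dataset always produces two-word contexts, so changing CONTEXT_SIZE alone is not enough: linear1 expects context_size * n_dim inputs, while a two-word context only yields 2 * n_dim values. A minimal sketch of a hypothetical helper, build_ngrams, that builds contexts of any length so the data and CONTEXT_SIZE stay consistent (at test time you would then also enter exactly CONTEXT_SIZE words):

```python
def build_ngrams(words, context_size):
    """Hypothetical generalisation of the trigram list: each sample is
    (tuple of `context_size` preceding words, next word)."""
    if context_size < 1:
        raise ValueError("context_size must be at least 1")
    return [
        (tuple(words[i:i + context_size]), words[i + context_size])
        for i in range(len(words) - context_size)
    ]


words = "the cat sat on the mat".split()
print(build_ngrams(words, 2)[0])  # (('the', 'cat'), 'sat'), same as the trigram list
print(build_ngrams(words, 3)[0])  # (('the', 'cat', 'sat'), 'on')
```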

test_sentence holds the dataset, and the trained weights are saved as myNLP.pth.
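The model ends with log_softmax and the training loop uses nn.NLLLoss; together they compute the usual cross-entropy loss. A small pure-Python sketch (made-up scores, no PyTorch) of what the pair computes for one sample:

```python
import math


def log_softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]


def nll_loss(log_probs, label):
    # NLL loss for a single sample: negative log-probability of the true class
    return -log_probs[label]


scores = [2.0, 1.0, 0.1]       # made-up raw outputs of linear2 for a 3-word vocabulary
log_probs = log_softmax(scores)
loss = nll_loss(log_probs, 0)  # suppose the label word has index 0

# Same value as the cross-entropy -log(softmax(scores)[0])
softmax_0 = math.exp(scores[0]) / sum(math.exp(s) for s in scores)
print(loss, -math.log(softmax_0))
```

This is also why the model must not apply softmax a second time, and why nn.CrossEntropyLoss on the raw linear2 output would be an equivalent choice.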

```python
# -*- coding: utf-8 -*-
import torch
from torch import optim
import torch.nn as nn

from NLP_dataset import NLP_Sentence, build_dataset
from NLP_model import NgramModel

# How many preceding words are used to predict a word; 2 means each word is predicted from the two words before it
CONTEXT_SIZE = 2

# Use the GPU if one is available, otherwise the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

test_sentence = NLP_Sentence('data2.txt')
word_to_idx, idx_to_word, trigram = build_dataset(test_sentence)

# train
ngrammodel = NgramModel(len(word_to_idx), CONTEXT_SIZE, 10).to(device)
criterion = nn.NLLLoss()
optimizer = optim.SGD(ngrammodel.parameters(), lr=1e-3)

for epoch in range(300):
    print('epoch: {}'.format(epoch + 1))
    print('*' * 10)
    running_loss = 0
    total_samples_epoch = 0
    correct_predictions_epoch = 0
    for data in trigram:
        # 'word' holds the two words before the target word, 'label' is the word to predict
        word, label = data  # e.g. word = ('When', 'forty'), label = 'winters'
        word = torch.tensor([word_to_idx[e] for e in word], dtype=torch.long, device=device)
        label = torch.tensor([word_to_idx[label]], dtype=torch.long, device=device)

        # forward
        out = ngrammodel(word)  # word is passed as indices
        loss = criterion(out, label)
        running_loss += loss.item()
        predicted = out.argmax(dim=1)
        total_samples_epoch += label.size(0)
        correct_predictions_epoch += (predicted == label).sum().item()

        # backward
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    epoch_accuracy = correct_predictions_epoch / total_samples_epoch
    # Average loss per training sample
    print('loss: {:.6f}'.format(running_loss / len(trigram)))
    print('accuracy: {:.2%}'.format(epoch_accuracy))

torch.save(ngrammodel.state_dict(), "myNLP.pth")
```

Input method test

```python
import torch

from NLP_model import NgramModel
from NLP_dataset import NLP_Sentence, build_dataset

test_sentence = NLP_Sentence('data2.txt')
word_to_idx, idx_to_word, trigram = build_dataset(test_sentence)
CONTEXT_SIZE = 2

# Load the trained model once, outside the input loop;
# map_location='cpu' lets weights trained on a GPU load on a CPU-only machine
model = NgramModel(len(word_to_idx), CONTEXT_SIZE, 10)
pretrained = torch.load('myNLP.pth', map_location='cpu')
model.load_state_dict(pretrained)
model.eval()

# predict
while True:
    word = input("Enter words, then press Enter\n")
    word_ = word.split()
    # The model needs exactly CONTEXT_SIZE words that occur in the training text
    if len(word_) != CONTEXT_SIZE or any(w not in word_to_idx for w in word_):
        print('Please enter {} words from the training text'.format(CONTEXT_SIZE))
        continue
    word_ = torch.tensor([word_to_idx[i] for i in word_], dtype=torch.long)

    with torch.no_grad():
        out = model(word_)
    predict_label = out.argmax(dim=1)
    predict_word = idx_to_word[predict_label.item()]
    print("Most likely word:", predict_word)

    _, pre_labels = out.sort(descending=True)  # sort from most to least likely
    pre_labels = pre_labels.squeeze(0)[:10]  # keep the 10 best candidates
    print_dict = {str(i): idx_to_word[idx.item()] for i, idx in enumerate(pre_labels)}
    print(print_dict)

    idx_input = input("Pick one of these numbers to complete your input\n")
    if idx_input in print_dict:
        print('{} {}'.format(word, print_dict[idx_input]))
```

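For example, enter the following (the world):

```text
Enter words, then press Enter
the world
```

The program predicts the third word from your input; just enter a number to complete it.

```text
Most likely word: programming.
{'0': 'programming.', '1': 'development.', '2': 'discovery,', '3': 'across', '4': 'can', '5': 'refers', '6': 'typically', '7': 'fascinating', '8': 'language', '9': 'or'}
Pick one of these numbers to complete your input
8
the world language
```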
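The suggestion menu is simply a top-k selection over the model's log-probabilities. A dependency-free sketch of that step; suggest is a hypothetical helper and the probabilities below are made up, not output of the trained model:

```python
import math


def suggest(log_probs, idx_to_word, k=10):
    """Return the k most likely next words as {'0': word, '1': word, ...},
    the same menu format the input-method script prints."""
    ranked = sorted(range(len(log_probs)), key=lambda i: log_probs[i], reverse=True)
    return {str(rank): idx_to_word[i] for rank, i in enumerate(ranked[:k])}


# Made-up log-probabilities for a 4-word vocabulary
idx_to_word = {0: 'as', 1: 'language', 2: 'programming.', 3: 'can'}
log_probs = [math.log(0.1), math.log(0.2), math.log(0.6), math.log(0.1)]
menu = suggest(log_probs, idx_to_word, k=3)
print(menu)                      # {'0': 'programming.', '1': 'language', '2': 'as'}
print('the world', menu['1'])    # the world language
```

Because Python's sort is stable, tied candidates ('as' and 'can' here) keep their index order.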

That's all for this simple NLP-based input method [just a bit of fun to break up the dry learning process, haha~].