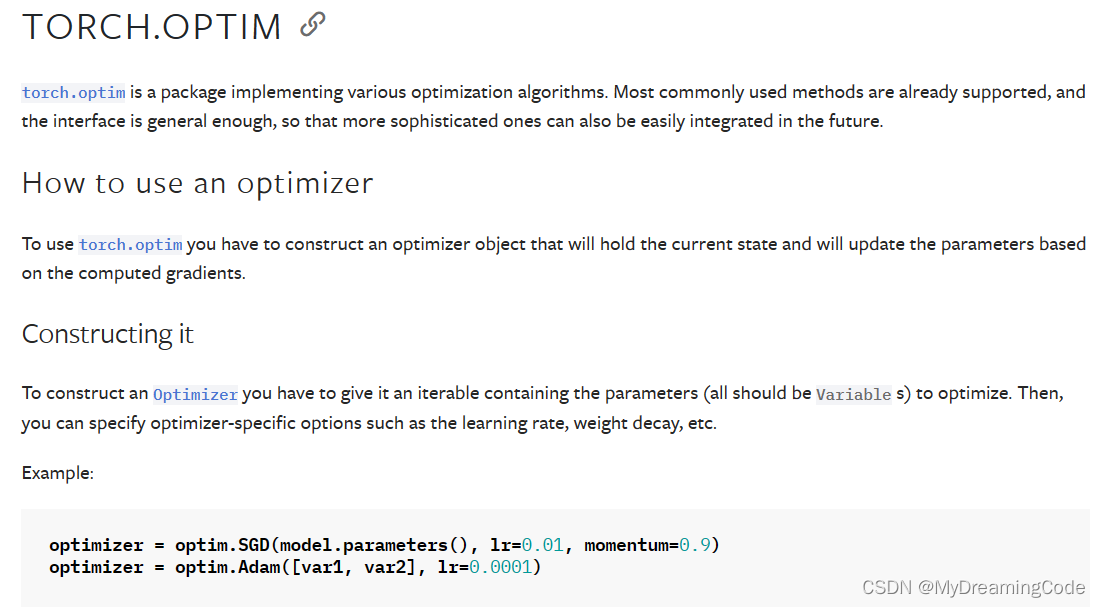

目的:优化器可以将神经网络中的参数根据损失函数和反向传播来进行优化,以得到最佳的参数值,让模型预测的更准确。

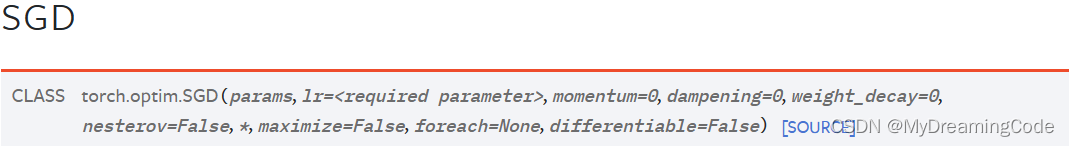

1. SGD

import torch

import torchvision

from torch import nn

from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset, batch_size=1)

class MyModule(nn.Module):

def __init__(self):

super(MyModule, self).__init__()

self.model1 = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self, x):

x = self.model1(x)

return x

loss = nn.CrossEntropyLoss()

myModule1 = MyModule()

# 1.定义一个优化器

optim = torch.optim.SGD(myModule1.parameters(), lr=0.01)

for data in dataloader:

imgs, targets = data

outputs = myModule1(imgs)

res_loss = loss(outputs, targets)

# 2.梯度清零

optim.zero_grad()

# 3.反向传播,求出每个参数的梯度

res_loss.backward()

# 4.对参数进行调优

optim.step()

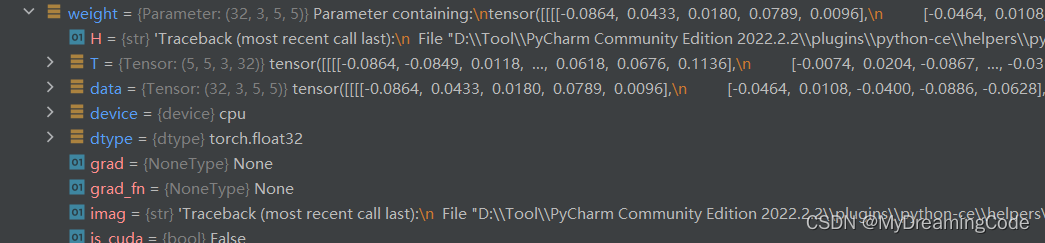

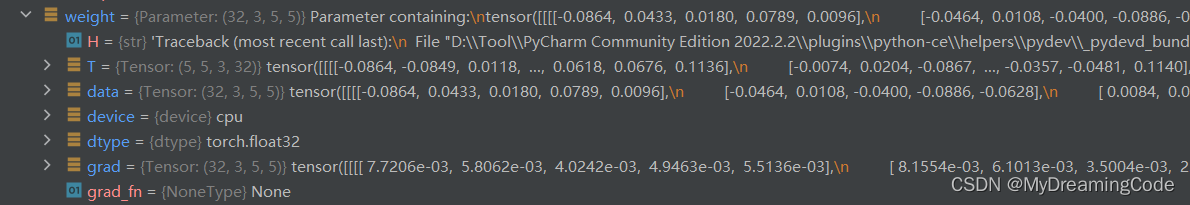

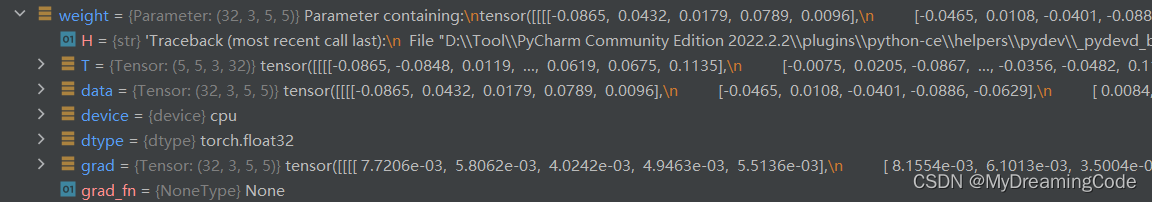

a. 梯度清零

b. 反向传播(此时grad中就有数了)

c. 优化参数(在这里变化有些小,但是有变化)

nn_loss_network.py

import torch

import torchvision

from torch import nn

from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor(),

download=True)

dataloader = DataLoader(dataset, batch_size=1)

class MyModule(nn.Module):

def __init__(self):

super(MyModule, self).__init__()

self.model1 = Sequential(

Conv2d(3, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 32, 5, padding=2),

MaxPool2d(2),

Conv2d(32, 64, 5, padding=2),

MaxPool2d(2),

Flatten(),

Linear(1024, 64),

Linear(64, 10)

)

def forward(self, x):

x = self.model1(x)

return x

loss = nn.CrossEntropyLoss()

myModule1 = MyModule()

# 1.定义一个优化器

optim = torch.optim.SGD(myModule1.parameters(), lr=0.01)

for epoch in range(20):

running_loss = 0.0

for data in dataloader:

imgs, targets = data

outputs = myModule1(imgs)

res_loss = loss(outputs, targets)

# 2.梯度清零

optim.zero_grad()

# 3.反向传播,求出每个参数的梯度

res_loss.backward()

# 4.对参数进行调优

optim.step()

# 在这一轮学习中,整体的loss求和

running_loss = running_loss + res_loss

print(running_loss)

tensor(18636.9590, grad_fn=<AddBackward0>)

tensor(16148.1221, grad_fn=<AddBackward0>)

tensor(15489.6064, grad_fn=<AddBackward0>)...

2. VGG

weights的使用:

weights=None 表示随机ImageNet初始化;不为None则为具体给定的权值。

model_pretrained.py

import torchvision

vgg16_false = torchvision.models.vgg16(weights=None)

vgg16_true = torchvision.models.vgg16(weights=True)

print(vgg16_true) # 1000个分类

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)Process finished with exit code 0

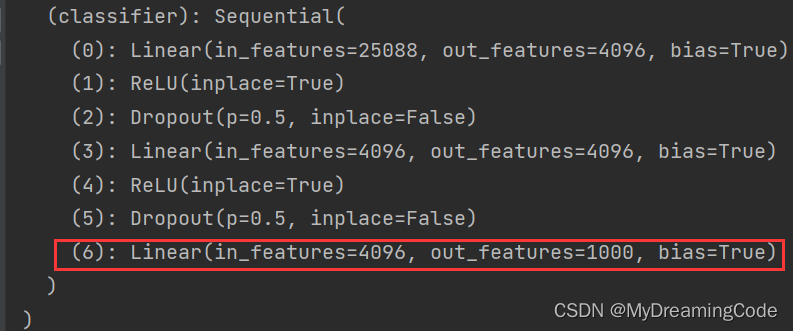

如果用Cifar10(只有10分类的话)+VGG模型,该怎么去修改呢?

方法一:添加一层

# 方法一: 加一层

# vgg16_true.add_module('add_linear', nn.Linear(1000, 10))

# 若想在classifier里加,则:

vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

print(vgg16_true)(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

(add_linear): Linear(in_features=1000, out_features=10, bias=True)

)

)

方法二:修改

vgg16_false.classifier[6] = nn.Linear(4096, 10)

print(vgg16_false)(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=10, bias=True)

)