In "Kubernetes for R&D Engineers: Autoscaling", we ran a stress test with wrk on the local machine. What if we want to run it inside a container instead?

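Building and pushing the wrk image

The Dockerfile is shown below. It simply installs wrk on Ubuntu 22.04.

# Install wrk on top of the Ubuntu 22.04 base image
FROM ubuntu:22.04
RUN apt-get update && apt-get install -y wrk
WORKDIR /

Then build the image, tag it, and push it to the registry at localhost:32000: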

docker build -t wrk:v1 .

docker tag wrk:v1 localhost:32000/wrk:v1

docker push localhost:32000/wrk:v1

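Non-scheduled tasks

As the name suggests, a non-scheduled task is one that does not run on a recurring schedule. In Kubernetes, this is implemented with a Job.

Single-run task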

apiVersion: batch/v1
kind: Job
metadata:
  name: wrk-job
spec:
  template:
    spec:
      containers:
      - name: wrk
        image: localhost:32000/wrk:v1
        command: ["wrk", "-t20", "-c20", "-d30", "http://192.168.137.248:30000"]
      restartPolicy: Never

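The Job creates a Pod to do the work. The template field describes that Pod. In this example, the Pod contains a single container that uses the wrk image built above and runs a 30-second stress test with wrk. After the Job is created, kubectl describe shows its details: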

kubectl describe jobs.batch wrk-job

Name: wrk-job

Namespace: default

Selector: controller-uid=80799172-670b-4769-9078-8da47c3a571b

Labels: controller-uid=80799172-670b-4769-9078-8da47c3a571b

job-name=wrk-job

Annotations: batch.kubernetes.io/job-tracking:

Parallelism: 1

Completions: 1

Completion Mode: NonIndexed

Start Time: Mon, 29 May 2023 17:55:01 +0800

Pods Statuses: 1 Active (1 Ready) / 0 Succeeded / 0 Failed

Pod Template:

Labels: controller-uid=80799172-670b-4769-9078-8da47c3a571b

job-name=wrk-job

Containers:

wrk:

Image: localhost:32000/wrk:v1

Port: <none>

Host Port: <none>

Command:

wrk

-t20

-c20

-d30

http://192.168.137.248:30000

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 7s job-controller Created pod: wrk-job-wxwmc

The Pod created by this Job can be inspected with the following command.

kubectl describe pod

Name: wrk-job-wxwmc

Namespace: default

Priority: 0

Service Account: default

Node: fangliang-virtual-machine/192.168.137.248

Start Time: Mon, 29 May 2023 17:55:01 +0800

Labels: controller-uid=80799172-670b-4769-9078-8da47c3a571b

job-name=wrk-job

Annotations: cni.projectcalico.org/containerID: 1b92441ff39e22b76a3c1b3523068347e2aee8ed070a02260fd51e7d48bcf23b

cni.projectcalico.org/podIP: 10.1.62.165/32

cni.projectcalico.org/podIPs: 10.1.62.165/32

Status: Running

IP: 10.1.62.165

IPs:

IP: 10.1.62.165

Controlled By: Job/wrk-job

Containers:

wrk:

Container ID: containerd://ef5b58f80bf5b091bba87332df0f4fb9dd94b765fe7167e2ce4024b411429d46

Image: localhost:32000/wrk:v1

Image ID: localhost:32000/wrk@sha256:3548119fa498e871ac75ab3cefb901bf5a069349dc4b1b92afab8db4653f6b25

Port: <none>

Host Port: <none>

Command:

wrk

-t20

-c20

-d30

http://192.168.137.248:30000

State: Running

Started: Mon, 29 May 2023 17:55:02 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-x8sss (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-x8sss:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 28s default-scheduler Successfully assigned default/wrk-job-wxwmc to fangliang-virtual-machine

Normal Pulled 28s kubelet Container image "localhost:32000/wrk:v1" already present on machine

Normal Created 28s kubelet Created container wrk

Normal Started 28s kubelet Started container wrk

Once wrk has finished, use the logs command to view its output:

kubectl logs wrk-job-wxwmc

Running 30s test @ http://192.168.137.248:30000

20 threads and 20 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 14.50ms 21.82ms 87.86ms 90.48%

Req/Sec 10.92 8.23 20.00 38.46%

21 requests in 30.05s, 2.91KB read

Socket errors: connect 0, read 21, write 0, timeout 0

Requests/sec: 0.70

Transfer/sec: 99.00B
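When the log is collected from several Pods, it can be convenient to pull the headline numbers out of the wrk report programmatically. The following is a minimal sketch, assuming the report has the same layout as the output above; the function name parse_wrk_summary is made up for this illustration, and the duration pattern only handles values printed in seconds.

```python
import re

# The wrk report shown above, as returned by `kubectl logs`.
WRK_OUTPUT = """\
Running 30s test @ http://192.168.137.248:30000
  20 threads and 20 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    14.50ms   21.82ms  87.86ms   90.48%
    Req/Sec    10.92      8.23    20.00     38.46%
  21 requests in 30.05s, 2.91KB read
  Socket errors: connect 0, read 21, write 0, timeout 0
Requests/sec:      0.70
Transfer/sec:     99.00B
"""


def parse_wrk_summary(text):
    """Extract request count, duration, throughput and socket errors.

    Hypothetical helper: fields that are not found are simply left out.
    """
    summary = {}

    # "21 requests in 30.05s" (only durations printed in seconds are handled)
    match = re.search(r"(\d+) requests in ([\d.]+)s", text)
    if match:
        summary["requests"] = int(match.group(1))
        summary["duration_s"] = float(match.group(2))

    # "Requests/sec:      0.70"
    match = re.search(r"Requests/sec:\s*([\d.]+)", text)
    if match:
        summary["requests_per_sec"] = float(match.group(1))

    # "Socket errors: connect 0, read 21, write 0, timeout 0"
    match = re.search(
        r"Socket errors: connect (\d+), read (\d+), write (\d+), timeout (\d+)",
        text,
    )
    if match:
        keys = ("connect", "read", "write", "timeout")
        summary["socket_errors"] = dict(zip(keys, map(int, match.groups())))

    return summary


if __name__ == "__main__":
    print(parse_wrk_summary(WRK_OUTPUT))
```

In this particular run the number of read errors equals the number of requests, which is worth checking before reading too much into the throughput figure.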

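Note that the Job and the Pod created from the manifest above are not deleted automatically when the run finishes; the container stays in the Terminated state. The following command shows that the Pod's status has changed.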

kubectl describe pod wrk-job-wxwmc

Name: wrk-job-wxwmc

Namespace: default

Priority: 0

Service Account: default

Node: fangliang-virtual-machine/192.168.137.248

Start Time: Mon, 29 May 2023 17:55:01 +0800

Labels: controller-uid=80799172-670b-4769-9078-8da47c3a571b

job-name=wrk-job

Annotations: cni.projectcalico.org/containerID: 1b92441ff39e22b76a3c1b3523068347e2aee8ed070a02260fd51e7d48bcf23b

cni.projectcalico.org/podIP:

cni.projectcalico.org/podIPs:

Status: Succeeded

IP: 10.1.62.165

IPs:

IP: 10.1.62.165

Controlled By: Job/wrk-job

Containers:

wrk:

Container ID: containerd://ef5b58f80bf5b091bba87332df0f4fb9dd94b765fe7167e2ce4024b411429d46

Image: localhost:32000/wrk:v1

Image ID: localhost:32000/wrk@sha256:3548119fa498e871ac75ab3cefb901bf5a069349dc4b1b92afab8db4653f6b25

Port: <none>

Host Port: <none>

Command:

wrk

-t20

-c20

-d30

http://192.168.137.248:30000

State: Terminated

Reason: Completed

Exit Code: 0

Started: Mon, 29 May 2023 17:55:02 +0800

Finished: Mon, 29 May 2023 17:55:32 +0800

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-x8sss (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-x8sss:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m13s default-scheduler Successfully assigned default/wrk-job-wxwmc to fangliang-virtual-machine

Normal Pulled 3m12s kubelet Container image "localhost:32000/wrk:v1" already present on machine

Normal Created 3m12s kubelet Created container wrk

Normal Started 3m12s kubelet Started container wrk

Deleting the Job manually

Run the following command:

kubectl delete jobs.batch wrk-job

job.batch "wrk-job" deleted

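Automatic deletion

If we do not need to inspect the output of the Pod created by the Job, the Job can be deleted automatically when it finishes, with no manual cleanup. To do this, add ttlSecondsAfterFinished: 0 to the manifest. It means the Job, together with its Pods, becomes eligible for deletion as soon as it reaches the Complete or Failed state.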

apiVersion: batch/v1
kind: Job
metadata:
  name: wrk-job
spec:
  ttlSecondsAfterFinished: 0
  template:
    spec:
      containers:
      - name: wrk
        image: localhost:32000/wrk:v1
        command: ["wrk", "-t20", "-c20", "-d30", "http://192.168.137.248:30000"]
      restartPolicy: Never
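If the TTL needs to be switched on and off between runs, the manifest can also be generated instead of edited by hand. The sketch below is one possible approach, not part of the original setup: build_wrk_job is a hypothetical helper that assembles the same Job as a Python dict and prints it as JSON, which kubectl accepts in place of YAML.

```python
import json


def build_wrk_job(name="wrk-job", ttl_seconds=None):
    """Return the wrk Job manifest from this article as a dict.

    Hypothetical helper. ttl_seconds=None leaves ttlSecondsAfterFinished
    out, so the finished Job is kept until it is deleted manually.
    """
    spec = {
        "template": {
            "spec": {
                "containers": [
                    {
                        "name": "wrk",
                        "image": "localhost:32000/wrk:v1",
                        "command": [
                            "wrk", "-t20", "-c20", "-d30",
                            "http://192.168.137.248:30000",
                        ],
                    }
                ],
                "restartPolicy": "Never",
            }
        }
    }
    if ttl_seconds is not None:
        # 0 means: eligible for deletion as soon as the Job finishes.
        spec["ttlSecondsAfterFinished"] = ttl_seconds

    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    print(json.dumps(build_wrk_job(ttl_seconds=0), indent=2))
```

Assuming the script is saved as gen_job.py (an arbitrary name), its output can be piped straight into kubectl with `python gen_job.py | kubectl create -f -`.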

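Multi-run task

Simply add the spec.completions field to the manifest above. To make the Pod creation process easier to observe, I removed ttlSecondsAfterFinished, the field that deletes the Job as soon as it finishes.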

apiVersion: batch/v1
kind: Job
metadata:
  name: wrk-job
spec:
  completions: 3
  template:
    spec:
      containers:
      - name: wrk
        image: localhost:32000/wrk:v1
        command: ["wrk", "-t20", "-c20", "-d30", "http://192.168.137.248:30000"]
      restartPolicy: Never

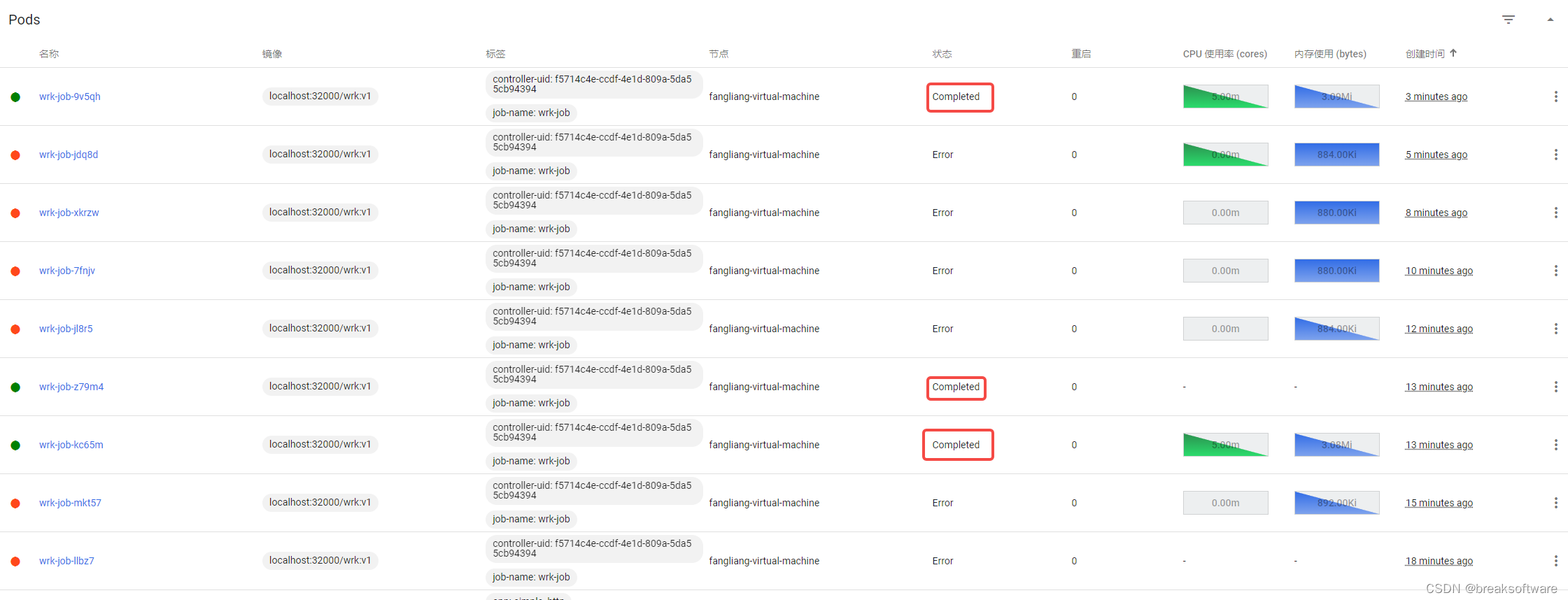

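This manifest creates Pods one at a time: because spec.parallelism is not set (it defaults to 1), the next Pod is created only after the previous one has completed, until 3 Pods have completed.

Create the Job with the following command:

kubectl create -f wrk_job.yaml

As the dashboard shows, only Pods that reach the Completed state increment the completions count.

Without the dashboard, the same progress can be watched with the following command: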

kubectl get jobs.batch wrk-job --watch

NAME COMPLETIONS DURATION AGE

wrk-job 0/3 53s 53s

wrk-job 0/3 2m13s 2m13s

wrk-job 0/3 2m14s 2m14s

wrk-job 0/3 2m14s 2m14s

wrk-job 0/3 2m15s 2m15s

wrk-job 0/3 4m27s 4m27s

wrk-job 0/3 4m28s 4m28s

wrk-job 0/3 4m28s 4m28s

wrk-job 0/3 4m29s 4m29s

wrk-job 0/3 5m 5m

wrk-job 0/3 5m1s 5m1s

wrk-job 1/3 5m1s 5m1s

wrk-job 1/3 5m2s 5m2s

wrk-job 1/3 5m33s 5m33s

wrk-job 1/3 5m34s 5m34s

wrk-job 2/3 5m34s 5m34s

wrk-job 2/3 5m35s 5m35s

wrk-job 2/3 7m47s 7m47s

wrk-job 2/3 7m48s 7m48s

wrk-job 2/3 7m48s 7m48s

wrk-job 2/3 7m49s 7m49s

wrk-job 2/3 10m 10m

wrk-job 2/3 10m 10m

wrk-job 2/3 10m 10m

wrk-job 2/3 10m 10m

wrk-job 2/3 12m 12m

wrk-job 2/3 12m 12m

wrk-job 2/3 12m 12m

wrk-job 2/3 12m 12m

wrk-job 2/3 14m 14m

wrk-job 2/3 14m 14m

wrk-job 2/3 14m 14m

wrk-job 2/3 14m 14m

wrk-job 2/3 14m 14m

wrk-job 2/3 15m 15m

wrk-job 3/3 15m 15m

Parallel Pods

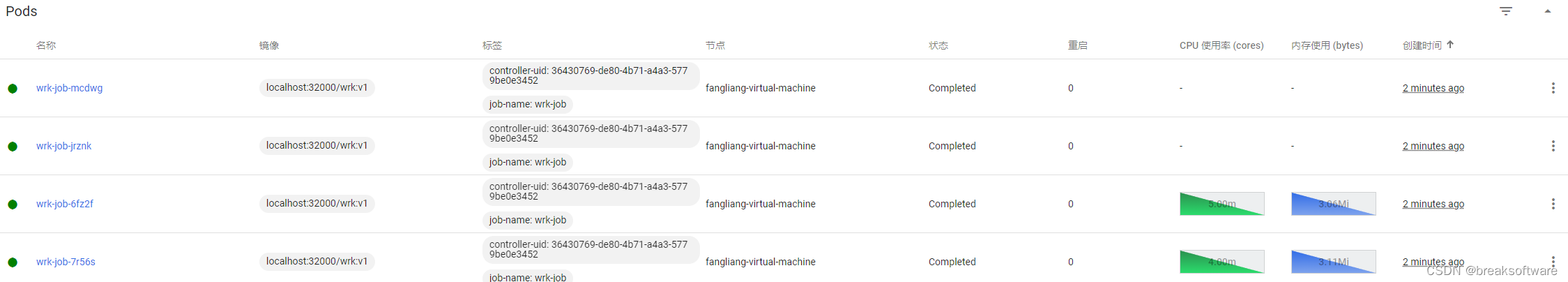

If a single Pod does not generate enough load, we can run several Pods at the same time by setting the spec.parallelism field.

apiVersion: batch/v1
kind: Job
metadata:
  name: wrk-job
spec:
  completions: 4
  parallelism: 2
  template:
    spec:
      containers:
      - name: wrk
        image: localhost:32000/wrk:v1
        command: ["wrk", "-t20", "-c20", "-d30", "http://192.168.137.248:30000"]
      restartPolicy: Never

This manifest says that 4 Pods must complete successfully (completions) and that at most 2 Pods run in parallel (parallelism).
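The interaction between the two fields can be made concrete with a little arithmetic. The sketch below is a rough lower-bound estimate, under the assumptions that every Pod succeeds on its first attempt and runs for about the same time (30 seconds here, from wrk's -d30), and that scheduling and container start-up cost nothing. The function names are invented for this illustration.

```python
import math


def min_waves(completions, parallelism=1):
    """Smallest number of back-to-back batches of Pods needed.

    With equal Pod run times, at most `parallelism` Pods finish per batch,
    so ceil(completions / parallelism) batches are required.
    """
    if completions < 1 or parallelism < 1:
        raise ValueError("completions and parallelism must be >= 1")
    return math.ceil(completions / parallelism)


def min_duration_seconds(completions, parallelism=1, pod_seconds=30):
    """Lower bound on the Job duration, ignoring scheduling overhead."""
    return min_waves(completions, parallelism) * pod_seconds


if __name__ == "__main__":
    # completions: 4, parallelism: 2, as in the manifest above
    print(min_waves(4, 2), min_duration_seconds(4, 2))
    # completions: 3 with the default parallelism of 1
    print(min_waves(3), min_duration_seconds(3))
```

For completions: 4 and parallelism: 2 this gives two batches and at least 60 seconds, which is in line with the roughly 67 seconds reported by the watch output in this section.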

Without the dashboard, the progress can be watched with the following command.

kubectl get jobs.batch wrk-job --watch

NAME COMPLETIONS DURATION AGE

wrk-job 0/4 14s 14s

wrk-job 0/4 32s 32s

wrk-job 0/4 33s 33s

wrk-job 2/4 34s 34s

wrk-job 2/4 36s 36s

wrk-job 2/4 66s 66s

wrk-job 2/4 68s 68s

wrk-job 4/4 67s 68s
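The watch output repeats many rows in which nothing changes. To see only how the completion count advances, the COMPLETIONS column can be reduced to its distinct steps. This is a small sketch, assuming the whitespace-separated layout shown above; completion_steps is a name chosen for this example.

```python
# Output of `kubectl get jobs.batch wrk-job --watch`, copied from above.
WATCH_OUTPUT = """\
NAME      COMPLETIONS   DURATION   AGE
wrk-job   0/4           14s        14s
wrk-job   0/4           32s        32s
wrk-job   0/4           33s        33s
wrk-job   2/4           34s        34s
wrk-job   2/4           36s        36s
wrk-job   2/4           66s        66s
wrk-job   2/4           68s        68s
wrk-job   4/4           67s        68s
"""


def completion_steps(watch_output):
    """Return the successive values of the succeeded-Pod count.

    Consecutive rows with the same count are collapsed into one entry.
    """
    steps = []
    for line in watch_output.splitlines():
        fields = line.split()
        # Skip the header row and anything without a "done/total" column.
        if len(fields) < 2 or "/" not in fields[1]:
            continue
        done = int(fields[1].split("/")[0])
        if not steps or steps[-1] != done:
            steps.append(done)
    return steps


if __name__ == "__main__":
    print(completion_steps(WATCH_OUTPUT))
```

For the run above, the count moves from 0 to 2 to 4, that is, two Pods finish at a time, as expected with parallelism: 2.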

References

- https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/job/