论文:General Instance Distillation for Object Detection

论文地址:https://arxiv.org/pdf/2103.02340.pdf![]() https://arxiv.org/pdf/2103.02340.pdf

https://arxiv.org/pdf/2103.02340.pdf

摘要

In recent years, knowledge distillation has been proved to be an effective solution for model compression. This approach can make lightweight student models acquire the knowledge extracted from cumbersome teacher models. However, previous distillation methods of detection have weak generalization for different detection frameworks and rely heavily on ground truth (GT), ignoring the valuable relation information between instances. Thus, we propose a novel distillation method for detection tasks based on discriminative instances without considering the positive or negative distinguished by GT, which is called general instance distillation (GID). Our approach contains a general instance selection module (GISM) to make full use offeature-based, relation-based and response-based knowledge for distillation. Extensive results demonstrate that the student model achieves significant AP improvement and even outperforms the teacher in various detection frameworks. Specifically, RetinaNet with ResNet-50 achieves 39.1% in mAP with GID on COCO dataset, which surpasses the baseline 36.2% by 2.9%, and even better than the ResNet-101 based teacher model with 38.1% AP.

近年来,知识蒸馏被证明是一种有效的解决模型压缩的方法。这种方法可以使轻量级的学生模型获得从繁琐的教师模型中提取的知识。然而,以前的蒸馏检测方法具有较弱的推广不同的检测框架,严重依赖地面真相(GT),忽略了有价值的实例之间的关系信息。因此,我们提出了一种新的蒸馏方法的检测任务的基础上的歧视性的实例,而不考虑的积极或消极区分GT,这被称为一般的实例蒸馏(GID)。我们的方法包含一个通用的实例选择模块(GISM),以充分利用offeature-based,基于关系和响应的知识蒸馏。大量的结果表明,学生模型实现了显着的AP改进,甚至在各种检测框架中优于教师。具体来说,RetinaNet与ResNet-50在COCO数据集上的GID的mAP中达到39.1%,超过基线36.2% 2.9%,甚至优于基于ResNet-101的教师模型38.1%的AP。

1介绍

In recent years, the accuracy of object detection has made a great progress due to the blossom of deep convolutional neural network (CNN). The deep learning network structure, including a variety of one-stage detection models [19, 23, 24, 25, 17] and two-stage detection models [26, 16, 8, 2], has replaced the traditional object detection and has become the mainstream method in this field. Furthermore, the anchor-free frameworks [13, 5, 32] have also achieved better performance with more simplified ap proaches. However, these high-precision deep learning based models are usually cumbersome, while a lightweight with high performance model is demanded in practical applications. Therefore, how to find a better trade-off between the accuracy and efficiency has become a crucial problem.

近年来,由于深度卷积神经网络(CNN)的开花,目标检测的准确性有了很大的进步。深度学习网络结构,包括各种一阶段检测模型和两阶段检测模型,已经取代了传统的对象检测,成为该领域的主流方法。此外,无锚框架也通过更简单的方法实现了更好的性能。然而,这些基于高精度深度学习的模型通常是繁琐的,而在实际应用中需要一个轻量级的高性能模型。因此,如何在准确性和效率之间找到一个更好的平衡点成为一个至关重要的问题。

Knowledge Distillation (KD), proposed by Hinton et al. [10], is a promising solution for the above problem. Knowledge distillation is to transfer the knowledge of large model to small model, thereby improving the performance of the small model and achieving the purpose of model compression. At present, the typical forms of knowledge can be divided into three categories [7], response-based knowledge [10, 22], feature-based knowledge [27, 35, 9] and relationbased knowledge [22, 20, 31, 33, 15]. However, most of the distillation methods are mainly designed for multi-class classification problems. Directly migrating the classification specific distillation method to the detection model is less effective, because of the extremely unbalanced ratio of positive and negative instances in the detection task. Some distillation frameworks designed for detection tasks cope with this problem and achieve impressive results, e.g. Li et al. [14] address the problem by distilling the positive and negative instances in a certain proportion sampled by RPN, and Wang et al. [34] further propose to only distill the near ground truth area. Nevertheless, the ratio between positive and negative instances for distillation needs to be meticulously designed, and distilling only GT-related area may ignore the potential informative area in the background. Moreover, current detection distillation methods cannot work well in multi detection frameworks simultaneously, e.g. two-stage, anchor-free methods. Therefore, we hope to design a general distillation method for various detection frameworks to use as much knowledge as possible effectively without concerning the positive or negative.

知识蒸馏(KD),由欣顿等人提出。是解决上述问题的一个有希望的解决方案。知识蒸馏是将大模型中的知识转移到小模型中,从而提高小模型的性能,达到模型压缩的目的。目前,知识的典型形式可以分为三类,基于响应的知识,基于特征的知识和基于关系的知识。然而,大多数蒸馏方法主要是针对多类分类问题设计的。直接将分类指定蒸馏方法迁移到检测模型的效率较低,因为检测任务中阳性和阴性实例的比例极不平衡。一些为检测任务设计的蒸馏框架科普这个问题并取得了令人印象深刻的结果,例如。Li等人通过以RPN采样的一定比例提取正面和负面实例来解决这个问题,Wang等人进一步提出仅提取近地面实况区域。然而,需要精心设计用于提取的正实例和负实例之间的比率,并且仅提取GT相关区域可能忽略背景中的潜在信息区域。此外,当前的检测蒸馏方法不能同时在多个检测框架中很好地工作,例如:两阶段无锚方法。因此,我们希望为各种检测框架设计一种通用的蒸馏方法,以有效地使用尽可能多的知识,而不考虑积极或消极的。

Towards this goal, we propose a distillation method based on discriminative instances, utilizing response-based knowledge, feature-based knowledge as well as relationbased knowledge, as shown in Fig 1. There are several advantages: (i) We can model the relational knowledge between instances in one image for distillation. Hu et al. [11] demonstrates the effectiveness of relational information on detection tasks. However, the relation-based knowledge distillation in object detection has not been explored yet. (ii) We avoid manually setting the proportion of the positive and negative areas or selecting only the GT-related areas for distillation. Though GT-related areas are almost informative, the extremely hard and simple instances may be useless, and even some informative patches from the background can be useful for students to learn the generalization of teachers. Besides, we find that the automatic selection of some discriminative instances between the student and teacher for distillation can make knowledge transferring more effective. Those discriminative instances are called general instances (GIs), since our method does not care about the proportion between positive and negative instances, nor does it rely on GT labels. (iii) Our methods have robust generalization for various detection frameworks. GIs are calculated upon the output from student and teacher model without relying on certain modules from a specific detector or some key characteristic, such as anchor, from a particular detection framework.

为了这个目标,我们提出了一种基于判别实例的蒸馏方法,利用基于响应的知识,基于特征的知识以及基于关系的知识,如图1所示。有几个优点:

(1)我们可以对一个图像中的实例之间的关系知识进行建模以进行提炼。Hu等人证明了关系信息对检测任务的有效性。然而,基于关系的知识提取在目标检测中的研究还没有得到深入的研究。

(2)我们避免手动设置正区域和负区域的比例或仅选择GT相关区域进行蒸馏。虽然与GT相关的领域几乎是信息量大的,但极其困难和简单的例子可能是无用的,甚至一些信息补丁的背景可以帮助学生学习教师的概括。此外,我们发现学生和教师之间自动选择一些区分实例进行提炼,可以使知识传递更有效。这些判别实例被称为一般实例(GI),因为我们的方法不关心阳性和阴性实例之间的比例,也不依赖于GT标签。

(3)我们的方法具有强大的泛化能力,各种检测框架。GI是根据来自学生和教师模型的输出来计算的,而不依赖于来自特定检测器的某些模块或来自特定检测框架的一些关键特性,例如锚。

综上所述,本文做出了以下贡献:

- 定义一般实例(GI)作为蒸馏目标,可以有效提高检测模型的蒸馏效果。(Define general instance (GI) as the distillation target, which can effectively improve the distillation effect of

the detection model.) - 在GI的基础上,首先引入基于关系的知识,对检测任务进行提炼,并将其与基于响应和基于特征的知识相结合,使学生超越教师。(Based on GI, we first introduce the relation-based

knowledge for distillation on detection tasks and inte-grate it with response-based and feature-based knowl-edge, which makes student surpass the teacher.) - 我们在MSCOCO和PASCAL VOC数据集上验证了我们的方法的有效性,包括一阶段,两阶段和无锚方法,实现了最先进的性能。(We verify the effectiveness of our method on the MSCOCO [18] and PASCAL VOC [6] datasets, including one-stage, two-stage and anchor-free methods, achieving state-of-the-art performance.)

2相关工作

2.1目标检测

The current mainstream object detection algorithms are roughly divided into two-stage and one-stage detectors. Two-stage methods [16, 8, 2] represented by Faster R-CNN [26] maintain the highest accuracy in the detection field. These methods utilize region proposal network (RPN) and refinement procedure of classification and location to obtain better performance. However, high demands for lower latency bring one-stage detectors [19, 23] under the spotlight, which achieve classification and location of targets through the feature map directly.

目前主流的目标检测算法大致分为两阶段和一阶段检测器。以Faster R-CNN 为代表的两阶段方法在检测领域保持了最高的准确性。这些方法利用区域建议网络(RPN)和分类和定位的细化过程,以获得更好的性能。然而,对较低延迟的高需求使一级检测器成为焦点,其直接通过特征图实现目标的分类和定位。

In recent years, another criterion divides detection algorithm into anchor-based and anchor-free methods. Anchorbased detectors such as [24, 17, 19] solve object detection tasks with the help of anchor boxes, which can be viewed as pre-defined sliding windows or proposals. Nevertheless, all anchor-based methods need to be meticulously designed and calculate a large number of anchor boxes which takes much computation. To avoid tunning hyper-parameters and calculation related to anchor boxes, anchor-free methods [23, 13, 5, 32] predict several key points of target, such as center and distance to boundaries, reach a better performance with less cost.

近年来,另一种标准将检测算法分为基于锚点的方法和无锚点的方法。基于锚点的检测器,如,在锚框的帮助下解决了对象检测任务,锚框可以被视为预定义的滑动窗口或建议。然而,所有基于锚的方法都需要精心设计和计算大量的锚箱,这需要大量的计算。为了避免调整超参数和与锚框相关的计算,无锚方法预测目标的几个关键点,例如中心和到边界的距离,以更少的成本达到更好的性能。

2.2知识蒸馏

Knowledge distillation is a kind of model compression and acceleration approach which can effectively improve the performance of small models with guiding of teacher models. In knowledge distillation, knowledge takes many forms, e.g. the soft targets of the output layer [10], the intermediate feature map [27], the distribution of the intermediate feature [12], the activation status of each neuron [9], the mutual information of intermediate feature [1], the transformation of the intermediate feature [35] and the instance relationship [22, 20, 31, 33]. Those knowledge for distillation can be classified into the following categories [7]: response-based [10], feature-based [27, 12, 9, 1, 35], and relation-based [22, 20, 31, 33].

知识提炼是一种模型压缩和加速方法,在教师模型的指导下,可以有效地提高小模型的性能。在知识蒸馏中,知识有多种形式,例如:输出层软目标、中间特征图、中间特征分布、各神经元激活状态、中间特征互信息、中间特征变换和实例关系。这些蒸馏知识可以分为以下几类:基于响应,基于特征和基于关系。

Recently, there are some works applying knowledge distillation to object detection tasks. Unlike the classification tasks, the distillation losses in detection tasks will encounter the extreme unbalance between positive and negative instances. Chen et al. [3] first deals with this problem by underweighting the background distillation loss in the classification head while remaining imitating the full feature map in the backbone. Li et al. [14] designs a distillation framework for two-stage detectors, applying the L2 distilla tion loss to the features sampled by RPN of student model, which consists of randomly sampled negative and positive proposals discriminated by ground truth (GT) labels in a certain proportion. Wang et al. [34] proposes a fine-grained feature imitation for anchor-based detectors, distilling the near objects regions which are calculated by the intersection between GT boxes and anchors generated from detectors. That is to say, the background areas will hardly be distilled even if it may contain several information-rich areas. Similar to Wang et al. [34], Sun et al. [30] only distilling the GT-related region both on feature map and detector head.

最近,有一些工作将知识提炼应用于目标检测任务。与分类任务不同,检测任务中的蒸馏损失将遇到正负实例之间的极端不平衡。Chen等人首先通过降低分类头中的背景蒸馏损失的权重,同时保持模仿主干中的完整特征图来解决这个问题。Li等人设计了一个两阶段检测器的蒸馏框架,将L2蒸馏损失应用于学生模型的RPN采样的特征,该特征由随机采样的负和正建议组成,由地面真值(GT)标签以一定比例区分。Wang等人提出了一种基于锚点的检测器的细粒度特征模仿,提取通过GT盒和检测器生成的锚点之间的交集计算的近物体区域。也就是说,即使背景区域可能包含多个信息丰富的区域,也很难提取背景区域。类似于Wang et al.,Sun et al.仅在特征图和探测器头上提取GT相关区域。

In summary, the previous distillation framework for detection tasks all manually set the ratio between distilled positive and negative instances distinguished by the GT labels to cope with the disproportion of foreground and background area in detection tasks. Thus, the main difference between our method and the previous works can be summarized as follows: (i) Our method does not rely on GT labels, nor does it care about the proportion between positive and negative instances selected for distillation. It is the information gap between student and teacher that guides the model to choose the discriminative patches for imitation. (ii) None of the previous methods take advantage of the relation-based knowledge for distillation. However, it is widely acknowledged that the relation between objects contains tremendous information even within one single image. Thus, based on our selected discriminative patches, we extract the relation-based knowledge among them for distillation, achieving further performance gain.

综上所述,以前的检测任务的提取框架都是手动设置由GT标签区分的提取的正实例和负实例之间的比率,以科普检测任务中前景和背景区域的不均衡。因此,我们的方法和以前的工作之间的主要区别可以总结如下:

- 我们的方法不依赖于GT标签,也不关心选择用于蒸馏的阳性和阴性实例之间的比例。学生和教师之间的信息差引导模型选择用于模仿的判别块。

- 以前的方法都没有利用基于关系的知识进行蒸馏。然而,人们普遍认为,即使在一个单一的图像中,对象之间的关系也包含了大量的信息。因此,基于我们选择的判别补丁,我们提取其中的关系为基础的知识蒸馏,实现进一步的性能增益。

3一般实例蒸馏

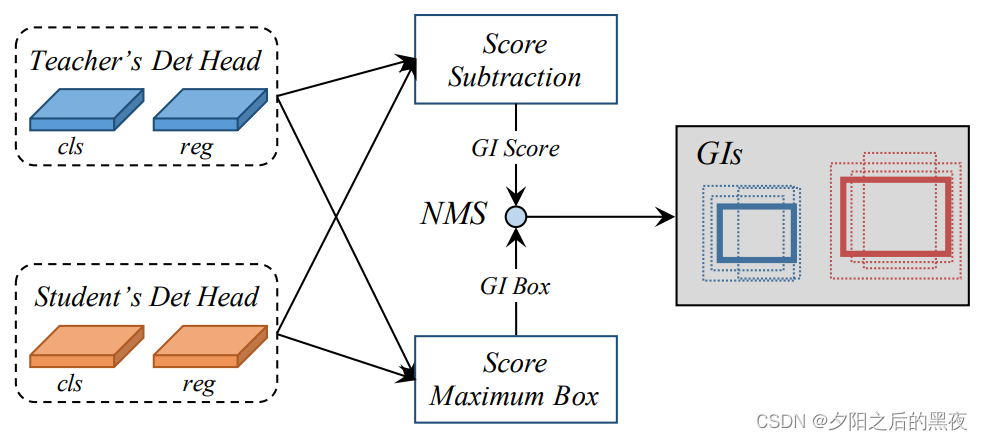

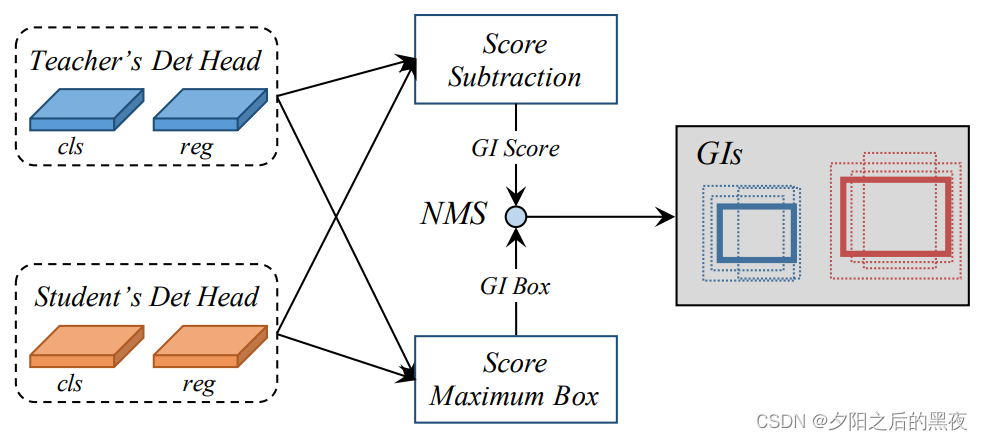

Previous work [34] proposed that the feature regions near objects have considerable information which is useful for knowledge distillation. However, we find that not only the feature regions near objects but also the discriminative patches even from the background area have meaningful knowledge. Base on this finding, we design the general instance selection module (GISM), as shown in Fig 2. The module utilizes the predictions from both teacher and student model to select the key instances for distillation.

以前的工作提出,物体附近的特征区域具有相当多的信息,这对于知识蒸馏是有用的。然而,我们发现,不仅特征区域附近的对象,但也歧视补丁,甚至从背景区域有意义的知识。基于这一发现,我们设计了通用实例选择模块(GISM),如图2所示。该模块利用来自教师和学生模型的预测来选择用于蒸馏的关键实例。

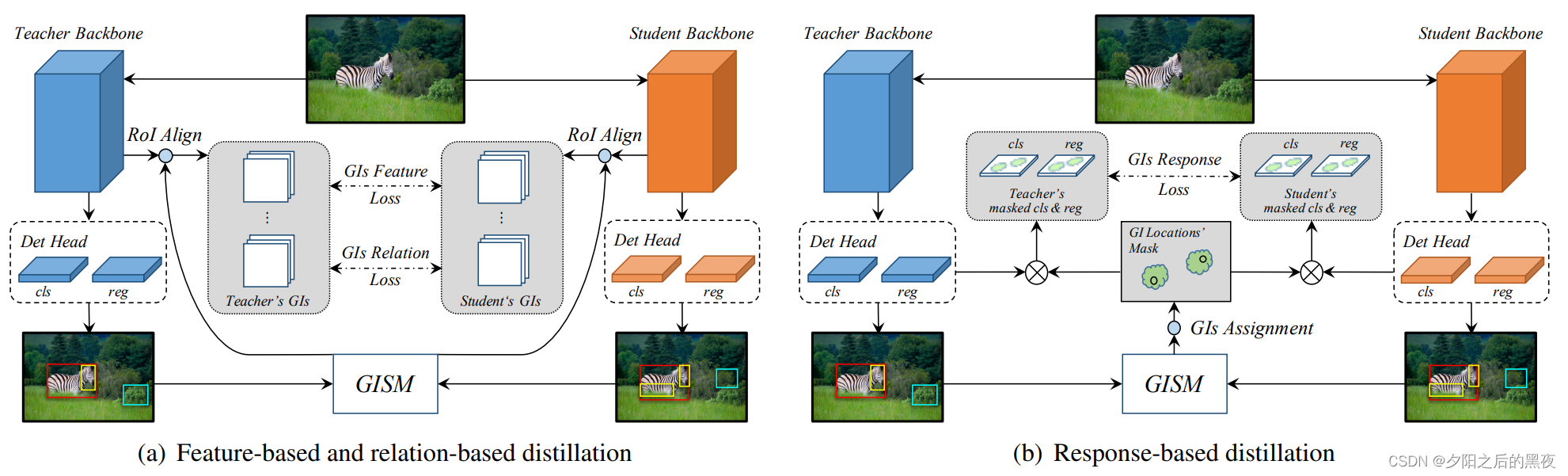

Furthermore, to make better use of the information provided by the teacher, we extract and take advantage of feature-based, relation-based and the response-based knowledge for distillation, as shown in Fig 3. The experimental results show that our distillation framework is general for current state-of-the-art detection models.

此外,为了更好地利用教师提供的信息,我们提取并利用基于特征,基于关系和基于响应的知识进行蒸馏,如图3所示。实验结果表明,我们的蒸馏框架是一般的当前国家的最先进的检测模型。

3.1常规实例选择模块

In detection model, predictions indicate the attention patches which are commonly meaningful areas. The difference of such patches between teacher and student model is also closely related to their performance gap. In order to quantify the difference for each instance and then select the discriminative instances for distillation, we propose two indicator: GI score and GI box. Both of them are dynamically calculated during each training step. For saving the computation resources during training, we simply calculate the L1 distance of classification score as GI score and choose box with higher score as GI box. Fig 2 illustrates the procedure of generating GI, and the score and box of which from each predicted instance r is defined as below.

在检测模型中,预测指示通常有意义的区域的注意补丁。教师和学生模型之间的这种补丁的差异也与他们的表现差距密切相关。为了量化每个实例的差异,然后选择用于蒸馏的判别实例,我们提出了两个指标:GI评分和GI箱。在每个训练步骤期间动态地计算它们两者。为了节省训练过程中的计算资源,我们简单地计算分类得分的L1距离作为GI得分,并选择得分较高的框作为GI框。图2示出了生成GI的过程,

并且来自每个预测实例r的GI的得分和框定义如下:

待续......