Base environment: CentOS 7 + CUDA 10.2 + cuDNN 7

GPU: Tesla V100

1 Preparing for the C++ build

Get the code:

git clone -b release/v5.0_tag https://github.com/NVIDIA/FasterTransformer.git

mkdir -p FasterTransformer/build

cd FasterTransformer/build

git submodule init && git submodule update

(1) Install CMake

yum install -y gcc-c++ ncurses-devel openssl-devel

wget https://cmake.org/files/v3.16/cmake-3.16.3.tar.gz

tar xzf cmake-3.16.3.tar.gz

cd cmake-3.16.3

./bootstrap --prefix=/usr/local/cmake-3.16.3

make -j$(nproc)

make install

export PATH=/usr/local/cmake-3.16.3/bin:$PATH

(2) Install MPI

yum install perl

wget https://download.open-mpi.org/release/open-mpi/v4.1/openmpi-4.1.0.tar.gz

tar -xvf openmpi-4.1.0.tar.gz

cd openmpi-4.1.0

./configure --prefix=/usr/local/mpi

make -j8

make install

export PATH=$PATH:/usr/local/mpi/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/mpi/lib

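The exports above take effect immediately in the current shell. To keep them in new shells, append the two export lines to ~/.bashrc and then run: source ~/.bashrc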

If MPI is already installed (here under /opt/mpi), a symbolic link is enough: ln -s /opt/mpi /usr/local/mpi

(3) Install GCC 7.3.1

yum install centos-release-scl

yum install devtoolset-7-gcc devtoolset-7-gcc-c++ devtoolset-7-binutils

scl enable devtoolset-7 bash

gcc --version

2 C++ build

cd FasterTransformer/build (return to the build directory created in step 1; adjust the path to your current location)

cmake -DSM=70 -DCMAKE_BUILD_TYPE=Release -DBUILD_MULTI_GPU=ON ..

make
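The -DSM value has to match the compute capability of the target GPU: 70 corresponds to the Tesla V100 used here. As a minimal sketch, the helper below assembles the same cmake command for a few common GPUs. The `SM_BY_GPU` table and the `cmake_args` function are hypothetical names introduced for illustration; only the cmake flags themselves come from the command above.

```python
# Compute capability (major * 10 + minor) for a few common data-center GPUs.
# Illustrative subset only; extend it for other cards.
SM_BY_GPU = {"V100": 70, "T4": 75, "A100": 80}


def cmake_args(gpu, build_type="Release", multi_gpu=True):
    """Build the cmake command line used above for the given GPU model."""
    sm = SM_BY_GPU[gpu]  # raises KeyError for GPUs missing from the table
    return [
        "cmake",
        f"-DSM={sm}",
        f"-DCMAKE_BUILD_TYPE={build_type}",
        f"-DBUILD_MULTI_GPU={'ON' if multi_gpu else 'OFF'}",
        "..",
    ]


if __name__ == "__main__":
    # Prints the command for the V100 setup described in this post.
    print(" ".join(cmake_args("V100")))
```

For the V100 this reproduces the command shown above; for another card, only the SM value changes.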

3 Running

3.1 Preparing the runtime environment

(1) Install Miniconda

wget https://repo.anaconda.com/miniconda/Miniconda3-py37_23.1.0-1-Linux-x86_64.sh

mkdir -p /opt/miniconda3

bash Miniconda3-py37_23.1.0-1-Linux-x86_64.sh -b -f -p "/opt/miniconda3/"

/opt/miniconda3/bin/conda init

source ~/.bashrc

The installation path used here is /opt/miniconda3/; you can choose a different one.

(2) Install the dependencies

pip install torch==1.10.0+cu102 -f https://download.pytorch.org/whl/cu102/torch_stable.html

pip install -r ../examples/pytorch/requirement.txt

(3) Prepare the models (using Megatron and Hugging Face models as examples)

------ Downloading and converting the Megatron model

wget --content-disposition https://api.ngc.nvidia.com/v2/models/nvidia/megatron_lm_345m/versions/v0.0/zip -O megatron_lm_345m_v0.0.zip

mkdir -p ../models/megatron-models/345m

unzip megatron_lm_345m_v0.0.zip -d ../models/megatron-models/345m

python ../examples/pytorch/gpt/utils/megatron_ckpt_convert.py -head_num 16 -i ../models/megatron-models/345m/release/ -o ../models/megatron-models/c-model/345m/ -t_g 1 -i_g 1

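Parameters: -head_num is the number of attention heads (16 for this 345M model); -i is the input path of the model; -o is the output path for the converted model; -t_g is the number of GPUs used for training; -i_g is the number of GPUs used for inference. The model is converted to FP32 by default; to use an FP16 model, add -weight_data_type fp16.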
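To make the optional FP16 switch concrete, here is a small sketch that assembles the converter command line from its parameters. The function name `megatron_convert_cmd` and its argument names are hypothetical; the script path and flags are the ones used in the command above.

```python
def megatron_convert_cmd(in_dir, out_dir, head_num, train_gpus, infer_gpus, fp16=False):
    """Assemble the megatron_ckpt_convert.py command line as a list of arguments."""
    cmd = [
        "python", "../examples/pytorch/gpt/utils/megatron_ckpt_convert.py",
        "-head_num", str(head_num),
        "-i", in_dir,
        "-o", out_dir,
        "-t_g", str(train_gpus),
        "-i_g", str(infer_gpus),
    ]
    if fp16:
        # Without this flag the weights are converted to FP32.
        cmd += ["-weight_data_type", "fp16"]
    return cmd


if __name__ == "__main__":
    # FP16 variant of the conversion shown above.
    print(" ".join(megatron_convert_cmd(
        "../models/megatron-models/345m/release/",
        "../models/megatron-models/c-model/345m/",
        head_num=16, train_gpus=1, infer_gpus=1, fp16=True,
    )))
```

The resulting list can be passed to `subprocess.run` from the build directory if you prefer to script the conversion.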

------ Downloading and converting the Hugging Face model

mkdir -p ../models/huggingface-models

cd ../models/huggingface-models

git clone https://huggingface.co/gpt2-xl (note: the model is not downloaded completely this way)

Alternatively, download it with the transformers library:

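from transformers import GPT2Tokenizer, GPT2Model

tokenizer = GPT2Tokenizer.from_pretrained('gpt2-xl')

model = GPT2Model.from_pretrained('gpt2-xl')  # downloaded into the local Hugging Face cache

model.save_pretrained('../models/huggingface-models/gpt2_xl')  # assumption: save a local copy where the converter's -i expects it (path relative to FasterTransformer/build)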

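Convert the model (parameters as described for Megatron; the relative paths assume the command is run from FasterTransformer/build)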

python ../examples/pytorch/gpt/utils/huggingface_gpt_convert.py -i ../models/huggingface-models/gpt2_xl/ -o ../models/huggingface-models/c-model/gpt2-xl -i_g 1

3.2 Run

Using the Megatron model as an example

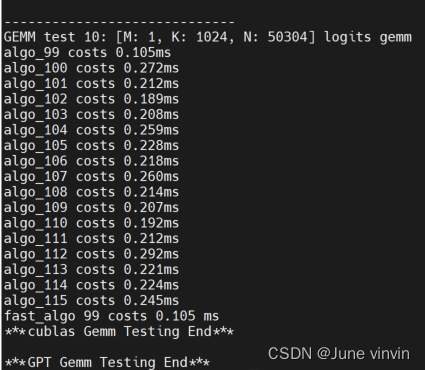

(1) Generate the gemm_config.in file

data_type = 0 (FP32) or 1 (FP16) or 2 (BF16)

Command-line arguments:

./bin/gpt_gemm <batch_size> <beam_width> <max_input_len> <head_number> <size_per_head> <inter_size> <vocab_size> <data_type> <tensor_para_size>

./bin/gpt_gemm 1 1 20 16 64 4096 50304 1 1
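The positional arguments are easy to mix up, so the following sketch builds the gpt_gemm call from named values. `gpt_gemm_cmd` and `DATA_TYPES` are hypothetical helpers, and the default `inter_size = 4 * head_num * size_per_head` is an assumption based on the usual GPT feed-forward width; it happens to give 4096 for the 345M model used here.

```python
# data_type codes as listed above.
DATA_TYPES = {"fp32": 0, "fp16": 1, "bf16": 2}


def gpt_gemm_cmd(batch_size, beam_width, max_input_len, head_num, size_per_head,
                 vocab_size, data_type, tensor_para_size, inter_size=None):
    """Return the ./bin/gpt_gemm command line in the argument order shown above."""
    if inter_size is None:
        # Assumption: feed-forward width is 4x the hidden size (head_num * size_per_head).
        inter_size = 4 * head_num * size_per_head
    values = [batch_size, beam_width, max_input_len, head_num, size_per_head,
              inter_size, vocab_size, DATA_TYPES[data_type], tensor_para_size]
    return ["./bin/gpt_gemm"] + [str(v) for v in values]


if __name__ == "__main__":
    # Reproduces the example invocation for the 345M model in FP16.
    print(" ".join(gpt_gemm_cmd(1, 1, 20, 16, 64, 50304, "fp16", 1)))
```

With these values the helper yields the same nine arguments as the example command above.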

Edit the configuration: vim …/examples/cpp/multi_gpu_gpt/gpt_config.ini

Be sure to update model_name and model_dir, and adjust the other parameters as needed.

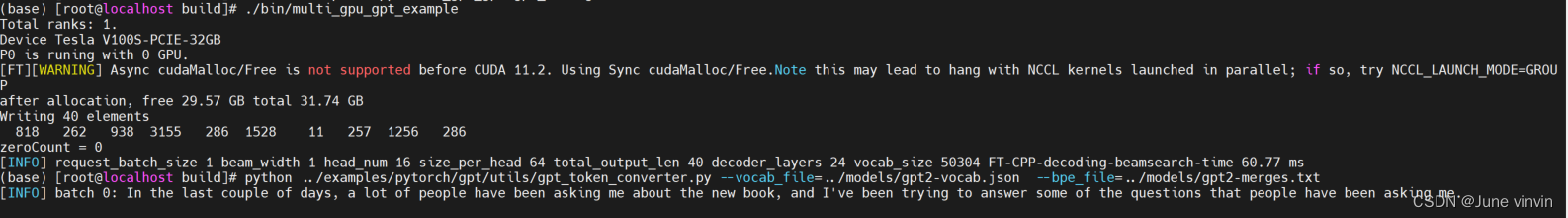

(2) Run GPT in C++

./bin/multi_gpu_gpt_example

Convert the output IDs to tokens

python ../examples/pytorch/gpt/utils/gpt_token_converter.py --vocab_file=../models/gpt2-vocab.json --bpe_file=../models/gpt2-merges.txt

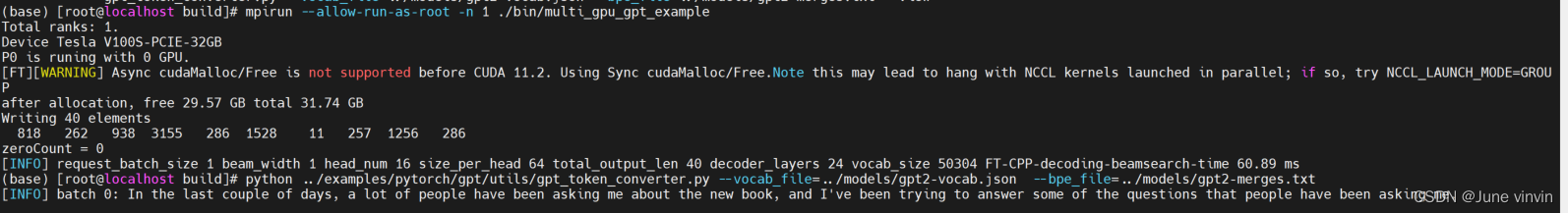

(3) Run with tensor parallelism (TP) and pipeline parallelism (PP)

mpirun --allow-run-as-root -n 1 ./bin/multi_gpu_gpt_example

python ../examples/pytorch/gpt/utils/gpt_token_converter.py --vocab_file=../models/gpt2-vocab.json --bpe_file=../models/gpt2-merges.txt
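With -n 1 the example runs as a single process, so no actual parallelism takes place. As a sketch, assuming the number of MPI processes has to equal the tensor-parallel size times the pipeline-parallel size set in gpt_config.ini, the launch command can be derived as follows. `mpirun_cmd` is a hypothetical helper; the mpirun flags are the ones used above.

```python
def mpirun_cmd(tensor_para_size, pipeline_para_size, allow_root=True):
    """Build the mpirun command for a given TP x PP layout."""
    # Assumption: one MPI process (and one GPU) per TP x PP rank.
    n_procs = tensor_para_size * pipeline_para_size
    cmd = ["mpirun"]
    if allow_root:
        cmd.append("--allow-run-as-root")
    cmd += ["-n", str(n_procs), "./bin/multi_gpu_gpt_example"]
    return cmd


if __name__ == "__main__":
    # TP=1, PP=1 reproduces the single-process command shown above.
    print(" ".join(mpirun_cmd(1, 1)))
    # Example layout for four GPUs: TP=2, PP=2.
    print(" ".join(mpirun_cmd(2, 2)))
```

The model also has to be converted with a matching -i_g value, and gemm_config.in regenerated with the same tensor_para_size, before launching a multi-GPU layout.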