1. Competition information

- Click to view

2. Task analysis

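- Soft-body environments demand a lot of GPU and are complicated to set up, so we choose the rigid-body environments.

- Start from the rigid-body environments of the imitation learning / reinforcement learning track, and consider the unrestricted rigid-body track later.

- Some tasks (e.g. moving a cube to a target position) need an input that the cameras do not provide: the goal position. An RGB-D image can hardly carry it as visual input, because the goal may lie outside the camera's field of view. We therefore choose point clouds.

- A manipulation policy only cares about how the end effector moves, so the actions drive the end effector directly. We use the control mode pd_ee_delta_pose, in which each action specifies the end effector's motion for one step.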
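A minimal sketch of how a 7-D `pd_ee_delta_pose` action for the Panda arm can be assembled. It assumes this layout: 3 position deltas, then 3 rotation deltas (axis-angle), then 1 gripper value, all normalized to [-1, 1]. That layout reflects the ManiSkill2 controller docs as I understand them. `make_ee_delta_action` is a hypothetical helper and is not part of ManiSkill2.

```python
import numpy as np

def make_ee_delta_action(dpos, drot, gripper):
    """Hypothetical helper: build a normalized pd_ee_delta_pose action.

    Assumed layout: [dx, dy, dz, rx, ry, rz, gripper], each in [-1, 1].
    Values outside that range are clipped.
    """
    dpos = np.asarray(dpos, dtype=np.float32).reshape(3)
    drot = np.asarray(drot, dtype=np.float32).reshape(3)
    grip = np.array([gripper], dtype=np.float32)
    action = np.concatenate([dpos, drot, grip])
    return np.clip(action, -1.0, 1.0)

# Small step along +x, +y, +z, no rotation, gripper value 1 (assumed to mean "open").
a = make_ee_delta_action([0.1, 0.1, 0.1], [0, 0, 0], 1)
print(a.shape)  # (7,)
```

The visualization script in 3.3 passes the same vector, `[0.1, 0.1, 0.1, 0, 0, 0, 1]`, to `env.step`.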

3. Demonstration conversion

3.1. Demonstration replay

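- Use the script provided by ManiSkill2, which lets you interactively view what each camera sees. The competition only allows two cameras: the hand camera hand_camera and base_camera, which is mounted diagonally above the arm. Both have a small field of view and suffer from occlusion:

```shell
python tools/replay_trajectory.py --traj-path demos/rigid_body_envs/PickCube-v0/PickCube-v0.h5 --vis
```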

3.2. Control mode conversion

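- The provided demonstrations must first be converted to the target control mode with the ManiSkill2 script. With 10 processes on an i7-7700HQ CPU, this took about five minutes, and the computer was unusable for anything else in the meantime:

```shell
python tools/replay_trajectory.py --traj-path demos/rigid_body_envs/PickCube-v0/PickCube-v0.h5 \
  --save-traj --target-control-mode pd_ee_delta_pose --num-procs 10
```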
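The i7-7700HQ has 4 cores and 8 hardware threads, so 10 worker processes oversubscribe it. That is a likely reason the machine froze. The sketch below builds the same replay command as an argument list and, by default, leaves one core free. `replay_command` is a hypothetical helper. It only uses the flags shown above, and the "all cores but one" default is an assumption you may want to tune.

```python
import os
import shlex

def replay_command(traj_path, control_mode="pd_ee_delta_pose", num_procs=None):
    """Hypothetical helper: argument list for tools/replay_trajectory.py.

    If num_procs is not given, use all CPU cores but one so the machine
    stays responsive during the conversion (an assumption; tune as needed).
    """
    if num_procs is None:
        num_procs = max(1, (os.cpu_count() or 1) - 1)
    return [
        "python", "tools/replay_trajectory.py",
        "--traj-path", traj_path,
        "--save-traj",
        "--target-control-mode", control_mode,
        "--num-procs", str(num_procs),
    ]

cmd = replay_command("demos/rigid_body_envs/PickCube-v0/PickCube-v0.h5", num_procs=6)
print(shlex.join(cmd))
```

To actually run it from the ManiSkill2 root, pass the list to `subprocess.run(cmd, check=True)`.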

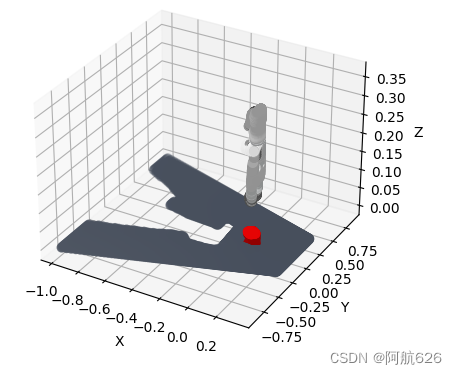

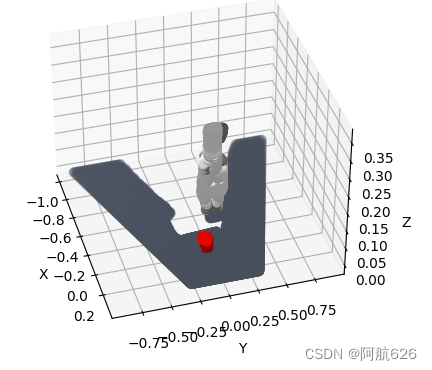

3.3. Point cloud visualization

- Visualize with a matplotlib 3D scatter plot:

```python
import gym
import mani_skill2.envs  # registers the ManiSkill2 environments with gym
import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401, registers the "3d" projection on older matplotlib

# obs_mode can be: pointcloud, rgbd, state_dict, state
env = gym.make("PickCube-v0", obs_mode="pointcloud", control_mode="pd_ee_delta_pose")
env.seed(0)
obs = env.reset()

done = False
i = 0
while not done:
    # pd_ee_delta_pose: 3 position deltas, 3 rotation deltas, 1 gripper command
    action = np.array([0.1, 0.1, 0.1, 0, 0, 0, 1])
    obs, reward, done, info = env.step(action)

    point = np.asarray(obs["pointcloud"]["xyzw"])  # (32768, 4)
    rgb = np.asarray(obs["pointcloud"]["rgb"])      # (32768, 3)
    print(point.shape, rgb.shape)

    i += 1
    if i == 10:
        # xyzw is homogeneous: w == 0 marks points at infinity, so keep w > 0
        mask = point[:, 3] > 0
        fig = plt.figure()
        ax = fig.add_subplot(projection="3d")
        ax.scatter(point[mask, 0], point[mask, 1], point[mask, 2],
                   c=rgb[mask] / 255.0, marker="o", s=40)
        ax.set(xlabel="X", ylabel="Y", zlabel="Z")
        plt.show()

    env.render()  # a display is required to render

env.close()
```

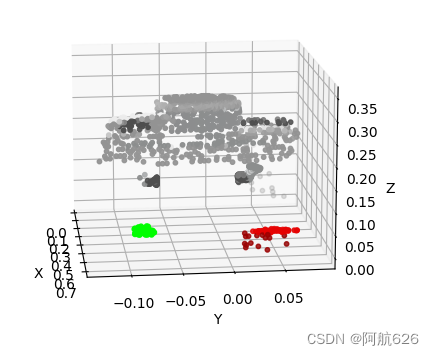

- Result:

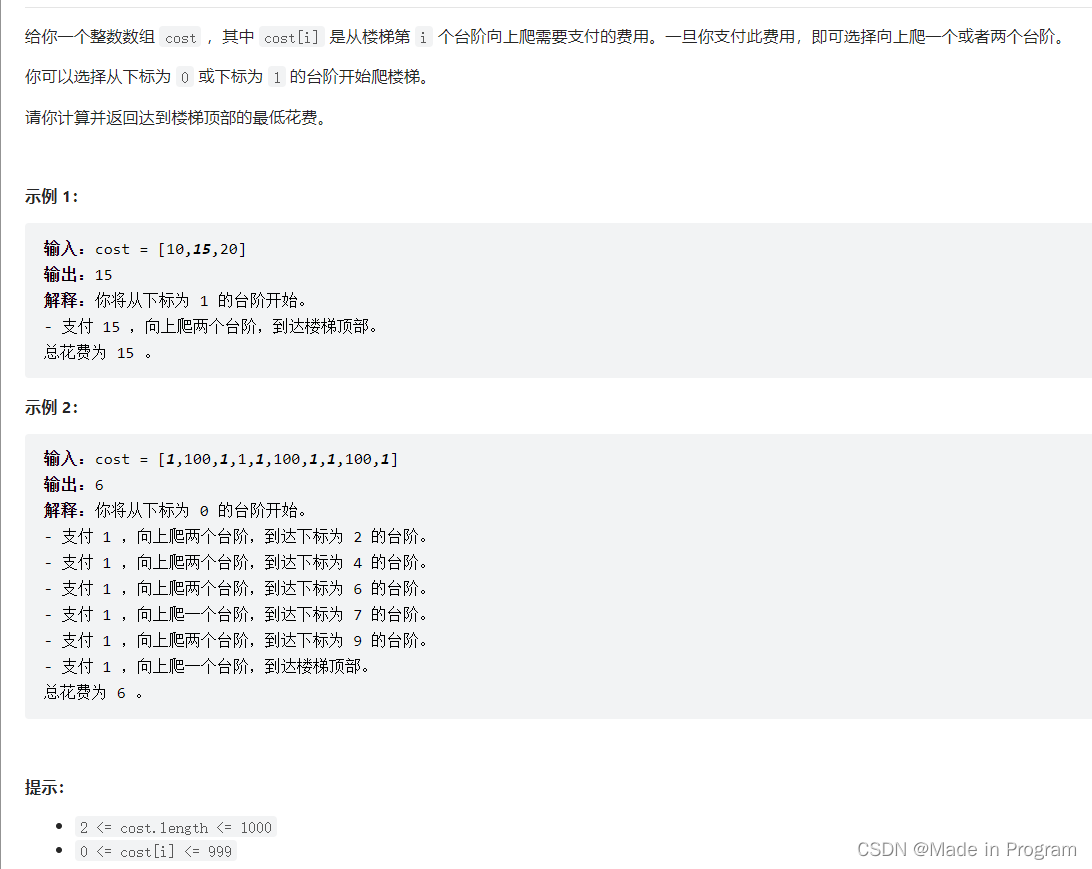
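Scattering all 32768 points with `s=40` is slow and cluttered. A small helper can drop the points at infinity (`w == 0` in the homogeneous `xyzw` array, following the ManiSkill2 observation docs) and then subsample uniformly at random before plotting. Uniform random subsampling is a simple stand-in chosen here. It is not necessarily how maniskill2_learn reduces clouds to `--n-points`, and `prepare_for_plot` is a hypothetical name.

```python
import numpy as np

def prepare_for_plot(xyzw, rgb, max_points=5000, seed=0):
    """Hypothetical helper: drop points with w == 0, then randomly subsample.

    xyzw: (N, 4) array; rgb: (N, 3) array with values in 0-255.
    Returns (xyz, colors) with at most max_points rows; colors are in [0, 1].
    """
    xyzw = np.asarray(xyzw)
    rgb = np.asarray(rgb)
    mask = xyzw[:, 3] > 0                      # keep finite points only
    xyz, colors = xyzw[mask, :3], rgb[mask] / 255.0
    if len(xyz) > max_points:
        rng = np.random.default_rng(seed)
        idx = rng.choice(len(xyz), size=max_points, replace=False)
        xyz, colors = xyz[idx], colors[idx]
    return xyz, colors
```

Usage inside the loop above: `xyz, colors = prepare_for_plot(obs["pointcloud"]["xyzw"], obs["pointcloud"]["rgb"])`, then `ax.scatter(xyz[:, 0], xyz[:, 1], xyz[:, 2], c=colors, s=1)`.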

3.4. Converting the demonstrations to point clouds

- Use the maniskill2_learn script:

```shell
python tools/convert_state.py --env-name PickCube-v0 --num-procs 1 \
  --traj-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.none.pd_ee_delta_pose.h5 \
  --json-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.none.pd_ee_delta_pose.json \
  --output-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.trajectory.none.pd_ee_delta_pose_pcd.h5 \
  --control-mode pd_ee_delta_pose \
  --max-num-traj 6 \
  --obs-mode pointcloud \
  --n-points 1200 \
  --obs-frame base \
  --reward-mode dense \
  --n-goal-points 50 \
  --render
```

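- Parameters:

  - `--env-name`: environment name
  - `--num-procs`: number of processes. More processes convert faster, but starting them takes longer. Here: 1
  - `--traj-name`: path to the demonstration .h5 file
  - `--json-name`: path to the demonstration .json file
  - `--output-name`: path of the converted .h5 output file
  - `--control-mode`: control mode. Here: pd_ee_delta_pose
  - `--max-num-traj`: number of trajectories to convert. A small value finishes quickly and is enough for a quick check. Here: 6
  - `--obs-mode`: observation mode. Here: pointcloud
  - `--n-points`: number of points in the point cloud. Here: 1200
  - `--obs-frame`: reference frame of the observed point cloud. Here: base
  - `--reward-mode`: dense or sparse reward (dense: dense, sparse: sparse)
  - `--n-goal-points`: number of points placed at the goal position. Here: 50
  - `--render`: render during conversion. This slows the conversion down and only works with a single process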
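Because every option is easy to mistype in a long shell command, the options can be kept in a dict and turned into an argument list. The builder below also checks two constraints listed above: the reward mode must be dense or sparse, and `--render` only works with one process. `convert_state_command` is hypothetical, and the default of 1 when `--num-procs` is omitted is an assumption of this sketch, not documented maniskill2_learn behavior.

```python
import shlex

def convert_state_command(options, flags=()):
    """Hypothetical helper: build a tools/convert_state.py argument list.

    options maps option names (without the leading "--") to values; a dict
    cannot hold the same key twice, so every option appears at most once.
    flags lists value-less switches such as "render".
    """
    if options.get("reward-mode", "dense") not in ("dense", "sparse"):
        raise ValueError("--reward-mode must be 'dense' or 'sparse'")
    if "render" in flags and int(options.get("num-procs", 1)) != 1:
        raise ValueError("--render only works with --num-procs 1")
    argv = ["python", "tools/convert_state.py"]
    for name, value in options.items():
        argv += [f"--{name}", str(value)]
    argv += [f"--{flag}" for flag in flags]
    return argv

# Path options (traj-name, json-name, output-name) go into the same dict.
opts = {
    "env-name": "PickCube-v0",
    "num-procs": 1,
    "control-mode": "pd_ee_delta_pose",
    "max-num-traj": 6,
    "obs-mode": "pointcloud",
    "n-points": 1200,
    "obs-frame": "base",
    "reward-mode": "dense",
    "n-goal-points": 50,
}
print(shlex.join(convert_state_command(opts, flags=["render"])))
```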

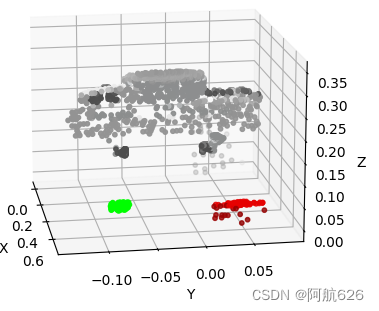

- Point cloud visualization:

```python
import h5py
import numpy as np
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401, registers the "3d" projection on older matplotlib

file = '/home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.trajectory.none.pd_ee_delta_pose_pcd.h5'

with h5py.File(file, "r") as h5file:
    traj = h5file["traj_0"]
    print(list(traj.keys()))

    # Top-level entries of one trajectory; for traj_0 this prints:
    # <HDF5 dataset "dict_str_actions": shape (88, 7), type "<f4">
    # <HDF5 dataset "dict_str_dones": shape (88, 1), type "|b1">
    # <HDF5 dataset "dict_str_episode_dones": shape (88, 1), type "|b1">
    # <HDF5 dataset "dict_str_is_truncated": shape (88, 1), type "|b1">
    # <HDF5 group "/traj_0/dict_str_obs" (5 members)>
    # <HDF5 dataset "dict_str_rewards": shape (88, 1), type "<f4">
    # <HDF5 dataset "dict_str_worker_indices": shape (88, 1), type "<i4">
    for key in traj.keys():
        print(traj[key])

    # Uncomment to inspect every member of the observation group:
    # for key in traj["dict_str_obs"]:
    #     print(traj["dict_str_obs"][key])
    #     print(np.array(traj["dict_str_obs"][key]))

    point = np.asarray(traj["dict_str_obs"]["dict_str_xyz"])  # (T, N, 3)
    rgb = np.asarray(traj["dict_str_obs"]["dict_str_rgb"])    # (T, N, 3)

i = 80  # frame to display
colors = rgb[i].astype(float)
if colors.max() > 1:  # scale to [0, 1] in case colors are stored as 0-255
    colors = colors / 255.0

fig = plt.figure()
ax = fig.add_subplot(projection="3d")
ax.scatter(point[i, :, 0], point[i, :, 1], point[i, :, 2], c=colors, marker=".", s=40)
ax.set(xlabel="X", ylabel="Y", zlabel="Z")
plt.show()
```

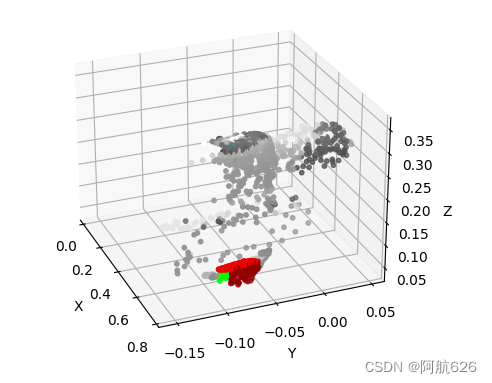

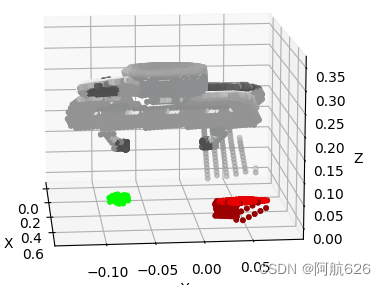

- Result for frames i = 0, i = 10 and i = 80:
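The commented-out loops above explore the file one level at a time. h5py's `Group.visititems` walks every group and dataset recursively, including `traj_N/dict_str_obs`, so the whole layout can be listed in one pass. This is a generic sketch that does not depend on the maniskill2_learn layout. `describe_h5` is a hypothetical name.

```python
import h5py

def describe_h5(path):
    """Hypothetical helper: one line per group/dataset in an HDF5 file."""
    lines = []

    def visit(name, obj):
        # visititems calls this with the path relative to the root and the object
        if isinstance(obj, h5py.Dataset):
            lines.append(f"{name}: shape={obj.shape}, dtype={obj.dtype}")
        else:
            lines.append(f"{name}/ ({len(obj)} members)")

    with h5py.File(path, "r") as f:
        f.visititems(visit)
    return lines
```

Usage: `for line in describe_h5(file): print(line)`. The first dimension of each dataset is the trajectory length (88 for `traj_0`), which bounds the valid frame index `i`.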

- Adjust the parameters and convert again. First delete the file produced in the previous step, or change the output file name:

```shell
python tools/convert_state.py --env-name PickCube-v0 --num-procs 1 \
  --traj-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.none.pd_ee_delta_pose.h5 \
  --json-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.none.pd_ee_delta_pose.json \
  --output-name /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.trajectory.none.pd_ee_delta_pose_pcd.h5 \
  --control-mode pd_ee_delta_pose \
  --max-num-traj 2 \
  --obs-mode pointcloud \
  --n-points 10000 \
  --obs-frame world \
  --reward-mode dense \
  --n-goal-points 50 \
  --render
```

- Output:

```text
Obs mode: pointcloud; Control mode: pd_ee_delta_pose
Obs frame: world; n_points: 10000; n_goal_points: 50
Reset kwargs for the current trajectory: {'seed': 0}
Convert Trajectory: completed 1 / 2; this trajectory has length 88
Reset kwargs for the current trajectory: {'seed': 1}
Convert Trajectory: completed 2 / 2; this trajectory has length 101
Finish using /tmp/0.h5
maniskill2_learn - (record_utils.py:280) - INFO - 2022-12-02,16:31:19 - Total number of trajectories 2
Finish merging files to /home/jiangyvhang/ManiSkill2/demos/rigid_body_envs/PickCube-v0/PickCube-v0.trajectory.none.pd_ee_delta_pose_pcd.h5
```

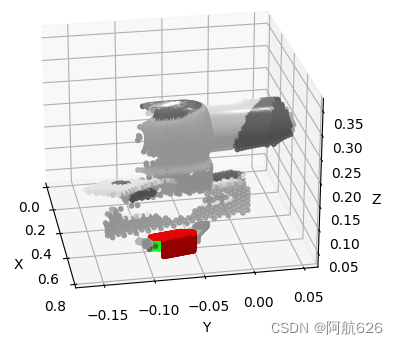

- Result for frames i = 0, i = 10 and i = 80:
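The frame index `i` must be smaller than the trajectory length. The conversion log reports those lengths: 88 for the first trajectory, which matches the `(88, 7)` action shape, and 101 for the second. The sketch below extracts the lengths with a regular expression written against the exact log wording shown in the output above. That wording is an assumption that may change between maniskill2_learn versions, and `trajectory_lengths` is a hypothetical helper.

```python
import re

# Matches lines like "Convert Trajectory: completed 1 / 2; this trajectory has length 88"
LINE = re.compile(
    r"Convert Trajectory: completed (\d+) / (\d+); this trajectory has length (\d+)"
)

def trajectory_lengths(log_text):
    """Hypothetical helper: trajectory lengths reported by convert_state.py, in order."""
    return [int(m.group(3)) for m in LINE.finditer(log_text)]

log = """Convert Trajectory: completed 1 / 2; this trajectory has length 88
Convert Trajectory: completed 2 / 2; this trajectory has length 101"""
lengths = trajectory_lengths(log)
print(lengths)  # [88, 101]
# A frame index is valid for a trajectory only if it is below that trajectory's length.
print(all(80 < n for n in lengths))  # True
```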
