Classification with Probability Theory: Naive Bayes

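Contents

- Classification with Probability Theory: Naive Bayes
- Overview
- Conditional Probability
- Bayes' Formula
- Naive Bayes Classifier
- Two Assumptions
- Example: Text Classification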


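Overview

Naive Bayes can handle multi-class problems and remains effective when data is scarce, but it is sensitive to how the input data is prepared.

Naive Bayes is part of Bayesian decision theory.

Core idea: choose the class with the highest probability, that is, make the decision whose probability is highest.

For example, suppose we detect an obstacle and, from its features, estimate that it is a car with probability 60%, a truck with 20%, a bicycle with 15%, and a pedestrian with 5%. We then conclude that the obstacle is most likely a car, because that class has the highest probability.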
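The decision rule itself fits in a few lines of Python. This is only a sketch: the class names and probabilities are hypothetical, chosen so that they sum to 1.

```python
# Hypothetical posterior probabilities for one detected obstacle (they sum to 1)
posteriors = {"car": 0.60, "truck": 0.20, "bicycle": 0.15, "pedestrian": 0.05}

# Bayesian decision rule: pick the class with the highest posterior probability
decision = max(posteriors, key=posteriors.get)
print(decision)  # car
```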

Conditional Probability

Let $A$ and $B$ be two events with $P(A) > 0$. Then

$$P(B\mid A) = \frac{P(AB)}{P(A)}$$

is called the conditional probability of event $B$ given that event $A$ has occurred.

Rearranging this definition (and its counterpart with the roles of $A$ and $B$ swapped) gives the multiplication rule:

$$\begin{cases} P(AB) = P(A)\,P(B\mid A), & P(A) > 0 \\ P(AB) = P(B)\,P(A\mid B), & P(B) > 0 \end{cases}$$
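A quick numeric check of the definition and the multiplication rule, using one roll of a fair six-sided die (an illustrative example, not from the original): let $A$ = "the roll is even" and $B$ = "the roll is greater than 3". Exact fractions avoid rounding noise.

```python
from fractions import Fraction

omega = range(1, 7)                    # sample space of a fair die
A = {x for x in omega if x % 2 == 0}   # {2, 4, 6}
B = {x for x in omega if x > 3}        # {4, 5, 6}


def P(event):
    # Classical probability: favorable outcomes / all outcomes
    return Fraction(len(event), len(omega))


P_AB = P(A & B)             # P(AB) = P({4, 6}) = 1/3
P_B_given_A = P_AB / P(A)   # definition of conditional probability
P_A_given_B = P_AB / P(B)

print(P_B_given_A)  # 2/3
# Multiplication rule, both forms
assert P(A) * P_B_given_A == P_AB
assert P(B) * P_A_given_B == P_AB
```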

Bayes' Formula

Let $A_1, A_2, \dots, A_n$ be a complete set of events (mutually exclusive and together covering the whole sample space) with $P(A_i) > 0\ (i = 1, 2, \dots, n)$, and let $B$ be any event with $P(B) > 0$. Then

$$P(A_k\mid B) = \frac{P(A_k)\,P(B\mid A_k)}{P(B)} = \frac{P(A_k)\,P(B\mid A_k)}{\sum_{i=1}^{n} P(A_i)\,P(B\mid A_i)}$$

The first equality follows from the multiplication rule, $P(A_k B) = P(B)\,P(A_k\mid B) = P(A_k)\,P(B\mid A_k)$; the second expands $P(B)$ with the law of total probability.
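A worked example with made-up numbers (not from the original): three machines $A_1, A_2, A_3$ produce 50%, 30% and 20% of a factory's parts, with defect rates 1%, 2% and 4%. Given that a randomly picked part is defective (event $B$), how likely is each machine to have made it?

```python
from fractions import Fraction

# Hypothetical priors P(A_i) and likelihoods P(B | A_i)
prior = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
likelihood = [Fraction(1, 100), Fraction(2, 100), Fraction(4, 100)]

# Law of total probability: P(B) = sum_i P(A_i) P(B | A_i)
p_b = sum(p * l for p, l in zip(prior, likelihood))

# Bayes' formula: P(A_k | B) = P(A_k) P(B | A_k) / P(B)
posterior = [p * l / p_b for p, l in zip(prior, likelihood)]

print(p_b)                          # 19/1000
print([str(x) for x in posterior])  # ['5/19', '6/19', '8/19']
```

$A_3$ has the smallest prior but the largest posterior, because its likelihood $P(B\mid A_3)$ is the highest.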

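Naive Bayes Classifier

Two Assumptions

- Features are independent of one another given the class (conditional independence); this simplification is what makes the method "naive".

- Every feature is equally important.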
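Under the first assumption, the probability of a document with words $w_1, \dots, w_n$ given class $c$ factorizes as $\prod_i P(w_i\mid c)$, and the classifier picks the class maximizing $P(c)\prod_i P(w_i\mid c)$. Multiplying many small probabilities underflows to 0 in floating point, which is why `trainNB0` and `classifyNB` in the example work with logarithms (the product becomes a sum). A small sketch of the underflow; the per-word probability 0.01 and the 400-word length are arbitrary assumptions.

```python
import math

# Hypothetical per-word conditional probabilities P(w_i | c) for a 400-word document
word_probs = [0.01] * 400

# Conditional independence: P(w_1..w_n | c) = prod_i P(w_i | c)
product = math.prod(word_probs)
# The same quantity in log space: log of a product = sum of logs
log_sum = sum(math.log(p) for p in word_probs)

print(product)            # 0.0 (about 1e-800, below the smallest double)
print(round(log_sum, 2))  # -1842.07
```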

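Example: Text Classification

Build a quick filter that blocks abusive posts in an online community. The approach has three steps:

- First, build word vectors from the text, turning each sentence into a vector.
- Compute conditional probabilities from these vectors.
- Build the classifier.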

Create a file named `bayes.py`:

```python
import re

import numpy as np


# 1. Converting word lists to vectors

# Create the experimental samples
def loadDataSet():
    postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                   ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                   ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                   ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                   ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                   ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
    classVec = [0, 1, 0, 1, 0, 1]  # 1 is abusive, 0 not
    return postingList, classVec


# Build a list of the unique words that appear in any document
def createVocabList(dataSet):
    vocabSet = set()  # create an empty set
    for document in dataSet:
        vocabSet = vocabSet | set(document)  # union of the two sets
    return list(vocabSet)


# Set-of-words model: mark whether each vocabulary word appears in the input document
def setOfWords2Vec(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] = 1
        else:
            print("the word: %s is not in my Vocabulary!" % word)
    return returnVec


# 2. Naive Bayes classifier training function
def trainNB0(trainMatrix, trainCategory):
    numTrainDocs = len(trainMatrix)
    numWords = len(trainMatrix[0])
    pAbusive = np.sum(trainCategory) / float(numTrainDocs)  # prior P(c = 1)
    # Start every word count at 1 and each denominator at 2 so that no
    # conditional probability is ever zero (smoothing)
    p0Num = np.ones(numWords); p1Num = np.ones(numWords)
    p0Denom = 2.0; p1Denom = 2.0
    for i in range(numTrainDocs):
        if trainCategory[i] == 1:
            p1Num += trainMatrix[i]
            p1Denom += np.sum(trainMatrix[i])
        else:
            p0Num += trainMatrix[i]
            p0Denom += np.sum(trainMatrix[i])
    # Take logs so that multiplying many small probabilities does not underflow
    p1Vect = np.log(p1Num / p1Denom)  # log P(w_i | c = 1)
    p0Vect = np.log(p0Num / p0Denom)  # log P(w_i | c = 0)
    return p0Vect, p1Vect, pAbusive


# 3. Naive Bayes classification function
def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):
    # log P(c) + sum of log P(w_i | c) over the words present (element-wise mult)
    p1 = np.sum(vec2Classify * p1Vec) + np.log(pClass1)
    p0 = np.sum(vec2Classify * p0Vec) + np.log(1.0 - pClass1)
    if p1 > p0:
        return 1
    else:
        return 0


# Bag-of-words model: count how many times each vocabulary word occurs
def bagOfWords2VecMN(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] += 1
    return returnVec


def testingNB():
    listOPosts, listClasses = loadDataSet()
    myVocabList = createVocabList(listOPosts)
    trainMat = []
    for postinDoc in listOPosts:
        trainMat.append(setOfWords2Vec(myVocabList, postinDoc))
    p0V, p1V, pAb = trainNB0(np.array(trainMat), np.array(listClasses))
    testEntry = ['love', 'my', 'dalmation']
    thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))
    print(testEntry, 'classified as:', classifyNB(thisDoc, p0V, p1V, pAb))
    testEntry = ['stupid', 'garbage']
    thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))
    print(testEntry, 'classified as:', classifyNB(thisDoc, p0V, p1V, pAb))


def textParse(bigString):  # input is a big string, output is a word list
    # Split on runs of non-word characters; r'\W*' also matches the empty
    # string and, on Python 3.7+, would split between every character
    listOfTokens = re.split(r'\W+', bigString)
    return [tok.lower() for tok in listOfTokens if len(tok) > 2]
```

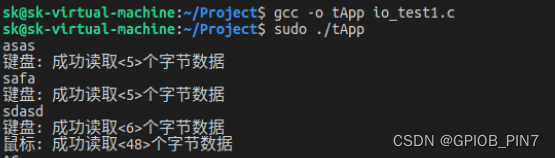
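`bayes.py` defines `bagOfWords2VecMN` and `textParse`, but the test that follows only exercises the set-of-words model. The difference between the two models, and the tokenizing rule, can be seen in this self-contained sketch. `text_parse`, `set_of_words` and `bag_of_words` are hypothetical stand-ins written for illustration (they mirror `textParse`, `setOfWords2Vec` and `bagOfWords2VecMN` but are not the file's functions), and the sample sentence is made up.

```python
import re


def text_parse(big_string):
    # Split on runs of non-word characters, lowercase, keep tokens longer than 2 chars
    return [tok.lower() for tok in re.split(r'\W+', big_string) if len(tok) > 2]


def set_of_words(vocab, words):
    # Set-of-words: 1 if the vocabulary word occurs at least once, else 0
    present = set(words)
    return [1 if w in present else 0 for w in vocab]


def bag_of_words(vocab, words):
    # Bag-of-words: how many times each vocabulary word occurs
    return [words.count(w) for w in vocab]


tokens = text_parse("Stupid dog! The stupid dog ate my STEAK.")
vocab = ["stupid", "dog", "steak", "cute"]
print(tokens)                       # ['stupid', 'dog', 'the', 'stupid', 'dog', 'ate', 'steak']
print(set_of_words(vocab, tokens))  # [1, 1, 1, 0]
print(bag_of_words(vocab, tokens))  # [2, 2, 1, 0]
```

"my" is dropped by the `len(tok) > 2` filter, and a repeated word counts once in the set-of-words vector but twice in the bag-of-words vector.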

The test code is as follows:

```python
from bayes import *


def test_randy():
    print("******* Testing the word-list-to-vector functions createVocabList setOfWords2Vec *******")
    listOPosts, listClasses = loadDataSet()
    randyVocabList = createVocabList(listOPosts)
    print("============> randyVocabList \n", randyVocabList)
    print("============> cute existed? \n", setOfWords2Vec(randyVocabList, listOPosts[0]))
    print("============> garbage existed? \n", setOfWords2Vec(randyVocabList, listOPosts[3]))

    print("******* Testing the naive Bayes training function trainNB0 *******")
    trainMat = []
    for postinDoc in listOPosts:
        trainMat.append(setOfWords2Vec(randyVocabList, postinDoc))
    p0V, p1V, pAB = trainNB0(trainMat, listClasses)
    print("============> p0V \n", p0V)
    print("============> p1V \n", p1V)
    print("============> pAB \n", pAB)

    print("******* Testing the naive Bayes classification function testingNB *******")
    testingNB()


if __name__ == "__main__":
    test_randy()
```

Output (the vocabulary is built from a Python set, so the word order, and with it the positions in every vector, can change from one run to the next):

```
******* Testing the word-list-to-vector functions createVocabList setOfWords2Vec *******
============> randyVocabList
['maybe', 'so', 'not', 'stop', 'ate', 'cute', 'my', 'dog', 'to', 'park', 'worthless', 'how', 'posting', 'has', 'flea', 'help', 'him', 'love', 'buying', 'is', 'licks', 'food', 'garbage', 'problems', 'dalmation', 'I', 'steak', 'take', 'please', 'stupid', 'quit', 'mr']
============> cute existed?
[0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
============> garbage existed?
[0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0]
******* Testing the naive Bayes training function trainNB0 *******
============> p0V
[-3.25809654 -2.56494936 -3.25809654 -2.56494936 -2.56494936 -2.56494936
 -1.87180218 -2.56494936 -2.56494936 -3.25809654 -3.25809654 -2.56494936
 -3.25809654 -2.56494936 -2.56494936 -2.56494936 -2.15948425 -2.56494936
 -3.25809654 -2.56494936 -2.56494936 -3.25809654 -3.25809654 -2.56494936
 -2.56494936 -2.56494936 -2.56494936 -3.25809654 -2.56494936 -3.25809654
 -3.25809654 -2.56494936]
============> p1V
[-2.35137526 -3.04452244 -2.35137526 -2.35137526 -3.04452244 -3.04452244
 -3.04452244 -1.94591015 -2.35137526 -2.35137526 -1.94591015 -3.04452244
 -2.35137526 -3.04452244 -3.04452244 -3.04452244 -2.35137526 -3.04452244
 -2.35137526 -3.04452244 -3.04452244 -2.35137526 -2.35137526 -3.04452244
 -3.04452244 -3.04452244 -3.04452244 -2.35137526 -3.04452244 -1.65822808
 -2.35137526 -3.04452244]
============> pAB
0.5
******* Testing the naive Bayes classification function testingNB *******
['love', 'my', 'dalmation'] classified as: 0
['stupid', 'garbage'] classified as: 1
```
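The two classifications can be checked by hand (a worked computation from the training posts, not part of the original output). The non-abusive posts contain 24 words in total and the abusive posts 19, so `trainNB0` divides by 26 and 21; with `pAB` = 0.5 both classes get the log prior $\ln 0.5$. In class 0, "love", "my" and "dalmation" occur 1, 3 and 1 times, and none of them occurs in class 1; "stupid" and "garbage" occur 3 and 1 times in class 1 and never in class 0. `classifyNB` therefore compares the scores $s_0$ and $s_1$:

$$\begin{aligned}
\texttt{['love', 'my', 'dalmation']}:\quad s_0 &= \ln\tfrac{2}{26} + \ln\tfrac{4}{26} + \ln\tfrac{2}{26} + \ln 0.5 \approx -7.69, & s_1 &= 3\ln\tfrac{1}{21} + \ln 0.5 \approx -9.83 &&\Rightarrow \text{class } 0 \\
\texttt{['stupid', 'garbage']}:\quad s_0 &= 2\ln\tfrac{1}{26} + \ln 0.5 \approx -7.21, & s_1 &= \ln\tfrac{4}{21} + \ln\tfrac{2}{21} + \ln 0.5 \approx -4.70 &&\Rightarrow \text{class } 1
\end{aligned}$$

The individual terms match entries of `p0V` and `p1V` above, for example $\ln\tfrac{4}{26} \approx -1.8718$ for "my" and $\ln\tfrac{4}{21} \approx -1.6582$ for "stupid".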