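Table of Contents

- 1. Checking background processes on every node with jps

- 2. Starting and stopping the Hadoop cluster

- 3. zk.sh: starting the ZooKeeper cluster in one go

- 4. Syncing files

- 5. Starting and stopping the whole cluster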

1. Checking background processes on every node with jps

We often need to check which processes are running on each node.

vi jps.sh

#!/bin/bash

for i in hp5 hp6 hp7

do

echo "-------------------------------- processes on $i ---------------------------"

ssh $i "jps | grep -v Jps"

done
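
The loop above can be made a little more defensive. What follows is a minimal sketch rather than the script I actually run: the function name `show_java_processes` and the `NODES` variable are made up for illustration, and it assumes passwordless SSH to every host. It warns when a node cannot be queried instead of printing nothing for it.

```shell
#!/bin/bash
# Sketch: list Java processes on every node and flag hosts that cannot be queried.
# NODES and show_java_processes are illustrative names, not part of the original script.
NODES="${NODES:-hp5 hp6 hp7}"

show_java_processes() {
    local node
    for node in $NODES; do
        echo "-------- processes on $node --------"
        # grep exits non-zero when nothing matches; `|| true` keeps an empty
        # process list from being mistaken for a connection failure.
        if ! ssh "$node" "jps | grep -v Jps || true"; then
            echo "WARNING: could not query $node" >&2
        fi
    done
}
```

Calling `show_java_processes` at the end of the file gives the same output as the loop above, plus a warning line on stderr for any host that is down.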

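2. Starting and stopping the Hadoop cluster

From Hadoop 3.3 on, starting the cluster is already fairly convenient: the master node has commands that start and stop every node. The historyserver, however, is best started on each node, so a wrapper script is still worth writing.

The manual procedure: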

cd /home/hadoop-3.3.2/sbin

./start-all.sh   # start the cluster

./stop-all.sh    # stop the cluster

cd /home/hadoop-3.3.2/bin/

./mapred --daemon start historyserver   # run on every node

The scripted version:

#!/bin/bash

HADOOP_HOME=/home/hadoop-3.3.2

export HADOOP_HOME

case $1 in

"start"){

echo "---------- starting the cluster ------------"

$HADOOP_HOME/sbin/start-all.sh

for i in hp5 hp6 hp7

do

echo "---------- starting historyserver on $i ------------"

ssh $i "$HADOOP_HOME/bin/mapred --daemon start historyserver"

done

echo "---------- cluster started ------------"

};;

"stop"){

echo "---------- stopping the cluster ------------"

$HADOOP_HOME/sbin/stop-all.sh

for i in hp5 hp6 hp7

do

echo "---------- stopping historyserver on $i ------------"

ssh $i "$HADOOP_HOME/bin/mapred --daemon stop historyserver"

done

echo "---------- cluster stopped ------------"

};;

"status"){

for i in hp5 hp6 hp7

do

echo "-------------------------------- processes on $i ---------------------------"

ssh $i "jps | grep -v Jps"

done

};;

esac
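
One thing the script above does not handle is a missing or mistyped argument: the `case` simply matches nothing and the script exits silently. A possible way to deal with that is sketched below. The names `usage` and `dispatch` are hypothetical, and the real start/stop work is replaced by `echo` placeholders so the snippet stays self-contained.

```shell
#!/bin/bash
# Sketch: argument handling for a start/stop/status wrapper.
# usage and dispatch are hypothetical names; the echo lines stand in for the real work.
usage() {
    echo "Usage: $0 {start|stop|status}" >&2
}

dispatch() {
    case "${1:-}" in
        start)  echo "would start the cluster" ;;
        stop)   echo "would stop the cluster" ;;
        status) echo "would show the status" ;;
        *)      usage; return 1 ;;   # unknown or missing argument
    esac
}
```

With this shape, `dispatch "$@"` as the last line of the script returns a non-zero status on bad input, which also makes the wrapper easier to call from other scripts.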

3. zk.sh: starting the ZooKeeper cluster in one go

#!/bin/bash

case $1 in

"start"){

for i in hp5 hp6 hp7

do

echo "---------- zookeeper $i start ------------"

ssh $i "/home/software/zookeeper-3.4.6/bin/zkServer.sh start"

done

};;

"stop"){

for i in hp5 hp6 hp7

do

echo "---------- zookeeper $i stop ------------"

ssh $i "/home/software/zookeeper-3.4.6/bin/zkServer.sh stop"

done

};;

"status"){

for i in hp5 hp6 hp7

do

echo "---------- zookeeper $i status ------------"

ssh $i "/home/software/zookeeper-3.4.6/bin/zkServer.sh status"

done

};;

esac

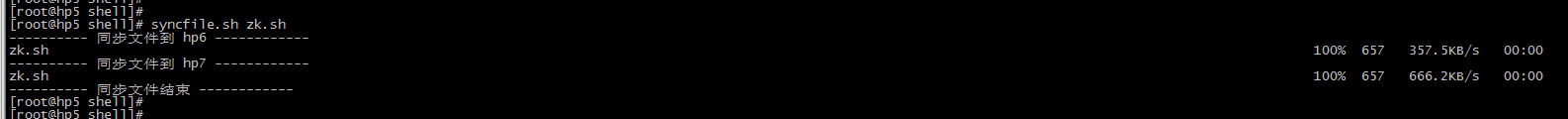
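
The three branches of zk.sh differ only in the subcommand passed to zkServer.sh, so they could be folded into a single loop. This is just a sketch under that observation: `zk_all`, `ZK_BIN` and `NODES` are names I made up here, with defaults copied from the script above.

```shell
#!/bin/bash
# Sketch: one loop for start/stop/status, since only the subcommand differs.
# zk_all, ZK_BIN and NODES are hypothetical names; defaults mirror the script above.
ZK_BIN="${ZK_BIN:-/home/software/zookeeper-3.4.6/bin/zkServer.sh}"
NODES="${NODES:-hp5 hp6 hp7}"

zk_all() {
    local action="${1:-}" node
    # Reject anything other than the three actions the original script supports.
    case "$action" in
        start|stop|status) ;;
        *) echo "Usage: zk.sh {start|stop|status}" >&2; return 1 ;;
    esac
    for node in $NODES; do
        echo "---------- zookeeper $node $action ----------"
        ssh "$node" "$ZK_BIN $action"
    done
}
```

Ending the file with `zk_all "$@"` would keep the command line identical to the original: `zk.sh start`, `zk.sh stop`, `zk.sh status`.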

4. Syncing files

After editing a configuration file, we often need to distribute it to the other nodes.

#!/bin/bash

# Copy a modified file from the current directory to the same directory on the other nodes (cd into the file's directory first)

cur_dir=$(pwd)

filename=$1

for i in hp6 hp7

do

echo "---------- syncing file to $i ------------"

scp "$cur_dir/$filename" "root@$i:$cur_dir/"

done

echo "---------- file sync finished ------------"

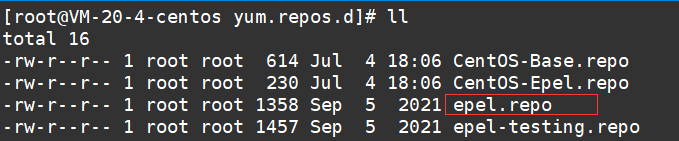
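
The sync script trusts its argument completely. As a rough sketch of a more careful variant, the function below checks that the file exists before copying and counts the hosts where `scp` failed. `sync_file` and `TARGETS` are hypothetical names, and the `-r` flag is my addition so that a directory can be passed as well.

```shell
#!/bin/bash
# Sketch: validate the argument before copying, and count failed hosts.
# sync_file and TARGETS are hypothetical names; the target hosts mirror the script above.
TARGETS="${TARGETS:-hp6 hp7}"

sync_file() {
    local filename="${1:-}" cur_dir node failed=0
    cur_dir=$(pwd)
    if [[ -z "$filename" || ! -e "$cur_dir/$filename" ]]; then
        echo "Usage: sync_file <file in current directory>" >&2
        return 2
    fi
    for node in $TARGETS; do
        echo "---------- syncing $filename to $node ----------"
        # -r lets the same call handle a directory as well as a single file.
        scp -r "$cur_dir/$filename" "root@$node:$cur_dir/" || failed=$((failed + 1))
    done
    echo "---------- sync finished, $failed host(s) failed ----------"
    [[ $failed -eq 0 ]]
}
```

The function returns non-zero if any copy failed, so a caller can decide whether to go on restarting services with a configuration that only reached some of the nodes.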

I keep all of these scripts in /home/shell. To run them from any directory, edit /etc/profile and append the following to the end of the PATH setting:

vi /etc/profile

:/home/shell
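
If you would rather not edit the existing PATH line by hand, a small helper can do the append. This is a sketch only: `path_append` is a hypothetical function, and it skips the append when the directory is already on PATH so that sourcing the profile twice does not add a duplicate entry.

```shell
#!/bin/bash
# Sketch: append a directory to PATH only if it is not already there.
# path_append is a hypothetical helper; /home/shell is the directory named above.
path_append() {
    local dir="$1"
    case ":$PATH:" in
        *":$dir:"*) ;;                 # already present, nothing to do
        *) PATH="$PATH:$dir" ;;
    esac
    export PATH
}

path_append /home/shell
```

Lines added to /etc/profile apply to new login shells; run `source /etc/profile` to pick them up in the current one.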

Test:

5. Starting and stopping the whole cluster

Code:

#!/bin/bash

function start_cluster()

{

echo "###### Starting the Hadoop cluster ############"

bash /home/shell/cluster.sh start

echo "###### Finished starting the Hadoop cluster ############"

echo " "

echo "###### Starting the zookeeper cluster ############"

bash /home/shell/zk.sh start

echo "###### Finished starting the zookeeper cluster ############"

echo " "

echo "###### Starting hive metastore ############"

nohup hive --service metastore &

echo "###### Finished starting hive metastore ############"

echo " "

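echo "###### Starting the Spark cluster ############"

start-all.sh   # Spark's start-all.sh; Hadoop ships a script with the same name, so check which one PATH resolves

echo "###### Finished starting the Spark cluster ############"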

echo " "

echo "###### Starting the Flink cluster ############"

start-cluster.sh

echo "###### Finished starting the Flink cluster ############"

echo " "

echo " "

echo " "

echo " "

echo " "

echo " "

echo "################# Whole cluster started #####################"

}

function stop_cluster()

{

echo "###### Stopping the Hadoop cluster ############"

bash /home/shell/cluster.sh stop

echo "###### Finished stopping the Hadoop cluster ############"

echo " "

echo "###### Stopping the zookeeper cluster ############"

bash /home/shell/zk.sh stop

echo "###### Finished stopping the zookeeper cluster ############"

echo " "

echo "###### Stopping hive metastore ############"

ps -ef | grep HiveMetaStore | grep -v grep | awk '{print $2}' | xargs -r kill -9   # -r (GNU xargs): skip kill when no process matches

echo "###### Finished stopping hive metastore ############"

echo " "

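echo "###### Stopping the Spark cluster ############"

stop-all.sh   # Spark's stop-all.sh; Hadoop ships a script with the same name, so check which one PATH resolves

echo "###### Finished stopping the Spark cluster ############"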

echo " "

echo "###### Stopping the Flink cluster ############"

stop-cluster.sh

echo "###### Finished stopping the Flink cluster ############"

echo " "

echo " "

echo " "

echo " "

echo " "

echo " "

echo "################# Whole cluster stopped #####################"

}

case $1 in

"start"){

start_cluster

}

;;

"stop"){

stop_cluster

}

;;

"restart"){

stop_cluster

start_cluster

}

;;

esac
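
The script above prints its "finished" banners whether or not the step actually succeeded. One way to tighten that up is sketched here: a small helper that wraps each step, prints the banners, and reports the exit status on failure. `run_step` is a hypothetical name and the commands passed to it are only examples.

```shell
#!/bin/bash
# Sketch: print a banner around each step and report a failing step.
# run_step is a hypothetical helper; it runs whatever command follows the step name.
run_step() {
    local name="$1"
    shift
    echo "###### begin: $name ######"
    if "$@"; then
        echo "###### done: $name ######"
    else
        local status=$?   # exit status of the command that just failed
        echo "###### FAILED: $name (exit status $status) ######" >&2
        return "$status"
    fi
}
```

Chaining the calls with `&&`, for example `run_step "Hadoop" cluster.sh start && run_step "ZooKeeper" zk.sh start`, stops the sequence at the first step that fails instead of carrying on with a half-started cluster.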

Test log: