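When your dataset consists of small images cut out of larger ones (crops made by expanding each detection box outward by a small ratio; this cropping method will be covered in a later update), the images inevitably come in all sizes. If each one is fed to training as-is, the small ones must be upscaled by a large factor to reach the input size and become badly distorted. This post offers a solution: group the images by size range and stitch them into grids whose total size equals the network input size.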

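So that stitching does not shrink the dataset too much, the small images in each size range are shuffled and stitched repeatedly, up to a set proportion of that range's image count.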

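This solves two problems at once: 1. the distortion of small images that would otherwise be resized by a large factor; 2. data augmentation.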
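As a minimal sketch of the size binning, assuming the five ranges and the 416 input size used by the stitching script, the hypothetical helper `grids_for` lists which grid sizes an image qualifies for. The script checks every range without stopping at the first match, and the ranges overlap, so one crop can land in two grids; crops whose longest side is under imgsize/5 match no range at all.

```python
def make_ranges(imgsize):
    """The five [low, high) ranges for the 1x1 ... 5x5 grids (same values as gt_range)."""
    return [
        [imgsize, 10000],
        [int(imgsize / 2), 10000],
        [int(imgsize / 3), imgsize],
        [int(imgsize / 4), int(imgsize / 2)],
        [int(imgsize / 5), int(imgsize / 3)],
    ]


def grids_for(h, w, imgsize=416):
    """Return (grid size n, target longest side) for every range the image falls into."""
    max_len = max(h, w)
    result = []
    for i, (low, high) in enumerate(make_ranges(imgsize)):
        if low <= max_len < high:  # no break: overlapping ranges can both match
            result.append((i + 1, low))
    return result


for shape in [(500, 300), (300, 120), (90, 60), (40, 40)]:
    print(shape, grids_for(*shape))
```

For example, an image whose longest side is 300 px qualifies for both the 2×2 grid (resized to 208) and the 3×3 grid (resized to 138).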

Preparation

First, json2txt.py, which converts the JSON annotations into txt files:

```python
import json
import os


def getBoundingBox(points):
    """Axis-aligned bounding box of a list of [x, y] points: [xmin, xmax, ymin, ymax]."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return [int(min(xs)), int(max(xs)), int(min(ys)), int(max(ys))]


def json2txt(json_path, txt_path):
    with open(json_path, encoding='utf-8') as f:
        json_data = json.load(f)
    img_h = json_data["imageHeight"]
    img_w = json_data["imageWidth"]
    img_name = os.path.split(json_path)[-1].split(".json")[0]
    name = img_name + '.jpg'
    data = ''
    for shape in json_data["shapes"]:
        label_name = shape["label"]
        xmin, xmax, ymin, ymax = getBoundingBox(shape["points"])
        # Clip the box to the image boundaries
        xmin = max(xmin, 0)
        ymin = max(ymin, 0)
        xmax = min(xmax, img_w - 1)
        ymax = min(ymax, img_h - 1)
        # One line per box: image name, class name, x1 y1 x2 y2
        data += ' '.join([name, label_name, str(xmin), str(ymin), str(xmax), str(ymax)]) + '\n'
    with open(os.path.join(txt_path, img_name + ".txt"), 'w', encoding='utf-8') as f:
        f.write(data)


def main_import(json_path, txt_path):
    """Convert every .json file in json_path into a .txt file in txt_path."""
    os.makedirs(txt_path, exist_ok=True)
    for file in os.listdir(json_path):
        old_dir = os.path.join(json_path, file)
        if os.path.isdir(old_dir) or os.path.splitext(file)[1] != ".json":
            continue
        json2txt(old_dir, txt_path)


if __name__ == "__main__":
    json_path = "/data/cch/yolov5-augment/train/json"
    saveTxt_path = "/data/cch/yolov5-augment/train/txt"
    main_import(json_path, saveTxt_path)
```
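To check the conversion without touching any files, here is a sketch of the same logic as an in-memory function. `shapes_to_lines` and the sample dictionary are made up for illustration; the sample only assumes the `imageWidth`, `imageHeight`, `shapes`, `label` and `points` keys that json2txt.py reads.

```python
import json


def shapes_to_lines(json_data, img_name):
    """Same logic as json2txt, but returns the lines instead of writing a file."""
    img_w = json_data["imageWidth"]
    img_h = json_data["imageHeight"]
    lines = []
    for shape in json_data["shapes"]:
        xs = [p[0] for p in shape["points"]]
        ys = [p[1] for p in shape["points"]]
        # Bounding box of the polygon, clipped to the image boundaries
        xmin = max(int(min(xs)), 0)
        ymin = max(int(min(ys)), 0)
        xmax = min(int(max(xs)), img_w - 1)
        ymax = min(int(max(ys)), img_h - 1)
        lines.append(f"{img_name}.jpg {shape['label']} {xmin} {ymin} {xmax} {ymax}")
    return lines


# Made-up annotation: one rectangle and one polygon that sticks out of a 256x270 image
sample = json.loads("""
{"imageWidth": 256, "imageHeight": 270,
 "shapes": [{"label": "hand", "points": [[50.4, 206.2], [79.9, 257.0]]},
            {"label": "cloth", "points": [[-3, 12], [260, 12], [255, 275]]}]}
""")
for line in shapes_to_lines(sample, "body_21"):
    print(line)
```

The polygon's out-of-range corners are clipped to `0` and to `width - 1` / `height - 1`, exactly as json2txt does.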

A randomly chosen txt file looks like this:

```text
body_21.jpg cloth 51 12 255 270
body_21.jpg hand 50 206 79 257
body_21.jpg hand 195 217 228 269
body_21.jpg other 112 0 194 1
```

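Each line is `image name, class name, x1 y1 x2 y2`, where (x1, y1) and (x2, y2) are the top-left and bottom-right corners of the box. This txt format is not the darknet format used for YOLO training.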

Next, modeTxt.py, which converts these txt files into darknet format:

```python
import os
import codecs

import cv2

# Map label names to darknet class IDs; adapt this to your own class names and order.
# (Another label set used before: {'mouth': '0', 'wearmask': '1'})
CLASS_MAP = {'head': '0', 'hat': '1', 'helmet': '2', 'eye': '3', 'glasses': '4', 'glass': '4'}
SKIP_LABELS = {'other', 'mask', 'del'}  # labels that are not written to the darknet file


def txt2darknet(txt_path, img_path, saved_path):
    for file in os.listdir(txt_path):
        if os.path.splitext(file)[-1] != ".txt":
            continue
        file_path = os.path.join(txt_path, file)
        filename = os.path.splitext(file)[0]
        # The image is only read to get its width and height for normalization
        img = cv2.imread(os.path.join(img_path, filename + '.jpg'))
        if img is None:
            print(filename + '.jpg', "could not be read, skipped")
            continue
        img_h, img_w = img.shape[:2]
        data = ""
        with codecs.open(file_path, 'r', encoding='utf-8', errors='ignore') as f1:
            for line in f1:
                a = line.strip().split(' ')
                if len(a) < 6 or a[1] in SKIP_LABELS:
                    continue
                if a[1] not in CLASS_MAP:
                    print(file, "unknown label:", a[1])  # darknet needs an integer class ID
                    continue
                x1, y1, x2, y2 = (float(v) for v in a[2:6])
                w = x2 - x1
                h = y2 - y1
                # if w <= 15 and h <= 15: continue
                center_x = x1 + w / 2
                center_y = y1 + h / 2
                # darknet line: class cx cy w h, all normalized by the image size
                data += "{} {} {} {} {}\n".format(CLASS_MAP[a[1]], center_x / img_w,
                                                  center_y / img_h, w / img_w, h / img_h)
        with open(os.path.join(saved_path, filename + ".txt"), 'w', encoding='utf-8') as f2:
            f2.write(data)


if __name__ == '__main__':
    txt_path = '/data/cch/yolov5/runs/detect/hand_head_resize/labels'
    saved_path = '/data/cch/yolov5/runs/detect/hand_head_resize/dr'
    img_path = '/data/cch/data/pintu/test/hand_head_resize/images'
    txt2darknet(txt_path, img_path, saved_path)
```
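The heart of txt2darknet is the corner-to-center conversion. A minimal sketch of it on a made-up 400×400 image, plus a hypothetical inverse (`darknet_to_corners`) that can be handy for spot-checking a converted label file:

```python
def corners_to_darknet(x1, y1, x2, y2, img_w, img_h):
    """Pixel corners -> darknet (cx, cy, w, h), each normalized to [0, 1]."""
    w = x2 - x1
    h = y2 - y1
    cx = x1 + w / 2
    cy = y1 + h / 2
    return cx / img_w, cy / img_h, w / img_w, h / img_h


def darknet_to_corners(cx, cy, w, h, img_w, img_h):
    """Inverse conversion: normalized darknet box -> pixel corners."""
    return ((cx - w / 2) * img_w, (cy - h / 2) * img_h,
            (cx + w / 2) * img_w, (cy + h / 2) * img_h)


box = corners_to_darknet(100, 50, 300, 250, 400, 400)
print(box)
print(darknet_to_corners(*box, 400, 400))
```

A 200×200 box with its top-left corner at (100, 50) becomes `(0.5, 0.375, 0.5, 0.5)`, and converting back recovers the original corners.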

Both conversion scripts above are called by the stitching code.

Stitching
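The layout rule behind the stitching is simple. As a sketch, assuming the 416 canvas: image_compose pastes cell (x, y) of an n×n grid at `int(x * (imgsize / n))`, and crops in that size range have a longest side of `int(imgsize / n)`, so every cell stays inside the canvas. `cell_origins` and `cells_fit` are hypothetical helpers written only to show this.

```python
def cell_origins(n, imgsize=416):
    """Top-left corner of every cell, in the row-major order the cells are filled."""
    step = imgsize / n
    return [(int(x * step), int(y * step)) for y in range(n) for x in range(n)]


def cells_fit(n, imgsize=416):
    """True if a crop whose longest side is int(imgsize / n) fits in every cell."""
    cell = int(imgsize / n)
    return all(ox + cell <= imgsize and oy + cell <= imgsize
               for ox, oy in cell_origins(n, imgsize))


print(cell_origins(2))
print(cell_origins(3)[:3])
print([cells_fit(n) for n in range(1, 6)])
```

For 3×3 the offsets are truncated to 0, 138 and 277, so the last 138-px cell ends at pixel 415, just inside the 416 canvas.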

Here is the stitching code:

```python
'''
1*1 to 5*5 grids
Randomly shuffled stitching
'''
import sys
import codecs
import random
import os
import shutil

import cv2
import PIL.Image as Image

# json2txt.py and modeTxt.py live in a different directory from this script,
# so add that directory to the path so that Python can find them
sys.path.append("/data/cch/拼图代码/format_transform")
import json2txt
import modeTxt


# Image stitching: paste the small images one by one into the cells of the grid
def image_compose(idx, ori_tmp, num, save_path, gt_resized_path, bg_resized_path, imgsize):
    to_image = Image.new('RGB', (imgsize, imgsize))  # blank (black) canvas
    for index, name in enumerate(ori_tmp[:idx * idx]):
        y, x = divmod(index, idx)  # row-major: index = y * idx + x
        # Each small image lives in either the labeled or the background folder
        for op in (gt_resized_path, bg_resized_path):
            src = os.path.join(op, name)
            if os.path.exists(src):
                with Image.open(src) as tile:
                    to_image.paste(tile, (int(x * (imgsize / idx)), int(y * (imgsize / idx))))
                break
    new_name = os.path.join(save_path, "wear_" + str(idx) + "_" + str(num) + ".jpg")
    to_image.save(new_name)
    return new_name


# Rewrite the labels for the stitched image: shift each box by the offset of the cell it was pasted into
def labels_merge(idx, ori_tmp, new_name, txt_resized_path, txt_pintu_path, imgsize):
    data = ""
    for index, name in enumerate(ori_tmp[:idx * idx]):
        y, x = divmod(index, idx)
        # Same integer offset as image_compose, so the boxes line up with the pasted pixels
        dx = int(x * (imgsize / idx))
        dy = int(y * (imgsize / idx))
        txt_path = os.path.join(txt_resized_path, os.path.splitext(name)[0] + ".txt")
        if not os.path.exists(txt_path):
            continue  # e.g. background images have no label file
        with codecs.open(txt_path, 'r', encoding='utf-8', errors='ignore') as f1:
            for line in f1:
                a = line.strip().split(' ')
                if len(a) < 6:
                    continue
                a[2] = str(float(a[2]) + dx)
                a[3] = str(float(a[3]) + dy)
                a[4] = str(float(a[4]) + dx)
                a[5] = str(float(a[5]) + dy)
                data += ' '.join(a[:6]) + "\n"
    write_path = os.path.join(txt_pintu_path, os.path.splitext(os.path.basename(new_name))[0] + ".txt")
    with open(write_path, 'w', encoding='utf-8') as f2:
        f2.write(data)


# Black out some regions of the stitched images: "other" boxes whose regions are ambiguous and
# could mislead the model, and boxes too small to learn from. Blacked-out boxes lose their labels too.
def pintu2black(txt_pintu_path, save_path, to_black_num, to_black_min_num, label_black, label_del):
    for file in os.listdir(txt_pintu_path):
        img_path = os.path.join(save_path, os.path.splitext(file)[0] + ".jpg")
        img_origal = cv2.imread(img_path)
        if img_origal is None:
            continue
        txt_file = os.path.join(txt_pintu_path, file)
        data = ""
        changed = False
        with codecs.open(txt_file, encoding="utf-8", errors="ignore") as f1:
            for line in f1:
                line = line.strip()
                a = line.split(" ")
                if len(a) < 6:
                    continue
                xmin, ymin, xmax, ymax = (int(float(v)) for v in a[2:6])
                w, h = xmax - xmin, ymax - ymin
                if (w < to_black_num and h < to_black_num) or w < to_black_min_num \
                        or h < to_black_min_num or a[1] in label_black:
                    img_origal[ymin:ymax, xmin:xmax, :] = 0  # black out the region...
                    changed = True
                    continue  # ...and drop its label
                if a[1] in label_del:
                    continue  # labels to delete are dropped without blacking out
                data += line + "\n"
        if changed:
            cv2.imwrite(img_path, img_origal)  # write once, only if something was blacked out
        with open(txt_file, 'w', encoding='utf-8') as f2:
            f2.write(data)


# Resize a background (unlabeled) image to the fixed square size of its range,
# so that each image is resized by the smallest possible ratio
def bg_resize(img, img_name, img_size, x, bg_resized_path):
    img = cv2.resize(img, (img_size, img_size), interpolation=cv2.INTER_CUBIC)
    os.makedirs(bg_resized_path, exist_ok=True)
    bg_resized = os.path.join(bg_resized_path, os.path.splitext(img_name)[0] + "_" + str(x) + ".jpg")
    cv2.imwrite(bg_resized, img)
    return os.path.basename(bg_resized)


# Put each resized image into the container for its size range (background images)
def bg_distribute(bg_path, bg_resized_path, ori, bg_range):
    for image_name in os.listdir(bg_path):
        img = cv2.imread(os.path.join(bg_path, image_name))
        if img is None:
            continue
        max_len = max(img.shape[:2])
        # No break: the ranges overlap, so one image may go into two containers
        for index, (low, high) in enumerate(bg_range):
            if low <= max_len < high:
                ori[index].append(bg_resize(img, image_name, low, index + 1, bg_resized_path))


# Put each resized image into the container for its size range (labeled images)
def gt_distribute(images_path, ori, gt_resized_path, txt_path, gt_range):
    for image_name in os.listdir(images_path):
        img = cv2.imread(os.path.join(images_path, image_name))
        if img is None:
            continue  # skip files that are not readable images
        max_len = max(img.shape[:2])
        for index, (low, high) in enumerate(gt_range):
            if low <= max_len < high:
                ori[index].append(gt_resize(gt_resized_path, txt_path, image_name, img, low, index + 1))


# Resize a labeled image so its longest side equals img_size (aspect ratio kept) and scale its boxes
def gt_resize(gt_resized_path, txt_path, image_name, img, img_size, x):
    os.makedirs(gt_resized_path, exist_ok=True)
    img_h, img_w = img.shape[:2]
    if img_h < img_w:
        percent = img_size / img_w
        new_size = (img_size, max(1, int(img_h * percent)))  # cv2 expects (width, height)
    else:
        percent = img_size / img_h
        new_size = (max(1, int(img_w * percent)), img_size)
    img_read = cv2.resize(img, new_size, interpolation=cv2.INTER_CUBIC)
    stem = os.path.splitext(image_name)[0]
    img_resized = os.path.join(gt_resized_path, stem + "_" + str(x) + ".jpg")
    cv2.imwrite(img_resized, img_read)
    txt_name = os.path.join(txt_path, stem + ".txt")
    txt_resized_name = os.path.join(gt_resized_path, stem + "_" + str(x) + ".txt")
    if os.path.exists(txt_name):
        data = ""
        with codecs.open(txt_name, 'r', encoding='utf-8', errors='ignore') as f1:
            for line in f1:
                a = line.strip().split(' ')
                if len(a) < 6:
                    continue
                a[2:6] = [str(float(v) * percent) for v in a[2:6]]
                data += ' '.join(a[:6]) + "\n"
        with open(txt_resized_name, 'w', encoding='utf-8') as f2:
            f2.write(data)
    return os.path.basename(img_resized)


if __name__ == "__main__":
    images_path = '/data/cch/pintu_data/wear_3/test_all/images'  # image folder
    # If you have no background images, comment out bg_path and the bg_distribute call below
    # bg_path = "/data/weardata/标定数据/all-labels/test/0712/bg"
    json_path = "/data/cch/pintu_data/wear_3/test_all/json"
    save_path = '/data/cch/pintu_data/wear_3/test_all/save'
    tmp = "/data/cch/pintu_data/wear_3/test_all/tmp"
    # Delete and recreate these folders so that leftovers from a failed or interrupted previous run
    # cannot affect this one (clearing them by hand before each run works too, but is less automatic)
    for folder in (save_path, tmp):
        if os.path.exists(folder):
            shutil.rmtree(folder)
        os.mkdir(folder)
    bg_resized_path = os.path.join(tmp, "bg_resized")
    gt_resized_path = os.path.join(tmp, "gt_resized")
    txt_path = os.path.join(tmp, "txt")  # txt labels converted from the original json
    txt_pintu_path = os.path.join(tmp, "txt_pintu")
    os.mkdir(txt_path)
    os.mkdir(txt_pintu_path)

    label_black = ["other"]  # labels to black out
    label_del = ["del"]  # labels to delete
    imgsize = 416  # size of the stitched image
    model = "train"  # train/test: build a training set or a test set

    # 5 size ranges; an image in range n is resized to the range's lower bound for the n x n grid
    size_range = [[imgsize, 10000], [int(imgsize / 2), 10000], [int(imgsize / 3), imgsize],
                  [int(imgsize / 4), int(imgsize / 2)], [int(imgsize / 5), int(imgsize / 3)]]
    bg_range = gt_range = size_range
    if model == "train":
        to_black_num = 15
        to_black_min_num = 5
        # Training thresholds: each grid size keeps stitching shuffled crops until it reaches its threshold
        img_threshold = [0.3, 1 / 4 * 1.8, 1 / 9 * 1.7, 1 / 16 * 1.6, 1 / 25 * 1.5]
    elif model == "test":
        to_black_num = 20
        to_black_min_num = 10
        img_threshold = [0.3, 1 / 4, 1 / 9, 1 / 16, 1 / 25]  # test set
    else:
        raise ValueError("model must be 'train' or 'test'")

    json2txt.main_import(json_path, txt_path)  # convert json to txt

    ori = [[] for _ in range(5)]
    # Resize each image according to its range and put it into the matching container
    gt_distribute(images_path, ori, gt_resized_path, txt_path, gt_range)
    # bg_distribute(bg_path, bg_resized_path, ori, bg_range)  # if you have negative samples

    # Container n holds the crops for the n x n grid: each round takes n*n crops, pastes them onto
    # one big image, and merges their label files according to where each crop was pasted
    for idx, ori_n in enumerate(ori, start=1):
        if len(ori_n) == 0:
            continue
        img_threshold[idx - 1] *= len(ori_n)
        if idx == 1 and img_threshold[idx - 1] > 80:  # no need for that many 1x1 images: cap at 80
            img_threshold[idx - 1] = 80
        random.shuffle(ori_n)
        picknum = idx * idx
        if len(ori_n) < picknum:
            img_threshold[idx - 1] = 1
        num = 0
        index = 0
        while num < int(img_threshold[idx - 1]):
            if idx == 1:
                ori_tmp = [ori_n[num]]
            elif len(ori_n) > picknum:
                if index >= len(ori_n):  # every crop used once: reshuffle and start over
                    random.shuffle(ori_n)
                    index = 0
                ori_tmp = ori_n[index:index + picknum]
                index += picknum
            else:
                ori_tmp = ori_n.copy()
            new_name = image_compose(idx, ori_tmp, num, save_path, gt_resized_path, bg_resized_path, imgsize)
            labels_merge(idx, ori_tmp, new_name, gt_resized_path, txt_pintu_path, imgsize)
            num += 1
        print(idx, num, len(ori_n))

    pintu2black(txt_pintu_path, save_path, to_black_num, to_black_min_num, label_black, label_del)
    # Convert the txt labels to YOLO's darknet format
    modeTxt.txt2darknet(txt_pintu_path, save_path, save_path)
    shutil.rmtree(tmp)
```
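Each box therefore goes through two transforms: gt_resize scales it with its crop, and labels_merge shifts it by the cell offset. A minimal sketch that chains the two, assuming the integer cell offset that image_compose pastes at; `box_to_mosaic` and the 416×312 crop are hypothetical.

```python
def box_to_mosaic(box, crop_h, crop_w, n, x, y, imgsize=416):
    """Map a crop's (xmin, ymin, xmax, ymax) box to pixel coordinates in the mosaic.

    Step 1 (as in gt_resize): scale by target / longest side, where the target
    for the n x n grid is int(imgsize / n).
    Step 2 (as in labels_merge): add the top-left offset of cell (x, y).
    """
    target = int(imgsize / n)
    scale = target / max(crop_h, crop_w)
    dx = int(x * (imgsize / n))
    dy = int(y * (imgsize / n))
    xmin, ymin, xmax, ymax = box
    return (xmin * scale + dx, ymin * scale + dy, xmax * scale + dx, ymax * scale + dy)


# A hypothetical 416x312 crop in the 2x2 grid, pasted into the bottom-right cell (x=1, y=1)
print(box_to_mosaic((40, 100, 200, 300), 416, 312, n=2, x=1, y=1))
```

The crop is halved to fit the 208-px cell and then shifted by 208 px in both directions, giving `(228.0, 258.0, 308.0, 358.0)`.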

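The `model` variable selects one of two modes, train or test. They differ as follows:

- Boxes below a size threshold are blacked out, and the training thresholds are lower than the test thresholds (`to_black_num`/`to_black_min_num` are 15/5 for train and 20/10 for test). Small boxes are blacked out because the other boxes in the same image are all fairly large, and the model tends to favor large objects; we therefore neither train on nor detect objects that are too small, and small-object detection is not the goal here anyway.

- In the training set a small image may be stitched more than once, so that the stitched dataset does not shrink too much; in the test set each small image is stitched only once. (If a small image causes a false detection and is stitched repeatedly, the false detection repeats as well, which skews the mAP measured on that test set.)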
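The reuse difference between the two modes comes from the threshold combined with the sliding window in the main loop. A sketch that replays that loop for one grid size n ≥ 2 and counts how often each crop is pasted; `simulate_usage`, the fixed seed and the 100 fake crop names are assumptions made for the illustration.

```python
import random
from collections import Counter


def simulate_usage(names, n, ratio, seed=0):
    """Count how many times each crop is pasted for an n x n grid (n >= 2).

    Mirrors the main loop: the number of stitched images is int(ratio * len(names))
    (or 1 if there are fewer crops than cells), and crops are taken in consecutive
    windows of n*n, reshuffling once the whole list has been used.
    """
    rng = random.Random(seed)
    pool = list(names)
    rng.shuffle(pool)
    picknum = n * n
    total = int(ratio * len(pool)) if len(pool) >= picknum else 1
    usage = Counter()
    index = 0
    for _ in range(total):
        if len(pool) > picknum:
            if index >= len(pool):
                rng.shuffle(pool)
                index = 0
            batch = pool[index:index + picknum]
            index += picknum
        else:
            batch = pool
        usage.update(batch)
    return usage


crops = [f"crop_{i}.jpg" for i in range(100)]
train = simulate_usage(crops, 2, 1 / 4 * 1.8)  # train ratio for 2x2
test = simulate_usage(crops, 2, 1 / 4)         # test ratio for 2x2
print(max(train.values()), max(test.values()))
```

With 100 crops in the 2×2 container, the test ratio yields 25 images that use every crop exactly once, while the train ratio yields 45 images, so 80 crops appear twice.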

Results

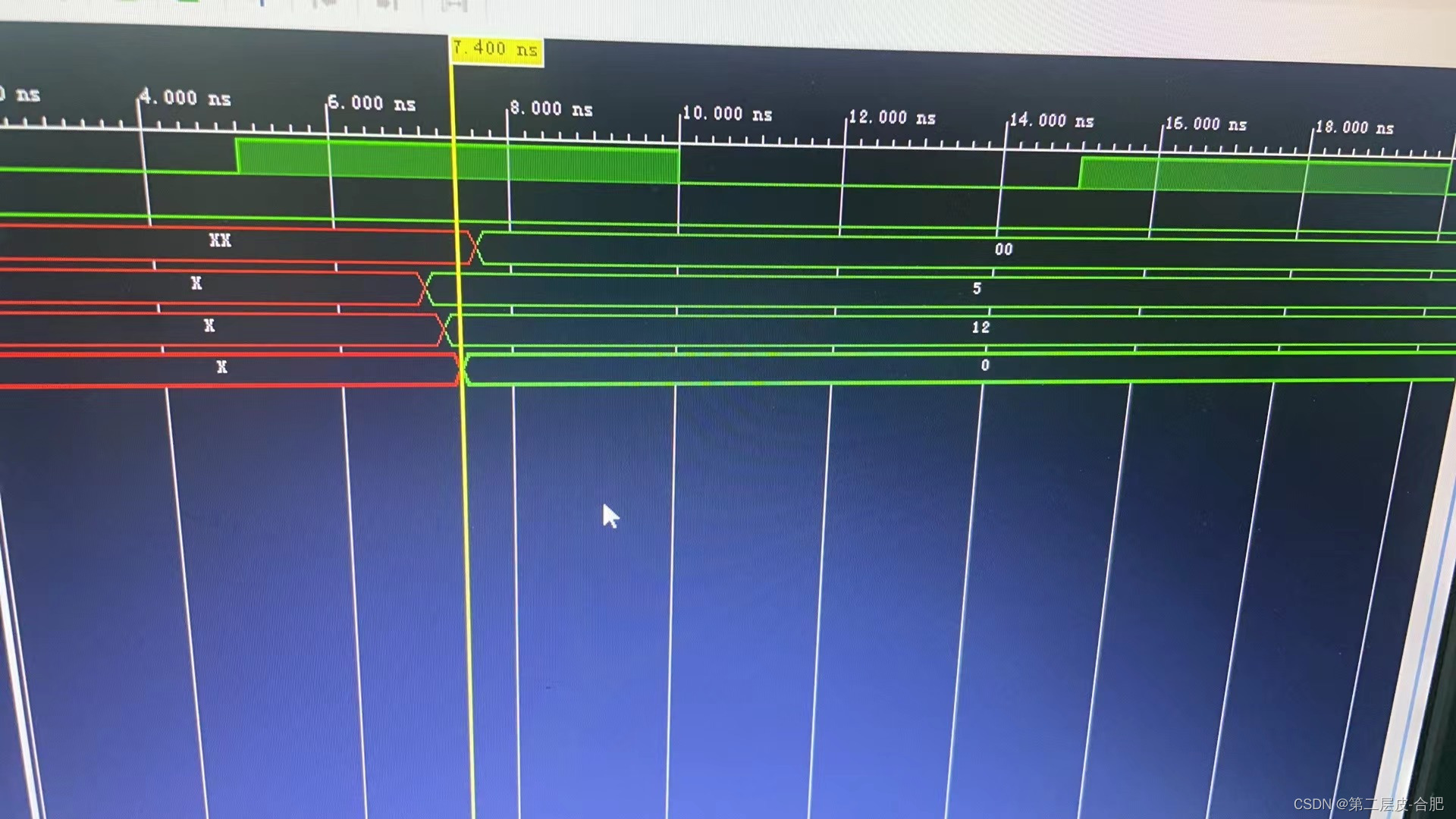

The printed output:

```text
1 4 15
2 45 101
3 24 131
4 4 46
5 1 1
```

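Each line reads `idx num len(ori_n)`: 15 crops went into the 1×1 container, but only 4 stitched images were produced; 101 crops went into the 2×2 container, producing 45 stitched images; 131 went into 3×3, producing 24; 46 went into 4×4, producing 4; and 1 went into 5×5, producing 1.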
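These counts follow directly from the training thresholds. A sketch that recomputes them with the same rules as the main loop (`stitched_count` is a hypothetical name; the crop counts are the third column of the output above):

```python
def stitched_count(n, num_crops, ratio, cap_1x1=80):
    """Number of stitched images the main loop produces for the n x n container."""
    threshold = ratio * num_crops
    if n == 1 and threshold > cap_1x1:  # 1x1 images are capped
        threshold = cap_1x1
    if num_crops < n * n:  # not enough crops to fill one grid: stitch once
        threshold = 1
    return int(threshold)


train_ratios = [0.3, 1 / 4 * 1.8, 1 / 9 * 1.7, 1 / 16 * 1.6, 1 / 25 * 1.5]
crops_per_grid = [15, 101, 131, 46, 1]
for n, (crops, ratio) in enumerate(zip(crops_per_grid, train_ratios), start=1):
    print(n, stitched_count(n, crops, ratio), crops)
```

For example, int(0.3 × 15) = 4 and int(0.45 × 101) = 45; the single 5×5 crop is fewer than 25 cells, so it is stitched exactly once. The printed lines match the output shown above.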

The stitched images are shown below:

1×1 grid:

2×2 grid:

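Blacked-out regions appear here, for example the hand in the bottom-right corner. That region is so blurry that its features are essentially gone, yet hands are one of the detection targets. Leaving it unlabeled would tell the model that the region is not a hand when it actually is, while labeling it would teach the model features that look too little like a hand, so blacking it out is the best option.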
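The blackout decision itself is a simple rule on box width and height. A sketch of it as a pure function (`should_black` is a hypothetical name; the thresholds are the script's train and test values), which also shows why test mode blacks out more: an 18×18 box survives in train mode but is blacked out in test mode.

```python
def should_black(box, label, to_black_num, to_black_min_num, label_black=("other",)):
    """pintu2black's rule for one (xmin, ymin, xmax, ymax) box in mosaic pixels."""
    xmin, ymin, xmax, ymax = box
    w, h = xmax - xmin, ymax - ymin
    small_both = w < to_black_num and h < to_black_num      # both sides small
    thin = w < to_black_min_num or h < to_black_min_num     # either side tiny
    return small_both or thin or label in label_black


TRAIN = (15, 5)   # to_black_num, to_black_min_num in train mode
TEST = (20, 10)   # the same thresholds in test mode
print(should_black((0, 0, 18, 18), "hand", *TRAIN))
print(should_black((0, 0, 18, 18), "hand", *TEST))
```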

3×3 grid:

4×4 grid:

5×5 grid:

Only one crop was this small, so this grid contains a single small image.

Drawbacks

The advantages have been covered throughout, so here are the drawbacks.

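- The aspect ratio of the images must not be too large. The size range is chosen by the longest side, and the image is resized so that its longest side matches the range while the aspect ratio is preserved. With a very large aspect ratio, the short side is still scaled by a large ratio, which can distort the image and destroy its features. (An improved version will be published in a later post.)

- With tens of thousands of images the run time gets fairly long; a later post may address this with multithreading.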
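To put numbers on the first drawback, a sketch of the longest-side resize using the same arithmetic as gt_resize (`resize_longest` is a hypothetical name). A hypothetical crop 1000 px wide and 60 px tall falls into both the 1×1 and 2×2 ranges, and its short side ends up only 24 px or 12 px tall.

```python
def resize_longest(h, w, target):
    """Return (new_h, new_w) after scaling the longest side to target, as gt_resize does."""
    if h < w:
        scale = target / w
        return int(h * scale), target
    scale = target / h
    return target, int(w * scale)


print(resize_longest(60, 1000, 416))   # extreme crop in the 1x1 grid
print(resize_longest(60, 1000, 208))   # the same crop in the 2x2 grid
print(resize_longest(300, 250, 208))   # an ordinary crop, for comparison
```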