- WebRTC version: branch 5481 (M110)

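Another year has passed, so it is time to look again at what has improved in the lower layers, once more starting from video_loopback.

For now I want to set aside signaling and negotiation and look only at what has changed in the media layer, which is why I start from this demo.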

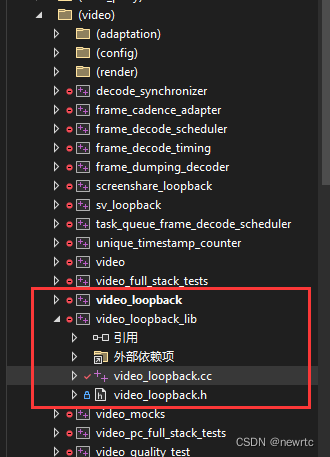

video_loopback

Project path: src/video/video_loopback

video_loopback_main.cc contains a single function, main, which calls webrtc::RunLoopbackTest.

video_loopback_lib

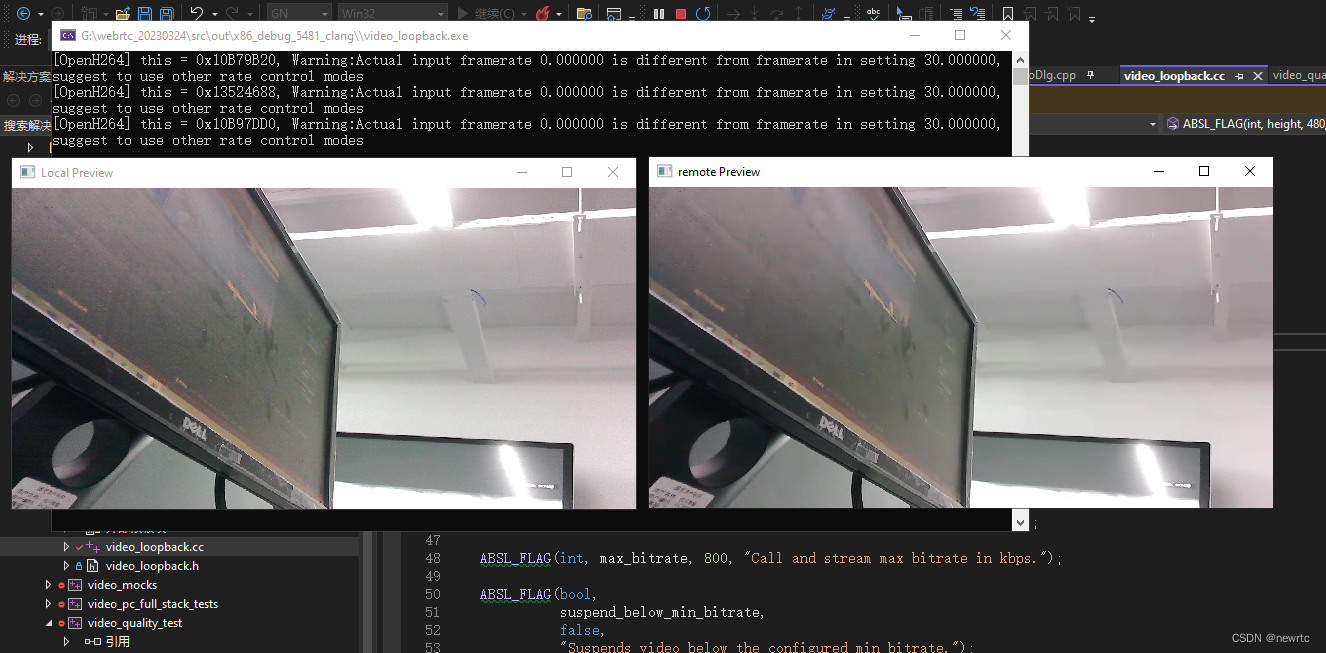

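This file mainly holds the configuration flags for the test. The ones I changed for my testing are listed below.

// Changed: encode with H264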

// Flags common with screenshare loopback, with equal default values.

ABSL_FLAG(std::string, codec, "H264" /*"VP8"*/, "Video codec to use.");

// Changed: enable the audio test

ABSL_FLAG(bool, audio, true, "Add audio stream");

// Changed: enable real audio capture

ABSL_FLAG(bool,

use_real_adm,

true,/* false,*/

"Use real ADM instead of fake (no effect if audio is false)");

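With these flags changed we can step through the code in a debugger. Next, trace into webrtc::test::RunTest(webrtc::Loopback);

- Loopback() only initializes the parameters; take a quick look to confirm that the initialized values are the ones we want.

- Step into fixture->RunWithRenderers(params);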

video_quality_test

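The main flow lives in video_quality_test.cc.

Tracing it, the program crashes as soon as it runs. This is the same problem as in previous versions; for the fix, see my earlier article:

https://newrtc.blog.csdn.net/article/details/118379956

It now runs, but there is no sound. Has something gone wrong? This demo is getting less and less dependable. Let's track down the audio problem first and analyze the code afterwards.

The code is in video_quality_test.cc.

To fix the missing sound first: the code shows that a new audio capture module is used on Windows, and I don't know why it produces no sound. When I have time I will look into why the original CoreAudio implementation was replaced; surely it can't just be to pad someone's KPIs.

Here is the code: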

rtc::scoped_refptr<AudioDeviceModule> VideoQualityTest::CreateAudioDevice() {

// Added this return: it creates the ADM through the original CoreAudio capture path (the code below it becomes unreachable)

return AudioDeviceModule::Create(AudioDeviceModule::kPlatformDefaultAudio,

task_queue_factory_.get());

// This is the test code in the new version; I only traced through it roughly

#ifdef WEBRTC_WIN

RTC_LOG(LS_INFO) << "Using latest version of ADM on Windows";

// We must initialize the COM library on a thread before we calling any of

// the library functions. All COM functions in the ADM will return

// CO_E_NOTINITIALIZED otherwise. The legacy ADM for Windows used internal

// COM initialization but the new ADM requires COM to be initialized

// externally.

com_initializer_ =

std::make_unique<ScopedCOMInitializer>(ScopedCOMInitializer::kMTA);

RTC_CHECK(com_initializer_->Succeeded());

RTC_CHECK(webrtc_win::core_audio_utility::IsSupported());

RTC_CHECK(webrtc_win::core_audio_utility::IsMMCSSSupported());

return CreateWindowsCoreAudioAudioDeviceModule(task_queue_factory_.get());

#else

// This is the original path, which works correctly

// Use legacy factory method on all platforms except Windows.

return AudioDeviceModule::Create(AudioDeviceModule::kPlatformDefaultAudio,

task_queue_factory_.get());

#endif

}

At this point both audio and video in the demo pass the test. Next, the code flow.

Initialization

We only look at a few important functions.

void VideoQualityTest::RunWithRenderers(const Params& params) {

// Send and receive transports; they derive from Transport and implement the SendRtp and SendRtcp interfaces

std::unique_ptr<test::LayerFilteringTransport> send_transport;

std::unique_ptr<test::DirectTransport> recv_transport;

// Renderers for the captured frames and the decoded frames (YUV420P)

std::unique_ptr<test::VideoRenderer> local_preview;

std::vector<std::unique_ptr<test::VideoRenderer>> loopback_renderers;

// Initialize the audio module. Two Calls (send and receive) are created here, although a single Call would also work

InitializeAudioDevice(&send_call_config, &recv_call_config,

params_.audio.use_real_adm);

// Create the send Call and the receive Call

CreateCalls(send_call_config, recv_call_config);

// Create the transports

send_transport = CreateSendTransport();

recv_transport = CreateReceiveTransport();

// TODO(ivica): Use two calls to be able to merge with RunWithAnalyzer or at

// least share as much code as possible. That way this test would also match

// the full stack tests better.

// Set each transport's receiver so that RTP and RTCP packets, as if received from the network, are passed to the Call

send_transport->SetReceiver(receiver_call_->Receiver());

recv_transport->SetReceiver(sender_call_->Receiver());

// Set up video and apply its configuration; analyzed in detail later

SetupVideo(send_transport.get(), recv_transport.get());

// Create the video streams

CreateVideoStreams();

// Create the video capturers

CreateCapturers();

// Connect the capturers to the video streams

ConnectVideoSourcesToStreams();

// Set up audio and create the audio streams

SetupAudio(send_transport.get());

// Start the test

Start();

}
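The cross-wiring of the two transports is the core of the loopback: whatever the sender hands to send_transport is delivered to the receiver Call, and the reverse path carries feedback back to the sender Call. The following is a minimal sketch of that idea only. PacketReceiverSketch, DirectTransportSketch, CallSketch and LoopbackDeliversBothWays are hypothetical stand-ins invented for illustration and are not the WebRTC classes; the real transports also simulate network behavior, which is omitted here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a Call's packet receiver: it just stores packets.
struct PacketReceiverSketch {
  std::vector<std::vector<uint8_t>> delivered;
  void DeliverPacket(const uint8_t* data, size_t size) {
    assert(data != nullptr);
    delivered.emplace_back(data, data + size);
  }
};

// Hypothetical stand-in for a transport that forwards directly to a receiver.
class DirectTransportSketch {
 public:
  void SetReceiver(PacketReceiverSketch* receiver) { receiver_ = receiver; }

  // Returns false when no receiver has been attached yet.
  bool SendRtp(const uint8_t* data, size_t size) {
    if (receiver_ == nullptr) return false;
    receiver_->DeliverPacket(data, size);
    return true;
  }

 private:
  PacketReceiverSketch* receiver_ = nullptr;
};

// Hypothetical stand-in for a Call that exposes its receiver.
struct CallSketch {
  PacketReceiverSketch receiver;
  PacketReceiverSketch* Receiver() { return &receiver; }
};

// Wires two calls back to back and pushes one packet in each direction.
inline bool LoopbackDeliversBothWays() {
  CallSketch sender_call;
  CallSketch receiver_call;
  DirectTransportSketch send_transport;
  DirectTransportSketch recv_transport;

  // Same cross-wiring as in RunWithRenderers.
  send_transport.SetReceiver(receiver_call.Receiver());
  recv_transport.SetReceiver(sender_call.Receiver());

  const uint8_t media[] = {0x80, 0x60, 0x00, 0x01};
  const uint8_t feedback[] = {0x80, 0xC9, 0x00, 0x01};
  const bool sent = send_transport.SendRtp(media, sizeof(media)) &&
                    recv_transport.SendRtp(feedback, sizeof(feedback));
  return sent && receiver_call.receiver.delivered.size() == 1 &&
         sender_call.receiver.delivered.size() == 1;
}
```

Calling LoopbackDeliversBothWays() from a small main is enough to try it out. A transport with no receiver attached simply refuses to send in this sketch.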

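Analysis of key functions

Let's look at the audio send and receive flow first; video is similar.

1. Audio device initialization, which happens before the Calls are created

Entry point: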

InitializeAudioDevice(&send_call_config, &recv_call_config,

params_.audio.use_real_adm);

void VideoQualityTest::InitializeAudioDevice(Call::Config* send_call_config,

Call::Config* recv_call_config,

bool use_real_adm) {

rtc::scoped_refptr<AudioDeviceModule> audio_device;

if (use_real_adm) {

// Run test with real ADM (using default audio devices) if user has

// explicitly set the --audio and --use_real_adm command-line flags.

// Our test flags select the real audio device, which is created here

audio_device = CreateAudioDevice();

} else {

// By default, create a test ADM which fakes audio.

audio_device = TestAudioDeviceModule::Create(

task_queue_factory_.get(),

TestAudioDeviceModule::CreatePulsedNoiseCapturer(32000, 48000),

TestAudioDeviceModule::CreateDiscardRenderer(48000), 1.f);

}

RTC_CHECK(audio_device);

AudioState::Config audio_state_config;

audio_state_config.audio_mixer = AudioMixerImpl::Create();

audio_state_config.audio_processing = AudioProcessingBuilder().Create();

audio_state_config.audio_device_module = audio_device;

// Pass the device in as a parameter for creating the Calls; multiple Calls can share one device

send_call_config->audio_state = AudioState::Create(audio_state_config);

recv_call_config->audio_state = AudioState::Create(audio_state_config);

if (use_real_adm) {

// The real ADM requires extra initialization: setting default devices,

// setting up number of channels etc. Helper class also calls

// AudioDeviceModule::Init().

// Initialization happens in here: selecting the audio capture and playout devices

webrtc::adm_helpers::Init(audio_device.get());

} else {

audio_device->Init();

}

// Always initialize the ADM before injecting a valid audio transport.

RTC_CHECK(audio_device->RegisterAudioCallback(

send_call_config->audio_state->audio_transport()) == 0);

}
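Two points in this function are easy to miss: both Call configs end up referring to the same device, and the device is initialized before the audio callback is registered. The sketch below illustrates only those two points. AudioDeviceSketch, CallConfigSketch and InitializeAudioDeviceSketch are hypothetical names, and rejecting registration before Init() is an assumption made for the sketch, not documented ADM behavior.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for an audio device module.
struct AudioDeviceSketch {
  bool initialized = false;
  bool has_callback = false;

  int Init() {
    initialized = true;
    return 0;
  }

  // Assumption for this sketch only: registration fails before Init().
  int RegisterAudioCallback() {
    if (!initialized) return -1;
    has_callback = true;
    return 0;
  }
};

// Hypothetical stand-in for the part of a Call config that holds the device.
struct CallConfigSketch {
  std::shared_ptr<AudioDeviceSketch> audio_device;
};

// Creates one device, shares it between both configs, then initializes it
// and registers the callback, in that order. Returns 0 on success.
inline int InitializeAudioDeviceSketch(CallConfigSketch* send_config,
                                       CallConfigSketch* recv_config) {
  assert(send_config != nullptr && recv_config != nullptr);
  auto device = std::make_shared<AudioDeviceSketch>();
  send_config->audio_device = device;  // Shared ownership, one device.
  recv_config->audio_device = device;
  if (device->Init() != 0) return -1;
  return device->RegisterAudioCallback();
}
```

Shared ownership is modeled with std::shared_ptr here; the real code uses rtc::scoped_refptr for the same purpose.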

CreateAudioDevice() calls AudioDeviceModule to create the device:

rtc::scoped_refptr<AudioDeviceModule> VideoQualityTest::CreateAudioDevice() {

return AudioDeviceModule::Create(AudioDeviceModule::kPlatformDefaultAudio,

task_queue_factory_.get());

}

webrtc::adm_helpers::Init(audio_device.get()); is then called to configure the audio devices:

void Init(AudioDeviceModule* adm) {

RTC_DCHECK(adm);

RTC_CHECK_EQ(0, adm->Init()) << "Failed to initialize the ADM.";

// Playout device.

{

// Set the playout device ID; the ADM can enumerate the available devices

if (adm->SetPlayoutDevice(AUDIO_DEVICE_ID) != 0) {

RTC_LOG(LS_ERROR) << "Unable to set playout device.";

return;

}

// Initialize the audio playout device

if (adm->InitSpeaker() != 0) {

RTC_LOG(LS_ERROR) << "Unable to access speaker.";

}

// Set number of channels

bool available = false;

// Check for stereo support

if (adm->StereoPlayoutIsAvailable(&available) != 0) {

RTC_LOG(LS_ERROR) << "Failed to query stereo playout.";

}

if (adm->SetStereoPlayout(available) != 0) {

RTC_LOG(LS_ERROR) << "Failed to set stereo playout mode.";

}

}

// Recording device.

{

// Set the microphone device ID; likewise, it is selected by ID through the ADM

if (adm->SetRecordingDevice(AUDIO_DEVICE_ID) != 0) {

RTC_LOG(LS_ERROR) << "Unable to set recording device.";

return;

}

// Initialize the microphone

if (adm->InitMicrophone() != 0) {

RTC_LOG(LS_ERROR) << "Unable to access microphone.";

}

// Set number of channels

bool available = false;

if (adm->StereoRecordingIsAvailable(&available) != 0) {

RTC_LOG(LS_ERROR) << "Failed to query stereo recording.";

}

if (adm->SetStereoRecording(available) != 0) {

RTC_LOG(LS_ERROR) << "Failed to set stereo recording mode.";

}

}

}
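The channel setup in this helper follows a query-then-set pattern: the availability flag starts as false, a failed query is only logged, and the set call then receives whatever value the flag holds. A failed query therefore falls back to mono. The sketch below illustrates the pattern with a fake device. FakeAdm and ConfigurePlayoutChannels are hypothetical names, and the fake's behavior (rejecting stereo when unsupported) is an assumption for illustration rather than a statement about any real platform ADM.

```cpp
#include <cassert>
#include <iostream>

// Hypothetical fake device; the fields control how it responds.
struct FakeAdm {
  bool stereo_supported = false;
  bool query_fails = false;
  bool stereo_enabled = false;

  // Mirrors the out-parameter style of the helper above.
  int StereoPlayoutIsAvailable(bool* available) const {
    assert(available != nullptr);
    if (query_fails) return -1;
    *available = stereo_supported;
    return 0;
  }

  // Assumption: enabling stereo on a mono-only device is an error.
  int SetStereoPlayout(bool enable) {
    if (enable && !stereo_supported) return -1;
    stereo_enabled = enable;
    return 0;
  }
};

// Query-then-set: errors are logged and setup continues, so a failed query
// leaves `available` at false and the device ends up in mono.
inline bool ConfigurePlayoutChannels(FakeAdm& adm) {
  bool available = false;
  if (adm.StereoPlayoutIsAvailable(&available) != 0) {
    std::cerr << "Failed to query stereo playout.\n";
  }
  if (adm.SetStereoPlayout(available) != 0) {
    std::cerr << "Failed to set stereo playout mode.\n";
  }
  return adm.stereo_enabled;
}
```

The recording side uses the same pattern with the recording variants of the two calls.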

2. Creating the Calls

CreateCalls

void CallTest::CreateSenderCall(const Call::Config& config) {

auto sender_config = config;

sender_config.task_queue_factory = task_queue_factory_.get();

sender_config.network_state_predictor_factory =

network_state_predictor_factory_.get();

sender_config.network_controller_factory = network_controller_factory_.get();

sender_config.trials = &field_trials_;

sender_call_.reset(Call::Create(sender_config));

}

void CallTest::CreateReceiverCall(const Call::Config& config) {

auto receiver_config = config;

receiver_config.task_queue_factory = task_queue_factory_.get();

receiver_config.trials = &field_trials_;

receiver_call_.reset(Call::Create(receiver_config));

}

3. Audio encoder setup

void VideoQualityTest::SetupAudio(Transport* transport) {

AudioSendStream::Config audio_send_config(transport);

audio_send_config.rtp.ssrc = kAudioSendSsrc;

// Add extension to enable audio send side BWE, and allow audio bit rate

// adaptation.

audio_send_config.rtp.extensions.clear();

// Use Opus at 48 kHz, stereo

audio_send_config.send_codec_spec = AudioSendStream::Config::SendCodecSpec(

kAudioSendPayloadType,

{"OPUS",

48000,

2,

{{"usedtx", (params_.audio.dtx ? "1" : "0")}, {"stereo", "1"}}});

if (params_.call.send_side_bwe) {

audio_send_config.rtp.extensions.push_back(

webrtc::RtpExtension(webrtc::RtpExtension::kTransportSequenceNumberUri,

kTransportSequenceNumberExtensionId));

audio_send_config.min_bitrate_bps = kOpusMinBitrateBps;

audio_send_config.max_bitrate_bps = kOpusBitrateFbBps;

audio_send_config.send_codec_spec->transport_cc_enabled = true;

// Only allow ANA when send-side BWE is enabled.

audio_send_config.audio_network_adaptor_config = params_.audio.ana_config;

}

audio_send_config.encoder_factory = audio_encoder_factory_;

SetAudioConfig(audio_send_config);

std::string sync_group;

if (params_.video[0].enabled && params_.audio.sync_video)

sync_group = kSyncGroup;

CreateMatchingAudioConfigs(transport, sync_group);

CreateAudioStreams();

}
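The send codec spec above is essentially a codec name, a clock rate, a channel count and a key/value map of format parameters ("usedtx" and "stereo"). The sketch below shows how such a map can be built and rendered as a parameter string. CodecSpecSketch, MakeOpusSpec and FormatParameters are hypothetical names, and the "key=value;key=value" rendering is an illustrative choice, not WebRTC's own serialization code.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical stand-in for an audio format description.
struct CodecSpecSketch {
  std::string name;
  int clockrate_hz;
  int channels;
  std::map<std::string, std::string> parameters;
};

// Builds the same values as SetupAudio: Opus, 48 kHz, two channels, with
// "usedtx" driven by a flag and "stereo" always on.
inline CodecSpecSketch MakeOpusSpec(bool dtx) {
  return CodecSpecSketch{
      "OPUS", 48000, 2, {{"usedtx", dtx ? "1" : "0"}, {"stereo", "1"}}};
}

// Renders the parameters as "key=value" pairs joined by ';'.
// std::map iterates in key order, so "stereo" comes before "usedtx".
inline std::string FormatParameters(const CodecSpecSketch& spec) {
  assert(!spec.name.empty());
  std::string out;
  for (const auto& kv : spec.parameters) {
    if (!out.empty()) out += ';';
    out += kv.first + "=" + kv.second;
  }
  return out;
}
```

With DTX disabled, FormatParameters(MakeOpusSpec(false)) yields "stereo=1;usedtx=0".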

4. Creating the audio streams

void CallTest::CreateAudioStreams() {

RTC_DCHECK(audio_send_stream_ == nullptr);

RTC_DCHECK(audio_receive_streams_.empty());

// Create the audio send stream through the Call

audio_send_stream_ = sender_call_->CreateAudioSendStream(audio_send_config_);

for (size_t i = 0; i < audio_receive_configs_.size(); ++i) {

audio_receive_streams_.push_back(

// Create the audio receive stream through the Call

receiver_call_->CreateAudioReceiveStream(audio_receive_configs_[i]));

}

}

5. Starting audio and video

void CallTest::Start() {

StartVideoStreams();

if (audio_send_stream_) {

// Start sending audio

audio_send_stream_->Start();

}

for (AudioReceiveStreamInterface* audio_recv_stream : audio_receive_streams_)

// Start receiving audio

audio_recv_stream->Start();

}