文章目录

- 1、环境

- 2、Temporal Joins

- 2.1、基于处理时间(重点)

- 2.1.1、设置状态保留时间

- 2.2、基于事件时间

- 3、Lookup Join(重点)

- 4、Interval Joins(基于间隔JOIN)

重点是Lookup Join和Processing Time Temporal Join,其它随意

1、环境

WIN10+IDEA2021+JDK1.8+本地MySQL8

<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<flink.version>1.13.6</flink.version>

<scala.binary.version>2.12</scala.binary.version>

<slf4j.version>2.0.3</slf4j.version>

<log4j.version>2.17.2</log4j.version>

<fastjson.version>2.0.19</fastjson.version>

<lombok.version>1.18.24</lombok.version>

</properties>

<!-- https://mvnrepository.com/ -->

<dependencies>

<!-- Flink -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-runtime-web_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- FlinkSQL -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-csv</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-json</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- 日志 -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-to-slf4j</artifactId>

<version>${log4j.version}</version>

</dependency>

<!-- JSON解析 -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>${fastjson.version}</version>

</dependency>

<!-- 简化JavaBean书写 -->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>${lombok.version}</version>

</dependency>

</dependencies>

2、Temporal Joins

2.1、基于处理时间(重点)

import lombok.AllArgsConstructor;

import lombok.Data;

import lombok.NoArgsConstructor;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class Hi {

public static void main(String[] args) {

//创建流执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment().setParallelism(1);

//创建流式表执行环境

StreamTableEnvironment tbEnv = StreamTableEnvironment.create(env);

//双流

DataStreamSource<Tuple2<String, Integer>> d1 = env.fromElements(

Tuple2.of("a", 2),

Tuple2.of("b", 3));

DataStreamSource<P> d2 = env.fromElements(

new P("a", 4000L),

new P("b", 5000L));

//创建临时视图

tbEnv.createTemporaryView("v1", d1);

tbEnv.createTemporaryView("v2", d2);

//双流JOIN

tbEnv.sqlQuery("SELECT * FROM v1 LEFT JOIN v2 ON v1.f0=v2.pid").execute().print();

}

@Data

@NoArgsConstructor

@AllArgsConstructor

public static class P {

private String pid;

private Long timestamp;

}

}

结果

+----+-------+-------+-------------+-------------+

| op | f0 | f1 | pid | timestamp |

+----+-------+-------+-------------+-------------+

| +I | a | 2 | (NULL) | (NULL) |

| -D | a | 2 | (NULL) | (NULL) |

| +I | a | 2 | a | 4000 |

| +I | b | 3 | (NULL) | (NULL) |

| -D | b | 3 | (NULL) | (NULL) |

| +I | b | 3 | b | 5000 |

+----+-------+-------+-------------+-------------+

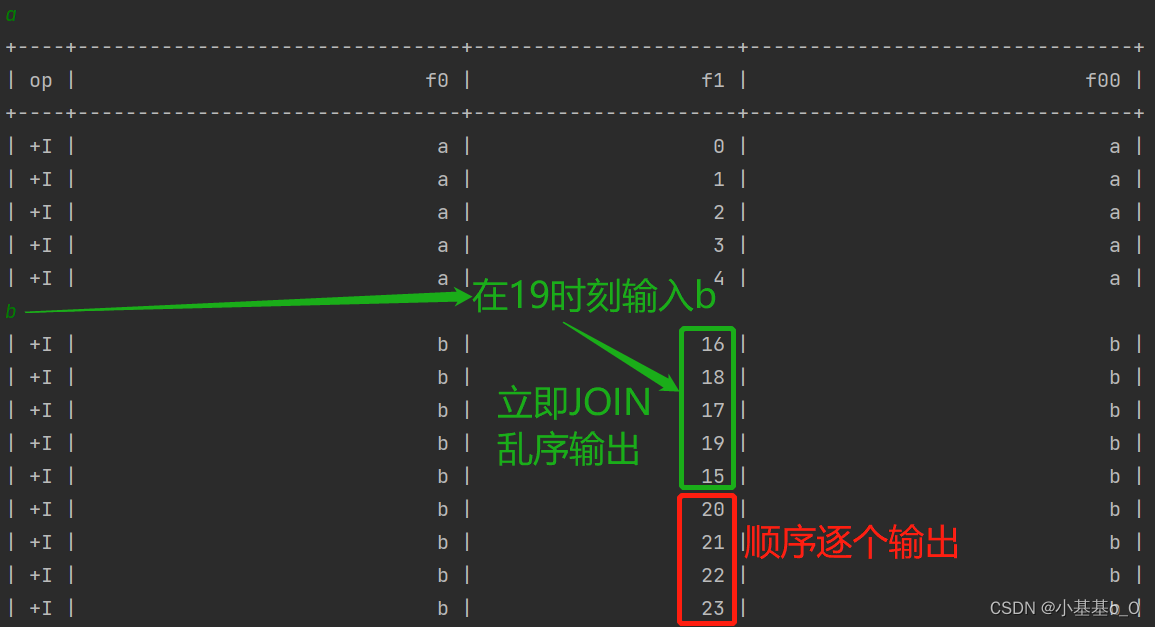

2.1.1、设置状态保留时间

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import java.time.Duration;

import java.util.Scanner;

public class Hi {

public static void main(String[] args) {

//创建流执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment().setParallelism(1);

//创建流式表执行环境

StreamTableEnvironment tbEnv = StreamTableEnvironment.create(env);

//设置状态保留时间

tbEnv.getConfig().setIdleStateRetention(Duration.ofSeconds(5L));

//双流

DataStreamSource<Tuple2<String, Long>> d1 = env.addSource(new AutomatedSource());

DataStreamSource<String> d2 = env.addSource(new ManualSource());

//创建临时视图

tbEnv.createTemporaryView("v1", d1);

tbEnv.createTemporaryView("v2", d2);

//双流JOIN

tbEnv.sqlQuery("SELECT * FROM v1 INNER JOIN v2 ON v1.f0=v2.f0").execute().print();

}

/** 手动输入的数据源(请输入a或b进行测试) */

public static class ManualSource implements SourceFunction<String> {

public ManualSource() {}

@Override

public void run(SourceFunction.SourceContext<String> sc) {

Scanner scanner = new Scanner(System.in);

while (true) {

String str = scanner.nextLine().trim();

if (str.equals("STOP")) {break;}

if (!str.equals("")) {sc.collect(str);}

}

scanner.close();

}

@Override

public void cancel() {}

}

/** 自动输入的数据源 */

public static class AutomatedSource implements SourceFunction<Tuple2<String, Long>> {

public AutomatedSource() {}

@Override

public void run(SourceFunction.SourceContext<Tuple2<String, Long>> sc) throws InterruptedException {

for (long i = 0L; i < 999L; i++) {

Thread.sleep(1000L);

sc.collect(Tuple2.of("a", i));

sc.collect(Tuple2.of("b", i));

}

}

@Override

public void cancel() {}

}

}

测试结果

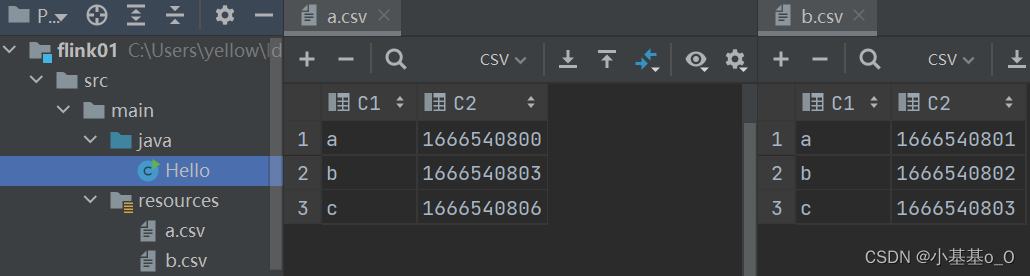

2.2、基于事件时间

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

public class Hello {

public static void main(String[] args) {

//创建流和表的执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment().setParallelism(1);

StreamTableEnvironment tbEnv = StreamTableEnvironment.create(env);

//创建数据流,设定水位线

tbEnv.executeSql("CREATE TABLE v1 (" +

" x STRING PRIMARY KEY," +

" y BIGINT," +

" ts AS to_timestamp(from_unixtime(y,'yyyy-MM-dd HH:mm:ss'))," +

" watermark FOR ts AS ts - INTERVAL '2' SECOND" +

") WITH (" +

" 'connector'='filesystem'," +

" 'path'='src/main/resources/a.csv'," +

" 'format'='csv'" +

")");

tbEnv.executeSql("CREATE TABLE v2 (" +

" x STRING PRIMARY KEY," +

" y BIGINT," +

" ts AS to_timestamp(from_unixtime(y,'yyyy-MM-dd HH:mm:ss'))," +

" watermark FOR ts AS ts - INTERVAL '2' SECOND" +

") WITH (" +

" 'connector'='filesystem'," +

" 'path'='src/main/resources/b.csv'," +

" 'format'='csv'" +

")");

//执行查询

tbEnv.sqlQuery("SELECT * " +

"FROM v1 " +

"LEFT JOIN v2 FOR SYSTEM_TIME AS OF v1.ts " +

"ON v1.x = v2.x"

).execute().print();

}

}

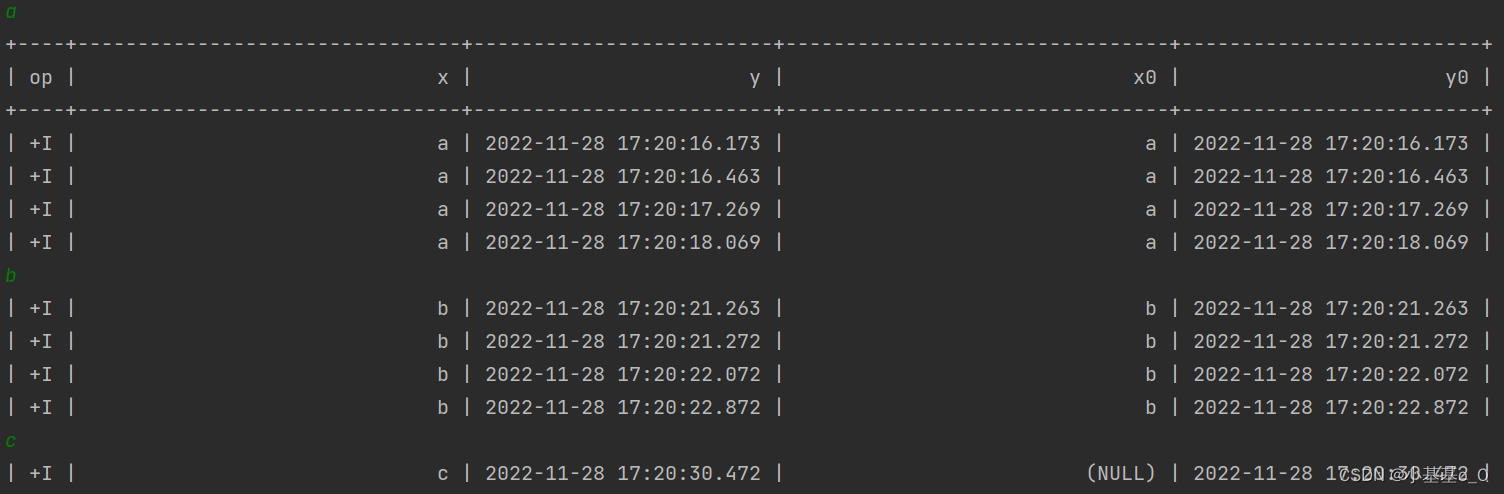

打印结果

+----+---+------------+-------------------------+--------+------------+-------------------------+

| op | x | y | ts | x0 | y0 | ts0 |

+----+---+------------+-------------------------+--------+------------+-------------------------+

| +I | a | 1666540800 | 2022-10-24 00:00:00.000 | (NULL) | (NULL) | (NULL) |

| +I | b | 1666540803 | 2022-10-24 00:00:03.000 | b | 1666540802 | 2022-10-24 00:00:02.000 |

| +I | c | 1666540806 | 2022-10-24 00:00:06.000 | c | 1666540803 | 2022-10-24 00:00:03.000 |

+----+---+------------+-------------------------+--------+------------+-------------------------+

3、Lookup Join(重点)

Lookup Join是基于Processing Time Temporal Join的

详细链接:https://yellow520.blog.csdn.net/article/details/128070761

4、Interval Joins(基于间隔JOIN)

SQL

SELECT * FROM v1

LEFT JOIN v2 ON v1.x=v2.x AND

v1.y BETWEEN (v2.y - INTERVAL '2' SECOND) AND (v2.y + INTERVAL '1' SECOND)

Java

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.source.SourceFunction;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import java.util.Scanner;

import static org.apache.flink.table.api.Expressions.$;

public class Hi {

public static void main(String[] args) {

//创建流和表的执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment().setParallelism(1);

StreamTableEnvironment tbEnv = StreamTableEnvironment.create(env);

//创建数据流

DataStreamSource<String> d1 = env.addSource(new ManualSource());

DataStreamSource<String> d2 = env.addSource(new AutomatedSource());

//创建动态表,并声明一个额外的字段来作为处理时间字段

Table t1 = tbEnv.fromDataStream(d1, $("x"), $("y").proctime());

Table t2 = tbEnv.fromDataStream(d2, $("x"), $("y").proctime());

//创建临时视图

tbEnv.createTemporaryView("v1", t1);

tbEnv.createTemporaryView("v2", t2);

//执行查询

tbEnv.sqlQuery("SELECT * FROM v1 LEFT JOIN v2 ON v1.x=v2.x AND " +

"v1.y BETWEEN (v2.y - INTERVAL '2' SECOND) AND (v2.y + INTERVAL '1' SECOND)"

).execute().print();

}

/** 手动输入的数据源 */

public static class ManualSource implements SourceFunction<String> {

public ManualSource() {}

@Override

public void run(SourceFunction.SourceContext<String> sc) {

Scanner scanner = new Scanner(System.in);

while (true) {

String str = scanner.nextLine().trim();

if (str.equals("STOP")) {break;}

if (!str.equals("")) {sc.collect(str);}

}

scanner.close();

}

@Override

public void cancel() {}

}

/** 自动输入的数据源 */

public static class AutomatedSource implements SourceFunction<String> {

public AutomatedSource() {}

@Override

public void run(SourceFunction.SourceContext<String> sc) throws InterruptedException {

for (int i = 0; i < 999; i++) {

Thread.sleep(801);

sc.collect("a");

sc.collect("b");

}

}

@Override

public void cancel() {}

}

}

测试结果

![[附源码]计算机毕业设计springboot青栞系统](https://img-blog.csdnimg.cn/24cd620699b34fde958ed69f48a0fa9b.png)

![[附源码]JAVA毕业设计工程车辆动力电池管理系统(系统+LW)](https://img-blog.csdnimg.cn/223111ab239c4149aa7e6a35f7e035f9.png)

![[附源码]JAVA毕业设计个人信息管理系统(系统+LW)](https://img-blog.csdnimg.cn/f3ed4c3b1c9e462883690461440f89b8.png)