Preface:

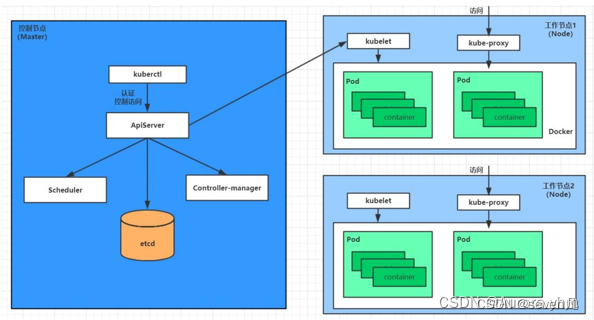

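In real production work, developers often build many Docker images of their own. Without a registry, each image has to be packed into a tar file, uploaded to a server, and imported there before it can be used. A Kubernetes cluster has many nodes, though, and it is often not known in advance which node will run a given Pod. So either the tar file is uploaded to every node, or the Pods are pinned to specific nodes with node affinity at deploy time. Clearly neither approach is elegant.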

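A better solution is to push the images we need to a self-hosted private registry. The deployment then only has to point at that registry. A private registry such as Harbor also offers vulnerability scanning, which improves the security of the whole system.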

Below is a simple walkthrough of using a private image registry from a Kubernetes cluster.

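1.

Before Kubernetes can use a private image registry, you need one that is up and working.

The private registry in this article is Harbor, running on the server at 192.168.217.23.

For how to set it up, see my previous article: harbor仓库的构建及简单使用(修订版) (Building and basic use of a Harbor registry, revised) on the 晚风_END CSDN blog.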

2.

Check that the private registry is available

```
[root@node3 manifests]# systemctl status harbor
● harbor.service - Harbor
   Loaded: loaded (/usr/lib/systemd/system/harbor.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2022-12-01 11:43:31 CST; 1h 22min ago
     Docs: http://github.com/vmware/harbor
 Main PID: 2690 (docker-compose)
   Memory: 41.6M
   CGroup: /system.slice/harbor.service
           ├─2690 /usr/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up
           └─2876 /usr/bin/docker-compose -f /usr/local/harbor/docker-compose.yml up

Dec 01 11:43:33 node3 docker-compose[2690]: harbor-jobservice is up-to-date
Dec 01 11:43:33 node3 docker-compose[2690]: nginx is up-to-date
Dec 01 11:43:33 node3 docker-compose[2690]: Attaching to harbor-log, redis, harbor-adminserver, registry, harbor-db, harbor-ui, harbor-jobservice, nginx
Dec 01 11:43:33 node3 docker-compose[2690]: harbor-adminserver | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: harbor-db | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: harbor-jobservice | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: harbor-ui | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: nginx | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: redis | WARNING: no logs are available with the 'syslog' log driver
Dec 01 11:43:33 node3 docker-compose[2690]: registry | WARNING: no logs are available with the 'syslog' log driver
```
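You can also script the `docker-compose ps` health check that comes next. The sketch below is a minimal version. It assumes the classic `docker-compose` (v1) table layout shown below: a header row, a dashed separator, then one row per container. `harbor_healthy` is a hypothetical helper name. It flags any container that is not `Up` or that reports `(unhealthy)`, and it lets through containers with no health check, which only show `Up`.

```shell
# Hypothetical helper: read `docker-compose ps` output on stdin and fail
# if any container is not "Up" or reports "(unhealthy)".
# Containers without a healthcheck (redis, harbor-jobservice here) only show "Up".
harbor_healthy() {
  # NR > 2 skips the header row and the dashed separator; NF > 0 skips blank lines.
  bad=$(awk 'NR > 2 && NF > 0 && ($0 !~ / Up/ || $0 ~ /\(unhealthy\)/) { print $1 }')
  if [ -n "$bad" ]; then
    echo "not healthy: $bad" >&2
    return 1
  fi
  echo "all containers up"
}
```

Run it from the directory that holds Harbor's `docker-compose.yml` (here `/usr/local/harbor`), for example `cd /usr/local/harbor && docker-compose ps | harbor_healthy`. It is a text filter, not a substitute for Harbor's own health endpoints.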

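Then run the health check below from the Harbor installation directory. Every container that reports a health status should be `healthy`. Containers without a health check, such as `redis` and `harbor-jobservice` here, only show `Up`. If any container is `unhealthy`, the registry is not usable. In that case, restarting the server (or the `harbor` service) usually restores it.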

```
[root@node3 harbor]# docker-compose ps
       Name                     Command                  State                                  Ports
-------------------------------------------------------------------------------------------------------------------------------------
harbor-adminserver   /harbor/start.sh                 Up (healthy)
harbor-db            /usr/local/bin/docker-entr ...   Up (healthy)   3306/tcp
harbor-jobservice    /harbor/start.sh                 Up
harbor-log           /bin/sh -c /usr/local/bin/ ...   Up (healthy)   127.0.0.1:1514->10514/tcp
harbor-ui            /harbor/start.sh                 Up (healthy)
nginx                nginx -g daemon off;             Up (healthy)   0.0.0.0:443->443/tcp, 0.0.0.0:4443->4443/tcp, 0.0.0.0:80->80/tcp
redis                docker-entrypoint.sh redis ...   Up             6379/tcp
registry             /entrypoint.sh serve /etc/ ...   Up (healthy)   5000/tcp
```

Next, log in to the private registry so that Docker writes its login record file. This works the same way for an HTTPS or an HTTP registry: all that matters is that the login succeeds. If you log in to several private registries, every one of them is recorded in the same file.

The login record file is the key to letting Kubernetes use the private registry. In the next steps it is turned into a Secret, and every workload that uses images from the private registry references that Secret in its manifest.

```
[root@node3 harbor]# docker login https://192.168.217.23
Authenticating with existing credentials...
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
[root@node3 harbor]# cat /root/.docker/config.json
{
	"auths": {
		"192.168.217.23": {
			"auth": "YWRtaW46U2hpZ3VhbmdfMzI="
		}
	},
	"HttpHeaders": {
		"User-Agent": "Docker-Client/19.03.9 (linux)"
	}
}
```
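The `auth` value above is not a token issued by Harbor. Docker stores it as the Base64 encoding of `username:password`, so without a credential helper this file is effectively a plaintext credential, which is what the warning refers to. Step 3 below Base64-encodes the whole file a second time for the Secret. The sketch below shows the round trip for one `auth` field. It uses the hypothetical credentials `admin` / `Harbor12345` and the hypothetical helper names `make_auth` and `decode_auth`, and it assumes a `base64` that accepts `-d`, such as GNU coreutils.

```shell
# Encode "user:password" the way docker login stores it in the "auth" field.
# tr -d '\n' removes the trailing newline that base64 appends.
make_auth() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# Decode an "auth" value back to "user:password".
decode_auth() {
  printf '%s' "$1" | base64 -d
}

auth=$(make_auth admin Harbor12345)
echo "$auth"          # prints YWRtaW46SGFyYm9yMTIzNDU=
decode_auth "$auth"   # prints admin:Harbor12345
echo
```

Because decoding is this easy, treat both `config.json` and the Secret built from it as sensitive.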

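3.

Base64-encode the credentials file. Base64 is an encoding, not encryption: anyone who can read the Secret can decode it.

```shell
cat /root/.docker/config.json | base64 -w 0
```

`-w 0` (GNU coreutils) turns off line wrapping, so the result is a single line that can be pasted into YAML. The output is:

```
ewoJImF1dGhzIjogewoJCSIxOTIuMTY4LjIxNy4yMyI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZVMmhwWjNWaGJtZGZNekk9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOS4wMy45IChsaW51eCkiCgl9Cn0=
```

4.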

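Create a Secret manifest that holds the encoded value from step 3:

```shell
cat >harbor_secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: harbor-login
type: kubernetes.io/dockerconfigjson
data:
  # Paste the Base64 output from step 3 here
  .dockerconfigjson: ewoJImF1dGhzIjogewoJCSIxOTIuMTY4LjIxNy4yMyI6IHsKCQkJImF1dGgiOiAiWVdSdGFXNDZVMmhwWjNWaGJtZGZNekk9IgoJCX0KCX0sCgkiSHR0cEhlYWRlcnMiOiB7CgkJIlVzZXItQWdlbnQiOiAiRG9ja2VyLUNsaWVudC8xOS4wMy45IChsaW51eCkiCgl9Cn0=
EOF
```

Create the Secret (`k` is the author's shell alias for `kubectl`). No namespace is given, so it lands in `default`. A Pod can only use pull Secrets from its own namespace.

```shell
k apply -f harbor_secret.yaml
```

Inspect the Secret: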

```
[root@node3 harbor]# k describe secrets harbor-login
Name:         harbor-login
Namespace:    default
Labels:       <none>
Annotations:
Type:         kubernetes.io/dockerconfigjson

Data
====
.dockerconfigjson:  152 bytes
```
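The 152 bytes reported above is the size of the decoded data, which matches the `config.json` from step 2. It is not the length of the Base64 string. Steps 3 and 4 can be combined into one helper. The sketch below assumes a POSIX shell and the hypothetical helper names `make_pull_secret` and `decoded_size`. kubectl can also build the same Secret directly with `kubectl create secret generic harbor-login --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson`.

```shell
# Hypothetical helper: print a kubernetes.io/dockerconfigjson Secret manifest
# for a Secret name and a Docker config file (steps 3 and 4 in one go).
make_pull_secret() {
  name=$1
  config=$2
  # tr -d '\n' keeps the Base64 on one line, like `base64 -w 0`.
  data=$(base64 < "$config" | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $name
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: $data
EOF
}

# Hypothetical helper: size in bytes of a Base64 value once decoded,
# which is the number `kubectl describe secret` shows.
decoded_size() {
  printf '%s' "$1" | base64 -d | wc -c | tr -d ' '
}
```

For example, `make_pull_secret harbor-login /root/.docker/config.json > harbor_secret.yaml` writes the same kind of manifest as step 4, ready for `kubectl apply -f`.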

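5.

Reference the Secret from Kubernetes manifests

Assume an nginx image, tag 1.20, has already been pushed to the private registry.

To deploy nginx using that image from the private registry, the manifest looks like this: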

```shell
cat >nginx.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
    spec:
      containers:
      - image: 192.168.217.23/library/nginx:1.20
        name: nginx
        resources: {}
      imagePullSecrets:
      - name: harbor-login
status: {}
EOF
```
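`imagePullSecrets` is easy to forget, so a rough pre-flight check can catch a missing entry before `kubectl apply`. The sketch below assumes plain `grep` is good enough. It is not a YAML parser and only handles a simple layout like the one above. `check_pull_secret` is a hypothetical helper name.

```shell
# Hypothetical pre-flight check: fail when a manifest pulls an image from the
# given registry but does not list the given Secret under imagePullSecrets.
# Plain grep heuristics, not a YAML parser.
check_pull_secret() {
  file=$1
  registry=$2
  secret=$3
  if grep -q "image: *$registry/" "$file"; then
    if ! grep -q "imagePullSecrets:" "$file" ||
       ! grep -q -- "- name: $secret\$" "$file"; then
      echo "$file: uses $registry but does not reference $secret" >&2
      return 1
    fi
  fi
  return 0
}
```

For example, `check_pull_secret nginx.yaml 192.168.217.23 harbor-login && kubectl apply -f nginx.yaml`.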

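The only change from an ordinary Deployment is these two lines, placed in the Pod spec alongside `containers`:

```yaml
imagePullSecrets:
- name: harbor-login
```

Next, deploy a MySQL Pod that also uses an image from the private registry. It gets the same two lines: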

```
cat /etc/kubernetes/manifests/mysql.yaml
apiVersion: v1
kind: Pod
metadata:
  name: mysql
  labels:
    run: mysql
  namespace: default
spec:
  containers:
  - env:
    - name: MYSQL_ROOT_PASSWORD
      value: shiguang32
    image: 192.168.217.23/test/mysql:5.7.39
    imagePullPolicy: IfNotPresent
    resources:
      requests:
        cpu: 200m
    name: mysql
    volumeMounts:
    - mountPath: /var/lib/mysql
      name: mysql-data
      readOnly: false
    - mountPath: /etc/mysql/mysql.conf.d
      name: mysql-conf
      readOnly: false
  dnsPolicy: ClusterFirst
  restartPolicy: Always
  volumes:
  - name: mysql-data
    hostPath:
      path: /opt/mysql/data
      type: DirectoryOrCreate
  - name: mysql-conf
    hostPath:
      path: /opt/mysql/conf
      type: DirectoryOrCreate
  imagePullSecrets:
  - name: harbor-login
  hostNetwork: true
  priorityClassName: system-cluster-critical
status: {}
```
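A pull Secret only helps if its registry key matches the image. Roughly speaking, credentials are picked by the registry host at the front of the image name, so for `192.168.217.23/test/mysql:5.7.39` the Secret's `auths` needs a `192.168.217.23` entry. The sketch below approximates Docker's naming rule: the first path component counts as a registry host only if it contains a `.` or `:` or is `localhost`, and otherwise the image comes from `docker.io`. The helpers `image_registry` and `has_auth_for` are hypothetical, and `has_auth_for` uses plain `grep` rather than a JSON parser.

```shell
# Hypothetical helper: print the registry host part of an image reference,
# falling back to docker.io when the first component does not look like a host.
image_registry() {
  image=$1
  case $image in
    */*) first=${image%%/*} ;;          # text before the first "/"
    *) echo docker.io; return ;;        # e.g. "nginx:1.20"
  esac
  case $first in
    *.*|*:*|localhost) echo "$first" ;; # e.g. "192.168.217.23", "localhost:5000"
    *) echo docker.io ;;                # e.g. "library/nginx"
  esac
}

# Hypothetical helper: succeed if a Docker config file has an "auths" key
# for the given registry host.
has_auth_for() {
  grep -q "\"$2\": *{" "$1"
}
```

For example, `has_auth_for /root/.docker/config.json "$(image_registry 192.168.217.23/test/mysql:5.7.39)"` should succeed on node3 after the login in step 2.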

```
[root@node3 harbor]# k get po
NAME                     READY   STATUS    RESTARTS   AGE
mysql                    1/1     Running   7          76m
nginx-58bf645545-xtnsn   1/1     Running   1          161m
```

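Check the Pod details to confirm that they use images from the private registry.

The `Image` and `Image ID` fields below show that both images were indeed pulled from 192.168.217.23. Note that both Pods were scheduled on node3, the same host where `docker login` was run earlier. That node may therefore already have had the credentials or a cached copy of the image. The Secret is what makes the pull work on nodes that have neither.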

```
[root@node3 harbor]# k describe pod nginx-58bf645545-xtnsn
Name:         nginx-58bf645545-xtnsn
Namespace:    default
Priority:     0
Node:         node3/192.168.217.23
Start Time:   Thu, 01 Dec 2022 10:59:50 +0800
Labels:       app=nginx
              pod-template-hash=58bf645545
Annotations:  <none>
Status:       Running
IP:           10.244.0.243
IPs:
  IP:           10.244.0.243
Controlled By:  ReplicaSet/nginx-58bf645545
Containers:
  nginx:
    Container ID:   docker://07fc2a45709ff4698de6e4c168a175d1c10b9f23c1240c29fc1cb463142193c7
    Image:          192.168.217.23/library/nginx:1.20
    Image ID:       docker-pullable://192.168.217.23/library/nginx@sha256:cba27ee29d62dfd6034994162e71c399b08a84b50ab25783eabce64b1907f774
```

```
[root@node3 harbor]# k describe pod mysql
Name:                 mysql
Namespace:            default
Priority:             2000000000
Priority Class Name:  system-cluster-critical
Node:                 node3/192.168.217.23
Start Time:           Thu, 01 Dec 2022 12:24:50 +0800
Labels:               run=mysql
Annotations:
Status:               Running
IP:                   192.168.217.23
IPs:
  IP:  192.168.217.23
Containers:
  mysql:
    Container ID:   docker://f6b6e9324bc17a2b3425edee382c01f0a3095379fbe4af4209bf4c7dc05bd55d
    Image:          192.168.217.23/test/mysql:5.7.39
    Image ID:       docker-pullable://192.168.217.23/test/mysql@sha256:b39b95329c868c3875ea6eb23c9a2a27168c3531f83c96c24324213f75793636
```
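You can script the check above against the `Image ID` lines of `kubectl describe pod`. The sketch below assumes the describe layout shown here. It strips the `docker-pullable://` prefix that the Docker runtime adds, and it also accepts IDs without that prefix. `pulled_from` is a hypothetical helper name. It succeeds only if at least one `Image ID` line exists and every such line starts with the given registry host.

```shell
# Hypothetical helper: read `kubectl describe pod` output on stdin and succeed
# only if every "Image ID:" line points at the given registry host.
pulled_from() {
  awk -v reg="$1" '
    /Image ID:/ {
      seen = 1
      id = $NF
      sub(/^docker-pullable:\/\//, "", id)   # prefix added by the Docker runtime
      if (index(id, reg "/") != 1) bad = 1
    }
    END { if (seen && !bad) exit 0; exit 1 }'
}
```

For example, `kubectl describe pod mysql | pulled_from 192.168.217.23 && echo "pulled from Harbor"`.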

With that, Kubernetes is successfully pulling images from the private registry.