ChunJun (纯钧) official documentation

ChunJun supports four run modes: local, standalone, yarn session, and yarn per-job.

| Run mode / required environment | Flink environment | Hadoop environment |
|---|---|---|
| local | × | × |
| standalone | √ | × |
| yarn session | √ | √ |
| yarn per-job | √ | √ |
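
The table above can be turned into a small preflight check. This is only a sketch: the function name `check_env` is hypothetical, and it assumes the Flink and Hadoop installs are exposed through the conventional `FLINK_HOME` and `HADOOP_HOME` variables, which the text here does not state.

```shell
# Preflight check for a ChunJun run mode, following the table above.
# Assumption: Flink and Hadoop are located via FLINK_HOME and HADOOP_HOME.
# $1 = run mode: local, standalone, "yarn session" or "yarn per-job".
check_env() {
  case "$1" in
    local)
      : # local mode needs neither Flink nor Hadoop
      ;;
    standalone)
      [ -n "${FLINK_HOME:-}" ] || { echo "FLINK_HOME is not set" >&2; return 1; }
      ;;
    "yarn session"|"yarn per-job")
      [ -n "${FLINK_HOME:-}" ] || { echo "FLINK_HOME is not set" >&2; return 1; }
      [ -n "${HADOOP_HOME:-}" ] || { echo "HADOOP_HOME is not set" >&2; return 1; }
      ;;
    *)
      echo "unknown run mode: $1" >&2
      return 1
      ;;
  esac
}
```

Calling `check_env local` always succeeds, while `check_env "yarn session"` fails with a message until both variables are set.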

1. Download

The project publishes prebuilt plugin archives that can be downloaded directly: https://github.com/DTStack/chunjun/releases

The archive used here is chunjun-dist-1.12-SNAPSHOT.tar.gz.

2. Extract

First create a chunjun directory.

Then extract chunjun-dist-1.12-SNAPSHOT.tar.gz into that chunjun directory.

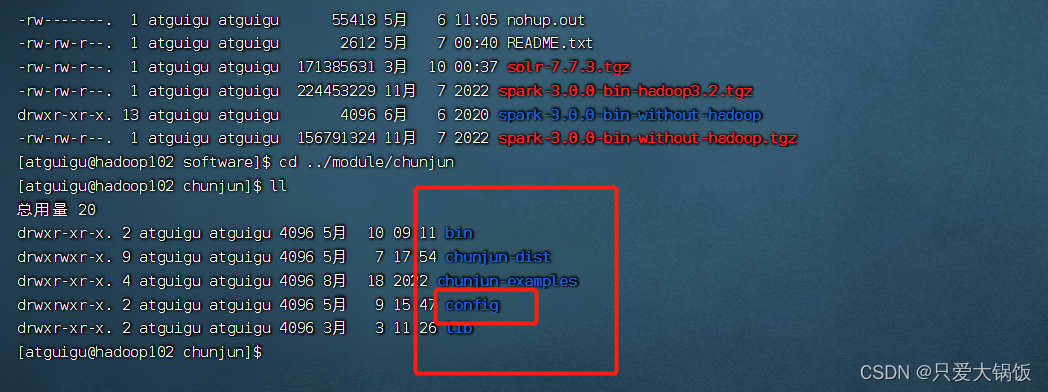

    tar -zxvf chunjun-dist-1.12-SNAPSHOT.tar.gz -C ../module/chunjun

Check the directory structure. The config directory is one I created myself; any name works. It holds the job script files.
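
The create-then-extract step can be wrapped so that a missing archive or target directory is caught early. A minimal sketch; the function name `extract_dist` is hypothetical and not part of ChunJun.

```shell
# Create the target directory if needed, then unpack the archive into it.
# $1 = path to the ChunJun dist archive, $2 = target directory.
extract_dist() {
  [ -f "$1" ] || { echo "archive not found: $1" >&2; return 1; }
  mkdir -p "$2" || return 1
  tar -zxf "$1" -C "$2"
}
```

With the paths used in this post, the call would be `extract_dist chunjun-dist-1.12-SNAPSHOT.tar.gz ../module/chunjun`, followed by `ls ../module/chunjun` to inspect the result.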

3. Example

mysql->hdfs (local)

Following the ChunJun examples, write a script that syncs MySQL data to HDFS:

    vim config/mysql_hdfs_polling.json

Script:

    {
      "job": {
        "content": [
          {
            "reader": {
              "name": "mysqlreader",
              "parameter": {
                "column": [
                  { "name": "group_id", "type": "varchar" },
                  { "name": "company_id", "type": "varchar" },
                  { "name": "group_name", "type": "varchar" }
                ],
                "username": "root",
                "password": "000000",
                "queryTimeOut": 2000,
                "connection": [
                  {
                    "jdbcUrl": [
                      "jdbc:mysql://192.168.233.130:3306/gmall?characterEncoding=UTF-8&autoReconnect=true&failOverReadOnly=false"
                    ],
                    "table": ["cus_group_info"]
                  }
                ],
                "polling": false,
                "pollingInterval": 3000
              }
            },
            "writer": {
              "name": "hdfswriter",
              "parameter": {
                "fileType": "text",
                "path": "hdfs://192.168.233.130:8020/user/hive/warehouse/stg.db/cus_group_info",
                "defaultFS": "hdfs://192.168.233.130:8020",
                "fileName": "cus_group_info",
                "fieldDelimiter": ",",
                "encoding": "utf-8",
                "writeMode": "overwrite",
                "column": [
                  { "name": "group_id", "type": "VARCHAR" },
                  { "name": "company_id", "type": "VARCHAR" },
                  { "name": "group_name", "type": "VARCHAR" }
                ]
              }
            }
          }
        ],
        "setting": {
          "speed": {
            "readerChannel": 1,
            "writerChannel": 1
          }
        }
      }
    }

Start the job:
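
Before launching, a quick syntax check on the script can catch a stray comma or bracket. The helper below is a minimal sketch and not part of ChunJun: the name `validate_job_json` is hypothetical, and it assumes `python3` is available on the PATH. It only checks JSON syntax, not whether the reader and writer parameters are valid.

```shell
# Check that a job script is syntactically valid JSON before submitting it.
# Assumption: python3 is on PATH; its standard json.tool module does the parsing.
# $1 = path to the job script.
validate_job_json() {
  [ -f "$1" ] || { echo "job file not found: $1" >&2; return 1; }
  python3 -m json.tool "$1" > /dev/null
}
```

For the script in this post the call would be `validate_job_json config/mysql_hdfs_polling.json`; a non-zero exit status means the file is missing or does not parse.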

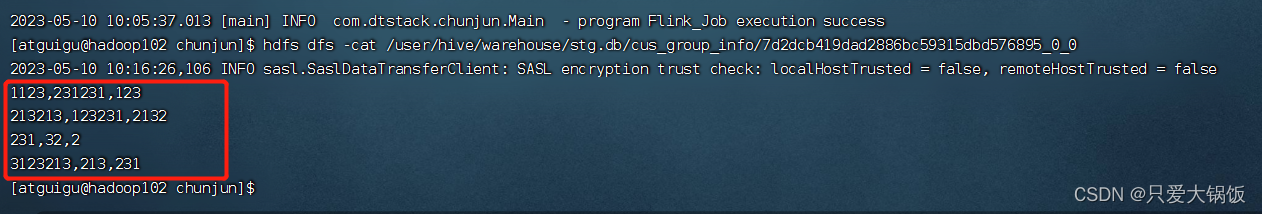

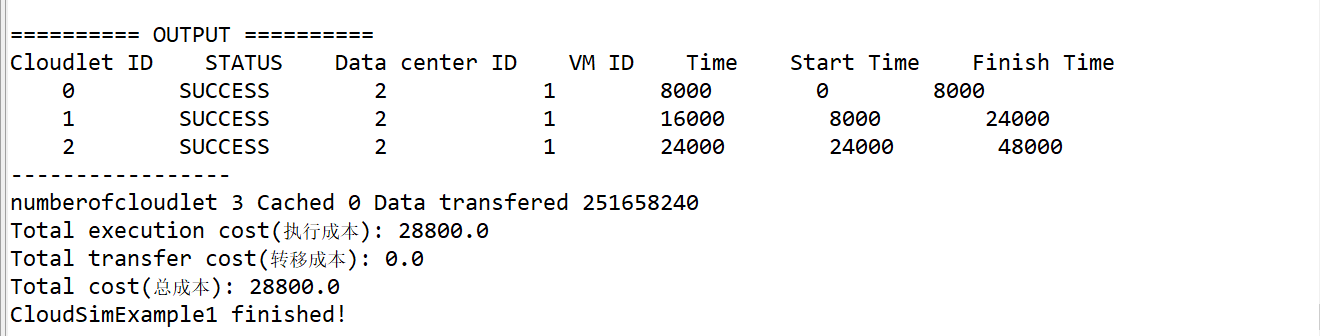

    sh bin/chunjun-local.sh -job config/mysql_hdfs_polling.json

Run log:

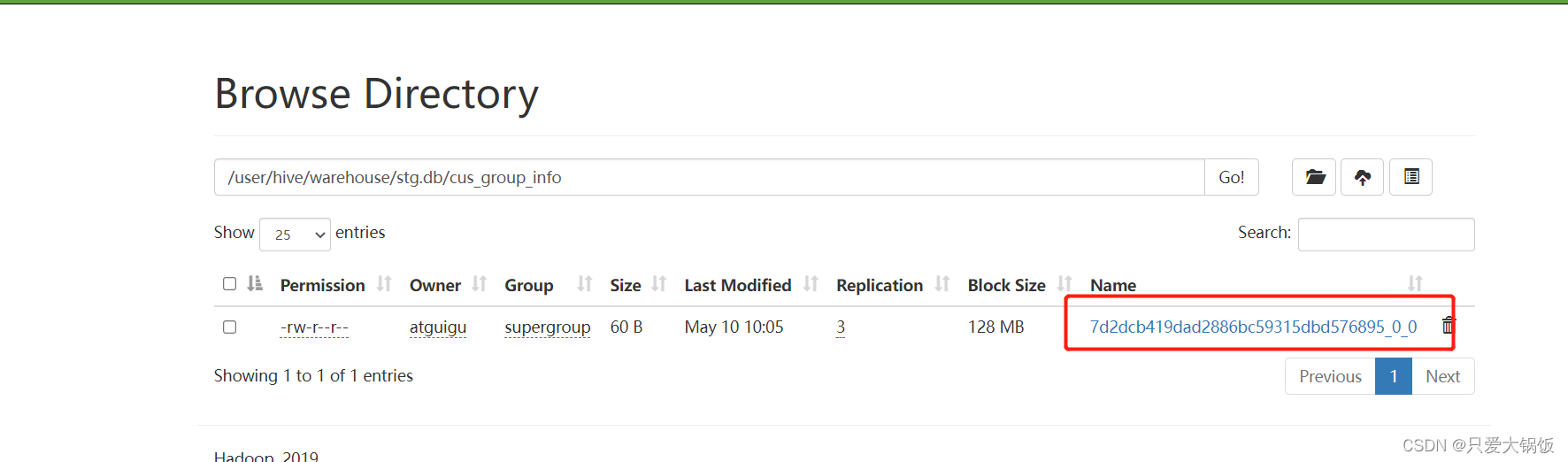

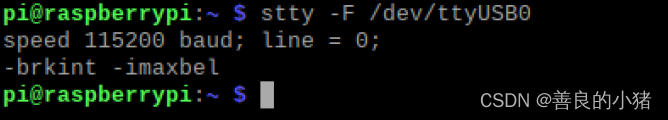
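
The launch step above can be wrapped in a helper that checks the launcher and the job file exist before starting. This is a sketch under the directory layout used in this post; the function name `run_local_job` is hypothetical.

```shell
# Launch a ChunJun job in local mode after checking that the inputs exist.
# $1 = ChunJun install directory, $2 = job file path relative to that directory.
run_local_job() {
  [ -f "$1/bin/chunjun-local.sh" ] || { echo "launcher not found under $1/bin" >&2; return 1; }
  [ -f "$1/$2" ] || { echo "job file not found: $1/$2" >&2; return 1; }
  # Run from the install directory so relative paths resolve as in the manual command.
  ( cd "$1" && sh bin/chunjun-local.sh -job "$2" )
}
```

With the paths from this post, the call would be `run_local_job ../module/chunjun config/mysql_hdfs_polling.json`.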

Files on HDFS:

The data sync succeeded!
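
The result can also be checked from the command line with the standard `hdfs dfs -ls` and `hdfs dfs -cat` commands. A minimal sketch: the function name `show_hdfs_output` and the `HDFS_BIN` override are hypothetical, added only so the helper can point at a specific `hdfs` binary.

```shell
# List the files under an HDFS directory and print the first rows they contain.
# $1 = HDFS directory. HDFS_BIN (hypothetical) overrides the hdfs CLI location.
show_hdfs_output() {
  "${HDFS_BIN:-hdfs}" dfs -ls "$1" || return 1
  # The glob is quoted so the HDFS shell, not the local shell, expands it.
  "${HDFS_BIN:-hdfs}" dfs -cat "$1/*" | head -n 10
}
```

For the writer path configured in the script, the call would be `show_hdfs_output /user/hive/warehouse/stg.db/cus_group_info`.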
