With training covered, next comes the detection part.

As usual, let's look at the overall structure first.

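VOC_CLASS_BGR assigns each class the color its boxes are drawn in, so that classes are easy to tell apart. For example, class A is drawn in red and class B in green, so the colors don't blend together in the image.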
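The 20 tuples in VOC_CLASS_BGR (defined in the full code at the end) match the standard PASCAL VOC segmentation colormap for class indices 1 to 20. That palette is defined in RGB, while here the same tuples are handed to OpenCV as BGR, so the on-screen hues differ from the VOC palette; for telling classes apart this makes no difference. Below is a minimal sketch of the usual bit-interleaving construction, which reproduces these tuples in the same order. `voc_colormap` is a hypothetical helper, not part of this project.

```python
def voc_colormap(n=21):
    """Return the first n colors of the PASCAL VOC colormap as (r, g, b) tuples.

    Index 0 is the background color; indices 1..20 are the 20 object classes.
    """
    cmap = []
    for i in range(n):
        r = g = b = 0
        c = i
        # Spread the bits of the index over the three channels, filling each
        # channel from its most significant bit (128) downwards.
        for j in range(8):
            r |= ((c >> 0) & 1) << (7 - j)
            g |= ((c >> 1) & 1) << (7 - j)
            b |= ((c >> 2) & 1) << (7 - j)
            c >>= 3
        cmap.append((r, g, b))
    return cmap


print(voc_colormap()[1:4])  # [(128, 0, 0), (0, 128, 0), (128, 128, 0)]
```

Generating the palette this way gives distinct colors for any realistic number of classes without picking them by hand.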

Drawing the boxes

```python
def visualize_boxes(image_bgr, boxes, class_names, probs, name_bgr_dict=None, line_thickness=2):
    if name_bgr_dict is None:
        name_bgr_dict = VOC_CLASS_BGR

    image_boxes = image_bgr.copy()  # draw on a copy so the input image is left untouched
    for box, class_name, prob in zip(boxes, class_names, probs):
        # Draw box on the image.
        left_top, right_bottom = box
        left, top = int(left_top[0]), int(left_top[1])
        right, bottom = int(right_bottom[0]), int(right_bottom[1])
        bgr = name_bgr_dict[class_name]
        cv2.rectangle(image_boxes, (left, top), (right, bottom), bgr, thickness=line_thickness)

        # Draw text on the image.
        text = '%s %.2f' % (class_name, prob)
        size, baseline = cv2.getTextSize(text, cv2.FONT_HERSHEY_SIMPLEX, fontScale=0.5, thickness=2)
        text_w, text_h = size

        x, y = left, top
        x1y1 = (x, y)
        x2y2 = (x + text_w + line_thickness, y + text_h + line_thickness + baseline)
        cv2.rectangle(image_boxes, x1y1, x2y2, bgr, -1)  # thickness -1 draws a filled label background
        cv2.putText(image_boxes, text, (x + line_thickness, y + 2*baseline + line_thickness),
                    cv2.FONT_HERSHEY_SIMPLEX, fontScale=0.4, color=(255, 255, 255), thickness=1, lineType=8)

    return image_boxes
```

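The function takes the already-computed boxes, class names and probabilities and iterates over them to draw each one. Here the four values (two corner points) are all in actual image pixels, no longer normalized coordinates.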

It takes the top-left and bottom-right corners, looks up this class's color in name_bgr_dict, and draws the box with cv2.rectangle.

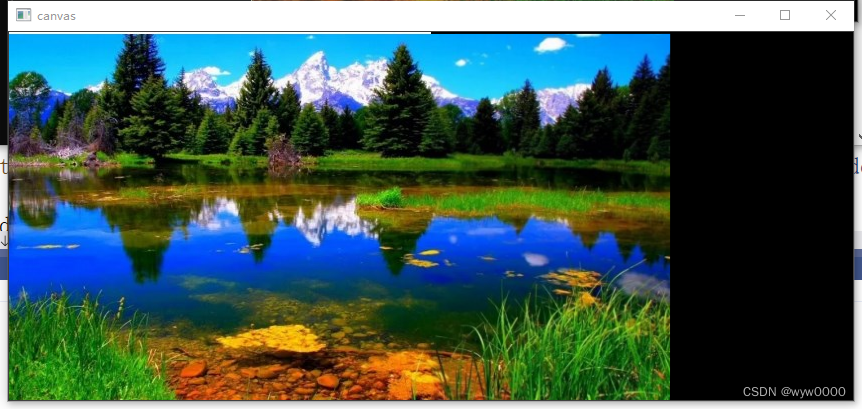

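Next comes the text: starting from the top-left corner of the box, a small filled rectangle is drawn toward the lower right, and the class name and probability are written into it, as in the image above.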
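To make the label layout concrete, here is the same arithmetic visualize_boxes uses, pulled out into a plain function so it can be checked without OpenCV. `label_geometry` is a hypothetical name; text_w, text_h and baseline would normally come from cv2.getTextSize, and the numbers in the example are made up rather than real getTextSize output.

```python
def label_geometry(left, top, text_w, text_h, baseline, line_thickness=2):
    """Mirror the label arithmetic in visualize_boxes.

    Returns the two corners of the filled background rectangle and the text
    origin (bottom-left of the text) that would be passed to cv2.putText.
    """
    x1y1 = (left, top)
    # The background grows right and down from the box's top-left corner.
    x2y2 = (left + text_w + line_thickness,
            top + text_h + line_thickness + baseline)
    # cv2.putText positions text by its bottom-left corner, so the origin is
    # shifted down into the rectangle.
    text_org = (left + line_thickness, top + 2 * baseline + line_thickness)
    return x1y1, x2y2, text_org


print(label_geometry(100, 50, text_w=80, text_h=12, baseline=5))
# ((100, 50), (182, 69), (102, 62))
```

Because the text size is measured with fontScale=0.5 and thickness=2 but the text is drawn with fontScale=0.4 and thickness=1, the filled rectangle ends up slightly larger than the text, which acts as padding.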

Walking through the YOLODetector class

```python
def __init__(self,
             model_path, class_name_list=None, mean_rgb=[122.67891434, 116.66876762, 104.00698793],
             conf_thresh=0.1, prob_thresh=0.1, nms_thresh=0.5,
             gpu_id=0):
    os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    use_gpu = torch.cuda.is_available()
    assert use_gpu, 'Current implementation does not support CPU mode. Enable CUDA.'

    # Load YOLO model.
    print("Loading YOLO model...")
    self.yolo = resnet50()  # the network exists now, but with randomly initialized weights
    sd = torch.load(model_path)
    self.yolo.load_state_dict(sd)  # load the trained weights
    self.yolo.cuda()
    print("Done loading!")

    self.yolo.eval()

    self.S = 7
    self.B = 2
    self.C = 20

    # Use the class list passed in, or fall back to the VOC class order.
    self.class_name_list = class_name_list if (class_name_list is not None) else list(VOC_CLASS_BGR.keys())
    assert len(self.class_name_list) == self.C

    self.mean = np.array(mean_rgb, dtype=np.float32)
    assert self.mean.shape == (3,)

    self.conf_thresh = conf_thresh
    self.prob_thresh = prob_thresh
    self.nms_thresh = nms_thresh

    self.to_tensor = transforms.ToTensor()

    # Warm up with a dummy input. The first forward passes on a GPU pay one-time
    # setup costs (CUDA context, kernel and memory setup); these calls do not
    # change the weights.
    dummy_input = torch.zeros((1, 3, 448, 448)).cuda()
    with torch.no_grad():
        for i in range(3):
            self.yolo(dummy_input)
```

The constructor builds the YOLO network, loads the trained weights from model_path, and moves the model to the GPU.

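It then runs a few forward passes on dummy_input to warm up the GPU. The first calls on a GPU pay one-time setup costs (CUDA context creation, kernel loading, the caching allocator reserving memory), so running them up front keeps the first real detection from being unusually slow. The point is latency, not guarding against running out of memory later.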

```python
def detect(self, image_bgr, image_size=448):
    """ Detect objects from given image.
    Args:
        image_bgr: (numpy array) input image in BGR order, sized [h, w, 3].
        image_size: (int) image width and height to which input image is resized.
    Returns:
        boxes_detected: (list of tuple) box corner list like [((x1, y1), (x2, y2))_obj1, ...]. Re-scaled for original input image size.
        class_names_detected: (list of str) list of class name for each detected box.
        probs_detected: (list of float) list of probability(=confidence x class_score) for each detected box.
    """
    h, w, _ = image_bgr.shape
    img = cv2.resize(image_bgr, dsize=(image_size, image_size), interpolation=cv2.INTER_LINEAR)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # assuming the model is trained with RGB images.
    img = (img - self.mean) / 255.0
    img = self.to_tensor(img)  # [image_size, image_size, 3] -> [3, image_size, image_size]
    img = img[None, :, :, :]  # [3, image_size, image_size] -> [1, 3, image_size, image_size], leading batch dim
    img = img.cuda()

    with torch.no_grad():
        pred_tensor = self.yolo(img)
    pred_tensor = pred_tensor.cpu()
    pred_tensor = pred_tensor.squeeze(0)  # squeeze batch dimension.

    # Get detected boxes_detected, labels, confidences, class-scores.
    boxes_normalized_all, class_labels_all, confidences_all, class_scores_all = self.decode(pred_tensor)
    if boxes_normalized_all.size(0) == 0:
        return [], [], []  # if no box found, return empty lists.

    # Apply non maximum suppression for boxes of each class.
    boxes_normalized, class_labels, probs = [], [], []

    for class_label in range(len(self.class_name_list)):
        mask = (class_labels_all == class_label)
        if torch.sum(mask) == 0:
            continue  # if no box found, skip that class.

        # Collect all boxes of this class and run NMS on them.
        boxes_normalized_masked = boxes_normalized_all[mask]
        class_labels_masked = class_labels_all[mask]
        confidences_masked = confidences_all[mask]
        class_scores_masked = class_scores_all[mask]

        ids = self.nms(boxes_normalized_masked, confidences_masked)  # non-maximum suppression

        boxes_normalized.append(boxes_normalized_masked[ids])
        class_labels.append(class_labels_masked[ids])
        probs.append(confidences_masked[ids] * class_scores_masked[ids])

    boxes_normalized = torch.cat(boxes_normalized, 0)
    class_labels = torch.cat(class_labels, 0)
    probs = torch.cat(probs, 0)

    # Postprocess for box, labels, probs.
    boxes_detected, class_names_detected, probs_detected = [], [], []
    for b in range(boxes_normalized.size(0)):
        box_normalized = boxes_normalized[b]
        class_label = class_labels[b]
        prob = probs[b]

        # Image pixel coordinates, with the origin (0, 0) at the top-left corner.
        x1, x2 = w * box_normalized[0], w * box_normalized[2]  # unnormalize x with image width.
        y1, y2 = h * box_normalized[1], h * box_normalized[3]  # unnormalize y with image height.
        boxes_detected.append(((x1, y1), (x2, y2)))

        class_label = int(class_label)  # convert from LongTensor to int.
        class_name = self.class_name_list[class_label]
        class_names_detected.append(class_name)

        prob = float(prob)  # convert from Tensor to float.
        probs_detected.append(prob)

    return boxes_detected, class_names_detected, probs_detected
```

detect, the main detection function:

OpenCV loads images in BGR channel order rather than RGB, for historical reasons, so after resizing the image is converted to RGB with cv2.cvtColor.

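The uint8 pixel values first have the per-channel mean mean_rgb subtracted (described here as the VOC2007 mean; the same values are also the widely used ImageNet channel means) and are then divided by 255. Subtracting the mean centers each channel near 0, while dividing by 255 only rescales the values to roughly -0.48 to 0.59. It does not make the variance 1, so the result is not standardized to a unit normal distribution. What matters is that detection uses exactly the same normalization as training.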

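The network expects input of shape (batch_size, 3, 448, 448). A single image has only three dimensions, so indexing with None adds a leading batch dimension before the image is fed to the network. No gradients are needed at detection time, so the forward pass runs under torch.no_grad(); otherwise autograd would waste memory and compute. Finally, the batch dimension is squeezed out of the output tensor.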
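A numpy-only sketch of the preprocessing steps above, assuming the image has already been resized to the network input size. torch is left out so it runs anywhere; the `np.transpose` stands in for what ToTensor does with a float array (HWC to CHW, no rescaling). `preprocess` and `MEAN_RGB` are illustrative names, not part of the project.

```python
import numpy as np

# Same default values as mean_rgb in YOLODetector.__init__.
MEAN_RGB = np.array([122.67891434, 116.66876762, 104.00698793], dtype=np.float32)


def preprocess(image_bgr):
    """Turn a resized HxWx3 uint8 BGR image into a 1x3xHxW float32 batch.

    Same order of operations as detect(): BGR->RGB, subtract mean, divide by
    255, HWC->CHW, then add a leading batch dimension.
    """
    img = image_bgr[:, :, ::-1]                       # BGR -> RGB (like cv2.COLOR_BGR2RGB)
    img = (img - MEAN_RGB) / 255.0                    # uint8 - float32 -> float32
    img = np.transpose(img, (2, 0, 1))                # [H, W, 3] -> [3, H, W]
    return np.ascontiguousarray(img[None, :, :, :])   # [3, H, W] -> [1, 3, H, W]


noise = np.random.default_rng(0).integers(0, 256, size=(448, 448, 3)).astype(np.uint8)
x = preprocess(noise)
print(x.shape, x.dtype)  # (1, 3, 448, 448) float32
print(x.std())           # about 0.29, not 1
```

On a uniformly random image the standard deviation comes out at roughly 0.29 rather than 1, which shows that this step is mean-centering plus rescaling, not standardization.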

decode parses the network's output tensor pred_tensor. decode itself is explained further below; first, let's look at what it returns.

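For the example image, decode yields 4 candidate boxes. For all of them, the class with the highest conditional probability (the probability of the class given that the cell contains an object) is class 14, which the class list txt file shows is person.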

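Next, the code loops over the 20 classes. In each iteration a boolean mask marks which predicted boxes belong to the current class (True), and only those boxes are processed; if no box belongs to the class, it is skipped.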

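All boxes of the same class then go through NMS: among these boxes, any pair whose IoU is too large is treated as overlapping, and the lower-scoring box of the pair is removed. The cutoff is the IoU threshold nms_thresh.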

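NMS returns the indices of the surviving boxes, i.e. boxes that no longer overlap too much. They are appended to running lists, and the lists are then concatenated row-wise into tensors so the results can be passed on to the drawing code.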
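The per-class loop can be sketched in numpy as follows. This is a sketch under assumptions: `per_class_nms` is a hypothetical helper, and the NMS routine is passed in as `nms_fn` (any function that takes boxes and scores and returns the indices to keep) so that only the masking and grouping logic is shown. As in detect, NMS ranks boxes by objectness confidence, and the final probability is confidence × class score.

```python
import numpy as np


def per_class_nms(boxes, labels, confidences, class_scores, num_classes, nms_fn):
    """Run nms_fn separately on the boxes of each class, as detect() does.

    boxes: [n, 4] normalized x1y1x2y2; labels: [n] int class ids;
    confidences, class_scores: [n] floats.
    nms_fn(boxes, scores) returns the indices (into its inputs) to keep.
    """
    kept_boxes, kept_labels, kept_probs = [], [], []
    for c in range(num_classes):
        mask = labels == c
        if not mask.any():
            continue  # no box predicted for this class
        ids = nms_fn(boxes[mask], confidences[mask])  # ranked by objectness confidence
        kept_boxes.append(boxes[mask][ids])
        kept_labels.append(labels[mask][ids])
        # final score = objectness confidence x class score
        kept_probs.append(confidences[mask][ids] * class_scores[mask][ids])
    if not kept_boxes:
        return np.zeros((0, 4)), np.zeros((0,), dtype=int), np.zeros((0,))
    return (np.concatenate(kept_boxes), np.concatenate(kept_labels),
            np.concatenate(kept_probs))


# Toy stand-in for NMS: keep only the single highest-scoring box.
keep_top1 = lambda b, s: np.array([int(np.argmax(s))])
boxes = np.array([[0.1, 0.1, 0.4, 0.4], [0.12, 0.1, 0.4, 0.42],
                  [0.5, 0.5, 0.9, 0.9], [0.6, 0.1, 0.8, 0.3]])
labels = np.array([14, 14, 7, 14])
out = per_class_nms(boxes, labels, np.array([0.9, 0.8, 0.5, 0.3]),
                    np.array([0.5, 1.0, 1.0, 1.0]), 20, keep_top1)
print(out[1], out[2])  # [ 7 14] [0.5  0.45]
```

Because NMS runs inside each class separately, a person box can never suppress an overlapping dog box, for example; only same-class duplicates are removed.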

The final loop converts the data into the output format.

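The drawing function needs the two corner points in actual image pixels rather than normalized values, so the normalized x coordinates are multiplied by the original image width w and the y coordinates by the height h.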
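A plain-Python sketch of that final post-processing loop: un-normalize with the original image size and map label indices to names. `to_pixel_detections` and `VOC_CLASSES` are illustrative names; the list copies the key order of VOC_CLASS_BGR, which is the default class_name_list (dicts keep insertion order in Python 3.7+), so index 14 is 'person'.

```python
# Same order as the keys of VOC_CLASS_BGR.
VOC_CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
               'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
               'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def to_pixel_detections(boxes_normalized, class_labels, probs, w, h, class_names=VOC_CLASSES):
    """Convert normalized [x1, y1, x2, y2] boxes to ((x1, y1), (x2, y2)) in pixels."""
    boxes_px, names, scores = [], [], []
    for (x1, y1, x2, y2), label, prob in zip(boxes_normalized, class_labels, probs):
        # x scales with the image width, y with the image height.
        boxes_px.append(((w * x1, h * y1), (w * x2, h * y2)))
        names.append(class_names[int(label)])
        scores.append(float(prob))
    return boxes_px, names, scores


print(to_pixel_detections([[0.25, 0.5, 0.75, 1.0]], [14], [0.5], w=400, h=300))
# ([((100.0, 150.0), (300.0, 300.0))], ['person'], [0.5])
```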

The decode part

```python
def decode(self, pred_tensor):
    """ Decode tensor into box coordinates, class labels, confidences and class scores.
    Args:
        pred_tensor: (tensor) tensor to decode sized [S, S, 5 x B + C], 5=(x, y, w, h, conf)
    Returns:
        boxes: (tensor) [[x1, y1, x2, y2]_obj1, ...]. Normalized from 0.0 to 1.0 w.r.t. image width/height, sized [n_boxes, 4].
        labels: (tensor) class labels for each detected box, sized [n_boxes,].
        confidences: (tensor) objectness confidences for each detected box, sized [n_boxes,].
        class_scores: (tensor) scores for most likely class for each detected box, sized [n_boxes,].
    """
    S, B, C = self.S, self.B, self.C
    boxes, labels, confidences, class_scores = [], [], [], []

    cell_size = 1.0 / float(S)

    # Confidence of every box in every grid cell.
    conf = pred_tensor[:, :, 4].unsqueeze(2)  # [S, S, 1]
    for b in range(1, B):
        conf = torch.cat((conf, pred_tensor[:, :, 5*b + 4].unsqueeze(2)), 2)  # [S, S, B]
    conf_mask = conf > self.conf_thresh  # [S, S, B]

    # TBM, further optimization may be possible by replacing the following for-loops with tensor operations.
    for i in range(S):  # for x-dimension.
        for j in range(S):  # for y-dimension.
            class_score, class_label = torch.max(pred_tensor[j, i, 5*B:], 0)  # best class score in cell [j, i]

            for b in range(B):  # loop over the B predicted boxes of this cell
                conf = pred_tensor[j, i, 5*b + 4]
                prob = conf * class_score
                if float(prob) < self.prob_thresh:  # skip boxes below the threshold
                    continue

                # Compute box corner (x1, y1, x2, y2) from tensor.
                box = pred_tensor[j, i, 5*b : 5*b + 4]
                x0y0_normalized = torch.FloatTensor([i, j]) * cell_size  # top-left corner of this cell, normalized to the image
                xy_normalized = box[:2] * cell_size + x0y0_normalized  # box center: cell-relative offset -> normalized to the image
                wh_normalized = box[2:]  # width and height, already normalized to the image
                box_xyxy = torch.FloatTensor(4)  # [4,], uninitialized; both halves are filled below
                box_xyxy[:2] = xy_normalized - 0.5 * wh_normalized  # normalized top-left corner (x1, y1).
                box_xyxy[2:] = xy_normalized + 0.5 * wh_normalized  # normalized bottom-right corner (x2, y2).

                # Append result to the lists.
                boxes.append(box_xyxy)
                labels.append(class_label)
                confidences.append(conf)
                class_scores.append(class_score)

    if len(boxes) > 0:
        boxes = torch.stack(boxes, 0)  # [n_boxes, 4], list -> tensor
        labels = torch.stack(labels, 0)  # [n_boxes, ]
        confidences = torch.stack(confidences, 0)  # [n_boxes, ]
        class_scores = torch.stack(class_scores, 0)  # [n_boxes, ]
    else:
        # If no box found, return empty tensors.
        boxes = torch.FloatTensor(0, 4)
        labels = torch.LongTensor(0)
        confidences = torch.FloatTensor(0)
        class_scores = torch.FloatTensor(0)

    return boxes, labels, confidences, class_scores
```

From the predicted tensor, decode returns four groups of data: the boxes, the labels (the index of the class each box belongs to), the confidences, and the class conditional probabilities (class scores).

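pred_tensor[:, :, 4] takes the confidence of the first box in every cell; unsqueeze adds a trailing dimension so that the second box's confidence (pred_tensor[:, :, 9]) can be concatenated onto it, giving [S, S, 2].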

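conf_mask is computed but never used, so it is ignored here. As a consequence, the conf_thresh argument currently has no effect; only prob_thresh filters boxes.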

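The loops go over every grid cell (S × S = 7 × 7 cells, not pixels) and, for each cell, take the index and value of the highest class probability.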

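The coordinates are computed as follows. The four values in box are (cx, cy, w, h): cx and cy are the offset of the box center from the top-left corner of its grid cell, measured in units of the cell size, while w and h are already normalized to the whole image. These are converted into top-left and bottom-right coordinates normalized to the whole image.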
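Written out for the cell in column $i$ and row $j$, with $t_x, t_y, w, h$ denoting the four values in box (symbol names introduced here for readability), the conversion in decode is:

$$
x_c = \frac{i + t_x}{S},\qquad y_c = \frac{j + t_y}{S},\qquad
(x_1, y_1) = \left(x_c - \tfrac{w}{2},\ y_c - \tfrac{h}{2}\right),\qquad
(x_2, y_2) = \left(x_c + \tfrac{w}{2},\ y_c + \tfrac{h}{2}\right)
$$

For example, with $S = 7$, $i = 3$, $j = 2$ and $(t_x, t_y, w, h) = (0.5, 0.5, 0.2, 0.4)$, the center is $(3.5/7,\ 2.5/7) \approx (0.5,\ 0.357)$, so the corners are approximately $(0.4,\ 0.157)$ and $(0.6,\ 0.557)$.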

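The innermost loop goes over the B = 2 boxes of the cell and skips every box whose score is below prob_thresh. The score is conf * class_score (confidence × class conditional probability), i.e. the probability that the cell contains an object times the probability of this class given that there is an object.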

If a box passes the threshold, its four pieces of data are appended to the four result lists.

Finally, each of the four lists is stacked into a tensor and returned.
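The TBM comment in decode suggests replacing the loops with tensor operations. Here is a numpy sketch of what that could look like, assuming the same layout as decode: pred is indexed [row j, column i], each box is stored as (x, y, w, h, conf), and the class scores follow the 5·B box entries. `decode_vectorized` is a hypothetical name, not the project's code; like the loop version, it effectively filters only on prob_thresh.

```python
import numpy as np


def decode_vectorized(pred, prob_thresh, S=7, B=2):
    """Vectorized version of the decode() loops, on a numpy array.

    pred: [S, S, 5*B + C]. Returns boxes [n, 4] (normalized x1, y1, x2, y2),
    labels [n], confidences [n] and class_scores [n].
    """
    class_scores = pred[:, :, 5 * B:].max(axis=2)     # [S, S] best class score per cell
    class_labels = pred[:, :, 5 * B:].argmax(axis=2)  # [S, S] index of that class
    boxes = pred[:, :, :5 * B].reshape(S, S, B, 5)    # [S, S, B, 5]
    conf = boxes[..., 4]                              # [S, S, B], same as stacking pred[:, :, 5*b + 4]

    # decode() skips boxes with conf * class_score < prob_thresh.
    keep = conf * class_scores[:, :, None] >= prob_thresh

    # Top-left corner of every cell, normalized: column i -> x, row j -> y.
    jj, ii = np.meshgrid(np.arange(S), np.arange(S), indexing='ij')
    cell_xy = np.stack([ii, jj], axis=-1)[:, :, None, :] / S  # [S, S, 1, 2]
    xy = boxes[..., :2] / S + cell_xy                 # box centers, normalized to the image
    wh = boxes[..., 2:4]
    xyxy = np.concatenate([xy - 0.5 * wh, xy + 0.5 * wh], axis=-1)  # [S, S, B, 4]

    labels = np.broadcast_to(class_labels[:, :, None], conf.shape)
    scores = np.broadcast_to(class_scores[:, :, None], conf.shape)
    return xyxy[keep], labels[keep], conf[keep], scores[keep]


pred = np.zeros((7, 7, 30))
pred[2, 3, 0:5] = [0.5, 0.5, 0.2, 0.4, 0.9]   # box 0 of the cell in row j=2, column i=3
pred[2, 3, 5:10] = [0.5, 0.5, 0.1, 0.1, 0.1]  # box 1: 0.1 * 0.8 = 0.08, below the threshold
pred[2, 3, 10 + 14] = 0.8                     # class 14 (person)
boxes, labels, confs, scores = decode_vectorized(pred, prob_thresh=0.1)
print(boxes, labels)  # one box, roughly [0.4, 0.157, 0.6, 0.557], label 14
```

The boxes come out in row-major (j, i, b) order instead of the loop's (i, j, b) order. This should not matter here, because NMS re-sorts them by score anyway.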

The nms part

```python
def nms(self, boxes, scores):
    """ Apply non maximum suppression.
    Args:
        boxes: (tensor) boxes as [x1, y1, x2, y2], sized [n, 4].
        scores: (tensor) score of each box, sized [n,].
    Returns:
        (LongTensor) indices of the boxes to keep, highest score first.
    """
    threshold = self.nms_thresh

    x1 = boxes[:, 0]  # [n,]
    y1 = boxes[:, 1]  # [n,]
    x2 = boxes[:, 2]  # [n,]
    y2 = boxes[:, 3]  # [n,]
    areas = (x2 - x1) * (y2 - y1)  # [n,]

    _, ids_sorted = scores.sort(0, descending=True)  # [n,]
    ids = []
    while ids_sorted.numel() > 0:
        # Assume `ids_sorted` size is [m,] in the beginning of this iter.
        i = int(ids_sorted[0])  # highest-scoring remaining box, as a Python int
        ids.append(i)

        if ids_sorted.numel() == 1:
            break  # If only one box is left (i.e., no box to suppress), break.

        # Intersection of box i with every remaining box (sketching it on paper helps).
        inter_x1 = x1[ids_sorted[1:]].clamp(min=x1[i])  # [m-1, ]
        inter_y1 = y1[ids_sorted[1:]].clamp(min=y1[i])  # [m-1, ]
        inter_x2 = x2[ids_sorted[1:]].clamp(max=x2[i])  # [m-1, ]
        inter_y2 = y2[ids_sorted[1:]].clamp(max=y2[i])  # [m-1, ]
        inter_w = (inter_x2 - inter_x1).clamp(min=0)  # [m-1, ]
        inter_h = (inter_y2 - inter_y1).clamp(min=0)  # [m-1, ]

        inters = inter_w * inter_h  # intersections b/w/ box `i` and other boxes, sized [m-1, ].
        unions = areas[i] + areas[ids_sorted[1:]] - inters  # unions b/w/ box `i` and other boxes, sized [m-1, ].
        ious = inters / unions  # [m-1, ]

        # Remove boxes whose IoU is higher than the threshold.
        # nonzero() returns shape [k, 1]; flatten() keeps it 1-D even when k == 1.
        ids_keep = (ious <= threshold).nonzero().flatten()  # [k, ]
        if ids_keep.numel() == 0:
            break  # If no box left, break.
        ids_sorted = ids_sorted[ids_keep + 1]  # `+1` is needed because `ids_sorted[0] = i`.

    return torch.LongTensor(ids)
```

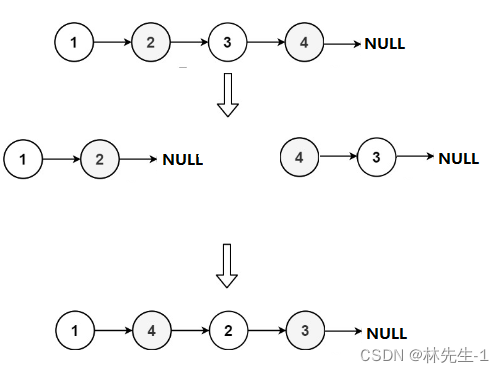

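NMS is the most involved part. If it is unclear, first read section 13.4, "Anchor Boxes" (锚框), of Li Mu's Dive into Deep Learning (动手学深度学习, 2.0.0 documentation); the implementation there differs slightly.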

First, take the top-left and bottom-right coordinates (normalized to the image) out of boxes and compute the area of each box.

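Sort the boxes by score from high to low. Note that detect passes the objectness confidences as scores, not the final conf × class_score. inter_x1, inter_y1, inter_x2 and inter_y2 are the top-left and bottom-right corners of the intersection between the reference box and each box it is compared with.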

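Following the score order, the first box (index 0) is the reference in each round:

x1: each remaining box's x1 is compared with the x1 of box 0; if it is smaller, it is replaced with box 0's x1 (so the larger of the two is kept).

y1: each remaining box's y1 is compared with the y1 of box 0; if it is smaller, it is replaced with box 0's y1.

x2: each remaining box's x2 is compared with the x2 of box 0; if it is larger, it is replaced with box 0's x2 (so the smaller of the two is kept).

y2: each remaining box's y2 is compared with the y2 of box 0; if it is larger, it is replaced with box 0's y2.

Sketching the boxes on paper makes this much easier to follow.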

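Next, compute the width and height of the intersection. If two boxes do not overlap, at least one of w and h is negative and gets clamped to 0, so the IoU is 0: such a box cannot be a duplicate and is kept. Every other box is compared with the threshold: boxes with IoU ≤ nms_thresh survive and become the candidates for the next round, and boxes above it are suppressed. The reference box itself is appended to ids, since it has the highest score among the remaining boxes and nothing left can suppress it.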
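The clamping steps above amount to taking the larger of the top-left coordinates and the smaller of the bottom-right coordinates. A scalar sketch with two worked cases (`iou_xyxy` is an illustrative name):

```python
def iou_xyxy(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2): top-left and bottom-right corners."""
    # Intersection corners: larger top-left, smaller bottom-right
    # (what the clamp(min=...) / clamp(max=...) calls compute).
    inter_x1 = max(a[0], b[0])
    inter_y1 = max(a[1], b[1])
    inter_x2 = min(a[2], b[2])
    inter_y2 = min(a[3], b[3])
    # A negative width or height means the boxes do not overlap; clamp to 0.
    inter_w = max(inter_x2 - inter_x1, 0.0)
    inter_h = max(inter_y2 - inter_y1, 0.0)
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


print(iou_xyxy((0, 0, 2, 2), (1, 1, 3, 3)))  # 1 / (4 + 4 - 1) = 0.142857...
print(iou_xyxy((0, 0, 1, 1), (2, 2, 3, 3)))  # 0.0: inter_w = 1 - 2 = -1, clamped to 0
```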

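Repeating this against the remaining boxes leaves only boxes that either don't overlap at all or whose IoU is too small to count as overlapping, and their indices are returned to detect for further processing.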
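The same greedy procedure can be sketched in numpy for readers who want to try it without torch. `greedy_nms` is a hypothetical name; unlike the torch version, it always keeps the remaining indices as a 1-D array, so no special case is needed for a single leftover box.

```python
import numpy as np


def greedy_nms(boxes, scores, threshold):
    """Greedy NMS over [n, 4] (x1, y1, x2, y2) boxes; returns kept indices, best first."""
    x1, y1, x2, y2 = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(-scores)  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]             # reference box for this round
        keep.append(int(i))
        rest = order[1:]
        # Intersection with every remaining box; negative sizes clamp to 0.
        inter_w = np.clip(np.minimum(x2[rest], x2[i]) - np.maximum(x1[rest], x1[i]), 0, None)
        inter_h = np.clip(np.minimum(y2[rest], y2[i]) - np.maximum(y1[rest], y1[i]), 0, None)
        inters = inter_w * inter_h
        ious = inters / (areas[i] + areas[rest] - inters)
        order = rest[ious <= threshold]  # drop boxes overlapping the reference too much
    return keep


boxes = np.array([[0, 0, 2, 2], [0.1, 0.1, 2, 2], [3, 3, 4, 4]], dtype=float)
scores = np.array([0.6, 0.9, 0.5])
print(greedy_nms(boxes, scores, threshold=0.5))  # [1, 2]
```

In the example, box 1 (score 0.9) is kept first; box 0 overlaps it with IoU ≈ 0.90 > 0.5 and is suppressed; box 2 does not overlap at all and survives.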

Full code

```python
import torch
import torchvision.transforms as transforms
import os
import cv2
import numpy as np

from resnet_yolo import resnet50

# VOC class names and BGR color.
VOC_CLASS_BGR = {
    'aeroplane': (128, 0, 0),
    'bicycle': (0, 128, 0),
    'bird': (128, 128, 0),
    'boat': (0, 0, 128),
    'bottle': (128, 0, 128),
    'bus': (0, 128, 128),
    'car': (128, 128, 128),
    'cat': (64, 0, 0),
    'chair': (192, 0, 0),
    'cow': (64, 128, 0),
    'diningtable': (192, 128, 0),
    'dog': (64, 0, 128),
    'horse': (192, 0, 128),
    'motorbike': (64, 128, 128),
    'person': (192, 128, 128),
    'pottedplant': (0, 64, 0),
    'sheep': (128, 64, 0),
    'sofa': (0, 192, 0),
    'train': (128, 192, 0),
    'tvmonitor': (0, 64, 128)
}


def visualize_boxes(image_bgr, boxes, class_names, probs, name_bgr_dict=None, line_thickness=2):
    if name_bgr_dict is None:
        name_bgr_dict = VOC_CLASS_BGR

    image_boxes = image_bgr.copy()  # draw on a copy so the input image is left untouched
    for box, class_name, prob in zip(boxes, class_names, probs):
        # Draw box on the image.
        left_top, right_bottom = box
        left, top = int(left_top[0]), int(left_top[1])
        right, bottom = int(right_bottom[0]), int(right_bottom[1])
        bgr = name_bgr_dict[class_name]
        cv2.rectangle(image_boxes, (left, top), (right, bottom), bgr, thickness=line_thickness)

        # Draw text on the image.
        text = '%s %.2f' % (class_name, prob)
        size, baseline = cv2.getTextSize(text, cv2.FONT_HERSHEY_SIMPLEX, fontScale=0.5, thickness=2)
        text_w, text_h = size

        x, y = left, top
        x1y1 = (x, y)
        x2y2 = (x + text_w + line_thickness, y + text_h + line_thickness + baseline)
        cv2.rectangle(image_boxes, x1y1, x2y2, bgr, -1)  # thickness -1 draws a filled label background
        cv2.putText(image_boxes, text, (x + line_thickness, y + 2*baseline + line_thickness),
                    cv2.FONT_HERSHEY_SIMPLEX, fontScale=0.4, color=(255, 255, 255), thickness=1, lineType=8)

    return image_boxes


class YOLODetector:
    def __init__(self,
                 model_path, class_name_list=None, mean_rgb=[122.67891434, 116.66876762, 104.00698793],
                 conf_thresh=0.1, prob_thresh=0.1, nms_thresh=0.5,
                 gpu_id=0):
        os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
        use_gpu = torch.cuda.is_available()
        assert use_gpu, 'Current implementation does not support CPU mode. Enable CUDA.'

        # Load YOLO model.
        print("Loading YOLO model...")
        self.yolo = resnet50()  # the network exists now, but with randomly initialized weights
        sd = torch.load(model_path)
        self.yolo.load_state_dict(sd)  # load the trained weights
        self.yolo.cuda()
        print("Done loading!")

        self.yolo.eval()

        self.S = 7
        self.B = 2
        self.C = 20

        # Use the class list passed in, or fall back to the VOC class order.
        self.class_name_list = class_name_list if (class_name_list is not None) else list(VOC_CLASS_BGR.keys())
        assert len(self.class_name_list) == self.C

        self.mean = np.array(mean_rgb, dtype=np.float32)
        assert self.mean.shape == (3,)

        self.conf_thresh = conf_thresh
        self.prob_thresh = prob_thresh
        self.nms_thresh = nms_thresh

        self.to_tensor = transforms.ToTensor()

        # Warm up with a dummy input. The first forward passes on a GPU pay one-time
        # setup costs (CUDA context, kernel and memory setup); these calls do not
        # change the weights.
        dummy_input = torch.zeros((1, 3, 448, 448)).cuda()
        with torch.no_grad():
            for i in range(3):
                self.yolo(dummy_input)

    def detect(self, image_bgr, image_size=448):
        """ Detect objects from given image.
        Args:
            image_bgr: (numpy array) input image in BGR order, sized [h, w, 3].
            image_size: (int) image width and height to which input image is resized.
        Returns:
            boxes_detected: (list of tuple) box corner list like [((x1, y1), (x2, y2))_obj1, ...]. Re-scaled for original input image size.
            class_names_detected: (list of str) list of class name for each detected box.
            probs_detected: (list of float) list of probability(=confidence x class_score) for each detected box.
        """
        h, w, _ = image_bgr.shape
        img = cv2.resize(image_bgr, dsize=(image_size, image_size), interpolation=cv2.INTER_LINEAR)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # assuming the model is trained with RGB images.
        img = (img - self.mean) / 255.0
        img = self.to_tensor(img)  # [image_size, image_size, 3] -> [3, image_size, image_size]
        img = img[None, :, :, :]  # [3, image_size, image_size] -> [1, 3, image_size, image_size], leading batch dim
        img = img.cuda()

        with torch.no_grad():
            pred_tensor = self.yolo(img)
        pred_tensor = pred_tensor.cpu()
        pred_tensor = pred_tensor.squeeze(0)  # squeeze batch dimension.

        # Get detected boxes_detected, labels, confidences, class-scores.
        boxes_normalized_all, class_labels_all, confidences_all, class_scores_all = self.decode(pred_tensor)
        if boxes_normalized_all.size(0) == 0:
            return [], [], []  # if no box found, return empty lists.

        # Apply non maximum suppression for boxes of each class.
        boxes_normalized, class_labels, probs = [], [], []

        for class_label in range(len(self.class_name_list)):
            mask = (class_labels_all == class_label)
            if torch.sum(mask) == 0:
                continue  # if no box found, skip that class.

            # Collect all boxes of this class and run NMS on them.
            boxes_normalized_masked = boxes_normalized_all[mask]
            class_labels_masked = class_labels_all[mask]
            confidences_masked = confidences_all[mask]
            class_scores_masked = class_scores_all[mask]

            ids = self.nms(boxes_normalized_masked, confidences_masked)  # non-maximum suppression

            boxes_normalized.append(boxes_normalized_masked[ids])
            class_labels.append(class_labels_masked[ids])
            probs.append(confidences_masked[ids] * class_scores_masked[ids])

        boxes_normalized = torch.cat(boxes_normalized, 0)
        class_labels = torch.cat(class_labels, 0)
        probs = torch.cat(probs, 0)

        # Postprocess for box, labels, probs.
        boxes_detected, class_names_detected, probs_detected = [], [], []
        for b in range(boxes_normalized.size(0)):
            box_normalized = boxes_normalized[b]
            class_label = class_labels[b]
            prob = probs[b]

            # Image pixel coordinates, with the origin (0, 0) at the top-left corner.
            x1, x2 = w * box_normalized[0], w * box_normalized[2]  # unnormalize x with image width.
            y1, y2 = h * box_normalized[1], h * box_normalized[3]  # unnormalize y with image height.
            boxes_detected.append(((x1, y1), (x2, y2)))

            class_label = int(class_label)  # convert from LongTensor to int.
            class_name = self.class_name_list[class_label]
            class_names_detected.append(class_name)

            prob = float(prob)  # convert from Tensor to float.
            probs_detected.append(prob)

        return boxes_detected, class_names_detected, probs_detected

    def decode(self, pred_tensor):
        """ Decode tensor into box coordinates, class labels, confidences and class scores.
        Args:
            pred_tensor: (tensor) tensor to decode sized [S, S, 5 x B + C], 5=(x, y, w, h, conf)
        Returns:
            boxes: (tensor) [[x1, y1, x2, y2]_obj1, ...]. Normalized from 0.0 to 1.0 w.r.t. image width/height, sized [n_boxes, 4].
            labels: (tensor) class labels for each detected box, sized [n_boxes,].
            confidences: (tensor) objectness confidences for each detected box, sized [n_boxes,].
            class_scores: (tensor) scores for most likely class for each detected box, sized [n_boxes,].
        """
        S, B, C = self.S, self.B, self.C
        boxes, labels, confidences, class_scores = [], [], [], []

        cell_size = 1.0 / float(S)

        # Confidence of every box in every grid cell.
        conf = pred_tensor[:, :, 4].unsqueeze(2)  # [S, S, 1]
        for b in range(1, B):
            conf = torch.cat((conf, pred_tensor[:, :, 5*b + 4].unsqueeze(2)), 2)  # [S, S, B]
        conf_mask = conf > self.conf_thresh  # [S, S, B]

        # TBM, further optimization may be possible by replacing the following for-loops with tensor operations.
        for i in range(S):  # for x-dimension.
            for j in range(S):  # for y-dimension.
                class_score, class_label = torch.max(pred_tensor[j, i, 5*B:], 0)  # best class score in cell [j, i]

                for b in range(B):  # loop over the B predicted boxes of this cell
                    conf = pred_tensor[j, i, 5*b + 4]
                    prob = conf * class_score
                    if float(prob) < self.prob_thresh:  # skip boxes below the threshold
                        continue

                    # Compute box corner (x1, y1, x2, y2) from tensor.
                    box = pred_tensor[j, i, 5*b : 5*b + 4]
                    x0y0_normalized = torch.FloatTensor([i, j]) * cell_size  # top-left corner of this cell, normalized to the image
                    xy_normalized = box[:2] * cell_size + x0y0_normalized  # box center: cell-relative offset -> normalized to the image
                    wh_normalized = box[2:]  # width and height, already normalized to the image
                    box_xyxy = torch.FloatTensor(4)  # [4,], uninitialized; both halves are filled below
                    box_xyxy[:2] = xy_normalized - 0.5 * wh_normalized  # normalized top-left corner (x1, y1).
                    box_xyxy[2:] = xy_normalized + 0.5 * wh_normalized  # normalized bottom-right corner (x2, y2).

                    # Append result to the lists.
                    boxes.append(box_xyxy)
                    labels.append(class_label)
                    confidences.append(conf)
                    class_scores.append(class_score)

        if len(boxes) > 0:
            boxes = torch.stack(boxes, 0)  # [n_boxes, 4], list -> tensor
            labels = torch.stack(labels, 0)  # [n_boxes, ]
            confidences = torch.stack(confidences, 0)  # [n_boxes, ]
            class_scores = torch.stack(class_scores, 0)  # [n_boxes, ]
        else:
            # If no box found, return empty tensors.
            boxes = torch.FloatTensor(0, 4)
            labels = torch.LongTensor(0)
            confidences = torch.FloatTensor(0)
            class_scores = torch.FloatTensor(0)

        return boxes, labels, confidences, class_scores

    def nms(self, boxes, scores):
        """ Apply non maximum suppression.
        Args:
            boxes: (tensor) boxes as [x1, y1, x2, y2], sized [n, 4].
            scores: (tensor) score of each box, sized [n,].
        Returns:
            (LongTensor) indices of the boxes to keep, highest score first.
        """
        threshold = self.nms_thresh

        x1 = boxes[:, 0]  # [n,]
        y1 = boxes[:, 1]  # [n,]
        x2 = boxes[:, 2]  # [n,]
        y2 = boxes[:, 3]  # [n,]
        areas = (x2 - x1) * (y2 - y1)  # [n,]

        _, ids_sorted = scores.sort(0, descending=True)  # [n,]
        ids = []
        while ids_sorted.numel() > 0:
            # Assume `ids_sorted` size is [m,] in the beginning of this iter.
            i = int(ids_sorted[0])  # highest-scoring remaining box, as a Python int
            ids.append(i)

            if ids_sorted.numel() == 1:
                break  # If only one box is left (i.e., no box to suppress), break.

            # Intersection of box i with every remaining box.
            inter_x1 = x1[ids_sorted[1:]].clamp(min=x1[i])  # [m-1, ]
            inter_y1 = y1[ids_sorted[1:]].clamp(min=y1[i])  # [m-1, ]
            inter_x2 = x2[ids_sorted[1:]].clamp(max=x2[i])  # [m-1, ]
            inter_y2 = y2[ids_sorted[1:]].clamp(max=y2[i])  # [m-1, ]
            inter_w = (inter_x2 - inter_x1).clamp(min=0)  # [m-1, ]
            inter_h = (inter_y2 - inter_y1).clamp(min=0)  # [m-1, ]

            inters = inter_w * inter_h  # intersections b/w/ box `i` and other boxes, sized [m-1, ].
            unions = areas[i] + areas[ids_sorted[1:]] - inters  # unions b/w/ box `i` and other boxes, sized [m-1, ].
            ious = inters / unions  # [m-1, ]

            # Remove boxes whose IoU is higher than the threshold.
            # nonzero() returns shape [k, 1]; flatten() keeps it 1-D even when k == 1.
            ids_keep = (ious <= threshold).nonzero().flatten()  # [k, ]
            if ids_keep.numel() == 0:
                break  # If no box left, break.
            ids_sorted = ids_sorted[ids_keep + 1]  # `+1` is needed because `ids_sorted[0] = i`.

        return torch.LongTensor(ids)


if __name__ == '__main__':
    # Paths to input/output images.
    image_path = '000369.jpg'
    out_path = 'result.png'
    # Path to the yolo weight.
    model_path = 'weights/model_best.pth'
    # GPU device on which yolo is loaded.
    gpu_id = 0

    # Load model.
    yolo = YOLODetector(model_path, gpu_id=gpu_id, conf_thresh=0.15, prob_thresh=0.45, nms_thresh=0.35)

    # Load image. OpenCV returns BGR order, a historical choice shared with some older image software.
    image = cv2.imread(image_path)

    # Detect objects.
    boxes, class_names, probs = yolo.detect(image)

    # Visualize.
    image_boxes = visualize_boxes(image, boxes, class_names, probs)

    # Output detection result as an image.
    cv2.imwrite(out_path, image_boxes)
```