1 Common YARN commands

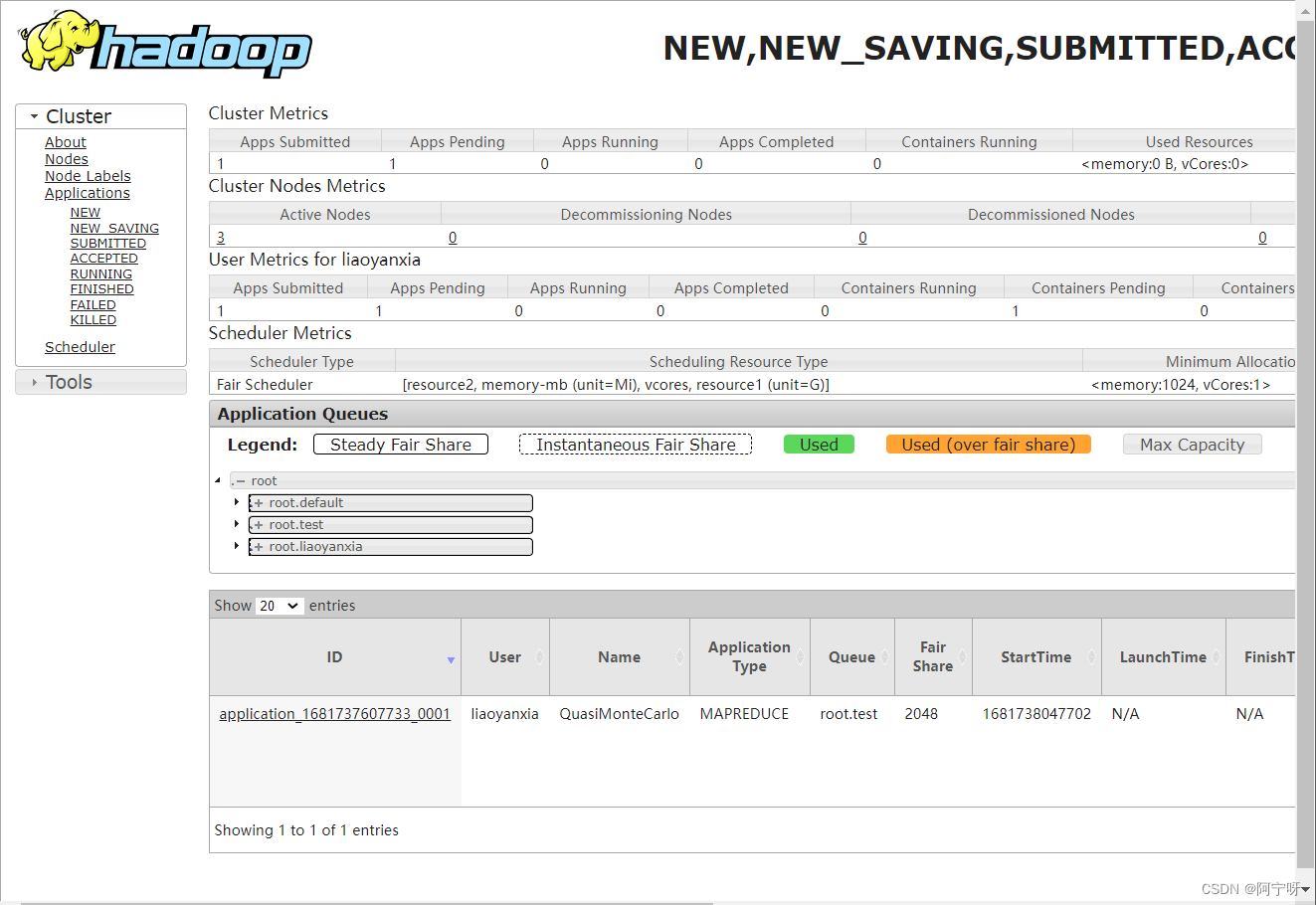

YARN status can be checked in the web UI at hadoop103:8088 or from the command line.

Run an example job first, then check its status.

(1) yarn application: view applications

yarn application -list //List all applications

yarn application -list -appStates <State> //States: ALL, NEW, NEW_SAVING, SUBMITTED, ACCEPTED, RUNNING, FINISHED, FAILED, KILLED. Filters applications by state

yarn application -kill application_<ID> //Kill an application by its ID

(2) yarn logs: view logs

yarn logs -applicationId application_<ID> //Query the application's logs

yarn logs -applicationId application_<ID> -containerId container_<ID> //Query a container's logs: first specify the application, then the container

(3) yarn applicationattempt: view application attempts (i.e., the status of running tasks)

yarn applicationattempt -list application_<ID> //List all attempts of the application

yarn applicationattempt -status appattempt_<ApplicationAttemptID> //Print the ApplicationAttempt status

(4) yarn container: view containers

Container status is visible only while the job is running.

yarn container -list appattempt_<ApplicationAttemptID> //List all containers

yarn container -status container_<ID> //Print the container status
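The IDs passed to these commands nest. An attempt ID extends its application ID, and a container ID extends its attempt. The Java sketch below builds all three from a cluster timestamp and sequence numbers; the class name and the timestamp are hypothetical. The zero-padding widths are assumed from the string forms YARN usually prints. The optional `e<epoch>_` container prefix seen after some ResourceManager restarts is left out.

```java
import java.util.Locale;

// Hypothetical helper showing how YARN ID strings nest.
// Padding widths are assumed from the usual ApplicationId / ApplicationAttemptId /
// ContainerId string forms; the optional "e<epoch>_" container prefix is omitted.
final class YarnIdSketch {

    // application_<clusterTimestamp>_<appSeq, at least 4 digits>
    static String applicationId(long clusterTs, int appSeq) {
        return String.format(Locale.ROOT, "application_%d_%04d", clusterTs, appSeq);
    }

    // appattempt_<clusterTimestamp>_<appSeq, 4 digits>_<attempt, 6 digits>
    static String attemptId(long clusterTs, int appSeq, int attempt) {
        return String.format(Locale.ROOT, "appattempt_%d_%04d_%06d", clusterTs, appSeq, attempt);
    }

    // container_<clusterTimestamp>_<appSeq, 4 digits>_<attempt, 2 digits>_<containerSeq, 6 digits>
    static String containerId(long clusterTs, int appSeq, int attempt, long containerSeq) {
        return String.format(Locale.ROOT, "container_%d_%04d_%02d_%06d",
                clusterTs, appSeq, attempt, containerSeq);
    }

    public static void main(String[] args) {
        long ts = 1700000000000L; // hypothetical ResourceManager start time (ms)
        System.out.println(applicationId(ts, 1));     // argument for yarn applicationattempt -list
        System.out.println(attemptId(ts, 1, 1));      // argument for yarn container -list
        System.out.println(containerId(ts, 1, 1, 1)); // argument for yarn container -status
    }
}
```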

(5) yarn node: view node status

yarn node -list -all //List all nodes

(6) yarn rmadmin: update configuration

yarn rmadmin -refreshQueues //Reload the queue configuration

(7) yarn queue: view queues

yarn queue -status <QueueName>

yarn queue -status default //Print queue information

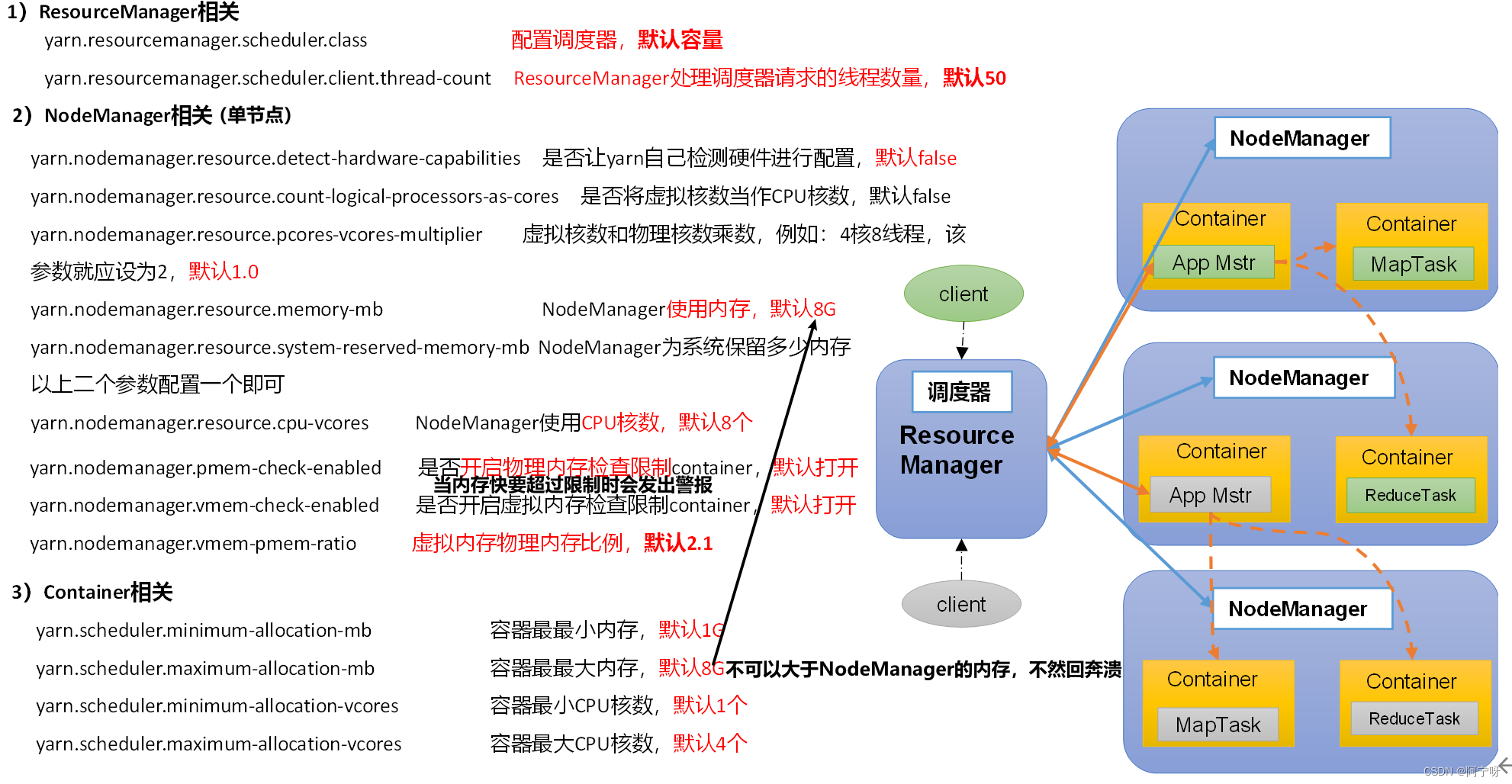

2 Core YARN parameters for production

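3 Configuring YARN for production

Take a snapshot of all 3 servers first.

(1) Core parameter configuration example

Requirement: count the occurrences of each word in 0.5 GB of data. There are 3 servers, each with 2 GB of memory and 1 CPU core with 2 threads.

Analysis: 0.5 GB / 128 MB = 4 MapTasks; 1 ReduceTask; 1 MRAppMaster

On average, each node runs 6 / 3 = 2 tasks (2, 2, 2)

Modify yarn-site.xml: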
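The task count above can be sketched as follows. This is a minimal illustration that assumes a single 512 MB input file whose split size equals the 128 MB block size. The split loop mirrors the 1.1× slack that Hadoop's FileInputFormat applies, so the last split may be slightly larger than one block. Class and method names are hypothetical.

```java
// Hypothetical sketch of the task-count arithmetic: one input file,
// split size = block size, 1 ReduceTask and 1 MRAppMaster.
final class TaskPlanSketch {

    // Mirrors FileInputFormat's split loop: a new split is cut only while the
    // remainder is more than 1.1x the split size (SPLIT_SLOP).
    static int splitCount(long fileBytes, long splitBytes) {
        final double splitSlop = 1.1;
        int splits = 0;
        long remaining = fileBytes;
        while ((double) remaining / splitBytes > splitSlop) {
            splits++;
            remaining -= splitBytes;
        }
        if (remaining != 0) {
            splits++; // the tail (at most 1.1x splitBytes) becomes the last split
        }
        return splits;
    }

    // MapTasks + ReduceTasks + 1 container for the MRAppMaster.
    static int totalContainers(int mapTasks, int reduceTasks) {
        return mapTasks + reduceTasks + 1;
    }

    // Containers per node when spread evenly, rounded up.
    static int perNode(int containers, int nodes) {
        return (containers + nodes - 1) / nodes;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        int maps = splitCount(512 * mb, 128 * mb); // 0.5 GB input
        int total = totalContainers(maps, 1);
        System.out.println(maps + " maps, " + total + " containers, "
                + perNode(total, 3) + " per node");
    }
}
```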

<!-- Choose the scheduler; the default is the Capacity Scheduler -->

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

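<!-- Number of threads the ResourceManager uses to handle scheduler requests, default 50. If more than 50 jobs are submitted, this value can be increased, but it cannot exceed 3 servers * 2 threads = 6 threads (in practice, after excluding other applications, it cannot exceed 8) -->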

<property>

<description>Number of threads to handle scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.client.thread-count</name>

<value>8</value>

</property>

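<!-- Whether YARN auto-detects the hardware to configure itself, default false. If the node runs many other applications, manual configuration is recommended; if it runs no other applications, auto-detection can be used -->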

<property>

<description>Enable auto-detection of node capabilities such as

memory and CPU.

</description>

<name>yarn.nodemanager.resource.detect-hardware-capabilities</name>

<value>false</value>

</property>

<!-- Whether to count logical (virtual) cores as CPU cores, default false, i.e., physical CPU cores are used -->

<property>

<description>Flag to determine if logical processors (such as

hyperthreads) should be counted as cores. Only applicable on Linux

when yarn.nodemanager.resource.cpu-vcores is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true.

</description>

<name>yarn.nodemanager.resource.count-logical-processors-as-cores</name>

<value>false</value>

</property>

<!-- Multiplier from physical cores to virtual cores, default 1.0 -->

<property>

<description>Multiplier to determine how to convert physical cores to

vcores. This value is used if yarn.nodemanager.resource.cpu-vcores

is set to -1 (which implies auto-calculate vcores) and

yarn.nodemanager.resource.detect-hardware-capabilities is set to true. The number of vcores will be calculated as number of CPUs * multiplier.

</description>

<name>yarn.nodemanager.resource.pcores-vcores-multiplier</name>

<value>1.0</value>

</property>
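Below is a simplified sketch of how these three properties decide a NodeManager's vcore count. It also takes `yarn.nodemanager.resource.cpu-vcores` (set further below) into account. It is based only on the descriptions quoted above. The truncating cast of `cpus * multiplier` and the method name are assumptions.

```java
// Hypothetical sketch based on the yarn-site.xml descriptions above.
final class VcoreSketch {

    static int nodeVcores(int configuredVcores, boolean detectHardware,
                          int logicalProcessors, int physicalCores,
                          boolean countLogicalAsCores, double multiplier) {
        if (configuredVcores != -1) {
            return configuredVcores;      // an explicit value wins (this guide sets 1)
        }
        if (!detectHardware) {
            return 8;                     // documented default when not auto-detecting
        }
        int cpus = countLogicalAsCores ? logicalProcessors : physicalCores;
        return (int) (cpus * multiplier); // "number of CPUs * multiplier" (truncation assumed)
    }

    public static void main(String[] args) {
        // This guide: cpu-vcores = 1, detection off.
        System.out.println(nodeVcores(1, false, 2, 1, false, 1.0));
        // Auto-detection on a 1-core / 2-thread node, counting hyperthreads.
        System.out.println(nodeVcores(-1, true, 2, 1, true, 1.0));
    }
}
```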

<!-- Memory the NodeManager may use, default 8 GB; changed to 2 GB -->

<property>

<description>Amount of physical memory, in MB, that can be allocated

for containers. If set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically calculated (in case of Windows and Linux).

In other cases, the default is 8192MB.

</description>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<!-- NodeManager CPU cores; default 8 when not set from hardware auto-detection; changed to 1 -->

<property>

<description>Number of vcores that can be allocated

for containers. This is used by the RM scheduler when allocating

resources for containers. This is not used to limit the number of

CPUs used by YARN containers. If it is set to -1 and

yarn.nodemanager.resource.detect-hardware-capabilities is true, it is

automatically determined from the hardware in case of Windows and Linux.

In other cases, number of vcores is 8 by default.</description>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

</property>

<!-- Minimum container memory, default 1 GB -->

<property>

<description>The minimum allocation for every container request at the RM in MBs. Memory requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have less memory than this value will be shut down by the resource manager.

</description>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

</property>

<!-- Maximum container memory, default 8 GB; changed to 1.5 GB -->

<property>

<description>The maximum allocation for every container request at the RM in MBs. Memory requests higher than this will throw an InvalidResourceRequestException.

</description>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>1536</value>

</property>

<!-- Minimum container CPU cores, default 1 -->

<property>

<description>The minimum allocation for every container request at the RM in terms of virtual CPU cores. Requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have fewer virtual cores than this value will be shut down by the resource manager.

</description>

<name>yarn.scheduler.minimum-allocation-vcores</name>

<value>1</value>

</property>

<!-- Maximum container CPU cores, default 4; changed to 1 -->

<property>

<description>The maximum allocation for every container request at the RM in terms of virtual CPU cores. Requests higher than this will throw an

InvalidResourceRequestException.</description>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<value>1</value>

</property>
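The four allocation limits can be illustrated with a small Java sketch of what the descriptions say. Requests below the minimum are raised to it, and requests above the maximum are rejected. Here `IllegalArgumentException` stands in for YARN's `InvalidResourceRequestException`. The sketch also shows why this guide's values give two 1 GB containers per 2 GB node, which matches the 2, 2, 2 plan. Real schedulers may additionally round requests up to an allocation increment, which is not modelled here. The class is hypothetical.

```java
// Hypothetical sketch of yarn.scheduler.minimum/maximum-allocation-mb behaviour.
final class ContainerRequestSketch {

    static int normalizeMemoryMb(int requestedMb, int minMb, int maxMb) {
        if (requestedMb > maxMb) {
            // Stand-in for InvalidResourceRequestException.
            throw new IllegalArgumentException(
                    "requested " + requestedMb + " MB > maximum-allocation-mb " + maxMb);
        }
        return Math.max(requestedMb, minMb); // requests below the minimum are raised to it
    }

    // How many containers of the given size fit into one NodeManager.
    static int containersPerNode(int nodeMemoryMb, int containerMb) {
        return nodeMemoryMb / containerMb;
    }

    public static void main(String[] args) {
        int c = normalizeMemoryMb(512, 1024, 1536);
        System.out.println(c + " MB per container, "
                + containersPerNode(2048, c) + " containers per node");
    }
}
```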

<!-- Virtual memory check, enabled by default; changed to disabled -->

<property>

<description>Whether virtual memory limits will be enforced for

containers.</description>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!-- Ratio of virtual memory to physical memory, default 2.1 -->

<property>

<description>Ratio between virtual memory to physical memory when setting memory limits for containers. Container allocations are expressed in terms of physical memory, and virtual memory usage is allowed to exceed this allocation by this ratio.

</description>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>
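Here is a minimal sketch of the virtual-memory rule described above. It assumes the limit is simply the physical allocation times `vmem-pmem-ratio`, and that the check only applies when `vmem-check-enabled` is true. The real NodeManager periodically monitors the container's whole process tree, which is not modelled here. The names are hypothetical.

```java
// Hypothetical sketch of yarn.nodemanager.vmem-check-enabled / vmem-pmem-ratio.
final class VmemCheckSketch {

    // Virtual-memory ceiling for a container: physical allocation * ratio.
    static long vmemLimitMb(long pmemMb, double vmemPmemRatio) {
        return (long) (pmemMb * vmemPmemRatio);
    }

    // True if a container using vmemUsedMb would be over its virtual-memory limit.
    static boolean overVmemLimit(long vmemUsedMb, long pmemMb,
                                 double ratio, boolean checkEnabled) {
        return checkEnabled && vmemUsedMb > vmemLimitMb(pmemMb, ratio);
    }

    public static void main(String[] args) {
        System.out.println(vmemLimitMb(1024, 2.1));                // limit for a 1 GB container
        System.out.println(overVmemLimit(3000, 1024, 2.1, true));  // check enabled (the default)
        System.out.println(overVmemLimit(3000, 1024, 2.1, false)); // check disabled (this guide)
    }
}
```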

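When the nodes' hardware differs, each NodeManager must be configured separately.

Distribute the file and restart the YARN cluster, then run the wordcount program and watch its execution at http://hadoop103:8088/cluster/apps.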

xsync yarn-site.xml

stop-yarn.sh

start-yarn.sh

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar wordcount /input /output

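(2) Capacity Scheduler example

1) Production situations that call for creating queues:

(1) By default the scheduler has only one queue, default, which does not meet production needs.

(2) By framework: put the jobs of each framework (hive / spark / flink) into a designated queue.

(3) By business module: login/registration, shopping cart, ordering, business department 1, business department 2.

2) Benefits of multiple queues:

(1) A job may contain an infinite loop and exhaust its queue's resources; with multiple queues the damage stays within that queue instead of consuming the whole cluster.

(2) During resource-constrained periods such as the Double 11 and 618 shopping festivals, important queues can be guaranteed enough resources: jobs are given priorities and higher-priority jobs are processed first, i.e., lower-priority jobs are degraded.

Requirements:

(1) The default queue gets 40% of total memory, with a maximum capacity of 60% of total resources; the hive queue gets 60% of total memory, with a maximum capacity of 80% of total resources.

(2) Configure queue priority.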
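The requirement translates into concrete numbers once the cluster size is fixed. The sketch below assumes the cluster memory is the sum of the three NodeManagers' `yarn.nodemanager.resource.memory-mb` values (3 × 2048 MB). It checks two constraints: sibling capacities must add up to 100, and (assumed here) no `maximum-capacity` may be below its queue's `capacity`. The class is hypothetical.

```java
import java.util.Locale;

// Hypothetical sketch of the default/hive capacity split on a 3 x 2048 MB cluster.
final class QueueCapacitySketch {

    // Memory corresponding to a percentage of the cluster.
    static double shareMb(long clusterMb, double percent) {
        return clusterMb * percent / 100.0;
    }

    // Sibling capacities must add up to 100; each maximum must lie in [capacity, 100].
    static boolean validSiblings(double[] capacity, double[] maxCapacity) {
        double sum = 0;
        for (int i = 0; i < capacity.length; i++) {
            if (maxCapacity[i] < capacity[i] || maxCapacity[i] > 100) {
                return false;
            }
            sum += capacity[i];
        }
        return Math.abs(sum - 100.0) < 1e-9;
    }

    public static void main(String[] args) {
        long clusterMb = 3 * 2048L; // 3 NodeManagers x yarn.nodemanager.resource.memory-mb
        String[] names = {"default", "hive"};
        double[] cap = {40, 60};
        double[] max = {60, 80};
        System.out.println("valid: " + validSiblings(cap, max));
        for (int i = 0; i < names.length; i++) {
            System.out.printf(Locale.ROOT, "%s: guaranteed %.1f MB, max %.1f MB%n",
                    names[i], shareMb(clusterMb, cap[i]), shareMb(clusterMb, max[i]));
        }
    }
}
```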

Configuration steps:

(1) capacity-scheduler.xml

Modify the following:

<!-- Specify multiple queues: add the hive queue -->

<property>

<name>yarn.scheduler.capacity.root.queues</name>

<value>default,hive</value>

<description>

The queues at this level (root is the root queue).

</description>

</property>

<!-- Lower the default queue's rated capacity to 40% (default 100%) -->

<property>

<name>yarn.scheduler.capacity.root.default.capacity</name>

<value>40</value>

</property>

<!-- Lower the default queue's maximum capacity to 60% (default 100%) -->

<property>

<name>yarn.scheduler.capacity.root.default.maximum-capacity</name>

<value>60</value>

</property>

Add the following parameters:

<!-- Rated capacity of the hive queue -->

<property>

<name>yarn.scheduler.capacity.root.hive.capacity</name>

<value>60</value>

</property>

<!-- Maximum share of the queue a single user may use; 1 means a user can use at most the queue's configured capacity -->

<property>

<name>yarn.scheduler.capacity.root.hive.user-limit-factor</name>

<value>1</value>

</property>

<!-- Maximum capacity of the hive queue -->

<property>

<name>yarn.scheduler.capacity.root.hive.maximum-capacity</name>

<value>80</value>

</property>

<!-- Start the hive queue -->

<property>

<name>yarn.scheduler.capacity.root.hive.state</name>

<value>RUNNING</value>

</property>

<!-- Which users may submit jobs to the queue -->

<property>

<name>yarn.scheduler.capacity.root.hive.acl_submit_applications</name>

<value>*</value>

</property>

<!-- Which users may administer the queue (admin permission: view/kill) -->

<property>

<name>yarn.scheduler.capacity.root.hive.acl_administer_queue</name>

<value>*</value>

</property>

<!-- Which users may set the priority of submitted jobs -->

<property>

<name>yarn.scheduler.capacity.root.hive.acl_application_max_priority</name>

<value>*</value>

</property>

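<!-- Application timeout setting: yarn application -appId <ApplicationId> -updateLifetime <Timeout>

Reference: https://blog.cloudera.com/enforcing-application-lifetime-slas-yarn/ -->

<!-- If an application specifies a lifetime, the lifetime an application submitted to this queue may specify cannot exceed this value.

-->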

<property>

<name>yarn.scheduler.capacity.root.hive.maximum-application-lifetime</name>

<value>-1</value>

</property>

<!-- If the application does not specify a lifetime, default-application-lifetime is used as its default -->

<property>

<name>yarn.scheduler.capacity.root.hive.default-application-lifetime</name>

<value>-1</value>

</property>
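The sketch below shows how the two lifetime settings combine with a lifetime requested by the application, following the comments above. An unspecified request falls back to the queue default, and a request larger than a positive queue maximum is capped at that maximum. Values are in seconds, and any value ≤ 0 (such as this guide's -1) is treated as "no limit". The method name is hypothetical.

```java
// Hypothetical sketch of maximum-/default-application-lifetime resolution.
final class LifetimeSketch {

    static long effectiveLifetime(long requested, long queueDefault, long queueMax) {
        if (requested <= 0) {
            return queueDefault;      // the app gave no lifetime: use the queue default
        }
        if (queueMax > 0 && requested > queueMax) {
            return queueMax;          // capped at the queue maximum
        }
        return requested;             // within limits (or no maximum configured)
    }

    public static void main(String[] args) {
        System.out.println(effectiveLifetime(-1, -1, -1));     // this guide: no limit
        System.out.println(effectiveLifetime(7200, -1, 3600)); // request capped at the maximum
        System.out.println(effectiveLifetime(-1, 1800, 3600)); // queue default applies
    }
}
```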

(2) Distribute the file, then restart YARN (or run yarn rmadmin -refreshQueues to refresh the queues)

xsync capacity-scheduler.xml

stop-yarn.sh

start-yarn.sh

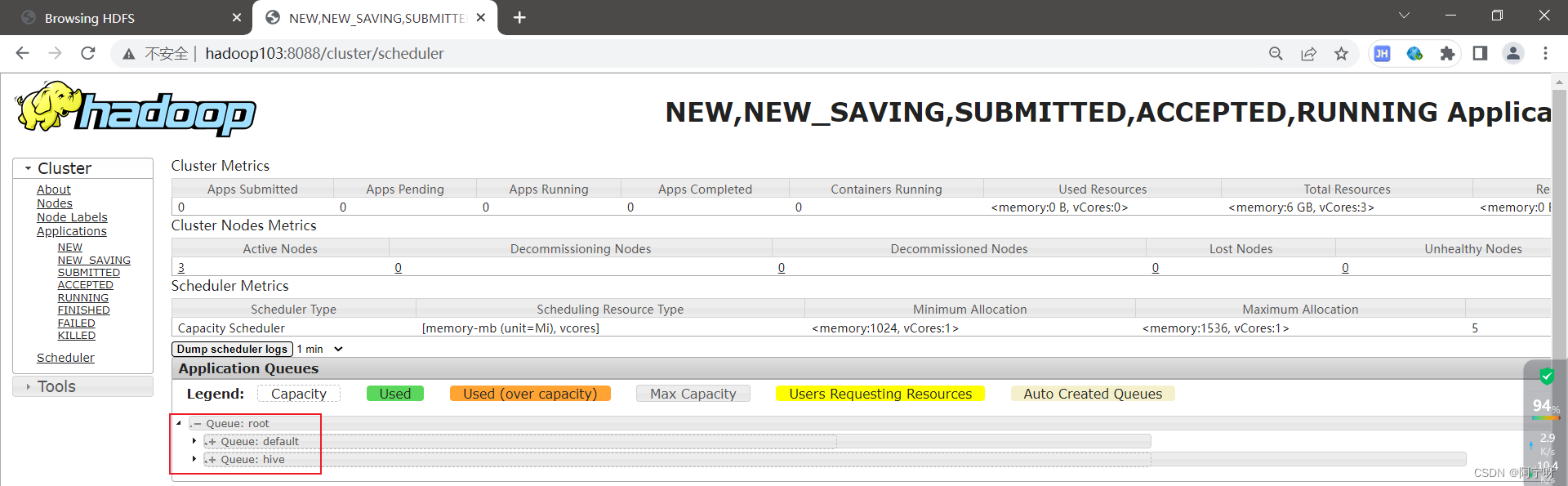

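Two queues are now visible.

(3) Submit a job to the hive queue

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar wordcount -Dmapreduce.job.queuename=hive /input /output

Alternatively, when you package your own jar, jobs are submitted to the default queue by default. To submit to another queue, declare it in the driver: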

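import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.mapreduce.Job;

public class WcDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

        Configuration conf = new Configuration();

        // Declare the queue the job is submitted to

        conf.set("mapreduce.job.queuename", "hive");

        // 1. Get a Job instance

        Job job = Job.getInstance(conf);

        // ... (steps 2 to 5 are elided in the original)

        // 6. Submit the Job and wait for it to finish

        boolean b = job.waitForCompletion(true);

        System.exit(b ? 0 : 1);

    }

}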

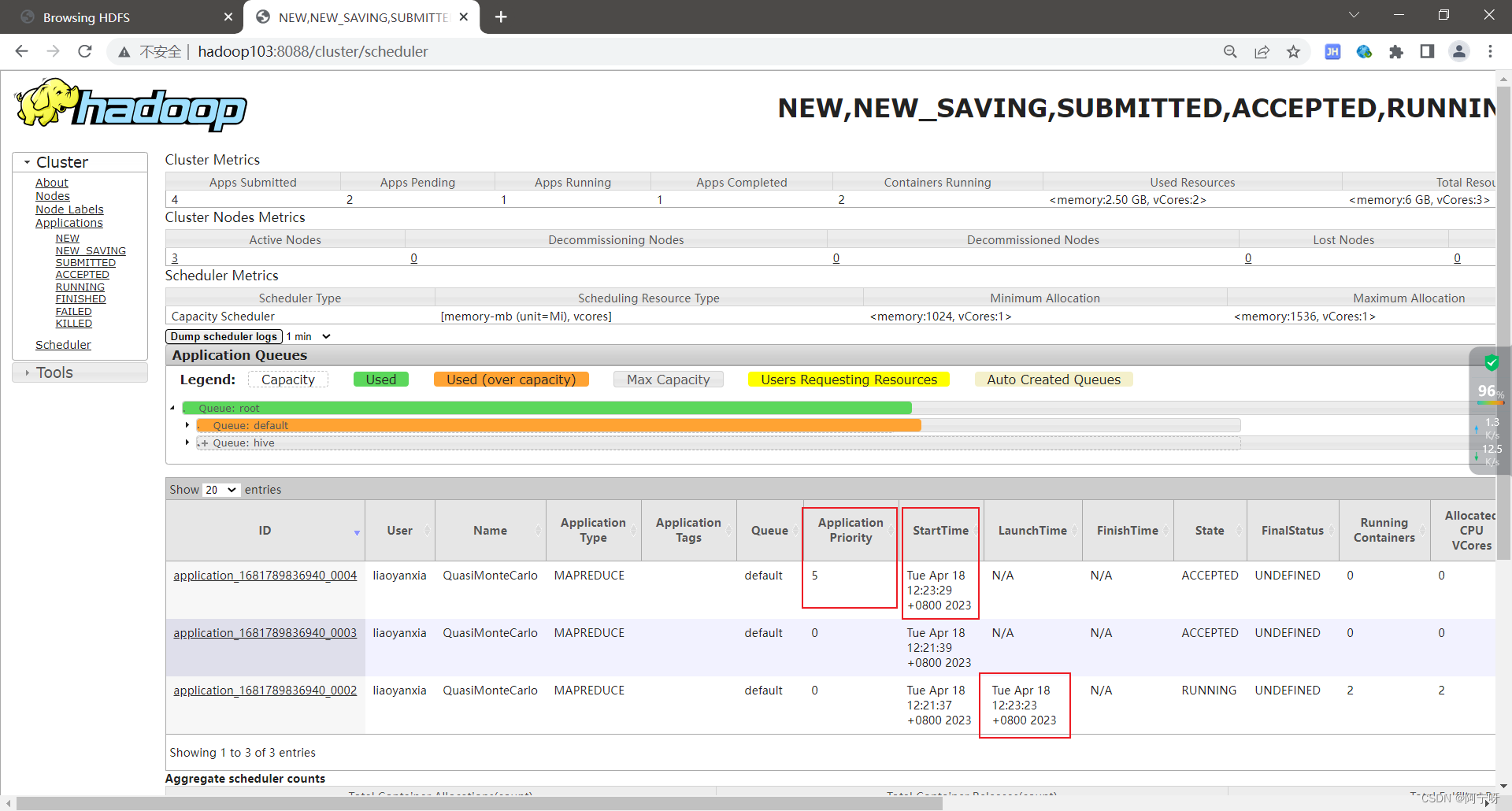

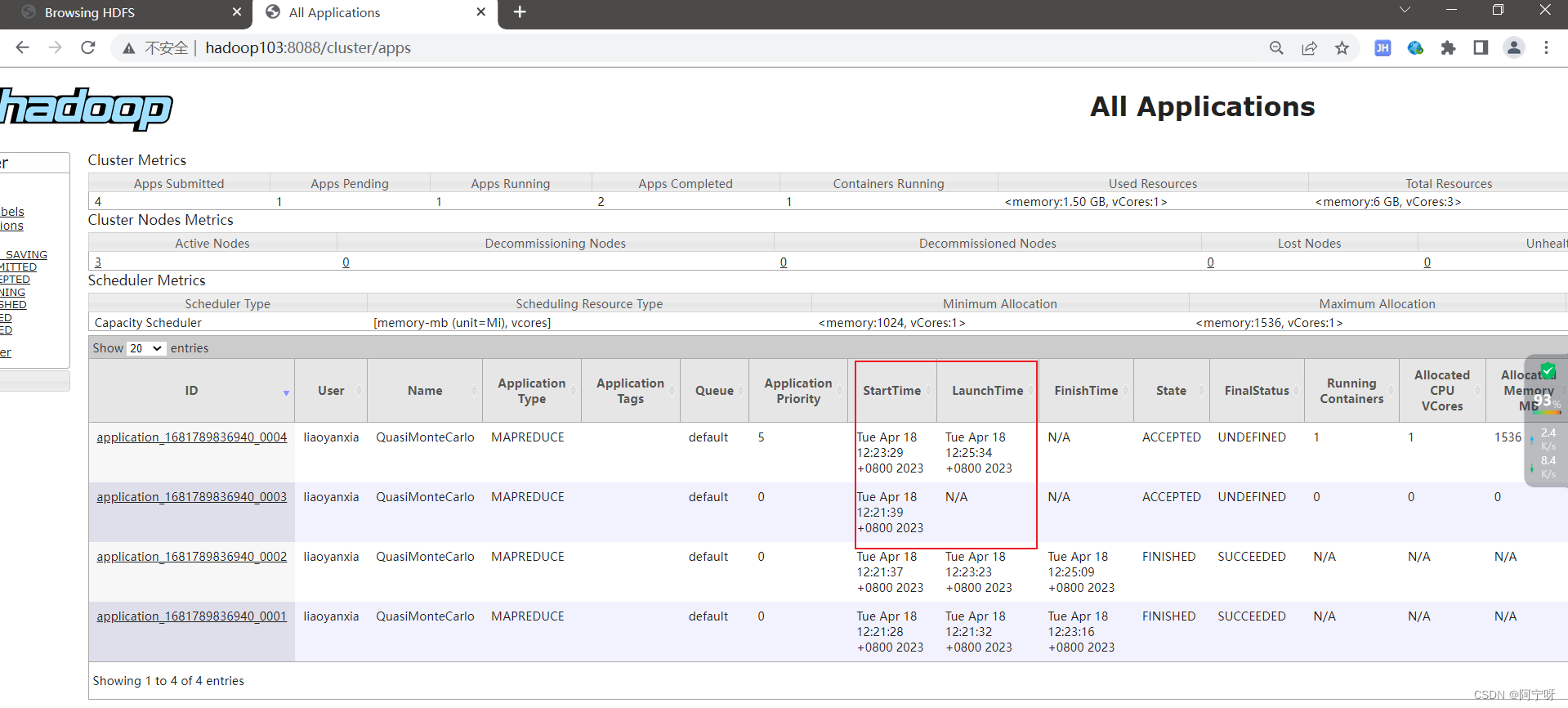

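(4) Job priority configuration

The Capacity Scheduler supports job priorities: when resources are tight, higher-priority jobs get resources first. By default, YARN limits every job's priority to 0, so to use job priorities this limit must be raised.

Add to yarn-site.xml: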

<property>

<name>yarn.cluster.max-application-priority</name>

<value>5</value>

</property>
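Here is a minimal sketch of the priority behaviour described above. It assumes a requested priority is capped at `yarn.cluster.max-application-priority`, so with the default cap of 0 every job ends up at 0. It also assumes pending jobs are served highest priority first, then in submission order. The job names mimic the test that follows and are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical sketch of priority capping and priority-then-FIFO ordering.
final class PrioritySketch {

    // A pending application with its effective priority and submission order.
    static final class PendingApp {
        final String name;
        final int priority;
        final long submitSeq;

        PendingApp(String name, int priority, long submitSeq) {
            this.name = name;
            this.priority = priority;
            this.submitSeq = submitSeq;
        }
    }

    // Requested priority capped at the cluster maximum.
    static int effectivePriority(int requested, int clusterMax) {
        return Math.min(requested, clusterMax);
    }

    // Higher priority first; ties fall back to submission order (FIFO).
    static List<String> serviceOrder(List<PendingApp> apps) {
        PriorityQueue<PendingApp> pq = new PriorityQueue<>((a, b) ->
                a.priority != b.priority
                        ? Integer.compare(b.priority, a.priority)
                        : Long.compare(a.submitSeq, b.submitSeq));
        pq.addAll(apps);
        List<String> order = new ArrayList<>();
        while (!pq.isEmpty()) {
            order.add(pq.poll().name);
        }
        return order;
    }

    public static void main(String[] args) {
        int clusterMax = 5; // yarn.cluster.max-application-priority from the config above
        List<PendingApp> apps = new ArrayList<>();
        apps.add(new PendingApp("pi@hadoop102", effectivePriority(0, clusterMax), 1));
        apps.add(new PendingApp("pi@hadoop103", effectivePriority(0, clusterMax), 2));
        apps.add(new PendingApp("pi@hadoop104", effectivePriority(0, clusterMax), 3));
        apps.add(new PendingApp("pi-priority-5", effectivePriority(5, clusterMax), 4));
        System.out.println(serviceOrder(apps));
    }
}
```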

Distribute the file and restart YARN

xsync yarn-site.xml

stop-yarn.sh

start-yarn.sh

From each of hadoop102, hadoop103 and hadoop104, submit the following job to simulate a resource-constrained environment:

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar pi 5 10000

Then submit a high-priority job:

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar pi -Dmapreduce.job.priority=5 5 10000

Or change a job's priority with the following command:

yarn application -appId application_<ID> -updatePriority <priority>

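(3) Fair Scheduler example

Requirement: create two queues, test and liaoyanxia. If a user specifies a queue when submitting a job, the job runs in that queue. If no queue is specified, jobs from user test run in the root.group.test queue and jobs from user liaoyanxia run in the root.group.liaoyanxia queue, where group is the submitting user's primary group.

This requires configuring yarn-site.xml and creating fair-scheduler.xml.

Add to yarn-site.xml: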
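The placement rules configured in fair-scheduler.xml further down implement this requirement. The Java sketch below walks the same three rules (specified, nestedUserQueue over primaryGroup, reject) against a hypothetical set of existing queues. It assumes a requested queue named `default` counts as unspecified. It also treats any existing `root.<group>` queue as a valid parent, whereas the real scheduler also expects that parent to be a parent-type queue. Method and variable names are hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch of the queuePlacementPolicy: specified -> nestedUserQueue(primaryGroup) -> reject.
final class PlacementSketch {

    // Returns the target queue, or null when the final "reject" rule fires.
    static String place(String requestedQueue, String user, String primaryGroup,
                        Set<String> existingQueues) {
        // Rule 1: specified, create="false" (only an existing queue is accepted).
        boolean specified = requestedQueue != null && !requestedQueue.isEmpty()
                && !requestedQueue.equals("default");
        if (specified) {
            String q = requestedQueue.startsWith("root.") ? requestedQueue : "root." + requestedQueue;
            if (existingQueues.contains(q)) {
                return q;
            }
        }
        // Rule 2: nestedUserQueue create="true" around primaryGroup create="false".
        String parent = "root." + primaryGroup;
        if (existingQueues.contains(parent)) {
            return parent + "." + user; // the user leaf queue may be auto-created
        }
        // Rule 3: reject.
        return null;
    }

    public static void main(String[] args) {
        Set<String> queues = new HashSet<>(Arrays.asList("root.default", "root.test", "root.liaoyanxia"));
        System.out.println(place("root.test", "liaoyanxia", "liaoyanxia", queues));
        System.out.println(place(null, "liaoyanxia", "liaoyanxia", queues));
        System.out.println(place(null, "bob", "staff", queues)); // no root.staff: rejected
    }
}
```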

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

<description>Use the Fair Scheduler</description>

</property>

<property>

<name>yarn.scheduler.fair.allocation.file</name>

<value>/opt/module/hadoop-3.3.1/etc/hadoop/fair-scheduler.xml</value>

<description>Path of the Fair Scheduler queue allocation file</description>

</property>

<property>

<name>yarn.scheduler.fair.preemption</name>

<value>false</value>

<description>Disable resource preemption between queues</description>

</property>

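Configure fair-scheduler.xml:

<?xml version="1.0"?>

<allocations>

<!-- Maximum fraction of a queue's resources that ApplicationMasters may use, range 0-1; 0.1 is common in production -->

<queueMaxAMShareDefault>0.5</queueMaxAMShareDefault>

<!-- Default maximum resources per queue (applies to test, liaoyanxia, default) -->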

<queueMaxResourcesDefault>2048mb,2vcores</queueMaxResourcesDefault>

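<!-- Add a queue named test -->

<queue name="test">

<!-- Minimum queue resources -->

<minResources>1024mb,1vcores</minResources>

<!-- Maximum queue resources -->

<maxResources>2048mb,1vcores</maxResources>

<!-- Maximum number of applications running concurrently in the queue; default 50, set according to the thread count -->

<maxRunningApps>2</maxRunningApps>

<!-- Maximum fraction of queue resources that ApplicationMasters may use -->

<maxAMShare>0.5</maxAMShare>

<!-- Resource weight of the queue, default 1.0 -->

<weight>1.0</weight>

<!-- Resource allocation policy within the queue -->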

<schedulingPolicy>fair</schedulingPolicy>

</queue>

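<!-- Add a queue named liaoyanxia -->

<queue name="liaoyanxia" type="parent">

<!-- Minimum queue resources -->

<minResources>1024mb,1vcores</minResources>

<!-- Maximum queue resources -->

<maxResources>2048mb,1vcores</maxResources>

<!-- Maximum number of applications running concurrently in the queue; default 50, set according to the thread count -->

<maxRunningApps>2</maxRunningApps>

<!-- Maximum fraction of queue resources that ApplicationMasters may use -->

<maxAMShare>0.5</maxAMShare>

<!-- Resource weight of the queue, default 1.0 -->

<weight>1.0</weight>

<!-- Resource allocation policy within the queue -->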

<schedulingPolicy>fair</schedulingPolicy>

</queue>

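<!-- Queue placement policy: multiple rules may be configured; they are matched starting from the first rule until one succeeds -->

<queuePlacementPolicy>

<!-- Use the queue specified at submission; if no queue is specified, move on to the next rule. create="false": do not auto-create the specified queue if it does not exist -->

<rule name="specified" create="false"/>

<!-- Submit to root.group.username; do not auto-create root.group if it does not exist; auto-create root.group.username if it does not exist -->

<rule name="nestedUserQueue" create="true">

<rule name="primaryGroup" create="false"/>

</rule>

<!-- The last rule must be reject or default: reject refuses the submission so it fails, default submits the job to the default queue -->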

<rule name="reject" />

</queuePlacementPolicy>

</allocations>
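Each queue above has a weight, a minimum and a maximum. The Fair Scheduler's fair share is commonly described as a ratio R such that each queue receives min(max(R × weight, minResources), maxResources) and the shares add up to the available resources. The sketch below finds R by binary search, for memory only. It assumes both queues have running applications, all weights are positive, and the minimums fit within the available memory. It is an illustration, not the scheduler's code.

```java
import java.util.Arrays;

// Hypothetical water-filling sketch of weighted fair shares (memory only).
final class FairShareSketch {

    // Share of one queue for ratio r: weight * r, clamped to [min, max].
    static double shareAt(double r, double weight, double min, double max) {
        return Math.min(Math.max(weight * r, min), max);
    }

    static double totalAt(double r, double[] w, double[] min, double[] max) {
        double sum = 0;
        for (int i = 0; i < w.length; i++) {
            sum += shareAt(r, w[i], min[i], max[i]);
        }
        return sum;
    }

    // Binary-search r so the clamped shares add up to the available memory
    // (or to the sum of the maxima, if that is smaller).
    static double[] fairShares(double available, double[] w, double[] min, double[] max) {
        double sumMax = 0;
        for (double m : max) {
            sumMax += m;
        }
        double target = Math.min(available, sumMax);
        double lo = 0, hi = 1;
        while (totalAt(hi, w, min, max) < target && hi < 1e15) {
            hi *= 2; // grow the upper bound until it covers the target
        }
        for (int iter = 0; iter < 100; iter++) {
            double mid = (lo + hi) / 2;
            if (totalAt(mid, w, min, max) < target) lo = mid; else hi = mid;
        }
        double[] shares = new double[w.length];
        for (int i = 0; i < w.length; i++) {
            shares[i] = shareAt(hi, w[i], min[i], max[i]);
        }
        return shares;
    }

    public static void main(String[] args) {
        double[] w = {1.0, 1.0};      // weights of test and liaoyanxia
        double[] min = {1024, 1024};  // minResources memory (MB)
        double[] max = {2048, 2048};  // maxResources memory (MB)
        System.out.println(Arrays.toString(fairShares(6144, w, min, max))); // both capped at max
        System.out.println(Arrays.toString(fairShares(3000, w, min, max))); // hypothetical smaller cluster
    }
}
```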

Distribute the config, restart YARN, and submit a job to test it.

xsync yarn-site.xml fair-scheduler.xml

sbin/stop-yarn.sh

sbin/start-yarn.sh

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.3.1.jar pi -Dmapreduce.job.queuename=root.test 1 1