文章目录

- 一. 问题引入

- 1. 场景描述

- 2. 日志简析

- 二. 初级问题分析与解决

- 1. 问题分析

- 1.1. yarn的调度器设置

- 1.2. 程序设置

- 2. 问题解决

- 三. (性能)新的问题

- 1. 问题描述

- 2. 理想化的最优方案

- 3. "PlanB"的解决方案

- 四. 反思与迭代

一. 问题引入

1. 场景描述

使用flink引擎,处理hdfs到hive的任务,hdfs的文件数有4000个,这里设置并行度为20,提交任务运行。

2. 日志简析

任务提交之后发现报错,我们简单分析下yarn的日志:

1. 申请的资源超过了yarn最大的container资源限制,也就是说一个taskexecutor所需的资源过大

Could not compute the container Resource from the given TaskExecutorProcessSpec TaskExecutorProcessSpec {cpuCores=10.0, frameworkHeapSize=128.000mb (134217728 bytes), frameworkOffHeapSize=128.000mb (134217728 bytes), taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemorySize=4.300gb (4617089912 bytes), jvmMetaspaceSize=256.000mb (268435456 bytes), jvmOverheadSize=1024.000mb (1073741824 bytes)}. This usually indicates the requested resource is larger than Yarn's max container resource limit.

2. 接着开始请求一个新的worker,这里的worker应该也是container ,那此时正在pend的数量为1.

Requesting new worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 1.

3. 但同样的这个container超过了yarn的资源,这时直接放弃分配资源

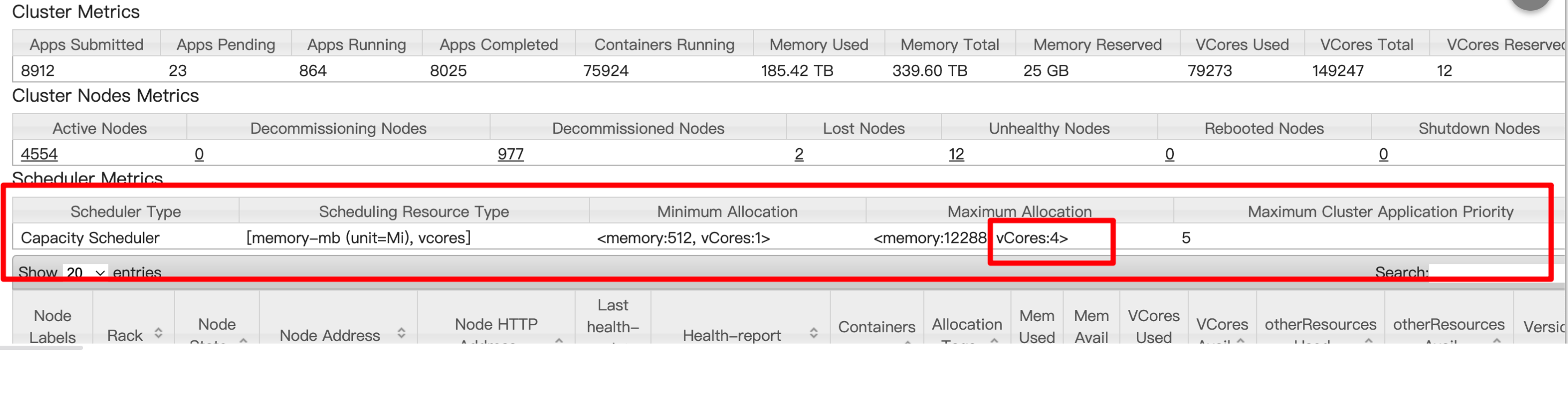

Requested container resource (<memory:12288, vCores:10>) exceeds the max limitation of the Yarn cluster (<memory:12288, vCores:4>). Will not allocate resource.

4. 如此在反复这样执行,似乎陷入到了死循环。

最后任务部署超时而报错。。。

上面的日志说的比较明白,就是:申请的资源超过了yarn最大的container资源限制。

再放出点堆栈信息,供日后参考分析:

2022-11-18 <b>10:12:12</b>,938 WARN org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager [] - Failed requesting worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 0

org.apache.flink.runtime.resourcemanager.exceptions.ResourceManagerException: <b>Could not compute the container Resource from the given</b> TaskExecutorProcessSpec TaskExecutorProcessSpec {cpuCores=10.0, frameworkHeapSize=128.000mb (134217728 bytes), frameworkOffHeapSize=128.000mb (134217728 bytes), taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemorySize=4.300gb (4617089912 bytes), jvmMetaspaceSize=256.000mb (268435456 bytes), jvmOverheadSize=1024.000mb (1073741824 bytes)}. This usually indicates the requested resource is larger than Yarn's max container resource limit.

。。。

at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107) [flink-dist_2.12-1.12.7.jar:1.12.7]

2022-11-18 <b>10:12:12,93</b>9 INFO org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager [] - Requesting new worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 1.

2022-11-18 10:12:12,940 WARN org.apache.flink.yarn.TaskExecutorProcessSpecContainerResourcePriorityAdapter [] - Requested container resource (<memory:12288, vCores:10>) exceeds the max limitation of the Yarn cluster (<memory:12288, vCores:4>). Will not allocate resource.

2022-11-18 10:12:12,940 WARN org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager [] - Failed requesting worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 0

org.apache.flink.runtime.resourcemanager.exceptions.ResourceManagerException: Could not compute the container Resource from the given TaskExecutorProcessSpec TaskExecutorProcessSpec {cpuCores=10.0, frameworkHeapSize=128.000mb (134217728 bytes), frameworkOffHeapSize=128.000mb (134217728 bytes), taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemorySize=4.300gb (4617089912 bytes), jvmMetaspaceSize=256.000mb (268435456 bytes), jvmOverheadSize=1024.000mb (1073741824 bytes)}. This usually indicates the requested resource is larger than Yarn's max container resource limit.

。。。

at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107) [flink-dist_2.12-1.12.7.jar:1.12.7]

2022-11-18 10:12:12,941 INFO org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager [] - Requesting new worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 1.

2022-11-18 10:12:12,941 WARN org.apache.flink.yarn.TaskExecutorProcessSpecContainerResourcePriorityAdapter [] - Requested container resource (<memory:12288, vCores:10>) exceeds the max limitation of the Yarn cluster (<memory:12288, vCores:4>). Will not allocate resource.

2022-11-18 10:12:12,941 WARN org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager [] - Failed requesting worker with resource spec WorkerResourceSpec {cpuCores=10.0, taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemSize=4.300gb (4617089912 bytes)}, current pending count: 0

org.apache.flink.runtime.resourcemanager.exceptions.ResourceManagerException: Could not compute the container Resource from the given TaskExecutorProcessSpec TaskExecutorProcessSpec {cpuCores=10.0, frameworkHeapSize=128.000mb (134217728 bytes), frameworkOffHeapSize=128.000mb (134217728 bytes), taskHeapSize=5.200gb (5583457416 bytes), taskOffHeapSize=0 bytes, networkMemSize=1024.000mb (1073741824 bytes), managedMemorySize=4.300gb (4617089912 bytes), jvmMetaspaceSize=256.000mb (268435456 bytes), jvmOverheadSize=1024.000mb (1073741824 bytes)}. This usually indicates the requested resource is larger than Yarn's max container resource limit.

at org.apache.flink.yarn.YarnResourceManagerDriver.requestResource(YarnResourceManagerDriver.java:254) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:249) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestWorkerIfRequired(ActiveResourceManager.java:310) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.lambda$requestNewWorker$0(ActiveResourceManager.java:261) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at java.util.concurrent.CompletableFuture.uniHandle(CompletableFuture.java:822) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.uniHandleStage(CompletableFuture.java:834) ~[?:1.8.0_152]

at java.util.concurrent.CompletableFuture.handle(CompletableFuture.java:2155) ~[?:1.8.0_152]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.requestNewWorker(ActiveResourceManager.java:251) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.active.ActiveResourceManager.startNewWorker(ActiveResourceManager.java:160) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.ResourceManager$ResourceActionsImpl.allocateResource(ResourceManager.java:1382) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerImpl.allocateResource(SlotManagerImpl.java:1058) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerImpl.fulfillPendingSlotRequestWithPendingTaskManagerSlot(SlotManagerImpl.java:954) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerImpl.lambda$internalRequestSlot$9(SlotManagerImpl.java:943) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.util.OptionalConsumer.ifNotPresent(OptionalConsumer.java:51) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerImpl.internalRequestSlot(SlotManagerImpl.java:941) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.slotmanager.SlotManagerImpl.registerSlotRequest(SlotManagerImpl.java:410) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.resourcemanager.ResourceManager.requestSlot(ResourceManager.java:529) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_152]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_152]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_152]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_152]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcInvocation(AkkaRpcActor.java:305) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:212) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:77) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:158) ~[flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:26) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:21) [flink-dist_2.12-1.12.7.jar:1.12.7]

at scala.PartialFunction.applyOrElse(PartialFunction.scala:123) [flink-dist_2.12-1.12.7.jar:1.12.7]

at scala.PartialFunction.applyOrElse$(PartialFunction.scala:122) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:21) [flink-dist_2.12-1.12.7.jar:1.12.7]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) [flink-dist_2.12-1.12.7.jar:1.12.7]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172) [flink-dist_2.12-1.12.7.jar:1.12.7]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:172) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.actor.Actor.aroundReceive(Actor.scala:517) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.actor.Actor.aroundReceive$(Actor.scala:515) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:225) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:592) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.actor.ActorCell.invoke(ActorCell.scala:561) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:258) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.Mailbox.run(Mailbox.scala:225) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.Mailbox.exec(Mailbox.scala:235) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979) [flink-dist_2.12-1.12.7.jar:1.12.7]

at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107) [flink-dist_2.12-1.12.7.jar:1.12.7]

<br/>

二. 初级问题分析与解决

1. 问题分析

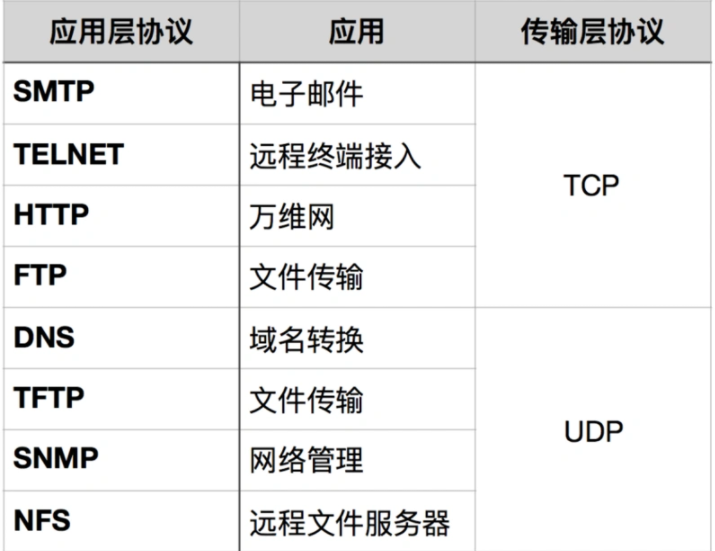

报错很明确,也就是我们向yarn多申请了资源。所以我们关注两点:yarn的调度器设置(规定了队列,每个任务申请的限制)、程序中是如何设置的并行度的。

1.1. yarn的调度器设置

打开yarn 看到Maximum Allocation限制为<memory:12288, vCores:4> ,具体的说,就是我们申请一个container最大内存能申请12G、最大核心数为4

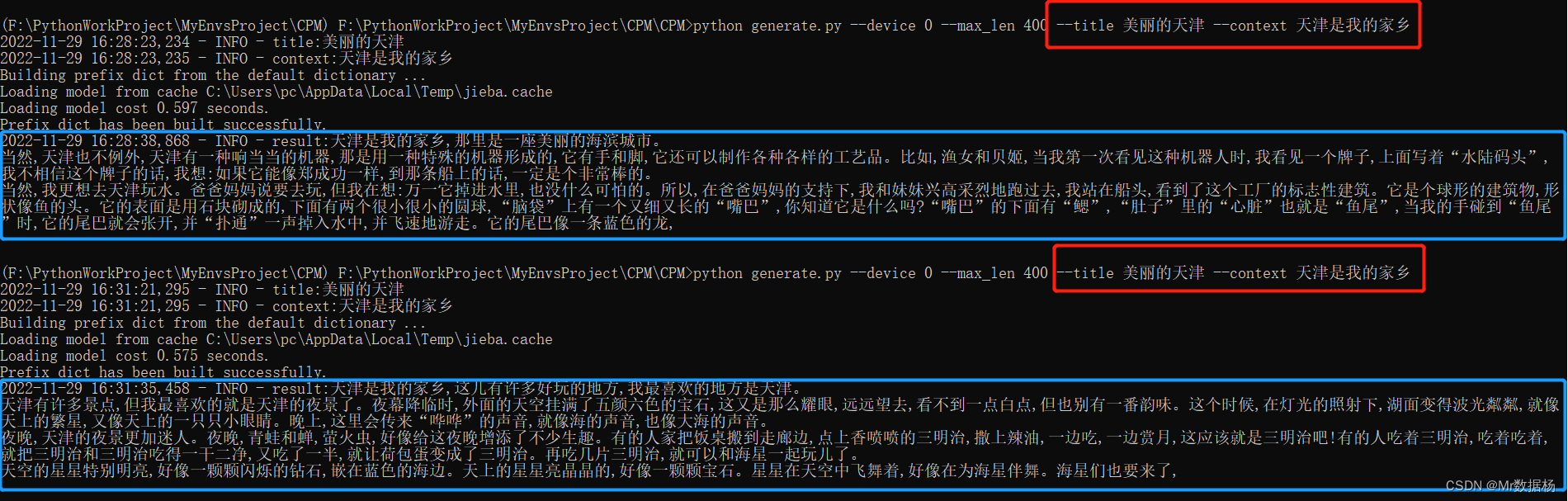

1.2. 程序设置

看下运行的shell的伪代码

tmp_value=`echo $parallelism 10 | awk '{if($1 > $2) print 1; else print 0;}'`

if [ $tmp_value -eq 1 ] ;then

tm=12288

vcores=10

numberOfTaskSlots=10

fi

...

"yarn.containers.vcores":${vcores}

"taskmanager.numberOfTaskSlots":${numberOfTaskSlots}

...

这里可以看到冲突:当我们的并行度设置超过10时,vcores设置为10,但yarn最大让设置为4,所以会报错。。。

2. 问题解决

解决问题的方式也比较简单,让vcores最大保持为4,然后再运行。

这里我们设置整个job的并行度还是为20,然后申请一个container中vcores=4,那么按照一个并行度分配一个线程的逻辑

即一个taskmanager(container)中有4核对应有4个slot,那将会有20/4=5个taskmanager

三. (性能)新的问题

1. 问题描述

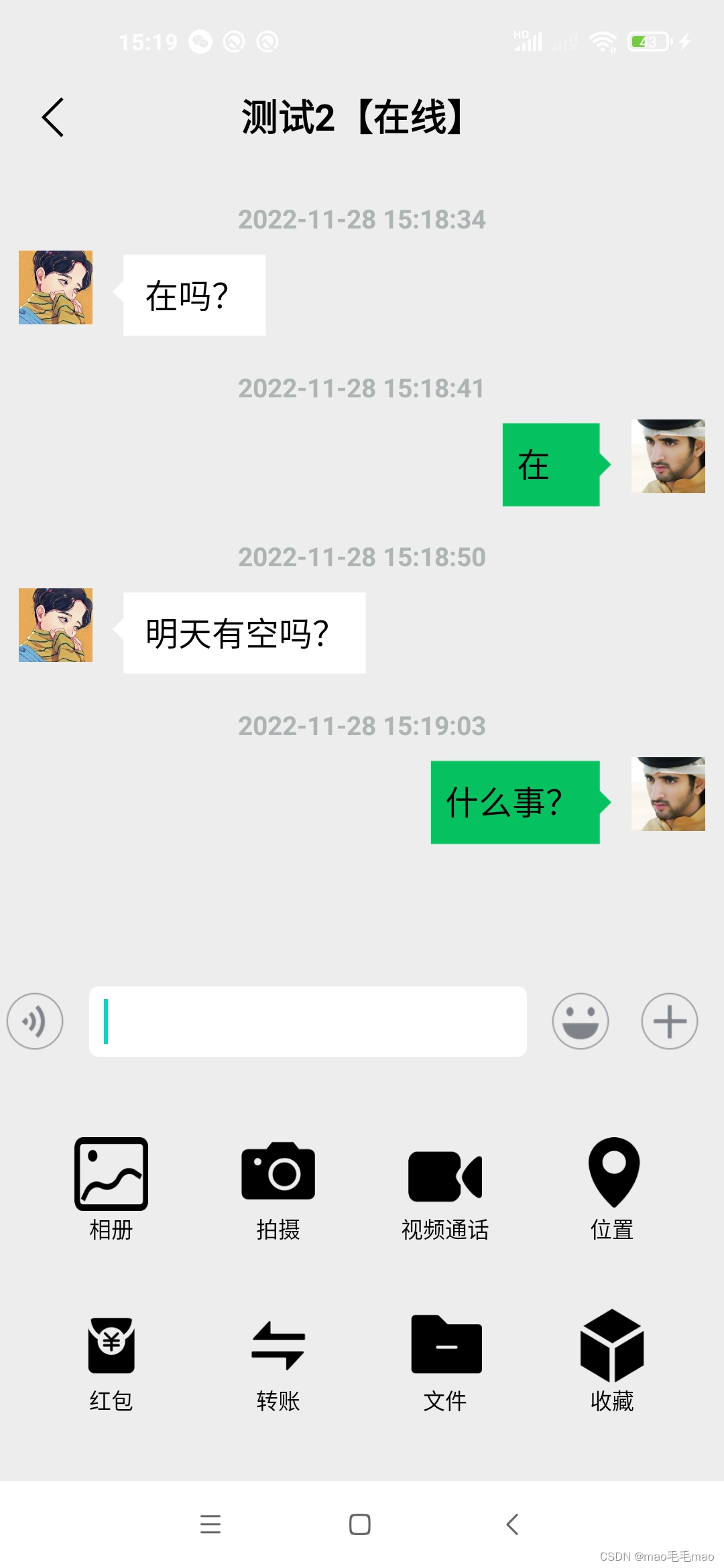

任务是跑起来了,但是出现了一个性能的问题:

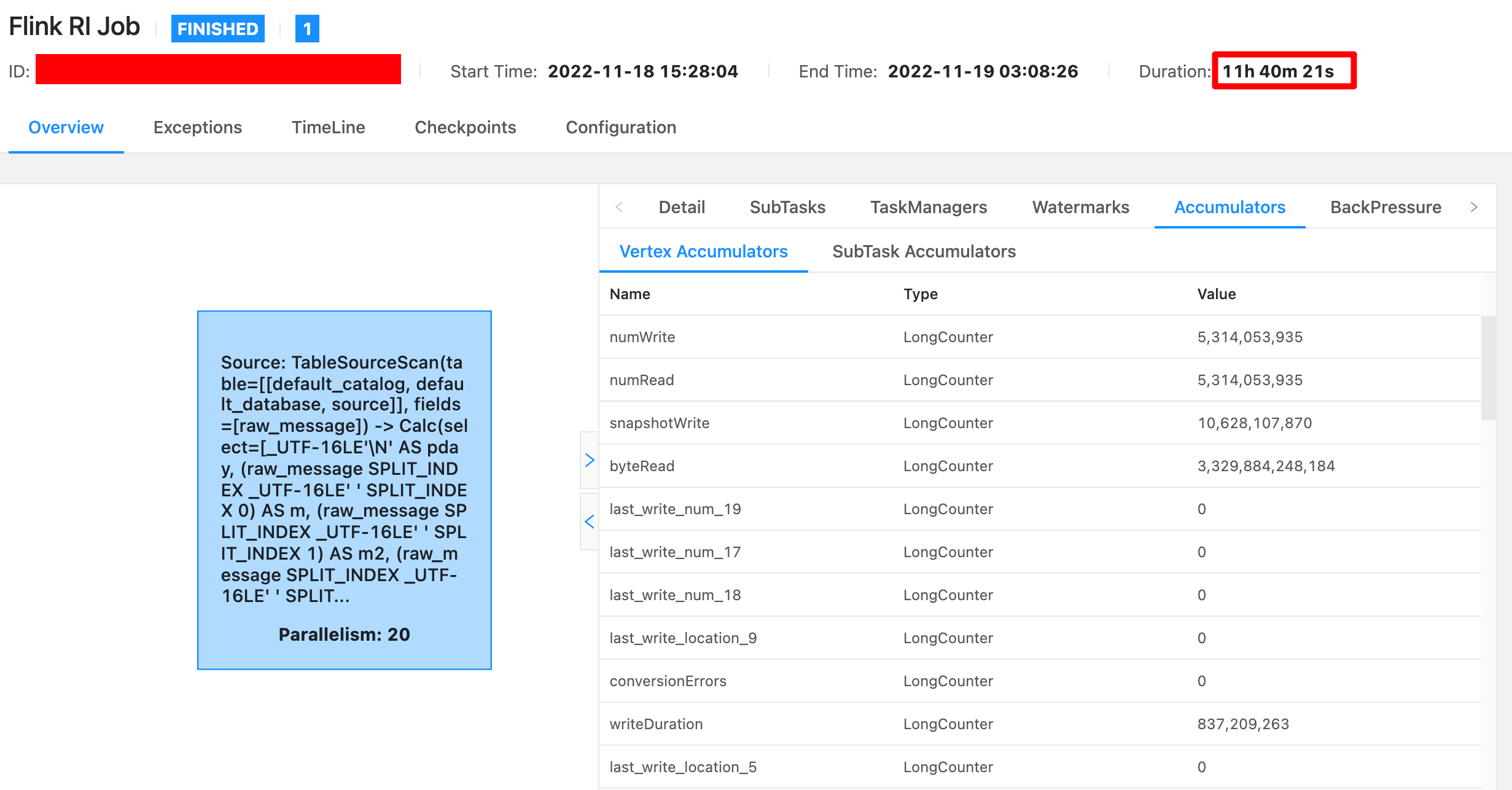

先看下job的运行情况:

job的消费速度:

20并行度下,每个taskmanager的内存为12G,每秒消费11.28万条数据,那每分钟处理的速度是676.89万条/min,每小时4.06亿条/小时

2032.766839793899649 GB 每小时平均

5793.385493412613869 GB 累计2.85小时

最终的结果

- 53.14亿条

- 数据量有:3.03TB

- 消费每条数据的平均大小是:626byte。

- 1G=1,073,741,824 bytes

最终整个job运行完,花了11个小时:

客户的要求是1小时内完成,但速度太慢了。。。

2. 理想化的最优方案

客户集群的资源是够的,所以我们不考虑资源问题,那既然这样,因为hdfs的文件总共有4000个,再有yarn最大资源分配是4(core/container),所以我们部署一个4000并行度的任务,它将运行的最快!!!

所以我将并行度设置为4000时将会有1000个taskmanager启动。等一下。1000个???我心疼jobmanager一秒钟先。

果然,任务还没调度完,就失败了。。。

所以理想有些简单,也没有银弹。

3. "PlanB"的解决方案

既然一个jobmanager不能管理这么多的taskmanager,那就降低taskmanager的数量。

。。。经过多次尝试之后,这里最终给出了方案B的设置:

并行度设置为500,taskmanager启动了125个。

任务最终处理时间缩短到:23分钟

*********************************************

nErrors | 0

nullErrors | 0

duplicateErrors | 0

conversionErrors | 0

otherErrors | 0

numWrite | 3164002700

byteWrite | 17123302298224

numRead | 3164002700

writeDuration | 545313434

byteRead | 1983716362408

readDuration | 539805057

snapshotWrite | 6328005400

*********************************************

2022-11-21 11:48:56 Start to stop flink job(3734d90205a13eff7cfa61b5c0c9686b)

2022-11-21 11:50:11 Success to stop flink job(3734d90205a13eff7cfa61b5c0c9686b)

2022-11-21 11:50:11 Flink process exit code is :0

四. 反思与迭代

针对上述的处理过程,发现几点值得思考和迭代:

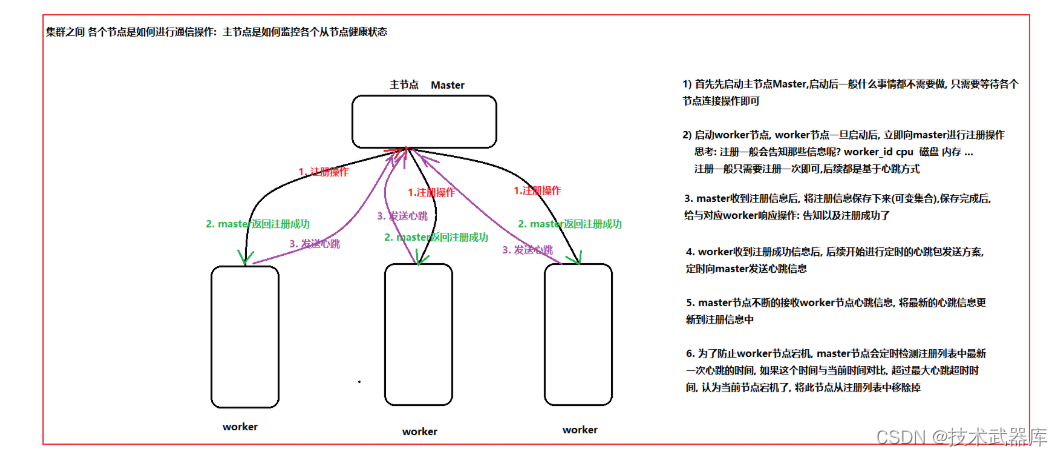

- 我们程序和yarn进行定时通讯,及时获取yarn中调度器的设置,然后动态设置最大核心数,充分利用资源的同时,也保证了程序的稳定;

- 我们在设置并行度时,需要考虑jobmanager能够协调的taskmanager的数量,不是靠尝试。

本文学到的经验是,设置125个taskmanager时,jobmanager是可以顶住压力的,但接下来可以分析分析flink的通讯相关的源码,以便能极大的发挥集群资源。

其次对于jobmanager管理这么多节点是否可以设置flink的高可用,增加job运行的稳定度

还有一个小细节

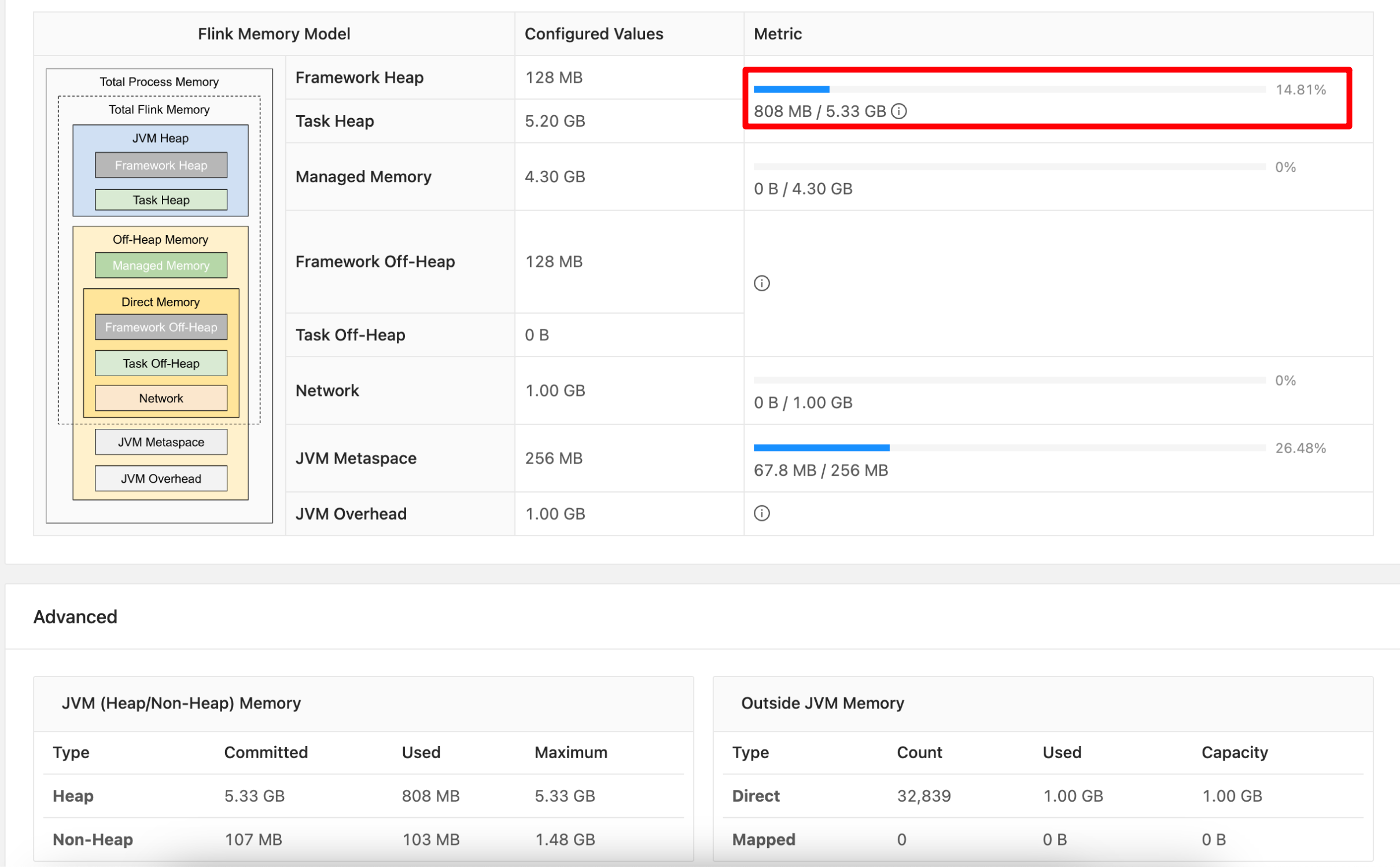

- taskmanager的内存使用有些浪费,如上图,并未充分利用内存资源,这点我们也需思考要如何优化。

仔细点我们也可以发现

- 当container设置的核心数最大为4时,numberOfTaskSlots的设置其实无效了。