将pg日志导入pg数据库的几种方法

文章目录

- 将pg日志导入pg数据库的几种方法

- 1 方法1:官方方法(fiel_fdw)

- 1.1 创建file_fdw数据源(在此之前需要安装pg的file_fdw扩展)

- 1.2 创建外部表并导入数据

- 2 方法2:用csvsql工具导入

- 2.1 pg csv log 表头准备

- 2.2 为 postgresql-2023-04-21_140000.csv 添加表头行(26列)

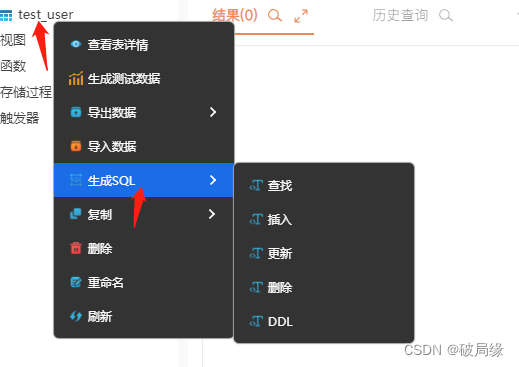

- 2.3 根据pg日志文件,输出创建表的SQL语句(用postgresql数据库格式),并用它创建表

- 2.4 导入数据

- 3 方法3:用awk方法导入

- 3.1 根据官方文档创建数据表

- 3.2 用csv日志文件生成SQL语法文件

- 3.3 导入SQL文件

- 4 方法4:最佳实践

- 4.1 创建表

- 4.2 添加字段说明(多此一举)

- 4.3 导入数据

- 4.4 直接给个脚本吧

- 5 最后

注意:我这里导入的pg日志是csv格式的,Postgresql 14.x日志的字段是26个,网上很多文章都是23个字段

1 方法1:官方方法(fiel_fdw)

参考:https://www.postgresql.org/docs/14/file-fdw.html

结论:好像只能一次一个文件,如何将多个日志文件导入到一个数据库中?out!

1.1 创建file_fdw数据源(在此之前需要安装pg的file_fdw扩展)

CREATE SERVER pg_csvlog_fdw FOREIGN DATA WRAPPER file_fdw;

1.2 创建外部表并导入数据

CREATE FOREIGN TABLE pg_csvlog_file_fdw (

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

backend_type text,

leader_pid integer,

query_id bigint

) SERVER pg_csvlog_fdw

OPTIONS ( filename '/usr/local/pgsql/data/log/postgresql-2023-04-21_130000.csv', format 'csv' );

# 你看,不能copy,有其他方法吗?我不会

pg_log=# copy "pg_csvlog_file_fdw" from '/usr/local/pgsql/data/log/postgresql-2023-04-21_160000.csv' with csv;

ERROR: cannot insert into foreign table "pg_csvlog_file_fdw"

- 查看刚创建的表结构:

pg_log=# \d pg_csvlog_file_fdw

Foreign table "public.pg_csvlog_file_fdw"

Column | Type | Collation | Nullable | Default | FDW options

------------------------+-----------------------------+-----------+----------+---------+-------------

log_time | timestamp(3) with time zone | | | |

user_name | text | | | |

database_name | text | | | |

process_id | integer | | | |

connection_from | text | | | |

session_id | text | | | |

session_line_num | bigint | | | |

command_tag | text | | | |

session_start_time | timestamp with time zone | | | |

virtual_transaction_id | text | | | |

transaction_id | bigint | | | |

error_severity | text | | | |

sql_state_code | text | | | |

message | text | | | |

detail | text | | | |

hint | text | | | |

internal_query | text | | | |

internal_query_pos | integer | | | |

context | text | | | |

query | text | | | |

query_pos | integer | | | |

location | text | | | |

application_name | text | | | |

backend_type | text | | | |

leader_pid | integer | | | |

query_id | bigint | | | |

Server: pg_csvlog_fdw

FDW options: (filename '/usr/local/pgsql/data/log/postgresql-2023-04-21_130000.csv', format 'csv')

2 方法2:用csvsql工具导入

参考:csvkit - 处理csv文件的瑞士军刀: https://segmentfault.com/a/1190000009179225

结论:用它创建的表,字段值可能被重新定义,比如transaction_id的值等于0(他会分析多条记录得出),他会把它当成boolean型,并在导入时直接把插入值改为false,与原值0就会不匹配,如果手动校正数据类型为bigint型,也不行,因为导入数据时,他依然会将插入的值改为false,此时导入会出错。

2.1 pg csv log 表头准备

log_time,user_name,database_name,process_id,connection_from,session_id,session_line_num,command_tag,session_start_time,virtual_transaction_id,transaction_id,error_severity,sql_state_code,message,detail,hint,internal_query,internal_query_pos,context,query,query_pos,location,application_name,backend_type,leader_pid,query_id

2.2 为 postgresql-2023-04-21_140000.csv 添加表头行(26列)

sed -i '1ilog_time,user_name,database_name,process_id,connection_from,session_id,session_line_num,command_tag,session_start_time,virtual_transaction_id,transaction_id,error_severity,sql_state_code,message,detail,hint,internal_query,internal_query_pos,context,query,query_pos,location,application_name,backend_type,leader_pid,query_id

' postgresql-2023-04-21_140000.csv

2.3 根据pg日志文件,输出创建表的SQL语句(用postgresql数据库格式),并用它创建表

^_^ kevin@TM1701-b38cbc23:~$ csvsql -i postgresql --tables pg_csvlog_csvsql /usr/local/pgsql/data/log/postgresql-2023-04-21_140000.csv

CREATE TABLE pg_csvlog (

log_time VARCHAR NOT NULL,

user_name VARCHAR,

database_name VARCHAR,

process_id DECIMAL NOT NULL,

connection_from VARCHAR,

session_id VARCHAR NOT NULL,

session_line_num DECIMAL NOT NULL,

command_tag VARCHAR,

session_start_time VARCHAR NOT NULL,

virtual_transaction_id VARCHAR,

transaction_id BOOLEAN NOT NULL,

error_severity VARCHAR NOT NULL,

sql_state_code VARCHAR NOT NULL,

message VARCHAR NOT NULL,

detail VARCHAR,

hint BOOLEAN,

internal_query BOOLEAN,

internal_query_pos BOOLEAN,

context BOOLEAN,

query VARCHAR,

query_pos BOOLEAN,

location BOOLEAN,

application_name VARCHAR,

backend_type VARCHAR NOT NULL,

leader_pid BOOLEAN,

query_id BOOLEAN NOT NULL

);

这里有个问题,他把【transaction_id】弄成了boolean型,在下面导入时也转换成boolean型了(true或false,不是原本的0或1),所以修改表结构也没用,讨厌,他本来是int型的,这个与sqlalchemy有关,我又不会弄

2.4 导入数据

csvsql --db postgresql://postgres:POSTGRES@192.168.11.145:5432/pg_log --tables pg_csvlog_csvsql --insert /usr/local/pgsql/data/log/postgresql-2023-04-21_140000.csv --no-create

可以导入多个日志文件,但每个文件都要重复执行添加表头命令

3 方法3:用awk方法导入

结论:这个方法也是OK的。创建表,用awk生成sql语法文件,然后导入

3.1 根据官方文档创建数据表

CREATE TABLE pg_csvlog_awk (

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

backend_type text,

leader_pid integer,

query_id bigint,

PRIMARY KEY (session_id, session_line_num)

);

3.2 用csv日志文件生成SQL语法文件

awk -F, '{ print "insert into pg_csvlog_awk ( log_time, user_name, database_name, process_id, connection_from, session_id, session_line_num, command_tag, session_start_time, virtual_transaction_id, transaction_id, error_severity, sql_state_code, message, detail, hint, internal_query, internal_query_pos, context, query, query_pos, location, application_name, backend_type, leader_pid, query_id ) values" , "(" "'\''"$1"'\''" "," "'\''"$2"'\''" "," "'\''"$3"'\''" "," "'\''"$4"'\''" "," "'\''"$5"'\''" "," "'\''"$6"'\''" "," "'\''"$7"'\''" "," "'\''"$8"'\''" "," "'\''"$9"'\''" "," "'\''"$10"'\''" "," "'\''"$11"'\''" "," "'\''"$12"'\''" "," "'\''"$13"'\''" "," "'\''"$14"'\''" "," "'\''"$15"'\''" "," "'\''"$16"'\''" "," "'\''"$17"'\''" "," "'\''"$18"'\''" "," "'\''"$19"'\''" "," "'\''"$20"'\''" "," "'\''"$21"'\''" "," "'\''"$22"'\''" "," "'\''"$23"'\''" "," "'\''"$24"'\''" "," "'\''"$25"'\''" "," "'\''"$26"'\''" ")" ";" }' /usr/local/pgsql/data/log/postgresql-2023-04-21_140000.csv > pg_csvlog_awk.sql

用\换行容易理解:

awk -F , '{ \

print "insert into pg_csvlog_awk ( \

log_time, user_name, database_name, process_id, connection_from, session_id, session_line_num, command_tag, session_start_time, virtual_transaction_id, transaction_id, error_severity, sql_state_code, message, detail, hint, internal_query, internal_query_pos, context, query, query_pos, location, application_name, backend_type, leader_pid, query_id \

) values" , "(" \

"'\''"$1"'\''" "," "'\''"$2"'\''" "," "'\''"$3"'\''" "," "'\''"$4"'\''" "," "'\''"$5"'\''" "," "'\''"$6"'\''" "," "'\''"$7"'\''" "," "'\''"$8"'\''" "," "'\''"$9"'\''" "," "'\''"$10"'\''" "," "'\''"$11"'\''" "," "'\''"$12"'\''" "," "'\''"$13"'\''" "," "'\''"$14"'\''" "," "'\''"$15"'\''" "," "'\''"$16"'\''" "," "'\''"$17"'\''" "," "'\''"$18"'\''" "," "'\''"$19"'\''" "," "'\''"$20"'\''" "," "'\''"$21"'\''" "," "'\''"$22"'\''" "," "'\''"$23"'\''" "," "'\''"$24"'\''" "," "'\''"$25"'\''" "," "'\''"$26"'\''" \

")" ";" \

}' /usr/local/pgsql/data/log/postgresql-2023-04-21_140000.csv > pg_csvlog_awk.sql

3.3 导入SQL文件

psql pg_csvlog_awk < pg_csvlog_awk.sql

4 方法4:最佳实践

根据官方文档手动创建表结构,然后用copy方式导入日志文件

4.1 创建表

CREATE TABLE IF NOT EXISTS pg_csvlog_shell (

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

backend_type text,

leader_pid integer,

query_id bigint,

PRIMARY KEY (session_id, session_line_num)

);

4.2 添加字段说明(多此一举)

comment on column pg_csvlog_shell.log_time is '日志时间,带毫秒的时间戳';

comment on column pg_csvlog_shell.user_name is '当前登录数据库的用户名';

comment on column pg_csvlog_shell.database_name is '数据库名';

comment on column pg_csvlog_shell.process_id is '进程ID';

comment on column pg_csvlog_shell.connection_from is '客户端主机:端口号';

comment on column pg_csvlog_shell.session_id is '会话ID 由后台进程启动时间和PID组成';

comment on column pg_csvlog_shell.session_line_num is '每个会话的行号,类似history命令';

comment on column pg_csvlog_shell.command_tag is '命令标签';

comment on column pg_csvlog_shell.session_start_time is '会话开始时间';

comment on column pg_csvlog_shell.virtual_transaction_id is '虚拟事务ID';

comment on column pg_csvlog_shell.transaction_id is '事务ID';

comment on column pg_csvlog_shell.error_severity is '错误等级';

comment on column pg_csvlog_shell.sql_state_code is 'SQLSTATE 代码';

comment on column pg_csvlog_shell.message is '消息 SQL语句';

comment on column pg_csvlog_shell.detail is '错误消息详情';

comment on column pg_csvlog_shell.hint is '提示';

comment on column pg_csvlog_shell.internal_query is '导致错误的内部查询(如果有)';

comment on column pg_csvlog_shell.internal_query_pos is '错误位置所在的字符计数';

comment on column pg_csvlog_shell.context is '错误上下文';

comment on column pg_csvlog_shell.query is '导致错误的用户查询(如果有且被log_min_error_statement启用)';

comment on column pg_csvlog_shell.query_pos is '错误位置所在的字符计数';

comment on column pg_csvlog_shell.location is '在 PostgreSQL 源代码中错误的位置(如果log_error_verbosity被设置为verbose)';

comment on column pg_csvlog_shell.application_name is '应用名';

comment on column pg_csvlog_shell.backend_type is '后端类型(新加)';

comment on column pg_csvlog_shell.leader_pid is 'Leader进程ID(新加)';

comment on column pg_csvlog_shell.query_id is '查询ID(新加)';

4.3 导入数据

pg_log=# copy "pg_csvlog_shell" from '/usr/local/pgsql/data/log/postgresql-2023-04-21_160000.csv' with csv;

COPY 1165

4.4 直接给个脚本吧

[postgres@v-192-168-11-145-pg pg]$ cat import_pg_log.sh

#!/bin/bash

echo

echo "用法: $0 <csv日志清单文件> #-- 默认导入今天所有的日志"

echo

LOG_PATH="/usr/local/pgsql/data/log"

LOG_FILE_LIST="pg_log.list"

LOG_ANALYSE_DB="pg_ana_log"

LOG_ANALYSE_TABLE="pg_csvlog_shell"

TIME=${TIME:-`date +%Y-%m-%dT%H:%M:%S`}

TIME_START=${TIME}

DATE_DAY=`date -d "${TIME}" +%Y%m%d`

LOG_ANALYSE_TABLE="${LOG_ANALYSE_TABLE}_${DATE_DAY}"

if [[ $# == 0 ]]; then

ls -l ${LOG_PATH}/postgresql-$(date -d "${TIME}" +%Y-%m-%d)_*csv | awk '{print $9}' > ${LOG_FILE_LIST}

else

LOG_FILE_LIST=$1

fi

echo ""

echo "##################################################"

echo "说明:"

echo "数据库名:${LOG_ANALYSE_DB}"

echo "数据库名:${LOG_ANALYSE_TABLE}"

echo "数据库日志文件路径:${LOG_PATH}"

echo "数据库日志文件清单:"

cat ${LOG_FILE_LIST}

echo ""

read -t 10 -p '按任意键继续......'

echo "##################################################"

echo "创建库表"

/usr/local/pgsql/bin/psql << EOF

DROP DATABASE "${LOG_ANALYSE_DB}";

CREATE DATABASE "${LOG_ANALYSE_DB}";

\c "${LOG_ANALYSE_DB}"

CREATE TABLE IF NOT EXISTS "${LOG_ANALYSE_TABLE}" (

log_time timestamp(3) with time zone,

user_name text,

database_name text,

process_id integer,

connection_from text,

session_id text,

session_line_num bigint,

command_tag text,

session_start_time timestamp with time zone,

virtual_transaction_id text,

transaction_id bigint,

error_severity text,

sql_state_code text,

message text,

detail text,

hint text,

internal_query text,

internal_query_pos integer,

context text,

query text,

query_pos integer,

location text,

application_name text,

backend_type text,

leader_pid integer,

query_id bigint,

PRIMARY KEY (session_id, session_line_num)

);

comment on column ${LOG_ANALYSE_TABLE}.log_time is '日志时间,带毫秒的时间戳';

comment on column ${LOG_ANALYSE_TABLE}.user_name is '当前登录数据库的用户名';

comment on column ${LOG_ANALYSE_TABLE}.database_name is '数据库名';

comment on column ${LOG_ANALYSE_TABLE}.process_id is '进程ID';

comment on column ${LOG_ANALYSE_TABLE}.connection_from is '客户端主机:端口号';

comment on column ${LOG_ANALYSE_TABLE}.session_id is '会话ID 由后台进程启动时间和PID组成';

comment on column ${LOG_ANALYSE_TABLE}.session_line_num is '每个会话的行号,类似history命令';

comment on column ${LOG_ANALYSE_TABLE}.command_tag is '命令标签';

comment on column ${LOG_ANALYSE_TABLE}.session_start_time is '会话开始时间';

comment on column ${LOG_ANALYSE_TABLE}.virtual_transaction_id is '虚拟事务ID';

comment on column ${LOG_ANALYSE_TABLE}.transaction_id is '事务ID';

comment on column ${LOG_ANALYSE_TABLE}.error_severity is '错误等级';

comment on column ${LOG_ANALYSE_TABLE}.sql_state_code is 'SQLSTATE 代码';

comment on column ${LOG_ANALYSE_TABLE}.message is '消息 SQL语句';

comment on column ${LOG_ANALYSE_TABLE}.detail is '错误消息详情';

comment on column ${LOG_ANALYSE_TABLE}.hint is '提示';

comment on column ${LOG_ANALYSE_TABLE}.internal_query is '导致错误的内部查询(如果有)';

comment on column ${LOG_ANALYSE_TABLE}.internal_query_pos is '错误位置所在的字符计数';

comment on column ${LOG_ANALYSE_TABLE}.context is '错误上下文';

comment on column ${LOG_ANALYSE_TABLE}.query is '导致错误的用户查询(如果有且被log_min_error_statement启用)';

comment on column ${LOG_ANALYSE_TABLE}.query_pos is '错误位置所在的字符计数';

comment on column ${LOG_ANALYSE_TABLE}.location is '在 PostgreSQL 源代码中错误的位置(如果log_error_verbosity被设置为verbose)';

comment on column ${LOG_ANALYSE_TABLE}.application_name is '应用名';

comment on column ${LOG_ANALYSE_TABLE}.backend_type is '后端类型(新加)';

comment on column ${LOG_ANALYSE_TABLE}.leader_pid is 'Leader进程ID(新加)';

comment on column ${LOG_ANALYSE_TABLE}.query_id is '查询ID(新加)';

\q

EOF

read -t 20 -p '按任意键继续......'

echo "##################################################"

echo "导入csv"

while read file

do

echo "----- ${file} -----"

/usr/local/pgsql/bin/psql << EOF

\c ${LOG_ANALYSE_DB}

copy ${LOG_ANALYSE_TABLE} from '${file}' with csv;

\q

EOF

done < ${LOG_FILE_LIST}

echo ""

echo "OK !"

5 最后

爱你