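Elasticsearch (ES) Usage Summary

- 1. List all ES indices

- 2. Query documents by index

- 3. View the mapping of a given index

- 4. Default hit total capped at 10,000

- 5. Delete documents from a given index

- 6. Delete all data, including the index

- 7. Set the result window size

- 8. Simple Logstash configuration

- Logstash configuration:

- Logstash console output

- 9. Filebeat configuration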

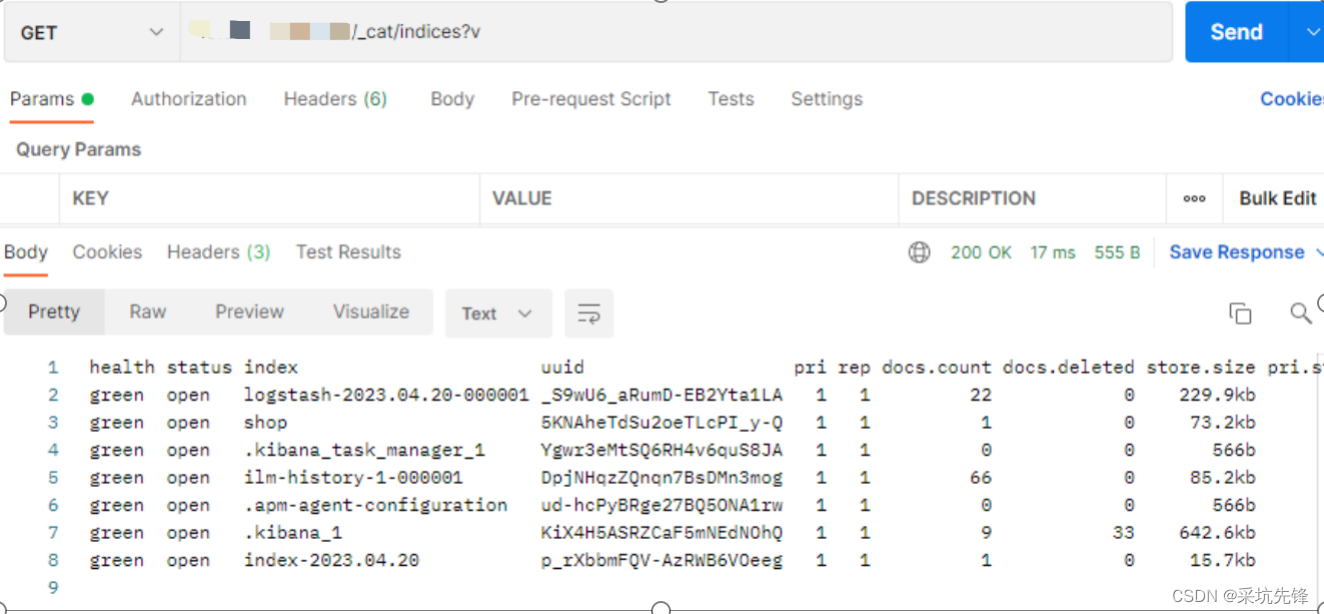

1. List all ES indices

localhost:9200/_cat/indices?v

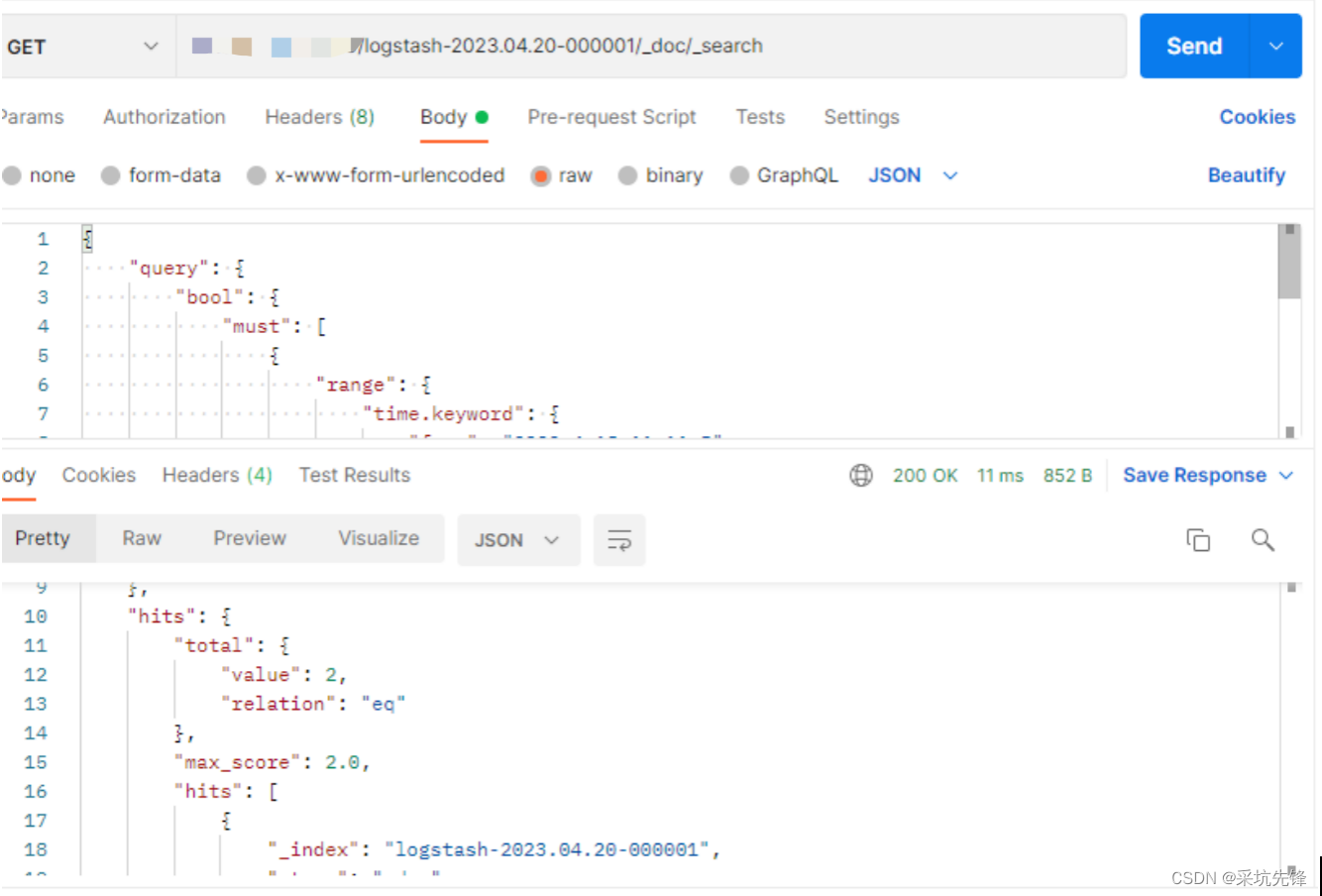

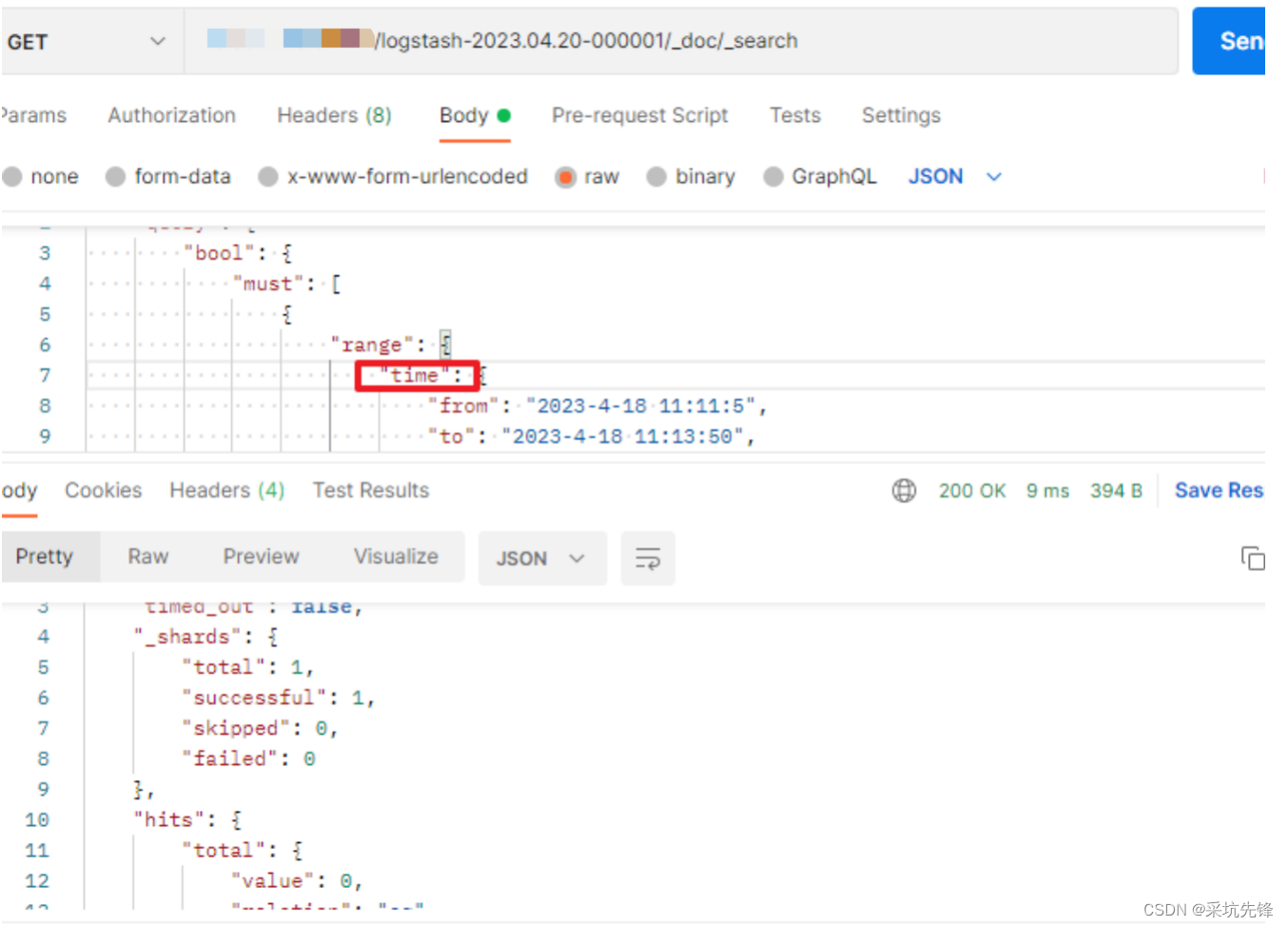
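The same call can be sketched in Python. This is a minimal sketch that assumes an unsecured node at localhost:9200, as in the URL above. The helper names `cat_indices_url` and `list_indices` are made up for illustration, and `format=json` is used instead of `v` so the response is easy to parse.

```python
import json
import urllib.parse
import urllib.request


def cat_indices_url(base_url="http://localhost:9200"):
    """Build the _cat/indices URL, asking for JSON instead of the text table."""
    query = urllib.parse.urlencode({"format": "json"})
    return f"{base_url.rstrip('/')}/_cat/indices?{query}"


def list_indices(base_url="http://localhost:9200"):
    """Return the index names reported by the node (performs an HTTP GET)."""
    with urllib.request.urlopen(cat_indices_url(base_url)) as resp:
        rows = json.load(resp)
    # Each row of the JSON output carries the index name under "index".
    return [row["index"] for row in rows]


print(cat_indices_url())
```

Calling `list_indices()` needs a reachable node; `cat_indices_url()` only builds the string.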

2. Query documents by index

localhost:9200/{index}/_doc/_search

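About the parameters: when a range query targets a string field, append .keyword to the field name; otherwise the query returns no results. A range on a keyword field compares values as strings (lexicographically), so the bounds need the same format as the stored values.

Sample query body: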

{
  "query": {
    "bool": {
      "must": [
        {
          "range": {
            "time.keyword": {
              "from": "2023-4-18 11:11:5",
              "to": "2023-4-18 11:13:50",
              "include_lower": true,
              "include_upper": true,
              "boost": 2.0
            }
          }
        }
      ],
      "adjust_pure_negative": true,
      "boost": 1.0
    }
  }
}

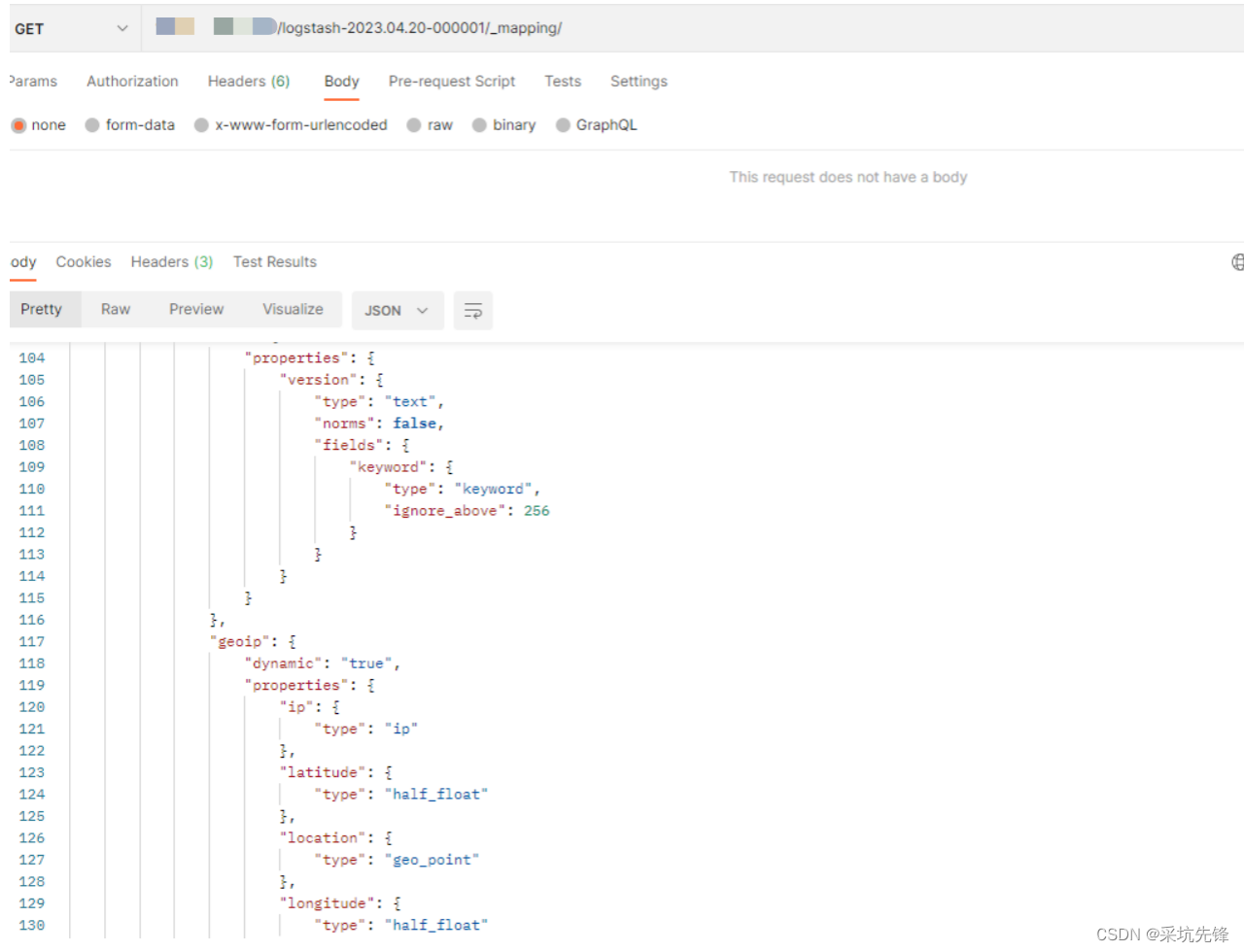
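A query body like this can also be built in code. The sketch below is one possible way, not the original author's implementation: `keyword_range_query` is a hypothetical helper, and it uses `gte`/`lte`, which express the same inclusive bounds as `from`/`to` with `include_lower` and `include_upper` set to true. The zero-padded timestamps are an assumption about how the values are stored.

```python
import json


def keyword_range_query(field, start, end):
    """Build a bool/must range query on the .keyword sub-field of `field`."""
    return {
        "query": {
            "bool": {
                "must": [
                    {"range": {f"{field}.keyword": {"gte": start, "lte": end}}}
                ]
            }
        }
    }


body = keyword_range_query("time", "2023-04-18 11:11:05", "2023-04-18 11:13:50")
print(json.dumps(body, indent=2))

# Keyword ranges compare strings, not dates. Zero-padded values sort in
# chronological order, while an unpadded month sorts after a padded one.
assert "2023-04-18 11:11:05" < "2023-04-18 11:13:50"
assert "2023-4-18 11:11:5" > "2023-04-19 11:12:22"
```

The last assertion shows why mixing padded and unpadded timestamps in a keyword range can silently return nothing.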

3. View the mapping of a given index

localhost:9200/{index}/_mapping

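4. Default hit total capped at 10,000

By default, a search reports at most 10,000 as the total hit count. Setting TrackTotalHits to true (track_total_hits in the REST request body) makes ES count every matching document and report the exact total.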
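As a rough illustration of the REST side, the sketch below builds a search body with `track_total_hits` enabled and reads the total from an ES 7.x style response, where `hits.total` is an object with `value` and `relation`. Both helper names are hypothetical, and the response dictionaries in the usage lines are hand-written stand-ins, not real server output.

```python
def search_body_with_exact_total(query=None, size=10):
    """Build a search body that asks ES for an exact total hit count."""
    return {
        "query": query if query is not None else {"match_all": {}},
        "size": size,
        "track_total_hits": True,
    }


def exact_total(response):
    """Return the hit count if ES reports it as exact ("eq"), else None."""
    total = response["hits"]["total"]
    return total["value"] if total["relation"] == "eq" else None


body = search_body_with_exact_total(size=0)
print(body)

# Hand-written examples of the two shapes the total can take.
capped = {"hits": {"total": {"value": 10000, "relation": "gte"}}}
exact = {"hits": {"total": {"value": 25000, "relation": "eq"}}}
print(exact_total(capped), exact_total(exact))
```

A relation of `gte` means the reported value is only a lower bound, which is what the default 10,000 cap produces.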

5. Delete documents from a given index

localhost:9200/{index}/_delete_by_query

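Note that the index itself stays valid and only the documents are deleted. Documents are first marked as deleted rather than removed immediately, so resource usage (disk space in particular) can temporarily be higher than before. They are physically removed when ES runs a segment merge.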

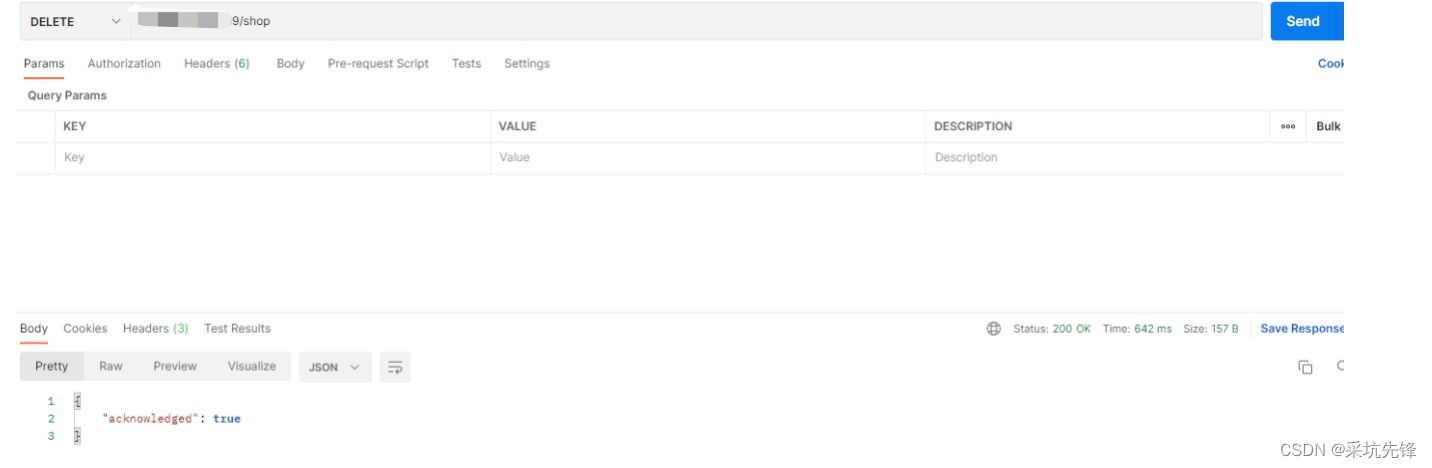
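The request for this endpoint can be sketched with the Python standard library. The example only constructs the request; nothing is sent unless `urlopen` is called. The helper name, the index name `myindex-demo`, and the `match_all` default are assumptions for illustration.

```python
import json
import urllib.request


def delete_by_query_request(index, query=None, base_url="http://localhost:9200"):
    """Build (but do not send) a POST request for {index}/_delete_by_query."""
    body = {"query": query if query is not None else {"match_all": {}}}
    return urllib.request.Request(
        url=f"{base_url}/{index}/_delete_by_query",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = delete_by_query_request("myindex-demo")
print(req.get_method(), req.full_url)
# To actually run the deletion: urllib.request.urlopen(req)
```

With the `match_all` default every document in the index is marked for deletion, so a narrower query is usually the safer choice.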

6. Delete all data, including the index

# Method: DELETE

localhost:9200/{index}

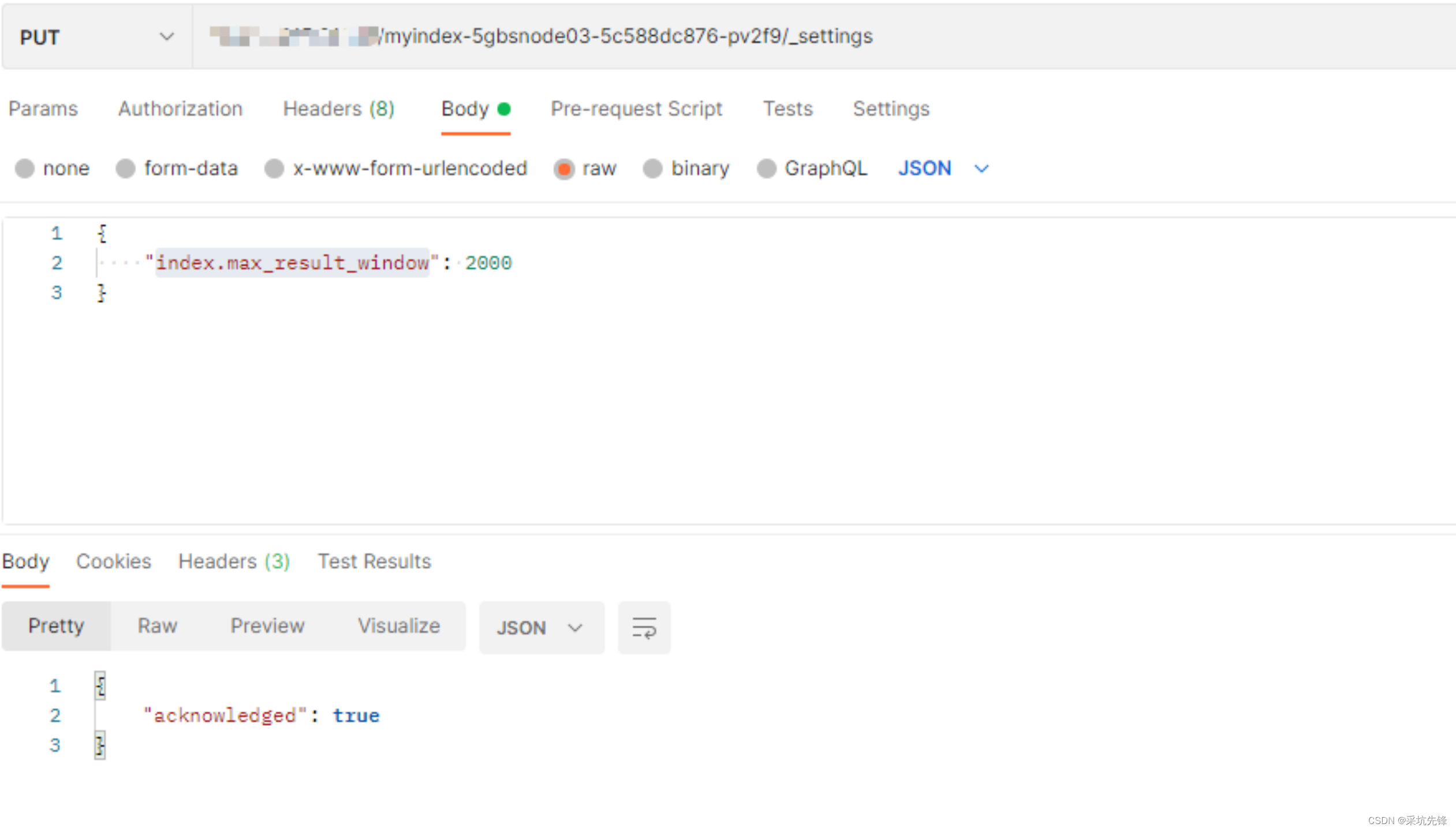

7. Set the result window size

localhost:9200/{index}/_settings

# Parameters (request body, sent with PUT)

# {"index.max_result_window": 20000}
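A minimal sketch of that settings update in Python, assuming an unsecured node at localhost:9200. The helper name and the index name `myindex-demo` are hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request


def max_result_window_request(index, window, base_url="http://localhost:9200"):
    """Build (but do not send) a PUT request that updates index.max_result_window."""
    body = {"index.max_result_window": window}
    return urllib.request.Request(
        url=f"{base_url}/{index}/_settings",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


req = max_result_window_request("myindex-demo", 20000)
print(req.get_method(), req.full_url, req.data.decode("utf-8"))
# To apply the setting: urllib.request.urlopen(req)
```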

The error raised when the window size is exceeded looks like this:

org.springframework.data.elasticsearch.UncategorizedElasticsearchException: Elasticsearch exception [type=search_phase_execution_exception, reason=all shards failed];

nested exception is ElasticsearchStatusException[Elasticsearch exception [type=search_phase_execution_exception, reason=all shards failed]];

nested: ElasticsearchException[Elasticsearch exception [type=illegal_argument_exception, reason=Result window is too large, from + size must be less than or equal to: [20000] but was [200000]. See the scroll api for a more efficient way to request large data sets. This limit can be set by changing the [index.max_result_window] index level setting.]];

nested: ElasticsearchException[Elasticsearch exception [type=illegal_argument_exception, reason=Result window is too large, from + size must be less than or equal to: [20000] but was [200000]. See the scroll api for a more efficient way to request large data sets. This limit can be set by changing the [index.max_result_window] index level setting.]];
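The condition in this error message is simply `from + size <= index.max_result_window`. A client-side guard along these lines can catch an oversized page before the request reaches ES. This is a sketch with a hypothetical helper name; the default of 10000 is the ES default for `index.max_result_window`.

```python
def check_result_window(from_, size, max_result_window=10000):
    """Raise ValueError when from + size exceeds the index's result window."""
    requested = from_ + size
    if requested > max_result_window:
        raise ValueError(
            f"from + size must be <= {max_result_window} but was {requested}"
        )
    return requested


# A page that fits a window raised to 20000.
print(check_result_window(0, 20000, max_result_window=20000))

# The request from the error above: 200000 results against a 20000 window.
try:
    check_result_window(0, 200000, max_result_window=20000)
except ValueError as exc:
    print("rejected:", exc)
```

For result sets well beyond the window, the error message itself points to the scroll API rather than raising the limit further.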

8. Simple Logstash configuration

Logstash configuration:

input { beats { port => 5044 } }

filter {
  grok {
    match => {
      "message" => "%{TIMESTAMP_ISO8601:time}%{GREEDYDATA:detailMessage}"
      # "message" => "%{TIMESTAMP_ISO8601:time}\[%{LOGLEVEL:loglevel}\]\[%{DATA:podname}\] %{GREEDYDATA:Message}"
    }
  }
}

output {
  # Dynamic index name syntax: index => "myindex-%{[field][subfield]}"
  # Use the Logstash console output to inspect the nested field structure
  elasticsearch {
    hosts => ["http://elasticsearch-master:9200"]
    index => "myindex-%{[host][name]}"
  }
  stdout { codec => rubydebug }
}
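To see what the active grok pattern extracts, the split can be approximated with a plain regular expression. This is only a rough stand-in: the regex covers the `YYYY-MM-DD HH:MM:SS` shape seen in this document's logs, not everything `TIMESTAMP_ISO8601` accepts, and `parse_line` is a hypothetical helper. The sample line is the `message` value from the console output in this document.

```python
import re

# Approximation of %{TIMESTAMP_ISO8601:time}%{GREEDYDATA:detailMessage}.
LINE_RE = re.compile(
    r"(?P<time>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2})(?P<detailMessage>.*)"
)


def parse_line(message):
    """Split a log line into time and detailMessage, or return None on no match."""
    match = LINE_RE.search(message)
    return match.groupdict() if match else None


sample = "2023-04-19 11:12:22[5ggnb-32][ducp-1][DUCP] Send msg to MAC.msgCode:,msgLen:136"
fields = parse_line(sample)
print(fields["time"])
print(fields["detailMessage"])
```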

Logstash console output

output {
  stdout { codec => rubydebug }
}

{
    "agent" => {
        "version" => "7.6.2",
        "ephemeral_id" => "975b78d9-9901-4387-bef8-ef364853f8a3",
        "type" => "filebeat",
        "id" => "69dc1f45-6eff-440f-b75b-564bef25323a",
        "hostname" => "5gbsnode03-5c588dc876-pv2f9"
    },
    "log" => {
        "file" => {
            "path" => "/home/log/test-01.log"
        },
        "offset" => 0
    },
    "@version" => "1",
    "detailMessage" => "[5ggnb-32][ducp-1][DUCP] Send msg to MAC.msgCode:,msgLen:136",
    "message" => "2023-04-19 11:12:22[5ggnb-32][ducp-1][DUCP] Send msg to MAC.msgCode:,msgLen:136",
    "ecs" => {
        "version" => "1.4.0"
    },
    "input" => {
        "type" => "log"
    },
    "time" => "2023-04-19 11:12:22",
    "host" => {
        "name" => "5gbsnode03-5c588dc876-pv2f9"
    },
    "@timestamp" => 2023-04-23T04:44:48.102Z,
    "tags" => [
        [0] "beats_input_codec_plain_applied"
    ]
}
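The nested structure in this output is what the dynamic index name `myindex-%{[host][name]}` refers to. The sketch below mimics that field-reference lookup in Python to show which index the event would land in. It is an illustration of the idea, not how Logstash implements it, and `resolve_index` is a hypothetical helper that handles only the bracketed `[a][b]` form.

```python
import re

# Matches %{[a][b]...} and captures the bracketed path.
FIELD_REF = re.compile(r"%\{((?:\[[^\]]+\])+)\}")


def resolve_index(template, event):
    """Replace each %{[a][b]} reference with the nested value from `event`."""
    def lookup(match):
        value = event
        for key in re.findall(r"\[([^\]]+)\]", match.group(1)):
            value = value[key]  # walk one nesting level per bracketed key
        return str(value)

    return FIELD_REF.sub(lookup, template)


# Only the fields needed for the lookup, copied from the console output.
event = {"host": {"name": "5gbsnode03-5c588dc876-pv2f9"}}
print(resolve_index("myindex-%{[host][name]}", event))
```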

9. Filebeat configuration

filebeat.inputs:
- type: log
  #id: my-filestream-id
  paths:
    - /home/log/*.log
  # multiline.pattern: '\n'
  # multiline.negate: true
  # multiline.match: before
  tail_files: true
output.logstash:
  enabled: true
  host: '${NODE_NAME}'
  # The commented-out parts can be enabled to send data directly to ES
  #hosts: '${ELASTICSEARCH_HOSTS:elasticsearch-master:9200}'
  hosts: 'logstash-logstash-headless:5044'
  #codec.format:
    #string: '{"@timestamp":%{[@timestamp]},"message":%{[message]},"host":%{[host.name]}}'
    #pretty: true
  # index: 'index-%{+yyyy.MM.dd}'
  codec.json:
    pretty: false
  keep_alive: 10s
xpack.monitoring:
  enabled: false
#setup.template.enabled: true
#setup.template.name: 'myfile'
#setup.template.pattern: 'index-*'
#setup.template.overwrite: true
#setup.ilm.enabled: false