目录

简介

原理

使用场景

使用限制

硬件配置

部署

在安装TiDB的时候部署

扩容部署

操作

管理CDC

管理工具

查看状态

创建同步任务

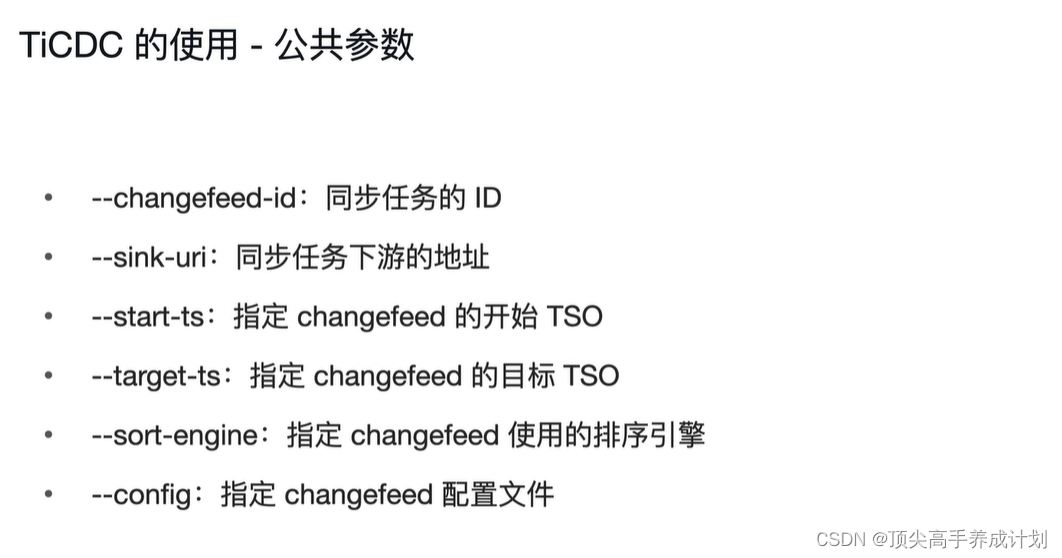

公共参数

CDC任务同步到MySQL实战

同步命令

查看所有的同步任务

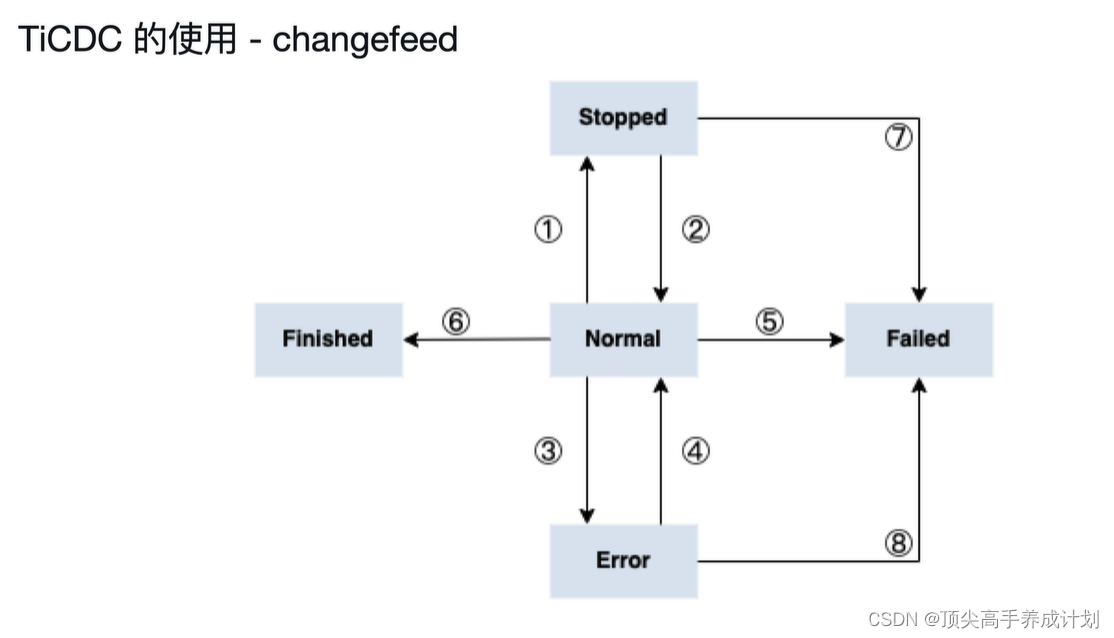

同步任务的状态

管理同步任务

查看一个同步信息的具体情况

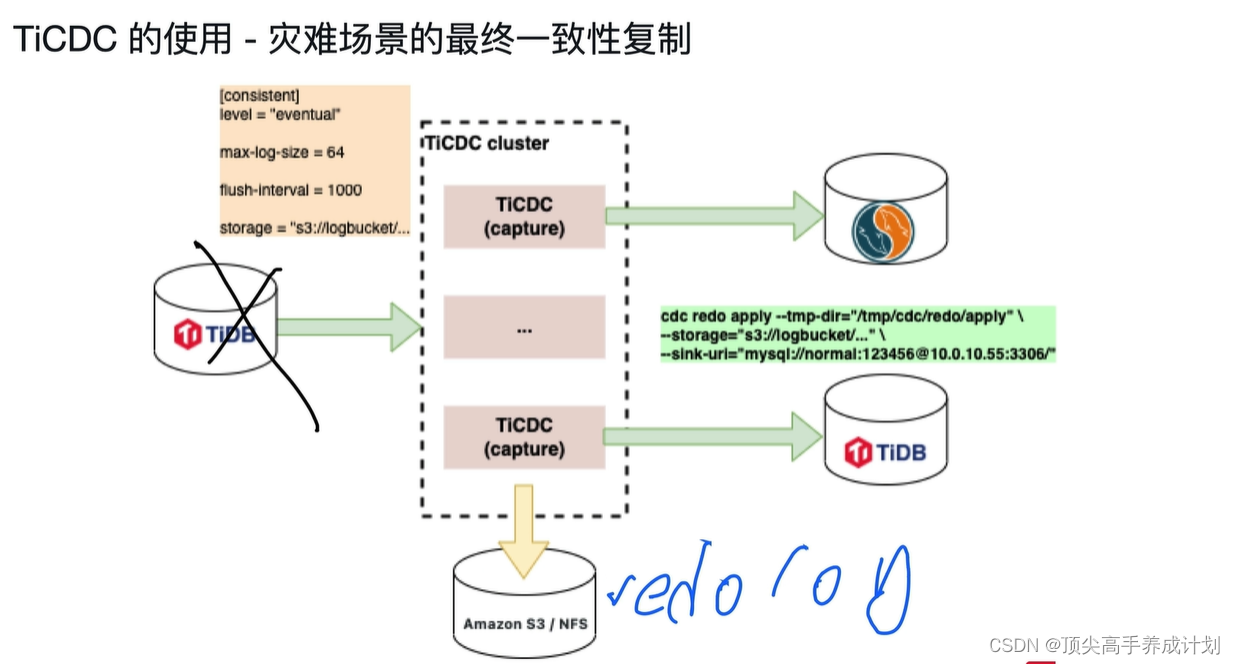

容灾最终一致性复制

总结

Introduction

This post walks through operating TiCDC.

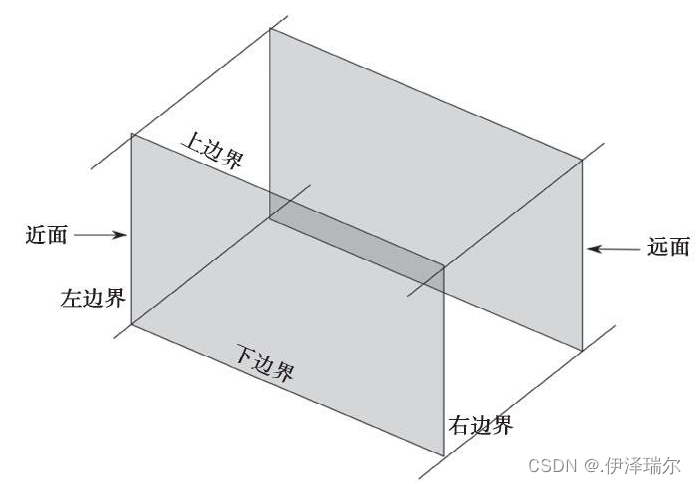

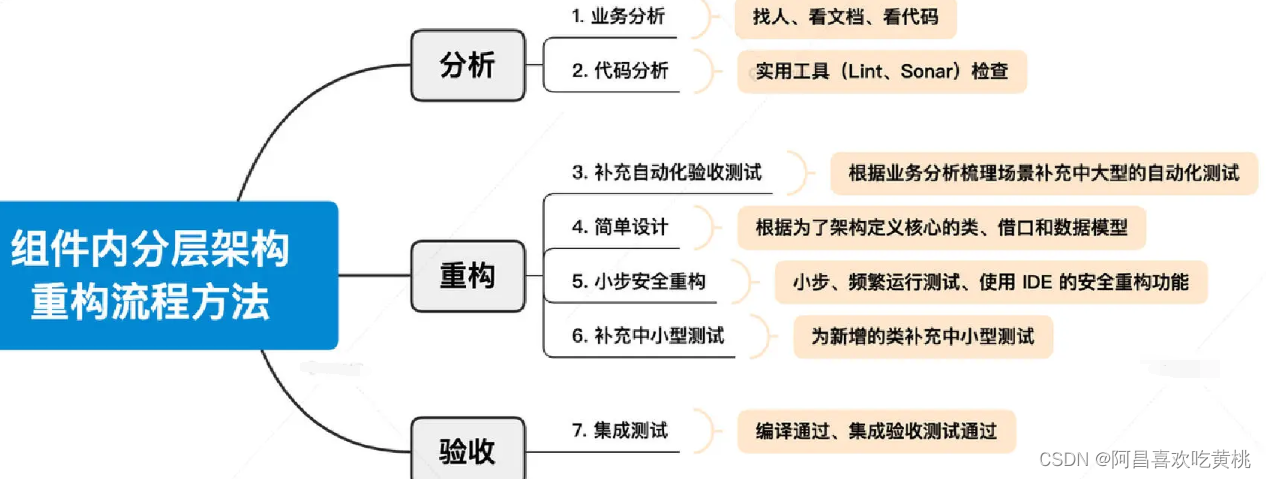

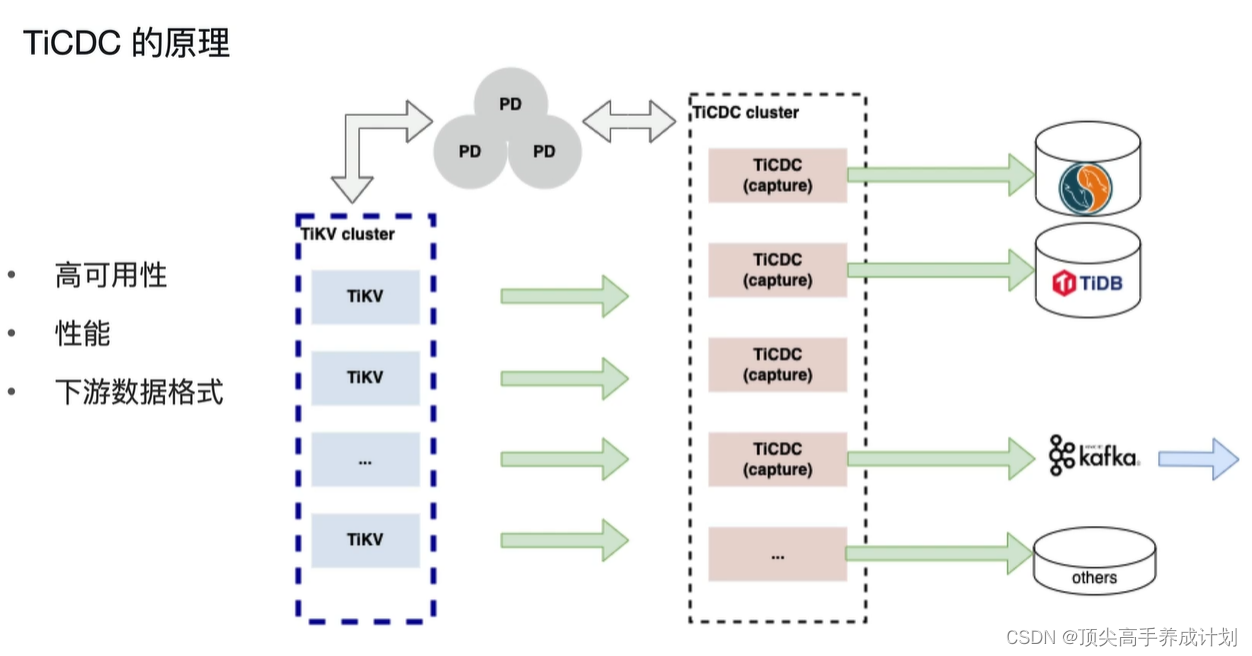

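How it works

- TiCDC reads the change logs produced by TiKV. Each TiCDC node (a capture) pulls change logs from multiple TiKV nodes and sorts them. One capture is elected owner (the "master" node). The owner decides which tables each capture replicates, and each capture then writes the sorted changes for its tables to the downstream.

- Replication is asynchronous, so the downstream lags slightly behind the upstream.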
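The sort-then-merge step can be pictured with a toy shell example. It is only an analogy, not how TiCDC is implemented. Each file stands in for the changes pulled from one TiKV node, with one hypothetical `<commit_ts> <change>` record per line. Each source is sorted by commit timestamp, and `sort -m` then merges the sorted sources into a single stream ordered by commit timestamp.

```shell
#!/usr/bin/env bash
# Toy analogy only: TiCDC does not work on text files. The record format
# "<commit_ts> <change>" and the file names are hypothetical.

# merge_by_commit_ts FILE...: sort each source by commit_ts, then merge them.
merge_by_commit_ts() {
  local f
  for f in "$@"; do
    sort -n -k1,1 -o "$f" "$f"   # sort one source in place by commit_ts
  done
  sort -m -n -k1,1 "$@"          # k-way merge of the already-sorted sources
}

# Demo with two hypothetical sources.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf '%s\n' '105 UPDATE t1 id=1' '101 INSERT t1 id=1' > "$workdir/tikv-a.log"
printf '%s\n' '103 INSERT t2 id=7' '108 DELETE t2 id=7' > "$workdir/tikv-b.log"
merge_by_commit_ts "$workdir/tikv-a.log" "$workdir/tikv-b.log"
```

Running it prints the four changes in commit_ts order: 101, 103, 105, 108.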

Use cases

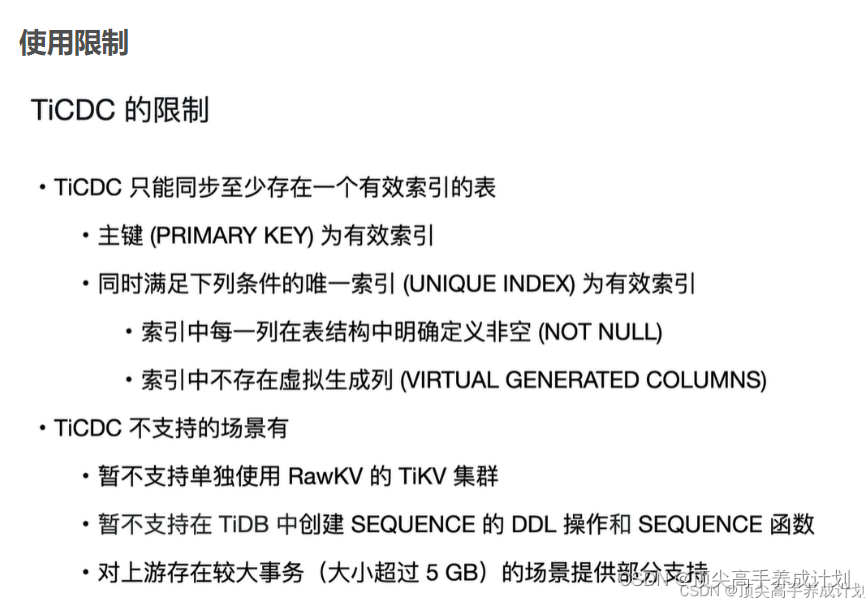

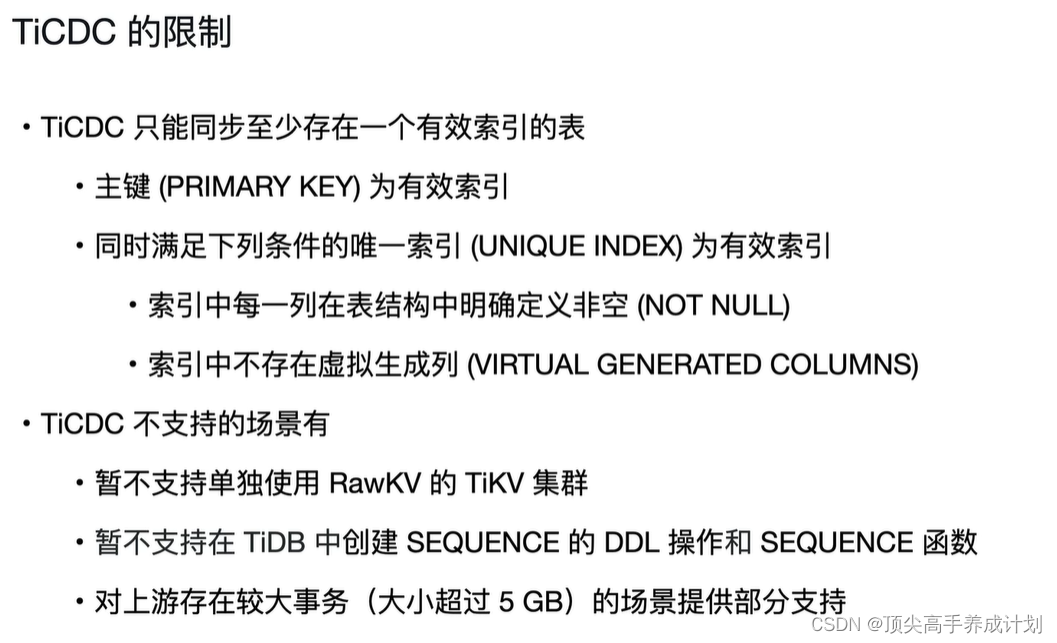

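Limitations

A table must have a primary key or a valid unique index (a unique index whose columns are all NOT NULL). TiCDC does not replicate tables without one.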

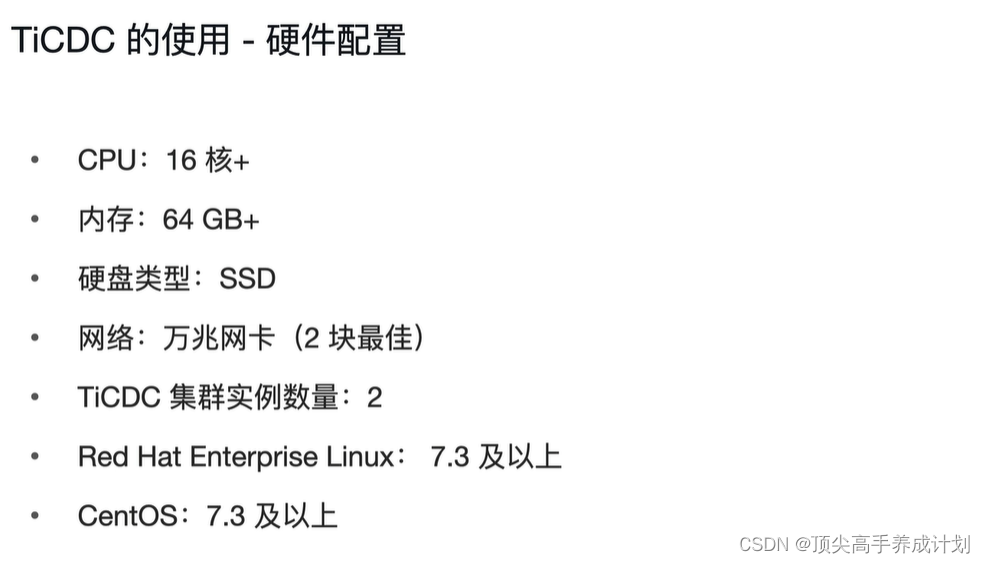
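Before creating a changefeed, it helps to know which tables will be skipped. The following is a minimal sketch. It assumes the `mysql` client is installed and that TiDB is reachable at 192.168.66.10:4000 (the tidb node in this cluster). The function names are hypothetical. The query only approximates TiCDC's rule: a unique index on nullable columns passes it, but TiCDC still does not accept such an index.

```shell
#!/usr/bin/env bash
# Assumption: TiDB at 192.168.66.10:4000, user root; override via env vars.
TIDB_HOST="${TIDB_HOST:-192.168.66.10}"
TIDB_PORT="${TIDB_PORT:-4000}"

# no_pk_uk_sql: print a query listing user tables with neither a PRIMARY KEY
# nor a UNIQUE constraint. Approximation: nullable unique columns are not checked.
no_pk_uk_sql() {
  cat <<'SQL'
SELECT t.TABLE_SCHEMA, t.TABLE_NAME
FROM information_schema.TABLES AS t
LEFT JOIN information_schema.TABLE_CONSTRAINTS AS c
  ON c.TABLE_SCHEMA = t.TABLE_SCHEMA
 AND c.TABLE_NAME = t.TABLE_NAME
 AND c.CONSTRAINT_TYPE IN ('PRIMARY KEY', 'UNIQUE')
WHERE t.TABLE_TYPE = 'BASE TABLE'
  AND LOWER(t.TABLE_SCHEMA) NOT IN
      ('mysql', 'information_schema', 'performance_schema', 'metrics_schema')
  AND c.CONSTRAINT_NAME IS NULL;
SQL
}

# list_tables_without_pk_uk: run the query; -p prompts for the password,
# -N suppresses the column-name header row.
list_tables_without_pk_uk() {
  mysql -h "$TIDB_HOST" -P "$TIDB_PORT" -u root -p -N -e "$(no_pk_uk_sql)"
}
```

Tables it lists are candidates for the "not eligible to replicate" warning shown later when the changefeed is created.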

Hardware requirements

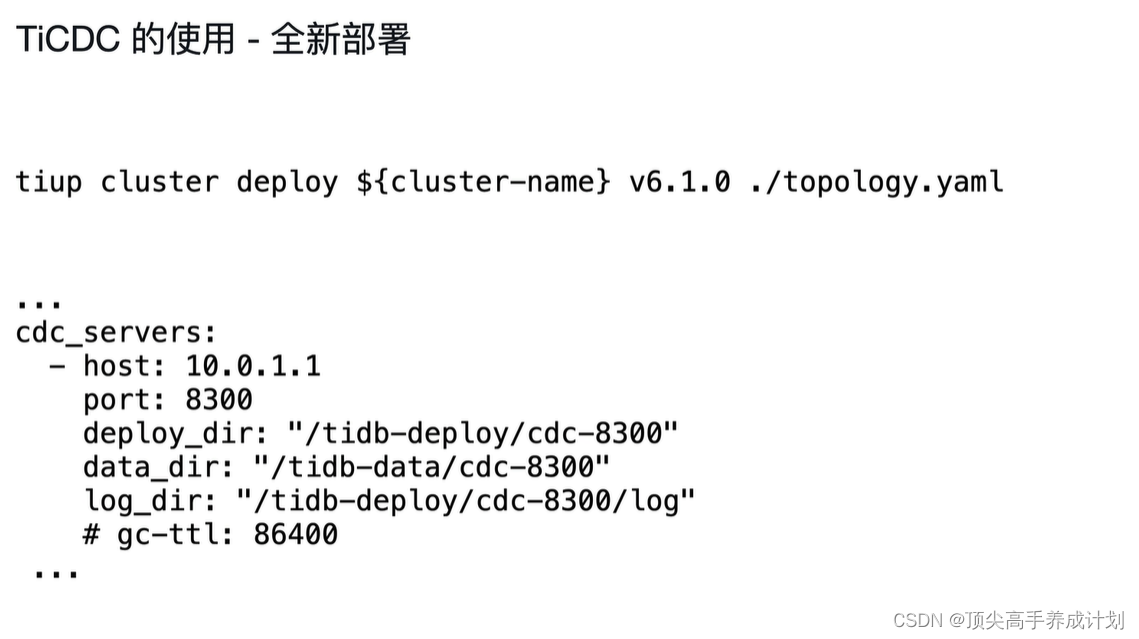

Deployment

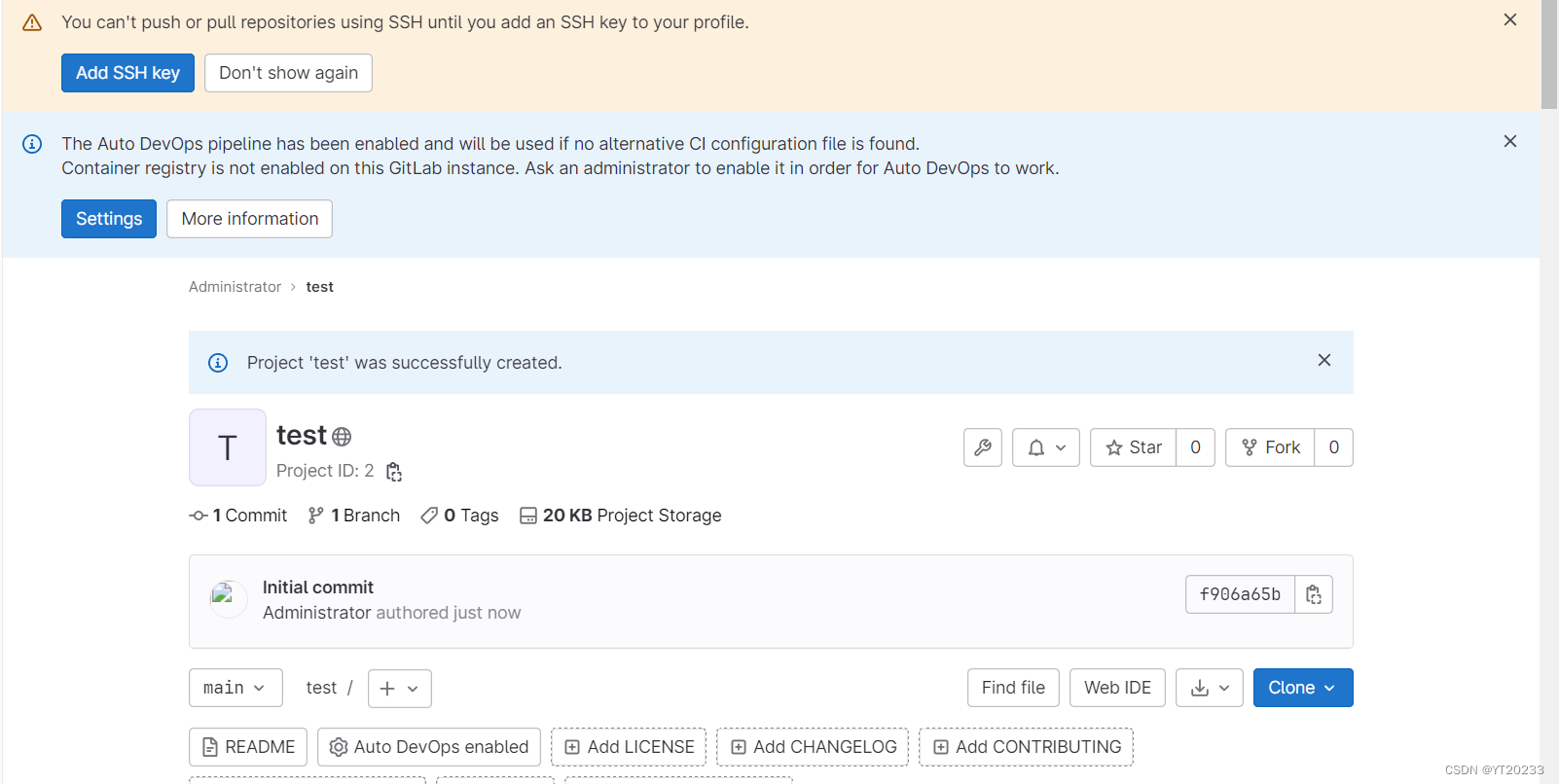

Deploying together with TiDB

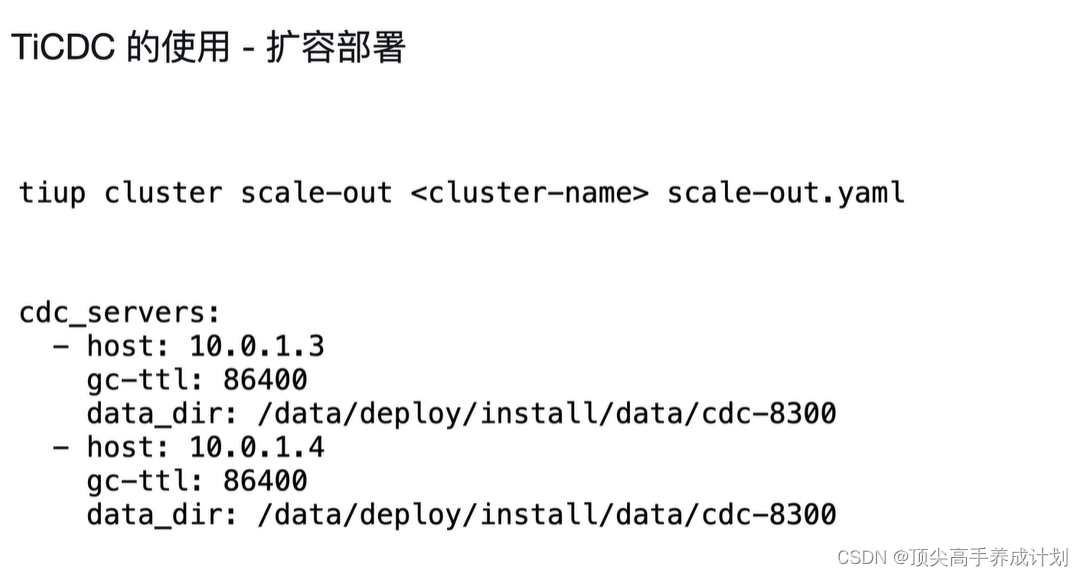

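Deploying by scaling out

Steps

Create a topology file for the new TiCDC nodes:

```shell
vi scale-out-ticdc.yaml
```

```yaml
cdc_servers:
  - host: 192.168.66.20
    gc-ttl: 86400          # TTL in seconds (24 h) of the GC safepoint TiCDC sets in PD
    data_dir: "/cdc-data"  # data directory on the TiCDC host
  - host: 192.168.66.21
    gc-ttl: 86400
    data_dir: "/cdc-data"
```

Then run the scale-out:

```shell
# Scale-out command
tiup cluster scale-out tidb-test scale-out-ticdc.yaml -uroot -p
```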

Output:

```text
[root@master output]# tiup cluster list
tiup is checking updates for component cluster ...
Starting component `cluster`: /root/.tiup/components/cluster/v1.12.1/tiup-cluster list
Name User Version Path PrivateKey
---- ---- ------- ---- ----------
tidb-test root v6.5.0 /root/.tiup/storage/cluster/clusters/tidb-test /root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa
[root@master output]# tiup cluster display tidb-test
tiup is checking updates for component cluster ...
Starting component `cluster`: /root/.tiup/components/cluster/v1.12.1/tiup-cluster display tidb-test
Cluster type: tidb
Cluster name: tidb-test
Cluster version: v6.5.0
Deploy user: root
SSH type: builtin
Dashboard URL: http://192.168.66.20:2379/dashboard
Grafana URL: http://192.168.66.20:3000
ID Role Host Ports OS/Arch Status Data Dir Deploy Dir
-- ---- ---- ----- ------- ------ -------- ----------
192.168.66.20:9093 alertmanager 192.168.66.20 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093
192.168.66.20:8300 cdc 192.168.66.20 8300 linux/x86_64 Up /cdc-data /tidb-deploy/cdc-8300
192.168.66.21:8300 cdc 192.168.66.21 8300 linux/x86_64 Up /cdc-data /tidb-deploy/cdc-8300
192.168.66.20:3000 grafana 192.168.66.20 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000
192.168.66.10:2379 pd 192.168.66.10 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.20:2379 pd 192.168.66.20 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.21:2379 pd 192.168.66.21 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.66.20:9090 prometheus 192.168.66.20 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090
192.168.66.10:4000 tidb 192.168.66.10 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000
192.168.66.10:9000 tiflash 192.168.66.10 9000/8123/3930/20170/20292/8234 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000
192.168.66.10:20160 tikv 192.168.66.10 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.66.20:20160 tikv 192.168.66.20 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.66.21:20160 tikv 192.168.66.21 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
```
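You can check the result of the scale-out from a script by filtering the display output for TiCDC rows. The following is a minimal sketch. `count_up_cdc_nodes` is a hypothetical helper. It assumes the column layout shown above, with Role in the second column and Status in the sixth.

```shell
#!/usr/bin/env bash
# count_up_cdc_nodes: read `tiup cluster display` output on stdin and print how
# many rows have Role "cdc" and a Status beginning with "Up".
# Assumes the column order ID Role Host Ports OS/Arch Status ... shown above.
count_up_cdc_nodes() {
  awk '$2 == "cdc" && $6 ~ /^Up/ { n++ } END { print n + 0 }'
}

# Usage against the cluster in this post:
#   tiup cluster display tidb-test | count_up_cdc_nodes
```

For the output above, it prints 2.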

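Managing TiCDC

Management tool

TiCDC is managed with the `cdc cli` tool. Through TiUP, the same tool is run as `tiup ctl:v6.5.0 cdc`.

```shell
# cdc cli management tool
cdc cli
# the same tool, invoked through TiUP
tiup ctl:v6.5.0 cdc
```

Checking status

```shell
# Show the status of the TiCDC nodes (captures)
tiup ctl:v6.5.0 cdc capture list --pd=http://192.168.66.10:2379
```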
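In scripts, you can extract the owner's address from this JSON. The following is a minimal sketch. It assumes the pretty-printed layout shown in the output below, where `"is-owner"` comes before `"address"` in each entry. `find_cdc_owner` is a hypothetical name. `awk` is used so that the `tiup` status lines printed around the JSON do no harm.

```shell
#!/usr/bin/env bash
# find_cdc_owner: read `cdc capture list` output on stdin and print the address
# of the capture whose "is-owner" is true.
# Assumption: pretty-printed JSON with "is-owner" preceding "address" per entry.
find_cdc_owner() {
  awk '
    /"is-owner": *true/  { owner = 1 }
    /"is-owner": *false/ { owner = 0 }
    owner && /"address"/ {
      # the line looks like:  "address": "192.168.66.21:8300",
      split($0, parts, "\"")
      print parts[4]
      owner = 0
    }
  '
}

# Usage:
#   tiup ctl:v6.5.0 cdc capture list --pd=http://192.168.66.10:2379 | find_cdc_owner
```

For the cluster in this post, it should print `192.168.66.21:8300`.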

[root@master output]# tiup ctl:v6.5.0 cdc capture list --pd=http://192.168.66.10:2379

The component `ctl` version v6.5.0 is not installed; downloading from repository.

download https://tiup-mirrors.pingcap.com/ctl-v6.5.0-linux-amd64.tar.gz 340.47 MiB / 340.47 MiB 100.00% 66.37 MiB/s

Starting component `ctl`: /root/.tiup/components/ctl/v6.5.0/ctl cdc capture list --pd=http://192.168.66.10:2379

[

{

"id": "11b2e62f-b32c-4086-928d-d55eaeb95ae7",

"is-owner": true,

"address": "192.168.66.21:8300",

"cluster-id": "default"

},

{

"id": "ca4f341e-8a7b-47fc-bb71-d27334423cb3",

"is-owner": false,

"address": "192.168.66.20:8300",

"cluster-id": "default"

}

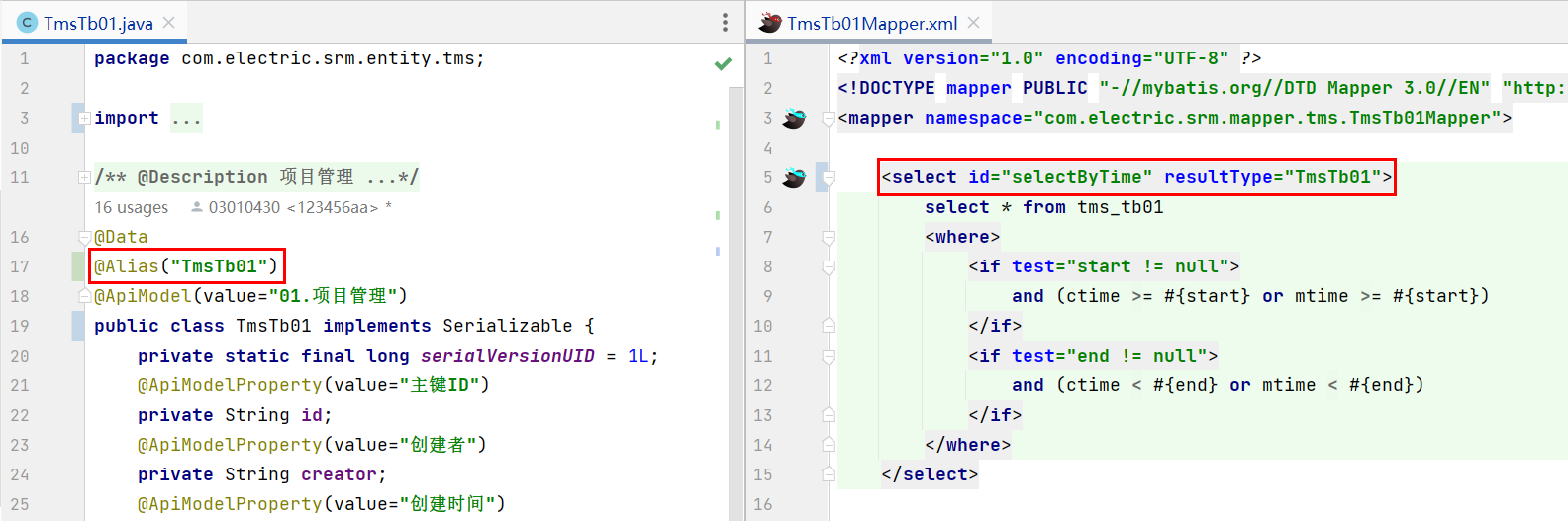

]创建同步任务

```shell
# Create a replication task. --sort-engine="unified" sorts captured changes in
# memory and spills them to disk when memory runs short.
tiup ctl:v6.5.0 cdc changefeed create \
  --pd=http://192.168.66.10:2379 \
  --sink-uri="mysql://root:root@192.168.66.10:3306/" \
  --changefeed-id="simple-replication-task1" \
  --sort-engine="unified"
```

Common parameters

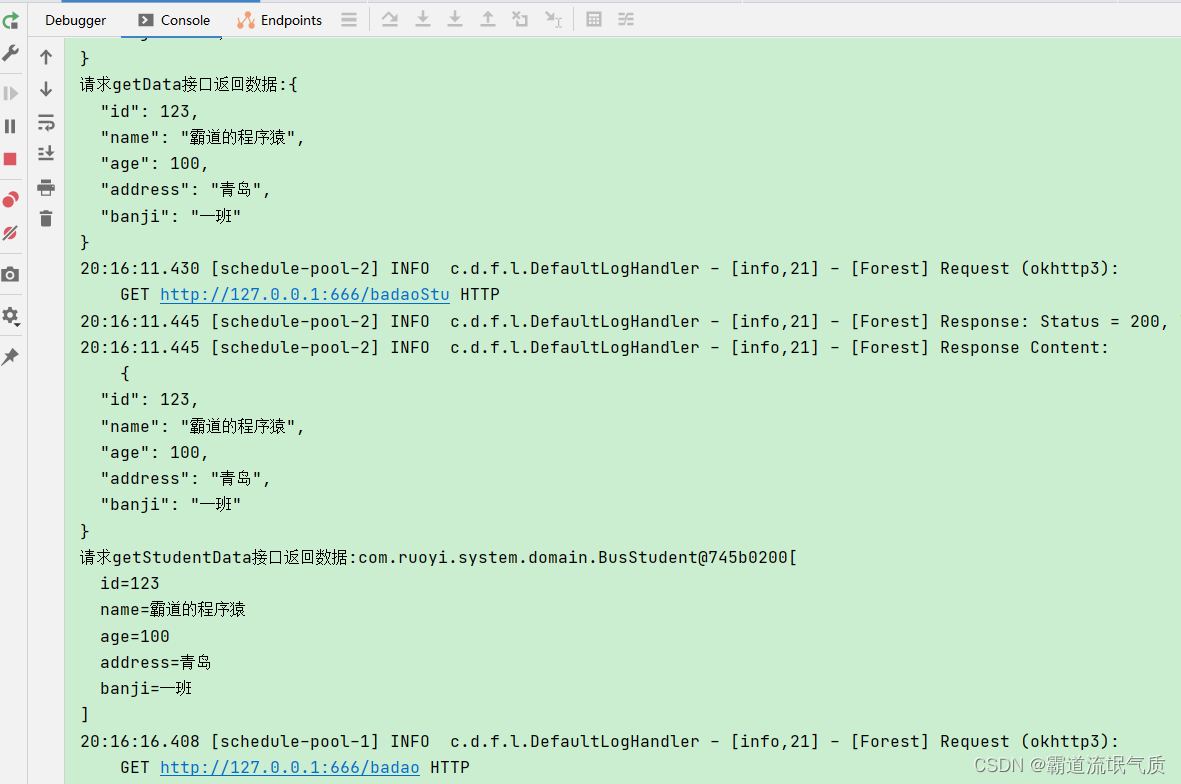
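The sink URI carries the MySQL password in plain text. Characters such as `@`, `:` or `/` in the password should therefore be percent-encoded, because they can break URI parsing. The following is a minimal sketch of a wrapper around the create command above. It assumes bash and an ASCII password. `urlencode` and `create_mysql_changefeed` are hypothetical helpers, not part of TiCDC.

```shell
#!/usr/bin/env bash
PD_ADDR="${PD_ADDR:-http://192.168.66.10:2379}"

# urlencode STRING: percent-encode every character except the RFC 3986
# unreserved set (A-Z a-z 0-9 . _ ~ -). ASCII input only.
urlencode() {
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [A-Za-z0-9._~-]) out+="$c" ;;
      *) out+=$(printf '%%%02X' "'$c") ;;   # "'c" yields the character code
    esac
  done
  printf '%s\n' "$out"
}

# create_mysql_changefeed ID USER PASSWORD HOST:PORT
# Hypothetical wrapper around `cdc changefeed create` with a MySQL sink.
create_mysql_changefeed() {
  local id="$1" user="$2" password="$3" hostport="$4"
  tiup ctl:v6.5.0 cdc changefeed create \
    --pd="$PD_ADDR" \
    --sink-uri="mysql://${user}:$(urlencode "$password")@${hostport}/" \
    --changefeed-id="$id" \
    --sort-engine="unified"
}
```

For example, `create_mysql_changefeed simple-replication-task1 root 'p@ss:w/rd' 192.168.66.10:3306` passes `--sink-uri=mysql://root:p%40ss%3Aw%2Frd@192.168.66.10:3306/`.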

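Hands-on: replicating to MySQL with TiCDC

Replication command

```shell
# Create a replication task. --sort-engine="unified" sorts captured changes in
# memory and spills them to disk when memory runs short.
tiup ctl:v6.5.0 cdc changefeed create \
  --pd=http://192.168.66.10:2379 \
  --sink-uri="mysql://root:root@192.168.66.10:3306/" \
  --changefeed-id="simple-replication-task1" \
  --sort-engine="unified"
```

Output: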

```text
[root@master output]# tiup ctl:v6.5.0 cdc changefeed create \
> --pd=http://192.168.66.10:2379 \
> --sink-uri="mysql://root:root@192.168.66.10:3306/" \
> --changefeed-id="simple-replication-task1" \
> --sort-engine="unified"
Starting component `ctl`: /root/.tiup/components/ctl/v6.5.0/ctl cdc changefeed create --pd=http://192.168.66.10:2379 --sink-uri=mysql://root:root@192.168.66.10:3306/ --changefeed-id=simple-replication-task1 --sort-engine=unified
[WARN] some tables are not eligible to replicate, []v2.TableName{v2.TableName{Schema:"test", Table:"emp", TableID:120, IsPartition:false}, v2.TableName{Schema:"test1", Table:"T1", TableID:125, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"COMPLETED_TXN_COMPONENTS", TableID:417, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"MV_TABLES_USED", TableID:449, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"TXN_COMPONENTS", TableID:499, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"WRITE_SET", TableID:517, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"NEXT_LOCK_ID", TableID:519, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"NEXT_TXN_ID", TableID:521, IsPartition:false}, v2.TableName{Schema:"metastore", Table:"NEXT_COMPACTION_QUEUE_ID", TableID:523, IsPartition:false}, v2.TableName{Schema:"hue_mysql1", Table:"base_region", TableID:613, IsPartition:false}, v2.TableName{Schema:"hue_mysql1", Table:"base_province", TableID:645, IsPartition:false}, v2.TableName{Schema:"hue_mysql1", Table:"base_dic", TableID:651, IsPartition:false}}
Could you agree to ignore those tables, and continue to replicate [Y/N]
y
Create changefeed successfully!
ID: simple-replication-task1
Info: {"upstream_id":7222127049579565349,"namespace":"default","id":"simple-replication-task1","sink_uri":"mysql://root:xxxxx@192.168.66.10:3306/","create_time":"2023-04-22T17:16:02.469900474+08:00","start_ts":440966830030848007,"engine":"unified","config":{"case_sensitive":true,"enable_old_value":true,"force_replicate":false,"ignore_ineligible_table":false,"check_gc_safe_point":true,"enable_sync_point":false,"bdr_mode":false,"sync_point_interval":600000000000,"sync_point_retention":86400000000000,"filter":{"rules":["*.*"],"event_filters":null},"mounter":{"worker_num":16},"sink":{"protocol":"","schema_registry":"","csv":{"delimiter":",","quote":"\"","null":"\\N","include_commit_ts":false},"column_selectors":null,"transaction_atomicity":"none","encoder_concurrency":16,"terminator":"\r\n","date_separator":"none","enable_partition_separator":false},"consistent":{"level":"none","max_log_size":64,"flush_interval":2000,"storage":""}},"state":"normal","creator_version":"v6.5.0"}
```
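The `start_ts` in the Info above is a TiDB TSO. Its high bits hold a physical time in milliseconds since the Unix epoch, and its low 18 bits hold a logical counter. The following small bash sketch decodes it. The function names are hypothetical, and `tso_to_utc` relies on GNU `date`.

```shell
#!/usr/bin/env bash
# A TiDB TSO = (physical milliseconds << 18) | logical counter (18 bits).
tso_physical_ms() { echo $(( $1 >> 18 )); }
tso_logical()     { echo $(( $1 & 0x3FFFF )); }

# tso_to_utc TSO: print the physical part as a UTC time (requires GNU date).
tso_to_utc() {
  local ms
  ms=$(tso_physical_ms "$1")
  date -u -d "@$(( ms / 1000 ))" '+%Y-%m-%d %H:%M:%S UTC'
}

start_ts=440966830030848007   # start_ts from the changefeed Info above
tso_physical_ms "$start_ts"   # 1682154960750
tso_logical "$start_ts"       # 7
tso_to_utc "$start_ts"        # 2023-04-22 09:16:00 UTC
```

The physical time, 17:16:00 in UTC+8, is about two seconds before the `create_time` reported in the same Info.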

Listing all replication tasks

```shell
tiup ctl:v6.5.0 cdc changefeed list --pd=http://192.168.66.10:2379
```

Replication task states

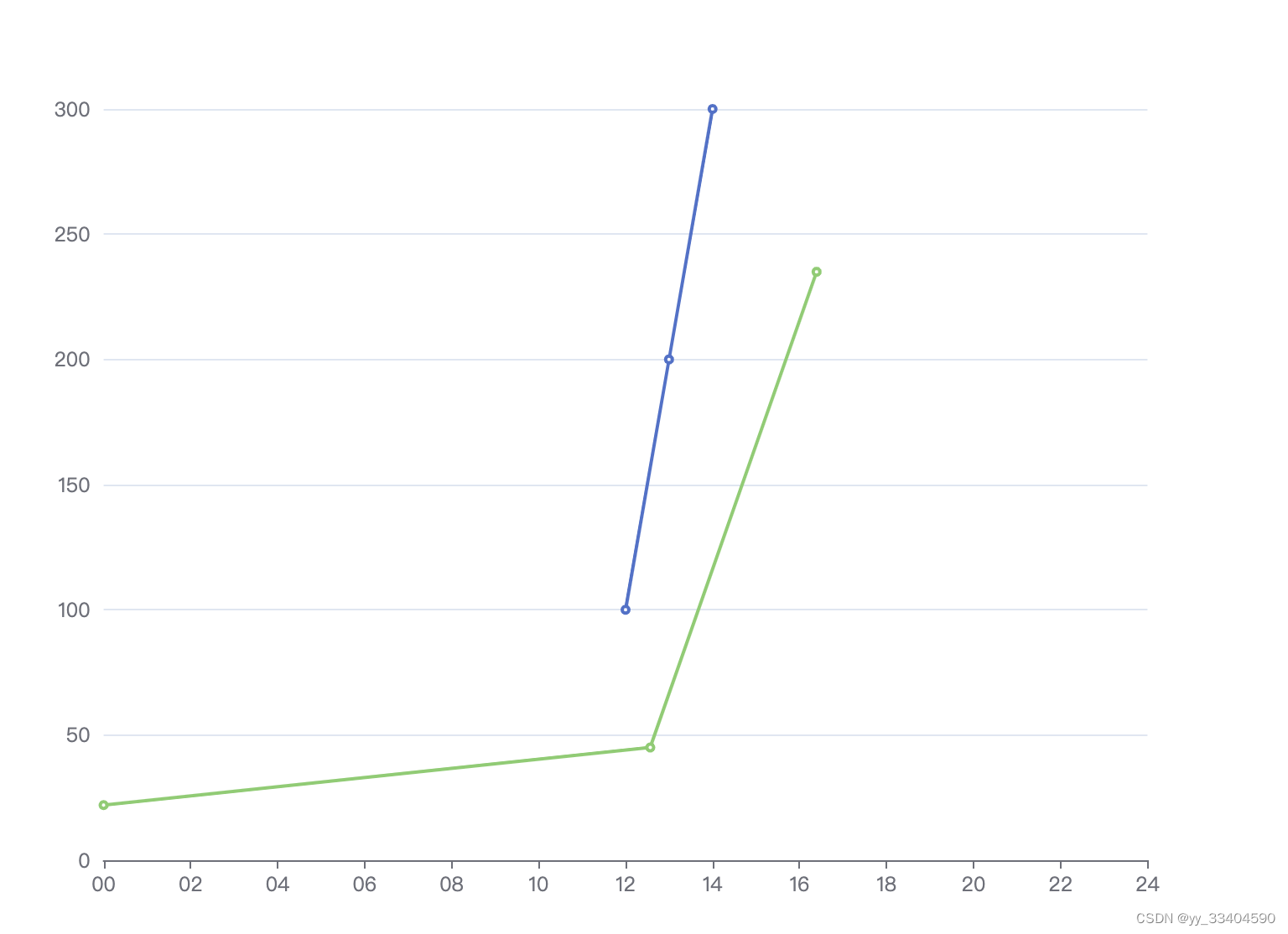

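Note:

If a changefeed enters the stopped state, GC in the TiDB cluster is held back at that changefeed's checkpoint. Without this, data that has not yet been replicated could be garbage-collected, and it could no longer be replicated to the downstream.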
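You can also stop a changefeed on purpose with `changefeed pause` and restart it with `changefeed resume`. While paused, the changefeed is in the stopped state described in the note, so do not leave it paused longer than needed. The following is a minimal helper for these per-changefeed subcommands. It assumes the PD address and TiUP ctl version used throughout this post. `cdc_changefeed` is a hypothetical name.

```shell
#!/usr/bin/env bash
PD_ADDR="${PD_ADDR:-http://192.168.66.10:2379}"

# cdc_changefeed ACTION ID: run a per-changefeed subcommand (pause, resume,
# query, remove) against the PD address above. Returns 2 on unknown actions.
cdc_changefeed() {
  local action="$1" id="$2"
  case "$action" in
    pause|resume|query|remove) ;;
    *) echo "unsupported action: $action" >&2; return 2 ;;
  esac
  tiup ctl:v6.5.0 cdc changefeed "$action" --pd="$PD_ADDR" --changefeed-id="$id"
}

# Example:
#   cdc_changefeed pause  simple-replication-task1
#   cdc_changefeed resume simple-replication-task1
```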

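Managing replication tasks

Deleting a replication task

```shell
tiup ctl:v6.5.0 cdc changefeed remove --pd=http://192.168.66.10:2379 --changefeed-id simple-replication-task1
```

Querying details of a replication task

```shell
# Show the details of one replication task
tiup ctl:v6.5.0 cdc changefeed query --pd=http://192.168.66.10:2379 --changefeed-id=simple-replication-task1
```

Eventually consistent replication for disaster recovery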

Summary

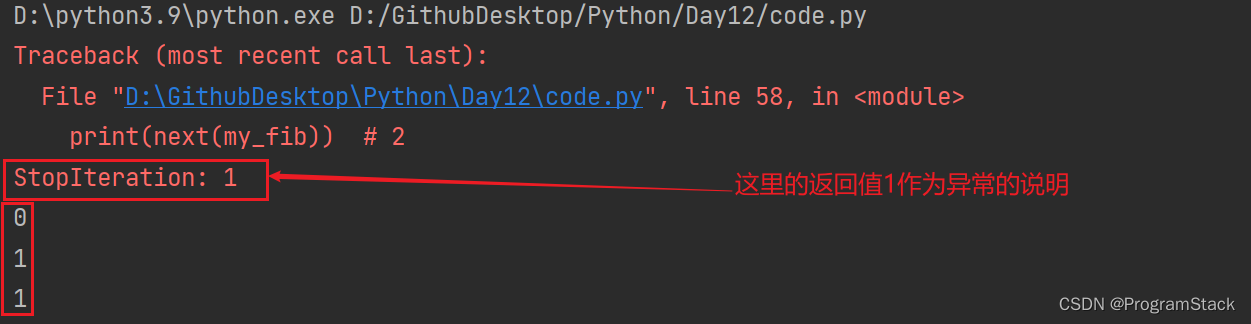

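The limitation below is important. If a table does not meet it, you will not see that table being replicated.

Related post: "tidb-cdc日志 tables are not eligible to replicate: how to view TiCDC logs" (TiCDC log "tables are not eligible to replicate"), from the CSDN blog 与数据交流的路上.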