Table of Contents

- I. MobileNet

- 1. Depthwise separable convolution

- 2. MobileNet V1

- 3. MobileNet V2

- II. Object detection source code (based on MobileNetV2)

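I. MobileNet

1. Depthwise separable convolution

A depthwise separable convolution is a modification of the standard convolution used in convolutional neural networks. By decoupling the correlations along the spatial dimensions from those along the channel (depth) dimension, it reduces the number of parameters a convolution needs, and it has proven to be an effective building block for lightweight networks.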

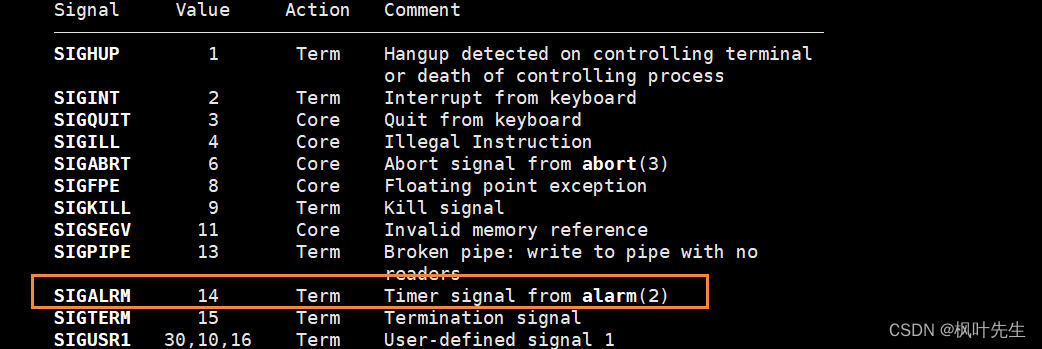

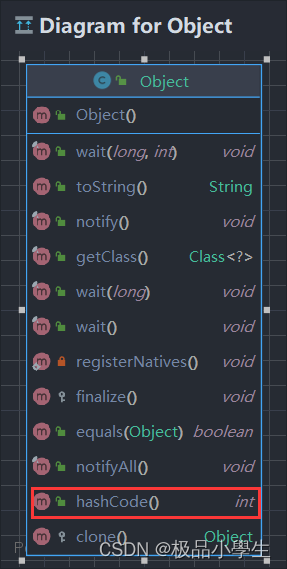

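A depthwise separable convolution consists of a depthwise convolution and a pointwise convolution, as shown in the figure:

Compared with a standard convolution, a depthwise convolution splits the kernel into single-channel filters and convolves each input channel separately, without changing the depth of the input feature map, so the output feature map has the same number of channels as the input. A pointwise convolution is simply a 1×1 convolution; its main role is to increase or reduce the channel dimension of the feature map.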
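The two steps can be sketched in plain NumPy to make the shapes concrete. This is only an illustration under simple assumptions (stride 1, no padding, no bias); the function names `depthwise_conv` and `pointwise_conv` and the tensor sizes are made up for this example and are not taken from any library.

```python
import numpy as np

def depthwise_conv(x, dw_kernels):
    """Depthwise step: convolve each channel with its own k x k filter.

    x: (H, W, C) input, dw_kernels: (k, k, C). Stride 1, no padding.
    """
    h, w, c = x.shape
    k = dw_kernels.shape[0]
    out = np.zeros((h - k + 1, w - k + 1, c))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i:i + k, j:j + k, :]  # (k, k, C) window
            # Sum over the spatial window only; channels stay separate.
            out[i, j, :] = np.sum(patch * dw_kernels, axis=(0, 1))
    return out

def pointwise_conv(x, pw_weights):
    """Pointwise step: a 1x1 convolution, i.e. a per-pixel matrix multiply.

    x: (H, W, C_in), pw_weights: (C_in, C_out).
    """
    return x @ pw_weights

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 3))      # illustrative input feature map
dw = rng.normal(size=(3, 3, 3))     # one 3x3 filter per input channel
pw = rng.normal(size=(3, 16))       # 1x1 convolution: 3 -> 16 channels

dw_out = depthwise_conv(x, dw)
pw_out = pointwise_conv(dw_out, pw)
print(dw_out.shape, pw_out.shape)   # (6, 6, 3) (6, 6, 16)
```

The depthwise output keeps the 3 input channels, and only the pointwise step changes the channel count.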

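2. MobileNet V1

MobileNet is a lightweight deep neural network designed for mobile phones and other embedded devices. Building on a VGG-style architecture, it uses a combination of depthwise and pointwise convolutions to reduce the number of model parameters without losing too much accuracy.

MobileNet V1, the first version of MobileNet, is a plain sequential stack similar to VGG, as shown in the figure:

Apart from the first layer, which is a standard convolution, and a fully connected layer added at the end, the whole network is built from depthwise separable convolutions (a 3×3×(number of channels) depthwise convolution plus a 1×1×(number of channels)×(number of filters) pointwise convolution), which reduces the number of parameters and improves computational efficiency.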
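The saving can be checked with a little arithmetic. The sketch below counts weights for one layer, ignoring biases; the layer sizes (3×3 kernel, 64 input channels, 128 filters) are hypothetical values chosen only for illustration.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def separable_conv_params(k, c_in, c_out):
    """Weights in a depthwise separable convolution (bias ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 x c_in x c_out
    return depthwise + pointwise

k, c_in, c_out = 3, 64, 128    # hypothetical layer sizes
std = standard_conv_params(k, c_in, c_out)
sep = separable_conv_params(k, c_in, c_out)
print(std, sep, round(sep / std, 3))   # 73728 8768 0.119
```

The ratio equals 1/c_out + 1/k², so for 3×3 kernels the separable version needs roughly one eighth to one ninth of the weights.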

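Despite these advantages, MobileNet V1 has the following problems:

1) Structure:

The V1 architecture is too simple and does not reuse image features; that is, it has no concat or similar operations for feature fusion, whereas architectures such as ResNet and DenseNet have shown that reusing image features is effective.

2) Depthwise convolution:

When processing low-dimensional data (as in a depthwise convolution), the ReLU function causes information loss.

By its very nature, a depthwise convolution cannot change the number of channels: it outputs exactly as many channels as the previous layer gives it. So if the previous layer provides only a few channels, the depthwise layer can only extract features in a low-dimensional space, and the results are not good enough.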

3. MobileNet V2

MobileNet V2 was introduced to address the problems above.

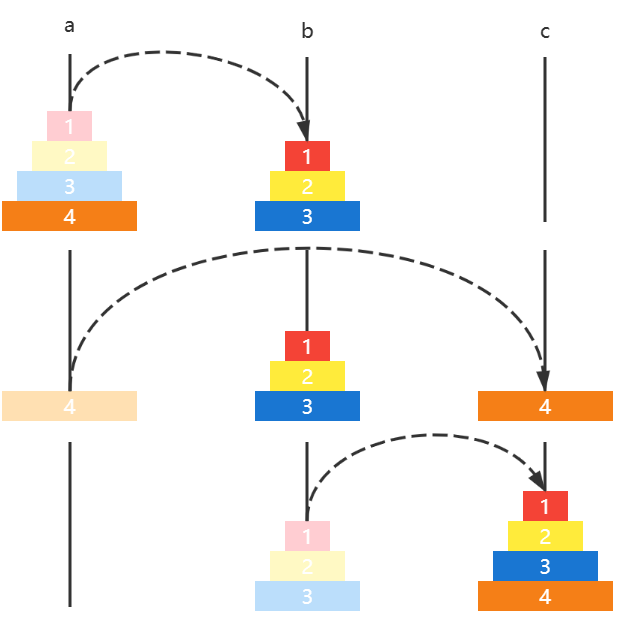

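MobileNet V2 argues that ReLU destroys data in low-dimensional spaces, while high-dimensional spaces are much less affected. The whole process in the figure applies ReLU to a piece of "data" (Input) in an n-dimensional space, then maps the result back using the inverse matrix T⁻¹ of T and compares it with Input to see how much they differ.

When n = 2 or 3, a large part of the information is lost compared with Input. When n is between 15 and 30, a considerable part of it is preserved. In other words, applying ReLU in a low-dimensional space easily loses information, whereas applying it in a high-dimensional space loses very little.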
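One rough way to see this effect numerically is sketched below. It is not the exact experiment behind the figure: instead of reconstructing with T⁻¹, it embeds random 2-D points with a random n×2 matrix T, applies ReLU, and counts how many points still have at least two positive coordinates, which is what is needed to solve for the original 2-D point from the surviving rows. The function name `recoverable_fraction`, the Gaussian sampling, and the point count are all assumptions made for this illustration.

```python
import numpy as np

def recoverable_fraction(n, num_points=2000, seed=0):
    """Fraction of 2-D points that could still be recovered after ReLU(T x).

    T is a random n x 2 matrix. A point can be solved for from the
    surviving coordinates only if at least two of them are positive
    (two rows of a random T are linearly independent almost surely).
    """
    rng = np.random.default_rng(seed)
    points = rng.normal(size=(num_points, 2))    # the 2-D "Input"
    T = rng.normal(size=(n, 2))                  # random embedding into n dims
    activated = np.maximum(points @ T.T, 0.0)    # ReLU in n dimensions
    active_count = np.count_nonzero(activated > 0, axis=1)
    return float(np.mean(active_count >= 2))

for n in (2, 3, 15, 30):
    print(n, recoverable_fraction(n))
```

With n = 2 only the points for which both coordinates stay positive survive, so a large share of the input is lost; with n = 30 almost every point keeps enough active coordinates.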

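Structurally, V2 uses a depthwise separable design similar to V1; the differences correspond exactly to fixes for the depthwise-convolution shortcomings of V1, as shown in the figure:

There are two main changes:

1) V2 removes the activation function after the second PW (pointwise) convolution and makes it linear. The V2 authors call this a Linear Bottleneck. As explained above, the authors argue that an activation function adds non-linearity effectively in a high-dimensional space but destroys features in a low-dimensional one, where a linear output works better.

2) V2 adds a new PW convolution before the DW (depthwise) convolution. Its purpose is to expand the channel dimension, so that after the expansion the DW convolution can extract features in a higher-dimensional space.

In addition, MobileNet V2 borrows the following improvement from ResNet:

3) Shortcut connection: the output is added to the input, which helps prevent features from being lost in very deep networks.

The resulting structure is called an Inverted Residual Block with Linear Bottleneck. The final V2 network architecture is shown in the figure below:

Note: the changes in V3 are not covered here.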

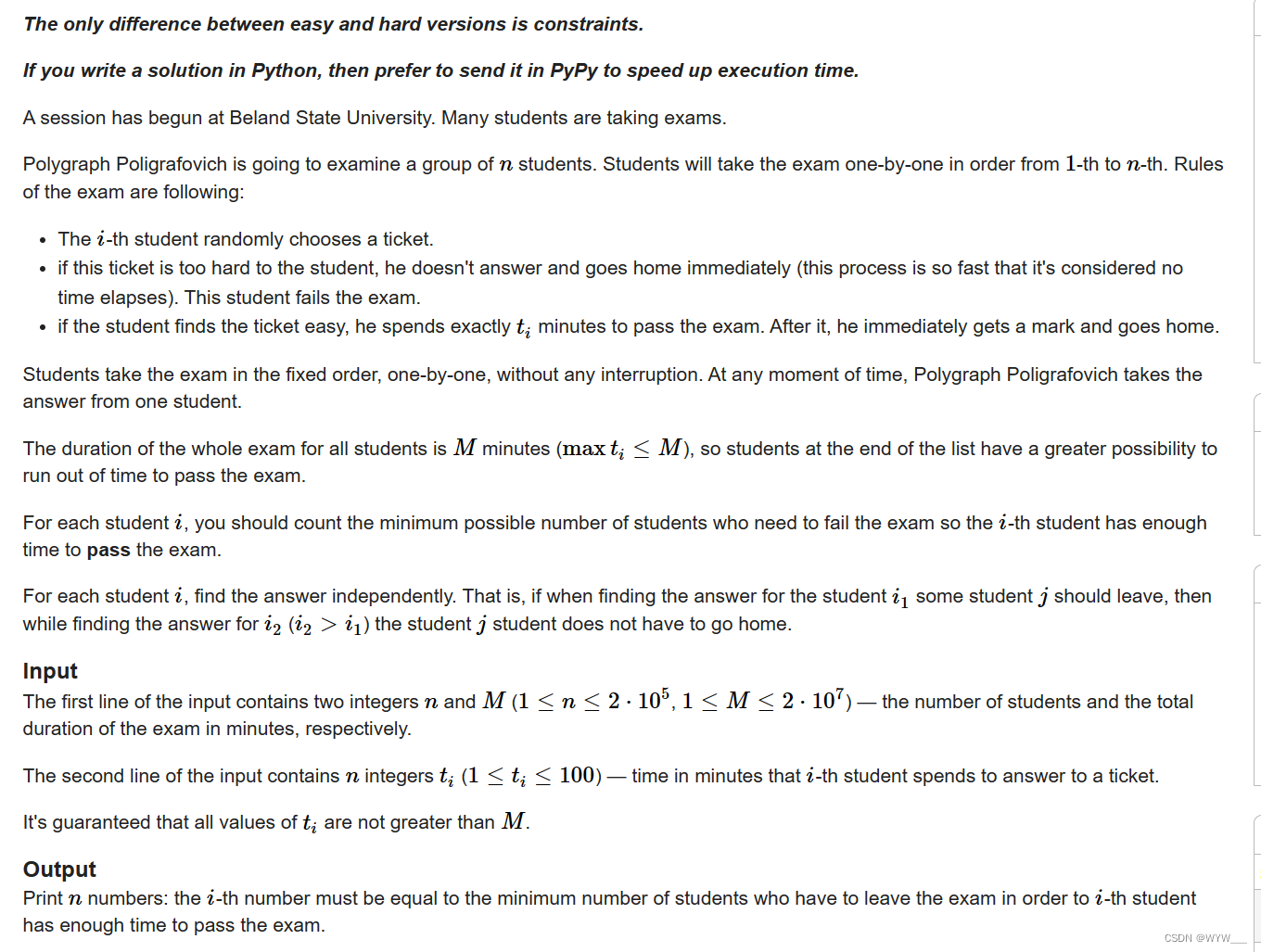
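The inverted residual block with a linear bottleneck described above can be sketched as a NumPy forward pass. This is a simplified illustration, not the reference implementation: it omits batch normalization and strides, it always applies the shortcut (which is only valid when input and output shapes match), and it assumes ReLU6 as the activation and an expansion factor of 6. The function names and tensor sizes are hypothetical.

```python
import numpy as np

def relu6(x):
    """ReLU clipped at 6 (assumed activation for this sketch)."""
    return np.minimum(np.maximum(x, 0.0), 6.0)

def depthwise_3x3_same(x, kernels):
    """3x3 depthwise convolution, stride 1, zero padding that keeps H and W.

    x: (H, W, C), kernels: (3, 3, C).
    """
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for i in range(h):
        for j in range(w):
            window = padded[i:i + 3, j:j + 3, :]
            out[i, j, :] = np.sum(window * kernels, axis=(0, 1))
    return out

def inverted_residual(x, w_expand, w_depthwise, w_project):
    """PW expand + ReLU6 -> DW + ReLU6 -> linear PW project -> add input."""
    expanded = relu6(x @ w_expand)                        # 1x1 conv: C -> t*C
    filtered = relu6(depthwise_3x3_same(expanded, w_depthwise))
    projected = filtered @ w_project                      # 1x1 conv, no activation
    return x + projected                                  # shortcut connection

rng = np.random.default_rng(0)
channels, expansion = 8, 6                                # illustrative values
x = rng.normal(size=(5, 5, channels))
w_expand = rng.normal(size=(channels, channels * expansion)) * 0.1
w_depthwise = rng.normal(size=(3, 3, channels * expansion)) * 0.1
w_project = rng.normal(size=(channels * expansion, channels)) * 0.1

y = inverted_residual(x, w_expand, w_depthwise, w_project)
print(x.shape, "->", y.shape)
```

The depthwise convolution runs on the expanded 48-channel tensor, while the block's input and output stay at the narrow 8-channel width, which is why the residual is called "inverted".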

II. Object detection source code (based on MobileNetV2)

import os, re, time, json
import PIL.Image, PIL.ImageFont, PIL.ImageDraw
import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
import tensorflow_datasets as tfds
import cv2

# Data source directory
data_dir = "/content/drive/My Drive/TF3 C3 W1 Data/"

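# Utility: draw one bounding box; object detection results are shown as boxes on the image
def draw_bounding_box_on_image(image, ymin, xmin, ymax, xmax, color=(255, 0, 0), thickness=5):
    """
    Draws a single bounding box on the image, in place.

    Args:
      image: a numpy array image (drawn on with OpenCV).
      ymin: y coordinate of the top-left corner, in pixels
      xmin: x coordinate of the top-left corner, in pixels
      ymax: y coordinate of the bottom-right corner, in pixels
      xmax: x coordinate of the bottom-right corner, in pixels
      color: box color, red by default
      thickness: line width, 5 by default
    """
    cv2.rectangle(image, (int(xmin), int(ymin)), (int(xmax), int(ymax)), color, thickness)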

def draw_bounding_boxes_on_image(image, boxes, color=(), thickness=5):
    """
    Draws several bounding boxes on the image, in place.

    Args:
      image: a numpy array image.
      boxes: 2-D numpy array of shape [N, 4]; each row is
        (xmin, ymin, xmax, ymax) in pixel coordinates
      color: sequence of box colors, one per box
      thickness: line width, 5 by default
    """
    boxes_shape = boxes.shape
    if not boxes_shape:
        return
    if len(boxes_shape) != 2 or boxes_shape[1] != 4:
        raise ValueError('Input must be of size [N, 4]')
    for i in range(boxes_shape[0]):
        # Reorder to the (ymin, xmin, ymax, xmax) argument order of the helper
        draw_bounding_box_on_image(image, boxes[i, 1], boxes[i, 0], boxes[i, 3],
                                   boxes[i, 2], color[i], thickness)

def draw_bounding_boxes_on_image_array(image, boxes, color=(), thickness=5):
    """
    Draws bounding boxes on a numpy image and returns the image.

    Args:
      image: a numpy array object.
      boxes: 2-D numpy array of shape [N, 4]; each row is
        (xmin, ymin, xmax, ymax) in pixel coordinates
      color: sequence of box colors, one per box
      thickness: line width, 5 by default
    """
    draw_bounding_boxes_on_image(image, boxes, color, thickness)
    return image

# General Matplotlib plotting configuration
plt.rc('image', cmap='gray')
plt.rc('grid', linewidth=0)
plt.rc('xtick', top=False, bottom=False, labelsize='large')
plt.rc('ytick', left=False, right=False, labelsize='large')
plt.rc('axes', facecolor='F8F8F8', titlesize="large", edgecolor='white')
plt.rc('text', color='a8151a')
plt.rc('figure', facecolor='F0F0F0')

# Matplotlib fonts
MATPLOTLIB_FONT_DIR = os.path.join(os.path.dirname(plt.__file__), "mpl-data/fonts/ttf")

# Utility: show images with predicted and ground-truth boxes, used to check results
# Note: reads the global iou_threshold, which is defined further below.
def display_digits_with_boxes(images, pred_bboxes, bboxes, iou, title, bboxes_normalized=False):
    n = len(images)
    fig = plt.figure(figsize=(20, 4))
    plt.title(title)
    plt.yticks([])
    plt.xticks([])
    for i in range(n):
        ax = fig.add_subplot(1, 10, i+1)
        bboxes_to_plot = []
        if (len(pred_bboxes) > i):
            # Predicted boxes are normalized, so scale them back to pixels
            bbox = pred_bboxes[i]
            bbox = [bbox[0] * images[i].shape[1], bbox[1] * images[i].shape[0], bbox[2] * images[i].shape[1], bbox[3] * images[i].shape[0]]
            bboxes_to_plot.append(bbox)
        if (len(bboxes) > i):
            bbox = bboxes[i]
            if bboxes_normalized == True:
                bbox = [bbox[0] * images[i].shape[1], bbox[1] * images[i].shape[0], bbox[2] * images[i].shape[1], bbox[3] * images[i].shape[0]]
            bboxes_to_plot.append(bbox)
        img_to_draw = draw_bounding_boxes_on_image_array(image=images[i], boxes=np.asarray(bboxes_to_plot), color=[(255,0,0), (0, 255, 0)])
        plt.xticks([])
        plt.yticks([])
        plt.imshow(img_to_draw)
        if len(iou) > i:
            color = "black"
            if (iou[i][0] < iou_threshold):
                color = "red"
            ax.text(0.2, -0.3, "iou: %s" % (iou[i][0]), color=color, transform=ax.transAxes)

# Utility: plot training curves to inspect the training results
# Note: reads the global history returned by model.fit below.
def plot_metrics(metric_name, title, ylim=5):
    plt.title(title)
    plt.ylim(0, ylim)
    plt.plot(history.history[metric_name], color='blue', label=metric_name)
    plt.plot(history.history['val_' + metric_name], color='green', label='val_' + metric_name)

# Utility: preprocessing. Resize the image, scale pixels to [-1, 1],
# and normalize the box coordinates to [0, 1]
def read_image_tfds(image, bbox):
    image = tf.cast(image, tf.float32)
    shape = tf.shape(image)
    factor_x = tf.cast(shape[1], tf.float32)  # original width
    factor_y = tf.cast(shape[0], tf.float32)  # original height
    image = tf.image.resize(image, (224, 224,))
    image = image/127.5
    image -= 1
    # bbox arrives as (xmin, ymin, xmax, ymax) in pixels
    bbox_list = [bbox[0] / factor_x,
                 bbox[1] / factor_y,
                 bbox[2] / factor_x,
                 bbox[3] / factor_y]
    return image, bbox_list
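As a quick illustration of what this preprocessing does to the values, here is a NumPy stand-in for the two normalization steps (the resize is left out). The helper names `normalize_pixels` and `normalize_bbox` and the sample numbers are hypothetical and exist only for this example.

```python
import numpy as np

def normalize_pixels(image):
    """Map pixel values from [0, 255] to [-1, 1], as image / 127.5 - 1 does."""
    return image.astype(np.float32) / 127.5 - 1.0

def normalize_bbox(bbox, width, height):
    """Scale a pixel-space (xmin, ymin, xmax, ymax) box to [0, 1]."""
    xmin, ymin, xmax, ymax = bbox
    return [xmin / width, ymin / height, xmax / width, ymax / height]

image = np.array([[0, 127.5, 255]])
print(normalize_pixels(image))                                     # [[-1.  0.  1.]]
print(normalize_bbox([50, 100, 150, 200], width=200, height=400))  # [0.25, 0.25, 0.75, 0.5]
```

Because the box is expressed as a fraction of the image size, it stays valid after the image is resized to 224×224.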

# Utility: keep a copy of the original image and run the preprocessing function
def read_image_with_shape(image, bbox):
    original_image = image
    image, bbox_list = read_image_tfds(image, bbox)
    return original_image, image, bbox_list

# Utility: read the image and its ground-truth box (in pixels) from a data record
def read_image_tfds_with_original_bbox(data):
    image = data["image"]
    bbox = data["bbox"]  # normalized (ymin, xmin, ymax, xmax)
    shape = tf.shape(image)
    factor_x = tf.cast(shape[1], tf.float32)
    factor_y = tf.cast(shape[0], tf.float32)
    # Convert to (xmin, ymin, xmax, ymax) in pixels
    bbox_list = [bbox[1] * factor_x,
                 bbox[0] * factor_y,
                 bbox[3] * factor_x,
                 bbox[2] * factor_y]
    return image, bbox_list

# Utility: convert a dataset into numpy arrays of images and box coordinates
# Used when viewing images and their boxes
def dataset_to_numpy_util(dataset, batch_size=0, N=0):
    take_dataset = dataset.shuffle(1024)
    if batch_size > 0:
        take_dataset = take_dataset.batch(batch_size)
    if N > 0:
        take_dataset = take_dataset.take(N)
    if tf.executing_eagerly():
        ds_images, ds_bboxes = [], []
        for images, bboxes in take_dataset:
            ds_images.append(images.numpy())
            ds_bboxes.append(bboxes.numpy())
    # The original images differ in size, so they are stored in an object array
    return (np.array(ds_images, dtype='object'), np.array(ds_bboxes))

# Utility: convert a dataset into original images, normalized images, and normalized box coordinates
# Used to compare the ground-truth boxes with the predicted boxes
def dataset_to_numpy_with_original_bboxes_util(dataset, batch_size=0, N=0):
    normalized_dataset = dataset.map(read_image_with_shape)
    if batch_size > 0:
        normalized_dataset = normalized_dataset.batch(batch_size)
    if N > 0:
        normalized_dataset = normalized_dataset.take(N)
    if tf.executing_eagerly():
        ds_original_images, ds_images, ds_bboxes = [], [], []
        for original_images, images, bboxes in normalized_dataset:
            ds_images.append(images.numpy())
            ds_bboxes.append(bboxes.numpy())
            ds_original_images.append(original_images.numpy())
    # The original images differ in size, so they are stored in an object array
    return np.array(ds_original_images, dtype='object'), np.array(ds_images), np.array(ds_bboxes)

# Take a random sample of training images and display them
def get_visualization_training_dataset():
    dataset, info = tfds.load("caltech_birds2010", split="train", with_info=True, data_dir=data_dir, download=False)
    visualization_training_dataset = dataset.map(read_image_tfds_with_original_bbox,
                                                 num_parallel_calls=16)
    return visualization_training_dataset

visualization_training_dataset = get_visualization_training_dataset()
(visualization_training_images, visualization_training_bboxes) = dataset_to_numpy_util(visualization_training_dataset, N=10)
display_digits_with_boxes(np.array(visualization_training_images), np.array([]), np.array(visualization_training_bboxes), np.array([]), "training images and their bboxes")

# Take a random sample of validation images and display them
def get_visualization_validation_dataset():
    dataset = tfds.load("caltech_birds2010", split="test", data_dir=data_dir, download=False)
    visualization_validation_dataset = dataset.map(read_image_tfds_with_original_bbox, num_parallel_calls=16)
    return visualization_validation_dataset

visualization_validation_dataset = get_visualization_validation_dataset()
(visualization_validation_images, visualization_validation_bboxes) = dataset_to_numpy_util(visualization_validation_dataset, N=10)
display_digits_with_boxes(np.array(visualization_validation_images), np.array([]), np.array(visualization_validation_bboxes), np.array([]), "validation images and their bboxes")

# Prepare the data for the model
BATCH_SIZE = 64

def get_training_dataset(dataset):
    dataset = dataset.map(read_image_tfds, num_parallel_calls=16)
    dataset = dataset.shuffle(512, reshuffle_each_iteration=True)
    dataset = dataset.repeat()
    dataset = dataset.batch(BATCH_SIZE)
    dataset = dataset.prefetch(-1)
    return dataset

def get_validation_dataset(dataset):
    dataset = dataset.map(read_image_tfds, num_parallel_calls=16)
    dataset = dataset.batch(BATCH_SIZE)
    dataset = dataset.repeat()
    return dataset

training_dataset = get_training_dataset(visualization_training_dataset)
validation_dataset = get_validation_dataset(visualization_validation_dataset)

# Transfer-learning feature extractor based on MobileNetV2
def feature_extractor(inputs):
    mobilenet_model = tf.keras.applications.mobilenet_v2.MobileNetV2(input_shape=(224,224,3), include_top=False, weights='imagenet')
    feature_extractor = mobilenet_model(inputs)
    return feature_extractor

# Top layers
def dense_layers(features):
    x = tf.keras.layers.GlobalAveragePooling2D()(features)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(1024, activation='relu')(x)
    x = tf.keras.layers.Dense(512, activation='relu')(x)
    return x

# Bounding box output
def bounding_box_regression(x):
    bounding_box_regression_output = tf.keras.layers.Dense(4, name='bounding_box')(x)
    return bounding_box_regression_output

# Full model
def final_model(inputs):
    feature_cnn = feature_extractor(inputs)
    last_dense_layer = dense_layers(feature_cnn)
    bounding_box_output = bounding_box_regression(last_dense_layer)
    model = tf.keras.Model(inputs=inputs, outputs=bounding_box_output)
    return model

# Build and compile the model
def define_and_compile_model():
    inputs = tf.keras.layers.Input(shape=(224, 224, 3))
    model = final_model(inputs)
    model.compile(optimizer=tf.keras.optimizers.SGD(momentum=0.9), loss={'bounding_box':'mse'})
    return model

# Train the model
EPOCHS = 50
BATCH_SIZE = 64

# Create the model; the calls below need it
model = define_and_compile_model()

length_of_training_dataset = tf.data.experimental.cardinality(visualization_training_dataset).numpy()
length_of_validation_dataset = tf.data.experimental.cardinality(visualization_validation_dataset).numpy()

steps_per_epoch = length_of_training_dataset//BATCH_SIZE  # training steps per epoch
if length_of_training_dataset % BATCH_SIZE > 0:
    steps_per_epoch += 1

validation_steps = length_of_validation_dataset//BATCH_SIZE  # validation steps per epoch
if length_of_validation_dataset % BATCH_SIZE > 0:
    validation_steps += 1

history = model.fit(training_dataset, steps_per_epoch=steps_per_epoch, validation_data=validation_dataset, validation_steps=validation_steps, epochs=EPOCHS)

# Evaluate the loss
loss = model.evaluate(validation_dataset, steps=validation_steps)

# Save the model
model.save("xxx.h5")

# Utility: measure how well predicted boxes match the ground-truth boxes
def intersection_over_union(pred_box, true_box):
    # Both inputs are [N, 4] arrays of (xmin, ymin, xmax, ymax)
    xmin_pred, ymin_pred, xmax_pred, ymax_pred = np.split(pred_box, 4, axis = 1)
    xmin_true, ymin_true, xmax_true, ymax_true = np.split(true_box, 4, axis = 1)

    # Calculate coordinates of overlap area between boxes
    xmin_overlap = np.maximum(xmin_pred, xmin_true)
    xmax_overlap = np.minimum(xmax_pred, xmax_true)
    ymin_overlap = np.maximum(ymin_pred, ymin_true)
    ymax_overlap = np.minimum(ymax_pred, ymax_true)

    # Calculates area of true and predicted boxes
    pred_box_area = (xmax_pred - xmin_pred) * (ymax_pred - ymin_pred)
    true_box_area = (xmax_true - xmin_true) * (ymax_true - ymin_true)

    # Calculates overlap area and union area.
    overlap_area = np.maximum((xmax_overlap - xmin_overlap),0) * np.maximum((ymax_overlap - ymin_overlap), 0)
    union_area = (pred_box_area + true_box_area) - overlap_area

    # Defines a smoothing factor to prevent division by 0
    smoothing_factor = 1e-10

    # Updates iou score
    iou = (overlap_area + smoothing_factor) / (union_area + smoothing_factor)
    return iou
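A hand-checkable example helps confirm the IoU arithmetic. The block below uses a compact re-statement of the same formula under the hypothetical name `iou_xyxy` so that it runs on its own; the three box pairs are made-up test cases, not data from the dataset.

```python
import numpy as np

def iou_xyxy(pred_box, true_box, eps=1e-10):
    """IoU for [N, 4] arrays of (xmin, ymin, xmax, ymax) boxes; returns [N, 1]."""
    xmin_p, ymin_p, xmax_p, ymax_p = np.split(pred_box, 4, axis=1)
    xmin_t, ymin_t, xmax_t, ymax_t = np.split(true_box, 4, axis=1)
    overlap_w = np.maximum(np.minimum(xmax_p, xmax_t) - np.maximum(xmin_p, xmin_t), 0)
    overlap_h = np.maximum(np.minimum(ymax_p, ymax_t) - np.maximum(ymin_p, ymin_t), 0)
    overlap = overlap_w * overlap_h
    pred_area = (xmax_p - xmin_p) * (ymax_p - ymin_p)
    true_area = (xmax_t - xmin_t) * (ymax_t - ymin_t)
    union = pred_area + true_area - overlap
    # eps avoids division by zero for degenerate boxes
    return (overlap + eps) / (union + eps)

pred = np.array([[0.0, 0.0, 2.0, 2.0],    # shares a 1x1 square with its true box
                 [0.0, 0.0, 1.0, 1.0],    # identical to its true box
                 [5.0, 5.0, 6.0, 6.0]])   # disjoint from its true box
true = np.array([[1.0, 1.0, 3.0, 3.0],
                 [0.0, 0.0, 1.0, 1.0],
                 [0.0, 0.0, 1.0, 1.0]])
scores = iou_xyxy(pred, true)
print(scores.ravel())
```

The first pair overlaps in an area of 1 with a union of 4 + 4 - 1 = 7, so its IoU is 1/7; the identical pair scores 1 and the disjoint pair scores essentially 0.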

# Run predictions on the validation set
original_images, normalized_images, normalized_bboxes = dataset_to_numpy_with_original_bboxes_util(visualization_validation_dataset, N=500)
predicted_bboxes = model.predict(normalized_images, batch_size=32)

# Compare predicted boxes with ground-truth boxes
iou = intersection_over_union(predicted_bboxes, normalized_bboxes)
iou_threshold = 0.5

# Print the comparison results
print("Number of predictions where iou >= threshold(%s): %s" % (iou_threshold, (iou >= iou_threshold).sum()))
print("Number of predictions where iou < threshold(%s): %s" % (iou_threshold, (iou < iou_threshold).sum()))
