Published 2022-11-25 15:52:32

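Kubernetes' architecture is well designed. With Multus CNI and the Intel Userspace CNI, the complexity of the underlying DPDK setup can be hidden, which makes adding DPDK support to KubeVirt relatively easy.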

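The KubeVirt community has still not merged DPDK support, partly because of end-to-end (e2e) validation requirements. This article tries adding DPDK support to KubeVirt v0.53, the latest release at the time of writing.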

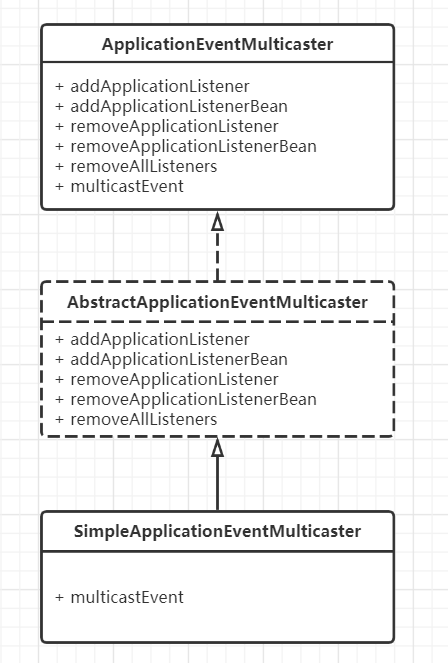

network Phase1 & Phase2

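|  | Phase1 | Phase2 |
|---|---|---|
| Privileges | High (privileged networking configuration) | Low (unprivileged networking configuration) |
| Where it runs | the virt-handler process | the virt-launcher process |
| Main function | First decides which BindMechanism to use. Once the BindMechanism is determined, it calls the following methods in order: 1. `discoverPodNetworkInterface`: gathers information about the Pod's network interface, including its IP address, routes and gateway; 2. `preparePodNetworkInterfaces`: configures the network based on that information; 3. `setCachedInterface`: caches the interface information in memory; 4. `setCachedVIF`: persists the VIF object to the filesystem. | Like Phase1, Phase2 first selects the correct BindMechanism. It then retrieves Phase1's configuration by loading the cached VIF object. Based on the VIF information, it proceeds to decorate the domain XML configuration of the VM it encapsulates. |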
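The Go sketch below makes the hand-off between the two phases concrete. All names in it are hypothetical simplifications, not KubeVirt's actual types: `VIF`, `vifCache`, `phase1` and `phase2`. The map stands in for the VIF object that Phase1 persists and Phase2 later loads, and the IP values are placeholders.

```go
package main

import "fmt"

// VIF is the (hypothetical, trimmed-down) information Phase1 discovers
// and Phase2 consumes.
type VIF struct {
	Name    string
	IP      string
	Gateway string
}

// vifCache stands in for the persisted VIF cache shared by both phases.
type vifCache map[string]VIF

// phase1 mimics the privileged step: it records the call order from the
// table and stores the discovered VIF in the cache.
func phase1(iface string, cache vifCache, steps *[]string) {
	*steps = append(*steps, "discoverPodNetworkInterface")
	vif := VIF{Name: iface, IP: "10.0.2.2", Gateway: "10.0.2.1"} // placeholder values
	*steps = append(*steps, "preparePodNetworkInterfaces")
	*steps = append(*steps, "setCachedInterface")
	cache[iface] = vif
	*steps = append(*steps, "setCachedVIF")
}

// phase2 mimics the unprivileged step: it loads the cached VIF and uses it
// to "decorate" the domain, reduced here to rendering a string.
func phase2(iface string, cache vifCache) (string, error) {
	vif, ok := cache[iface]
	if !ok {
		return "", fmt.Errorf("no cached VIF for %s", iface)
	}
	return fmt.Sprintf("<interface name=%q ip=%q/>", vif.Name, vif.IP), nil
}
```

Phase2 fails when nothing was cached. This is why a binding that skips Phase1 must also skip Phase2.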

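DPDK needs nothing from Phase1. It does not need to collect the Pod's network information, cache the Pod's network configuration, or configure the VM's network from it.

Phase2 only consumes what Phase1 produced, so DPDK needs nothing from Phase2 either. Both methods therefore return early for vhostuser interfaces: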

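```go
func (l *podNIC) PlugPhase1() error {
	// ...
	// vhostuser (DPDK) interfaces need no privileged network setup.
	if l.vmiSpecIface.Vhostuser != nil {
		return nil
	}
	// ...
}
```

```go
func (l *podNIC) PlugPhase2(domain *api.Domain) error {
	// ...
	// Nothing was cached in Phase1 for vhostuser, so there is nothing to load.
	if l.vmiSpecIface.Vhostuser != nil {
		return nil
	}
	// ...
}
```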

Preparing the Pod manifest for DPDK

pkg/virt-controller/services/template.go

```go
const (
	VhostuserSocketDir = "/var/lib/cni/usrcni/"
	OvsRunDirDefault   = "/var/run/openvswitch/"
	PodNetInfoDefault  = "/etc/podnetinfo"
)

func addVhostuserVolumes(volumeMounts *[]k8sv1.VolumeMount, volumes *[]k8sv1.Volume) {
	// "shared-dir" volume name will be used by userspace cni to place the vhostuser socket file
	*volumeMounts = append(*volumeMounts, k8sv1.VolumeMount{
		Name:      "shared-dir",
		MountPath: VhostuserSocketDir,
	})
	*volumes = append(*volumes, k8sv1.Volume{
		Name: "shared-dir",
		VolumeSource: k8sv1.VolumeSource{
			EmptyDir: &k8sv1.EmptyDirVolumeSource{
				Medium: k8sv1.StorageMediumDefault,
			},
		},
	})

	// Libvirt uses ovs-vsctl commands to get interface stats
	*volumeMounts = append(*volumeMounts, k8sv1.VolumeMount{
		Name:      "ovs-run-dir",
		MountPath: OvsRunDirDefault,
	})
	*volumes = append(*volumes, k8sv1.Volume{
		Name: "ovs-run-dir",
		VolumeSource: k8sv1.VolumeSource{
			HostPath: &k8sv1.HostPathVolumeSource{
				Path: OvsRunDirDefault,
			},
		},
	})
}

func addPodInfoVolumes(volumeMounts *[]k8sv1.VolumeMount, volumes *[]k8sv1.Volume) {
	// userspace cni will set the vhostuser socket details in annotations, app-netutil helper
	// will parse annotations from /etc/podnetinfo to get the interface details of
	// vhostuser socket (which will be added to VM xml)
	*volumeMounts = append(*volumeMounts, k8sv1.VolumeMount{
		Name: "podinfo",
		// TODO: (skramaja): app-netutil expects path to be /etc/podnetinfo, make it customizable
		MountPath: PodNetInfoDefault,
	})
	*volumes = append(*volumes, k8sv1.Volume{
		Name: "podinfo",
		VolumeSource: k8sv1.VolumeSource{
			DownwardAPI: &k8sv1.DownwardAPIVolumeSource{
				Items: []k8sv1.DownwardAPIVolumeFile{
					{
						Path: "labels",
						FieldRef: &k8sv1.ObjectFieldSelector{
							APIVersion: "v1",
							FieldPath:  "metadata.labels",
						},
					},
					{
						Path: "annotations",
						FieldRef: &k8sv1.ObjectFieldSelector{
							APIVersion: "v1",
							FieldPath:  "metadata.annotations",
						},
					},
				},
			},
		},
	})
}

func (t *templateService) renderLaunchManifest(vmi *v1.VirtualMachineInstance, imageIDs map[string]string, tempPod bool) (*k8sv1.Pod, error) {
	// ...
	if util.IsVhostuserVmi(vmi) {
		// ...
		addVhostuserVolumes(&volumeMounts, &volumes)
		addPodInfoVolumes(&volumeMounts, &volumes)
		// ...
	}
	// ...
}
```
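The comments above say that app-netutil reads the Pod's annotations from the downward API files under `/etc/podnetinfo`. The Go sketch below shows one way such a file could be parsed. It assumes kubelet's format of one `key="value"` line per entry, with Go-style quoting of the value. `parseDownwardAPIFile` is a hypothetical helper, not app-netutil's actual code.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseDownwardAPIFile parses the contents of a downward API "labels" or
// "annotations" file, assuming one key="quoted value" pair per line.
// Note: bufio.Scanner rejects lines longer than its 64 KiB default buffer.
func parseDownwardAPIFile(content string) (map[string]string, error) {
	result := map[string]string{}
	scanner := bufio.NewScanner(strings.NewReader(content))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if line == "" {
			continue
		}
		// Keys cannot contain '=', so split on the first one.
		key, quoted, found := strings.Cut(line, "=")
		if !found {
			return nil, fmt.Errorf("malformed line: %q", line)
		}
		value, err := strconv.Unquote(quoted)
		if err != nil {
			return nil, fmt.Errorf("bad value for %s: %w", key, err)
		}
		result[key] = value
	}
	return result, scanner.Err()
}
```

The value stored under the network-status annotation key is JSON, and it can then be decoded to find the vhost-user socket of each interface.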

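This produces a Pod spec along the lines of the following simplified example. The volume name and mount path here are illustrative and differ from the constants above.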

```yaml
volumes:
  - name: vhostuser-sockets
    emptyDir: {}
containers:
  - name: vm
    image: vm-vhostuser:latest
    volumeMounts:
      - name: vhostuser-sockets
        mountPath: /var/run/vm
```

Preparing the libvirt domain XML definition

pkg/virt-launcher/virtwrap/converter/network.go

```go
// ...
} else if iface.Vhostuser != nil {
	networks := map[string]*v1.Network{}
	cniNetworks := map[string]int{}
	multusNetworkIndex := 1
	for _, network := range vmi.Spec.Networks {
		numberOfSources := 0
		if network.Pod != nil {
			numberOfSources++
		}
		if network.Multus != nil {
			if network.Multus.Default {
				// default network is eth0
				cniNetworks[network.Name] = 0
			} else {
				cniNetworks[network.Name] = multusNetworkIndex
				multusNetworkIndex++
			}
			numberOfSources++
		}
		if numberOfSources == 0 {
			return nil, fmt.Errorf("fail network %s must have a network type", network.Name)
		} else if numberOfSources > 1 {
			return nil, fmt.Errorf("fail network %s must have only one network type", network.Name)
		}
		networks[network.Name] = network.DeepCopy()
	}

	domainIface.Type = "vhostuser"
	interfaceName := GetPodInterfaceName(networks, cniNetworks, iface.Name)
	vhostPath, vhostMode, err := getVhostuserInfo(interfaceName, c)
	if err != nil {
		log.Log.Errorf("Failed to get vhostuser interface info: %v", err)
		return nil, err
	}
	// The last component of the socket path becomes the target device name.
	vhostPathParts := strings.Split(vhostPath, "/")
	vhostDevice := vhostPathParts[len(vhostPathParts)-1]
	domainIface.Source = api.InterfaceSource{
		Type: "unix",
		Path: vhostPath,
		Mode: vhostMode,
	}
	domainIface.Target = &api.InterfaceTarget{
		Device: vhostDevice,
	}
	//var vhostuserQueueSize uint32 = 1024
	domainIface.Driver = &api.InterfaceDriver{
		//RxQueueSize: &vhostuserQueueSize,
		//TxQueueSize: &vhostuserQueueSize,
	}
}
```

```go
func getVhostuserInfo(ifaceName string, c *ConverterContext) (string, string, error) {
	if c.PodNetInterfaces == nil {
		err := fmt.Errorf("PodNetInterfaces cannot be nil for vhostuser interface")
		return "", "", err
	}
	// Find the vhost device that the userspace CNI reported for this pod interface.
	for _, iface := range c.PodNetInterfaces.Interface {
		if iface.DeviceType == "vhost" && iface.NetworkStatus.Interface == ifaceName {
			return iface.NetworkStatus.DeviceInfo.VhostUser.Path, iface.NetworkStatus.DeviceInfo.VhostUser.Mode, nil
		}
	}
	err := fmt.Errorf("Unable to get vhostuser interface info for %s", ifaceName)
	return "", "", err
}

func GetPodInterfaceName(networks map[string]*v1.Network, cniNetworks map[string]int, ifaceName string) string {
	// Guard against an interface without a matching network entry.
	network, ok := networks[ifaceName]
	if ok && network.Multus != nil && !network.Multus.Default {
		// multus pod interfaces named netX
		return fmt.Sprintf("net%d", cniNetworks[ifaceName])
	}
	return PodInterfaceNameDefault
}
```

This generates libvirt domain XML similar to the following:

```xml
<interface type='vhostuser'>
  <mac address='00:00:00:0A:30:89'/>
  <source type='unix' path='/var/run/vm/sock' mode='server'/>
  <model type='virtio'/>
  <driver queues='2'>
    <host mrg_rxbuf='off'/>
  </driver>
</interface>
```

Changing the KubeVirt API

staging/src/kubevirt.io/api/core/v1/schema.go

```go
type InterfaceBindingMethod struct {
	Bridge     *InterfaceBridge     `json:"bridge,omitempty"`
	Slirp      *InterfaceSlirp      `json:"slirp,omitempty"`
	Masquerade *InterfaceMasquerade `json:"masquerade,omitempty"`
	SRIOV      *InterfaceSRIOV      `json:"sriov,omitempty"`
	Vhostuser  *InterfaceVhostuser  `json:"vhostuser,omitempty"`
	Macvtap    *InterfaceMacvtap    `json:"macvtap,omitempty"`
}

// InterfaceVhostuser selects the vhost-user (DPDK) binding; it carries no options.
type InterfaceVhostuser struct{}
```

This enables a VirtualMachineInstance manifest like the following:

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstance
metadata:
  name: vm-trex-1
spec:
  domain:
    devices:
      interfaces:
        - name: default
          masquerade: {}
        - name: vhost-user-net-1
          vhostuser: {}
  networks:
    - name: default
      pod: {}
    - name: vhost-user-net-1
      multus:
        networkName: userspace-ovs-net-1
```
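Given how `GetPodInterfaceName` works, a vhostuser interface is normally paired with a non-default Multus network, which becomes `netX` in the pod. A pre-flight check along those lines could look like the Go sketch below. The rule itself, the `checkVhostuserNetworks` function and the simplified types are all assumptions for illustration, not existing KubeVirt validation.

```go
package main

import "fmt"

// Hypothetical, simplified views of spec.domain.devices.interfaces and spec.networks.
type vmiInterface struct {
	Name      string
	Vhostuser bool
}

type vmiNetwork struct {
	Name          string
	Pod           bool
	MultusName    string // empty when the network is not Multus-backed
	MultusDefault bool
}

// checkVhostuserNetworks enforces an assumed rule: every vhostuser interface
// must match, by name, a non-default Multus network.
func checkVhostuserNetworks(ifaces []vmiInterface, nets []vmiNetwork) error {
	byName := make(map[string]vmiNetwork, len(nets))
	for _, n := range nets {
		byName[n.Name] = n
	}
	for _, i := range ifaces {
		if !i.Vhostuser {
			continue
		}
		n, ok := byName[i.Name]
		switch {
		case !ok:
			return fmt.Errorf("interface %s has no matching network", i.Name)
		case n.MultusName == "" || n.MultusDefault:
			return fmt.Errorf("interface %s: vhostuser needs a non-default multus network", i.Name)
		}
	}
	return nil
}
```

Applied to the manifest above, the check passes. Pointing `vhost-user-net-1` at `pod: {}` instead would be rejected.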

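Adding DPDK to KubeVirt turns out not to be very complicated. It mostly means adding DPDK-specific pieces to KubeVirt's automated workflow: the Pod YAML and the VM's domain XML definition. The real work is validating the DPDK functionality, which I will cover in a follow-up article.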

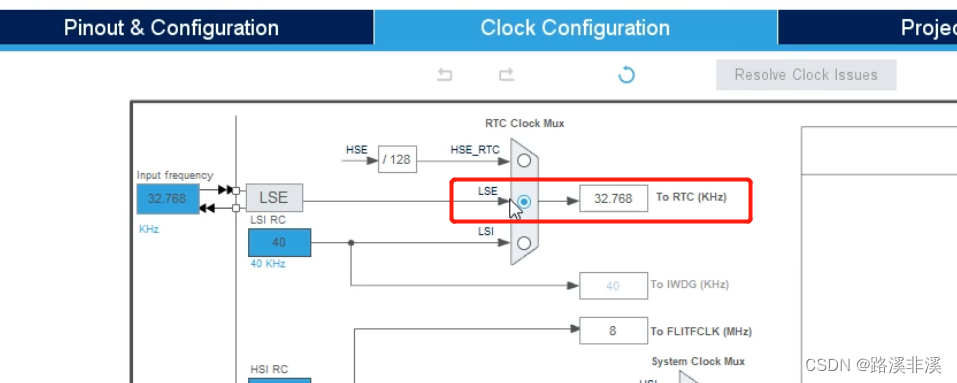

Appendix: DPDK vHost User Ports

Open vSwitch provides two types of vHost User ports:

- vhost-user (dpdkvhostuser)

- vhost-user-client (dpdkvhostuserclient)

vHost User uses a client-server model. The server creates, manages and destroys the vHost User sockets, and the client connects to the server.

For vhost-user ports, Open vSwitch acts as the server and QEMU the client.

```shell
ovs-vsctl add-port br0 vhost-user-1 -- set Interface vhost-user-1 type=dpdkvhostuser
```

The corresponding libvirt XML definition:

```xml
<interface type='vhostuser'>
  <mac address='00:00:00:00:00:01'/>
  <source type='unix' path='/usr/local/var/run/openvswitch/dpdkvhostuser0' mode='client'/>
  <model type='virtio'/>
  <driver queues='2'>
    <host mrg_rxbuf='off'/>
  </driver>
</interface>
```

For vhost-user-client ports, Open vSwitch acts as the client and QEMU the server.

```shell
VHOST_USER_SOCKET_PATH=/path/to/socket
ovs-vsctl add-port br0 vhost-client-1 -- set Interface vhost-client-1 type=dpdkvhostuserclient options:vhost-server-path=$VHOST_USER_SOCKET_PATH
```

The corresponding libvirt XML definition:

```xml
<interface type='vhostuser'>
  <mac address='00:00:00:0A:30:89'/>
  <source type='unix' path='/var/run/vm/sock' mode='server'/>
  <model type='virtio'/>
  <driver queues='2'>
    <host mrg_rxbuf='off'/>
  </driver>
</interface>
```
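The two modes differ only in which side owns the socket. libvirt's `mode` attribute describes QEMU's own role, which is why the first XML uses `mode='client'` and the second uses `mode='server'`. Below is a small Go sketch of that mapping; `rolesFor` and `vhostRoles` are hypothetical names.

```go
package main

import "fmt"

// vhostRoles records which side creates the vhost-user socket ("server")
// and which side connects to it ("client").
type vhostRoles struct {
	OVS  string
	QEMU string
}

// libvirtMode is the value for <source mode=...>: libvirt states QEMU's role.
func (r vhostRoles) libvirtMode() string { return r.QEMU }

// rolesFor maps an OVS vhost-user port type to the roles of OVS and QEMU.
func rolesFor(ovsPortType string) (vhostRoles, error) {
	switch ovsPortType {
	case "dpdkvhostuser":
		// OVS creates the socket; QEMU connects to it.
		return vhostRoles{OVS: "server", QEMU: "client"}, nil
	case "dpdkvhostuserclient":
		// QEMU creates the socket; OVS connects to it.
		return vhostRoles{OVS: "client", QEMU: "server"}, nil
	default:
		return vhostRoles{}, fmt.Errorf("unknown vhost-user port type %q", ovsPortType)
	}
}
```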

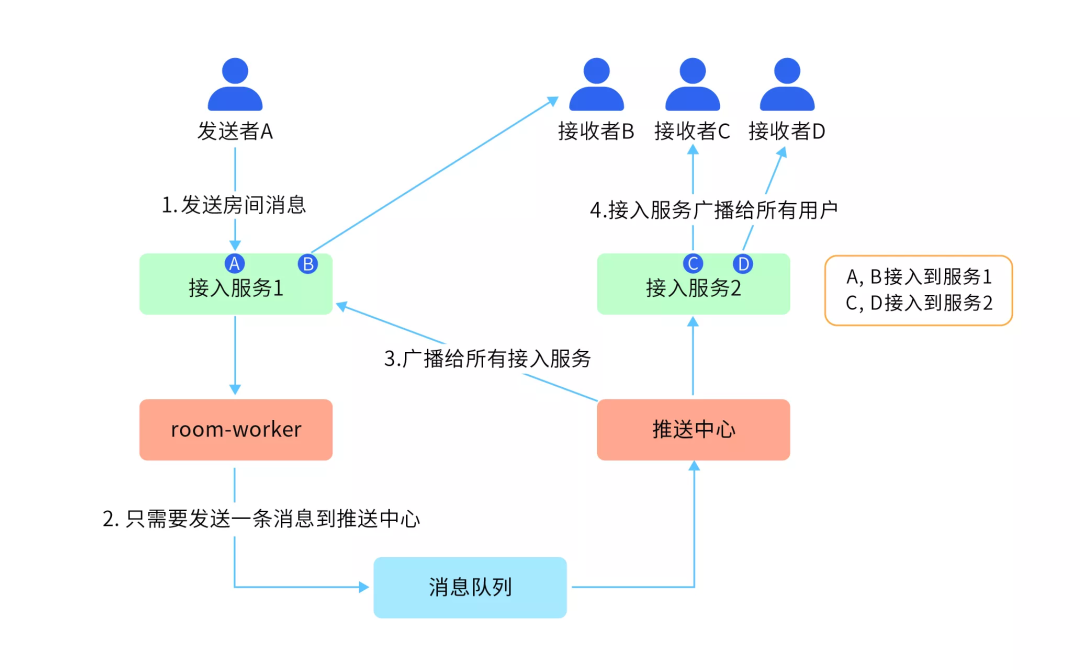

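OVS-DPDK has deprecated the dpdkvhostuser mode because QEMU does not support dynamic reconnection in this mode. With dpdkvhostuser, restarting OVS also requires restarting the VM.

In DPDK vhostuserclient mode, QEMU acts as the server and creates the vhost-user socket file, while OVS acts as the client. Restarting OVS does not affect the VM, because OVS automatically reconnects to the socket after it restarts.

Because the old dpdkvhostuser mode is deprecated, the KubeVirt implementation described here supports only the DPDK vhostuserclient mode.

OVS-DPDK uses the vhost-user socket to bypass kernel space, which improves packet-processing performance for applications. It requires hugepages to be enabled, so that packet buffers can be shared between the host and the guest.

The userspace CNI uses Multus CNI to add an extra DPDK network, and shares the vhost-user socket between OVS-DPDK and KubeVirt (QEMU).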

Original article: https://cloud.tencent.com/developer/article/2175858
