Reference FAQ: AM62x & AM64x: How to use CCS to debug a running M4F core that was started by Linux?

Problem log:

1. Boot the board in SD card boot mode, with Linux running on it.

After Linux has booted, run the echo test. Here rproc id 9 denotes the M4F core:

```text
am64xx-evm login: root
root@am64xx-evm:~# rpmsg_char_simple -r 9 -n 3
Created endpt device rpmsg-char-9-1035, fd = 3 port = 1024
Exchanging 3 messages with rpmsg device ti.ipc4.ping-pong on rproc id 9 ...
Sending message #0: hello there 0!
Receiving message #0: hello there 0!
Sending message #1: hello there 1!
Receiving message #1: hello there 1!
Sending message #2: hello there 2!
Receiving message #2: hello there 2!
Communicated 3 messages successfully on rpmsg-char-9-1035
TEST STATUS: PASSED
```

The output below looks suspicious: the test reports PASSED, yet every received message is empty:

```text
root@am64xx-evm:~# rpmsg_char_simple -r 2 -n 3
Created endpt device rpmsg-char-2-1042, fd = 3 port = 1024
Exchanging 3 messages with rpmsg device ti.ipc4.ping-pong on rproc id 2 ...
Sending message #0: hello there 0!
Receiving message #0:
Sending message #1: hello there 1!
Receiving message #1:
Sending message #2: hello there 2!
Receiving message #2:
Communicated 3 messages successfully on rpmsg-char-2-1042
TEST STATUS: PASSED
```
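Judging by this log, the PASSED status does not seem to depend on the echoed payload, since every reply is empty. A stricter client-side check would compare each reply with the message that was sent. The sketch below is only an illustration; `echo_matches` is a hypothetical helper and is not part of rpmsg_char_simple.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: true only if the reply has the same length and the
 * same bytes as the message that was sent. An empty reply to a non-empty
 * message (as in the log above) is reported as a mismatch. */
bool echo_matches(const char *sent, size_t sentLen,
                  const char *reply, size_t replyLen)
{
    if (sentLen != replyLen) {
        return false;           /* length differs, e.g. an empty reply */
    }
    return memcmp(sent, reply, sentLen) == 0;
}
```

For example, `echo_matches("hello there 0!", 14, "", 0)` returns false, so an empty echo would be flagged instead of counted as a success.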

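Next, debug from the CCS side using the hello_world_am64x-evm_m4fss0-0_nortos_ti-arm-clang example.

After running, execution gets stuck at address 0xfd38.

After ending the debug session, running the test in the Linux terminal still produces correct output: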

```text
root@am64xx-evm:~# rpmsg_char_simple -r 9 -n 3
Created endpt device rpmsg-char-9-1573, fd = 3 port = 1024
Exchanging 3 messages with rpmsg device ti.ipc4.ping-pong on rproc id 9 ...
Sending message #0: hello there 0!
Receiving message #0: hello there 0!
Sending message #1: hello there 1!
Receiving message #1: hello there 1!
Sending message #2: hello there 2!
Receiving message #2: hello there 2!
Communicated 3 messages successfully on rpmsg-char-9-1573
TEST STATUS: PASSED
```

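Next, use Load Program instead: the program loads correctly and prints its output.

However, the test run from the Linux terminal then hangs after the first message, presumably because the loaded hello_world image has replaced the RPMsg echo firmware on the M4F, so no reply is ever sent back: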

```text
root@am64xx-evm:~# rpmsg_char_simple -r 9 -n 3
Created endpt device rpmsg-char-9-1720, fd = 3 port = 1024
Exchanging 3 messages with rpmsg device ti.ipc4.ping-pong on rproc id 9 ...
Sending message #0: hello there 0!
```
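The hang above looks like the Linux side waiting indefinitely for a reply that never arrives. If you write your own test client, one way to turn this into a visible error is to bound each read with POSIX `poll()`, assuming the endpoint is an ordinary file descriptor (as a character device is). `read_with_timeout` is a hypothetical helper; rpmsg_char_simple's own implementation is not shown in this note.

```c
#include <errno.h>
#include <poll.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical helper: wait at most timeout_ms for fd to become readable,
 * then read up to len bytes. Returns the result of read(), or -1 with
 * errno = ETIMEDOUT if nothing arrived in time, or -1 on poll() failure. */
ssize_t read_with_timeout(int fd, void *buf, size_t len, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN, .revents = 0 };
    int ret;

    do {
        ret = poll(&pfd, 1, timeout_ms);
    } while (ret < 0 && errno == EINTR);   /* restart the full wait if interrupted by a signal */

    if (ret < 0) {
        return -1;          /* poll() failed; errno was set by poll() */
    }
    if (ret == 0) {
        errno = ETIMEDOUT;  /* no reply within timeout_ms */
        return -1;
    }
    return read(fd, buf, len);
}
```

With this, a silent remote core produces a timeout error per message instead of blocking the client forever.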

正确做法:

-

在CCS中构建remote core project(ipc_rpmsg_echo_linux_am64x-evm_m4fss0-0_freertos_ti-arm-clang)

-

将生成的.out文件拷贝到Linux filesystem下的/lib/firmware,并更新links。

root@am64xx-evm:~# head /sys/class/remoteproc/remoteproc*/name ==> /sys/class/remoteproc/remoteproc0/name <==5000000.m4fss //可以确定M4F的remoteproc是0 ... root@am64xx-evm:~# echo stop > /sys/class/remoteproc/remoteproc0/state [ 263.325676] remoteproc remoteproc0: stopped remote processor 5000000.m4fss root@am64xx-evm:/lib/firmware# ln -sf /lib/firmware/ipc_rpmsg_echo_linux_am64x-evm_m4fss0-0_freertos_ti-arm-clang.out am64-mcu-m4f0_0-fw root@am64xx-evm:/lib/firmware# ls -l /lib/firmware/ total 32452 lrwxrwxrwx 1 root root 79 Dec 14 12:22 am64-mcu-m4f0_0-fw -> /lib/firmware/ipc_rpmsg_echo_linux_am64x- evm_m4fss0-0_freertos_ti-arm-clang.out -

重启EVM,并测试RPMsg example is running properly

root@am64xx-evm:~# rpmsg_char_simple -r 9 -n 3 Created endpt device rpmsg-char-9-1034, fd = 3 port = 1024 Exchanging 3 messages with rpmsg device ti.ipc4.ping-pong on rproc id 9 ... Sending message #0: hello there 0! Receiving message #0: hello there 0! Sending message #1: hello there 1! Receiving message #1: hello there 1! Sending message #2: hello there 2! Receiving message #2: hello there 2! Communicated 3 messages successfully on rpmsg-char-9-1034 TEST STATUS: PASSED -

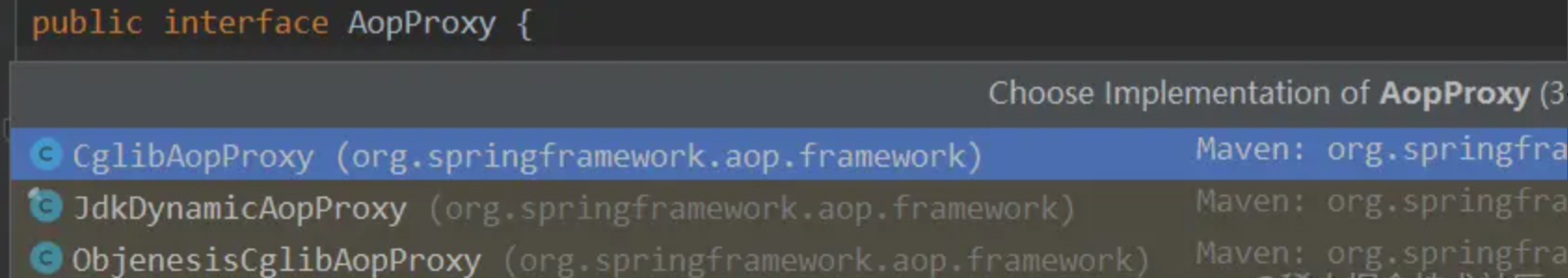

启动AM64 Target Configuration. Connect to the core BLAZAR_Cortex_M4F_0, Load Symbols

在这里打断点,并启动程序:

并在linux端启动:

在CCS里单步运行可以看到,Linux同步输出
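The `head /sys/class/remoteproc/remoteproc*/name` step above can also be done from a program. A minimal sketch, assuming the standard remoteproc sysfs layout in which every instance directory exposes a `name` attribute (the same files that `head` reads above); `find_remoteproc` and its `maxIdx` bound are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: scan <base>/remoteproc0 .. remoteproc<maxIdx-1> and
 * return the index of the first instance whose "name" attribute contains
 * `needle` (e.g. "m4fss"), or -1 if none matches. On the target, base
 * would be "/sys/class/remoteproc". */
int find_remoteproc(const char *base, const char *needle, int maxIdx)
{
    char path[256];
    char name[128];

    for (int i = 0; i < maxIdx; i++) {
        snprintf(path, sizeof(path), "%s/remoteproc%d/name", base, i);
        FILE *f = fopen(path, "r");
        if (f == NULL) {
            continue;               /* no instance with this index */
        }
        if (fgets(name, sizeof(name), f) != NULL && strstr(name, needle) != NULL) {
            fclose(f);
            return i;               /* first matching instance */
        }
        fclose(f);
    }
    return -1;
}
```

On the EVM above, `find_remoteproc("/sys/class/remoteproc", "m4fss", 8)` would be expected to return 0, matching `5000000.m4fss` being remoteproc0.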

Code walkthrough:

main.c

```c
#include <stdlib.h>
#include <kernel/dpl/DebugP.h>
#include "ti_drivers_config.h"
#include "ti_board_config.h"
#include "FreeRTOS.h"
#include "task.h"

#define MAIN_TASK_PRI  (configMAX_PRIORITIES-1) /* highest priority */

/* 16 KB budget; 4096 entries if configSTACK_DEPTH_TYPE is 4 bytes wide */
#define MAIN_TASK_SIZE (16384U/sizeof(configSTACK_DEPTH_TYPE))
StackType_t gMainTaskStack[MAIN_TASK_SIZE] __attribute__((aligned(32)));

StaticTask_t gMainTaskObj;
TaskHandle_t gMainTask;

void ipc_rpmsg_echo_main(void *args);

void freertos_main(void *args)
{
    ipc_rpmsg_echo_main(NULL);

    vTaskDelete(NULL);
}

int main(void)
{
    /* init SOC specific modules */
    System_init();
    Board_init();

    /* This task is created at highest priority, it should create more tasks and then delete itself */
    gMainTask = xTaskCreateStatic( freertos_main,   /* Pointer to the function that implements the task. */
                                  "freertos_main", /* Text name for the task. This is to facilitate debugging only. */
                                  MAIN_TASK_SIZE,  /* Stack depth in units of StackType_t, typically uint32_t on 32-bit CPUs */
                                  NULL,            /* We are not using the task parameter. */
                                  MAIN_TASK_PRI,   /* task priority, 0 is lowest priority, configMAX_PRIORITIES-1 is highest */
                                  gMainTaskStack,  /* pointer to stack base */
                                  &gMainTaskObj ); /* pointer to statically allocated task object memory */
    configASSERT(gMainTask != NULL);

    /* Start the scheduler to start the tasks executing. */
    vTaskStartScheduler();

    /* The following line should never be reached because vTaskStartScheduler()
     * will only return if there was not enough FreeRTOS heap memory available to
     * create the Idle and (if configured) Timer tasks. Heap management, and
     * techniques for trapping heap exhaustion, are described in the FreeRTOS documentation. */
    DebugP_assertNoLog(0);

    return 0;
}
```
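`MAIN_TASK_SIZE` turns a 16 KB budget into a number of stack entries, and `xTaskCreateStatic()` takes the depth in units of `StackType_t`. A small host-side check of that arithmetic, assuming both `configSTACK_DEPTH_TYPE` and `StackType_t` are 32-bit on this port; `DepthType` and `StackWord` are hypothetical stand-ins, not the FreeRTOS headers.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed stand-ins for configSTACK_DEPTH_TYPE and StackType_t (both 32-bit). */
typedef uint32_t DepthType;
typedef uint32_t StackWord;

#define STACK_BYTES    (16384U)
#define MAIN_TASK_SIZE (STACK_BYTES / sizeof(DepthType))   /* number of entries */

/* Static stack sized in StackWord entries, as passed to xTaskCreateStatic(). */
static StackWord mainTaskStack[MAIN_TASK_SIZE];

/* Bytes actually reserved by the stack array. */
size_t main_task_stack_bytes(void)
{
    return sizeof(mainTaskStack);
}
```

Under these assumptions the macro yields 4096 entries and a 16384-byte array. If the depth type were 2 bytes while `StackType_t` stayed 4 bytes, the same macro would give 8192 entries, i.e. a 32 KB array, so the `4096` figure only holds under the 4-byte assumption.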

ipc_rpmsg_echo.c

```c
#include <stdio.h>
#include <string.h>
#include <inttypes.h>
#include <kernel/dpl/ClockP.h>
#include <kernel/dpl/DebugP.h>
#include <kernel/dpl/TaskP.h>
#include <drivers/ipc_notify.h>
#include <drivers/ipc_rpmsg.h>
#include "ti_drivers_open_close.h"
#include "ti_board_open_close.h"

/* This example shows message exchange between Linux and RTOS/NORTOS cores.
 * This example also does message exchange between the RTOS/NORTOS cores themselves.
 *
 * The Linux core initiates IPC with the other cores by sending them a message.
 * The other cores echo the same message back to the Linux core.
 *
 * At the same time, all RTOS/NORTOS cores also send messages to each other
 * and reply back to each other, i.e. all CPUs send and receive messages from each other.
 *
 * This example could just as well have been NORTOS based; however, for the convenience
 * of creating two tasks to talk to two clients on the Linux side, we use FreeRTOS.
 */

/*
 * Remote core service end point
 *
 * pick any unique value on that core between 0..RPMESSAGE_MAX_LOCAL_ENDPT-1
 * the value need not be unique across cores
 *
 * The service names MUST match what Linux is expecting
 */

/* This is used to run the echo test with the Linux kernel */
#define IPC_RPMESSAGE_SERVICE_PING        "ti.ipc4.ping-pong"
#define IPC_RPMESSAGE_ENDPT_PING          (13U)

/* This is used to run the echo test with Linux user space (rpmsg char device) */
#define IPC_RPMESSAGE_SERVICE_CHRDEV      "rpmsg_chrdev"
#define IPC_RPMESSAGE_ENDPT_CHRDEV_PING   (14U)

/* Used by this core to receive the ACK replies to the messages it sends to other RTOS cores */
#define IPC_RPMESSAGE_RNDPT_ACK_REPLY     (11U)

/* maximum size that a message can have in this example */
#define IPC_RPMESSAGE_MAX_MSG_SIZE        (96u)

/*
 * Number of RP Message ping "servers" we will start,
 * - one for ping messages from the Linux kernel "sample ping" client
 * - and another for ping messages from the Linux "user space" client using "rpmsg char"
 */
#define IPC_RPMESSAGE_NUM_RECV_TASKS      (2u)

/* RPMessage objects used to receive messages */
RPMessage_Object gIpcRecvMsgObject[IPC_RPMESSAGE_NUM_RECV_TASKS];

/* RPMessage object used to send messages to other non-Linux remote cores */
RPMessage_Object gIpcAckReplyMsgObject;

/* Task priority, stack, stack size and task objects; these MUST be globals */
#define IPC_RPMESSAFE_TASK_PRI         (8U)
#define IPC_RPMESSAFE_TASK_STACK_SIZE  (8*1024U)
uint8_t gIpcTaskStack[IPC_RPMESSAGE_NUM_RECV_TASKS][IPC_RPMESSAFE_TASK_STACK_SIZE] __attribute__((aligned(32)));
TaskP_Object gIpcTask[IPC_RPMESSAGE_NUM_RECV_TASKS];

/* number of iterations of message exchange to do */
uint32_t gMsgEchoCount = 100000u;

/* non-Linux cores that exchange messages among each other */
#if defined (SOC_AM64X)
uint32_t gRemoteCoreId[] = {
    CSL_CORE_ID_R5FSS0_0,
    CSL_CORE_ID_R5FSS0_1,
    CSL_CORE_ID_R5FSS1_0,
    CSL_CORE_ID_R5FSS1_1,
    CSL_CORE_ID_M4FSS0_0,
    CSL_CORE_ID_MAX /* this value indicates the end of the array */
};
#endif

#if defined (SOC_AM62X)
uint32_t gRemoteCoreId[] = {
    CSL_CORE_ID_M4FSS0_0,
    CSL_CORE_ID_MAX /* this value indicates the end of the array */
};
#endif
```
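`gRemoteCoreId[]` is terminated by the sentinel `CSL_CORE_ID_MAX` instead of carrying an explicit length, and `ipc_rpmsg_send_messages()` later counts the peers while skipping its own core. A self-contained sketch of that pattern; the `CORE_*` values are hypothetical placeholders, not the real CSL core IDs.

```c
#include <stdint.h>

/* Hypothetical placeholder IDs; the real values come from the CSL headers. */
enum {
    CORE_R5FSS0_0 = 0,
    CORE_R5FSS0_1,
    CORE_R5FSS1_0,
    CORE_R5FSS1_1,
    CORE_M4FSS0_0,
    CORE_ID_MAX            /* sentinel marking the end of a list */
};

/* Same shape as the SOC_AM64X list above. */
static const uint32_t remoteCoreIds[] = {
    CORE_R5FSS0_0, CORE_R5FSS0_1, CORE_R5FSS1_0, CORE_R5FSS1_1,
    CORE_M4FSS0_0, CORE_ID_MAX
};

/* Count the entries before the sentinel, excluding selfId. */
uint32_t count_remote_cores(const uint32_t *ids, uint32_t selfId)
{
    uint32_t n = 0;
    for (uint32_t i = 0; ids[i] != CORE_ID_MAX; i++) {
        if (ids[i] != selfId) {
            n++;
        }
    }
    return n;
}
```

Seen from R5FSS0_0, the AM64X-shaped list yields 4 peers. A list shaped like the SOC_AM62X one, holding only the M4F, yields 0 when seen from the M4F itself.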

```c
void ipc_recv_task_main(void *args)
{
    int32_t status;
    char recvMsg[IPC_RPMESSAGE_MAX_MSG_SIZE+1]; /* +1 for NULL char in worst case */
    uint16_t recvMsgSize, remoteCoreId, remoteCoreEndPt;
    RPMessage_Object *pRpmsgObj = (RPMessage_Object *)args;

    DebugP_log("[IPC RPMSG ECHO] Remote Core waiting for messages at end point %d ... !!!\r\n",
        RPMessage_getLocalEndPt(pRpmsgObj)
        );

    /* wait for messages forever in a loop */
    while(1)
    {
        /* set 'recvMsgSize' to size of recv buffer,
         * after return `recvMsgSize` contains actual size of valid data in recv buffer
         */
        recvMsgSize = IPC_RPMESSAGE_MAX_MSG_SIZE;
        status = RPMessage_recv(pRpmsgObj,
            recvMsg, &recvMsgSize,
            &remoteCoreId, &remoteCoreEndPt,
            SystemP_WAIT_FOREVER);
        DebugP_assert(status==SystemP_SUCCESS);

        /* echo the same message string as reply */
#if 0 /* not logging this so that this does not add to the latency of message exchange */
        recvMsg[recvMsgSize] = 0; /* add a NULL char at the end of message */
        DebugP_log("%s\r\n", recvMsg);
#endif

        /* send ack to sender CPU at the sender end point */
        status = RPMessage_send(
            recvMsg, recvMsgSize,
            remoteCoreId, remoteCoreEndPt,
            RPMessage_getLocalEndPt(pRpmsgObj),
            SystemP_WAIT_FOREVER);
        DebugP_assert(status==SystemP_SUCCESS);
    }
    /* This loop will never exit */
}
```
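`ipc_recv_task_main` resets `recvMsgSize` to the buffer capacity before every `RPMessage_recv()` call, because the same variable is overwritten with the length actually received; the buffer is also one byte larger than `IPC_RPMESSAGE_MAX_MSG_SIZE` so a terminating NUL can be appended for logging. The sketch below mimics that in/out length convention with a hypothetical `copy_msg` stand-in. It does not model how the real `RPMessage_recv()` treats oversized messages; here they are simply truncated.

```c
#include <stdint.h>
#include <string.h>

#define MAX_MSG_SIZE (96u)  /* mirrors IPC_RPMESSAGE_MAX_MSG_SIZE */

/* Hypothetical stand-in for a receive call: on entry *len is the capacity
 * of dst, on return it holds the number of bytes copied. */
void copy_msg(char *dst, uint16_t *len, const char *src, uint16_t srcLen)
{
    uint16_t n = (srcLen < *len) ? srcLen : *len;  /* truncate to capacity */
    memcpy(dst, src, n);
    *len = n;
}

/* Receive one message into buf (MAX_MSG_SIZE + 1 bytes) and NUL-terminate
 * it; returns the payload length. */
uint16_t recv_as_string(char buf[MAX_MSG_SIZE + 1], const char *src, uint16_t srcLen)
{
    uint16_t len = MAX_MSG_SIZE;   /* reset before every call: copy_msg overwrites it */
    copy_msg(buf, &len, src, srcLen);
    buf[len] = '\0';               /* safe: buf has one spare byte */
    return len;
}
```

Forgetting the reset would let a short message shrink the capacity used for every later receive.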

```c
void ipc_rpmsg_send_messages(void)
{
    RPMessage_CreateParams createParams;
    uint32_t msg, i, numRemoteCores;
    uint64_t curTime;
    char msgBuf[IPC_RPMESSAGE_MAX_MSG_SIZE];
    int32_t status;
    uint16_t remoteCoreId, remoteCoreEndPt, msgSize;

    RPMessage_CreateParams_init(&createParams);
    createParams.localEndPt = IPC_RPMESSAGE_RNDPT_ACK_REPLY;
    status = RPMessage_construct(&gIpcAckReplyMsgObject, &createParams);
    DebugP_assert(status==SystemP_SUCCESS);

    numRemoteCores = 0;
    for(i=0; gRemoteCoreId[i]!=CSL_CORE_ID_MAX; i++)
    {
        if(gRemoteCoreId[i] != IpcNotify_getSelfCoreId()) /* don't count self */
        {
            numRemoteCores++;
        }
    }

    DebugP_log("[IPC RPMSG ECHO] Message exchange started with RTOS cores !!!\r\n");

    curTime = ClockP_getTimeUsec();

    for(msg=0; msg<gMsgEchoCount; msg++)
    {
        /* PRIu32 matches the uint32_t type of 'msg' */
        snprintf(msgBuf, IPC_RPMESSAGE_MAX_MSG_SIZE-1, "%" PRIu32, msg);
        msgBuf[IPC_RPMESSAGE_MAX_MSG_SIZE-1] = 0;
        msgSize = (uint16_t)(strlen(msgBuf) + 1); /* count the terminating char as well */

        /* send the same message to all cores */
        for(i=0; gRemoteCoreId[i]!=CSL_CORE_ID_MAX; i++ )
        {
            if(gRemoteCoreId[i] != IpcNotify_getSelfCoreId()) /* don't send message to self */
            {
                status = RPMessage_send(
                    msgBuf, msgSize,
                    gRemoteCoreId[i], IPC_RPMESSAGE_ENDPT_CHRDEV_PING,
                    RPMessage_getLocalEndPt(&gIpcAckReplyMsgObject),
                    SystemP_WAIT_FOREVER);
                DebugP_assert(status==SystemP_SUCCESS);
            }
        }

        /* wait for a response from all cores */
        for(i=0; gRemoteCoreId[i]!=CSL_CORE_ID_MAX; i++ )
        {
            if(gRemoteCoreId[i] != IpcNotify_getSelfCoreId()) /* no reply is expected from self */
            {
                /* set 'msgSize' to size of recv buffer,
                 * after return `msgSize` contains actual size of valid data in recv buffer
                 */
                msgSize = sizeof(msgBuf);
                status = RPMessage_recv(&gIpcAckReplyMsgObject,
                    msgBuf, &msgSize,
                    &remoteCoreId, &remoteCoreEndPt,
                    SystemP_WAIT_FOREVER);
                DebugP_assert(status==SystemP_SUCCESS);
            }
        }
    }

    curTime = ClockP_getTimeUsec() - curTime;

    /* unsigned format specifiers match the uint32_t/uint64_t arguments */
    DebugP_log("[IPC RPMSG ECHO] All echoed messages received by main core from %" PRIu32 " remote cores !!!\r\n", numRemoteCores);
    DebugP_log("[IPC RPMSG ECHO] Messages sent to each core = %" PRIu32 " \r\n", gMsgEchoCount);
    DebugP_log("[IPC RPMSG ECHO] Number of remote cores = %" PRIu32 " \r\n", numRemoteCores);
    DebugP_log("[IPC RPMSG ECHO] Total execution time = %" PRIu64 " usecs\r\n", curTime);
    DebugP_log("[IPC RPMSG ECHO] One way message latency = %" PRIu32 " nsec\r\n",
        (uint32_t)(curTime*1000u/(gMsgEchoCount*numRemoteCores*2)));

    RPMessage_destruct(&gIpcAckReplyMsgObject);
}
```
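The reported latency divides the total elapsed time by the number of one-way trips: each of the `gMsgEchoCount` iterations sends one message to every remote core and receives one echo back, i.e. `gMsgEchoCount * numRemoteCores * 2` trips. The same arithmetic as a standalone function; the zero-trip guard is an addition not present in the original code, and the numbers in the note below are illustrative, not measurements.

```c
#include <stdint.h>

/* One-way latency in ns = totalUsec * 1000 / (iterations * cores * 2).
 * The trip count is computed in 64 bits; a zero count returns 0 instead
 * of dividing by zero. */
uint32_t one_way_latency_ns(uint64_t totalUsec, uint32_t iterations, uint32_t numRemoteCores)
{
    uint64_t trips = (uint64_t)iterations * numRemoteCores * 2u;
    if (trips == 0u) {
        return 0u;  /* no messages exchanged */
    }
    return (uint32_t)(totalUsec * 1000u / trips);
}
```

For a hypothetical run of 1000000 us with 100000 iterations and 4 remote cores, this gives 1000000000 / 800000 = 1250 ns.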

```c
void ipc_rpmsg_create_recv_tasks(void)
{
    int32_t status;
    RPMessage_CreateParams createParams;
    TaskP_Params taskParams;

    RPMessage_CreateParams_init(&createParams);
    createParams.localEndPt = IPC_RPMESSAGE_ENDPT_PING;
    status = RPMessage_construct(&gIpcRecvMsgObject[0], &createParams);
    DebugP_assert(status==SystemP_SUCCESS);

    RPMessage_CreateParams_init(&createParams);
    createParams.localEndPt = IPC_RPMESSAGE_ENDPT_CHRDEV_PING;
    status = RPMessage_construct(&gIpcRecvMsgObject[1], &createParams);
    DebugP_assert(status==SystemP_SUCCESS);

    /* We need to "announce" to the Linux client, otherwise Linux does not know a service exists on this CPU.
     * This is not mandatory for RTOS clients.
     */
    status = RPMessage_announce(CSL_CORE_ID_A53SS0_0, IPC_RPMESSAGE_ENDPT_PING, IPC_RPMESSAGE_SERVICE_PING);
    DebugP_assert(status==SystemP_SUCCESS);

    status = RPMessage_announce(CSL_CORE_ID_A53SS0_0, IPC_RPMESSAGE_ENDPT_CHRDEV_PING, IPC_RPMESSAGE_SERVICE_CHRDEV);
    DebugP_assert(status==SystemP_SUCCESS);

    /* Create the tasks which will handle the ping service */
    TaskP_Params_init(&taskParams);
    taskParams.name = "RPMESSAGE_PING";
    taskParams.stackSize = IPC_RPMESSAFE_TASK_STACK_SIZE;
    taskParams.stack = gIpcTaskStack[0];
    taskParams.priority = IPC_RPMESSAFE_TASK_PRI;
    /* we use the same task function for echo but pass the appropriate rpmsg handle to it, to echo messages */
    taskParams.args = &gIpcRecvMsgObject[0];
    taskParams.taskMain = ipc_recv_task_main;

    status = TaskP_construct(&gIpcTask[0], &taskParams);
    DebugP_assert(status == SystemP_SUCCESS);

    TaskP_Params_init(&taskParams);
    taskParams.name = "RPMESSAGE_CHAR_PING";
    taskParams.stackSize = IPC_RPMESSAFE_TASK_STACK_SIZE;
    taskParams.stack = gIpcTaskStack[1];
    taskParams.priority = IPC_RPMESSAFE_TASK_PRI;
    /* we use the same task function for echo but pass the appropriate rpmsg handle to it, to echo messages */
    taskParams.args = &gIpcRecvMsgObject[1];
    taskParams.taskMain = ipc_recv_task_main;

    status = TaskP_construct(&gIpcTask[1], &taskParams);
    DebugP_assert(status == SystemP_SUCCESS);
}
```
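The two `RPMessage_announce()` calls tell Linux which service name lives at which local endpoint; on the Linux side the announced name is what selects the rpmsg driver that binds to the channel, which is why the service names must match. Conceptually the announcements amount to a small name-to-endpoint table. The sketch only illustrates that mapping; `ServiceEntry` and `lookup_endpoint` are hypothetical and are not how the SDK or the Linux rpmsg bus implement it.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    const char *name;     /* service name announced to Linux */
    uint16_t    endPt;    /* local endpoint on the remote core */
} ServiceEntry;

/* Mirrors the two RPMessage_announce() calls in ipc_rpmsg_create_recv_tasks(). */
static const ServiceEntry gServices[] = {
    { "ti.ipc4.ping-pong", 13u },   /* IPC_RPMESSAGE_ENDPT_PING */
    { "rpmsg_chrdev",      14u },   /* IPC_RPMESSAGE_ENDPT_CHRDEV_PING */
};

/* Return the endpoint announced under `name`, or -1 if there is no such service. */
int32_t lookup_endpoint(const char *name)
{
    for (size_t i = 0; i < sizeof(gServices) / sizeof(gServices[0]); i++) {
        if (strcmp(gServices[i].name, name) == 0) {
            return gServices[i].endPt;
        }
    }
    return -1;
}
```

A misspelled name simply finds nothing, which mirrors the symptom the source comment warns about when names do not match what Linux expects.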

```c
void ipc_rpmsg_echo_main(void *args)
{
    int32_t status;

    Drivers_open();
    Board_driversOpen();

    DebugP_log("[IPC RPMSG ECHO] %s %s\r\n", __DATE__, __TIME__);

    /* This API MUST be called by applications when they are ready to talk to Linux */
    status = RPMessage_waitForLinuxReady(SystemP_WAIT_FOREVER);
    DebugP_assert(status==SystemP_SUCCESS);

    /* create message receive tasks, these tasks always run and never exit */
    ipc_rpmsg_create_recv_tasks();

    /* wait for all non-Linux cores to be ready; this ensures that the messages sent below
     * won't be lost because an rpmsg end point has not yet been created at a remote core
     */
    IpcNotify_syncAll(SystemP_WAIT_FOREVER);

    /* Due to the "if" condition below, only one non-Linux core sends messages to all other non-Linux cores.
     * This is done mainly to show deterministic latency measurement.
     */
    if( IpcNotify_getSelfCoreId() == CSL_CORE_ID_R5FSS0_0 )
    {
        ipc_rpmsg_send_messages();
    }

    /* exit from this task; vTaskDelete() is called outside this function, so simply return */
    Board_driversClose();
    /* We don't close drivers since threads are running in the background */
    /* Drivers_close(); */
}
```