Reading notes on "On clustering using random walks"

1. Problem modeling

1.1 Problem description

Let $G(V,E,\omega)$ be a weighted graph, where $V$ is the set of nodes, $E$ is the set of edges between nodes in $V$, and $\omega: E \to \mathbb{R}^{+}$ is the weighting function that measures the similarity between pairs of items (a higher value means more similar).

$$p_{ij} = \frac{\omega(i,j)}{d_i}$$

$$d_i = \sum_{k=1}^n\omega(i,k)$$

$M^G \in \mathbb{R}^{n \times n}$ is the associated transition matrix,

$$M^G_{ij} = \begin{cases} p_{ij} & \langle i,j \rangle \in E \\ 0 & \textrm{otherwise} \end{cases}$$

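Question:

- $\omega$ represents the similarity between nodes, but in practice we only have an undirected graph that records whether two nodes are connected. How do we construct $\omega$ from the information we have?

Answer: the similarity here can be taken to be the weight of the edge between two nodes, so $M^G$ is simply the adjacency matrix after each row $i$ has been divided by its row sum $d_i$.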
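As a minimal sketch of the answer above, assuming the graph is stored as a dense NumPy adjacency (or weight) matrix, $M^G$ can be obtained by dividing each row by its sum $d_i$. The function name `transition_matrix` and the 4-node path graph are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def transition_matrix(weights):
    """Row-normalize a non-negative weight (or 0/1 adjacency) matrix.

    Entry (i, j) of the result is p_ij = w(i, j) / d_i, where d_i is the
    sum of row i. Non-edges have weight 0 and therefore stay 0.
    """
    weights = np.asarray(weights, dtype=float)
    degrees = weights.sum(axis=1, keepdims=True)  # d_i for every node i
    if np.any(degrees == 0):
        raise ValueError("isolated node: d_i = 0, so p_ij is undefined")
    return weights / degrees

# Hypothetical undirected graph: the path 0 - 1 - 2 - 3 (unit weights).
adjacency = np.array([[0, 1, 0, 0],
                      [1, 0, 1, 0],
                      [0, 1, 0, 1],
                      [0, 0, 1, 0]])
M = transition_matrix(adjacency)
print(M)
```

Every row of `M` sums to 1, so row $i$ can be read as the one-step distribution of a walk that starts at node $i$.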

This part is tricky: I could not find anywhere in the paper how $P^{k}_{\textrm{visit}}(i)$ is actually computed, and I was stuck on it for a long time.

The original text describes it like this:

Now, denote by $P^k_{visit}(i) \in \mathbb{R}^n$ the vector whose j-th component is the probability that a random walk originating at i will visit node j in its k-th step. Thus, $P^k_{visit}(i)$ is the i-th row in the matrix $(M^G)^k$, the k'th power of $M^G$.

So we know how $M^G$ is computed, but what about $(M^G)^k$? The paper calls it "the k'th power of $M^G$", which I take to mean the $k$-th power of the matrix $M^G$ under matrix multiplication (not an element-wise power).

$P^k_{visit}(i)$ is the i-th row in the matrix $(M^G)^k$,

$$P^k_{visit}(i) = (M^G)^k_i$$

$$(M^G)^k=\{P^k_{visit}(1)^{\mathbf{T}}, P^k_{visit}(2)^{\mathbf{T}}, \dots, P^k_{visit}(n)^{\mathbf{T}}\}$$
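To make the "k-th power" reading concrete, here is a small sketch, assuming $M^G$ is held as a dense NumPy array. `visit_probabilities` and the 3-node path are hypothetical names and data chosen only for illustration. It uses `np.linalg.matrix_power` (repeated matrix multiplication) and contrasts it with the element-wise power `M ** k`, which is a different operation.

```python
import numpy as np

def visit_probabilities(M, i, k):
    """Return P^k_visit(i), i.e. row i of the k-th matrix power of M."""
    return np.linalg.matrix_power(M, k)[i]

# Transition matrix of a hypothetical 3-node path 0 - 1 - 2.
M = np.array([[0.0, 1.0, 0.0],
              [0.5, 0.0, 0.5],
              [0.0, 1.0, 0.0]])

# Matrix power: a walk from node 0 is at node 0 or node 2
# with probability 0.5 each after two steps.
two_step = visit_probabilities(M, 0, 2)
print(two_step)

# Element-wise power: merely squares each entry of M, giving (0, 1, 0)
# for row 0, which is not a two-step distribution.
print((M ** 2)[0])
```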

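Note: up to this point the setup is essentially the same as in the Markov Cluster algorithm (MCL). MCL keeps iterating until the matrix no longer changes; here the authors, considering the computational cost, instead use the sum of the results of the first $k$ steps as a substitute.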

We now offer two methods for performing the edge separation, both based on deterministic analysis of random walks.

Edge separation, sharpening

NS: Separation by neighborhood similarity.

CE: Separation by circular escape.

the weighted neighborhood

bipartite subgraph

$$P^{\leq k}_{\textrm{visit}}(v) = \sum_{i=1}^{k} P^{i}_{\textrm{visit}}(v)$$
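A minimal sketch of this sum, assuming a dense row-stochastic NumPy array; `cumulative_visit` is a hypothetical helper name. It accumulates successive powers $M, M^2, \dots, M^k$ in one loop instead of recomputing each power from scratch.

```python
import numpy as np

def cumulative_visit(M, k):
    """Return the matrix whose row v is P^{<=k}_visit(v) = sum_{i=1..k} (M^i)[v]."""
    M = np.asarray(M, dtype=float)
    power = np.eye(M.shape[0])   # M^0
    total = np.zeros_like(M)
    for _ in range(k):
        power = power @ M        # M^1, M^2, ..., M^k in turn
        total += power
    return total

# Transition matrix of a hypothetical 3-node path 0 - 1 - 2.
M = np.array([[0.0, 1.0, 0.0],
              [0.5, 0.0, 0.5],
              [0.0, 1.0, 0.0]])
P_le_3 = cumulative_visit(M, 3)
print(P_le_3)
```

Each row of the result sums to $k$, because it adds up $k$ probability vectors.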

2. NS: Separation by neighborhood similarity.

Now, in order to estimate the closeness of two nodes $v$ and $u$, we fix some small $k$ (e.g. $k = 3$) and compare $P^{\leq k}_{\textrm{visit}}(v)$ and $P^{\leq k}_{\textrm{visit}}(u)$. The smaller the difference, the closer $u$ and $v$ are.

$$NS(G) \xlongequal{dfn} G_s(V, E, \omega_s),$$

where

$$\forall \langle v, u \rangle \in E, \quad \omega_s(u, v) = sim^k(P^{\leq k}_{visit}(v),P^{\leq k}_{visit}(u))$$

$sim^k(x,y)$ is some similarity measure of the vectors $\mathrm{x}$ and $\mathrm{y}$, whose value increases as $\mathrm{x}$ and $\mathrm{y}$ become more similar.

A suitable choice for $sim^k(x,y)$ is:

$$f^k(x,y) \xlongequal{dfn} \exp(2k - \|x - y\|_{L_1}) - 1 \tag{1}$$

$$\|x - y\|_{L_1} = \sum_{i=1}^n|x_i-y_i|$$
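Equation (1) can be sketched in a few lines, assuming the two vectors are NumPy arrays (or lists) of equal length; `sim_l1` is a hypothetical name. When each $P^i_{visit}$ is a probability vector, $x$ and $y$ each sum to $k$, so $\|x-y\|_{L_1} \le 2k$ and the value is never negative: it is 0 for vectors with disjoint support and $e^{2k}-1$ for identical vectors.

```python
import numpy as np

def sim_l1(x, y, k):
    """f^k(x, y) = exp(2k - ||x - y||_1) - 1, as in equation (1)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    l1_distance = np.abs(x - y).sum()
    return np.exp(2 * k - l1_distance) - 1.0

# Illustrative vectors that each sum to k = 3.
print(sim_l1([3.0, 0.0], [0.0, 3.0], k=3))  # disjoint support: distance 2k
print(sim_l1([1.5, 1.5], [1.5, 1.5], k=3))  # identical vectors: distance 0
```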

Another choice is:

$$\cos(x,y)= \frac{(x,y)}{\sqrt{(x,x)} \cdot \sqrt{(y,y)}} \tag{2}$$

where $(\cdot,\cdot)$ denotes the inner product.
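Putting the pieces of the NS operator together, the following is a rough sketch rather than the paper's implementation. It assumes a dense symmetric weight matrix with no isolated nodes and uses the cosine measure of equation (2). The names `cosine`, `ns_sharpen` and the two-triangle test graph are assumptions made for illustration.

```python
import numpy as np

def cosine(x, y):
    """Equation (2): inner product divided by the product of the norms."""
    return float(np.dot(x, y) / (np.sqrt(np.dot(x, x)) * np.sqrt(np.dot(y, y))))

def ns_sharpen(weights, k=3, sim=cosine):
    """Reweight every existing edge <u, v> by sim(P<=k(u), P<=k(v))."""
    weights = np.asarray(weights, dtype=float)
    M = weights / weights.sum(axis=1, keepdims=True)   # transition matrix
    power, cumulative = np.eye(len(M)), np.zeros_like(M)
    for _ in range(k):                                 # sum of M^1 .. M^k
        power = power @ M
        cumulative += power
    sharpened = np.zeros_like(weights)
    for u, v in zip(*np.nonzero(weights)):             # existing edges only
        sharpened[u, v] = sim(cumulative[u], cumulative[v])
    return sharpened

# Hypothetical graph: triangles {0,1,2} and {3,4,5} joined by the bridge 2 - 3.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
W = np.zeros((6, 6))
for u, v in edges:
    W[u, v] = W[v, u] = 1.0

sharpened = ns_sharpen(W, k=3)
print(sharpened[0, 1], sharpened[2, 3])  # intra-triangle edge vs. bridge edge
```

On this toy graph the bridge edge receives a lower weight than the edges inside a triangle, which is the sharpening effect the NS operator is meant to produce.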

3.2 CE: Separation by circular escape.

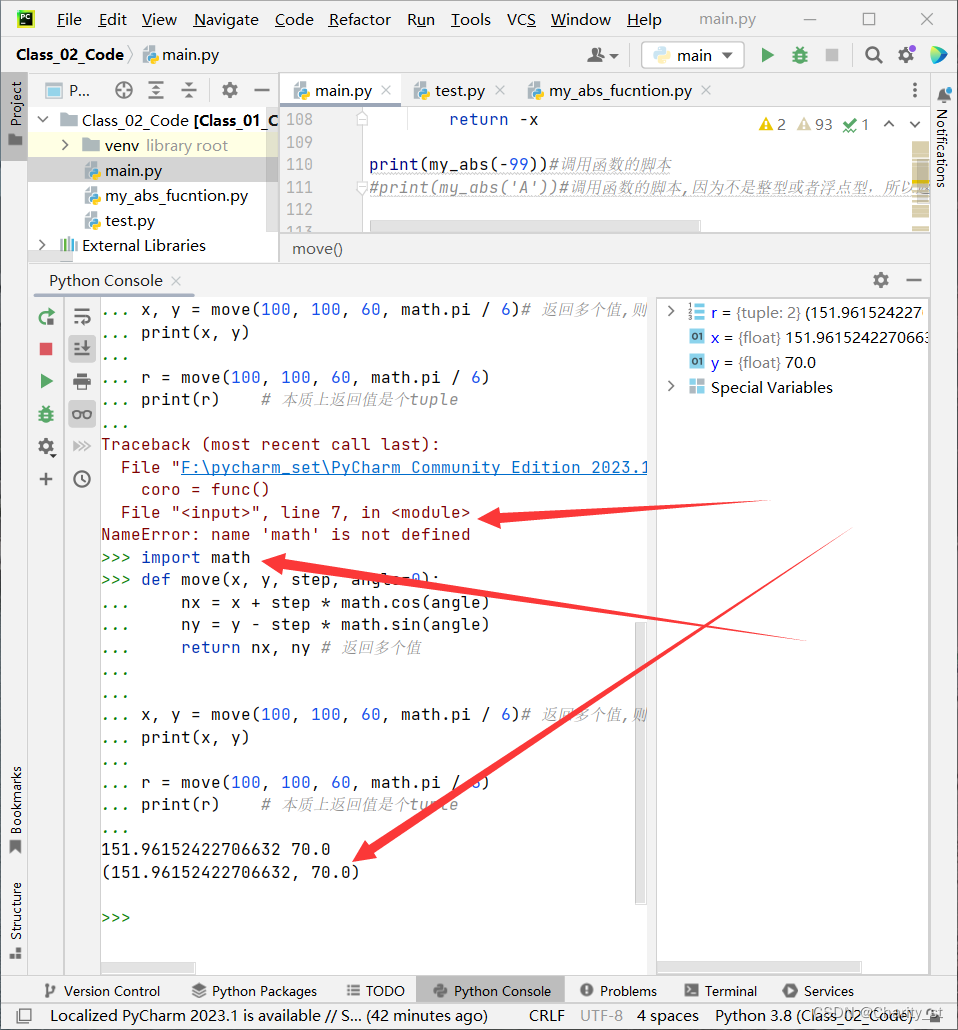

3.3 Code implementation

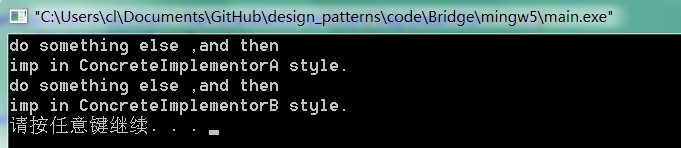

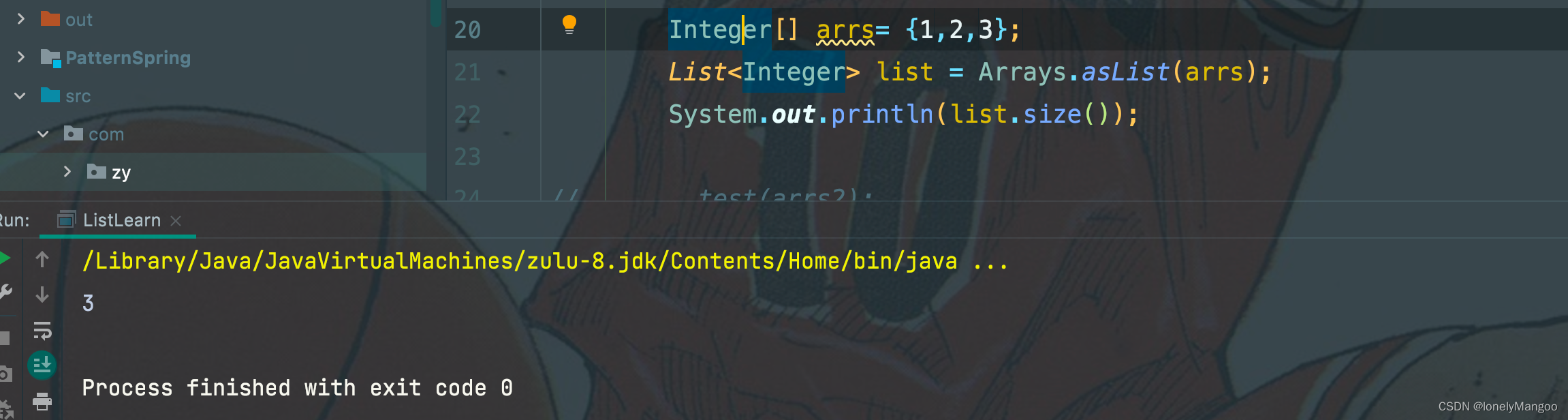

import numpy as np


def markovCluster(adjacencyMat, dimension, numIter, power=2, inflation=2):
    # Normalize each column to sum to 1 (column-stochastic matrix).
    columnSum = np.sum(adjacencyMat, axis=0)
    probabilityMat = adjacencyMat / columnSum

    # Expand by taking the e-th matrix power of the matrix (e = power).
    def _expand(probabilityMat, power):
        expandMat = probabilityMat
        for i in range(power - 1):
            expandMat = np.dot(expandMat, probabilityMat)
        return expandMat

    expandMat = _expand(probabilityMat, power)

    # Inflate: raise every entry to the inflation power element-wise,
    # then renormalize each column.
    def _inflate(expandMat, inflation):
        powerMat = expandMat
        for i in range(inflation - 1):
            powerMat = powerMat * expandMat
        inflateColumnSum = np.sum(powerMat, axis=0)
        inflateMat = powerMat / inflateColumnSum
        return inflateMat

    inflateMat = _inflate(expandMat, inflation)

    # Alternate expansion and inflation for numIter further rounds.
    for i in range(numIter):
        expand = _expand(inflateMat, power)
        inflateMat = _inflate(expand, inflation)

    print(inflateMat)
    # Boolean mask of the entries that are not exactly zero.
    print(np.zeros((dimension, dimension)) != inflateMat)


if __name__ == "__main__":
    dimension = 4
    numIter = 10
    adjacencyMat = np.array([[1, 1, 1, 1],
                             [1, 1, 0, 1],
                             [1, 0, 1, 0],
                             [1, 1, 0, 1]])
    # Alternative 7-node example (set dimension = 7 when using it):
    # adjacencyMat = np.array([[1, 1, 1, 1, 0, 0, 0],
    #                          [1, 1, 1, 1, 1, 0, 0],
    #                          [1, 1, 1, 1, 0, 0, 0],
    #                          [1, 1, 1, 1, 0, 0, 0],
    #                          [0, 1, 0, 0, 1, 1, 1],
    #                          [0, 0, 0, 0, 1, 1, 1],
    #                          [0, 0, 0, 0, 1, 1, 1],
    #                          ])
    markovCluster(adjacencyMat, dimension, numIter)

[[1.00000000e+000 1.00000000e+000 1.00000000e+000 1.00000000e+000]
 [5.23869755e-218 5.23869755e-218 5.23869755e-218 5.23869755e-218]
 [0.00000000e+000 0.00000000e+000 0.00000000e+000 0.00000000e+000]
 [5.23869755e-218 5.23869755e-218 5.23869755e-218 5.23869755e-218]]
[[ True  True  True  True]
 [ True  True  True  True]
 [False False False False]
 [ True  True  True  True]]

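From the boolean mask we can read off the clustering $\{\{1,2,4\},\{3\}\}$. Note that this reading treats the entries 5.2e-218 as nonzero, although they are effectively zero. Under the usual MCL reading, where the nonzero columns of a row are the nodes attracted to that row's node, all four columns attach to node 1.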
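The matrix printed above contains entries of order 1e-218, which differ from exact zero only by floating-point residue. As a hedged sketch, assuming the usual MCL convention for a column-stochastic matrix (each nonzero row is an attractor, and the columns with nonzero entries in that row are its cluster members), clusters can be read with a tolerance instead of an exact comparison. `clusters_from_mcl` and the threshold `tol` are hypothetical choices, and node indices are 0-based here.

```python
import numpy as np

def clusters_from_mcl(result, tol=1e-9):
    """Group column indices by attractor row; entries <= tol count as zero."""
    clusters = []
    for row in np.asarray(result, dtype=float):
        members = tuple(int(j) for j in np.nonzero(row > tol)[0])
        if members and members not in clusters:   # skip empty and duplicate rows
            clusters.append(members)
    return clusters

# The 4x4 matrix from the printed output above.
result = np.array([[1.0, 1.0, 1.0, 1.0],
                   [5.23869755e-218] * 4,
                   [0.0] * 4,
                   [5.23869755e-218] * 4])
print(clusters_from_mcl(result))  # [(0, 1, 2, 3)]
```

With this reading the 4-node example forms a single cluster around node 0 (node 1 in the 1-based numbering used in the text).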

Spectral clustering

MCL

MCL GitHub