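Resource Center

Introduction to the Resource Center

The Resource Center provides file management, UDF management, and task group management.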

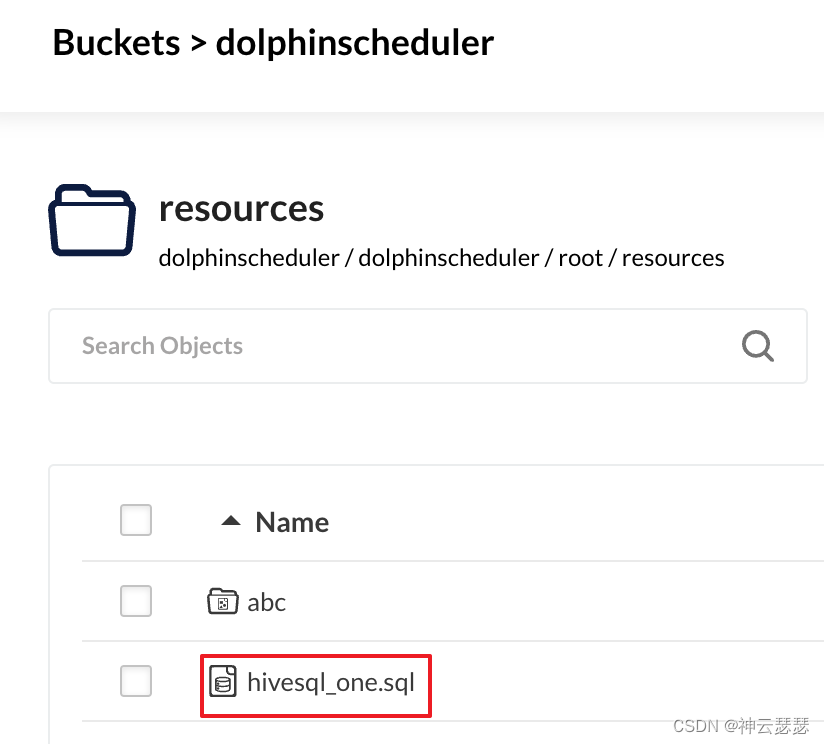

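File management provides access to the Hive SQL files to be executed.

UDF management holds the JAR packages of custom UDFs run by Flink, as well as the JAR packages of custom Hive UDFs.

The *.sql and *.jar files above can be thought of as resources. These resources need somewhere to be stored; this article uses MinIO as the storage backend.

Using MinIO as the Resource Center storage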

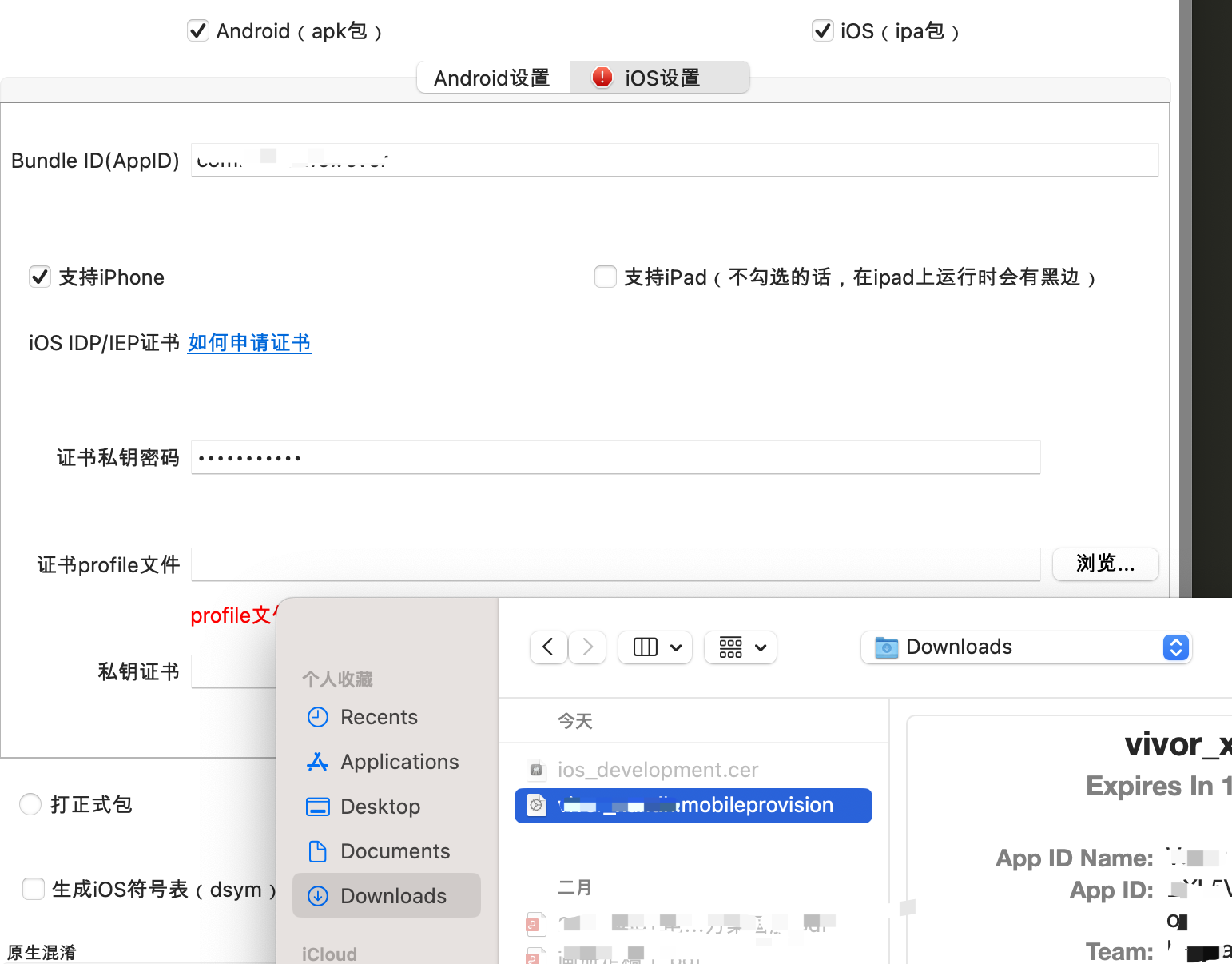

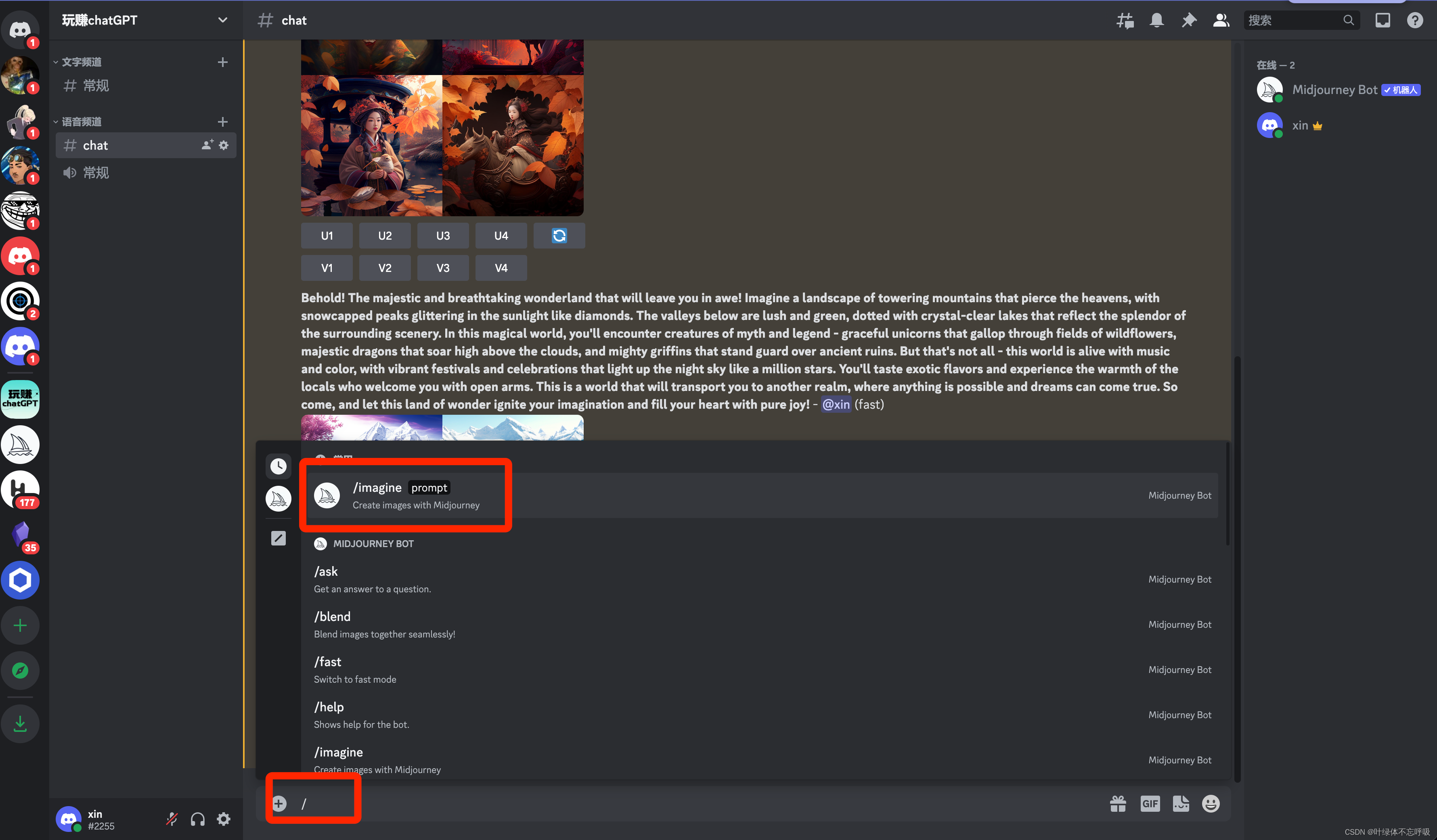

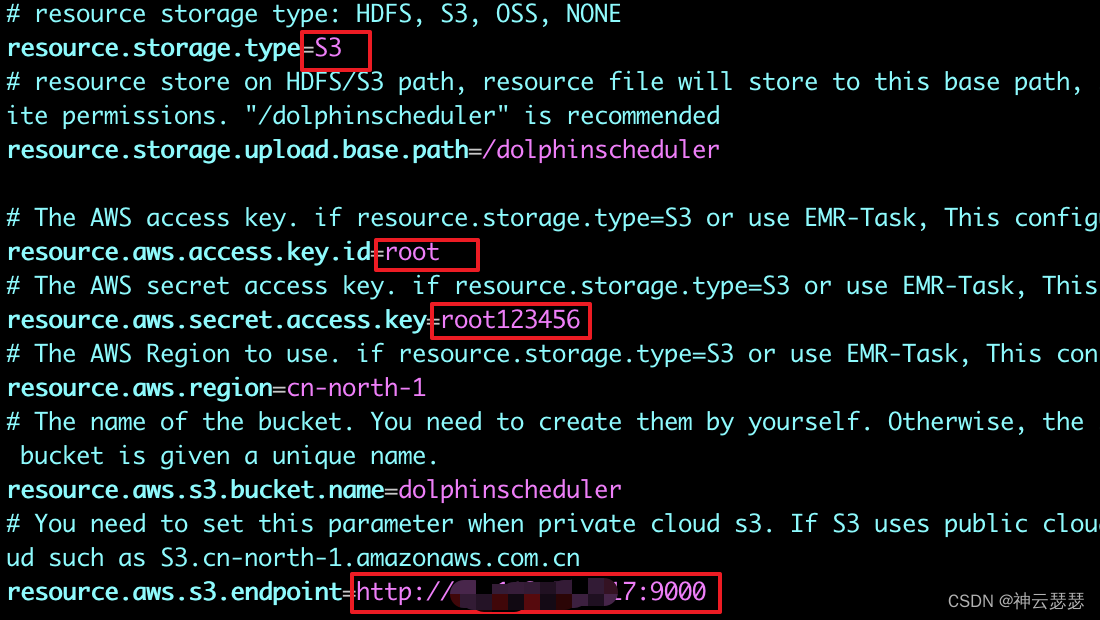

- Edit common.properties

vi ${DOPHINSCHEDULER_HOME}/tools/conf/common.properties

- Change the settings as shown in the screenshots

- The modified section of the file

# resource storage type: HDFS, S3, OSS, NONE

resource.storage.type=S3

# resource store on HDFS/S3 path, resource file will store to this base path, self configuration, please make sure the directory exists on hdfs and have read write permissions. "/dolphinscheduler" is recommended

resource.storage.upload.base.path=/dolphinscheduler

# The AWS access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.access.key.id=root

# The AWS secret access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.secret.access.key=root123456

# The AWS Region to use. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.region=cn-north-1

# The name of the bucket. You need to create them by yourself. Otherwise, the system cannot start. All buckets in Amazon S3 share a single namespace; ensure the bucket is given a unique name.

resource.aws.s3.bucket.name=dolphinscheduler

# You need to set this parameter when private cloud s3. If S3 uses public cloud, you only need to set resource.aws.region or set to the endpoint of a public cloud such as S3.cn-north-1.amazonaws.com.cn

resource.aws.s3.endpoint=http://192.168.75.117:9000
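Before restarting the services, it can help to confirm that none of the S3-related keys was left out or commented out. The following is a minimal sketch rather than part of DolphinScheduler: `check_s3_props` is a hypothetical helper name, and the key list is simply the set of properties edited above.

```shell
# Sketch: report S3-related keys that are missing or empty in a properties file.
# check_s3_props is a hypothetical helper, not a DolphinScheduler command.
check_s3_props() {
    props_file="$1"
    missing=0
    for key in \
        resource.storage.type \
        resource.storage.upload.base.path \
        resource.aws.access.key.id \
        resource.aws.secret.access.key \
        resource.aws.region \
        resource.aws.s3.bucket.name \
        resource.aws.s3.endpoint
    do
        # Escape the dots so they match literally in the regular expression.
        pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
        # Accept only uncommented "key=value" lines with a non-empty value.
        if ! grep -Eq "^${pattern}=.+" "$props_file"; then
            echo "missing or empty: ${key}"
            missing=1
        fi
    done
    return "$missing"
}

# Example call:
# check_s3_props "${DOPHINSCHEDULER_HOME}/tools/conf/common.properties"
```

The function returns a non-zero status when at least one key is missing, so it can be chained in front of the restart commands.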

Note: after changing the configuration, you must create the corresponding bucket in MinIO. The bucket name is dolphinscheduler.
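The bucket can be created in the MinIO web console, or from the command line. As a rough sketch, assuming the MinIO client `mc` is installed on the machine, the steps might look like this. `create_ds_bucket` is a hypothetical helper and `dsminio` is an arbitrary alias name chosen for illustration.

```shell
# Sketch: create the bucket with the MinIO client (mc), assuming mc is installed.
# create_ds_bucket is a hypothetical helper; "dsminio" is an arbitrary alias name.
create_ds_bucket() {
    endpoint="$1"
    access_key="$2"
    secret_key="$3"
    bucket="$4"
    # Register the MinIO server under a local alias.
    mc alias set dsminio "$endpoint" "$access_key" "$secret_key" || return 1
    # Create the bucket; --ignore-existing makes the call safe to repeat.
    mc mb --ignore-existing "dsminio/${bucket}"
}

# Example call with the endpoint, keys, and bucket name from common.properties:
# create_ds_bucket http://192.168.75.117:9000 root root123456 dolphinscheduler
```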

- Apply the configuration

Go to the home directory:

cd ${DOPHINSCHEDULER_HOME}

Copy the configuration file to each module:

cp ./tools/conf/common.properties ./api-server/conf/

cp ./tools/conf/common.properties ./master-server/conf/

cp ./tools/conf/common.properties ./worker-server/conf/

cp ./tools/conf/common.properties ./alert-server/conf/

Stop all services in the cluster in one step:

bash ./bin/stop-all.sh

Reinstall, redeploy, and start the cluster:

bash ./bin/install.sh
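The four copy commands differ only in the module name, so they could also be written as a loop. This is a small sketch under that assumption: `sync_common_properties` is a hypothetical helper, and the module list is taken from the commands in this step.

```shell
# Sketch: copy tools/conf/common.properties into every server module.
# sync_common_properties is a hypothetical helper, not a DolphinScheduler script.
sync_common_properties() {
    ds_home="$1"
    src="${ds_home}/tools/conf/common.properties"
    # Stop early if the edited file is not where we expect it.
    [ -f "$src" ] || { echo "not found: ${src}" >&2; return 1; }
    for module in api-server master-server worker-server alert-server; do
        cp "$src" "${ds_home}/${module}/conf/" || return 1
        echo "updated ${module}/conf/common.properties"
    done
}

# Example call:
# sync_common_properties "${DOPHINSCHEDULER_HOME}"
```

The loop aborts on the first failed copy, which avoids restarting the cluster with modules that disagree about the storage settings.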

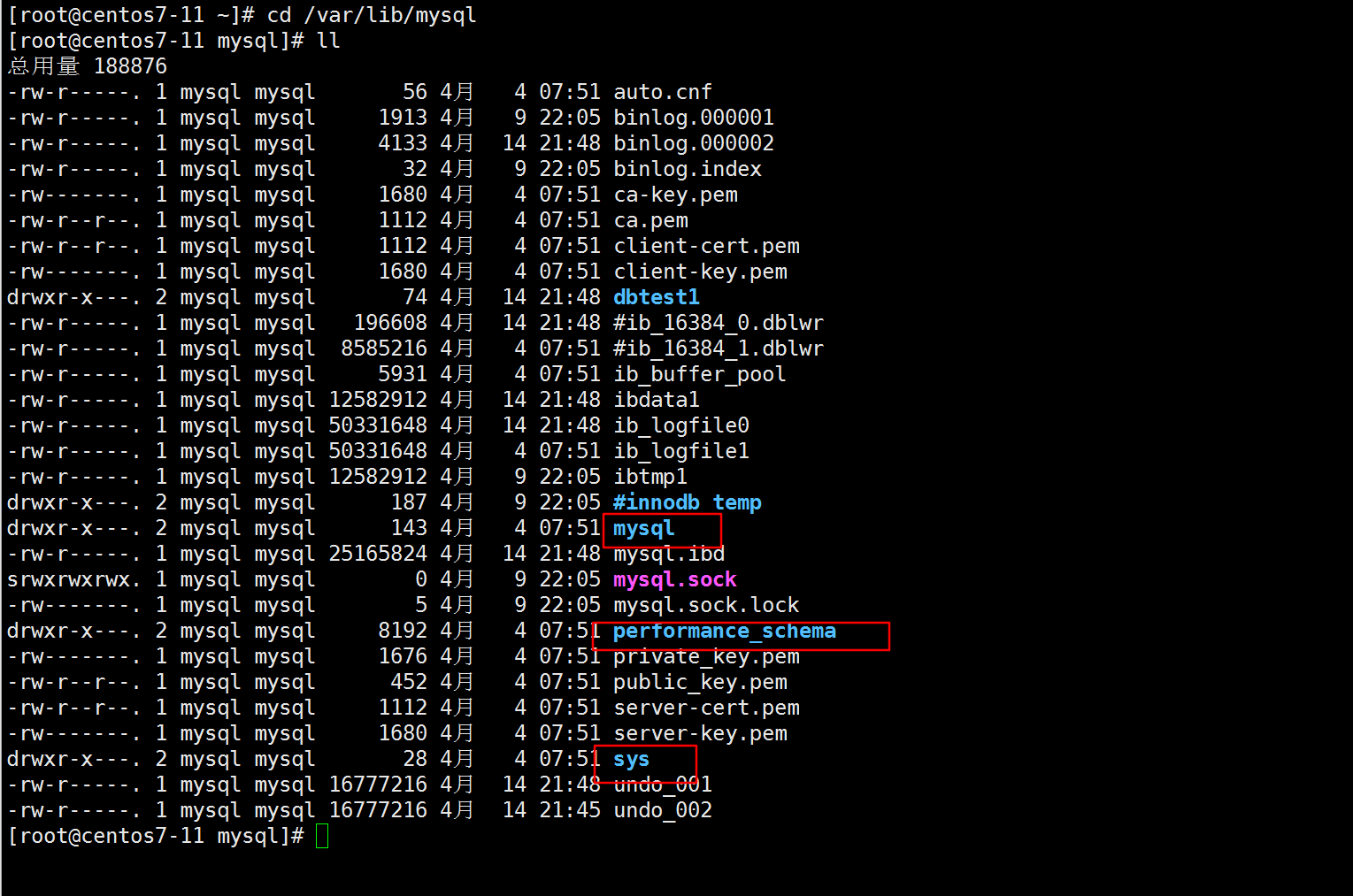

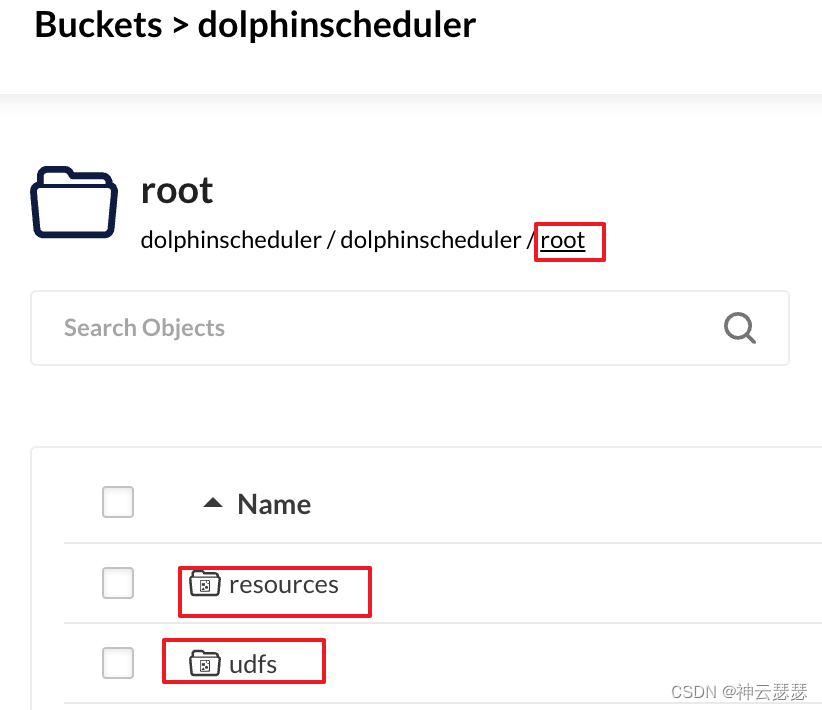

MinIO resource preview

DolphinScheduler creates the following file layout in MinIO.

Here, root is the name of the tenant that the user belongs to.

Resource path:

dolphinscheduler/root/resources/

UDF function path:

dolphinscheduler/root/udf
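Since the second path component is the tenant name, other tenants would presumably get their own directories of the same shape. The sketch below only builds the two paths shown above for a given tenant. `tenant_paths` is a hypothetical helper, and the first argument is the leading `dolphinscheduler` component exactly as it appears in the preview.

```shell
# Sketch: print the resource and UDF paths for one tenant.
# tenant_paths is a hypothetical helper; it only formats strings and
# does not contact MinIO.
tenant_paths() {
    root="$1"     # leading path component, e.g. dolphinscheduler
    tenant="$2"   # tenant name, e.g. root
    echo "${root}/${tenant}/resources/"
    echo "${root}/${tenant}/udf"
}

# Example call:
# tenant_paths dolphinscheduler root
```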