| Server IP | Deployed roles |
|---|---|
| 192.168.11.100 | zookeeper, kafka, elasticsearch |

1. Deploy Docker

...

2. Install ZooKeeper

path=/data/zookeeper

mkdir -p ${path}/{data,conf,log}

chown -R 1000:1000 ${path}

echo "0" > ${path}/data/myid

# ZooKeeper configuration file

cat > ${path}/conf/zoo.cfg << 'EOF'

4lw.commands.whitelist=mntr,ruok

clientPort=2181

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/log

tickTime=2000

initLimit=5

syncLimit=2

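autopurge.snapRetainCount=3

# purgeInterval is in hours; 0 disables auto-purge, so snapRetainCount has no effect until it is set to 1 or more

autopurge.purgeInterval=0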

maxClientCnxns=60

server.0=192.168.11.100:2888:3888

EOF
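In a multi-node ensemble, the number in each node's data/myid has to match that node's own server.N line in zoo.cfg (here myid 0 and server.0); otherwise ZooKeeper cannot find itself in the peer list. A minimal pre-flight sketch using only grep and tr; check_zk_myid is a hypothetical helper name, not part of ZooKeeper:

```shell
#!/usr/bin/env bash
# check_zk_myid CFG MYID_FILE  (hypothetical helper, not shipped with ZooKeeper)
# Succeeds when the id stored in MYID_FILE has a matching "server.<id>=" line in CFG.
check_zk_myid() {
  local cfg=$1 myid_file=$2 id
  # myid holds a single integer; strip the newline written by echo
  id=$(tr -d '[:space:]' < "$myid_file")
  # The trailing "=" keeps server.1 from matching server.10
  if [[ -n "$id" ]] && grep -q "^server\.${id}=" "$cfg"; then
    echo "myid ${id} matches server.${id}"
  else
    echo "myid '${id}' has no server.${id}= line in ${cfg}" >&2
    return 1
  fi
}
```

For this layout: `check_zk_myid /data/zookeeper/conf/zoo.cfg /data/zookeeper/data/myid`.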

cat > ${path}/start.sh << 'EOF'

cd `dirname $0`

docker run -d \

--network host \

--restart=always \

-v `pwd`/data:/data/zookeeper/data \

-v `pwd`/log:/data/zookeeper/log \

-v /etc/localtime:/etc/localtime \

-v `pwd`/conf/zoo.cfg:/conf/zoo.cfg \

--name zookeeper \

zookeeper:3.6.3

EOF

bash ${path}/start.sh

# Check ZooKeeper status

docker exec -i zookeeper zkServer.sh status

# Expected output (a single server runs in standalone mode):

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: standalone
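When the status check is scripted (for example in a monitoring job), only the Mode line matters: standalone for this single node, leader or follower in an ensemble. A small sketch that extracts it; zk_mode is a hypothetical helper:

```shell
#!/usr/bin/env bash
# zk_mode  (hypothetical helper): read `zkServer.sh status` output on stdin
# and print only the value of its "Mode:" line.
zk_mode() {
  sed -n 's/^Mode: *//p'
}
```

Usage: `docker exec -i zookeeper zkServer.sh status 2>&1 | zk_mode`; the `2>&1` merges stderr in case some status lines are written there.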

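# ZooKeeper security

# Restrict the root znode with IP-based ACLs (auth-based ACLs work too). ACLs are not inherited:
# this controls who may list / and create or delete top-level znodes, not child znodes
# such as /kafka, which get their own ACLs when they are created.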

docker exec -i zookeeper zkCli.sh -server 127.0.0.1 << EOF

setAcl / ip:127.0.0.1:rwcda,ip:192.168.11.0/24:rwcda,ip:172.19.0.0/16:rwcda

getAcl /

quit

EOF

# Output of getAcl /:

[zk: 127.0.0.1(CONNECTED) 1] getAcl /

'ip,'127.0.0.1

: cdrwa

'ip,'192.168.11.0/24

: cdrwa

'ip,'172.19.0.0/16

: cdrwa
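The 172.19.0.0/16 entry presumably stands for a Docker bridge network, e.g. the one used by the containers started with -p instead of --network host (sw-oap, swui, sw-java); bridge subnets differ per host (the default bridge is usually 172.17.0.0/16). A sketch that assembles the same setAcl argument from a list of addresses/CIDRs, so the subnet can be looked up instead of hard-coded; build_ip_acl is a hypothetical helper:

```shell
#!/usr/bin/env bash
# build_ip_acl ADDR_OR_CIDR...  (hypothetical helper)
# Prints a ZooKeeper ACL string granting all permissions (rwcda) to each IP or CIDR.
build_ip_acl() {
  local acl="" cidr
  for cidr in "$@"; do
    # ${acl:+,} adds a comma only between entries, never in front of the first one
    acl+="${acl:+,}ip:${cidr}:rwcda"
  done
  printf '%s\n' "$acl"
}
```

The default bridge subnet can be read with `docker network inspect bridge --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}'` and passed as the last argument; the printed string goes after `setAcl /` in the zkCli.sh session above.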

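# To remove the restriction again (open / to everyone):

docker exec -i zookeeper zkCli.sh -server 127.0.0.1 << EOF
setAcl / world:anyone:cdrwa
quit
EOF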

3. Deploy Kafka

# Adjust KAFKA_BROKER_ID, KAFKA_LISTENERS, KAFKA_ADVERTISED_LISTENERS and KAFKA_ZOOKEEPER_CONNECT to your environment

path=/data/kafka

mkdir ${path}/{log,data} -p

cat > ${path}/start.sh << 'EOF'

#!/bin/bash

cd `dirname $0`

docker run -d \

--name kafka \

--restart=always \

--network host \

-e LOG_DIR=/data/kafka/log \

-e KAFKA_BROKER_ID=0 \

-e KAFKA_LISTENERS=PLAINTEXT://192.168.11.100:9092 \

-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.11.100:9092 \

-e KAFKA_ZOOKEEPER_CONNECT=192.168.11.100:2181/kafka \

-e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 \

-e KAFKA_PORT=9092 \

-v `pwd`/data:/kafka \

-v `pwd`/log:/data/kafka/log \

-v /etc/localtime:/etc/localtime \

wurstmeister/kafka:2.13-2.8.1

EOF

bash ${path}/start.sh
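The broker registers in ZooKeeper and opens its listener a few seconds after `docker run` returns, so topic commands issued immediately can fail. A sketch of a wait loop that needs neither nc nor telnet, assuming bash's built-in /dev/tcp redirection (enabled in most distribution builds of bash) and coreutils `timeout`; wait_for_port is a hypothetical helper:

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [LIMIT_SECONDS]  (hypothetical helper)
# Polls until a TCP connection to HOST:PORT succeeds; gives up after roughly LIMIT_SECONDS (default 60).
wait_for_port() {
  local host=$1 port=$2 limit=${3:-60} waited=0
  # Each attempt opens and immediately drops a connection; `timeout 2` caps a hanging connect
  until timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; do
    if (( waited >= limit )); then
      echo "timed out waiting for ${host}:${port}" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "${host}:${port} is accepting connections"
}
```

Run `wait_for_port 192.168.11.100 9092 60` before the verification below; the same call with port 2181 can gate Kafka's start.sh on ZooKeeper.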

Verify Kafka

# Create a topic

docker exec -it kafka bash

kafka-topics.sh --create \

--zookeeper 192.168.11.100:2181/kafka \

--topic test \

--partitions 1 \

--replication-factor 1

# Producer: type messages, one per line (Ctrl+C to quit)

docker exec -it kafka bash

/opt/kafka_2.13-2.8.1/bin/kafka-console-producer.sh \

--broker-list 192.168.11.100:9092 \

--topic test

# Consumer (in a second terminal): prints the messages typed into the producer

docker exec -it kafka bash

/opt/kafka_2.13-2.8.1/bin/kafka-console-consumer.sh \

--bootstrap-server 192.168.11.100:9092 \

--topic test --from-beginning
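The producer/consumer pair above needs two interactive terminals. A non-interactive smoke-test sketch instead: it produces one unique line, then reads the topic from the beginning and stops after 10 s without new records (--timeout-ms). kafka_roundtrip is a hypothetical helper; it assumes the container name kafka and that the console scripts are on the container's PATH, as the kafka-topics.sh call above implies:

```shell
#!/usr/bin/env bash
# kafka_roundtrip BROKER TOPIC  (hypothetical helper)
# Produces one unique message, then consumes the topic and succeeds only if that message comes back.
kafka_roundtrip() {
  local broker=$1 topic=$2
  local msg="smoke-test-$(date +%s)-$$"
  echo "$msg" | docker exec -i kafka kafka-console-producer.sh \
    --bootstrap-server "$broker" --topic "$topic"
  # --timeout-ms ends the consumer after 10 s without new records instead of waiting forever;
  # the pipeline's status is grep's, so the consumer's own exit code does not matter
  docker exec kafka kafka-console-consumer.sh \
    --bootstrap-server "$broker" --topic "$topic" \
    --from-beginning --timeout-ms 10000 2>/dev/null | grep -qxF "$msg"
}
```

Usage: `kafka_roundtrip 192.168.11.100:9092 test && echo OK`. Reading from the beginning gets slower as a topic grows, so this suits a fresh test topic.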

4. Elasticsearch

4.1 Generate the cluster certificate elastic-certificates.p12 (run this step by hand and confirm it succeeds)

mkdir -p /data/elasticsearch/{config,logs,data}/

mkdir -p /data/elasticsearch/config/certs/

chown -R 1000:root /data/elasticsearch/{config,logs,data}

docker run -i --rm \

-v /data/elasticsearch/config/:/usr/share/elasticsearch/config/ \

elasticsearch:7.17.6 bash << 'EOF'

bin/elasticsearch-certutil ca -s --pass '' --days 10000 --out elastic-stack-ca.p12

bin/elasticsearch-certutil cert -s --ca-pass '' --pass '' --days 5000 --ca elastic-stack-ca.p12 --out elastic-certificates.p12

mv elastic-* config/certs

chown -R 1000:root config

exit

EOF

4.2 Prepare elasticsearch.yml

mkdir -p /data/elasticsearch/{config,data}

cat > /data/elasticsearch/config/elasticsearch.yml << 'EOF'

cluster.name: smartgate-cluster

discovery.seed_hosts: 192.168.11.100

cluster.initial_master_nodes: 192.168.11.100

network.host: 192.168.11.100

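# Enlarge the write thread pool queue

thread_pool.write.queue_size: 1000

# Lock the JVM heap in RAM (the container needs --ulimit memlock=-1:-1, set in start.sh)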

bootstrap.memory_lock: true

xpack.license.self_generated.type: basic

xpack.ml.enabled: false

#xpack.security.enrollment.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: "certificate"

xpack.security.transport.ssl.keystore.path: "certs/elastic-certificates.p12"

xpack.security.transport.ssl.truststore.path: "certs/elastic-certificates.p12"

xpack.security.enabled: true

#xpack.security.http.ssl.enabled: true

#xpack.security.http.ssl.keystore.path: certs/elastic-certificates.p12

#xpack.security.http.ssl.truststore.path: certs/elastic-certificates.p12

#xpack.security.http.ssl.client_authentication: optional

#xpack.security.authc.realms.pki.pki1.order: 1

node.roles: ['master','data','ingest','remote_cluster_client']

node.name: 192.168.11.100

http.port: 9200

http.cors.allow-origin: "*"

http.cors.allow-headers: Authorization

http.cors.enabled: true

http.host: "192.168.11.100,127.0.0.1"

transport.host: "192.168.11.100,127.0.0.1"

ingest.geoip.downloader.enabled: false

EOF
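Because network.host binds a non-loopback address, Elasticsearch starts in production mode and enforces its bootstrap checks; one of them requires the Docker host's vm.max_map_count to be at least 262144, which none of the steps here set. A pre-flight sketch; check_max_map_count is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_max_map_count [VALUE]  (hypothetical helper)
# Compares vm.max_map_count with the 262144 minimum required by Elasticsearch's bootstrap checks.
# Without an argument it reads the live kernel value.
check_max_map_count() {
  local required=262144
  local current=${1:-$(cat /proc/sys/vm/max_map_count)}
  if (( current >= required )); then
    echo "vm.max_map_count=${current} ok"
  else
    echo "vm.max_map_count=${current} < ${required}; fix with: sysctl -w vm.max_map_count=${required}" >&2
    return 1
  fi
}
```

To keep the value across reboots, put `vm.max_map_count=262144` into a file under /etc/sysctl.d/ and reload with `sysctl --system`.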

cat >/data/elasticsearch/start.sh << 'EOF'
#!/bin/bash
cd `dirname $0`

# Starts dockerd if it is not already running; when it is, this extra instance fails and exits (output discarded)
dockerd --iptables=false >/dev/null 2>&1 &
sleep 1

# Always recreate the container so edits to elasticsearch.yml and the run options take effect
docker rm -f elasticsearch >/dev/null 2>&1
sleep 1

echo "run elasticsearch"
docker run -d \
--restart=always \
--name elasticsearch \
--network host \
--privileged \
--ulimit memlock=-1:-1 \
--ulimit nofile=65536:65536 \
-e ELASTIC_PASSWORD=xxxxxxxx \
-e KIBANA_PASSWORD=xxxxxxxx \
-e "ES_JAVA_OPTS=-Xms1g -Xmx1g" \
-v /etc/localtime:/etc/localtime \
-v `pwd`/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
-v `pwd`/config/certs/:/usr/share/elasticsearch/config/certs \
-v `pwd`/data/:/usr/share/elasticsearch/data/ \
-v `pwd`/logs/:/usr/share/elasticsearch/logs/ \
elasticsearch:7.17.6
EOF

bash /data/elasticsearch/start.sh
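`bootstrap.memory_lock: true` relies on the container being allowed to lock memory (`--ulimit memlock=-1:-1` in start.sh). Once the node answers HTTP, the nodes info API reports whether locking succeeded in `process.mlockall` (`GET _nodes?filter_path=**.mlockall`). A sketch that checks that response without jq, assuming compact JSON (no `?pretty`); all_mlockall is a hypothetical helper:

```shell
#!/usr/bin/env bash
# all_mlockall  (hypothetical helper): read a `_nodes?filter_path=**.mlockall` response on stdin.
# Succeeds only if at least one node reports "mlockall":true and none reports false.
all_mlockall() {
  local body
  body=$(cat)
  [[ "$body" == *'"mlockall":true'* && "$body" != *'"mlockall":false'* ]]
}
```

Usage: `curl -s -u elastic:xxxxxxxx "http://192.168.11.100:9200/_nodes?filter_path=**.mlockall" | all_mlockall && echo "heap locked"`.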

4.3 Verify Elasticsearch

curl -u elastic:xxxxxxxx http://192.168.11.100:9200/

# Expected response ("name" is the node.name set in elasticsearch.yml):

{
  "name" : "192.168.11.100",
  "cluster_name" : "smartgate-cluster",
  "cluster_uuid" : "arM00fRrTy-FsqohMaftAA",
  "version" : {
    "number" : "7.17.6",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "f65e9d338dc1d07b642e14a27f338990148ee5b6",
    "build_date" : "2022-08-23T11:08:48.893373482Z",
    "build_snapshot" : false,
    "lucene_version" : "8.11.1",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
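For scripts, the cluster health API is more useful than the banner above: with `wait_for_status` it blocks until the cluster reaches that status or the timeout expires. A sketch that extracts the status field without jq, assuming the default compact JSON; es_health_status is a hypothetical helper:

```shell
#!/usr/bin/env bash
# es_health_status  (hypothetical helper): read a `_cluster/health` JSON response on stdin
# and print the value of its "status" field (green, yellow or red).
es_health_status() {
  sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}
```

Usage: `curl -s -u elastic:xxxxxxxx "http://192.168.11.100:9200/_cluster/health?wait_for_status=yellow&timeout=60s" | es_health_status`. On a single node, yellow is normal for indices that request replicas, because a replica is never allocated to the same node as its primary.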

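5. skywalking-oap-server

# SW_CLUSTER_ZK_HOST_PORT is only used when the OAP cluster mode is ZooKeeper (-e SW_CLUSTER=zookeeper); with the default standalone mode it is ignored.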

path=/data/sw-oap

mkdir ${path}/{log,config} -p

cat > ${path}/start.sh << 'EOF'

#!/bin/bash

cd `dirname $0`

docker run -d \

--restart always \

--name sw-oap \

-p 1234:1234 \

-p 11800:11800 \

-p 12800:12800 \

-e TZ=Asia/Shanghai \

-e SW_STORAGE=elasticsearch \

-e SW_STORAGE_ES_CLUSTER_NODES=192.168.11.100:9200 \

-e SW_ES_USER=elastic \

-e SW_ES_PASSWORD="xxxxxxxx" \

-e SW_CLUSTER_ZK_HOST_PORT="192.168.11.100:2181/oap" \

-e "SW_KAFKA_FETCHER=default" \

-e "SW_KAFKA_FETCHER_ENABLE_METER_SYSTEM=true" \

-e "SW_KAFKA_FETCHER_PARTITIONS=2" \

-e "SW_KAFKA_FETCHER_PARTITIONS_FACTOR=1" \

-e "SW_KAFKA_FETCHER_SERVERS=192.168.11.100:9092" \

-e "SW_NAMESPACE=yzy" \

-v /etc/localtime:/etc/localtime \

apache/skywalking-oap-server:9.3.0

EOF

bash ${path}/start.sh

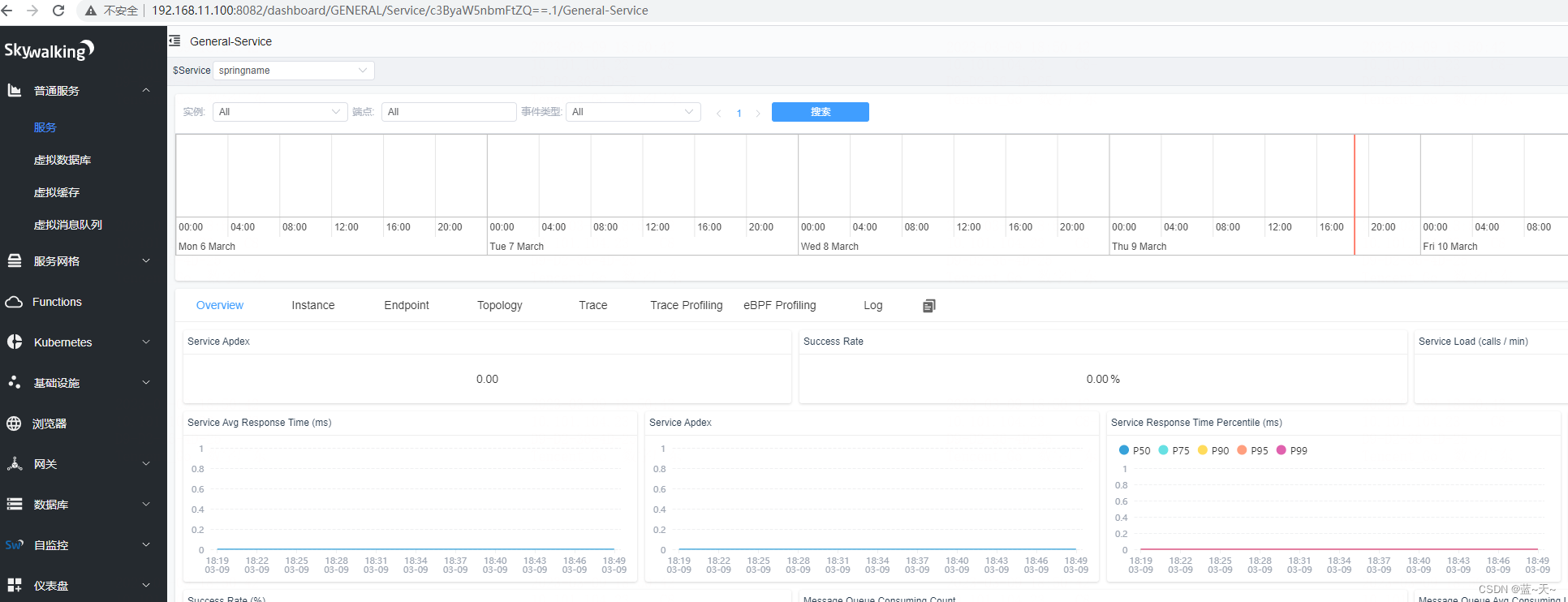
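SW_NAMESPACE=yzy here and SW_KAFKA_NAMESPACE=yzy in the demo's JAVA_OPTS have to agree: assuming SkyWalking's `<namespace>-<topic>` naming for its Kafka topics, the agent writes to, and the OAP reads from, topics with a yzy- prefix. A sketch for listing them; ns_topics is a hypothetical helper:

```shell
#!/usr/bin/env bash
# ns_topics NAMESPACE  (hypothetical helper): read topic names (one per line) on stdin
# and print those that start with "NAMESPACE-" (literal prefix match, no regex).
ns_topics() {
  awk -v prefix="$1-" 'index($0, prefix) == 1'
}
```

Usage: `docker exec kafka kafka-topics.sh --bootstrap-server 192.168.11.100:9092 --list | ns_topics yzy`.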

6. SkyWalking UI

path=/data/swui

mkdir ${path}/{log,config} -p

cat > ${path}/start.sh << 'EOF'

#!/bin/bash

cd `dirname $0`

docker run -d \

--restart always \

--name swui \

-p 8082:8080 \

-e TZ=Asia/Shanghai \

-e SW_OAP_ADDRESS="http://192.168.11.100:12800" \

-e SW_TIMEOUT=20000 \

-v /etc/localtime:/etc/localtime \

apache/skywalking-ui:9.3.0

EOF

bash ${path}/start.sh

7. Demo

path=/data/sw-java

mkdir -p ${path}

cd ${path}

curl -L https://archive.apache.org/dist/skywalking/java-agent/8.9.0/apache-skywalking-java-agent-8.9.0.tgz -o apache-skywalking-java-agent-8.9.0.tgz

tar zxvf apache-skywalking-java-agent-8.9.0.tgz

# Use the whole extracted directory as the agent: skywalking-agent.jar loads its plugins from the plugins/ directory beside it

rm -rf ${path}/agent && mv skywalking-agent ${path}/agent

# Optional plugins only take effect once copied into plugins/

cp ${path}/agent/optional-plugins/apm-trace-ignore-plugin-8.9.0.jar ${path}/agent/plugins/

cp ${path}/agent/optional-reporter-plugins/kafka-reporter-plugin-8.9.0.jar ${path}/agent/plugins/
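The agent loads plugin jars only from the plugins/ (and activations/) directories next to skywalking-agent.jar; jars left in optional-plugins/, optional-reporter-plugins/ or elsewhere are ignored. A sketch that checks the mounted agent directory before the container starts; check_agent_layout is a hypothetical helper, and the jar names are the 8.9.0 ones used in this step:

```shell
#!/usr/bin/env bash
# check_agent_layout DIR  (hypothetical helper)
# Verifies that DIR contains the agent jar, its config, and both optional plugins inside plugins/.
check_agent_layout() {
  local dir=$1 missing=0 f
  for f in skywalking-agent.jar config/agent.config \
           plugins/apm-trace-ignore-plugin-8.9.0.jar \
           plugins/kafka-reporter-plugin-8.9.0.jar; do
    if [[ ! -f "$dir/$f" ]]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_agent_layout /data/sw-java/agent`.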

cat > ${path}/agent/config/apm-trace-ignore-plugin.config << 'EOF'

trace.ignore_path=${SW_AGENT_TRACE_IGNORE_PATH:/actuator/**}

EOF

cat > ${path}/start.sh << 'EOF'

#!/bin/bash

cd `dirname $0`

docker rm -f sw-java

docker run -d \

--restart always \

--name sw-java \

-p 18080:8080 \

-e TZ=Asia/Shanghai \

-e JAVA_OPTS=" -javaagent:/agent/skywalking-agent.jar -DSW_AGENT_COLLECTOR_BACKEND_SERVICES=192.168.11.100:11800 -DSW_KAFKA_BOOTSTRAP_SERVERS=192.168.11.100:9092 -DSW_KAFKA_NAMESPACE=yzy -DSW_AGENT_NAME=yzy-app " \

-v /etc/localtime:/etc/localtime \

-v `pwd`/agent:/agent/ \

maskerade/springboot-demo

EOF

bash ${path}/start.sh

Send a few requests to the demo app:

http://192.168.11.100:18080
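SkyWalking only lists a service after its agent has reported traces, so the demo needs some traffic. A sketch that sends a batch of requests instead of refreshing a browser by hand; hit_url is a hypothetical helper. Point it at application URLs, since /actuator/** is excluded from tracing by the trace-ignore config above:

```shell
#!/usr/bin/env bash
# hit_url URL [COUNT]  (hypothetical helper)
# Sends COUNT GET requests (default 10) and reports how many got a 2xx/3xx reply.
hit_url() {
  local url=$1 count=${2:-10} ok=0 code i
  for ((i = 0; i < count; i++)); do
    # curl prints 000 as the status code when it cannot connect; `|| true` keeps the loop going
    code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url") || true
    [[ "$code" =~ ^[23] ]] && ok=$((ok + 1))
  done
  echo "${ok}/${count} requests succeeded"
}
```

Usage: `hit_url http://192.168.11.100:18080 20`.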

Then open the SkyWalking UI (sw-ui):

http://192.168.11.100:8082